I. Preface

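I have recently been working on running Trino on YARN. Most articles online cover Presto on YARN, and there is very little material on Trino on YARN. The two are essentially the same, but a few things need to be modified, and the main pain point is debugging, since Slider is not very convenient to debug. Here I share my hands-on process.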

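If your Trino cluster does not need elastic scaling, or you already have a mature Kubernetes container deployment, you can skip this feature. The end result is that Slider automatically deploys Trino and adjusts the number of Trino worker nodes, giving fast scale-out and scale-in. The resources consumed by the queries themselves have little to do with YARN; they still depend on the resources configured for the Trino cluster. When cluster resources are tight, adjusting the allocation across different time periods is quite practical: for example, at night when there are few query requests, some worker nodes can be released so that Flink and Spark can use them for computation.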
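As a minimal sketch of this time-based scaling idea: it assumes the application is named `presto-query` (as in the `slider create` command later in this post), that the worker component is `WORKER` (as in resources-default.json below), and that Slider's `flex` action takes `--component <name> <count>` (check the Slider CLI usage on your build). The schedule hours and counts are hypothetical values for illustration only.

```python
import subprocess
from datetime import datetime

# Hypothetical schedule: (start_hour, end_hour, worker_count), end exclusive.
SCHEDULE = [
    (0, 7, 1),   # night: few queries, hand capacity to Flink/Spark
    (7, 24, 3),  # daytime: full worker count
]


def workers_for_hour(hour, schedule=SCHEDULE):
    """Return the desired WORKER instance count for an hour in 0-23."""
    for start, end, count in schedule:
        if start <= hour < end:
            return count
    raise ValueError("hour %d is not covered by the schedule" % hour)


def flex_command(app_name, count, slider_bin="slider", component="WORKER"):
    """Build the `slider flex` argument list that resizes one component."""
    return [slider_bin, "flex", app_name, "--component", component, str(count)]


def apply(cmd, dry_run=True):
    """Print the command; only run it when dry_run is False."""
    print(" ".join(cmd))
    if not dry_run:
        subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # e.g. called hourly from cron; dry run by default
    apply(flex_command("presto-query", workers_for_hour(datetime.now().hour)))
```

Keeping it as a dry run by default makes it easy to check the printed command against your Slider build before letting cron resize the cluster for real.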

II. Environment Preparation

Building apache-slider-0.92.0-incubating

- Download: https://archive.apache.org/dist/incubator/slider/

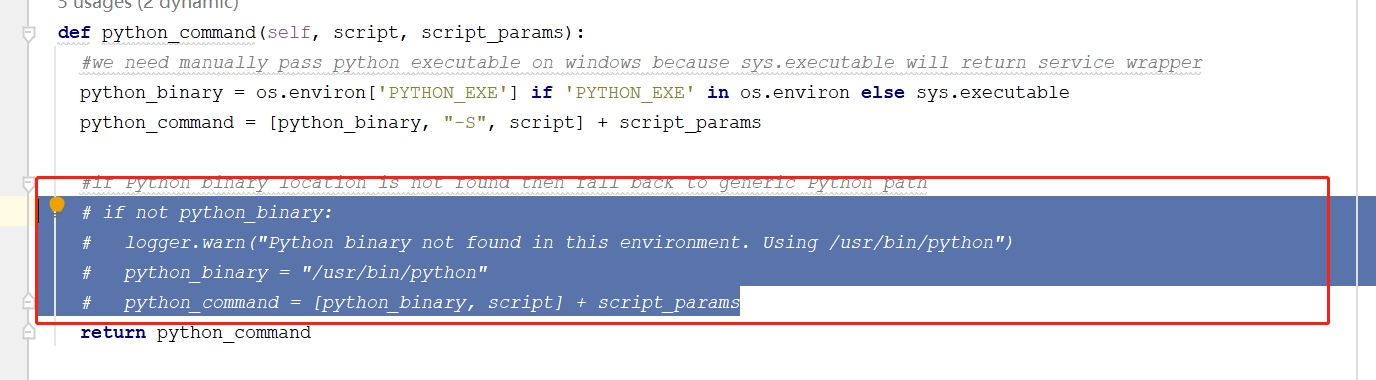

- Modify PythonExecutor.py, otherwise executing the Python scripts fails. See: https://issues.apache.org/jira/browse/SLIDER-1254

```python
def python_command(self, script, script_params):
    # we need manually pass python executable on windows because sys.executable will return service wrapper
    python_binary = os.environ['PYTHON_EXE'] if 'PYTHON_EXE' in os.environ else sys.executable
    python_command = [python_binary, "-S", script] + script_params
    # if Python binary location is not found then fall back to generic Python path
    if not python_binary:
        logger.warn("Python binary not found in this environment. Using /usr/bin/python")
        python_binary = "/usr/bin/python"
        python_command = [python_binary, script] + script_params
    return python_command
```

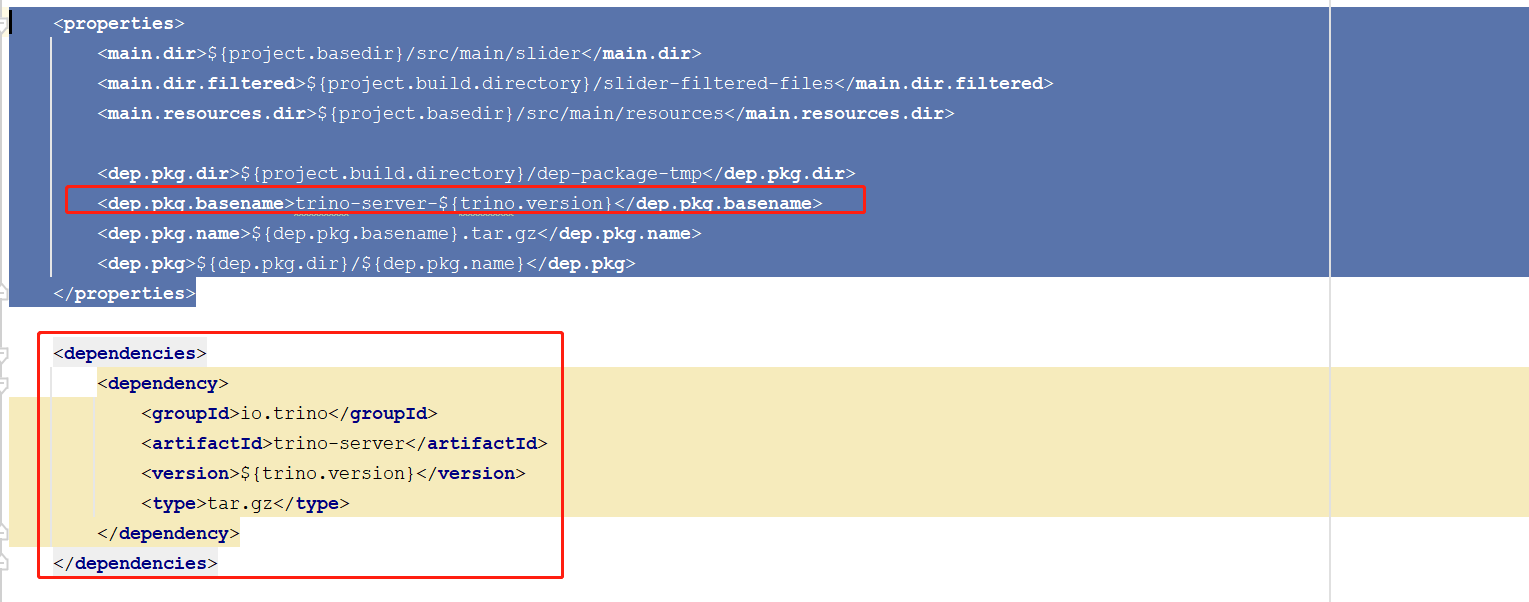

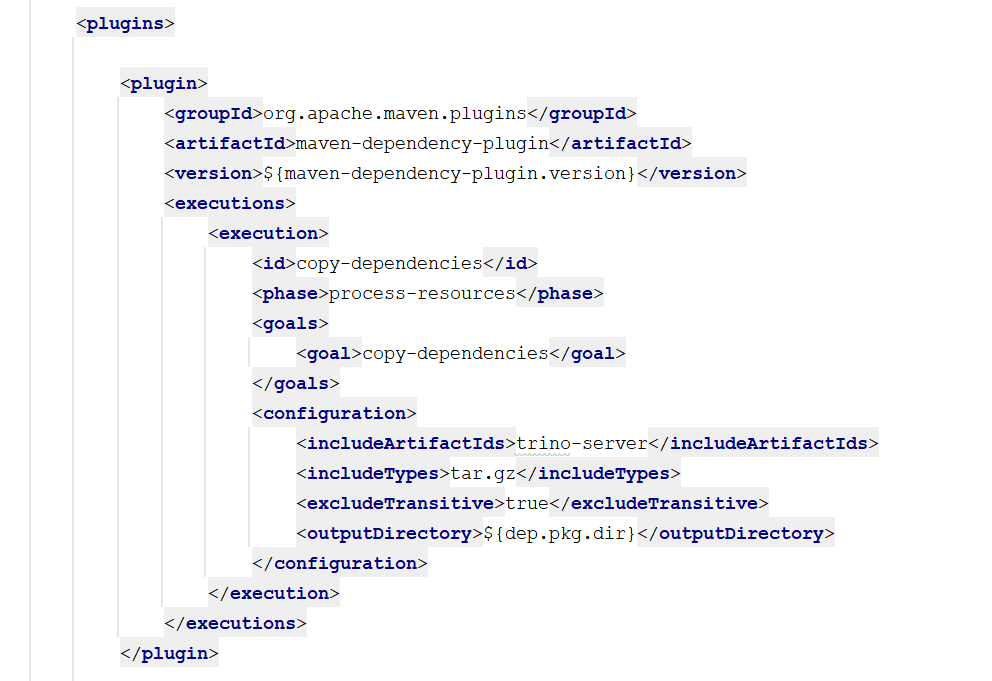
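To exercise the fallback branch without an embedded interpreter, the same resolution order can be pulled out into a pure function and fed a fake environment. This is a testing sketch, not part of Slider; `resolve_python_command` is a hypothetical name.

```python
import os
import sys


def resolve_python_command(script, script_params, environ=None, executable=None):
    """Mirror the patched PythonExecutor.python_command logic as a pure function.

    environ/executable default to the real os.environ and sys.executable, but can
    be overridden to simulate an environment where sys.executable is empty.
    """
    environ = os.environ if environ is None else environ
    executable = sys.executable if executable is None else executable
    # Same precedence as the patch: PYTHON_EXE wins, else the running interpreter.
    python_binary = environ["PYTHON_EXE"] if "PYTHON_EXE" in environ else executable
    if not python_binary:
        # Fallback from the patch: generic path, and no "-S" flag.
        return ["/usr/bin/python", script] + list(script_params)
    return [python_binary, "-S", script] + list(script_params)
```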

Building trino-yarn

1. GitHub repository: https://github.com/prestodb/presto-yarn.git

2. Modify the root pom file.

3. Modify the dependencies in the presto-yarn-package pom file.

III. Deployment and Installation

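1. After the build, copy the two resulting packages to the server, then edit appConfig-default.json and resources-default.json in trino-yarn-package. Anyone familiar with Trino or Presto will recognize most of these settings. My configuration for reference: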

appConfig-default.json:

```json
{
  "schema": "http://example.org/specification/v2.0.0",
  "metadata": {},
  "global": {
    "site.global.app_user": "presto",
    "site.global.user_group": "presto",
    "site.global.data_dir": "/data/trino/data",
    "site.global.config_dir": "/data/trino/etc",
    "site.global.app_name": "trino-server-418",
    "site.global.app_pkg_plugin": "${AGENT_WORK_ROOT}/app/definition/package/plugins/",
    "site.global.singlenode": "true",
    "site.global.coordinator_host": "192.168.2.182",
    "site.global.presto_query_max_memory": "27GB",
    "site.global.presto_query_max_memory_per_node": "4GB",
    "site.global.presto_query_max_total_memory_per_node": "9GB",
    "site.global.presto_server_port": "8089",
    "site.global.catalog": "{'tpch': ['connector.name=system']}",
    "site.global.jvm_args": "['-server', '-Xmx50G', '-XX:InitialRAMPercentage=80', '-XX:MaxRAMPercentage=80', '-XX:G1HeapRegionSize=32M', '-XX:+ExplicitGCInvokesConcurrent', '-XX:+ExitOnOutOfMemoryError', '-XX:+HeapDumpOnOutOfMemoryError', '-XX:-OmitStackTraceInFastThrow', '-XX:ReservedCodeCacheSize=512M', '-XX:PerMethodRecompilationCutoff=10000', '-XX:PerBytecodeRecompilationCutoff=10000', '-Djdk.attach.allowAttachSelf=true', '-Djdk.nio.maxCachedBufferSize=2000000', '-XX:+UnlockDiagnosticVMOptions', '-XX:+UseAESCTRIntrinsics', '-XX:+UseG1GC']",
    "site.global.log_properties": "['io.trino=INFO']",
    "application.def": ".slider/package/trino/trino-yarn.zip",
    "java_home": "/home/presto/presto/zulu17.42.21-ca-crac-jdk17.0.7-linux_x64/bin/java"
  },
  "components": {
    "slider-appmaster": {
      "jvm.heapsize": "128M"
    }
  }
}
```

resources-default.json:

```json
{
  "schema": "http://example.org/specification/v2.0.0",
  "metadata": {},
  "global": {
    "yarn.vcores": "1"
  },
  "components": {
    "slider-appmaster": {},
    "WORKER": {
      "yarn.role.priority": "2",
      "yarn.component.instances": "3",
      "yarn.component.placement.policy": "1",
      "yarn.memory": "1500"
    }
  }
}
```

For details on all options, see: https://prestodb.io/presto-yarn/installation-yarn-configuration-options.html#appconfig-json

2. Start the application with Slider:

```shell
../bin/slider create presto-query --template appConfig-default.json --resources resources-default.json
```

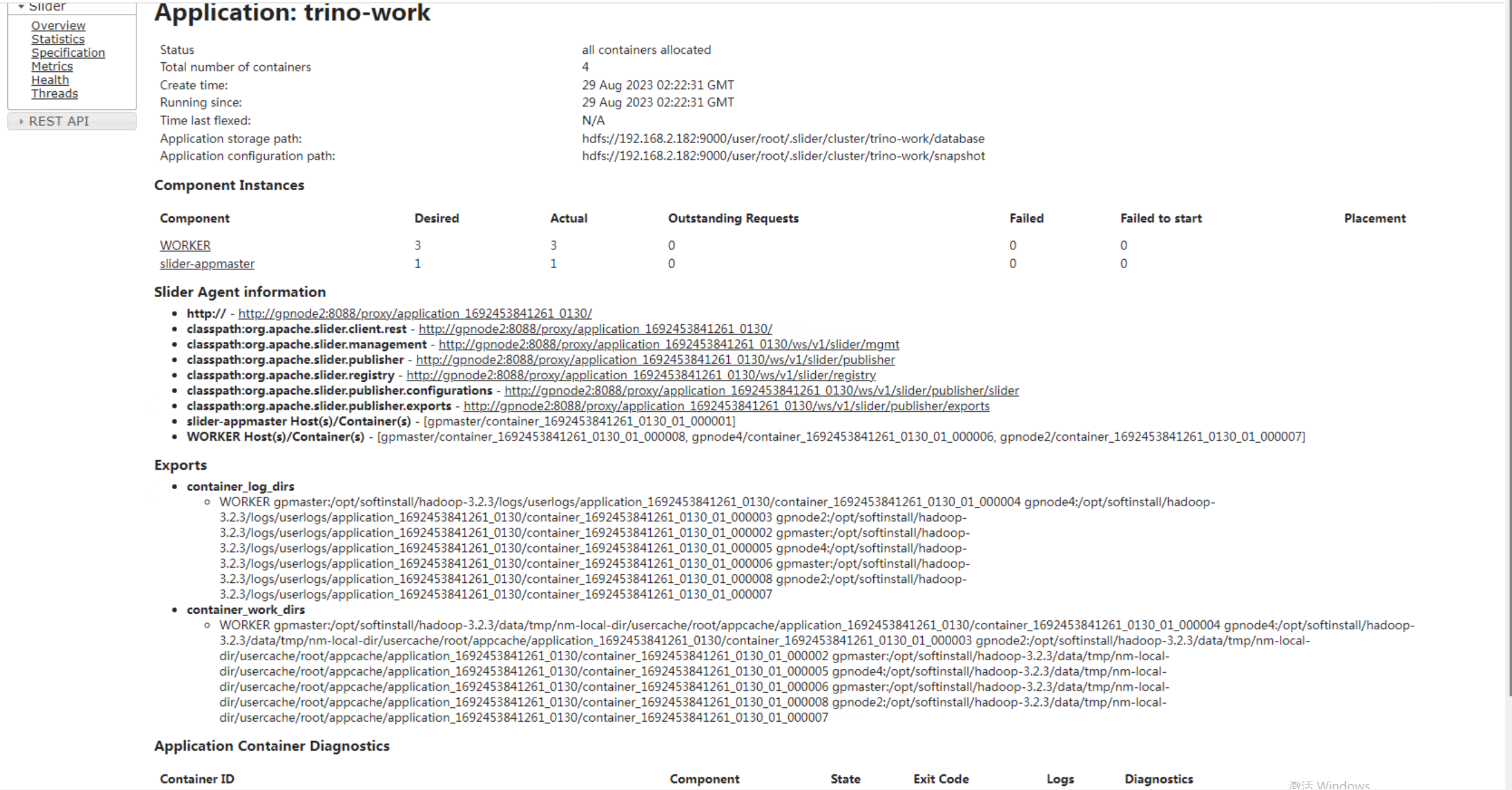

Screenshot of a successful deployment

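For more details, see this very thorough post: "PrestoOnYarn Setup and Summary of Problem Solutions" (CSDN blog of qq_2368521029). Here I mainly cover debugging, since there is relatively little material on that.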
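Before running `slider create`, it can help to sanity-check the two JSON files, since a malformed file or a missing key only shows up later in the YARN logs. A minimal sketch: the list of required keys is an assumed checklist based on the configuration above, not an official presto-yarn schema, and all function names are hypothetical.

```python
import json
import sys

# Assumed minimal checklist for this post's setup, not an official schema.
REQUIRED_GLOBAL_KEYS = [
    "site.global.app_user",
    "site.global.data_dir",
    "site.global.config_dir",
    "site.global.coordinator_host",
    "site.global.presto_server_port",
    "application.def",
]


def check_app_config(cfg):
    """Return a list of problems found in a parsed appConfig dict."""
    global_cfg = cfg.get("global", {})
    return ["appConfig: missing global key %s" % key
            for key in REQUIRED_GLOBAL_KEYS if key not in global_cfg]


def check_resources(res, component="WORKER"):
    """Return a list of problems found in a parsed resources dict."""
    comp = res.get("components", {}).get(component)
    if comp is None:
        return ["resources: missing component %s" % component]
    problems = []
    # In the config above these are per-component strings holding integers.
    for key in ("yarn.component.instances", "yarn.memory"):
        value = comp.get(key)
        if value is None or not str(value).isdigit() or int(value) <= 0:
            problems.append("resources: %s.%s should be a positive integer, got %r"
                            % (component, key, value))
    return problems


def check_files(app_config_path, resources_path):
    """Load both files (json raises ValueError on malformed JSON) and check them."""
    with open(app_config_path) as f:
        app_cfg = json.load(f)
    with open(resources_path) as f:
        res = json.load(f)
    return check_app_config(app_cfg) + check_resources(res)


if __name__ == "__main__" and len(sys.argv) == 3:
    for problem in check_files(sys.argv[1], sys.argv[2]):
        print(problem)
```

Usage: `python check_config.py appConfig-default.json resources-default.json`; no output means none of the listed checks failed.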

IV. Debugging and Troubleshooting

Once deployed on YARN you will run into quite a few problems, and debugging them is somewhat cumbersome, so here is my debugging approach for reference.

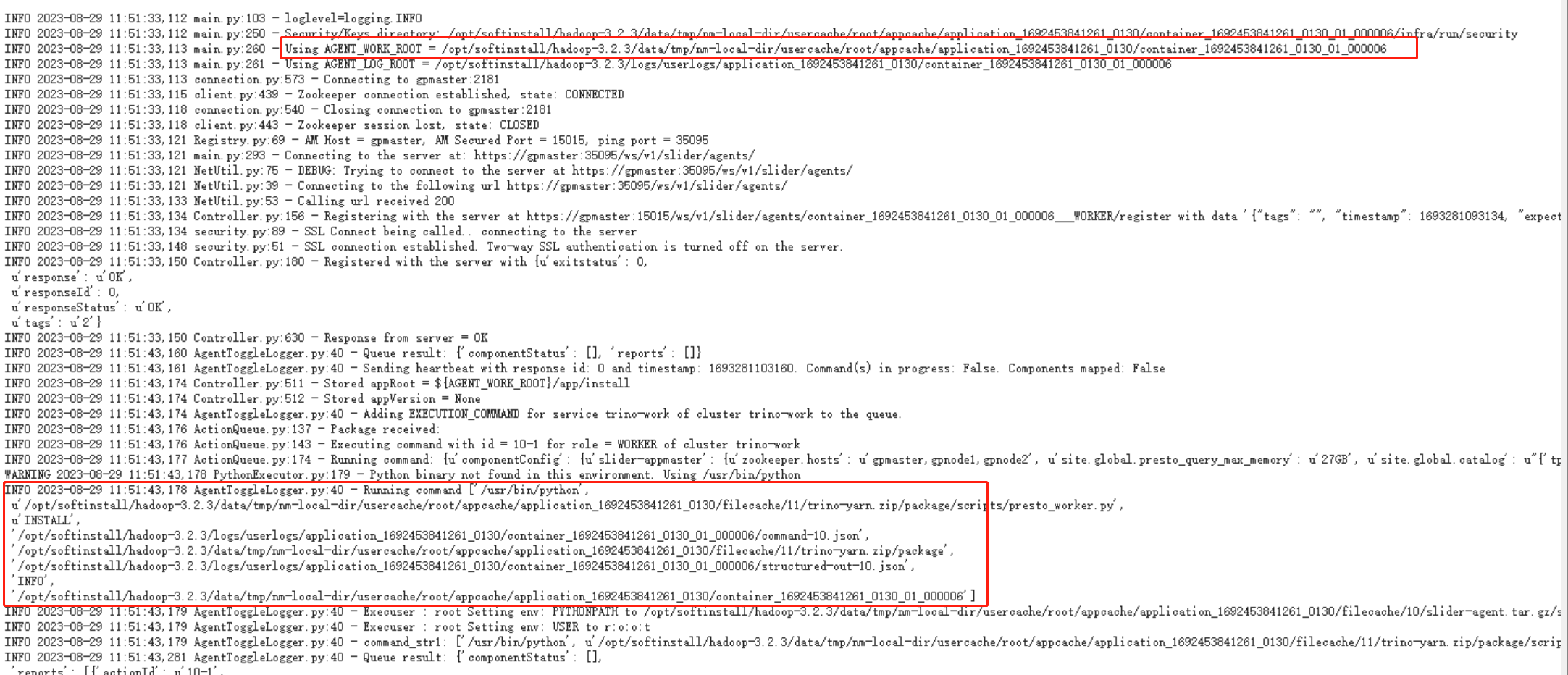

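Under the hood, the program dynamically distributes the trino-server files from the presto-yarn package and automatically generates Trino's configuration files; Slider itself is just a generic command-execution framework. From the logs we can see the actual working directory and the exact Python script commands being executed.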
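To make the "automatically generates Trino's configuration files" step concrete, here is a minimal sketch of how a value like `site.global.catalog` in the appConfig above could be turned into catalog properties files. It assumes the value is a Python dict literal mapping catalog names to lists of `key=value` lines, as in my config; `render_catalog_files` is a hypothetical helper, not the actual presto-yarn `configure.py` code.

```python
import ast
import os


def parse_catalogs(catalog_value):
    """Parse the site.global.catalog string (a Python dict literal) safely."""
    catalogs = ast.literal_eval(catalog_value)
    if not isinstance(catalogs, dict):
        raise ValueError("site.global.catalog must be a dict literal")
    return catalogs


def render_catalog_files(catalog_value, catalog_dir):
    """Write one <name>.properties file per catalog; return the written paths."""
    os.makedirs(catalog_dir, exist_ok=True)
    paths = []
    for name, lines in parse_catalogs(catalog_value).items():
        path = os.path.join(catalog_dir, name + ".properties")
        with open(path, "w") as f:
            f.write("\n".join(lines) + "\n")
        paths.append(path)
    return paths
```

`ast.literal_eval` is used instead of `eval` so that a typo in the config cannot execute arbitrary code.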

Comment out this execution statement in Slider so the program runs empty: the script is never actually executed.

Deploying one Worker node takes just three steps: INSTALL ---> START ---> STATUS.

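Using the commands printed in the log, switch manually to the AGENT_WORK_ROOT directory and run the scripts by hand; you can then debug against the actual errors. The arguments are simply copied from what the log prints. Example: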

```shell
[root@gpmaster scripts]# export PYTHONPATH=/opt/softinstall/hadoop-3.2.3/data/tmp/nm-local-dir/usercache/root/appcache/application_1692453841261_0117/filecache/10/slider-agent.tar.gz/slider-agent/jinja2:/opt/softinstall/hadoop-3.2.3/data/tmp/nm-local-dir/usercache/root/appcache/application_1692453841261_0117/filecache/10/slider-agent.tar.gz/slider-agent
[root@gpmaster scripts]# python presto_worker.py INSTALL /opt/softinstall/hadoop-3.2.3/logs/userlogs/application_1692453841261_0117/container_1692453841261_0117_01_000002/command-1.json /opt/softinstall/hadoop-3.2.3/data/tmp/nm-local-dir/usercache/root/appcache/application_1692453841261_0117/filecache/11/trino-yarn.zip/package /opt/softinstall/hadoop-3.2.3/logs/userlogs/application_1692453841261_0117/container_1692453841261_0117_01_000002/structured-out-1.json INFO /opt/softinstall/hadoop-3.2.3/data/tmp/nm-local-dir/usercache/root/appcache/application_1692453841261_0117/container_1692453841261_0117_01_000002
2023-08-28 17:04:39,453 - Directory['/opt/softinstall/hadoop-3.2.3/data/tmp/nm-local-dir/usercache/root/appcache/application_1692453841261_0117/container_1692453841261_0117_01_000002/app/install'] {'action': ['delete']}
[root@gpmaster scripts]# python presto_worker.py START /opt/softinstall/hadoop-3.2.3/logs/userlogs/application_1692453841261_0117/container_1692453841261_0117_01_000002/command-1.json /opt/softinstall/hadoop-3.2.3/data/tmp/nm-local-dir/usercache/root/appcache/application_1692453841261_0117/filecache/11/trino-yarn.zip/package /opt/softinstall/hadoop-3.2.3/logs/userlogs/application_1692453841261_0117/container_1692453841261_0117_01_000002/structured-out-1.json INFO /opt/softinstall/hadoop-3.2.3/data/tmp/nm-local-dir/usercache/root/appcache/application_1692453841261_0117/container_1692453841261_0117_01_000002
```

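Because every step takes the same positional arguments (command JSON, package directory, structured-output JSON, log level, container directory), retyping them gets tedious. Below is a small sketch that rebuilds the commands from values copied out of the agent log and runs INSTALL, START, STATUS in order, stopping at the first failure. The argument order is taken from the commands above; `worker_command` and `run_steps` are hypothetical helpers. Each step may reference its own `command-N.json` in the log, so pass whatever the log actually prints.

```python
import os
import subprocess

STEPS = ("INSTALL", "START", "STATUS")


def worker_command(step, command_json, package_dir, structured_out, container_dir,
                   log_level="INFO", script="presto_worker.py"):
    """Build one agent command in the argument order the log prints it."""
    if step not in STEPS:
        raise ValueError("unknown step: %s" % step)
    return ["python", script, step, command_json, package_dir,
            structured_out, log_level, container_dir]


def run_steps(commands, pythonpath, cwd, dry_run=True):
    """Run commands in order with PYTHONPATH set; check=True stops at the first failure."""
    env = dict(os.environ, PYTHONPATH=pythonpath)
    for cmd in commands:
        print(" ".join(cmd))
        if not dry_run:
            subprocess.run(cmd, cwd=cwd, env=env, check=True)
```

Here `cwd` would be the `scripts` directory shown in the prompt above, and `pythonpath` the same value exported there.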

V. Resolving Conflicts When Multiple Nodes Run on One Machine

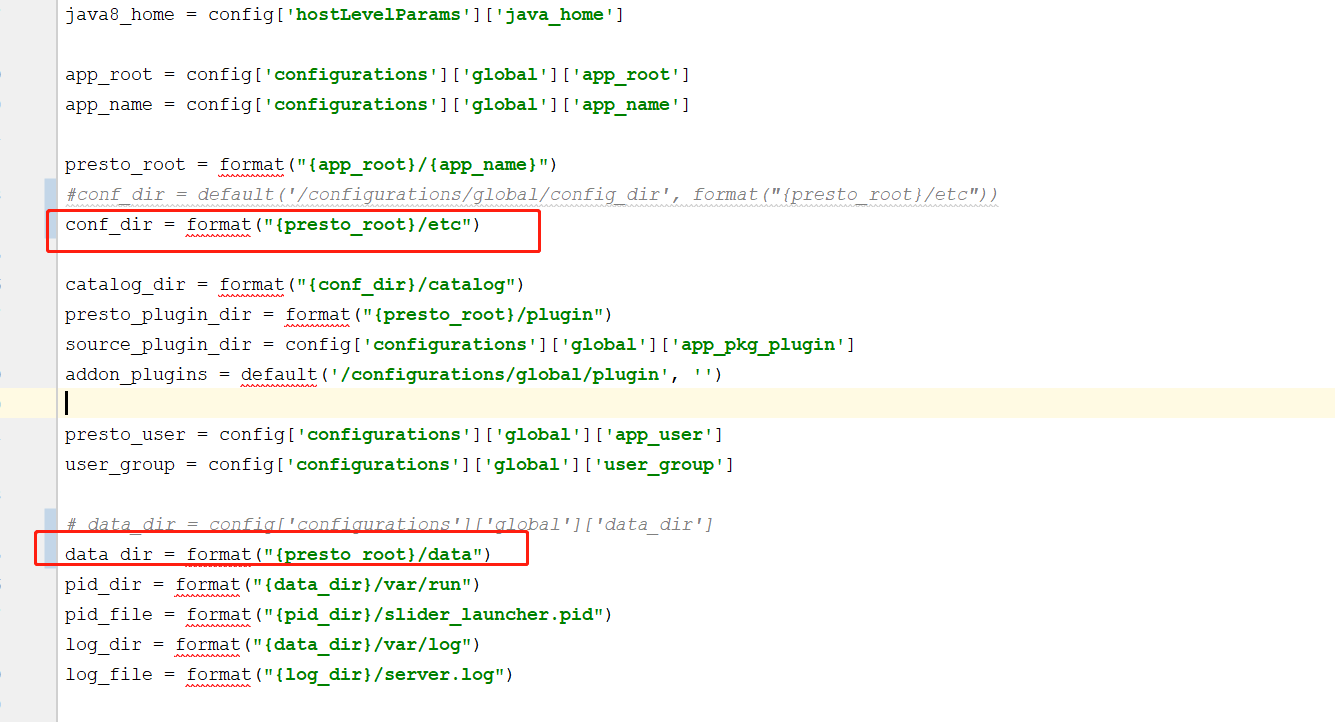

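1. File conflicts: generate Trino's etc and data directories under AGENT_WORK_ROOT. This resolves conflicts over these two directories when two instances are scheduled onto the same machine.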

The main change is in params.py.

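2. Port conflicts: add a random-port configuration. Comment out http-server.http.port={{presto_work_port}} in the config.properties-WORKER.j2 template and write a randomly assigned port into the configuration instead.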

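For the concrete implementation, see "Handling port conflicts when multiple Trino on YARN instances are scheduled onto the same machine" (CSDN blog of qq_2368521029).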
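A minimal sketch of both fixes, assuming AGENT_WORK_ROOT reaches the script as a plain path string (however params.py obtains it). The helper names are hypothetical, and binding to port 0 is one common way to get a free port, not necessarily what the referenced post does.

```python
import os
import socket


def container_dirs(agent_work_root):
    """Place Trino's etc/ and data/ under this container's own AGENT_WORK_ROOT,
    so two workers on the same host never share these directories."""
    return {
        "config_dir": os.path.join(agent_work_root, "etc"),
        "data_dir": os.path.join(agent_work_root, "data"),
    }


def pick_free_port(host=""):
    """Ask the OS for a currently unused TCP port by binding to port 0.

    The port is released when the socket closes, so another process could
    still take it before Trino starts: this is a best-effort choice.
    """
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
        s.bind((host, 0))
        return s.getsockname()[1]


def worker_port_line(port):
    """Line written in place of the commented-out http-server.http.port template line."""
    return "http-server.http.port=%d" % port
```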

VI. Packaging and Deploying a Customized Trino Server

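We have modified some Trino features, so the package produced by the normal build does not fit our environment; we need to package our own Trino build into it.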

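1. In the normally built package, trino-yarn/package/files contains the corresponding trino-server archive. Remove the etc and data directories from our own Trino build, package it, and replace that archive with it.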

2. Modify params.py, configure.py, and the config.properties-WORKER.j2 template so that they generate the configuration we need.

3. Repackage and upload to the designated HDFS directory:

```shell
../bin/slider package --install --name trino --package trino-yarn.zip --replacepkg
```

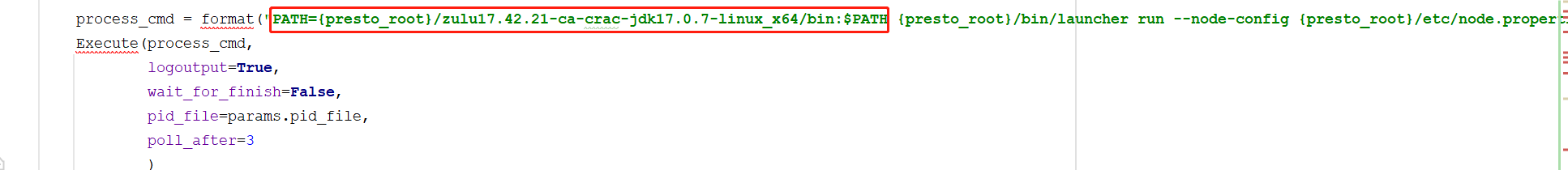

4. Specify the JDK: we currently bundle the JDK directly with the trino-server directory and adjust the startup command accordingly.
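Step 1 above (stripping etc and data from our own build and repacking it) can be scripted. A minimal sketch, assuming the original build put a gzip-compressed tarball in trino-yarn/package/files and that the replacement must keep the same file name and top-level directory layout; check the actual file there before replacing it. `repack_trino_server` is a hypothetical helper. A JDK bundled inside the trino-server directory, as in step 4, is kept, since only etc and data are excluded.

```python
import os
import tarfile

EXCLUDED_TOP_DIRS = ("etc", "data")


def repack_trino_server(src_dir, out_tar_gz):
    """Pack a customized trino-server directory as .tar.gz, skipping etc/ and data/.

    The archive's top-level folder is named after src_dir (e.g. trino-server-418).
    """
    root_name = os.path.basename(os.path.normpath(src_dir))

    def skip_config_and_data(info):
        # info.name looks like "trino-server-418/etc/..."; returning None for a
        # directory makes TarFile.add skip it and everything beneath it.
        parts = info.name.split("/")
        if len(parts) > 1 and parts[1] in EXCLUDED_TOP_DIRS:
            return None
        return info

    with tarfile.open(out_tar_gz, "w:gz") as tar:
        tar.add(src_dir, arcname=root_name, filter=skip_config_and_data)
    return out_tar_gz
```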