CKA Exam Preparation

1. Access control (RBAC)

Exam question

Approach

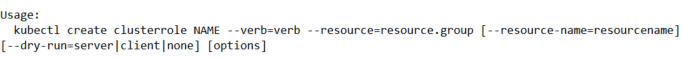

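First create the ClusterRole. Run kubectl create clusterrole -h to see the help, pay attention to the command syntax in the Usage section, and assemble the command to match the requirements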

kubectl create clusterrole deployment-clusterrole --verb=create --resource=deployment,statefulset,daemonset

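Next create the ServiceAccount. Run kubectl create serviceaccount -h to see the help, pay attention to the command syntax in the Usage section, and assemble the command to match the requirements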

kubectl create serviceaccount cicd-token -n app-team1

最后使用rolebinding进行绑定,kubectl create rolebinding -h查看使用帮助,注意usage字段的命令使用方法,根据要求拼接命令

kubectl create rolebinding deployment-clusterrole-rolebinding --clusterrole=deployment-clusterrole --serviceaccount=app-team1:cicd-token

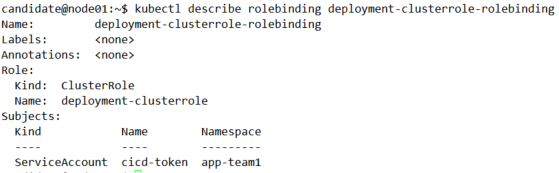

结果验证

kubectl describe rolebinding deployment-clusterrole-rolebinding
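Describing the binding only shows that the object exists. A more direct check is to ask the API server whether the ServiceAccount can actually perform the action. The sketch below wraps kubectl auth can-i with impersonation; the function names sa_user and sa_can_i are made up for illustration, and the second function needs kubectl and a reachable cluster.

```shell
# Build the user name under which a ServiceAccount authenticates.
sa_user() {
  # $1 = namespace, $2 = ServiceAccount name
  printf 'system:serviceaccount:%s:%s\n' "$1" "$2"
}

# Ask the API server whether the ServiceAccount may run a verb on a resource
# in its own namespace. Requires kubectl and cluster access.
sa_can_i() {
  # $1 = namespace, $2 = ServiceAccount, $3 = verb, $4 = resource
  kubectl auth can-i "$3" "$4" --as="$(sa_user "$1" "$2")" -n "$1"
}

# Example calls against the exam cluster (not executed here):
#   sa_can_i app-team1 cicd-token create deployments
#   sa_can_i app-team1 cicd-token delete deployments
```

If the binding works as intended, the first call should answer yes and the second no, because the ClusterRole only grants the create verb.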

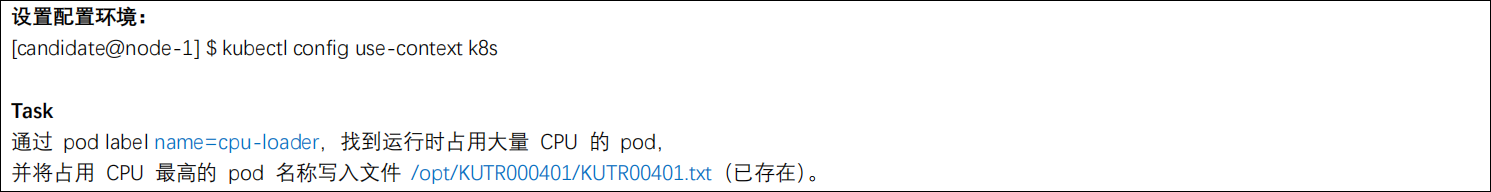

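2. Check pod CPU usage

Exam question

Approach

First run kubectl top pod -h to see the help, pay attention to the command syntax in the Usage section, and assemble the command to match the requirements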

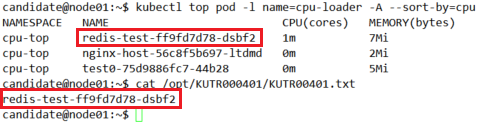

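Show the resource usage of the pods labelled name=cpu-loader

kubectl top pod -l name=cpu-loader -A

Then sort by CPU usage with --sort-by=cpu

kubectl top pod -l name=cpu-loader -A --sort-by=cpu

Finally, take the pod with the highest usage and write its name to the required file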

kubectl top pod -l name=cpu-loader -A --sort-by=cpu | grep -v NAME | head -1 | awk '{print $2}' > /opt/KUTR000401/KUTR00401.txt
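The pipeline above relies on the sort order and on the column position of the pod name. As a cross-check, the following sketch picks the highest CPU value itself, independent of sorting. It assumes the four-column layout printed with -A (NAMESPACE, NAME, CPU, MEMORY), a header row, and CPU values in millicores such as 250m; the function name top_cpu_pod is hypothetical.

```shell
# Read `kubectl top pod -A` output (with header) on stdin and print the
# name of the pod with the highest CPU value.
top_cpu_pod() {
  awk 'NR == 1 { next }                     # skip the header row
       {
         cpu = $3
         sub(/m$/, "", cpu)                 # "250m" -> "250" (millicores)
         if (name == "" || cpu + 0 > max) { max = cpu + 0; name = $2 }
       }
       END { if (name != "") print name }'
}

# Example (not executed here):
#   kubectl top pod -l name=cpu-loader -A | top_cpu_pod
```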

Verification

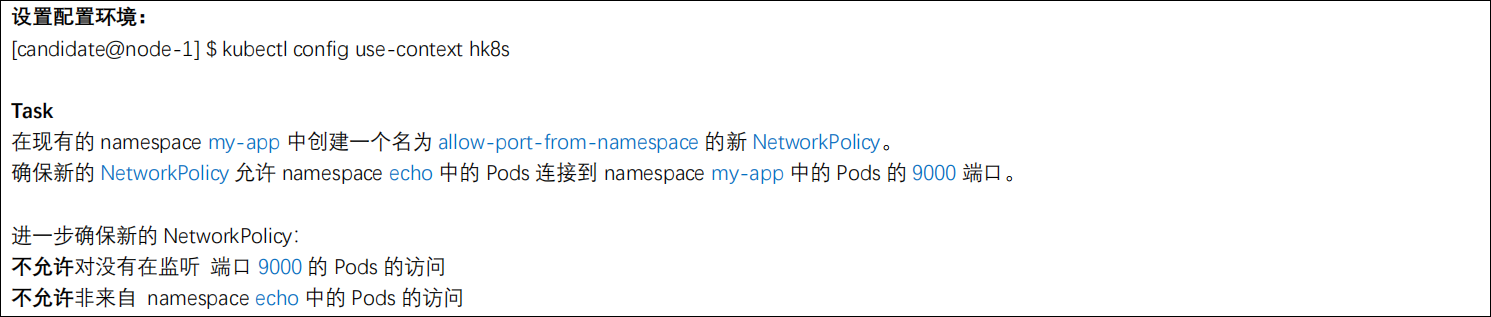

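3. Configure a NetworkPolicy

Exam question

Approach

Find the YAML template in the official docs under Kubernetes Documentation > Concepts > Services, Load Balancing, and Networking > Network Policies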

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: test-network-policy
  namespace: default
spec:
  podSelector:
    matchLabels:
      role: db
  policyTypes:
  - Ingress
  - Egress
  ingress:
  - from:
    - ipBlock:
        cidr: 172.17.0.0/16
        except:
        - 172.17.1.0/24
    - namespaceSelector:
        matchLabels:
          project: myproject
    - podSelector:
        matchLabels:
          role: frontend
    ports:
    - protocol: TCP
      port: 6379
  egress:
  - to:
    - ipBlock:
        cidr: 10.0.0.0/24
    ports:
    - protocol: TCP
      port: 5978

Modify it to match the requirements

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-port-from-namespace
  namespace: my-app
spec:
  podSelector: {}
  policyTypes:
  - Ingress
  ingress:
  - from:
    - namespaceSelector:
        matchLabels:
          project: echo
    ports:
    - protocol: TCP
      port: 9000

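Create it with kubectl apply -f xxx.yaml

Note 1: before creating it, run kubectl get ns to check that the namespace exists; if it does not, create it first with kubectl create ns my-app

Note 2: in vi, run :set paste before pasting, otherwise the indentation of the file may be broken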
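The namespaceSelector in the policy only matches namespaces that really carry the label project=echo, so it is worth checking the source namespace before applying. The sketch below tests a line of kubectl output for a given label. It assumes the source namespace is named echo (the notes only show the label, not the namespace name) and that the LABELS column is the last field; ns_has_label is a hypothetical helper.

```shell
# Succeed when the LABELS column (last field) of
# `kubectl get ns <name> --show-labels --no-headers` contains key=value.
ns_has_label() {
  # $1 = key=value to look for; the kubectl output line is read from stdin
  awk -v want="$1" '
    { n = split($NF, labels, ",")
      for (i = 1; i <= n; i++) if (labels[i] == want) found = 1 }
    END { exit found ? 0 : 1 }'
}

# Example (not executed here; namespace name "echo" is an assumption):
#   kubectl get ns echo --show-labels --no-headers | ns_has_label project=echo \
#     || kubectl label ns echo project=echo
```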

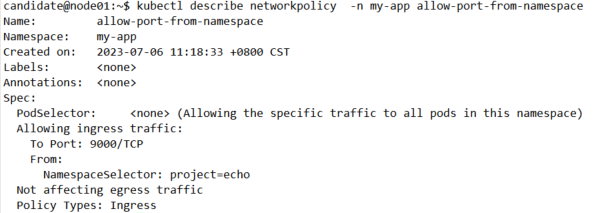

Verification

kubectl describe networkpolicy -n my-app allow-port-from-namespace

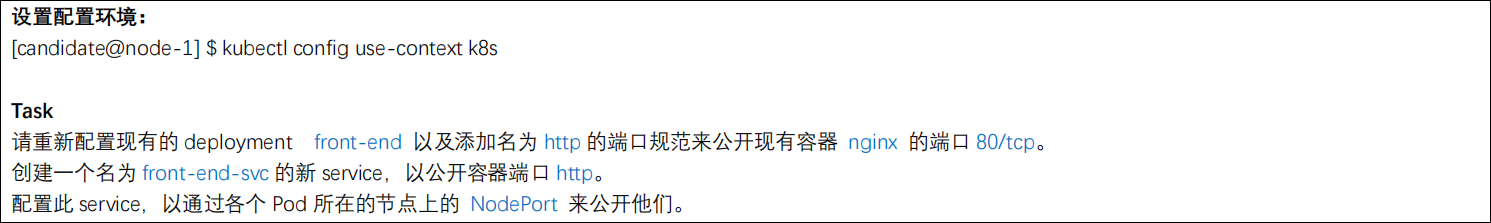

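4. Expose a Service

Exam question

Approach

Find the YAML template in the official docs under Kubernetes Documentation > Tutorials > Stateless Applications > Exposing an External IP Address to Access an Application in a Cluster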

First, following the template from the docs, add the container port to front-end with kubectl edit deploy front-end

containers:
- image: vicuu/nginx:hello
  imagePullPolicy: IfNotPresent
  name: nginx
  ports:
  - containerPort: 80
    protocol: TCP
    name: http

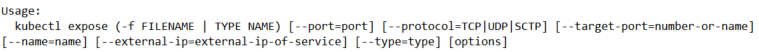

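Then run kubectl expose -h to see the help, pay attention to the command syntax in the Usage section, and assemble the command to match the requirements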

kubectl expose deploy front-end --port=80 --protocol=TCP --target-port=80 --name=front-end-svc --type=NodePort

Verification

kubectl describe svc front-end-svc

Test the endpoints

candidate@node01:~$ kubectl get po,svc -owide | grep front

pod/front-end-5d546b68bb-m9cm6 1/1 Running 0 8m35s 10.244.140.107 node02 <none> <none>

service/front-end-svc NodePort 10.96.224.101 <none> 80:30629/TCP 6m25s app=front-end

### Call the pod IP

candidate@node01:~$ curl 10.244.140.107

Hello World ^_^

### Call the Service IP

candidate@node01:~$ curl 10.96.224.101

Hello World ^_^

### Call the node IP

candidate@node01:~$ curl node02:30629

Hello World ^_^

### If the node name does not resolve, look up its IP as follows

candidate@node01:~$ kubectl get node node02 -owide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

node02 Ready <none> 151d v1.26.0 11.0.1.113 <none> Ubuntu 20.04.5 LTS 5.4.0-137-generic containerd://1.6.16

candidate@node01:~$ curl 11.0.1.113:30629

Hello World ^_^

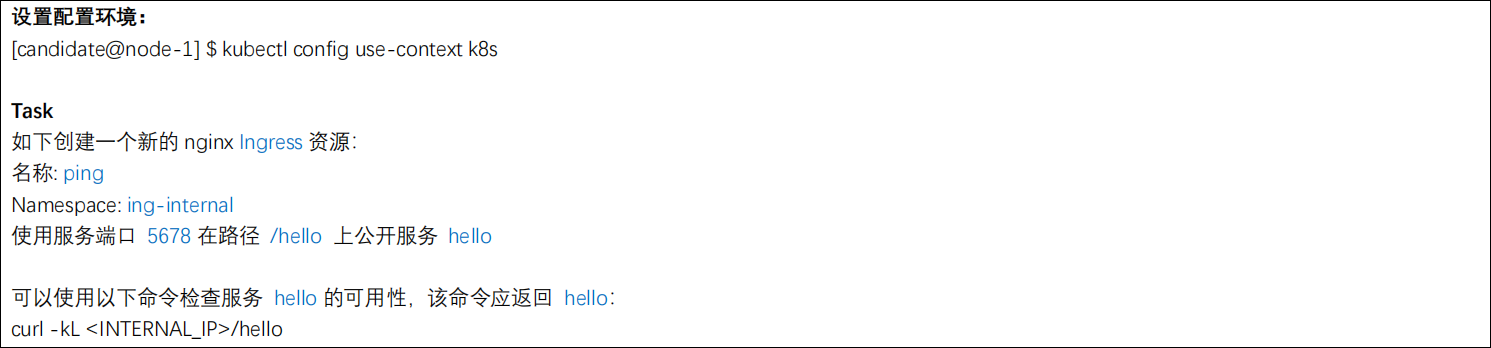

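5. Create an Ingress

Exam question

Approach

Find the YAML template in the official docs under Kubernetes Documentation > Concepts > Services, Load Balancing, and Networking > Ingress

First, create the IngressClass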

apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  labels:
    app.kubernetes.io/component: controller
  name: nginx-example
  annotations:
    ingressclass.kubernetes.io/is-default-class: "true"
spec:
  controller: k8s.io/ingress-nginx

Then create the Ingress using the Service details shown by kubectl get svc -n ing-internal

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ping
  namespace: ing-internal
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  ingressClassName: nginx-example
  rules:
  - http:
      paths:
      - path: /hello
        pathType: Prefix
        backend:
          service:
            name: hello
            port:
              number: 5678

Verification

Test the endpoints

candidate@node01:~$ kubectl get po,svc,ing -A -owide | egrep 'hello|ping'

ing-internal pod/hello-8544bccfcb-m2v49 1/1 Running 4 (7h34m ago) 151d 10.244.140.100 node02 <none> <none>

ing-internal service/hello ClusterIP 10.107.160.105 <none> 5678/TCP 151d app=hello

ing-internal ingress.networking.k8s.io/ping nginx-example * 10.98.168.226 80 58m

### Call the pod IP

candidate@node01:~$ curl 10.244.140.100

Hello World ^_^

### Call the Service IP

candidate@node01:~$ curl 10.107.160.105:5678

Hello World ^_^

### Call the Ingress IP

candidate@node01:~$ curl 10.98.168.226/hello

Hello World ^_^

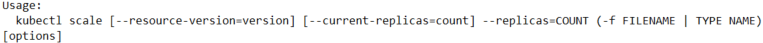

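6. Scale a Deployment

Exam question

Approach

Run kubectl scale deploy -h to see the help, pay attention to the command syntax in the Usage section, and assemble the command to match the requirements

Run kubectl scale deploy presentation --replicas=4

Verification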

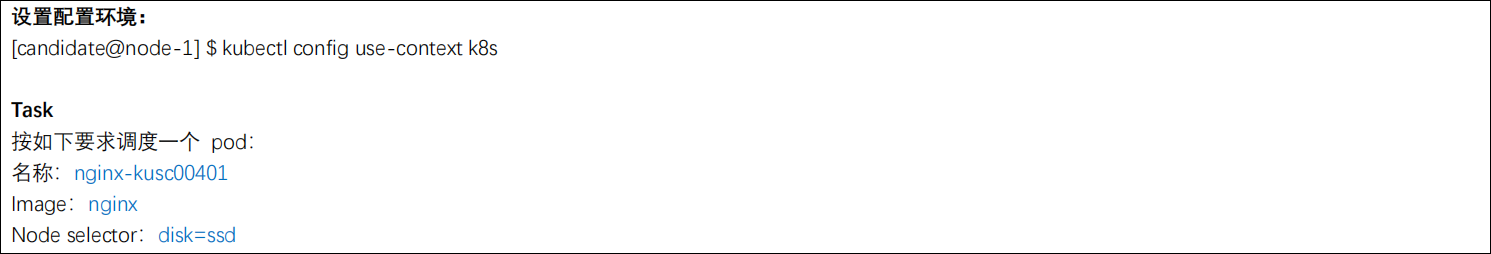

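7. Schedule a pod onto a specific node

Exam question

Approach

Find the YAML template in the official docs under Kubernetes Documentation > Tasks > Configure Pods and Containers > Assign Pods to Nodes

Modify the YAML to match the requirements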

apiVersion: v1
kind: Pod
metadata:
  name: nginx-kusc00401
spec:
  containers:
  - name: nginx
    image: nginx
    imagePullPolicy: IfNotPresent
  nodeSelector:
    disk: ssd

Verification

Run the following command to find the node that carries the label

kubectl get node --show-labels | grep disk=ssd

Check that the pod is running on that node

kubectl get po nginx-kusc00401 -owide

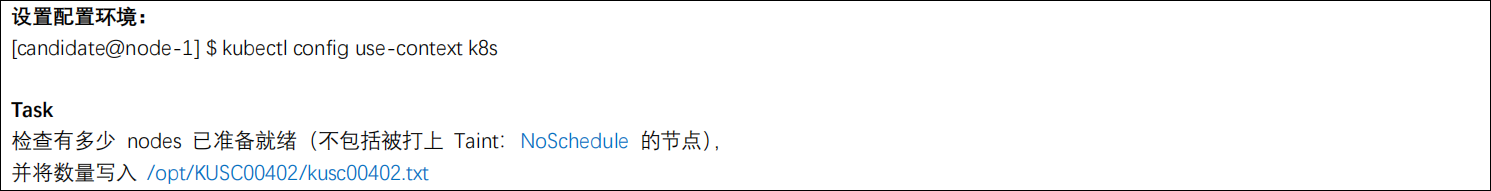

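8. Count the schedulable nodes

Exam question

Approach

First, run kubectl get node and check whether all nodes are in the Ready state

Then, run kubectl describe node | grep -i taints | grep -iv noschedule to filter out the nodes that carry a NoSchedule taint

Finally, run kubectl describe node | grep -i taints | grep -iv noschedule | wc -l > /opt/KUSC00402/kusc00402.txt to count the remaining nodes and write the number to the file

Verification

Run kubectl describe node | grep -i taints | grep -iv noschedule | wc -l and cat /opt/KUSC00402/kusc00402.txt, and check that the two results match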
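The counting step can be tried out on sample text before touching the answer file. The sketch below is a small wrapper around the same grep logic; it assumes each node contributes one line starting with Taints: in the kubectl describe node output, and count_schedulable is a hypothetical name.

```shell
# Count nodes whose "Taints:" line carries no NoSchedule taint.
# Input: output of `kubectl describe node` on stdin.
count_schedulable() {
  grep -i '^Taints:' | grep -vic 'noschedule'
}

# Example (not executed here):
#   kubectl describe node | count_schedulable > /opt/KUSC00402/kusc00402.txt
```

Note that this, like the original pipeline, looks only at taints; a node that is NotReady still has to be excluded by hand after checking kubectl get node.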

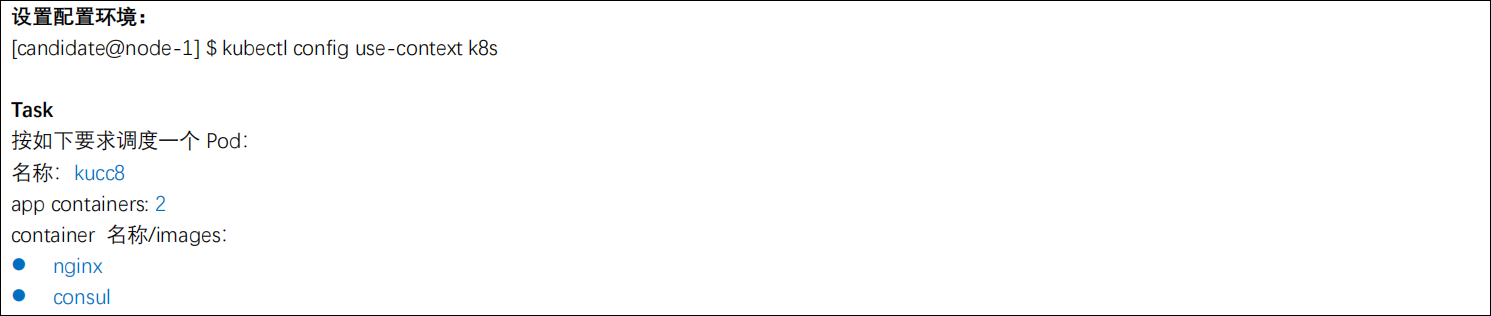

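9. Create a multi-container pod

Exam question

Approach

Find the YAML template in the official docs under Kubernetes Documentation > Concepts > Workloads > Pods

Modify the YAML to match the requirements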

apiVersion: v1
kind: Pod
metadata:
  name: kucc8
spec:
  containers:
  - name: nginx
    image: nginx
  - name: consul
    image: consul

Note: if pulling the images fails, add imagePullPolicy: IfNotPresent so that locally cached images are used. The YAML then looks like this

apiVersion: v1
kind: Pod
metadata:
  name: kucc8
spec:
  containers:
  - name: nginx
    image: nginx
    imagePullPolicy: IfNotPresent
  - name: consul
    image: consul
    imagePullPolicy: IfNotPresent

Verification

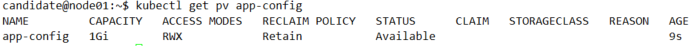

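10. Create a PV

Exam question

Approach

Find the YAML template in the official docs under Kubernetes Documentation > Tasks > Configure Pods and Containers > Configure a Pod to Use a PersistentVolume for Storage

Modify the YAML to match the requirements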

apiVersion: v1
kind: PersistentVolume
metadata:
  name: app-config
spec:
  capacity:
    storage: 1Gi
  accessModes:
  - ReadWriteMany
  hostPath:
    path: "/srv/app-config"

Verification

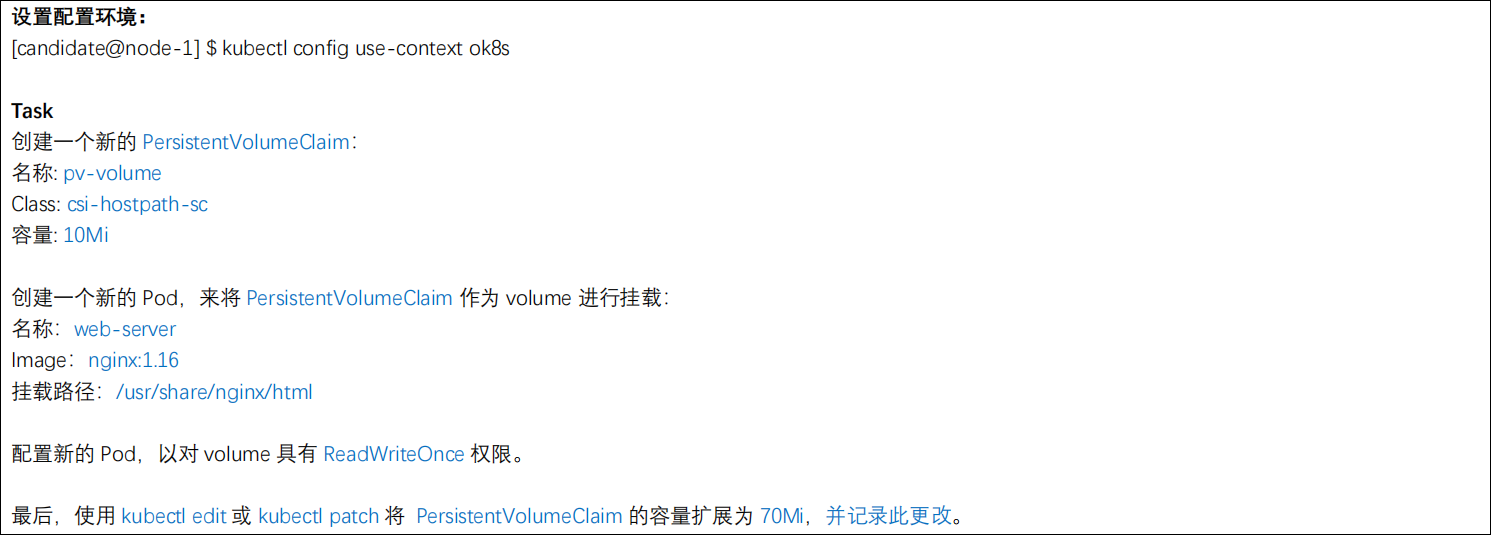

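11. Create a PVC

Exam question

Approach

Find the YAML template in the official docs under Kubernetes Documentation > Tasks > Configure Pods and Containers > Configure a Pod to Use a PersistentVolume for Storage

Modify the YAML to match the requirements and create the PVC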

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pv-volume
spec:
  storageClassName: csi-hostpath-sc
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 10Mi

Modify the YAML to match the requirements and create the pod

apiVersion: v1
kind: Pod
metadata:
  name: web-server
spec:
  volumes:
  - name: task-pv-storage
    persistentVolumeClaim:
      claimName: pv-volume
  containers:
  - name: task-pv-container
    image: nginx:1.16
    volumeMounts:
    - mountPath: "/usr/share/nginx/html"
      name: task-pv-storage

Finally, use kubectl edit pvc pv-volume or kubectl patch pvc pv-volume to change the PVC's storage request to 70Mi

Verification

Run kubectl describe pvc pv-volume and confirm that the PVC now shows 70Mi
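The notes mention kubectl patch without spelling out the patch body. A minimal sketch of what that body could look like is given below; the helper name pvc_resize_patch is made up, and the resize only takes effect if the StorageClass allows volume expansion.

```shell
# Print a patch body that sets the PVC storage request to the given size.
pvc_resize_patch() {
  # $1 = new size, e.g. 70Mi
  printf '{"spec":{"resources":{"requests":{"storage":"%s"}}}}\n' "$1"
}

# Example (not executed here; needs kubectl and cluster access):
#   kubectl patch pvc pv-volume -p "$(pvc_resize_patch 70Mi)"
```

Compared with kubectl edit, the patch form avoids opening an editor and is easy to repeat if the first attempt is rejected.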

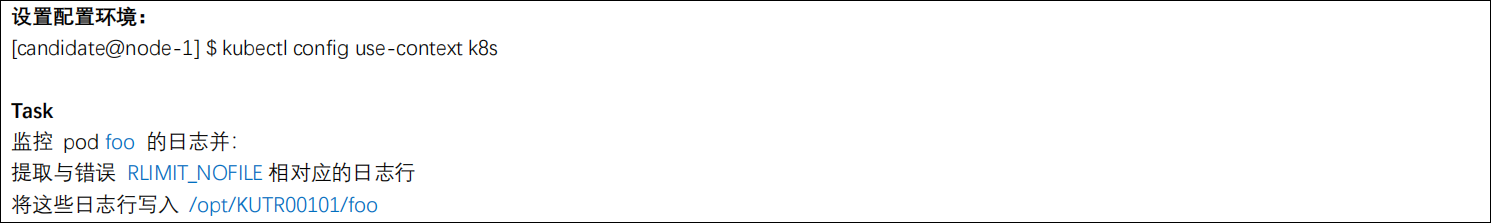

12. View pod logs

Exam question

Approach

Run kubectl logs foo | grep "RLIMIT_NOFILE" > /opt/KUTR00101/foo

Verification

Run cat /opt/KUTR00101/foo and check that it contains the matching log lines
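Error strings can contain characters that grep would treat as a pattern, so matching them literally is a little safer. The following sketch filters stdin for a fixed string; extract_log_lines is a hypothetical helper name.

```shell
# Keep only the lines that contain the given literal string.
extract_log_lines() {
  # $1 = error string; log text is read from stdin
  # -F matches the string literally, -- protects strings starting with "-"
  grep -F -- "$1"
}

# Example (not executed here):
#   kubectl logs foo | extract_log_lines "<error string from the task>" > /opt/KUTR00101/foo
```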

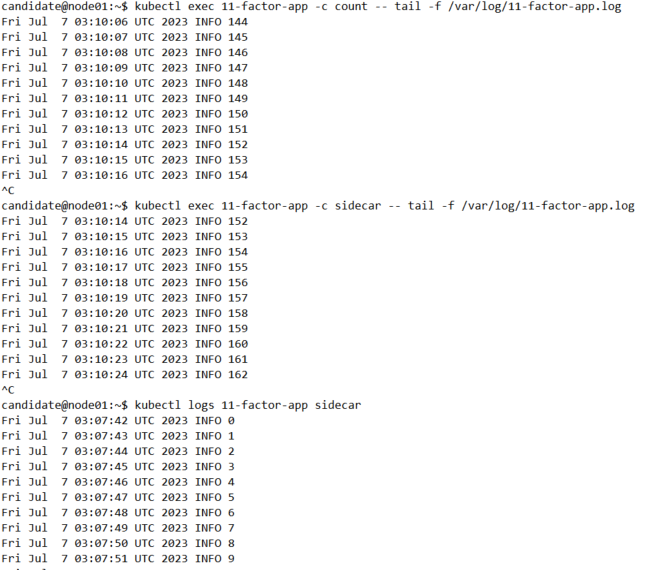

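13. Use a sidecar container to expose container logs

Exam question

Approach

Find the YAML template in the official docs under Kubernetes Documentation > Concepts > Cluster Administration > Logging Architecture

Because a sidecar container has to be added to an existing pod, export the YAML of the existing pod first

kubectl get po 11-factor-app -oyaml

Note: the exported YAML cannot be used as-is; parts of it (such as the status and server-generated metadata) have to be removed. The trimmed version looks like this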

apiVersion: v1
kind: Pod
metadata:
  annotations:
  name: 11-factor-app
  namespace: default
spec:
  containers:
  - args:
    - /bin/sh
    - -c
    - |
      i=0; while true; do
        echo "$(date) INFO $i" >> /var/log/11-factor-app.log;
        i=$((i+1));
        sleep 1;
      done
    image: busybox
    imagePullPolicy: IfNotPresent
    name: count
    terminationMessagePath: /dev/termination-log
    terminationMessagePolicy: File
    volumeMounts:
    - mountPath: /var/run/secrets/kubernetes.io/serviceaccount
      name: kube-api-access-dc9b5
      readOnly: true
  dnsPolicy: ClusterFirst
  enableServiceLinks: true
  nodeName: node02
  preemptionPolicy: PreemptLowerPriority
  priority: 0
  restartPolicy: Always
  schedulerName: default-scheduler
  terminationGracePeriodSeconds: 30
  tolerations:
  - effect: NoExecute
    key: node.kubernetes.io/not-ready
    operator: Exists
    tolerationSeconds: 300
  - effect: NoExecute
    key: node.kubernetes.io/unreachable
    operator: Exists
    tolerationSeconds: 300
  volumes:
  - name: kube-api-access-dc9b5
    projected:
      defaultMode: 420
      sources:
      - serviceAccountToken:
          expirationSeconds: 3607
          path: token
      - configMap:
          items:
          - key: ca.crt
            path: ca.crt
          name: kube-root-ca.crt
      - downwardAPI:
          items:
          - fieldRef:
              apiVersion: v1
              fieldPath: metadata.namespace
            path: namespace

Then modify this YAML according to the requirements and the template from the docs

apiVersion: v1
kind: Pod
metadata:
  annotations:
  name: 11-factor-app
  namespace: default
spec:
  containers:
  - args:
    - /bin/sh
    - -c
    - |
      i=0; while true; do
        echo "$(date) INFO $i" >> /var/log/11-factor-app.log;
        i=$((i+1));
        sleep 1;
      done
    image: busybox
    imagePullPolicy: IfNotPresent
    name: count
    terminationMessagePath: /dev/termination-log
    terminationMessagePolicy: File
    volumeMounts:
    - mountPath: /var/run/secrets/kubernetes.io/serviceaccount
      name: kube-api-access-dc9b5
      readOnly: true
    - name: varlog
      mountPath: /var/log
  - name: sidecar
    image: busybox
    args: [/bin/sh,-c,'tail -n+1 -f /var/log/11-factor-app.log']
    volumeMounts:
    - name: varlog
      mountPath: /var/log
  dnsPolicy: ClusterFirst
  enableServiceLinks: true
  nodeName: node02
  preemptionPolicy: PreemptLowerPriority
  priority: 0
  restartPolicy: Always
  schedulerName: default-scheduler
  terminationGracePeriodSeconds: 30
  tolerations:
  - effect: NoExecute
    key: node.kubernetes.io/not-ready
    operator: Exists
    tolerationSeconds: 300
  - effect: NoExecute
    key: node.kubernetes.io/unreachable
    operator: Exists
    tolerationSeconds: 300
  volumes:
  - name: kube-api-access-dc9b5
    projected:
      defaultMode: 420
      sources:
      - serviceAccountToken:
          expirationSeconds: 3607
          path: token
      - configMap:
          items:
          - key: ca.crt
            path: ca.crt
          name: kube-root-ca.crt
      - downwardAPI:
          items:
          - fieldRef:
              apiVersion: v1
              fieldPath: metadata.namespace
            path: namespace
  - name: varlog
    emptyDir: {}

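Note 1: the varlog volume must be mounted in both containers, otherwise the sidecar cannot see the log file

Note 2: the sidecar's command must be written as comma-separated list items. The question gives it with spaces, which is a trap; change it to [/bin/sh,-c,'tail -n+1 -f /var/log/11-factor-app.log']

Before applying this YAML, run kubectl delete po 11-factor-app first; once the pod has been deleted, apply the YAML to create the new pod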

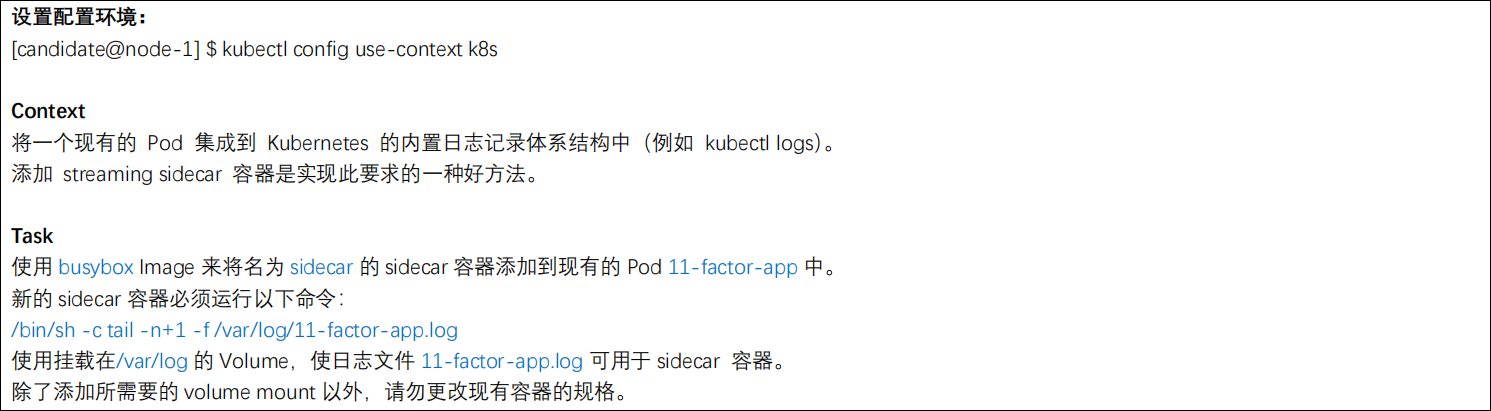

Verification

Run kubectl exec 11-factor-app -c count -- tail -f /var/log/11-factor-app.log and kubectl exec 11-factor-app -c sidecar -- tail -f /var/log/11-factor-app.log to verify that the volume is mounted in both containers

Then run kubectl logs -f 11-factor-app -c sidecar to verify that the sidecar prints the log

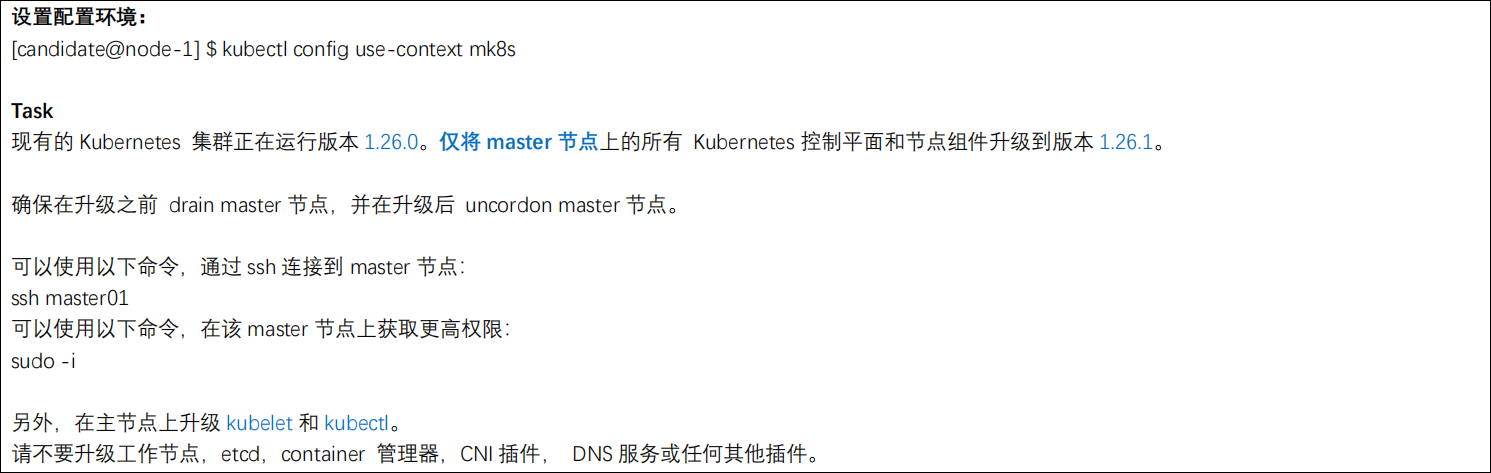

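14. Upgrade the cluster

Exam question

Approach

Follow the upgrade guide in the official docs under Kubernetes Documentation > Tasks > Administer a Cluster > Administration with kubeadm > Upgrading kubeadm clusters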

# Evict all pods except those managed by a DaemonSet
kubectl drain master01 --ignore-daemonsets

# Switch to the node being upgraded
ssh master01

# Become root
sudo -i

# Refresh the package index
apt update

# List the kubeadm versions that are available
apt-cache madison kubeadm

# Install the target kubeadm version
apt-mark unhold kubeadm && \
apt-get update && apt-get install -y kubeadm=1.26.1-00 && \
apt-mark hold kubeadm

# Check the kubeadm version
kubeadm version

# Upgrade the control plane with kubeadm
kubeadm upgrade apply v1.26.1

# Upgrade kubelet and kubectl
apt-mark unhold kubelet kubectl && \
apt-get update && apt-get install -y kubelet=1.26.1-00 kubectl=1.26.1-00 && \
apt-mark hold kubelet kubectl

# Restart kubelet
sudo systemctl daemon-reload
sudo systemctl restart kubelet

# Mark the node schedulable again
kubectl uncordon master01

Verification

Run kubectl get node and check the VERSION column of the upgraded node

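15. Back up and restore etcd

Exam question

Approach

Follow the guide in the official docs under Kubernetes Documentation > Tasks > Administer a Cluster > Operating etcd clusters for Kubernetes

Backup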

sudo ETCDCTL_API=3 etcdctl --endpoints=https://11.0.1.111:2379 \
--cacert=/opt/KUIN00601/ca.crt --cert=/opt/KUIN00601/etcd-client.crt --key=/opt/KUIN00601/etcd-client.key \
snapshot save /var/lib/backup/etcd-snapshot.db

Note: if the question gives no hint, the trusted-ca-file, cert-file and key-file values can be read from the description of the etcd Pod

Verify the backup

sudo ETCDCTL_API=3 etcdctl --write-out=table snapshot status /var/lib/backup/etcd-snapshot.db

Restore

sudo ETCDCTL_API=3 etcdctl --endpoints=https://11.0.1.111:2379 snapshot restore /data/backup/etcd-snapshot-previous.db

Note 1: the restore does not need the certificate files

Note 2: if the command fails, delete or move etcd's /data/ directory first, then rerun it
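The restore command above names no target directory, so etcdctl falls back to its default location. Where the restored data has to land in a specific place, the --data-dir flag of etcdctl snapshot restore can be used. The sketch below adds a guard for the situation described in Note 2, refusing to run when the target already exists (a stricter check than etcdctl's own). The function name restore_snapshot and the directory /var/lib/etcd-restore are assumptions, not taken from the exam task.

```shell
# Restore a snapshot into a fresh data directory.
restore_snapshot() {
  # $1 = snapshot file, $2 = target data directory (must not exist yet)
  if [ -e "$2" ]; then
    echo "data dir $2 already exists; move it away first" >&2
    return 1
  fi
  ETCDCTL_API=3 etcdctl snapshot restore "$1" --data-dir="$2"
}

# Example (not executed here; the target path is hypothetical):
#   sudo -i
#   restore_snapshot /data/backup/etcd-snapshot-previous.db /var/lib/etcd-restore
```

After a restore into a new directory, etcd still has to be pointed at that directory before the cluster sees the restored data; how that is done depends on how etcd is run in the exam environment.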

Verification

This step can be skipped

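16. Troubleshoot a failed node

Exam question

Approach

Run kubectl get node to confirm that node02 is in the NotReady state

Run kubectl describe node node02; the reported condition is "Kubelet stopped posting node status", meaning the kubelet no longer reports the node's status

Run ssh node02 to switch to the failed node

Run sudo -i to become root

Run systemctl status kubelet to inspect the kubelet; the service turns out to be stopped

Run systemctl start kubelet to start the kubelet

Run systemctl status kubelet again to confirm that it started successfully

Run exit to leave the root shell

Run exit to leave the failed node

Verification

Run kubectl get node and check that the node is back in the Ready state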
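On a cluster with many nodes it helps to filter the node list instead of reading it by eye. The sketch below prints every node whose STATUS does not start with Ready; it assumes the two leading columns NAME and STATUS of kubectl get node --no-headers, and not_ready_nodes is a hypothetical helper.

```shell
# List nodes whose STATUS column does not start with "Ready".
# Input: output of `kubectl get node --no-headers` on stdin.
not_ready_nodes() {
  awk '$2 !~ /^Ready/ { print $1 }'
}

# Example (not executed here):
#   kubectl get node --no-headers | not_ready_nodes
# On the failed node, if the fix should also survive a reboot (an assumption;
# the steps above only start the service), systemd can start and enable it
# in one step:
#   systemctl enable --now kubelet
```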

17. Node maintenance

Exam question

Approach

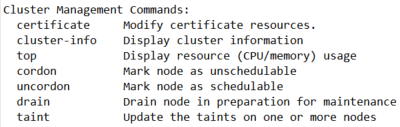

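First run kubectl -h to see the help and look at the Cluster Management Commands section

kubectl drain is the command to use; run kubectl drain -h for more detailed help

Run the following command to mark the node unschedulable and evict all pods that are not managed by a DaemonSet. If drain aborts because of pods that use emptyDir volumes or pods without a controller, rerun it with --delete-emptydir-data and/or --force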

kubectl drain node02 --ignore-daemonsets

Verification

Run kubectl get po -A -owide | grep node02 and check that only DaemonSet pods remain