Assignment ①

Task

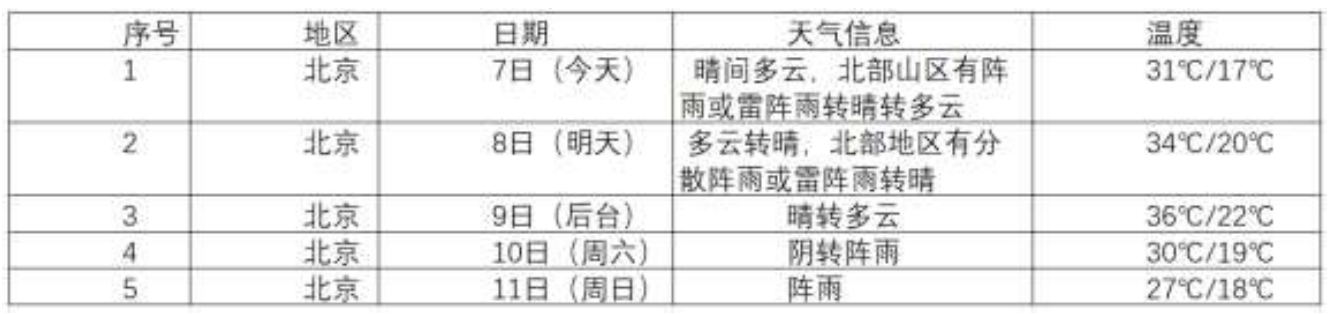

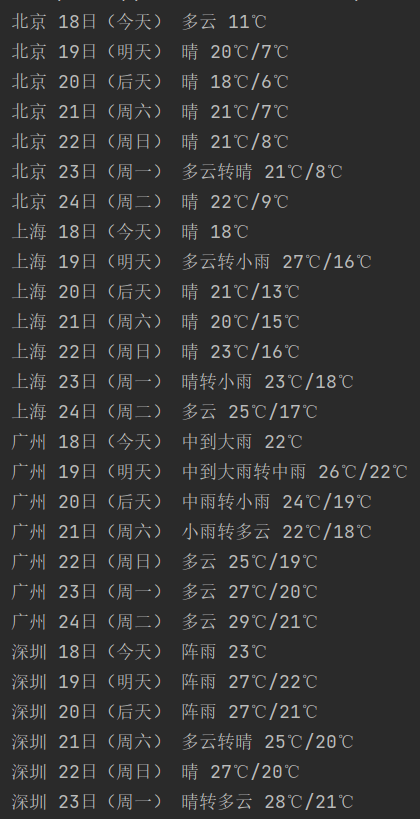

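- Requirement: scrape the 7-day weather forecast for a given set of cities from the China Weather Network (http://www.weather.com.cn) and save it to a database.

- Output:

- Gitee folder link: https://gitee.com/codeshu111/project/blob/master/作业2/爬取天气预报.py

Implementation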

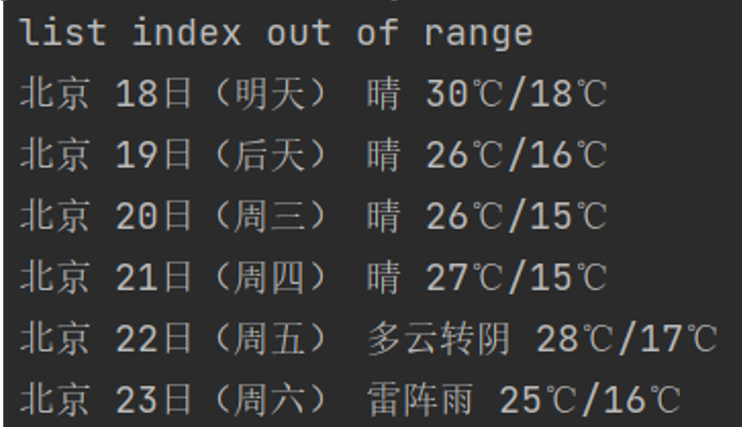

Running the original code as-is causes the following problem:

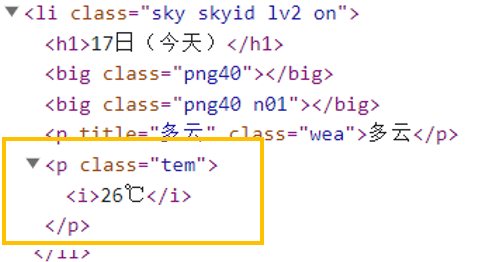

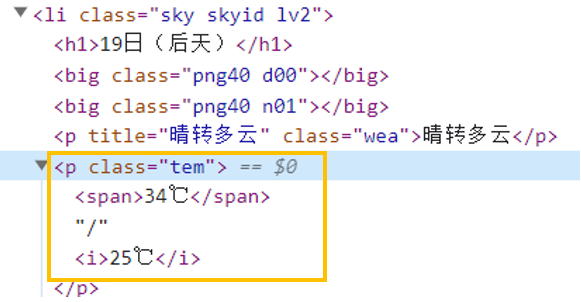

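Inspecting the page's HTML shows that the block for the current day has changed slightly: today's forecast shows the real-time temperature, with no temperature range.

"Today" shows only one temperature:

"Tomorrow" shows a temperature range:

I made a small change to the original code so that today's forecast is extracted and printed separately.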
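The difference between the two cases (today has a single `<i>` temperature, later days have a `<span>` plus an `<i>`) can be tried out without network access. The sketch below is a minimal stand-in, assuming a simplified, well-formed snippet modelled on the elements the CSS selectors in the code target; it uses the standard library's `xml.etree.ElementTree` instead of BeautifulSoup, and it decides by whether a `<span>` is present rather than by position (the code below treats `lis[0]` as today). The snippet and `parse_forecast` are hypothetical.

```python
import xml.etree.ElementTree as ET

# Hypothetical, simplified markup modelled on the forecast list structure.
# Real pages are not well-formed XML and need an HTML parser such as BeautifulSoup.
SNIPPET = """
<ul class="t clearfix">
  <li><h1>7th (today)</h1><p class="wea">Sunny</p><p class="tem"><i>25℃</i></p></li>
  <li><h1>8th (tomorrow)</h1><p class="wea">Cloudy</p><p class="tem"><span>28</span>/<i>18℃</i></p></li>
</ul>
"""


def parse_forecast(markup):
    """Return (date, weather, temp) tuples, one per <li> in the list."""
    root = ET.fromstring(markup.strip())
    rows = []
    for li in root.findall("li"):
        date = li.findtext("h1")
        weather = li.findtext("p[@class='wea']")
        tem = li.find("p[@class='tem']")
        span_text = tem.findtext("span")  # None when there is no range
        i_text = tem.findtext("i")
        if span_text is None:
            temp = i_text  # single real-time temperature (today)
        else:
            temp = span_text + "/" + i_text  # range, joined the same way as the code below
        rows.append((date, weather, temp))
    return rows


for row in parse_forecast(SNIPPET):
    print(row)
```

Checking for the `<span>` instead of the position means the same loop would still work if the page stopped singling out the first day.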

- Full code:

```python
from bs4 import BeautifulSoup
from bs4 import UnicodeDammit
import urllib.request
import sqlite3


# Database
class WeatherDB:
    # Connect to the database
    def openDB(self):
        self.con = sqlite3.connect("weathers.db")
        self.cursor = self.con.cursor()
        try:  # create the table
            self.cursor.execute("create table weathers (wCity varchar(16),wDate varchar(16),wWeather varchar(64),wTemp varchar(32),constraint pk_weather primary key (wCity,wDate))")
        except sqlite3.OperationalError:
            # The table already exists: clear the old rows instead
            self.cursor.execute("delete from weathers")

    # Close the database
    def closeDB(self):
        self.con.commit()
        self.con.close()

    # Insert a row
    def insert(self, city, date, weather, temp):
        try:
            self.cursor.execute("insert into weathers (wCity,wDate,wWeather,wTemp) values (?,?,?,?)",
                                (city, date, weather, temp))
        except Exception as err:
            print(err)

    # Display the stored rows
    def show(self):
        self.cursor.execute("select * from weathers")
        rows = self.cursor.fetchall()
        print("%-16s%-16s%-32s%-16s" % ("city", "date", "weather", "temp"))
        for row in rows:
            print("%-16s%-16s%-32s%-16s" % (row[0], row[1], row[2], row[3]))


class WeatherForecast:
    def __init__(self):
        self.headers = {
            "User-Agent": "Mozilla/5.0 (Windows; U; Windows NT 6.0 x64; en-US; rv:1.9pre) Gecko/2008072421 Minefield/3.0.2pre"}
        self.cityCode = {"北京": "101010100", "上海": "101020100", "广州": "101280101", "深圳": "101280601"}

    # Scraping
    def forecastCity(self, city):
        if city not in self.cityCode:
            print(city + " code cannot be found")
            return
        url = "http://www.weather.com.cn/weather/" + self.cityCode[city] + ".shtml"
        try:
            req = urllib.request.Request(url, headers=self.headers)
            data = urllib.request.urlopen(req)
            data = data.read()
            dammit = UnicodeDammit(data, ["utf-8", "gbk"])
            data = dammit.unicode_markup
            soup = BeautifulSoup(data, "lxml")
            # Select elements with CSS selectors
            lis = soup.select("ul[class='t clearfix'] li")
            try:  # today
                date = lis[0].select('h1')[0].text
                weather = lis[0].select('p[class="wea"]')[0].text
                temp = lis[0].select('p[class="tem"] i')[0].text  # only one temperature
                print(city, date, weather, temp)
                self.db.insert(city, date, weather, temp)
            except Exception as err:
                print(err)
            for li in lis[1:]:
                try:  # the other days
                    date = li.select('h1')[0].text  # date
                    weather = li.select('p[class="wea"]')[0].text  # weather
                    # temperature range: <span> text + "/" + <i> text
                    temp = li.select('p[class="tem"] span')[0].text + "/" + li.select('p[class="tem"] i')[0].text
                    print(city, date, weather, temp)
                    self.db.insert(city, date, weather, temp)  # insert the row
                except Exception as err:
                    print(err)
        except Exception as err:
            print(err)

    def process(self, cities):
        self.db = WeatherDB()
        self.db.openDB()
        for city in cities:
            self.forecastCity(city)
        # self.db.show()
        self.db.closeDB()


ws = WeatherForecast()
ws.process(["北京", "上海", "广州", "深圳"])
print("completed")
```

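Partial output (screenshot):

Reflections

The site's structure is fairly simple, with no real pitfalls, so the code was easy to understand. By reproducing it I learned how to save scraped data to a database.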
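For reference, the save-to-database step can be written a little more compactly. The sketch below assumes an in-memory database and hypothetical sample rows; it uses `CREATE TABLE IF NOT EXISTS` instead of catching the error from a second `create table`, `executemany` for a batch insert, and the composite primary key `(wCity, wDate)` from the code above together with `INSERT OR REPLACE`, so a rerun overwrites rows instead of raising duplicate-key errors. Note that, unlike the `delete from weathers` in the code, this keeps rows for dates that are no longer scraped.

```python
import sqlite3

# Hypothetical sample rows in the same (city, date, weather, temp) shape as above
ROWS = [
    ("Beijing", "7th (today)", "Sunny", "25℃"),
    ("Beijing", "8th (tomorrow)", "Cloudy", "28/18℃"),
]


def save_weather(con, rows):
    """Create the table if needed, then insert or overwrite rows keyed by (city, date)."""
    con.execute(
        "CREATE TABLE IF NOT EXISTS weathers ("
        "wCity varchar(16), wDate varchar(16), wWeather varchar(64), wTemp varchar(32), "
        "CONSTRAINT pk_weather PRIMARY KEY (wCity, wDate))"
    )
    # INSERT OR REPLACE overwrites a row with the same primary key instead of failing
    con.executemany(
        "INSERT OR REPLACE INTO weathers (wCity, wDate, wWeather, wTemp) VALUES (?, ?, ?, ?)",
        rows,
    )
    con.commit()


con = sqlite3.connect(":memory:")
save_weather(con, ROWS)
save_weather(con, ROWS)  # running twice leaves no duplicates
print(con.execute("SELECT COUNT(*) FROM weathers").fetchone()[0])  # 2
```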

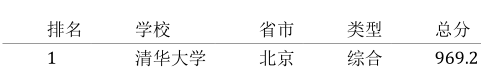

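Assignment ②

Task

- Requirement: use the requests and BeautifulSoup libraries to scrape stock information from a target site and store it in a database.

- Candidate sites: East Money (https://www.eastmoney.com/) and Sina Finance stocks (http://finance.sina.com.cn/stock/).

- Tip: in Chrome, open the F12 developer tools to capture network traffic, find the URL used to load the stock list, and analyze the values the API returns; adjust the API's request parameters as required. The URL shows that request parameters such as f1 and f2 return different values, so parameters can be dropped as needed.

- Output

- Gitee folder link: https://gitee.com/codeshu111/project/blob/master/作业2/爬东方财富网.py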
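The tip about changing request parameters can be made concrete by building the query string from a dict, so that the page number `pn`, the page size `pz`, and the `fields` list are easy to change. This is a sketch only: the parameter values are copied from the API URL used in the code below, the `cb` (JSONP callback) and `_` (timestamp) parameters are left out on the untested assumption that the API does not need them, the trimmed `fields` list is my guess based on the keys the code reads, and `build_url` is a hypothetical helper.

```python
from urllib.parse import urlencode

BASE = "http://38.push2.eastmoney.com/api/qt/clist/get"

# Parameter values copied from the captured API URL; "cb" and "_" are omitted (assumption).
PARAMS = {
    "pn": 1,   # page number
    "pz": 20,  # page size
    "po": 1,
    "np": 1,
    "ut": "bd1d9ddb04089700cf9c27f6f7426281",
    "fltt": 2,
    "invt": 2,
    "wbp2u": "|0|0|0|web",
    "fid": "f3",
    "fs": "m:0+t:6,m:0+t:80,m:1+t:2,m:1+t:23,m:0+t:81+s:2048",
    # Only the fields the table actually uses
    "fields": "f2,f3,f4,f5,f7,f12,f14,f15,f16,f17,f18",
}


def build_url(page, page_size=20, fields=None):
    """Hypothetical helper: return the list API URL for one page."""
    params = dict(PARAMS, pn=page, pz=page_size)
    if fields is not None:
        params["fields"] = ",".join(fields)
    # Keep ':', ',', '+' and '|' literal, as they appear in the captured URL
    return BASE + "?" + urlencode(params, safe=":,+|")


print(build_url(2))
```

Keeping `+` literal matters here: percent-encoding it as `%2B` would send a different `fs` value from the one the browser sent.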

Implementation

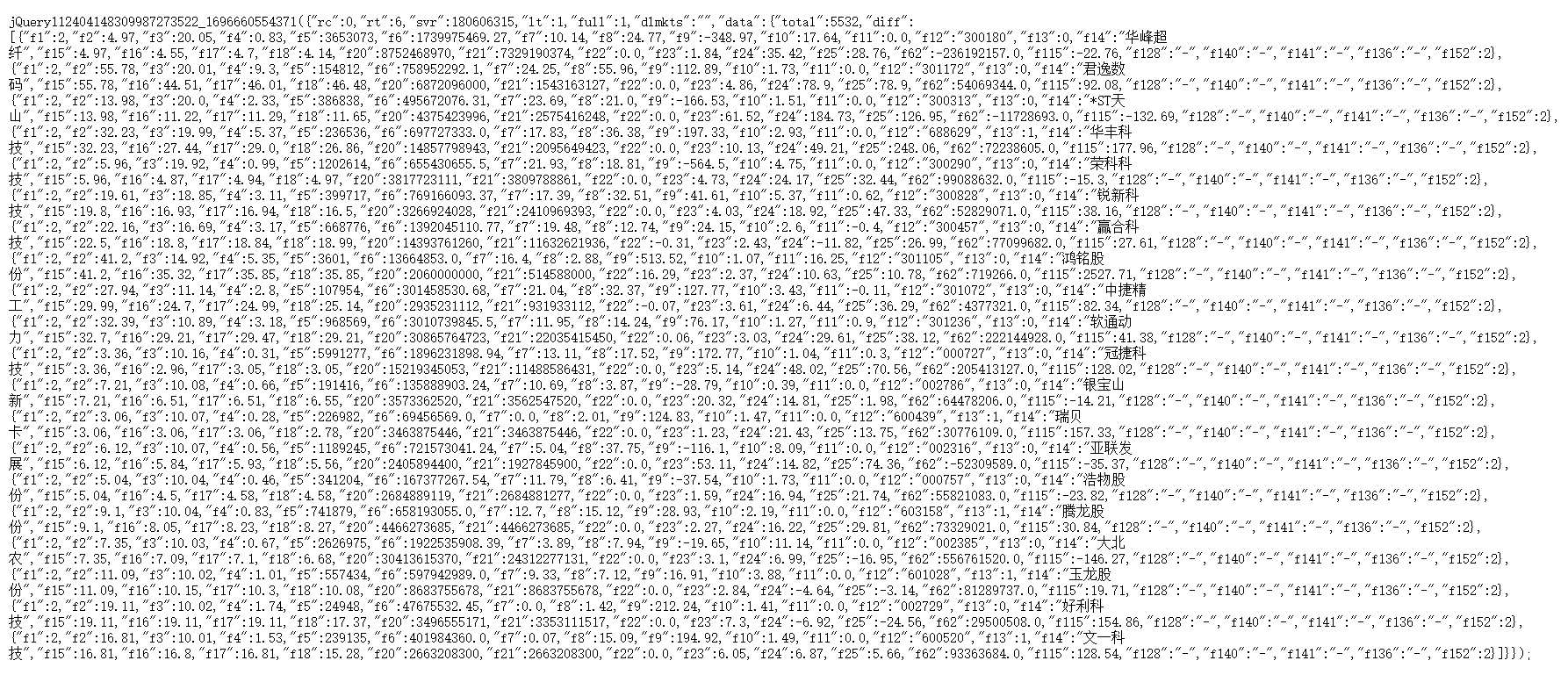

The page's JSON data is shown below. Comparing it with the values on the page shows what f1, f2, … each correspond to.

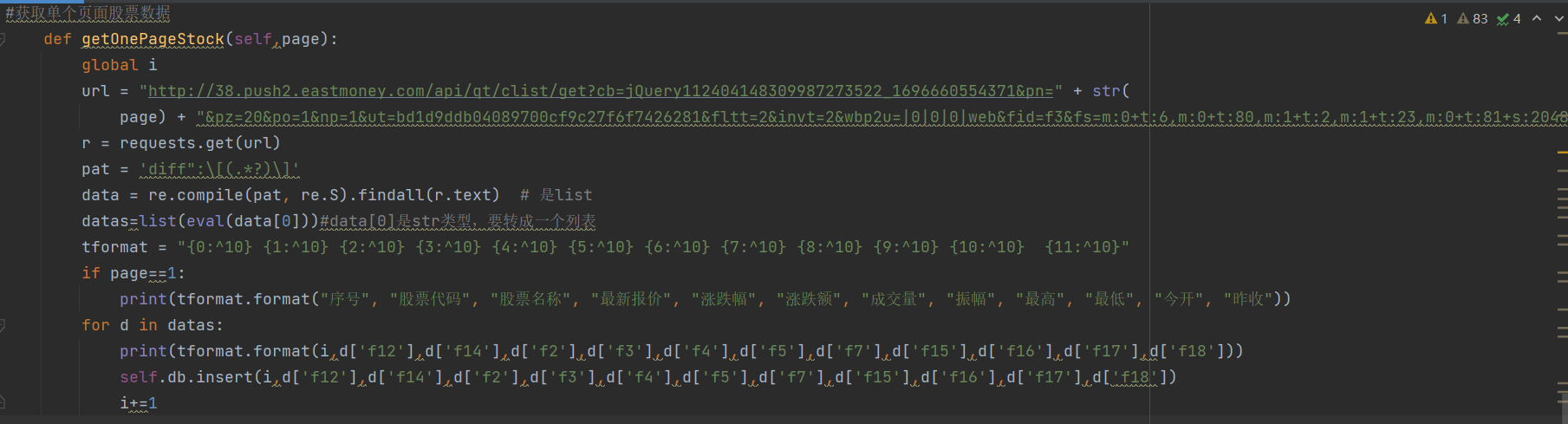

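Core code: first use a regular expression to extract the useful part. The returned text turns out to be a list of dictionaries, so the matched string is converted into a list, and each dictionary in it is then read using the keys needed.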
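A sketch of the same idea, assuming a made-up sample response, parses the matched text with `json.loads` instead of `eval`. `eval` executes whatever text arrives from the network and fails on JSON literals such as `null` or `true`; it also yields a single dict rather than a list when only one item is matched. Wrapping the match in `[...]` and parsing it as JSON always yields a list of dicts. The field mapping is taken from the column headers in the code below; `parse_diff` and the sample are hypothetical.

```python
import json
import re

# Hypothetical JSONP response: a callback wrapping JSON whose "diff" entry
# is a list of dicts keyed f2, f3, f12, f14, ...
SAMPLE = ('jQuery1124_1({"rc":0,"data":{"total":2,"diff":['
          '{"f2":10.5,"f3":2.1,"f12":"600000","f14":"Stock A"},'
          '{"f2":"-","f3":"-","f12":"000001","f14":"Stock B"}]}});')

# Field meanings as used in the table code
FIELDS = {"f12": "code", "f14": "name", "f2": "latest_price", "f3": "change_percent"}


def parse_diff(text):
    """Extract the 'diff' list from a JSONP body and parse it as JSON."""
    # Same non-greedy regex idea: grab what sits between "diff":[ and the first ]
    match = re.search(r'"diff":\[(.*?)\]', text, re.S)
    if match is None:
        return []
    # Re-wrap in [] so json.loads always returns a list, even for a single item
    items = json.loads("[" + match.group(1) + "]")
    return [{name: item.get(key) for key, name in FIELDS.items()} for item in items]


for row in parse_diff(SAMPLE):
    print(row)
```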

- Full code:

```python
'''
Requirement: use the requests and BeautifulSoup libraries to scrape stock
information from a target site and store it in a database.
Page: http://quote.eastmoney.com/center/gridlist.html#hs_a_board
API:  http://38.push2.eastmoney.com/api/qt/clist/get?cb=jQuery112404148309987273522_1696660554371&pn=1&pz=20&po=1&np=1&ut=bd1d9ddb04089700cf9c27f6f7426281&fltt=2&invt=2&wbp2u=|0|0|0|web&fid=f3&fs=m:0+t:6,m:0+t:80,m:1+t:2,m:1+t:23,m:0+t:81+s:2048&fields=f1,f2,f3,f4,f5,f6,f7,f8,f9,f10,f12,f13,f14,f15,f16,f17,f18,f20,f21,f23,f24,f25,f22,f11,f62,f128,f136,f115,f152&_=1696660554372
'''
import json
import re
import sqlite3

import requests


# Database operations
class StockDB:
    def openDB(self):
        self.con = sqlite3.connect("stocks.db")
        self.cursor = self.con.cursor()
        try:
            self.cursor.execute("create table stocks (ID int, stock_code varchar,stock_name varchar,latest_price varchar,change_percent varchar,change_amount varchar,volume varchar ,amplitude varchar,highest varchar,lowest varchar,open_price varchar,close_price varchar)")
        except sqlite3.OperationalError:
            # The table already exists: clear the old rows instead
            self.cursor.execute("delete from stocks")

    def closeDB(self):
        self.con.commit()
        self.con.close()

    def insert(self, ID, stock_code, stock_name, latest_price, change_percent, change_amount, volume, amplitude, highest, lowest, open_price, close_price):
        try:
            self.cursor.execute("insert into stocks (ID,stock_code,stock_name,latest_price,change_percent,change_amount,volume,amplitude,highest,lowest,open_price,close_price) values (?,?,?,?,?,?,?,?,?,?,?,?)",
                                (ID, stock_code, stock_name, latest_price, change_percent, change_amount, volume, amplitude, highest, lowest, open_price, close_price))
        except Exception as err:
            print(err)


class myStocks:
    # Fetch the stock data on one page
    def getOnePageStock(self, page):
        url = "http://38.push2.eastmoney.com/api/qt/clist/get?cb=jQuery112404148309987273522_1696660554371&pn=" + str(
            page) + "&pz=20&po=1&np=1&ut=bd1d9ddb04089700cf9c27f6f7426281&fltt=2&invt=2&wbp2u=|0|0|0|web&fid=f3&fs=m:0+t:6,m:0+t:80,m:1+t:2,m:1+t:23,m:0+t:81+s:2048&fields=f1,f2,f3,f4,f5,f6,f7,f8,f9,f10,f12,f13,f14,f15,f16,f17,f18,f20,f21,f23,f24,f25,f22,f11,f62,f128,f136,f115,f152&_=1696660554372"
        # Request the page with GET and extract the data
        r = requests.get(url, timeout=20)
        pat = r'diff":\[(.*?)\]'
        data = re.compile(pat, re.S).findall(r.text)  # a list of matched strings
        # data[0] is a str such as '{...},{...}': wrap it in [] and parse it as JSON
        # (safer than eval, and still a list when the page holds a single stock)
        datas = json.loads("[" + data[0] + "]")
        tformat = "{0:^10} {1:^10} {2:^10} {3:^10} {4:^10} {5:^10} {6:^10} {7:^10} {8:^10} {9:^10} {10:^10} {11:^10}"
        if page == 1:
            print(tformat.format("No.", "Code", "Name", "Latest", "Change %", "Change", "Volume", "Amplitude", "High", "Low", "Open", "Prev close"))
        for d in datas:
            print(tformat.format(self.i, d['f12'], d['f14'], d['f2'], d['f3'], d['f4'], d['f5'], d['f7'], d['f15'], d['f16'], d['f17'], d['f18']))
            self.db.insert(self.i, d['f12'], d['f14'], d['f2'], d['f3'], d['f4'], d['f5'], d['f7'], d['f15'], d['f16'], d['f17'], d['f18'])
            self.i += 1  # running row number across all pages

    def process(self, pages):  # number of pages to scrape
        self.i = 1  # row counter kept on the instance instead of a global
        self.db = StockDB()
        self.db.openDB()
        for page in range(1, pages + 1):
            self.getOnePageStock(page)
        self.db.closeDB()


sto = myStocks()
sto.process(10)  # scrape 10 pages
print("completed")
```

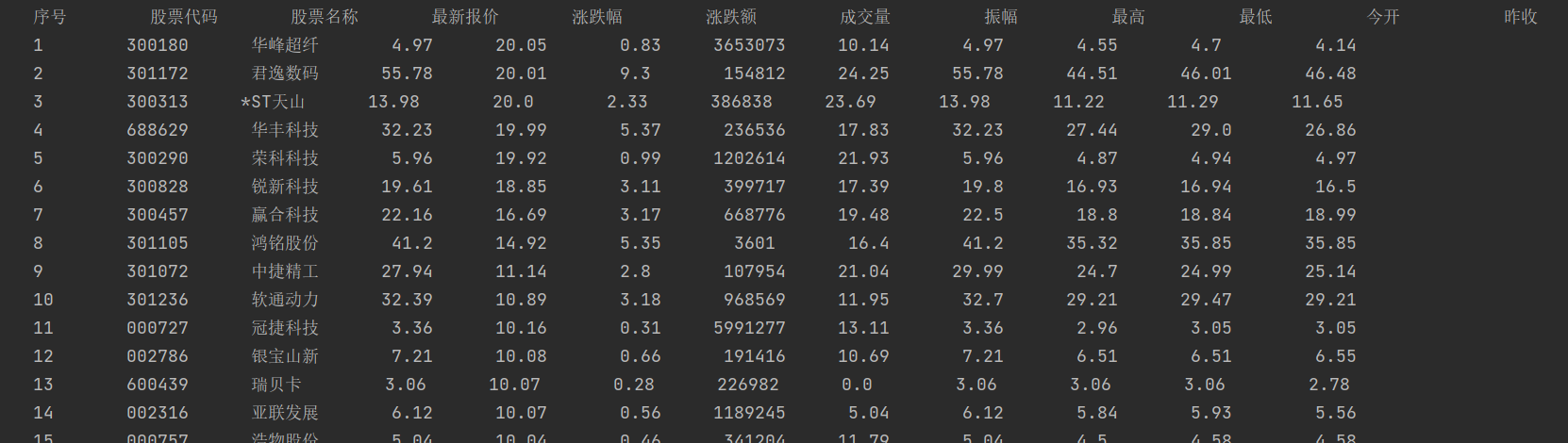

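- Partial output:

Reflections

- With JSON data, reading values by dictionary key makes it easy to get what you need. The tricky part is the regular expression, where you have to keep track of all the square brackets, curly braces and parentheses.

- The database code was adapted from the weather forecast code. Because the core logic was written as functions from the start, it was easy to adapt; the catch is that there are so many columns that it's easy to mistype a column name. Adapting show() for all of them felt like a hassle, so I kept printing the output the original way.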
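On the tedious `show()` point: a sketch of a generic version that reads the column names from `cursor.description` (part of the Python DB-API, which `sqlite3` implements), so the header never has to be typed out by hand. The `show` function and the demo table below are illustrative, not the code used in this post.

```python
import sqlite3


def show(cursor, table, width=12):
    """Print every row of `table`, taking the header from cursor.description."""
    # table is interpolated into the SQL, so only pass trusted, hard-coded names
    cursor.execute(f"SELECT * FROM {table}")
    columns = [col[0] for col in cursor.description]
    fmt = " ".join(f"{{:<{width}}}" for _ in columns)
    print(fmt.format(*columns))
    for row in cursor.fetchall():
        print(fmt.format(*("" if v is None else v for v in row)))
    return columns


# Demo on an in-memory table with a few of the stock columns (hypothetical data)
con = sqlite3.connect(":memory:")
cur = con.cursor()
cur.execute("create table stocks (ID int, stock_code varchar, stock_name varchar, latest_price varchar)")
cur.execute("insert into stocks values (?, ?, ?, ?)", (1, "600000", "Stock A", "10.5"))
show(cur, "stocks")
```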

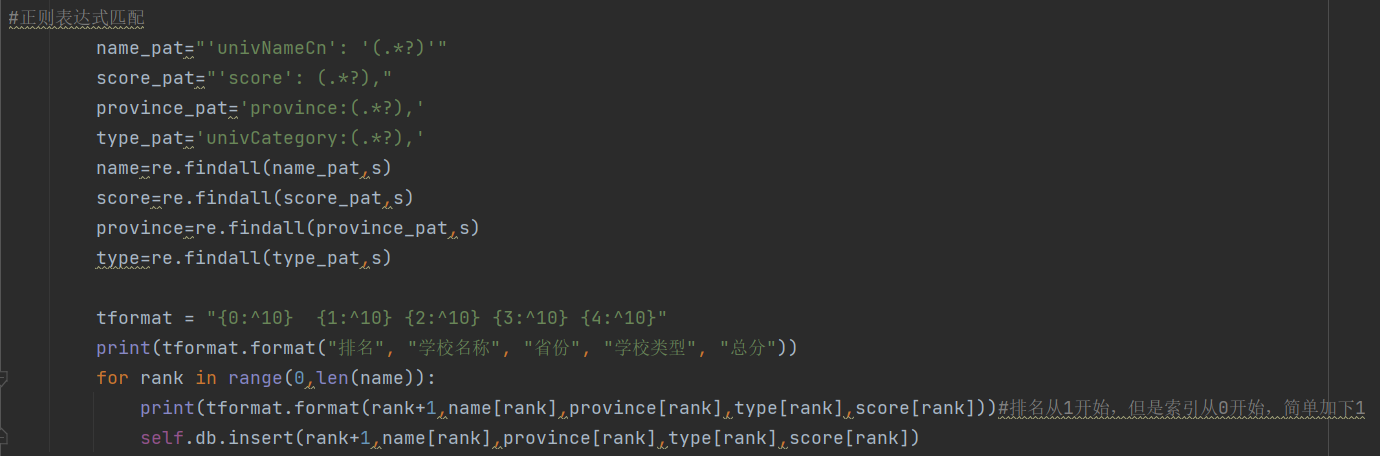

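Assignment ③

Task

- Requirement: scrape information on all institutions in the 2021 main ranking of Chinese universities (https://www.shanghairanking.cn/rankings/bcur/2021), store it in a database, and record the browser F12 debugging process as a GIF to include in the blog post.

- Tip: analyze the requests the site sends to find the API that provides the data.

- Output

- Gitee folder link: https://gitee.com/codeshu111/project/blob/master/作业2/大学排名2021.py

Implementation

GIF of the F12 packet capture (for some reason pressing F12 did nothing while recording, so I used right-click → Inspect):

When I opened the JSON data it all looked like gibberish. Using code shared by a more experienced classmate, I managed to download the data and convert it into Python code (that is how ChatGPT described it; in any case it was no longer gibberish).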
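Judging from the slicing in the code below, the payload is not plain JSON but a JavaScript call of the form `__NUXT_JSONP__("/rankings/bcur/2021", (function(a, b, ...){ return {...} }(...)));`, where repeated values are passed in as minified arguments, which is why it reads as gibberish. A minimal sketch of the prefix/suffix stripping done before the expression is handed to js2py is shown below, with format checks added. It assumes exactly that wrapper shape, which the site can change at any time; the sample payload and `strip_nuxt_jsonp` helper are hypothetical.

```python
def strip_nuxt_jsonp(text, route):
    """Return the JavaScript expression passed to __NUXT_JSONP__ for `route`."""
    prefix = '__NUXT_JSONP__("' + route + '", ('
    suffix = "));"
    text = text.strip()
    if not text.startswith(prefix) or not text.endswith(suffix):
        raise ValueError("unexpected payload format")
    # Same idea as html[len(prefix):-3], but it fails loudly if the format changed
    return text[len(prefix):-len(suffix)]


# Hypothetical, heavily shortened payload in the assumed shape
SAMPLE = ('__NUXT_JSONP__("/rankings/bcur/2021", (function(a, b){'
          'return {data: [{univNameCn: a, score: b}]}}("Univ A", 969.2)));')

print(strip_nuxt_jsonp(SAMPLE, "/rankings/bcur/2021"))
```

Raising an error when the prefix does not match makes a changed payload format show up immediately, instead of as a confusing failure later inside js2py.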

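- Core code: again, regex matching.

- Full code (it still ran when I was in the lab, but I kept putting off this post and now it no longer runs: the URL returns 404 Not Found. Capturing the traffic again shows that the payload.js URL has changed and its format is different too. I tried adjusting the code but kept getting errors, and I couldn't rework my classmate's code):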

```python
import re
import sqlite3
from contextlib import redirect_stdout
from io import StringIO

import js2py
import requests


# Database
class universityDB:
    def openDB(self):
        self.con = sqlite3.connect("university.db")
        self.cursor = self.con.cursor()
        try:
            self.cursor.execute("create table university (Ranking int, name varchar,province_name varchar,type varchar,score varchar)")
        except sqlite3.OperationalError:
            # The table already exists: clear the old rows instead
            self.cursor.execute("delete from university")

    def closeDB(self):
        self.con.commit()
        self.con.close()

    def insert(self, Ranking, name, province_name, univ_type, score):
        try:
            self.cursor.execute("insert into university (Ranking,name,province_name,type,score) values (?,?,?,?,?)",
                                (Ranking, name, province_name, univ_type, score))
        except Exception as err:
            print(err)


class getuniversity:
    def getranking(self):
        url = r'http://www.shanghairanking.cn/_nuxt/static/1695811954/rankings/bcur/2021/payload.js'
        r = requests.get(url, timeout=20)
        if r.status_code != 200:
            print("request failed:", r.status_code)
            return
        r.encoding = 'utf-8'
        html = r.text
        # Strip the __NUXT_JSONP__("...", ( prefix and the trailing "));",
        # leaving only the JavaScript function call that builds the data
        js_function = html[len('__NUXT_JSONP__("/rankings/bcur/2021", ('):-3]
        js_code = f"console.log({js_function})"
        # Translate the JavaScript into Python source, run it,
        # and capture what console.log prints
        pyf = js2py.translate_js(js_code)
        f = StringIO()
        with redirect_stdout(f):
            exec(pyf)
        s = f.getvalue()
        # The code above comes from a classmate: it fetches the JavaScript at the URL
        # and converts it into Python
        # print(s)

        # Regex matching
        name_pat = "'univNameCn': '(.*?)'"
        score_pat = "'score': (.*?),"
        province_pat = 'province:(.*?),'
        type_pat = 'univCategory:(.*?),'
        names = re.findall(name_pat, s)
        scores = re.findall(score_pat, s)
        provinces = re.findall(province_pat, s)
        types = re.findall(type_pat, s)
        tformat = "{0:^10} {1:^10} {2:^10} {3:^10} {4:^10}"
        print(tformat.format("Rank", "Name", "Province", "Type", "Score"))
        for rank in range(len(names)):
            # Ranks start at 1 but list indexes start at 0, so add 1
            print(tformat.format(rank + 1, names[rank], provinces[rank], types[rank], scores[rank]))
            self.db.insert(rank + 1, names[rank], provinces[rank], types[rank], scores[rank])

    def process(self):
        self.db = universityDB()
        self.db.openDB()
        self.getranking()
        self.db.closeDB()


uni = getuniversity()
uni.process()
print("completed")
```
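Because the ranking loop indexes four separate `findall` results by position, a missing or extra match in any one list silently shifts every row after it. Below is a sketch of a slightly safer pattern, assuming text shaped like the `'univNameCn': '...'` / `'score': ...` output that the name and score patterns above target. It uses `\s*` so stray spaces around the colon (the hard-to-see space problem) do not break the match, assumes scores are plain decimal numbers, and checks that the list lengths agree before pairing them with `zip`. The sample text and `extract_rows` are hypothetical, and only the name and score fields are shown.

```python
import re

# Hypothetical text in the shape the name/score patterns expect
SAMPLE = ("{'univNameCn': 'Univ A', 'score': 969.2, 'ranking': 1}, "
          "{'univNameCn':  'Univ B', 'score':855.3, 'ranking': 2}")

# \s* tolerates zero or more spaces after the colon
NAME_PAT = r"'univNameCn':\s*'(.*?)'"
SCORE_PAT = r"'score':\s*([\d.]+)"


def extract_rows(text):
    """Pair names and scores, refusing to continue if the counts disagree."""
    names = re.findall(NAME_PAT, text)
    scores = re.findall(SCORE_PAT, text)
    if len(names) != len(scores):
        raise ValueError(f"{len(names)} names but {len(scores)} scores")
    # enumerate(..., start=1) gives the 1-based rank directly
    return [(rank, name, float(score))
            for rank, (name, score) in enumerate(zip(names, scores), start=1)]


for row in extract_rows(SAMPLE):
    print(row)
```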

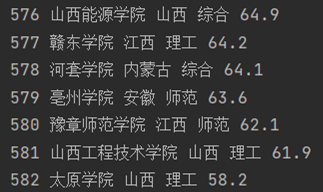

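- Partial output:

Reflections

- After two labs, I've become faster at finding where the JSON is.

- The database part was again adapted from the earlier code, with few changes overall.

- While writing the regular expressions there was a space I simply could not see, so it's better to copy and paste the original text and then edit it into a pattern. Otherwise it's easy to miss something, including the difference between Chinese and English colons and quotation marks.

- Next time I'll definitely write the blog post promptly... after a while the code stops working and has to be debugged all over again.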