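1. Getting to Know YAML

1.1 What Is YAML

Official site: https://yaml.org/

YAML is a language for writing configuration files. It is a superset of JSON, so any valid JSON document is also a valid YAML document. YAML supports integers, floating-point numbers, booleans, strings, arrays, and objects.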

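YAML syntax rules:

- Indentation expresses hierarchy. Tabs are not allowed, only spaces, and entries at the same level must be indented by the same number of spaces.

- Keys and values are case sensitive.

- Comments start with #.

- Arrays (lists) are written as a sequence of entries that each start with -.

- Objects (dictionaries) look much like JSON, but keys do not need double quotes.

- The : that marks an object entry and the - that marks an array entry must be followed by a space.

- Use "---" to separate multiple YAML documents in one file.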

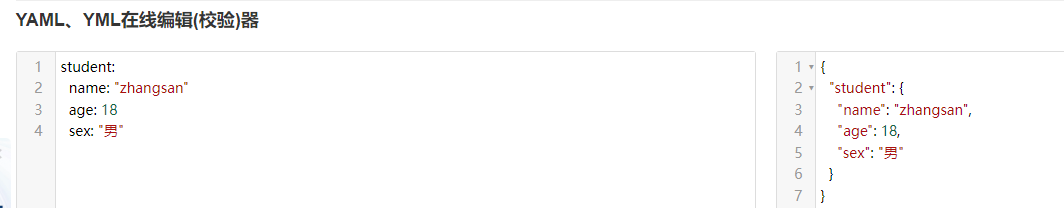
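Two of the rules above, spaces-only indentation and the space after a colon, are easy to check mechanically. The following is a minimal sketch in Python using only the standard library. The function name `lint_yaml` is hypothetical, and the colon check is a rough heuristic, not a real YAML parser.

```python
import re

# Heuristic: an optional "- " list marker, a simple key, then ":" immediately
# followed by a non-space character (for example "name:zhangsan").
_MISSING_SPACE = re.compile(r"^\s*(- )?[A-Za-z_][\w.-]*:\S")


def lint_yaml(text):
    """Return a list of (line_number, problem) tuples for two common mistakes."""
    problems = []
    for number, line in enumerate(text.splitlines(), 1):
        indent = line[: len(line) - len(line.lstrip())]
        if "\t" in indent:
            problems.append((number, "tab in indentation"))
        if _MISSING_SPACE.match(line):
            problems.append((number, "missing space after ':'"))
    return problems


if __name__ == "__main__":
    sample = "student:\n\tname: zhangsan\nage:18\n"
    for number, problem in lint_yaml(sample):
        print(f"line {number}: {problem}")
```

A full validator, such as the online one linked in the next section, remains the authoritative check; this sketch only catches the two mistakes named in its messages.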

1.2 YAML Examples

Online YAML/YML editor and validator: https://www.bejson.com/validators/yaml_editor/

YAML array

course:
  - Python
  - Java
  - GO

YAML dictionary (object)

student:
  name: "zhangsan"
  age: 18
  sex: "男"

A more complex example that combines arrays and objects

user:
  score:
    - StudentID: 1
      Python: 88
      Java: 88
      GO: 80
    - StudentID: 2
      Python: 99
      Java: 99
      GO: 99
  students:
    - name: "zhangsan"
      age: 18
      sex: "男"
      StudentID: 1
    - name: "wanghong"
      age: 18
      sex: "女"
      StudentID: 2

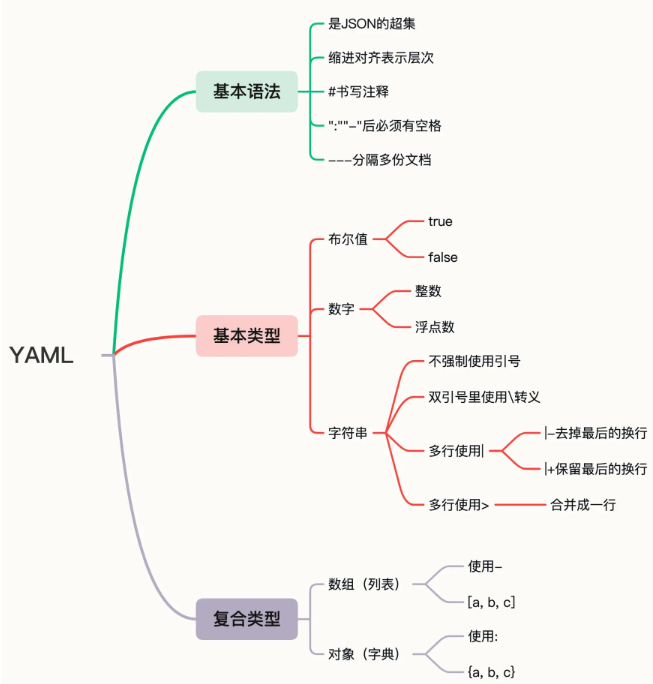
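Because every JSON document is also YAML, the combined example can be written as JSON-compatible data. The sketch below (Python, standard library only) shows how YAML objects map to dictionaries and YAML arrays map to lists. It assumes that both `score` and `students` are nested under `user`; the helper `scores_by_name` is hypothetical and only illustrates walking the structure.

```python
import json

# The combined example as plain Python data.
# Assumption: both lists are children of the top-level "user" key.
user_doc = {
    "user": {
        "score": [
            {"StudentID": 1, "Python": 88, "Java": 88, "GO": 80},
            {"StudentID": 2, "Python": 99, "Java": 99, "GO": 99},
        ],
        "students": [
            {"name": "zhangsan", "age": 18, "sex": "男", "StudentID": 1},
            {"name": "wanghong", "age": 18, "sex": "女", "StudentID": 2},
        ],
    }
}

# JSON text is valid YAML, so this string can be pasted into a YAML validator.
json_text = json.dumps(user_doc, ensure_ascii=False, indent=2)


def scores_by_name(doc):
    """Join the two lists on StudentID and return {name: Python score}."""
    by_id = {entry["StudentID"]: entry for entry in doc["user"]["score"]}
    return {
        student["name"]: by_id[student["StudentID"]]["Python"]
        for student in doc["user"]["students"]
    }


if __name__ == "__main__":
    print(json_text)
    print(scores_by_name(user_doc))
```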

YAML summary:

2. The Pod API Resource Object

In Kubernetes, YAML is used to declare API objects.

2.1 Viewing Resource Objects

kubectl api-resources


NAME SHORTNAMES APIVERSION NAMESPACED KIND

bindings v1 true Binding

componentstatuses cs v1 false ComponentStatus

configmaps cm v1 true ConfigMap

endpoints ep v1 true Endpoints

events ev v1 true Event

limitranges limits v1 true LimitRange

namespaces ns v1 false Namespace

nodes no v1 false Node

persistentvolumeclaims pvc v1 true PersistentVolumeClaim

persistentvolumes pv v1 false PersistentVolume

pods po v1 true Pod

podtemplates v1 true PodTemplate

replicationcontrollers rc v1 true ReplicationController

resourcequotas quota v1 true ResourceQuota

secrets v1 true Secret

serviceaccounts sa v1 true ServiceAccount

services svc v1 true Service

mutatingwebhookconfigurations admissionregistration.k8s.io/v1 false MutatingWebhookConfiguration

validatingwebhookconfigurations admissionregistration.k8s.io/v1 false ValidatingWebhookConfiguration

customresourcedefinitions crd,crds apiextensions.k8s.io/v1 false CustomResourceDefinition

apiservices apiregistration.k8s.io/v1 false APIService

controllerrevisions apps/v1 true ControllerRevision

daemonsets ds apps/v1 true DaemonSet

deployments deploy apps/v1 true Deployment

replicasets rs apps/v1 true ReplicaSet

statefulsets sts apps/v1 true StatefulSet

tokenreviews authentication.k8s.io/v1 false TokenReview

localsubjectaccessreviews authorization.k8s.io/v1 true LocalSubjectAccessReview

selfsubjectaccessreviews authorization.k8s.io/v1 false SelfSubjectAccessReview

selfsubjectrulesreviews authorization.k8s.io/v1 false SelfSubjectRulesReview

subjectaccessreviews authorization.k8s.io/v1 false SubjectAccessReview

horizontalpodautoscalers hpa autoscaling/v2 true HorizontalPodAutoscaler

cronjobs cj batch/v1 true CronJob

jobs batch/v1 true Job

certificatesigningrequests csr certificates.k8s.io/v1 false CertificateSigningRequest

leases coordination.k8s.io/v1 true Lease

bgpconfigurations crd.projectcalico.org/v1 false BGPConfiguration

bgppeers crd.projectcalico.org/v1 false BGPPeer

blockaffinities crd.projectcalico.org/v1 false BlockAffinity

caliconodestatuses crd.projectcalico.org/v1 false CalicoNodeStatus

clusterinformations crd.projectcalico.org/v1 false ClusterInformation

felixconfigurations crd.projectcalico.org/v1 false FelixConfiguration

globalnetworkpolicies crd.projectcalico.org/v1 false GlobalNetworkPolicy

globalnetworksets crd.projectcalico.org/v1 false GlobalNetworkSet

hostendpoints crd.projectcalico.org/v1 false HostEndpoint

ipamblocks crd.projectcalico.org/v1 false IPAMBlock

ipamconfigs crd.projectcalico.org/v1 false IPAMConfig

ipamhandles crd.projectcalico.org/v1 false IPAMHandle

ippools crd.projectcalico.org/v1 false IPPool

ipreservations crd.projectcalico.org/v1 false IPReservation

kubecontrollersconfigurations crd.projectcalico.org/v1 false KubeControllersConfiguration

networkpolicies crd.projectcalico.org/v1 true NetworkPolicy

networksets crd.projectcalico.org/v1 true NetworkSet

endpointslices discovery.k8s.io/v1 true EndpointSlice

events ev events.k8s.io/v1 true Event

flowschemas flowcontrol.apiserver.k8s.io/v1beta3 false FlowSchema

prioritylevelconfigurations flowcontrol.apiserver.k8s.io/v1beta3 false PriorityLevelConfiguration

ingressclasses networking.k8s.io/v1 false IngressClass

ingresses ing networking.k8s.io/v1 true Ingress

networkpolicies netpol networking.k8s.io/v1 true NetworkPolicy

runtimeclasses node.k8s.io/v1 false RuntimeClass

poddisruptionbudgets pdb policy/v1 true PodDisruptionBudget

clusterrolebindings rbac.authorization.k8s.io/v1 false ClusterRoleBinding

clusterroles rbac.authorization.k8s.io/v1 false ClusterRole

rolebindings rbac.authorization.k8s.io/v1 true RoleBinding

roles rbac.authorization.k8s.io/v1 true Role

priorityclasses pc scheduling.k8s.io/v1 false PriorityClass

csidrivers storage.k8s.io/v1 false CSIDriver

csinodes storage.k8s.io/v1 false CSINode

csistoragecapacities storage.k8s.io/v1 true CSIStorageCapacity

storageclasses sc storage.k8s.io/v1 false StorageClass

volumeattachments storage.k8s.io/v1 false VolumeAttachment

A Pod is the smallest and simplest resource object in Kubernetes.

Run a Pod

kubectl run pod-demo --image=busybox

Export a YAML file from an existing Pod

kubectl get pod pod-demo -o yaml > pod-demo.yaml

pod-demo.yaml

# cat pod-demo.yaml

apiVersion: v1
kind: Pod
metadata:
  annotations:
    cni.projectcalico.org/containerID: d8f1de8e76aafb5f15c4e7e8084c2edb0e23015b480a05ef83f75cafa23c0fb4
    cni.projectcalico.org/podIP: 10.244.167.133/32
    cni.projectcalico.org/podIPs: 10.244.167.133/32
  creationTimestamp: "2023-10-14T03:22:48Z"
  labels:
    run: pod-demo
  name: pod-demo
  namespace: default
  resourceVersion: "421532"
  uid: 47d446e6-9548-4b74-9682-01a448bde007
spec:
  containers:
  - image: busybox
    imagePullPolicy: Always
    name: pod-demo
    resources: {}
    terminationMessagePath: /dev/termination-log
    terminationMessagePolicy: File
    volumeMounts:
    - mountPath: /var/run/secrets/kubernetes.io/serviceaccount
      name: kube-api-access-wnj6t
      readOnly: true
  dnsPolicy: ClusterFirst
  enableServiceLinks: true
  nodeName: node-1-231
  preemptionPolicy: PreemptLowerPriority
  priority: 0
  restartPolicy: Always
  schedulerName: default-scheduler
  securityContext: {}
  serviceAccount: default
  serviceAccountName: default
  terminationGracePeriodSeconds: 30
  tolerations:
  - effect: NoExecute
    key: node.kubernetes.io/not-ready
    operator: Exists
    tolerationSeconds: 300
  - effect: NoExecute
    key: node.kubernetes.io/unreachable
    operator: Exists
    tolerationSeconds: 300
  volumes:
  - name: kube-api-access-wnj6t
    projected:
      defaultMode: 420
      sources:
      - serviceAccountToken:
          expirationSeconds: 3607
          path: token
      - configMap:
          items:
          - key: ca.crt
            path: ca.crt
          name: kube-root-ca.crt
      - downwardAPI:
          items:
          - fieldRef:
              apiVersion: v1
              fieldPath: metadata.namespace
            path: namespace
status:
  conditions:
  - lastProbeTime: null
    lastTransitionTime: "2023-10-14T03:22:48Z"
    status: "True"
    type: Initialized
  - lastProbeTime: null
    lastTransitionTime: "2023-10-14T03:22:59Z"
    message: 'containers with unready status: [pod-demo]'
    reason: ContainersNotReady
    status: "False"
    type: Ready
  - lastProbeTime: null
    lastTransitionTime: "2023-10-14T03:22:59Z"
    message: 'containers with unready status: [pod-demo]'
    reason: ContainersNotReady
    status: "False"
    type: ContainersReady
  - lastProbeTime: null
    lastTransitionTime: "2023-10-14T03:22:48Z"
    status: "True"
    type: PodScheduled
  containerStatuses:
  - containerID: containerd://d113a348c62ae5c8772ce3d8d7d7f44fe1ffb66945f6a073b273174b952635b3
    image: docker.io/library/busybox:latest
    imageID: docker.io/library/busybox@sha256:3fbc632167424a6d997e74f52b878d7cc478225cffac6bc977eedfe51c7f4e79
    lastState:
      terminated:
        containerID: containerd://d113a348c62ae5c8772ce3d8d7d7f44fe1ffb66945f6a073b273174b952635b3
        exitCode: 0
        finishedAt: "2023-10-14T03:23:45Z"
        reason: Completed
        startedAt: "2023-10-14T03:23:45Z"
    name: pod-demo
    ready: false
    restartCount: 3
    started: false
    state:
      waiting:
        message: back-off 40s restarting failed container=pod-demo pod=pod-demo_default(47d446e6-9548-4b74-9682-01a448bde007)
        reason: CrashLoopBackOff
  hostIP: 192.168.1.231
  phase: Running
  podIP: 10.244.167.133
  podIPs:
  - ip: 10.244.167.133
  qosClass: BestEffort
  startTime: "2023-10-14T03:22:48Z"

2.2 Pod YAML Example:

- apiVersion

- kind

- metadata

- spec
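The four top-level fields listed above are what every Pod manifest carries. As a minimal sketch, the Python below builds such a manifest as a dictionary and reports which of the four fields are missing. The helpers `make_pod` and `missing_fields` are hypothetical names for illustration, not part of any Kubernetes client library.

```python
import json

REQUIRED_TOP_LEVEL = ("apiVersion", "kind", "metadata", "spec")


def make_pod(name, image, namespace="default", labels=None):
    """Build a minimal Pod manifest as a plain dict (illustrative helper)."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "namespace": namespace, "labels": labels or {}},
        "spec": {"containers": [{"name": name, "image": image}]},
    }


def missing_fields(manifest):
    """Return the required top-level fields that the manifest lacks."""
    return [key for key in REQUIRED_TOP_LEVEL if key not in manifest]


if __name__ == "__main__":
    pod = make_pod("ngx-pod", "nginx:1.23.2", namespace="zhan", labels={"env": "dev"})
    print(missing_fields(pod))
    # JSON is valid YAML, so the dumped text can be saved to a file and applied
    # with kubectl apply -f in the same way as a YAML manifest.
    print(json.dumps(pod, indent=2))
```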

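vi ngx-pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: ngx-pod
  namespace: zhan
  labels:  ## labels matter a lot: any number of key-value pairs can be attached to describe the Pod in more detail
    env: dev
spec:  ## defines the rest of the Pod, such as containers, volumes, and storage
  containers:  ## container definitions
  - image: nginx:1.23.2
    imagePullPolicy: IfNotPresent  ## image pull policy, one of Always/Never/IfNotPresent. The default is IfNotPresent unless the tag is latest or omitted (then it is Always). IfNotPresent pulls only when the image is not already on the node, which saves network traffic.
    name: ngx
    env:  ## environment variables, similar to the ENV instruction in a Dockerfile
    - name: os
      value: "Rocky Linux"
    ports:
    - containerPort: 80

Create the namespace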

# kubectl create ns zhan

namespace/zhan created

# kubectl get ns

NAME STATUS AGE

default Active 16d

kube-node-lease Active 16d

kube-public Active 16d

kube-system Active 16d

kubernetes-dashboard Active 36h

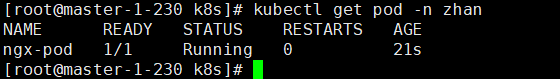

zhan Active 26s使用YAML创建Pod

# kubectl apply -f ngx-pod.yaml

pod/ngx-pod created

# kubectl get pod -n zhan

NAME READY STATUS RESTARTS AGE

ngx-pod 1/1 Running 0 74s

Delete the Pod

# kubectl delete -f ngx-pod.yaml

pod "ngx-pod" deleted

# kubectl get pod -n zhan

No resources found in zhan namespace.

3. Pod Internals and Lifecycle

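3.1 How a Pod Works

A Pod is the smallest unit for deploying and running an application or service in a Kubernetes cluster.

A Pod can run multiple containers, which share the Pod's network and storage as well as the CPU and memory available to the Pod.

Every Pod has a special pause container, also called the root container. Its main job is to provide basic facilities to the other containers in the Pod, such as shared network and PID namespaces, and to anchor the lifecycle of the Pod's other containers.

- Network namespace sharing: the pause container creates a network namespace for the whole Pod, and every other container in the Pod joins it. All containers in the Pod therefore share one IP address and one port space, which allows tight communication between them.

- PID namespace sharing: when process namespace sharing is enabled for the Pod (spec.shareProcessNamespace: true), the pause container acts as the parent of the other containers and they all share one PID namespace. Containers in the Pod can then discover and signal each other's processes by PID, and the Pod has a single, unified lifecycle.

- Lifecycle management: the pause container is started first and becomes the root container for the Pod's other containers. When the pause container terminates, all other containers are terminated as well, which keeps the lifecycle of the whole Pod consistent.

- Keeping the Pod alive: the pause container keeps running even if other containers in the Pod stop temporarily or crash, so the Pod itself stays active. This helps Kubernetes monitor and manage Pod state more accurately.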

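3.2 Pod Lifecycle

The Pod lifecycle consists of the following phases:

- Pending: the Pod has been created but is not yet running on a node. It may still be waiting to be scheduled, or scheduling may be blocked by some constraint (such as insufficient resources). Time spent pulling images also counts as Pending.

- Running: the Pod has been scheduled to a node and all of its containers have been created. At least one container is running, or is starting or restarting.

- Succeeded: all containers in the Pod have terminated successfully and will not be restarted.

- Failed: all containers in the Pod have terminated, and at least one of them terminated in failure (non-zero exit code, or killed by the system).

- Unknown: Kubernetes cannot determine the state of the Pod, usually because communication with the node hosting the Pod has failed.

Beyond these basic phases, there are more detailed statuses that describe what the Pod's containers are doing at different points in the lifecycle:

- ContainerCreating: the container is being created but has not started yet.

- Terminating: the container is shutting down but has not finished.

- Terminated: the container has terminated.

- Waiting: the container is waiting, for example for another container to start or for resources to become available.

- Completed: some Pods are one-off and do not need to keep running; once their work finishes they show this status.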

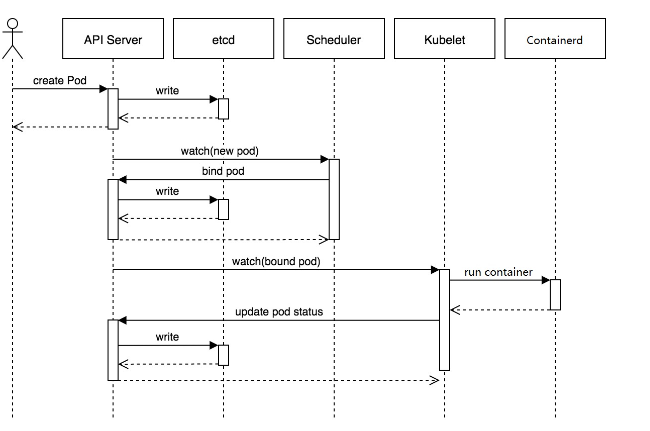
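As a rough illustration of how the phases relate to container states, the Python sketch below derives a phase from a simplified description of a Pod. It is a toy model under stated assumptions, not the kubelet's actual logic: the function `pod_phase` and the dictionary keys `state`, `exit_code`, and `restart_count` are hypothetical, and the Unknown phase (lost contact with the node) is left out.

```python
def pod_phase(scheduled, containers, restart_policy="Always"):
    """Derive a Pod phase from simplified container states.

    containers: list of dicts with "state" in {"waiting", "running", "terminated"}
    and optional "exit_code" and "restart_count" keys.
    """
    if not scheduled or not containers:
        return "Pending"

    all_terminated = all(c["state"] == "terminated" for c in containers)
    all_ok = all(c.get("exit_code", 0) == 0 for c in containers)
    if all_terminated:
        # Containers that will never be restarted decide the final phase.
        if all_ok and restart_policy in ("Never", "OnFailure"):
            return "Succeeded"
        if not all_ok and restart_policy == "Never":
            return "Failed"

    # A container that has run at least once keeps the Pod in Running,
    # even while it waits to be restarted (for example in CrashLoopBackOff).
    started_once = any(
        c["state"] in ("running", "terminated") or c.get("restart_count", 0) > 0
        for c in containers
    )
    return "Running" if started_once else "Pending"


if __name__ == "__main__":
    crash_looping = [{"state": "waiting", "restart_count": 3}]
    print(pod_phase(True, crash_looping))
```

The crash-looping case mirrors the pod-demo output earlier in this document, where the container is waiting in CrashLoopBackOff while the Pod phase is still reported as Running.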

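3.3 Pod Creation Flow

- The user submits a Pod object to the API server through kubectl or another API client.

- The API server tries to store the Pod object in etcd. Once the write completes, the API server returns a confirmation to the client.

- The API server begins to reflect the state change in etcd.

- All Kubernetes components use the watch mechanism to track changes on the API server.

- kube-scheduler sees through its watcher that the API server has a new Pod object that is not yet bound to any node.

- kube-scheduler picks a worker node for the Pod and sends the result to the API server.

- The API server writes the scheduling result to etcd and begins to reflect it for this Pod object.

- The kubelet on the target worker node calls containerd on that node to start the containers, and reports the resulting status back to the API server.

- The API server stores the Pod status in etcd.

- After etcd confirms the write, the API server sends an acknowledgment to the kubelet concerned.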
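The watch-driven hand-off described in the steps above can be sketched with a toy in-memory model. Everything here is an assumption made for illustration: `ApiServer` is a stand-in class, not the real Kubernetes API, the callbacks run synchronously whereas real watches are asynchronous streams, and the node name `node-1` is made up.

```python
class ApiServer:
    """Toy stand-in for the API server plus etcd."""

    def __init__(self):
        self.store = {}     # plays the role of etcd
        self.watchers = []  # callbacks registered by components
        self.log = []       # who wrote what, in order

    def watch(self, callback):
        self.watchers.append(callback)

    def write(self, who, pod):
        # Persist first, then notify every watcher of the change.
        self.store[pod["name"]] = dict(pod)
        self.log.append(f"{who}: node={pod.get('nodeName')} phase={pod.get('phase')}")
        for callback in list(self.watchers):
            callback(self, dict(pod))


def scheduler(api, pod):
    # React only to Pods that are not bound to a node yet.
    if pod.get("nodeName") is None:
        pod["nodeName"] = "node-1"
        api.write("scheduler", pod)


def kubelet(api, pod):
    # React only to Pods bound to this node that have not been started.
    if pod.get("nodeName") == "node-1" and pod.get("phase") == "Pending":
        pod["phase"] = "Running"
        api.write("kubelet", pod)


api = ApiServer()
api.watch(scheduler)
api.watch(kubelet)
api.write("kubectl", {"name": "ngx-pod", "nodeName": None, "phase": "Pending"})

if __name__ == "__main__":
    print("\n".join(api.log))
```

The point of the design is that no component calls another directly: each one only watches the API server and writes its result back, which is what keeps the control plane loosely coupled.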

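3.4 Pod Deletion Flow

- The user requests deletion of the Pod.

- The API server marks the Pod as Terminating.

- (In parallel with step 2) The kubelet sees that the Pod object has changed to Terminating and starts the Pod shutdown procedure.

- (In parallel with step 2) The Pod is removed from the Endpoints of its Services.

- If the Pod defines a preStop hook, the hook runs synchronously once the Pod is marked Terminating, and the grace period starts counting.

- The container processes in the Pod receive the TERM signal.

- When the grace period ends, any process that is still running receives SIGKILL.

- The kubelet asks the API server to set the grace period of this Pod object to 0, which completes the deletion.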
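The timing of the shutdown steps above can be sketched as a small timeline calculation. This is a simplified model under assumptions: the function `termination_timeline` and its parameters are hypothetical, times are in seconds from the moment the Pod is marked Terminating, and details of the real kubelet (such as any extra time granted when a preStop hook overruns) are ignored.

```python
def termination_timeline(grace_seconds=30, prestop_seconds=0, exit_after_term=None):
    """Return a list of (time, event) tuples for a graceful shutdown.

    exit_after_term: seconds the process needs to exit after SIGTERM,
    or None if it never exits on its own.
    """
    events = [(0, "Pod marked Terminating, grace period starts")]

    # The preStop hook runs inside the grace period and can use all of it.
    if prestop_seconds >= grace_seconds:
        events.append((grace_seconds, "SIGKILL sent (preStop used up the grace period)"))
        return events

    if prestop_seconds:
        events.append((prestop_seconds, "preStop hook finished"))
    events.append((prestop_seconds, "SIGTERM sent"))

    exits_in_time = (
        exit_after_term is not None
        and prestop_seconds + exit_after_term <= grace_seconds
    )
    if exits_in_time:
        events.append((prestop_seconds + exit_after_term, "process exited on its own"))
    else:
        events.append((grace_seconds, "SIGKILL sent"))
    return events


if __name__ == "__main__":
    for when, what in termination_timeline(30, prestop_seconds=5, exit_after_term=10):
        print(f"t={when:>2}s  {what}")
```

The default of 30 seconds matches the terminationGracePeriodSeconds: 30 value shown in the exported pod-demo.yaml.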

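4. Pod Resource Limits

4.1 Resource Quota

A resource quota (ResourceQuota, or quota for short) is a policy that caps the resources a namespace may consume.

Kubernetes is a multi-tenant system. When several users or teams share one cluster, the system administrator uses quotas to define how resources are allocated and to keep one tenant (namespace) from crowding out the others.

A quota applies to a namespace. By default a namespace has no ResourceQuota; one has to be created explicitly. A namespace usually needs only one ResourceQuota object, although Kubernetes allows more than one.

Resource types that can be limited:

Compute resources: limits.cpu, requests.cpu, limits.memory, requests.memory

Storage resources, including the total amount of storage and the total for a given storage class

requests.storage: total storage requested, for example 500Gi

persistentvolumeclaims: number of PVCs

Object counts, that is, the number of objects that may be created:

pods, replicationcontrollers, configmaps, secrets, persistentvolumeclaims, services, services.loadbalancers, services.nodeports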

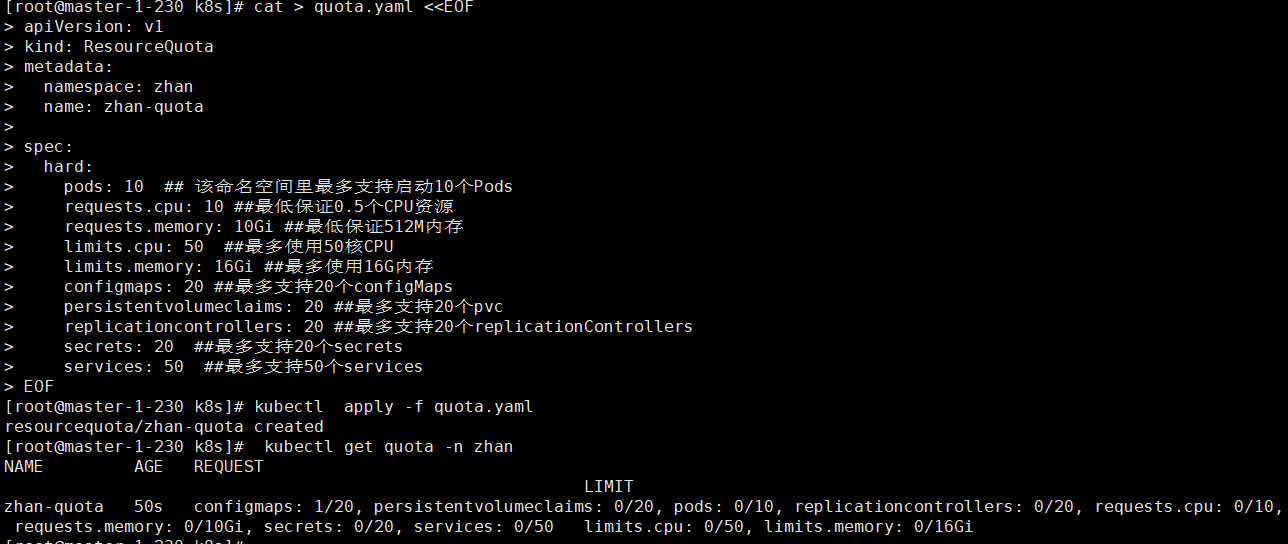

4.1.1 Resource Quota Example:

cat > quota.yaml <<EOF
apiVersion: v1
kind: ResourceQuota
metadata:
  namespace: zhan
  name: zhan-quota
spec:
  hard:
    pods: 5  ## at most 5 Pods may exist in this namespace
    requests.cpu: 10  ## CPU requests of all Pods may total at most 10 cores
    requests.memory: 10Gi  ## memory requests of all Pods may total at most 10Gi
    limits.cpu: 50  ## CPU limits of all Pods may total at most 50 cores
    limits.memory: 16Gi  ## memory limits of all Pods may total at most 16Gi
    configmaps: 20  ## at most 20 ConfigMaps
    persistentvolumeclaims: 20  ## at most 20 PVCs
    replicationcontrollers: 20  ## at most 20 ReplicationControllers
    secrets: 20  ## at most 20 Secrets
    services: 50  ## at most 50 Services
EOF

Apply it

# kubectl apply -f quota.yaml

resourcequota/zhan-quota created

View it

# kubectl get quota -n zhan

NAME AGE REQUEST LIMIT

zhan-quota 14s configmaps: 1/20, persistentvolumeclaims: 0/20, pods: 0/5, replicationcontrollers: 0/20, requests.cpu: 0/10, requests.memory: 0/10Gi, secrets: 0/20, services: 0/50 limits.cpu: 0/50, limits.memory: 0/16Gi

4.1.2 Test:

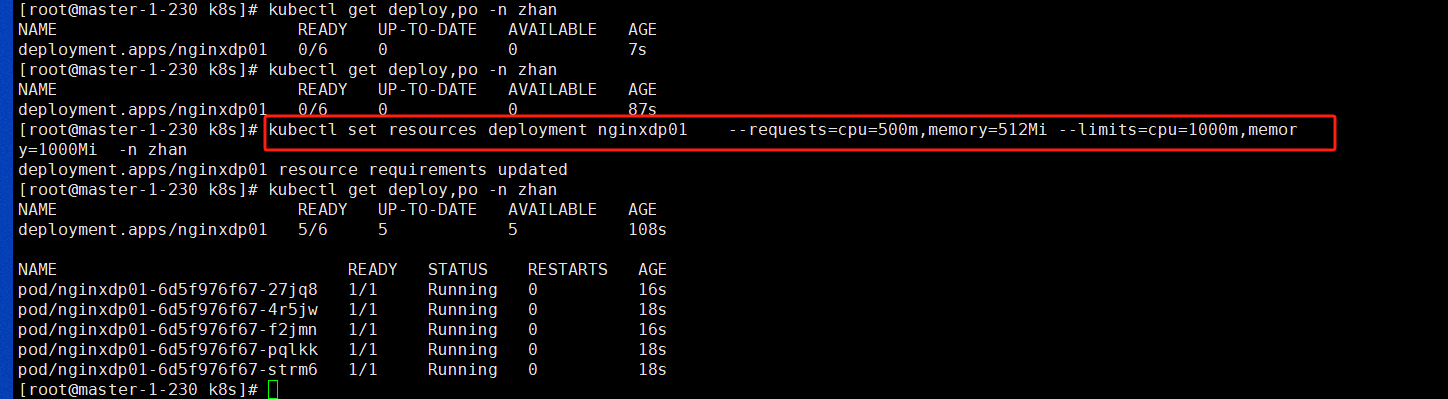

Create a deployment from the command line with 6 Pod replicas

# kubectl create deployment nginxdp01 --image=nginx:1.25.2 -n zhan --replicas=6

deployment.apps/nginxdp01 created

Add CPU and memory requests and limits

# kubectl set resources deployment nginxdp01 --requests=cpu=500m,memory=512Mi --limits=cpu=1000m,memory=1000Mi -n zhan

deployment.apps/nginxdp01 resource requirements updated

Describe the deployment to see the error (the quota allows only 5 Pods, so the sixth replica cannot be created):


# kubectl describe deploy nginxdp01 -n zhan

Name: nginxdp01

Namespace: zhan

CreationTimestamp: Sat, 14 Oct 2023 17:19:04 +0800

Labels: app=nginxdp01

Annotations: deployment.kubernetes.io/revision: 2

Selector: app=nginxdp01

Replicas: 6 desired | 5 updated | 5 total | 5 available | 1 unavailable

StrategyType: RollingUpdate

MinReadySeconds: 0

RollingUpdateStrategy: 25% max unavailable, 25% max surge

Pod Template:

Labels: app=nginxdp01

Containers:

nginx:

Image: nginx:1.25.2

Port: <none>

Host Port: <none>

Limits:

cpu: 1

memory: 1000Mi

Requests:

cpu: 500m

memory: 512Mi

Environment: <none>

Mounts: <none>

Volumes: <none>

Conditions:

Type Status Reason

---- ------ ------

ReplicaFailure True FailedCreate

Available True MinimumReplicasAvailable

Progressing True ReplicaSetUpdated

OldReplicaSets: nginxdp01-5f75c7dcdb (0/0 replicas created)

NewReplicaSet: nginxdp01-6d5f976f67 (5/6 replicas created)

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal ScalingReplicaSet 4m59s deployment-controller Scaled up replica set nginxdp01-5f75c7dcdb to 6

Normal ScalingReplicaSet 3m29s deployment-controller Scaled up replica set nginxdp01-6d5f976f67 to 2

Normal ScalingReplicaSet 3m29s deployment-controller Scaled down replica set nginxdp01-5f75c7dcdb to 5 from 6

Normal ScalingReplicaSet 3m29s deployment-controller Scaled up replica set nginxdp01-6d5f976f67 to 3 from 2

Normal ScalingReplicaSet 3m27s deployment-controller Scaled down replica set nginxdp01-5f75c7dcdb to 4 from 5

Normal ScalingReplicaSet 3m27s deployment-controller Scaled up replica set nginxdp01-6d5f976f67 to 4 from 3

Normal ScalingReplicaSet 3m27s deployment-controller Scaled down replica set nginxdp01-5f75c7dcdb to 3 from 4

Normal ScalingReplicaSet 3m27s deployment-controller Scaled up replica set nginxdp01-6d5f976f67 to 5 from 4

Normal ScalingReplicaSet 3m27s deployment-controller Scaled down replica set nginxdp01-5f75c7dcdb to 2 from 3

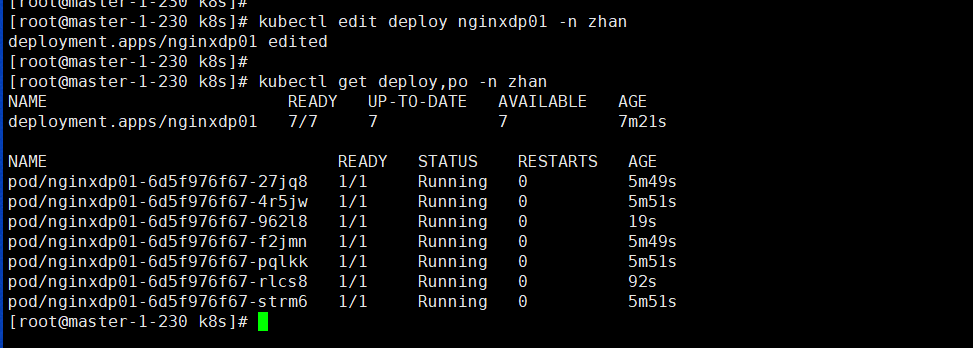

Normal ScalingReplicaSet 3m26s (x3 over 3m27s) deployment-controller (combined from similar events): Scaled down replica set nginxdp01-5f75c7dcdb to 0 from 1修改 quota 对pod限制数为8个

# kubectl apply -f quota.yaml

resourcequota/zhan-quota configured

# kubectl get quota -n zhan

NAME AGE REQUEST LIMIT

zhan-quota 4m30s configmaps: 1/20, persistentvolumeclaims: 0/20, pods: 5/8, replicationcontrollers: 0/20, requests.cpu: 2500m/10, requests.memory: 2560Mi/10Gi, secrets: 0/20, services: 0/50 limits.cpu: 5/50, limits.memory: 5000Mi/16Gi

Use kubectl edit to change the replica count to 7

# kubectl edit deploy nginxdp01 -n zhan

deployment.apps/nginxdp01 edited

# kubectl get deploy,po -n zhan

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/nginxdp01 7/7 7 7 7m21s

NAME READY STATUS RESTARTS AGE

pod/nginxdp01-6d5f976f67-27jq8 1/1 Running 0 5m49s

pod/nginxdp01-6d5f976f67-4r5jw 1/1 Running 0 5m51s

pod/nginxdp01-6d5f976f67-962l8 1/1 Running 0 19s

pod/nginxdp01-6d5f976f67-f2jmn 1/1 Running 0 5m49s

pod/nginxdp01-6d5f976f67-pqlkk 1/1 Running 0 5m51s

pod/nginxdp01-6d5f976f67-rlcs8 1/1 Running 0 92s

pod/nginxdp01-6d5f976f67-strm6 1/1 Running 0 5m51s

Verify that the deployment started normally
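The usage figures that kubectl get quota reported earlier (for example requests.cpu: 2500m/10 with 5 Pods) follow directly from the per-Pod requests and limits. The Python sketch below reproduces that arithmetic and computes how many replicas fit under a quota. The helper names are hypothetical, and the quantity parsing covers only the suffixes used in this document (m, Ki, Mi, Gi).

```python
_MEMORY_UNITS = {"Ki": 1024, "Mi": 1024 ** 2, "Gi": 1024 ** 3}


def cpu_millicores(quantity):
    """Convert a CPU quantity such as "500m", "1", or 0.5 to millicores."""
    text = str(quantity)
    if text.endswith("m"):
        return int(text[:-1])
    return int(round(float(text) * 1000))


def memory_bytes(quantity):
    """Convert a memory quantity such as "512Mi" or "10Gi" to bytes."""
    text = str(quantity)
    for suffix, factor in _MEMORY_UNITS.items():
        if text.endswith(suffix):
            return int(text[:-2]) * factor
    return int(text)


# Values taken from quota.yaml and the kubectl set resources command.
QUOTA = {"pods": 5, "requests.cpu": "10", "requests.memory": "10Gi",
         "limits.cpu": "50", "limits.memory": "16Gi"}
POD = {"requests.cpu": "500m", "requests.memory": "512Mi",
       "limits.cpu": "1000m", "limits.memory": "1000Mi"}


def max_replicas(quota, pod):
    """Largest replica count that stays within every quota dimension."""
    caps = [int(quota["pods"])]
    for key in ("requests.cpu", "limits.cpu"):
        caps.append(cpu_millicores(quota[key]) // cpu_millicores(pod[key]))
    for key in ("requests.memory", "limits.memory"):
        caps.append(memory_bytes(quota[key]) // memory_bytes(pod[key]))
    return min(caps)


if __name__ == "__main__":
    print(max_replicas(QUOTA, POD))                 # bounded by pods: 5
    print(max_replicas({**QUOTA, "pods": 8}, POD))  # after raising pods to 8
    print(5 * cpu_millicores(POD["requests.cpu"]))  # CPU requests used by 5 Pods
```

With the original quota the Pod count is the binding constraint, which is why only 5 of the 6 requested replicas were created until the Pod limit was raised.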

4.2 Pod limits and requests

A ResourceQuota caps the total for all Pods in a namespace, while each Pod can also carry its own limits and requests.

cat > quota-pod.yaml <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: quota-pod
  namespace: zhan
spec:
  containers:
  - image: nginx:1.25.2
    name: ngx
    imagePullPolicy: IfNotPresent
    ports:
    - containerPort: 80
    resources:
      limits:
        cpu: 0.5  # the container may use at most 500m of CPU; 0.5 = 500m, 1 = 1000m
        memory: 2Gi  ## the container may use at most 2Gi of memory
      requests:
        cpu: 200m  # Kubernetes guarantees at least 200m of CPU; a node that cannot provide it will not be chosen by the scheduler
        memory: 512Mi  # Kubernetes guarantees at least 512Mi of memory; a node that cannot provide it will not be chosen by the scheduler
EOF
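The comments in the manifest above say that a node is skipped when it cannot satisfy the requests. A minimal sketch of that check for CPU, in Python, is shown below. It assumes a simplified rule: the scheduler compares the sum of requests already placed on a node plus the new request against the node's allocatable CPU, and limits play no part in placement. The function names and the sample node sizes are hypothetical.

```python
def cpu_to_millicores(value):
    """Convert "200m", "1", or 0.5 to an integer number of millicores."""
    text = str(value)
    if text.endswith("m"):
        return int(text[:-1])
    return int(round(float(text) * 1000))


def fits_on_node(allocatable_cpu, existing_requests, new_request):
    """True if the new request fits next to the requests already on the node."""
    used = sum(cpu_to_millicores(r) for r in existing_requests)
    return used + cpu_to_millicores(new_request) <= cpu_to_millicores(allocatable_cpu)


if __name__ == "__main__":
    # A hypothetical 2-core node that already hosts two Pods.
    print(fits_on_node("2", ["900m", "900m"], "200m"))
    print(fits_on_node("2", ["900m", "1"], "200m"))
```

Only requests enter this check. The limits in the manifest are enforced later, at run time on the node, which is why a container can be scheduled with a 200m request and still burst up to its 500m limit.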