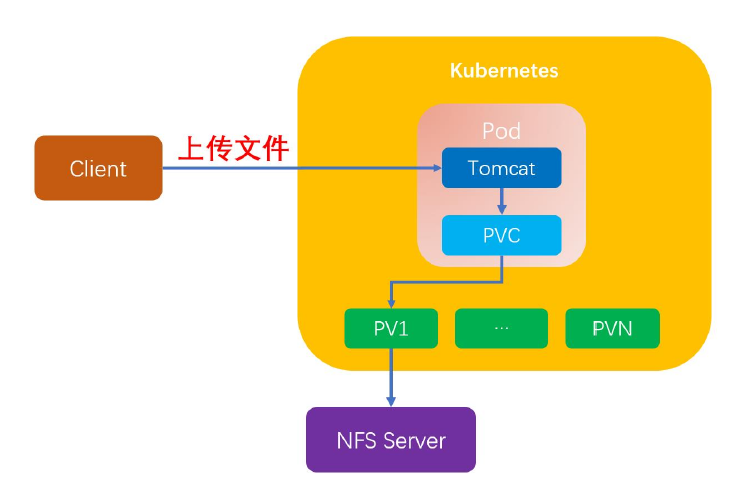

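I. NFS-based storage solution

Using and configuring NFS in K8S

1. Introduction

NFS (Network File System) is a storage solution for sharing files over a network. It lets a system share directories and files with other machines on the network, so users and programs can access files on a remote system as if they were local.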

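- nfs: the backing storage where the data ultimately lives

- nfs-client: dynamically creates PVs to satisfy PVCs; this is what we call the provisioner

- StorageClass: usable once it is associated with the corresponding provisioner

- statefulset/deployment/daemonset: set storageClassName to use the storage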

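Advantages:

- Quick to deploy and simple to maintain

- Data is kept centrally on one machine that every computer on the internal network can reach, so no machine needs its own separate storage

- At the software level, data reliability is high and the service is stable

Disadvantages:

- Single point of failure: if the NFS server goes down, no client can access the shared directory

- Under large data volumes and high concurrency, NFS network and disk I/O is inefficient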

2. Configure the RBAC authorization YAML for nfs-client

    [root@master-1-230 deploy]# cat rbac.yaml
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: nfs-client-provisioner
      # replace with namespace where provisioner is deployed
      namespace: kube-system
    ---
    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: nfs-client-provisioner-runner
    rules:
      - apiGroups: [""]
        resources: ["nodes"]
        verbs: ["get", "list", "watch"]
      - apiGroups: [""]
        resources: ["persistentvolumes"]
        verbs: ["get", "list", "watch", "create", "delete"]
      - apiGroups: [""]
        resources: ["persistentvolumeclaims"]
        verbs: ["get", "list", "watch", "update"]
      - apiGroups: ["storage.k8s.io"]
        resources: ["storageclasses"]
        verbs: ["get", "list", "watch"]
      - apiGroups: [""]
        resources: ["events"]
        verbs: ["create", "update", "patch"]
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: run-nfs-client-provisioner
    subjects:
      - kind: ServiceAccount
        name: nfs-client-provisioner
        # replace with namespace where provisioner is deployed
        namespace: kube-system
    roleRef:
      kind: ClusterRole
      name: nfs-client-provisioner-runner
      apiGroup: rbac.authorization.k8s.io
    ---
    kind: Role
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: leader-locking-nfs-client-provisioner
      # replace with namespace where provisioner is deployed
      namespace: kube-system
    rules:
      - apiGroups: [""]
        resources: ["endpoints"]
        verbs: ["get", "list", "watch", "create", "update", "patch"]
    ---
    kind: RoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: leader-locking-nfs-client-provisioner
      # replace with namespace where provisioner is deployed
      namespace: kube-system
    subjects:
      - kind: ServiceAccount
        name: nfs-client-provisioner
        # replace with namespace where provisioner is deployed
        namespace: kube-system
    roleRef:
      kind: Role
      name: leader-locking-nfs-client-provisioner
      apiGroup: rbac.authorization.k8s.io

3. Configure the nfs-client-provisioner resource YAML

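nfs-client-provisioner acts as an NFS client program; its manifest declares the NFS server's IP address and export path.

When you create a StorageClass resource, you specify which nfs-client-provisioner supplies the storage.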

    [root@master-1-230 deploy]# cat deployment.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nfs-client-provisioner
      labels:
        app: nfs-client-provisioner
      # replace with namespace where provisioner is deployed
      namespace: kube-system
    spec:
      replicas: 1
      strategy:
        type: Recreate
      selector:
        matchLabels:
          app: nfs-client-provisioner
      template:
        metadata:
          labels:
            app: nfs-client-provisioner
        spec:
          serviceAccountName: nfs-client-provisioner
          containers:
            - name: nfs-client-provisioner
              image: registry.k8s.io/sig-storage/nfs-subdir-external-provisioner:v4.0.2
              volumeMounts:
                - name: nfs-client-root
                  mountPath: /persistentvolumes
              env:
                # the StorageClass "provisioner" field must match this value
                - name: PROVISIONER_NAME
                  value: k8s-sigs.io/nfs-subdir-external-provisioner
                # NFS server address and exported directory
                - name: NFS_SERVER
                  value: 192.168.1.230
                - name: NFS_PATH
                  value: /data/nfs
          volumes:
            - name: nfs-client-root
              nfs:
                server: 192.168.1.230
                path: /data/nfs

4. Write the StorageClass resource YAML

Create a StorageClass resource; the StorageClass must name the nfs-client-provisioner as its provisioner.

    [root@master-1-230 deploy]# cat class.yaml
    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: nfs-client
    provisioner: k8s-sigs.io/nfs-subdir-external-provisioner # or choose another name, must match deployment's env PROVISIONER_NAME
    parameters:
      archiveOnDelete: "false" # whether to archive the PV's contents when the PV is deleted

ReclaimPolicy options:

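- Delete: when the PVC is deleted, the PV is deleted as well

- Retain: when the PVC is deleted, the PV is not deleted and has to be removed manually

- Recycle: when the PVC is deleted, the data on the PV is also wiped so that the PV can be bound to a new PVC (deprecated)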
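The `kubectl get sc` output later in this article also lists a second class, nfs-storageclass, with the Retain policy, but its nfs-storageclass.yaml is never shown. As a rough sketch, reconstructed from the `last-applied-configuration` annotation in the `kubectl describe sc` output (the comments and file layout are assumptions), it would look something like this:

```yaml
# nfs-storageclass.yaml (reconstructed sketch; the original file is not shown)
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-storageclass
# must match the PROVISIONER_NAME env var of the provisioner Deployment
provisioner: k8s-sigs.io/nfs-subdir-external-provisioner
# Retain: the PV is kept after its PVC is deleted and must be removed manually
reclaimPolicy: Retain
```

When `reclaimPolicy` is omitted, as in class.yaml, dynamically provisioned PVs default to Delete, which matches the RECLAIMPOLICY column shown for nfs-client.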

5. Provide the storage (deploy the NFS service)

Set up NFS on the master node.

The NFS server's IP address is 192.168.1.230 and the shared directory is /data/nfs.

Install NFS:

    yum install -y nfs-utils rpcbind

Create the shared directory and start the NFS service:

    # mkdir -p /data/nfs
    # echo "/data/nfs/ *(insecure,rw,sync,no_root_squash)" >> /etc/exports
    # cat /etc/exports
    /data/nfs/ *(insecure,rw,sync,no_root_squash)
    # systemctl enable rpcbind
    # systemctl enable nfs-server
    # systemctl start rpcbind
    # systemctl start nfs-server

6. Install nfs-utils on the clients

Install on every client:

    yum install -y nfs-utils rpcbind

7. Create all the resources

    # kubectl apply -f nfs-storageclass.yaml
    storageclass.storage.k8s.io/nfs-storageclass created
    # kubectl apply -f rbac.yaml
    serviceaccount/nfs-client-provisioner created
    clusterrole.rbac.authorization.k8s.io/nfs-client-provisioner-runner created
    clusterrolebinding.rbac.authorization.k8s.io/run-nfs-client-provisioner created
    role.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created
    rolebinding.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created
    # kubectl apply -f deployment.yaml
    deployment.apps/nfs-client-provisioner created
    # kubectl apply -f class.yaml
    storageclass.storage.k8s.io/nfs-client created

Check the status of the nfs-client-provisioner pod:

    # kubectl get pod -A | grep nfs
    kube-system   nfs-client-provisioner-79994856c4-jtvxq   1/1   Running   1 (96m ago)   11h

View the nfs-client-provisioner pod details:

    # kubectl describe pod nfs-client-provisioner-79994856c4-jtvxq -n kube-system
    Name:             nfs-client-provisioner-79994856c4-jtvxq
    Namespace:        kube-system
    Priority:         0
    Service Account:  nfs-client-provisioner
    Node:             node-1-233/192.168.1.233
    Start Time:       Sat, 25 Nov 2023 22:35:44 +0800
    Labels:           app=nfs-client-provisioner
                      pod-template-hash=79994856c4
    Annotations:      cni.projectcalico.org/containerID: c9a20d6f1a51d7437d5cb19b8462e95af55dfe2b9b5681c91b3d41cf8034e452
                      cni.projectcalico.org/podIP: 10.244.154.8/32
                      cni.projectcalico.org/podIPs: 10.244.154.8/32
    Status:           Running
    IP:               10.244.154.8
    IPs:
      IP:           10.244.154.8
    Controlled By:  ReplicaSet/nfs-client-provisioner-79994856c4
    Containers:
      nfs-client-provisioner:
        Container ID:   containerd://2d17948a58548f90c3822653c4195a9756b3333a9a81d9fe869b9a4378982737
        Image:          registry.k8s.io/sig-storage/nfs-subdir-external-provisioner:v4.0.2
        Image ID:       registry.k8s.io/sig-storage/nfs-subdir-external-provisioner@sha256:63d5e04551ec8b5aae83b6f35938ca5ddc50a88d85492d9731810c31591fa4c9
        Port:           <none>
        Host Port:      <none>
        State:          Running
          Started:      Sun, 26 Nov 2023 08:43:59 +0800
        Last State:     Terminated
          Reason:       Unknown
          Exit Code:    255
          Started:      Sat, 25 Nov 2023 22:35:49 +0800
          Finished:     Sun, 26 Nov 2023 08:43:30 +0800
        Ready:          True
        Restart Count:  1
        Environment:
          PROVISIONER_NAME:  k8s-sigs.io/nfs-subdir-external-provisioner
          NFS_SERVER:        192.168.1.230
          NFS_PATH:          /data/nfs
        Mounts:
          /persistentvolumes from nfs-client-root (rw)
          /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-69dwg (ro)
    Conditions:
      Type              Status
      Initialized       True
      Ready             True
      ContainersReady   True
      PodScheduled      True
    Volumes:
      nfs-client-root:
        Type:      NFS (an NFS mount that lasts the lifetime of a pod)
        Server:    192.168.1.230
        Path:      /data/nfs
        ReadOnly:  false
      kube-api-access-69dwg:
        Type:                    Projected (a volume that contains injected data from multiple sources)
        TokenExpirationSeconds:  3607
        ConfigMapName:           kube-root-ca.crt
        ConfigMapOptional:       <nil>
        DownwardAPI:             true
    QoS Class:                   BestEffort
    Node-Selectors:              <none>
    Tolerations:                 node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
                                 node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
    Events:                      <none>

Check the status of the StorageClass resources:

    # kubectl get sc
    NAME               PROVISIONER                                   RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
    nfs-client         k8s-sigs.io/nfs-subdir-external-provisioner   Delete          Immediate           false                  11h
    nfs-storageclass   k8s-sigs.io/nfs-subdir-external-provisioner   Retain          Immediate           false                  11h

View the StorageClass details:

    # kubectl describe sc
    Name:                  nfs-client
    IsDefaultClass:        No
    Annotations:           kubectl.kubernetes.io/last-applied-configuration={"apiVersion":"storage.k8s.io/v1","kind":"StorageClass","metadata":{"annotations":{},"name":"nfs-client"},"parameters":{"archiveOnDelete":"false"},"provisioner":"k8s-sigs.io/nfs-subdir-external-provisioner"}
    Provisioner:           k8s-sigs.io/nfs-subdir-external-provisioner
    Parameters:            archiveOnDelete=false
    AllowVolumeExpansion:  <unset>
    MountOptions:          <none>
    ReclaimPolicy:         Delete
    VolumeBindingMode:     Immediate
    Events:                <none>

    Name:                  nfs-storageclass
    IsDefaultClass:        No
    Annotations:           kubectl.kubernetes.io/last-applied-configuration={"apiVersion":"storage.k8s.io/v1","kind":"StorageClass","metadata":{"annotations":{},"name":"nfs-storageclass"},"provisioner":"k8s-sigs.io/nfs-subdir-external-provisioner","reclaimPolicy":"Retain"}
    Provisioner:           k8s-sigs.io/nfs-subdir-external-provisioner
    Parameters:            <none>
    AllowVolumeExpansion:  <unset>
    MountOptions:          <none>
    ReclaimPolicy:         Retain
    VolumeBindingMode:     Immediate
    Events:                <none>

8. NFS implementation summary

NFS use cases in K8S

1. Referencing a StorageClass from a PVC

Create a PVC that references the StorageClass so that a PV is created automatically.

    vim test-pvc.yaml
    # cat test-pvc.yaml
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: test-pvc01
    spec:
      storageClassName: nfs-storageclass
      accessModes:
        - ReadWriteMany
      resources:
        requests:
          storage: 500Mi

Apply the YAML file:

    # kubectl apply -f test-pvc.yaml
    persistentvolumeclaim/test-pvc01 created
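Once the claim is bound, a workload can mount it by name. As a minimal sketch, a Pod that mounts test-pvc01 might look like the following; the Pod name, container name, and volume name are hypothetical, and the image and mount path are simply borrowed from the StatefulSet example in this article:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: test-pvc01-demo          # hypothetical name, not from the original article
spec:
  containers:
    - name: web                  # hypothetical container name
      image: nginx:1.7.9         # same image the StatefulSet example uses
      volumeMounts:
        - name: data
          mountPath: /usr/share/nginx/html
  volumes:
    - name: data                 # hypothetical volume name
      persistentVolumeClaim:
        claimName: test-pvc01    # the PVC defined in test-pvc.yaml
```

Because the claim requests ReadWriteMany, several such Pods on different nodes can mount the same volume at once.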

    [root@master-1-230 2.2]# kubectl get pvc
    NAME                                          STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS       AGE
    nginx-storage-test-pvc-nginx-storage-stat-0   Bound    pvc-f1106903-53db-490b-ba5f-f5981b431063   1Gi        RWX            nfs-storageclass   11h
    nginx-storage-test-pvc-nginx-storage-stat-1   Bound    pvc-20a64f91-4637-4bf8-b33f-27597536f44f   1Gi        RWX            nfs-storageclass   11h
    nginx-storage-test-pvc-nginx-storage-stat-2   Bound    pvc-d76f65f2-0637-404f-8d08-5f174a07630a   1Gi        RWX            nfs-storageclass   11h
    test-pvc                                      Bound    pvc-5cb97247-3811-477c-9b29-5100456d244f   500Mi      RWX            nfs-storageclass   12h
    test-pvc01                                    Bound    pvc-68d96576-f53a-43b0-9ff7-ac95ca8c401b   500Mi      RWX            nfs-storageclass   52s

2. Using a StorageClass in a StatefulSet controller

    [root@master-1-230 2.2]# cat nginx-deploy-pvc.yaml
    apiVersion: v1
    kind: Service
    metadata:
      name: nginx
      labels:
        app: nginx
    spec:
      ports:
        - port: 80
          name: web
      clusterIP: None
      selector:
        app: nfs-web # must match the StatefulSet's pod labels
    ---
    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: nfs-web
    spec:
      serviceName: "nginx"
      replicas: 1
      selector:
        matchLabels:
          app: nfs-web # has to match .spec.template.metadata.labels
      template:
        metadata:
          labels:
            app: nfs-web
        spec:
          terminationGracePeriodSeconds: 10
          containers:
            - name: nginx
              image: nginx:1.7.9
              ports:
                - containerPort: 80
                  name: web
              volumeMounts:
                - name: www
                  mountPath: /usr/share/nginx/html
      volumeClaimTemplates:
        - metadata:
            name: www
            annotations:
              volume.beta.kubernetes.io/storage-class: nfs-storageclass
          spec:
            accessModes: [ "ReadWriteOnce" ]
            resources:
              requests:
                storage: 1Gi

    [root@master-1-230 2.2]# kubectl apply -f nginx-deploy-pvc.yaml
    service/nginx created
    statefulset.apps/nfs-web created
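The StatefulSet manifest selects its class through the `volume.beta.kubernetes.io/storage-class` annotation, which is the legacy way of doing so. As a sketch of an alternative, the same claim template can name the class with the `storageClassName` field, as the test-pvc.yaml example in this article already does. Only the `volumeClaimTemplates` fragment of the StatefulSet `spec` is shown; the rest of the manifest is assumed unchanged:

```yaml
# Fragment of the StatefulSet spec; replaces the annotation-based template
volumeClaimTemplates:
  - metadata:
      name: www
    spec:
      # names the StorageClass directly instead of using the beta annotation
      storageClassName: nfs-storageclass
      accessModes: ["ReadWriteOnce"]
      resources:
        requests:
          storage: 1Gi
```

Each replica still gets its own PVC, named from the template name, the StatefulSet name, and the ordinal (for example www-nfs-web-0).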

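    [root@master-1-230 2.2]# kubectl get pod -o wide
    NAME        READY   STATUS    RESTARTS   AGE   IP             NODE         NOMINATED NODE   READINESS GATES
    nfs-web-0   1/1     Running   0          78s   10.244.29.35   node-1-232   <none>           <none>

3. Recommendations:

For large websites and enterprises with high reliability requirements, distributed storage systems such as Ceph or FastDFS can replace the NFS network file system.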