【proxysql+replication manager切换MySQL】

【剧本说明】

以下文件在roles目录下

tree proxysql/

proxysql/

├── defaults

│ └── main.yml

├── files

│ └── rpms

│ ├── proxysql-2.4.1-1-centos7.x86_64.rpm

│ └── replication-manager-osc-cgo-2.2.40-1.x86_64.rpm

├── tasks

│ ├── init_cluster.yml

│ ├── install_task.yml

│ ├── main.yml

│ ├── proxysql_config.yml

│ ├── proxysql_mysql_config.yml

│ └── replication_config.yml

├── templates

│ ├── config.toml.j2

│ ├── proxysql.cnf.j2

│ └── proxysql_mysql_config.j2

└── vars

└── main.yml

【对应目录创建脚本】

defaults目录

vim main.yml

---

proxysql_login_admin_host: 127.0.0.1

proxysql_login_admin_password: admin

proxysql_login_admin_port: 6032

proxysql_login_admin_user: admin

proxysql_global_variables:

login_host: "{{ proxysql_login_admin_host }}"

login_password: "{{ proxysql_login_admin_password }}"

login_port: "{{ proxysql_login_admin_port }}"

login_user: "{{ proxysql_login_admin_user }}"

files目录

创建rpm子目录,上传安装包proxysql-2.4.1-1-centos7.x86_64.rpm replication-manager-osc-cgo-2.2.40-1.x86_64.rpm 可以到对应官网下载新版本

templates目录

vim proxysql.cnf.j2

datadir="/var/lib/proxysql"

errorlog="/var/lib/proxysql/proxysql.log"

admin_variables =

{

admin_credentials="admin:admin;cluster1:secret1pass"

mysql_ifaces="0.0.0.0:6032"

cluster_username="cluster1"

cluster_password="secret1pass"

cluster_check_interval_ms=200

cluster_check_status_frequency=100

cluster_mysql_query_rules_save_to_disk=true

cluster_mysql_servers_save_to_disk=true

cluster_mysql_users_save_to_disk=true

cluster_proxysql_servers_save_to_disk=true

cluster_mysql_query_rules_diffs_before_sync=3

cluster_mysql_servers_diffs_before_sync=3

cluster_mysql_users_diffs_before_sync=3

cluster_proxysql_servers_diffs_before_sync=3

}

proxysql_servers =

(

{% for host in proxysql_cluster_servers_group %}

{

hostname="{{ host }}"

port=6032

comment="proxysql_{{ host }}"

}{% if not loop.last %},{% endif %}

{% endfor %}

)

mysql_variables=

{

threads=4

max_connections=30000

default_query_delay=0

default_query_timeout=36000000

have_compress=true

poll_timeout=2000

interfaces="0.0.0.0:6033"

default_schema="information_schema"

stacksize=1048576

server_version="5.5.30"

connect_timeout_server=3000

monitor_username="monitor"

monitor_password="monitor"

monitor_history=600000

monitor_connect_interval=60000

monitor_ping_interval=10000

monitor_read_only_interval=1500

monitor_read_only_timeout=500

ping_interval_server_msec=120000

ping_timeout_server=500

commands_stats=true

sessions_sort=true

connect_retries_on_failure=10

}

mysql_servers =

(

)

mysql_users:

(

)

mysql_query_rules:

(

)

scheduler=

(

)

mysql_replication_hostgroups=

(

)

vim config.toml.j2

[db3306]

title = "db3306"

db-servers-hosts = "{% for host in proxysql_cluster_servers_group %}{{ host }}:3306{% if not loop.last %},{% endif %}{% endfor %}"

db-servers-prefered-master = "{{ proxysql_cluster_servers_group[0] }}"

db-servers-credential = "{{db_servers_credential}}"

replication-credential = "{{replication_credential}}"

failover-mode = "manual"

proxysql=true

proxysql-servers= "{% for host in proxysql_cluster_servers_group %}{{ host }}{% if not loop.last %},{% endif %}{% endfor %}"

proxysql-port=6033

proxysql-admin-port=6032

proxysql-writer-hostgroup="10"

proxysql-reader-hostgroup="20"

proxysql-user="cluster1"

proxysql-password="secret1pass"

proxysql-bootstrap=false

proxysql-bootstrap-hostgroups=false

proxysql-bootstrap-users=false

[Default]

include = "/etc/replication-manager/cluster.d"

monitoring-save-config = false

monitoring-datadir = "/var/lib/replication-manager"

#monitoring-sharedir = "/usr/share/replication-manager"

monitoring-ignore-errors = "WARN0091,WARN0084,WARN0101"

autorejoin = false

## Timeout in seconds between consecutive monitoring

monitoring-ticker = 2

#########

## LOG ##

#########

log-file = "/var/log/replication-manager.log"

log-heartbeat = false

log-syslog = false

log-rotate-max-age = 1

log-rotate-max-backup = 7

log-rotate-max-size = 10

#log-sql-in-monitoring = true

#################

## ARBITRATION ##

#################

arbitration-external = false

arbitration-external-secret = "13787932529099014144"

arbitration-external-hosts = "88.191.151.84:80"

arbitration-peer-hosts ="127.0.0.1:10002"

## Unique value on each replication-manager

arbitration-external-unique-id = 0

##########

## HTTP ##

##########

http-server = true

http-bind-address = "0.0.0.0"

http-port = "10001"

http-auth = false

http-session-lifetime = 3600

http-bootstrap-button = false

http-refresh-interval = 4000

#########

## API ##

#########

api-credentials = "admin:repman"

api-port = "10005"

api-https-bind = false

api-credentials-acl-allow = "admin:cluster proxy db prov,dba:cluster proxy db,foo:"

api-credentials-acl-discard = false

api-credentials-external = "dba:repman,foo:bar"

############

## ALERTS ##

############

mail-from = "replication-manager@localhost"

mail-smtp-addr = "localhost:25"

mail-to = "replication-manager@signal18.io"

mail-smtp-password=""

mail-smtp-user=""

alert-slack-channel = "#support"

alert-slack-url = ""

alert-slack-user = "svar"

##########

# STATS ##

##########

graphite-metrics = false

graphite-carbon-host = "127.0.0.1"

graphite-carbon-port = 2003

graphite-embedded = false

graphite-carbon-api-port = 10002

graphite-carbon-server-port = 10003

graphite-carbon-link-port = 7002

graphite-carbon-pickle-port = 2004

graphite-carbon-pprof-port = 7007

#backup-logical-type = "mydumper"

backup-mydumper-path = "/bin/mydumper"

backup-myloader-path = "/bin/myloader"

backup-mysqlbinlog-path = "/bin/mysqlbinlog"

backup-mysqldump-path = "/bin/mysqldump"

backup-mysqldump-options = "--hex-blob --single-transaction --verbose --all-databases"

##############

# BENCHMARK ##

##############

sysbench-binary-path = "/usr/bin/sysbench"

sysbench-threads = 4

sysbench-time = 100

sysbench-v1 = true

vim proxysql_mysql_config.j2

-- config read write groups

delete from mysql_replication_hostgroups;

insert into mysql_replication_hostgroups ( writer_hostgroup, reader_hostgroup, comment) values (10,20,'proxy');

load mysql servers to runtime;

save mysql servers to disk;

-- config monitor user

set mysql-monitor_username='monitor';

set mysql-monitor_password='monitor';

load mysql variables to runtime;

save mysql variables to disk;

-- config read write split

delete from mysql_query_rules;

insert into mysql_query_rules(rule_id,active,match_pattern,destination_hostgroup,apply) values (1,1,'^select.*for update$',10,1);

insert into mysql_query_rules(rule_id,active,match_pattern,destination_hostgroup,apply) values (2,1,'^select',20,1);

load mysql query rules to runtime;

save mysql query rules to disk;

-- config test user

delete from mysql_users;

insert into mysql_users (username,password,default_hostgroup) values ('monitor','monitor',10);

load mysql users to runtime;

save mysql users to disk;

-- config mysql instance

delete from mysql_servers;

insert into mysql_servers(hostgroup_id,hostname,port) values (10,"{{ proxysql_cluster_servers_group[0] }}",3306);

{% for host in proxysql_cluster_servers_group %}

insert into mysql_servers(hostgroup_id,hostname,port) values (20,"{{ host }}",3306){% if not loop.last %};{% endif %}

{% endfor %};

load mysql servers to runtime;

save mysql servers to disk;

-- config group select

set mysql-set_query_lock_on_hostgroup=0;

load mysql variables to runtime;

save mysql variables to disk;

vars目录

vim main.yml

---

db_servers_credential: "dba:XXXXXX"

replication_credential: "repl:XXXXXX"

tasks目录

vim replication_config.yml

---

- name: 配置 replication conf

template:

src: config.toml.j2

dest: /etc/replication-manager/config.toml

owner: root

group: root

mode: 0644

- name: rm replication-manager cluster1.toml

shell: rm -rf /etc/replication-manager/cluster.d/cluster1.toml

vim init_cluster.yml

---

- name: 注册proxysql cluster

set_fact:

proxysql_cluster_servers_group: []

- name: 循环处理proxysql host

set_fact:

proxysql_cluster_servers_group: "{{proxysql_cluster_servers_group}} + [ '{{ item }}' ]"

with_items: "{{ groups['proxysql'] }}"

vim proxysql_config.yml

---

- name: 配置proxysql conf

template:

src: proxysql.cnf.j2

dest: /etc/proxysql.cnf

owner: root

group: root

mode: 0644

vim proxysql_mysql_config.yml --- - name: 配置proxysql_mysql_conf template: src: proxysql_mysql_config.j2 dest: /tmp/proxysql_mysql_config.j2 owner: root group: root mode: 0644 - name: execute proxysql_mysql_config.sql shell: sleep 10; /usr/local/mysql/bin/mysql -uadmin -padmin -h127.0.0.1 -P6032 < /tmp/proxysql_mysql_config.j2

vim main.yml

---

- name: 复制proxysql replication_manager rpm

copy: src=../files/rpms/{{ item }} dest=/tmp/{{ item }} mode=0644

with_items:

- proxysql-2.4.1-1-centos7.x86_64.rpm

- replication-manager-osc-cgo-2.2.40-1.x86_64.rpm

- name: 安装proxysql replication_manager rpm

yum:

name: /tmp/{{ item }}

state: present

with_items:

- proxysql-2.4.1-1-centos7.x86_64.rpm

- replication-manager-osc-cgo-2.2.40-1.x86_64.rpm

- name: 设置proxysql cluster

set_fact:

proxysqlcluster: true

- name: 设置proxysql cluster

include: init_cluster.yml

- name: 配置proxysql conf

include: proxysql_config.yml

- name: 创建/var/lib/proxysql

shell: mkdir -p /var/lib/proxysql

- name: 修改/var/lib/proxysql

shell: chown -R proxysql:proxysql /var/lib/proxysql

- name: 创建/etc/replication-manager

shell: mkdir -p /etc/replication-manager

- name: 启动 proxysql

shell: systemctl daemon-reload && systemctl enable proxysql.service && systemctl start proxysql.service

- name: 拷贝proxysql mysql

include: proxysql_mysql_config.yml

tags: [proxysql_mysql_config]

when: cluster_role == "master"

- name: 配置replicaton_manager conf

include: replication_config.yml

- name: 启动 replication manager

shell: systemctl daemon-reload && systemctl enable replication-manager.service && systemctl start replication-manager.service

创建ansible的hosts文件,前面换成你的IP,root用户root密码

[proxysql]

IPXXX ansible_user=root ansible_ssh_pass=kd11111 cluster_role=master

IPXXX ansible_user=root ansible_ssh_pass=kd11111 cluster_role=slave

创建playbook文件

vim deploy-proxysql.yml

- hosts:

- proxysql

roles:

- proxysql

然后执行自动化安装,等待安装完成即可

ansible-playbook deploy-proxysql.yml

【检查】

查看proxysql服务:systemctl status proxysql

查看replication manager服务:systemctl status replication-manager.service

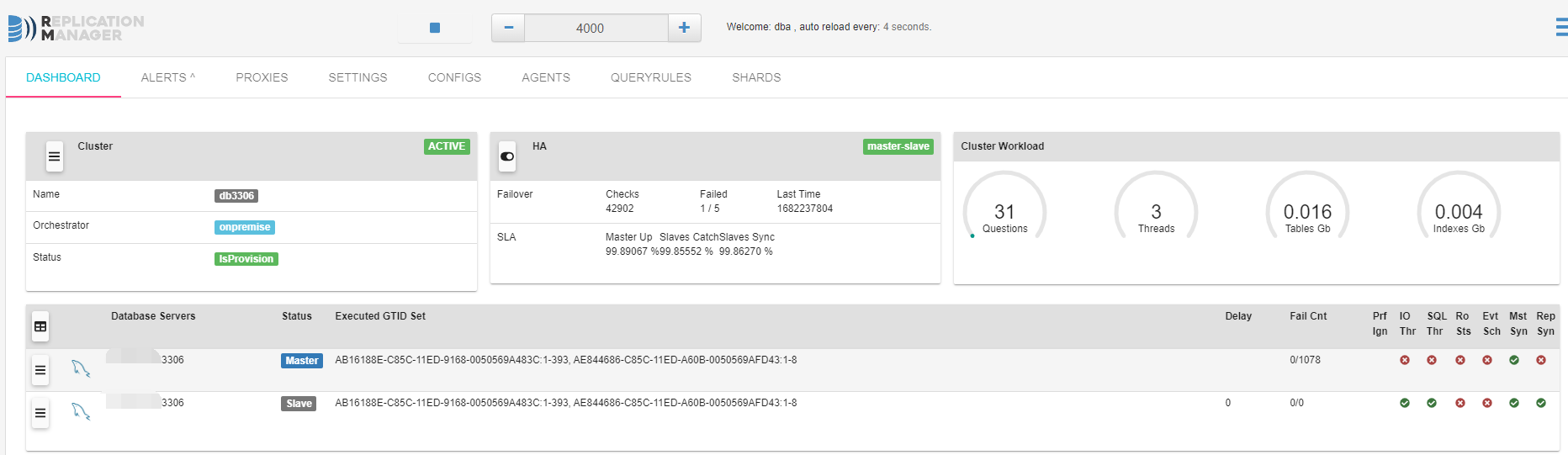

登录页面查看信息

http://IPXXX:10001/#!/dashboard 各项指标正常即可 各项指标切换参考对应的中间件使用方法

【注意事项】

里面var中的变量一个是管理员账号及密码一个是数据库主从的账号及密码,这个得提前创建好。

当前一主一从架构,主库也设置了只读事务的分配,可以根据实际架构情况进行调整。

- ansible-playbook replication playbook proxysql ansibleansible-playbook replication playbook proxysql ansible-playbook ansible-playbook playbook ansible ansible-playbook playbook ansible mongodb index node1 ansible-playbook html ansible ansible-playbook playbook实际 ansible-playbook剧本playbook ansible ansible-playbook集群zookeeper playbook ansible-playbook变量playbook ansible 二进制ansible-playbook playbook ansible