# L2 regularization

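* L2 regularization adds a regularization term to the cost function; for logistic regression this term is $\frac{\lambda}{2m}\lVert w\rVert_2^2$, where $\lVert w\rVert_2^2 = \sum_j w_j^2$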

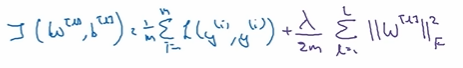

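* for a neural network with $L$ layers, the regularized cost function becomes

  $$J\big(W^{[1]}, b^{[1]}, \dots, W^{[L]}, b^{[L]}\big) = \frac{1}{m}\sum_{i=1}^{m}\mathcal{L}\big(\hat{y}^{(i)}, y^{(i)}\big) + \frac{\lambda}{2m}\sum_{l=1}^{L}\lVert W^{[l]}\rVert_F^2$$

  where $\lVert W^{[l]}\rVert_F^2 = \sum_i\sum_j \big(W^{[l]}_{ij}\big)^2$ is the squared Frobenius norm (the sum of squares of all entries of $W^{[l]}$)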
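The penalty term can be sketched in plain Python. This is a minimal illustration, assuming the weight matrices are stored as lists of rows and the unregularized data cost has already been computed elsewhere; the function names `frobenius_sq` and `l2_regularized_cost` are hypothetical. Following the usual convention, the biases b are left out of the penalty.

```python
def frobenius_sq(W):
    """Squared Frobenius norm: the sum of squares of all entries of W (a list of rows)."""
    return sum(w * w for row in W for w in row)


def l2_regularized_cost(data_cost, weights, lambd, m):
    """Return data_cost + (lambd / (2 * m)) * sum over layers of ||W[l]||_F^2.

    data_cost: average loss (1/m) * sum_i L(y_hat_i, y_i), computed elsewhere
    weights:   list of weight matrices W[1], ..., W[L] (biases are not penalized)
    lambd:     regularization parameter (spelled lambd because lambda is a Python keyword)
    m:         number of training examples
    """
    penalty = sum(frobenius_sq(W) for W in weights)
    return data_cost + lambd / (2 * m) * penalty


if __name__ == "__main__":
    W1 = [[1.0, -2.0], [0.5, 0.0]]  # ||W1||_F^2 = 1 + 4 + 0.25 = 5.25
    W2 = [[3.0, 1.0]]               # ||W2||_F^2 = 9 + 1 = 10
    print(l2_regularized_cost(0.3, [W1, W2], lambd=0.1, m=5))
```

Here the penalty is $\frac{0.1}{2 \cdot 5} \cdot 15.25 = 0.1525$, so the regularized cost is about 0.4525.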

# why does shrinking the L2 norm of the parameters help reduce overfitting?

--------------------------------

### 1. one piece of intuition

* if you crank the regularization parameter lambda up to be really large, the optimization is strongly incentivized to set the weight matrices W reasonably close to zero

* so the weights of many hidden units end up close to zero, which basically zeroes out much of the impact of those hidden units; the network then behaves like a much smaller, simpler network, which is less prone to overfitting
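A toy one-weight model shows the shrinking effect numerically. This is a hypothetical example, not from the notes: it assumes a single weight w, no bias, a squared-error loss $\frac{1}{2m}\sum_i (w x_i - y_i)^2$ plus the penalty $\frac{\lambda}{2m}w^2$. Setting the derivative to zero gives the closed form $w^* = \frac{\sum_i x_i y_i}{\sum_i x_i^2 + \lambda}$, so a larger λ pushes $w^*$ toward zero.

```python
def ridge_weight_1d(xs, ys, lambd):
    """Minimizer of J(w) = (1/(2m)) * sum_i (w*x_i - y_i)^2 + (lambd/(2m)) * w^2.

    dJ/dw = 0  =>  w * (sum_i x_i^2 + lambd) = sum_i x_i * y_i
    """
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lambd)


if __name__ == "__main__":
    xs = [1.0, 2.0, 3.0]
    ys = [2.0, 4.0, 6.0]  # generated by y = 2x, so w = 2 fits perfectly when lambd = 0
    for lambd in [0.0, 1.0, 10.0, 100.0, 1000.0]:
        print(f"lambda = {lambd:7.1f}   w* = {ridge_weight_1d(xs, ys, lambd):.4f}")
```

With this data, $w^* = 28 / (14 + \lambda)$: it is 2 at λ = 0 and falls to about 0.028 at λ = 1000.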

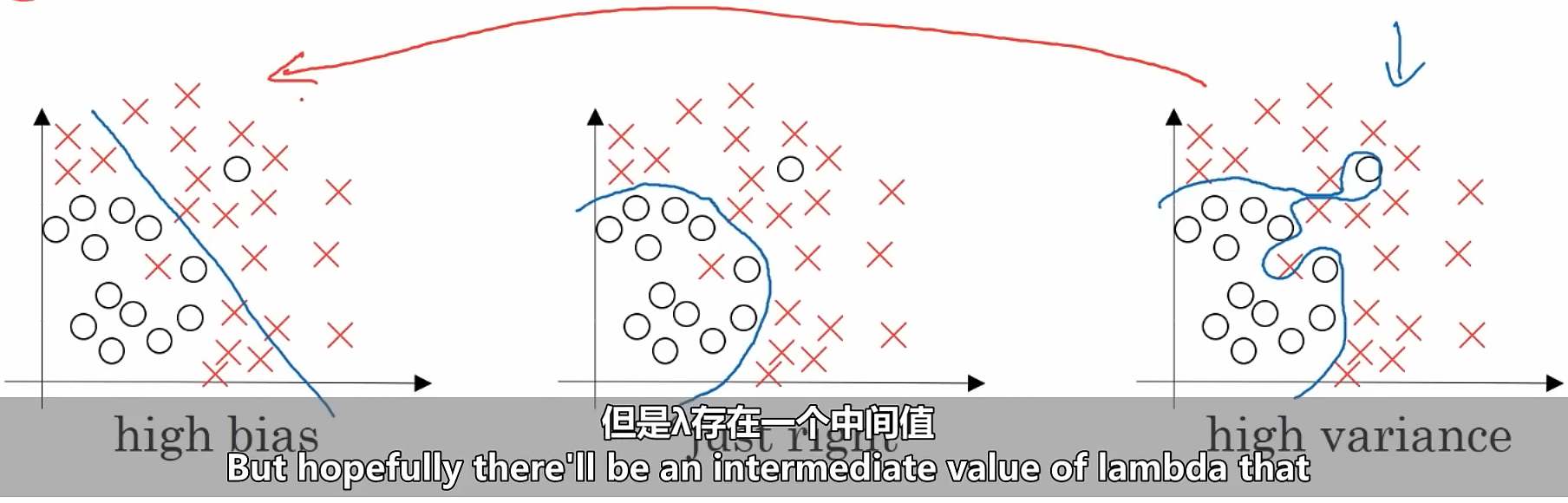

_the impact of the value of lambda_

as shown in the image above, as lambda increases, the model moves from the right-hand case (high variance, overfitting) toward the left-hand case (high bias, underfitting)

so there is an intermediate value of lambda that gives a result closer to the "just right" case in the middle

----------------------------------------------

### 2. the second piece of intuition for why regularization helps prevent overfitting

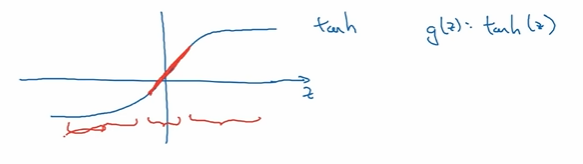

* assume that we're using the tanh activation function

#### tanh activation function

* when |z| is small (z close to zero), tanh is operating in its roughly linear regime, where tanh(z) ≈ z

* only when z is allowed to wander to larger positive or more negative values does tanh become noticeably nonlinear, saturating toward +1 or -1
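The linear regime can be checked directly by comparing tanh(z) with z. This is a minimal sketch using only the standard library; `linear_gap` is a hypothetical helper name, and it measures the relative error of the approximation tanh(z) ≈ z.

```python
import math


def linear_gap(z):
    """Relative error of the linear approximation tanh(z) ~ z, i.e. |z - tanh(z)| / |z| (z != 0)."""
    return abs(z - math.tanh(z)) / abs(z)


if __name__ == "__main__":
    for z in [0.01, 0.1, 0.5, 1.0, 2.0, 5.0]:
        print(f"z = {z:5.2f}   tanh(z) = {math.tanh(z):.4f}   relative gap = {linear_gap(z):.4f}")
```

The gap is a fraction of a percent for |z| ≤ 0.1 and grows to about 80% at z = 5, where tanh has saturated near 1.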

-------------------------

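so, if we set lambda very large, the following chain of effects occurs:

big lambda -> small weights W -> small z (the input to the activation; z = Wa + b stays small when W is small, ignoring b) -> tanh operates in its linear regime -> every layer is roughly linear -> the whole network computes a roughly linear function, which cannot fit very complicated nonlinear decision boundaries and so is much less likely to overfit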
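The chain of effects above can be checked numerically on a tiny network. This is a sketch under stated assumptions: no training happens here; instead every weight is multiplied by a hand-chosen `scale` to stand in for the small weights a large lambda produces. The weights, input, and function names are hypothetical, biases are dropped (the "ignoring b" simplification), and the output unit is linear. With the identity activation the whole network collapses to a linear function of x, so a gap near zero means the tanh network is behaving almost linearly.

```python
import math

# Hypothetical fixed weights for a 2-input, 3-hidden-unit, 1-output network.
BASE_W1 = [[1.0, -2.0], [0.5, 1.5], [-1.0, 0.3]]
BASE_W2 = [1.0, -0.7, 2.0]


def forward(x, W1, w2, activation):
    """Hidden layer a = activation(W1 x) (biases ignored), then a linear output unit w2 . a."""
    hidden = [activation(sum(w * xi for w, xi in zip(row, x))) for row in W1]
    return sum(w * a for w, a in zip(w2, hidden))


def nonlinearity_gap(scale, x):
    """Relative difference between the tanh network and the same network with tanh replaced
    by the identity, after multiplying every weight by `scale` (small scale ~ large lambda)."""
    W1 = [[scale * w for w in row] for row in BASE_W1]
    w2 = [scale * w for w in BASE_W2]
    y_tanh = forward(x, W1, w2, math.tanh)
    y_linear = forward(x, W1, w2, lambda z: z)
    return abs(y_tanh - y_linear) / abs(y_linear)


if __name__ == "__main__":
    x = [1.0, 1.0]
    for scale in [2.0, 1.0, 0.5, 0.1, 0.01]:
        print(f"weight scale = {scale:5.2f}   relative gap from linear model = {nonlinearity_gap(scale, x):.6f}")
```

With these values the gap is roughly 55% at scale 2 and shrinks to well under 0.1% at scale 0.01: the smaller the weights, the closer the tanh network is to a purely linear model.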

------------------------------------------------