1. API resource object: Deployment

Deployment YAML example

vim nginx-deploy.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: myng
  name: ng-deploy
spec:
  replicas: 2  # number of replicas
  selector:
    matchLabels:
      app: myng
  template:
    metadata:
      labels:
        app: myng
    spec:
      containers:
      - name: myng
        image: nginx:1.25.2
        ports:
        - name: myng-port
          containerPort: 80

Create the Deployment from the YAML:

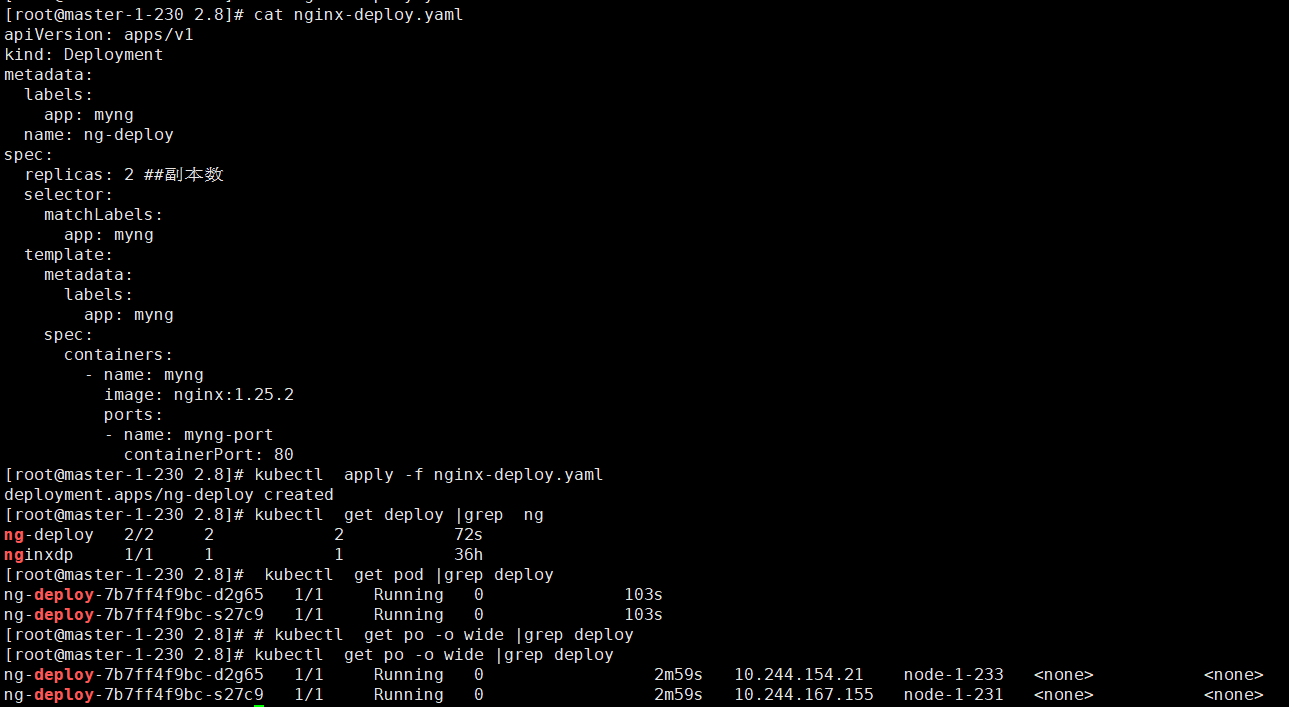

# kubectl apply -f nginx-deploy.yaml
deployment.apps/ng-deploy created

Check the result:

# kubectl get deploy |grep ng
ng-deploy   2/2   2   2   72s
nginxdp     1/1   1   1   36h
# kubectl get pod |grep deploy
ng-deploy-7b7ff4f9bc-d2g65   1/1   Running   0   103s
ng-deploy-7b7ff4f9bc-s27c9   1/1   Running   0   103s

See which node each pod was scheduled to:

# kubectl get po -o wide |grep deploy
ng-deploy-7b7ff4f9bc-d2g65   1/1   Running   0   2m59s   10.244.154.21    node-1-233   <none>   <none>
ng-deploy-7b7ff4f9bc-s27c9   1/1   Running   0   2m59s   10.244.167.155   node-1-231   <none>   <none>
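
The label app: myng appears three times in the Deployment manifest: on the Deployment itself, in spec.selector.matchLabels, and on the Pod template. The last two must agree, because the API server rejects a Deployment whose selector does not match its template labels. A minimal Python sketch of that check follows. The helper name selector_matches_template is hypothetical, the manifest is reduced to the fields the check needs, and only matchLabels is handled (matchExpressions is ignored).

```python
# Hypothetical helper (not part of kubectl or any Kubernetes client library).
# It mirrors, in simplified form, the rule that a Deployment's selector
# must match the labels of its Pod template.

def selector_matches_template(deployment):
    """Return True if every selector.matchLabels pair is present in the template labels."""
    spec = deployment["spec"]
    match_labels = spec["selector"].get("matchLabels", {})
    template_labels = spec["template"]["metadata"].get("labels", {})
    return all(template_labels.get(key) == value for key, value in match_labels.items())


# The ng-deploy manifest from this section, reduced to the relevant fields.
ng_deploy = {
    "kind": "Deployment",
    "metadata": {"name": "ng-deploy", "labels": {"app": "myng"}},
    "spec": {
        "replicas": 2,
        "selector": {"matchLabels": {"app": "myng"}},
        "template": {"metadata": {"labels": {"app": "myng"}}},
    },
}

print(selector_matches_template(ng_deploy))  # True
```

Changing the template label to any other value makes the function return False, which corresponds to the case where kubectl apply would fail validation.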

2. API resource object: Service

Service (svc for short) YAML example:

vim ng-svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: ngx-svc
spec:
  selector:
    app: myng
  ports:
  - protocol: TCP
    port: 8080      # port exposed by the Service
    targetPort: 80  # port on the pod

Create the Service from the YAML:

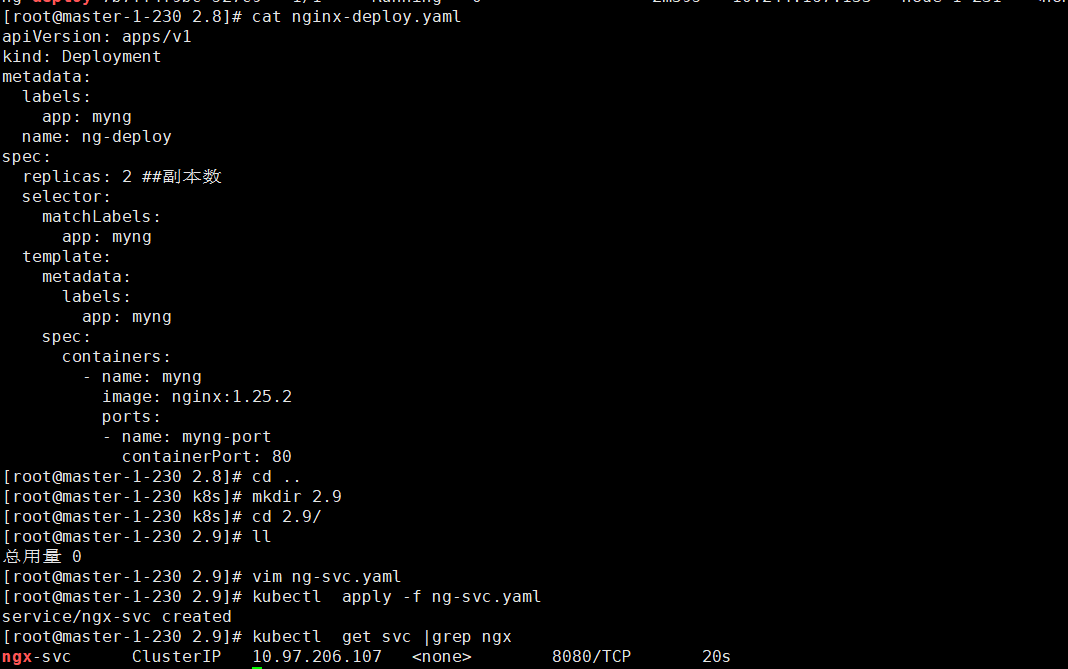

# kubectl apply -f ng-svc.yaml
service/ngx-svc created

Check the result:

# kubectl get svc |grep ngx
ngx-svc   ClusterIP   10.97.206.107   <none>   8080/TCP   20s
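
A Service finds its backends through the label selector, then forwards traffic arriving on port to targetPort on each selected pod. The Python sketch below illustrates that selection under simplifying assumptions: the function service_endpoints is hypothetical, the pod IPs are the ones shown in the kubectl output in this article, and the labels on testpod are assumed for illustration only.

```python
# Hypothetical helper: lists the "ip:targetPort" pairs a Service would forward to.
# Only the first entry of spec.ports is considered, to keep the sketch short.

def service_endpoints(service, pods):
    selector = service["spec"]["selector"]
    target_port = service["spec"]["ports"][0]["targetPort"]
    endpoints = []
    for pod in pods:
        labels = pod["labels"]
        # A pod is selected when it carries every label listed in the selector.
        if all(labels.get(key) == value for key, value in selector.items()):
            endpoints.append(f"{pod['ip']}:{target_port}")
    return endpoints


ngx_svc = {
    "spec": {
        "selector": {"app": "myng"},
        "ports": [{"protocol": "TCP", "port": 8080, "targetPort": 80}],
    }
}

pods = [
    {"name": "ng-deploy-7b7ff4f9bc-d2g65", "ip": "10.244.154.21", "labels": {"app": "myng"}},
    {"name": "ng-deploy-7b7ff4f9bc-s27c9", "ip": "10.244.167.155", "labels": {"app": "myng"}},
    # The labels of testpod are an assumption; the article does not show them.
    {"name": "testpod", "ip": "10.244.167.153", "labels": {"run": "testpod"}},
]

print(service_endpoints(ngx_svc, pods))  # ['10.244.154.21:80', '10.244.167.155:80']
```

On a real cluster, kubectl get endpoints ngx-svc shows the list that Kubernetes actually computed, which can be compared against this reasoning.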

Three Service types

1) ClusterIP

This is the default type, so the type field can be omitted.

spec:
  selector:
    app: myng
  type: ClusterIP
  ports:
  - protocol: TCP
    port: 8080      # Service port
    targetPort: 80  # pod port

2) NodePort

To reach the resources behind a Service directly through the IP of a Kubernetes node, use NodePort. By default, NodePort ports are taken from the range 30000-32767.
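
As a quick illustration of the default range, the Python sketch below checks whether a chosen nodePort value would be accepted. The constant and function names are hypothetical, and the range is only the default: a cluster administrator can change it with the kube-apiserver flag --service-node-port-range.

```python
# Default NodePort range; clusters started with a custom
# --service-node-port-range would use different bounds.
NODE_PORT_MIN = 30000
NODE_PORT_MAX = 32767


def is_valid_node_port(port):
    """Return True if port falls inside the default NodePort range."""
    return NODE_PORT_MIN <= port <= NODE_PORT_MAX


print(is_valid_node_port(30009))  # True: the value used in this article's NodePort example
print(is_valid_node_port(8080))   # False: outside the default range
```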

spec:
  selector:
    app: myng
  type: NodePort
  ports:
  - protocol: TCP
    port: 8080       # Service port
    targetPort: 80   # pod port
    nodePort: 30009  # optional; if omitted, a port is assigned automatically

3) LoadBalancer

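This type relies on public cloud resources (for example Alibaba Cloud or Tencent Cloud): a public IP serves as the entry point, and traffic is load-balanced across all the Pods.

spec:
  selector:
    app: myng
  type: LoadBalancer
  ports:
  - protocol: TCP
    port: 8080      # Service port
    targetPort: 80  # pod port

3. API resource object: DaemonSet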

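Some workloads need a Pod on every node, for example network plugins such as flannel or calico, or log collection agents. A Deployment cannot guarantee this, but a DaemonSet (ds for short) can. The goal of a DaemonSet is to run exactly one Pod on each node of the cluster.

kubectl create cannot generate a YAML template for a DaemonSet. In practice a DaemonSet differs very little from a Deployment: apart from the different kind, the replicas setting must be removed.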
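
Since a Deployment template can be generated (for example with kubectl create deployment ... --dry-run=client -o yaml), one way to obtain a DaemonSet manifest is to convert such a template. The Python sketch below applies exactly the two changes described above. The function deployment_to_daemonset and the Deployment-shaped input are hypothetical, and the sketch assumes the input has no other Deployment-only fields (such as strategy).

```python
import copy


def deployment_to_daemonset(deployment):
    """Return a DaemonSet manifest derived from a Deployment manifest.

    Only the two differences named in the text are applied:
    the kind is changed and spec.replicas is removed.
    """
    daemonset = copy.deepcopy(deployment)  # leave the input untouched
    daemonset["kind"] = "DaemonSet"
    daemonset["spec"].pop("replicas", None)
    return daemonset


# Hypothetical Deployment-shaped input that borrows the ds-demo names from this section.
deployment_manifest = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "ds-demo", "labels": {"app": "ds-demo"}},
    "spec": {
        "replicas": 2,
        "selector": {"matchLabels": {"app": "ds-demo"}},
        "template": {
            "metadata": {"labels": {"app": "ds-demo"}},
            "spec": {"containers": [{"name": "ds-demo", "image": "nginx:1.23.2"}]},
        },
    },
}

daemonset_manifest = deployment_to_daemonset(deployment_manifest)
print(daemonset_manifest["kind"])                # DaemonSet
print("replicas" in daemonset_manifest["spec"])  # False
```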

vi ds-demon.yaml

apiVersion: apps/v1
kind: DaemonSet
metadata:
  labels:
    app: ds-demo
  name: ds-demo
spec:
  selector:
    matchLabels:
      app: ds-demo
  template:
    metadata:
      labels:
        app: ds-demo
    spec:
      containers:
      - name: ds-demo
        image: nginx:1.23.2
        ports:
        - name: mysql-port
          containerPort: 80

Create the DaemonSet from the YAML:

# kubectl apply -f ds-demon.yaml
daemonset.apps/ds-demo created

Check the result:

# kubectl get ds
NAME      DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR   AGE
ds-demo   3         3         3       3            3           <none>          77s
# kubectl get po
NAME                         READY   STATUS    RESTARTS      AGE
ds-demo-6vt22                1/1     Running   0             80s
ds-demo-tl86h                1/1     Running   0             80s
ds-demo-vmsr9                1/1     Running   0             80s
ng-deploy-7b7ff4f9bc-d2g65   1/1     Running   0             22m
ng-deploy-7b7ff4f9bc-s27c9   1/1     Running   0             22m
nginxdp-7cf46d7445-8x6gs     1/1     Running   1 (12h ago)   36h
testpod                      1/1     Running   1 (12h ago)   25h

However, no Pod was started on the master node, because by default the master carries a scheduling restriction.

# kubectl get node
NAME           STATUS   ROLES           AGE   VERSION
master-1-230   Ready    control-plane   18d   v1.27.6
node-1-231     Ready    <none>          18d   v1.27.6
node-1-232     Ready    <none>          18d   v1.27.6
node-1-233     Ready    <none>          11d   v1.27.6
# kubectl describe node master-1-230 |grep -i "taint"
Taints:             node-role.kubernetes.io/control-plane:NoSchedule

Note: a taint marks a node as restricted. If a node has a taint, Pods are normally not scheduled onto it.

Whether that applies to a given Pod depends on one of the Pod's properties, its tolerations: that is, whether the Pod can tolerate the taint on the target node.
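
The interaction between taints and tolerations can be sketched in Python. This is a simplified model of the matching rule, not the scheduler's actual code: the function names are hypothetical, only the NoSchedule and NoExecute effects are treated as blocking, and node selectors, affinity, tolerationSeconds, and the tolerations that Kubernetes adds to DaemonSet Pods automatically are all ignored. The node names and the taint come from the kubectl output above.

```python
# Taint effects that prevent scheduling unless tolerated (PreferNoSchedule is only a preference).
BLOCKING_EFFECTS = {"NoSchedule", "NoExecute"}


def tolerates(toleration, taint):
    """Simplified toleration-matching rule."""
    # A toleration without an effect matches taints of any effect.
    if toleration.get("effect") and toleration["effect"] != taint["effect"]:
        return False
    operator = toleration.get("operator", "Equal")  # Equal is the default operator
    if operator == "Exists":
        # An empty key combined with Exists tolerates every taint.
        return not toleration.get("key") or toleration["key"] == taint["key"]
    # Equal: key and value must both match (a missing value is treated as empty).
    return (toleration.get("key") == taint["key"]
            and toleration.get("value", "") == taint.get("value", ""))


def schedulable(node_taints, tolerations):
    """A node is usable when every blocking taint is tolerated by some toleration."""
    return all(
        any(tolerates(toleration, taint) for toleration in tolerations)
        for taint in node_taints
        if taint["effect"] in BLOCKING_EFFECTS
    )


# Nodes of the example cluster and their taints.
nodes = {
    "master-1-230": [{"key": "node-role.kubernetes.io/control-plane", "effect": "NoSchedule"}],
    "node-1-231": [],
    "node-1-232": [],
    "node-1-233": [],
}

control_plane_toleration = {"key": "node-role.kubernetes.io/control-plane", "effect": "NoSchedule"}


def desired_pods(tolerations):
    """Number of nodes a DaemonSet Pod with these tolerations could run on."""
    return sum(schedulable(taints, tolerations) for taints in nodes.values())


print(desired_pods([]))                          # 3: the master is excluded
print(desired_pods([control_plane_toleration]))  # 4: the master is included
```

Under these assumptions the sketch gives 3 nodes without any toleration, which matches the DESIRED value reported by kubectl get ds earlier in this section.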

To solve this, add a tolerations entry to the Pod spec. Modify the YAML as follows:

# cat ds-demon.yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  labels:
    app: ds-demo
  name: ds-demo
spec:
  selector:
    matchLabels:
      app: ds-demo
  template:
    metadata:
      labels:
        app: ds-demo
    spec:
      tolerations:
      - key: node-role.kubernetes.io/control-plane
        effect: NoSchedule
      containers:
      - name: ds-demo
        image: nginx:1.23.2
        ports:
        - name: mysql-port
          containerPort: 80

Apply the YAML again:

# kubectl apply -f ds-demon.yaml
daemonset.apps/ds-demo configured

Verify:

# kubectl get ds
NAME      DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR   AGE
ds-demo   4         4         3       1            3           <none>          9m13s
# kubectl get po -o wide
NAME                         READY   STATUS    RESTARTS      AGE    IP               NODE           NOMINATED NODE   READINESS GATES
ds-demo-57qcd                1/1     Running   0             86s    10.244.167.157   node-1-231     <none>           <none>
ds-demo-jzlpn                1/1     Running   0             87s    10.244.154.23    node-1-233     <none>           <none>
ds-demo-m8cmz                1/1     Running   0             84s    10.244.29.38     node-1-232     <none>           <none>
ds-demo-sfqnt                1/1     Running   0             112s   10.244.183.193   master-1-230   <none>           <none>
ng-deploy-7b7ff4f9bc-d2g65   1/1     Running   0             31m    10.244.154.21    node-1-233     <none>           <none>
ng-deploy-7b7ff4f9bc-s27c9   1/1     Running   0             31m    10.244.167.155   node-1-231     <none>           <none>
nginxdp-7cf46d7445-8x6gs     1/1     Running   1 (12h ago)   36h    10.244.154.20    node-1-233     <none>           <none>
testpod                      1/1     Running   1 (12h ago)   25h    10.244.167.153   node-1-231     <none>           <none>

4. API resource object: StatefulSet

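Pods are classified as stateless or stateful depending on whether they store data:

- Stateless: the Pod produces no important data while it runs. Even if some data is produced, losing it does not affect the application as a whole. Applications such as Nginx and Tomcat are suited to running stateless.

- Stateful: the Pod produces important data while it runs, and that data must be persisted. Examples are MySQL, Redis, and RabbitMQ.

Deployment and DaemonSet suit stateless workloads, while stateful workloads use StatefulSet (sts for short).

Note: a StatefulSet involves data persistence, which here relies on a StorageClass. An NFS-based StorageClass has to be created first.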

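4.1 Set up NFS on the master node

The NFS server IP address is 192.168.1.230 and the shared directory is /data/nfs.

Install NFS:

yum install -y nfs-utils nfs-server rpcbind

Create the shared directory and start the NFS service: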

# mkdir -p /data/nfs
# echo "/data/nfs/ *(insecure,rw,sync,no_root_squash)" >> /etc/exports
# cat /etc/exports
/data/nfs/ *(insecure,rw,sync,no_root_squash)
# systemctl enable rpcbind
# systemctl enable nfs-server
# systemctl start rpcbind
# systemctl start nfs-server

To make changes to the NFS export configuration take effect, run: exportfs -r

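4.2 Install the StorageClass (SC)

An NFS-backed StorageClass requires the NFS provisioner, which creates NFS PVs automatically. The upstream repository https://github.com/kubernetes-sigs/nfs-subdir-external-provisioner is imported into Gitee and cloned from there.

Download the source code: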

# git clone https://gitee.com/ikubernetesi/nfs-subdir-external-provisioner.git
Cloning into 'nfs-subdir-external-provisioner'...
remote: Enumerating objects: 7784, done.
remote: Counting objects: 100% (7784/7784), done.
remote: Compressing objects: 100% (3052/3052), done.
remote: Total 7784 (delta 4443), reused 7784 (delta 4443), pack-reused 0
Receiving objects: 100% (7784/7784), 8.44 MiB | 1.57 MiB/s, done.
Resolving deltas: 100% (4443/4443), done.
# cd nfs-subdir-external-provisioner/deploy/
# sed -i 's/namespace: default/namespace: kube-system/' rbac.yaml
# kubectl apply -f rbac.yaml
serviceaccount/nfs-client-provisioner created
clusterrole.rbac.authorization.k8s.io/nfs-client-provisioner-runner created
clusterrolebinding.rbac.authorization.k8s.io/run-nfs-client-provisioner created
role.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created
rolebinding.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created

Modify deployment.yaml:

# sed -i 's/namespace: default/namespace: kube-system/' deployment.yaml   # change the namespace to kube-system

Change the nfs-subdir-external-provisioner image registry and the NFS address:

# cat deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
  labels:
    app: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: kube-system
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: registry.cn-hangzhou.aliyuncs.com/kavin1028/nfs-subdir-external-provisioner:v4.0.2
          #image: registry.k8s.io/sig-storage/nfs-subdir-external-provisioner:v4.0.2
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: k8s-sigs.io/nfs-subdir-external-provisioner
            - name: NFS_SERVER
              value: 192.168.1.230
            - name: NFS_PATH
              value: /data/nfs
      volumes:
        - name: nfs-client-root
          nfs:
            server: 192.168.1.230
            path: /data/nfs

Apply the YAML:

# kubectl apply -f deployment.yaml
deployment.apps/nfs-client-provisioner created
# kubectl apply -f class.yaml
storageclass.storage.k8s.io/nfs-client created

StorageClass YAML example

# cat class.yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-client
provisioner: k8s-sigs.io/nfs-subdir-external-provisioner # or choose another name, must match deployment's env PROVISIONER_NAME
parameters:
  archiveOnDelete: "false"

StatefulSet example

vim redis-sts.yaml

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: redis-sts
spec:
  serviceName: redis-svc  # a serviceName is required: a StatefulSet must be associated with a Service
  volumeClaimTemplates:
  - metadata:
      name: redis-pvc
    spec:
      storageClassName: nfs-client
      accessModes:
      - ReadWriteMany
      resources:
        requests:
          storage: 500Mi
  replicas: 2
  selector:
    matchLabels:
      app: redis-sts
  template:
    metadata:
      labels:
        app: redis-sts
    spec:
      containers:
      - image: redis:6.2
        name: redis
        ports:
        - containerPort: 6379
        volumeMounts:
        - name: redis-pvc
          mountPath: /data

vi redis-svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: redis-svc
spec:
  selector:
    app: redis-sts
  ports:
  - port: 6379
    protocol: TCP
    targetPort: 6379

Apply the YAML files:

# kubectl apply -f redis-sts.yaml -f redis-svc.yaml

statefulset.apps/redis-sts created

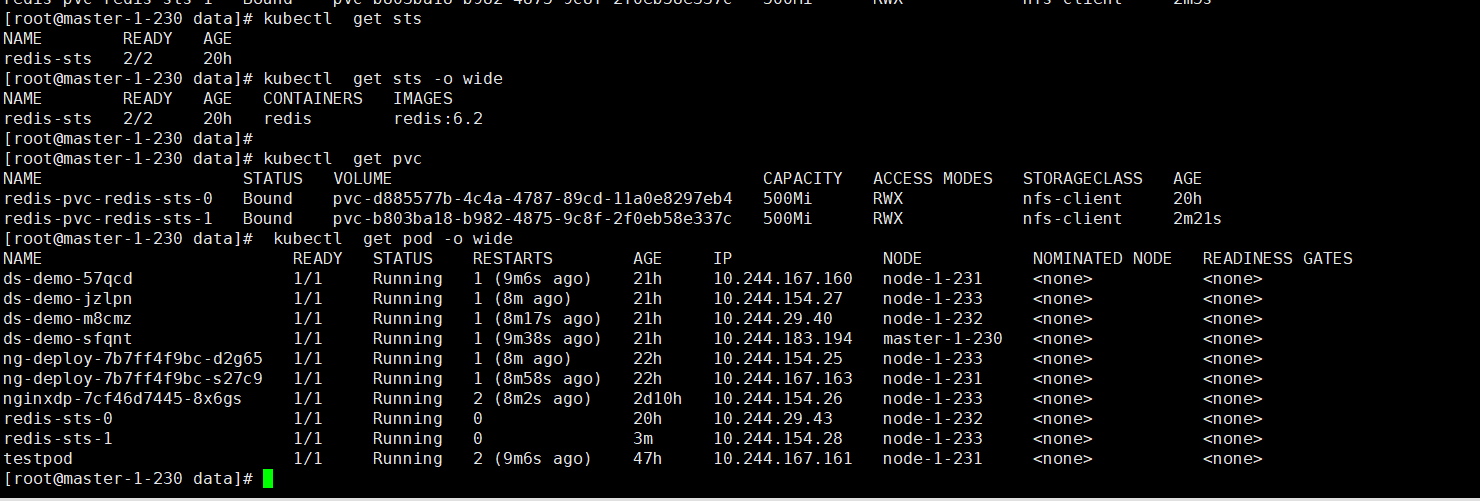

service/redis-svc created对于Sts的Pod,有如下特点:

- Pod名固定有序,后缀从0开始

- 域名固定,格式为:Pod名.Svc名,例如 redis-sts-0.redis-svc

- 每个Pod对应的PVC也是固定
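
The three characteristics above follow fixed naming patterns, which the Python sketch below spells out for the redis-sts example. The function statefulset_identities is hypothetical. The namespace default and the cluster domain cluster.local are assumptions (they are the usual defaults, but the article does not state them). The PVC pattern is <volumeClaimTemplate name>-<Pod name>.

```python
def statefulset_identities(sts_name, service_name, claim_template, replicas,
                           namespace="default", cluster_domain="cluster.local"):
    """Return the stable Pod name, DNS names, and PVC name for each replica."""
    identities = []
    for ordinal in range(replicas):  # ordinals start at 0
        pod = f"{sts_name}-{ordinal}"
        identities.append({
            "pod": pod,
            "dns_short": f"{pod}.{service_name}",
            "dns_fqdn": f"{pod}.{service_name}.{namespace}.svc.{cluster_domain}",
            "pvc": f"{claim_template}-{pod}",
        })
    return identities


# Values taken from redis-sts.yaml: name, serviceName, volumeClaimTemplates name, replicas.
for identity in statefulset_identities("redis-sts", "redis-svc", "redis-pvc", 2):
    print(identity["pod"], identity["dns_short"], identity["pvc"])
# redis-sts-0 redis-sts-0.redis-svc redis-pvc-redis-sts-0
# redis-sts-1 redis-sts-1.redis-svc redis-pvc-redis-sts-1
```

Because a recreated Pod gets the same ordinal, it also gets the same PVC name, which is why data written by redis-sts-0 is still available after the Pod is deleted and recreated.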

验证:

ping 域名

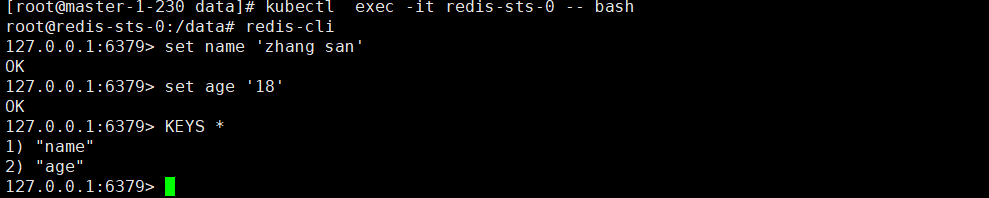

kubectl exec -it redis-sts-0 -- bash ##进去可以ping redis-sts-0.redis-svc 和 redis-sts-1.redis-svc创建key

kubectl exec -it redis-sts-0 -- redis-cli

127.0.0.1:6379> set name 'zhang san'

OK

127.0.0.1:6379> set age '18'

OK

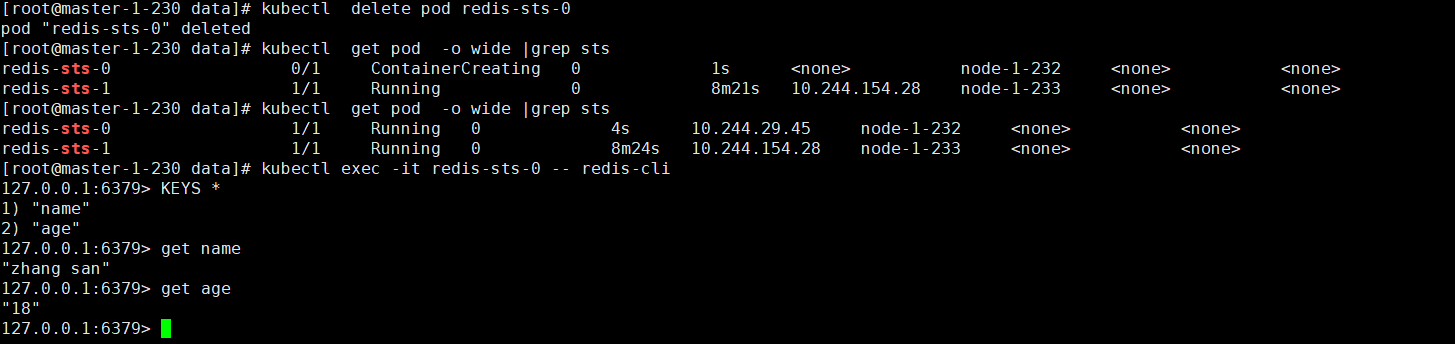

模拟故障

删除后,它会自动重新创建同名Pod,再次进入查看redis key

kubectl exec -it redis-sts-0 -- redis-cli

127.0.0.1:6379> get name

"zhangsan"

127.0.0.1:6379> get age

"18"数据没有丢失