1. Test environment

1.1 Server IP allocation

| Node | IP |
|---|---|
| master-vip | 10.255.61.20 |
| tmp-k8s-master1 | 10.255.61.21 |
| tmp-k8s-master2 | 10.255.61.22 |
| tmp-k8s-master3 | 10.255.61.23 |
| tmp-k8s-node1 | 10.255.61.24 |
| tmp-k8s-node2 | 10.255.61.25 |
| tmp-k8s-node3 | 10.255.61.26 |
| tmp-nfs | 10.255.61.27 |
| tmp-mgr01 | 10.255.61.28 |
| tmp-mgr02 | 10.255.61.29 |
| tmp-mgr03 | 10.255.61.30 |
| mgr-vip | 10.255.61.31 |

1.2 Monitoring endpoints

Prometheus: http://10.255.61.20:30090

Grafana: http://10.255.61.20:31280 (username/password: admin/Gioneco@2020)

Alertmanager: http://10.255.61.20:30903/

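2. Monitoring all namespaces

By default, Prometheus only scrapes three namespaces (default, kube-system, and monitoring), and its ClusterRole does not cover every resource type. Extend the ClusterRole to widen what it can monitor.

2.1 Grant additional permissions

Run kubectl get clusterrole to locate the ClusterRole (ClusterRoles are cluster-scoped, so -n monitoring is not needed), then edit prometheus-kube-prometheus-prometheus and add the following rule: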

    - apiGroups:      # added rule
      - ""
      resources:
      - services
      - endpoints
      - pods
      - nodes
      - nodes/metrics
      - nodes/proxy   # required for cAdvisor
      verbs:
      - get
      - list
      - watch

cAdvisor metrics are served at /api/v1/nodes/[node name]/proxy/metrics/cadvisor.

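2.2 Add a custom scrape configuration

Add the additionalScrapeConfigs field to the Prometheus resource.

The generated Prometheus configuration cannot be edited directly. First look up the resource name with kubectl -n monitoring get Prometheus.

Then run kubectl -n monitoring edit Prometheus k8s, where k8s is the name of the Prometheus resource; the name may differ between environments.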

    ...
    spec:
      additionalScrapeConfigs:
        name: additional-configs
        key: prometheus-additional.yaml
    ...

After that, create a Secret named additional-configs to hold the custom scrape jobs; its prometheus-additional.yaml key contains the scrape configuration.

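2.3 Add service discovery

All in-cluster services, including databases and middleware, are monitored through automatic discovery.

Edit prometheus-additional.yaml and add the following: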

    - job_name: 'kubernetes-endpoints'
      kubernetes_sd_configs:
      - role: endpoints
      relabel_configs:
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape]
        action: keep
        regex: true
      # - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scheme]
      #   action: replace
      #   target_label: __scheme__
      #   regex: (https?)
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_path]
        action: replace
        target_label: __metrics_path__
        regex: (.+)
      - source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port]
        action: replace
        target_label: __address__
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
      - action: labelmap
        regex: __meta_kubernetes_service_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        action: replace
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_service_name]
        action: replace
        target_label: kubernetes_name
      - source_labels: [__meta_kubernetes_pod_name]
        action: replace
        target_label: kubernetes_pod_name
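To see what the keep, path, and address rules do to a discovered endpoint, the sketch below emulates them in Python. It is only an approximation of Prometheus relabeling, written for illustration: the function name relabel_target and the sample address are assumptions, and the other rules (labelmap, namespace, pod name) are left out.

```python
import re

# Same pattern as the __address__ rewrite rule in the kubernetes-endpoints job.
ADDRESS_RE = re.compile(r"([^:]+)(?::\d+)?;(\d+)")


def relabel_target(address, annotations):
    """Return (address, metrics_path) for an endpoint, or None if it is dropped.

    `annotations` is the Service's annotation dict,
    e.g. {"prometheus.io/scrape": "true", "prometheus.io/port": "9121"}.
    """
    # action: keep with regex "true": only annotated Services survive.
    if annotations.get("prometheus.io/scrape", "") != "true":
        return None

    # __metrics_path__ is only replaced when the path annotation is non-empty.
    path = annotations.get("prometheus.io/path") or "/metrics"

    # Source label values are joined with ';' and the regex must match
    # the whole joined string.
    joined = f"{address};{annotations.get('prometheus.io/port', '')}"
    match = ADDRESS_RE.fullmatch(joined)
    if match:
        address = f"{match.group(1)}:{match.group(2)}"
    return address, path


if __name__ == "__main__":
    # Hypothetical pod address; the port annotation overrides the original port.
    print(relabel_target(
        "10.244.1.7:6379",
        {"prometheus.io/scrape": "true", "prometheus.io/port": "9121"},
    ))
```

A Service without the prometheus.io/scrape annotation returns None, which corresponds to the target never showing up under the kubernetes-endpoints job.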

Recreate the Secret:

    kubectl delete secret additional-configs -n monitoring
    kubectl create secret generic additional-configs --from-file=prometheus-additional.yaml -n monitoring
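Deleting and recreating the Secret leaves a short window in which it does not exist. One possible alternative, sketched here under assumptions, is to render the Secret as a manifest and feed it to kubectl apply -f, which also accepts JSON. The function name build_secret_manifest and the sample job are hypothetical; the Secret name, namespace, and key are the ones used in this document.

```python
import base64
import json


def build_secret_manifest(config_text,
                          name="additional-configs",
                          namespace="monitoring",
                          key="prometheus-additional.yaml"):
    """Return a Secret manifest (as a dict) holding the scrape config under `key`."""
    # Values under `data` must be base64-encoded.
    encoded = base64.b64encode(config_text.encode("utf-8")).decode("ascii")
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "Opaque",
        "data": {key: encoded},
    }


if __name__ == "__main__":
    # Hypothetical one-job config, used only to show the output shape.
    sample = "- job_name: 'example'\n  static_configs:\n  - targets: ['10.255.61.21:9100']\n"
    print(json.dumps(build_secret_manifest(sample), indent=2))
```

Saving the printed JSON to a file and applying it updates the Secret in place, so the delete step is not needed.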

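3. Monitored components

3.1 Kubernetes components

Kubernetes is deployed from binaries. The components to monitor are etcd, kube-proxy, apiserver, kubelet, kube-scheduler, controller-manager, coredns, calico, and ingress. Components deployed as binaries are added by their external IP addresses.

3.1.1 Monitoring etcd

1) Create the Endpoints and Service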

    ---
    apiVersion: v1
    kind: Endpoints
    metadata:
      labels:
        k8s-app: etcd1
      name: etcd
      namespace: kube-system
    subsets:
    - addresses:
      - ip: 10.255.61.21
      - ip: 10.255.61.22
      - ip: 10.255.61.23
      ports:
      - name: etcd    # name
        port: 2379    # port
        protocol: TCP
    ---
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        k8s-app: etcd1
      name: etcd
      namespace: kube-system
    spec:
      ports:
      - name: etcd
        port: 2379
        protocol: TCP
        targetPort: 2379
      sessionAffinity: None
      type: ClusterIP

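2) Create a Secret for the certificates

    kubectl -n monitoring create secret generic etcd-certs --from-file=/etc/kubernetes/pki/etcd/ca.crt --from-file=/etc/kubernetes/pki/etcd/server.crt --from-file=/etc/kubernetes/pki/etcd/server.key

3) Mount the Secret

Run kubectl get Prometheus -n monitoring to find the Prometheus resource, then list the Secret under spec:

    spec:
      ……
      secrets:
      - etcd-certs

With this in place, the Secret's files are mounted into the Prometheus pods under /etc/prometheus/secrets/etcd-certs/, which is the path the scrape job in the next step refers to.

4) Edit prometheus-additional.yaml

Add the following: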

    - job_name: 'k8s-etcd'
      scheme: https
      metrics_path: /metrics
      tls_config:
        ca_file: /etc/prometheus/secrets/etcd-certs/ca.crt
        cert_file: /etc/prometheus/secrets/etcd-certs/server.crt
        key_file: /etc/prometheus/secrets/etcd-certs/server.key
      static_configs:
      - targets:
        - 10.255.61.21:2379
        - 10.255.61.22:2379
        - 10.255.61.23:2379

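5) Recreate the Secret

The additional-configs Secret already exists from section 2.3, so delete it before creating it again:

    kubectl delete secret additional-configs -n monitoring
    kubectl create secret generic additional-configs --from-file=prometheus-additional.yaml -n monitoring

6) Verify

Prometheus reloads the configuration automatically; after a short wait the etcd targets appear.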

3.1.2 Monitoring kube-scheduler and controller-manager

1) Edit prometheus-additional.yaml

Neither component needs certificates here; just add the scrape jobs:

    - job_name: kubernetes-schedule
      honor_timestamps: true
      metrics_path: /metrics
      scheme: http
      static_configs:
      - targets:
        - 10.255.61.21:10251
        - 10.255.61.22:10251
        - 10.255.61.23:10251
    - job_name: controller-manager
      honor_timestamps: true
      metrics_path: /metrics
      scheme: http
      static_configs:
      - targets:
        - 10.255.61.21:10252
        - 10.255.61.22:10252
        - 10.255.61.23:10252
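The static jobs in this document all follow one pattern: a job name, a port, and a list of node IPs. As a convenience, a fragment like the ones above could be generated rather than typed by hand. The helper below is a minimal sketch; render_static_job is a hypothetical name, and it emits only the fields shown (no honor_timestamps, no TLS settings).

```python
def render_static_job(job_name, hosts, port, scheme="http", metrics_path="/metrics"):
    """Render one static scrape job as a YAML fragment for prometheus-additional.yaml."""
    lines = [
        f"- job_name: '{job_name}'",
        f"  scheme: {scheme}",
        f"  metrics_path: {metrics_path}",
        "  static_configs:",
        "  - targets:",
    ]
    # One target per host, all on the same port.
    lines += [f"    - {host}:{port}" for host in hosts]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    masters = ["10.255.61.21", "10.255.61.22", "10.255.61.23"]
    print(render_static_job("kubernetes-schedule", masters, 10251), end="")
    print(render_static_job("controller-manager", masters, 10252), end="")
```

The output can be appended to prometheus-additional.yaml before the Secret is recreated.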

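2) Recreate the Secret

    kubectl delete secret additional-configs -n monitoring
    kubectl create secret generic additional-configs --from-file=prometheus-additional.yaml -n monitoring

3) Verify

3.1.3 kube-proxy

1) Edit the configuration file

By default kube-proxy serves metrics on the loopback address only. To scrape it from outside, set metricsBindAddress in /etc/kubernetes/kube-proxy.conf to x.x.x.x:10249, where x.x.x.x is the node's own IP address. After the file is changed the service restarts automatically; confirm with systemctl status kube-proxy.

2) Edit prometheus-additional.yaml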

    - job_name: 'k8s-kube-proxy'
      scheme: http
      metrics_path: /metrics
      static_configs:
      - targets:
        - 10.255.61.21:10249
        - 10.255.61.22:10249
        - 10.255.61.23:10249
        - 10.255.61.24:10249
        - 10.255.61.25:10249
        - 10.255.61.26:10249

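3) Apply the configuration

    kubectl delete secret additional-configs -n monitoring
    kubectl create secret generic additional-configs --from-file=prometheus-additional.yaml -n monitoring

4) Verify

3.1.4 kubelet, apiserver, and coredns

In this test environment, these three were scraped automatically once kube-prometheus was installed; no changes were needed.

3.1.5 Monitoring calico

1) Change the configuration

calico does not expose metrics by default. Make the following change: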

    # kubectl edit ds calico-node -n kube-system
    # Edit the container named calico-node and
    # set its env var FELIX_PROMETHEUSMETRICSENABLED to "True"
    spec:
      containers:
      - env:
        ......
        - name: FELIX_PROMETHEUSMETRICSENABLED
          value: "True"
        - name: FELIX_PROMETHEUSMETRICSPORT
          value: "9091"

With FELIX_PROMETHEUSMETRICSENABLED set to "True", Felix serves metrics on port 9091.

Test:

    # curl http://localhost:9091/metrics
    # HELP felix_active_local_endpoints Number of active endpoints on this host.
    # TYPE felix_active_local_endpoints gauge
    ......
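When checking many nodes, reading the curl output by eye gets tedious. The sketch below parses the text exposition format and checks whether a given metric is present. It is a simplified parser that assumes samples carry no trailing timestamp; the function names and the sample value 3 are illustrative, not taken from a real node.

```python
def parse_metrics(text):
    """Parse Prometheus text exposition lines into {series: value}, skipping comments."""
    samples = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # HELP/TYPE comments and blank lines
        # Assumes no timestamp: the value is the last space-separated field.
        series, _, value = line.rpartition(" ")
        try:
            samples[series] = float(value)
        except ValueError:
            continue  # not a sample line
    return samples


def has_metric(samples, name):
    """True if any parsed series is `name`, with or without labels."""
    return any(s == name or s.startswith(name + "{") for s in samples)


# Illustrative payload in the shape returned by curl http://localhost:9091/metrics
SAMPLE = """\
# HELP felix_active_local_endpoints Number of active endpoints on this host.
# TYPE felix_active_local_endpoints gauge
felix_active_local_endpoints 3
"""

if __name__ == "__main__":
    parsed = parse_metrics(SAMPLE)
    print(has_metric(parsed, "felix_active_local_endpoints"))
```

The same check works for any exporter in this document, for example the output of curl localhost:9104/metrics from mysqld_exporter.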

2) Add a Service

    apiVersion: v1
    kind: Service
    metadata:
      annotations:
        prometheus.io/scrape: "true"
        prometheus.io/port: "9091"
      name: calico-svc
      namespace: kube-system
    spec:
      type: ClusterIP
      ports:
      - protocol: TCP
        port: 9091
        targetPort: 9091
      selector:
        k8s-app: calico-node

3) Verify

3.1.6 Monitoring ingress

1) Edit the Service

Add these annotations:

    prometheus.io/port: "10254"
    prometheus.io/scrape: "true"

2) Verify

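3.2 Database monitoring

3.2.1 Monitoring redis

This covers redis-cluster and redis-sentinel.

1) Edit the redis-cluster-metrics Service and add the following:

    annotations:
      prometheus.io/scrape: "true"
      prometheus.io/port: "9121"

Edit the redis-sentinel Service in the same way.

2) Verify

After a short wait, the redis targets appear under the endpoints job, one target per redis replica.

3) Add the Grafana dashboard

Dashboard ID 763, tuned from the original to cover both redis-cluster and redis-sentinel.

Link: http://10.255.61.20:31280/d/0eerG9eVk/redis-dashboard?orgId=1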

3.2.2 Monitoring mysql

1) Deploy mysqld_exporter

Run the following steps on all three nodes.

Download the package from the official releases page: https://github.com/prometheus/mysqld_exporter/releases

    # Unpack
    tar -xvzf mysqld_exporter-0.14.0.linux-amd64.tar.gz
    mv mysqld_exporter-0.14.0.linux-amd64 mysqld_exporter

Create a user in MySQL:

    CREATE USER 'exporter'@'%' IDENTIFIED BY 'Yh9YKb7P';
    GRANT PROCESS, REPLICATION CLIENT, SELECT ON *.* TO 'exporter'@'%';
    FLUSH PRIVILEGES;

Verify the new user:

    USE mysql;
    SELECT host, user FROM user;

Create the configuration file

Create .my.cnf in the mysqld_exporter directory:

    [client]
    user = exporter
    password = Yh9YKb7P
    host = 10.255.60.121
    port = 3306
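A missing key in .my.cnf usually only surfaces once the exporter fails to connect. As a quick pre-flight check, the file can be read with Python's configparser. This is a sketch under assumptions: missing_my_cnf_keys is a hypothetical helper, and EXPECTED_KEYS simply lists the four keys this document sets rather than what mysqld_exporter strictly requires.

```python
import configparser

# The keys this document puts in the [client] section.
EXPECTED_KEYS = ("user", "password", "host", "port")


def missing_my_cnf_keys(text):
    """Return the expected [client] keys that are absent from a .my.cnf body."""
    # interpolation=None so that '%' in a password is taken literally.
    parser = configparser.ConfigParser(interpolation=None)
    try:
        parser.read_string(text)
    except configparser.Error:
        return ["[client]"]
    if not parser.has_section("client"):
        return ["[client]"]
    return [key for key in EXPECTED_KEYS if not parser.has_option("client", key)]


if __name__ == "__main__":
    with open("/root/mysqld_exporter/.my.cnf", encoding="utf-8") as handle:
        print(missing_my_cnf_keys(handle.read()) or "all expected keys present")
```

The path in the usage section matches the --config.my-cnf value used in the systemd unit in this document; adjust it if the exporter lives elsewhere.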

Create the systemd unit file with the MGR collectors enabled

    cd /usr/lib/systemd/system
    cat mysqld_exporter.service
    [Unit]
    Description=https://prometheus.io
    [Service]
    Restart=on-failure
    ExecStart=/root/mysqld_exporter/mysqld_exporter --config.my-cnf=/root/mysqld_exporter/.my.cnf --web.listen-address=:9104 --collect.perf_schema.replication_group_members --collect.perf_schema.replication_group_member_stats --collect.perf_schema.replication_applier_status_by_worker
    [Install]
    WantedBy=multi-user.target

Start and test

    # Start the service
    systemctl daemon-reload
    systemctl start mysqld_exporter.service
    # Check its status
    systemctl status mysqld_exporter.service
    # Enable it at boot
    systemctl enable mysqld_exporter.service
    # Test the metrics endpoint
    curl localhost:9104/metrics

2) Edit prometheus-additional.yaml

    - job_name: 'mysql-exporter'
      metrics_path: /metrics
      static_configs:
      - targets:
        - 10.255.61.28:9104
        - 10.255.61.29:9104
        - 10.255.61.30:9104

3) Apply the configuration

    kubectl delete secret additional-configs -n monitoring
    kubectl create secret generic additional-configs --from-file=prometheus-additional.yaml -n monitoring

4) Verify

5) Add the Grafana dashboard

Dashboard ID 11323, extended from the original with MGR panels.

Link: http://10.255.61.20:31280/d/MQWgroiiz/mysql-overview?orgId=1&refresh=1m

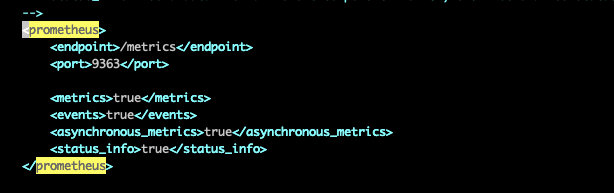

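3.2.3 Monitoring clickhouse

clickhouse does not enable its Prometheus endpoint by default; the configuration file has to be changed and remounted.

1) Edit the configuration file

Enter the container, locate config.xml, and uncomment the prometheus section.

2) Regenerate the ConfigMap

Create the new ConfigMap, then update the mounted file in the StatefulSet:

    kubectl create cm new-clickhouse-config --from-file=./config.xml -n dw

3) Create the Service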

    apiVersion: v1
    kind: Service
    metadata:
      annotations:
        prometheus.io/scrape: "true"
        prometheus.io/port: "9363"
      name: clickhouse-svc-monitor
      namespace: dw
    spec:
      type: ClusterIP
      ports:
      - protocol: TCP
        port: 9363
        targetPort: 9363
      selector:
        clickhouse.altinity.com/chi: clickhouse

4) Verify

5) Add the Grafana dashboard, ID 14192

Link: http://10.255.61.20:31280/d/thEkJB_Mz/clickhouse?orgId=1&refresh=30s

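3.3 Middleware monitoring

This covers kafka, minio, harbor, emqx, and consul.

3.3.1 Monitoring kafka

1) Change the kafka-exporter Deployment

Authentication is enabled on kafka. When kafka is deployed with helm, the exporter is created automatically, and kafka-exporter connects as the ordinary user consumer, which lacks the permissions to read topic information. The command arguments in the Deployment therefore need to be changed.

2) Edit the Service

Add the following to the kafka-test1-metrics Service:

    annotations:
      prometheus.io/port: "9308"
      prometheus.io/scrape: "true"

3) Verify

4) Add the Grafana dashboard

Dashboard ID: 10122

Link: http://10.255.61.20:31280/d/kafka-topics/kafka-dashboard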

3.3.2 Monitoring minio

1) Change the Service to NodePort. Automatic discovery scrapes /metrics by default, whereas minio serves its metrics at /minio/v2/metrics/cluster, so adding minio by external IP is simpler here.

    kubectl edit svc minio -n minio

Change the Service type to NodePort.

2) Edit prometheus-additional.yaml

    - job_name: 'minio-cluster'
      metrics_path: /minio/v2/metrics/cluster
      static_configs:
      - targets:
        - 10.255.61.20:39000

3) Apply the configuration

    kubectl delete secret additional-configs -n monitoring
    kubectl create secret generic additional-configs --from-file=prometheus-additional.yaml -n monitoring

4) Verify

5) Add the Grafana dashboard

minio dashboard ID: 13502, with the panels tuned from the original.

Link: http://10.255.61.20:31280/d/TgmJnqnnk/minio-cluster-dashboard?orgId=1

3.3.3 Monitoring emqx

emqx currently runs version 4.x, which needs Pushgateway for monitoring.

1) Deploy Pushgateway

pushgateway-deploy.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app.kubernetes.io/name: pushgateway
        app.kubernetes.io/version: v1.0.1
      name: pushgateway
      namespace: emqx
    spec:
      selector:
        matchLabels:
          app.kubernetes.io/name: pushgateway
      replicas: 1
      revisionHistoryLimit: 5
      strategy:
        type: RollingUpdate
        rollingUpdate:
          maxSurge: "25%"
          maxUnavailable: "25%"
      template:
        metadata:
          labels:
            app.kubernetes.io/name: pushgateway
            app.kubernetes.io/version: v1.0.1
        spec:
          containers:
          - name: pushgateway
            image: quay.io/prometheus/pushgateway:v1.0.1
            imagePullPolicy: IfNotPresent
            livenessProbe:
              initialDelaySeconds: 60
              periodSeconds: 10
              successThreshold: 1
              failureThreshold: 3
              httpGet:
                path: /
                port: "app-port"
            readinessProbe:
              initialDelaySeconds: 60
              periodSeconds: 10
              successThreshold: 1
              failureThreshold: 3
              httpGet:
                path: /
                port: "app-port"
            ports:
            - name: "app-port"
              containerPort: 9091
            volumeMounts:
            - name: host-time
              mountPath: /etc/localtime
              readOnly: true
            resources:
              limits:
                memory: "1000Mi"
                cpu: 1
              requests:
                memory: "300Mi"
                cpu: 300m
          volumes:
          - name: host-time
            hostPath:
              path: /etc/localtime

pushgateway-svc.yaml

    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app.kubernetes.io/name: pushgateway
        app.kubernetes.io/version: v1.0.1
      name: pushgateway-np
      namespace: emqx
    spec:
      type: NodePort
      ports:
      - protocol: TCP
        port: 9091
        nodePort: 30910
      selector:
        app.kubernetes.io/name: pushgateway
        app.kubernetes.io/version: v1.0.1

2) Verify

Pushgateway is exposed through a NodePort because emqx pushes its metrics to Pushgateway, and Prometheus then scrapes them from there.
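Before wiring up emqx, it can help to confirm that the NodePort accepts pushes at all. The sketch below builds a request against Pushgateway's /metrics/job/<job> path using only the standard library. The job name emqx_demo and the metric demo_connections are made up for the test, and the helper assumes names that need no URL escaping; emqx itself pushes through its own plugin, not through this code.

```python
import urllib.request


def build_push_request(gateway, job, metrics, instance=None):
    """Build (but do not send) a PUT request that replaces one metric group."""
    url = f"{gateway.rstrip('/')}/metrics/job/{job}"
    if instance:
        url += f"/instance/{instance}"
    # Text exposition format: one "name value" sample per line, newline-terminated.
    body = "".join(f"{name} {value}\n" for name, value in metrics.items())
    return urllib.request.Request(
        url,
        data=body.encode("utf-8"),
        method="PUT",
        headers={"Content-Type": "text/plain; version=0.0.4"},
    )


if __name__ == "__main__":
    request = build_push_request(
        "http://10.255.61.20:30910", "emqx_demo", {"demo_connections": 12}
    )
    print(request.get_method(), request.full_url)
    # To actually push, uncomment the next line on a host that can reach the gateway:
    # urllib.request.urlopen(request, timeout=5)
```

After a successful push, the demo group shows up in the Pushgateway web UI on the same port and can be deleted there.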

3) Edit the emqx configuration and remount it

Starting with EMQX Enterprise v4.1.0, emqx_statsd was renamed emqx_prometheus. This plugin pushes the data to Pushgateway.

Create emqx_prometheus.conf with the following content:

    touch emqx_prometheus.conf

    prometheus.push.gateway.server = http://10.255.61.20:30910
    prometheus.interval = 15000

Create a ConfigMap from it:

    kubectl create configmap emqx-prometheus-config --from-file=./emqx_prometheus.conf -n emqx

Run kubectl edit sts emqx-cluster -n emqx and mount the configuration file:

    volumeMounts:
    - mountPath: /opt/emqx/etc/plugins/emqx_prometheus.conf
      name: emqx-prometheus
      subPath: emqx_prometheus.conf
    volumes:
    - configMap:
        defaultMode: 420
        name: emqx-prometheus-config
      name: emqx-prometheus

4) Edit prometheus-additional.yaml

    - job_name: 'pushgateway-emqx'
      metrics_path: /metrics
      scrape_interval: 5s
      honor_labels: true
      static_configs:
      # Pushgateway IP address and port
      - targets: ['10.255.61.20:30910']

5) Verify

6) Add the Grafana dashboard

See https://docs.emqx.com/zh/cloud/latest/deployments/prometheus.html#api-%E9%85%8D%E7%BD%AE

Download the Grafana EMQ.json file and import it.

Link: http://10.255.61.20:31280/d/tjRlQw6Zk/emqx-dashboard?orgId=1

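3.3.4 Monitoring harbor

1) Edit the configuration file

harbor was deployed without metrics enabled in values.yaml. Set enabled: true at line 925 and recreate the pods so that the metrics service appears.

2) Edit the Service

Edit the harbor-exporter Service and add these annotations so that it is discovered automatically:

    prometheus.io/port: "8001"
    prometheus.io/scrape: "true"

3) Verify

4) Add the Grafana dashboard

Grafana dashboard ID: 14075, with the panels tuned from the original.

Link: http://10.255.61.20:31280/d/gb9Oy6UMz/habor?orgId=1&refresh=1m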

3.3.5 Monitoring consul

1) Edit the Service

Add these annotations:

    prometheus.io/port: "9107"
    prometheus.io/scrape: "true"

2) Verify

3) Add the Grafana dashboard

Grafana dashboard ID: 12049, with the panels tuned from the original.

Link: http://10.255.61.20:31280/d/g7j0xhjZk/consul-exporter-dashboard?orgId=1

3.4 Container and service monitoring

3.4.1 Installing metrics-server

Monitoring container state, such as replica counts, requires metrics-server. Install it with:

    helm repo add bitnami https://charts.bitnami.com/bitnami
    helm repo update
    helm search repo bitnami/metrics-server
    helm install metrics-server bitnami/metrics-server \
      --set apiService.create=true

Troubleshooting

The deployment fails with a "no such host" error.

Cause: there is no internal DNS server, so metrics-server cannot resolve the node names.

Fix: edit the Deployment and add the following two flags under command:

    command:
    - /metrics-server
    - --kubelet-preferred-address-types=InternalIP,Hostname,InternalDNS,ExternalDNS,ExternalIP
    - --kubelet-insecure-tls
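The fix works because the flag puts InternalIP ahead of Hostname, so the kubelet is reached by IP and no DNS lookup is needed. The sketch below models that ordering in Python. It is a simplified illustration of how the preference list can be read, not metrics-server's actual code; pick_kubelet_address is a hypothetical name, and the address list mirrors the shape of a node's status.addresses.

```python
# Order taken from the --kubelet-preferred-address-types flag above.
PREFERRED = ["InternalIP", "Hostname", "InternalDNS", "ExternalDNS", "ExternalIP"]


def pick_kubelet_address(node_addresses, preferred=PREFERRED):
    """Return the node address whose type comes first in `preferred`, or None."""
    for wanted in preferred:
        for entry in node_addresses:
            if entry["type"] == wanted:
                return entry["address"]
    return None


if __name__ == "__main__":
    # Shape of node.status.addresses for one worker in this environment.
    node1 = [
        {"type": "Hostname", "address": "tmp-k8s-node1"},
        {"type": "InternalIP", "address": "10.255.61.24"},
    ]
    print(pick_kubelet_address(node1))                               # IP first
    print(pick_kubelet_address(node1, ["Hostname", "InternalIP"]))   # name first
```

With a hostname-first order, the second call returns the node name, which is the case that fails when no internal DNS can resolve it.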

2) Add the Grafana dashboard

In Grafana, add dashboard ID: 13105
