作业①

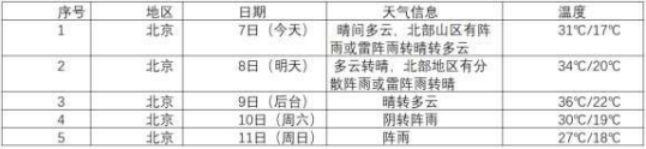

- 要求:在中国气象网(http://www.weather.com.cn)给定城市集的7日天气预报,并保存在数据库。

- 输出信息

- Gitee 文件夹链接为:https://gitee.com/zjy-w/crawl_project/tree/master/作业2/1

(1)代码

from bs4 import BeautifulSoup

from bs4 import UnicodeDammit

import urllib.request

import sqlite3

class WeatherDB:

def openDB(self):

self.con = sqlite3.connect("weathers.db")

self.cursor = self.con.cursor()

try:

self.cursor.execute(

"CREATE TABLE weathers (wCity VARCHAR(16), wDate VARCHAR(16), wWeather VARCHAR(64), wTemp VARCHAR(32), CONSTRAINT pk_weather PRIMARY KEY(wCity, wDate))")

except:

self.cursor.execute("DELETE FROM weathers")

def closeDB(self):

self.con.commit()

self.con.close()

def insert(self, city, date, weather, temp):

sql = "INSERT INTO weathers (wCity, wDate, wWeather, wTemp) VALUES (?, ?, ?, ?)"

try:

self.cursor.execute(sql, (city, date, weather, temp))

except Exception as err:

print(err)

def show(self):

self.cursor.execute("SELECT * FROM weathers")

rows = self.cursor.fetchall()

print("{0:^18}{1:{4}^18}{2:{4}^21}{3:{4}^13}".format("城市", "日期", "天气情况", "气温", chr(12288)))

for row in rows:

print("{0:^18}{1:{4}^20}{2:{4}^18}{3:{4}^18}".format(row[0], row[1], row[2], row[3], chr(12288)))

class WeatherForecast:

def __init__(self):

self.headers = {

"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.3"}

self.cityCode = {"北京": "101010100", "上海": "101020100", "广州": "101280101","深圳": "101280601", }

def forecastCity(self, city):

if city not in self.cityCode.keys():

print(city + " code cannot be found")

return

url = "http://www.weather.com.cn/weather/" + self.cityCode[city] + ".shtml"

try:

req = urllib.request.Request(url, headers=self.headers)

data = urllib.request.urlopen(req)

data = data.read()

dammit = UnicodeDammit(data, ["utf-8", "gbk"])

data = dammit.unicode_markup

soup = BeautifulSoup(data, "lxml")

lis = soup.select("ul[class='t clearfix'] li")

for li in lis:

try:

date = li.select('h1')[0].text

weather = li.select('p[class="wea"]')[0].text

temp = li.select('p[class="tem"]')[0].text.strip()

# print(city,date,weather,temp)

self.db.insert(city, date, weather, temp)

except Exception as err:

print(err)

except Exception as err:

print(err)

def process(self, cities):

self.db = WeatherDB()

self.db.openDB()

for city in cities:

self.forecastCity(city)

self.db.show()

self.db.closeDB()

ws = WeatherForecast()

ws.process(["北京", "上海", "广州", "深圳"])

print("completed")

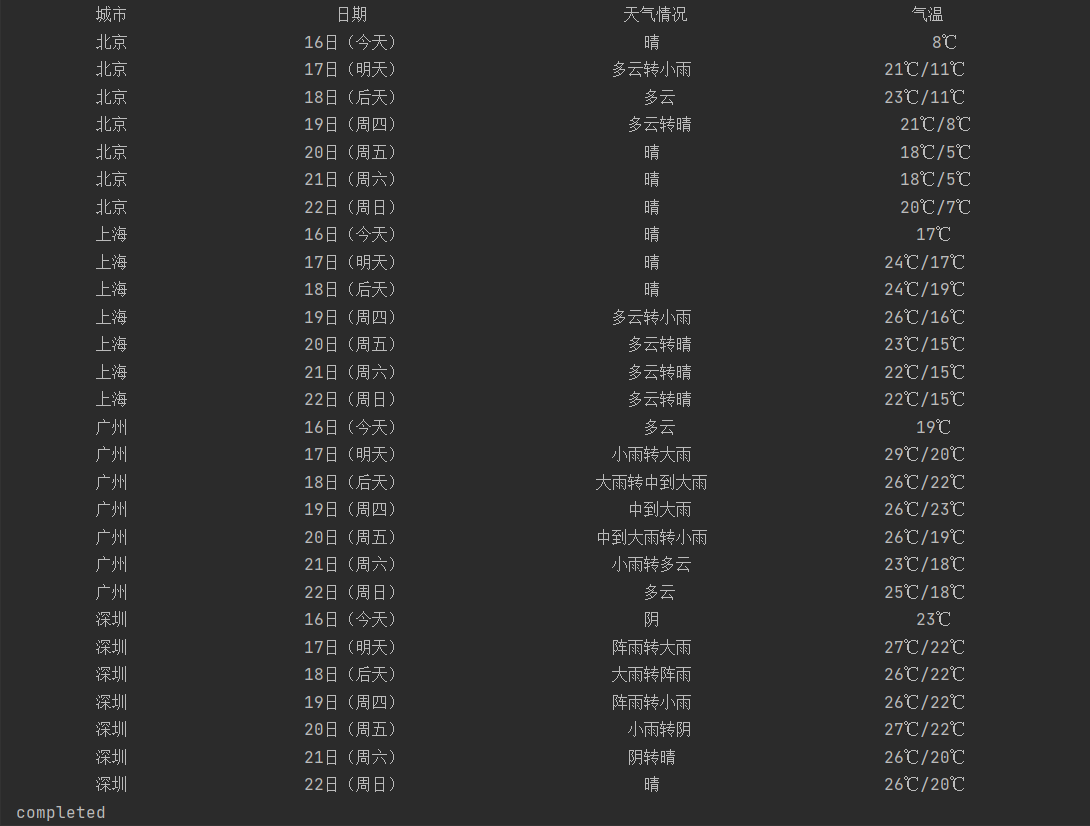

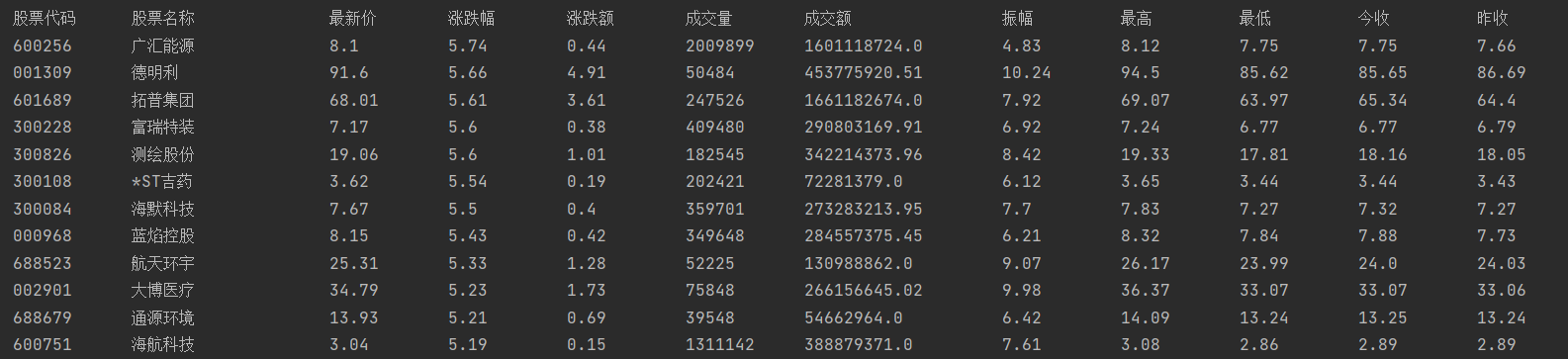

运行结果

数据库可视化

(2)心得体会

该实验总体上没有太大难度,是复现之前的代码。唯一的差别就在于要把数据存入数据库,这里初步尝试了有关数据库的操作,总体较为顺利。

作业②

- 要求:用 requests 和 BeautifulSoup 库方法定向爬取股票相关信息,并存储在数据库中。

- 候选网站:东方财富网:https://www.eastmoney.com/

新浪股票:http://finance.sina.com.cn/stock/ - 技巧:在谷歌浏览器中进入 F12 调试模式进行抓包,查找股票列表加载使用的 url,并分析 api 返回的值,并根据所要求的参数可适当更改api 的请求参数。根据 URL 可观察请求的参数 f1、f2 可获取不同的数值,根据情况可删减请求的参数。

参考链接:https://zhuanlan.zhihu.com/p/50099084 - 输出信息

- Gitee 文件夹链接为:https://gitee.com/zjy-w/crawl_project/tree/master/作业2/2

根据上述教程观察页面结构,发现是由pn来决定页数,所需的信息则是通过'f2': "最新报价",'f4': "涨跌额",'f3': "涨跌幅",'f5': "成交量",'f6': "成交额",'f7': "振幅",'f12': "股票代码",'f14': "股票名称",'f15': "最高",'f16': "最低",'f17': "今开",'f18': "昨收"来确定。最后选择爬取第7页到第14页。

(1)代码

import json

import requests

import re

import sqlite3

class StockDB:

def openDB(self):

self.con=sqlite3.connect("stocks.db")

self.cursor=self.con.cursor()

try:

self.cursor.execute("create table stocks(stockCode varchar(16),stockName varchar(16),Newprice varchar(16),RiseFallpercent varchar(16),RiseFall varchar(16),Turnover varchar(16),Dealnum varchar(16),Amplitude varchar(16),highest varchar(16),lowest varchar(16),today varchar(16),yesterday varchar(16))")

except:

self.cursor.execute("delete from stocks")

def closeDB(self):

self.con.commit()

self.con.close()

def insert(self,stockList):

try:

self.cursor.executemany("insert into stocks (stockcode,stockname,newprice,risefallpercent,risefall,turnover,dealnum,Amplitude,highest,lowest,today,yesterday) values (?,?,?,?,?,?,?,?,?,?,?,?)",

stockList)

except Exception as err:

print(err)

def show(self):

self.cursor.execute("select * from stocks")

rows = self.cursor.fetchall()

print("{:8}\t{:16}\t{:8}\t{:8}\t{:8}\t{:8}"

"\t{:16}\t{:8}\t{:8}\t{:8}\t{:8}\t{:8}".format("股票代码","股票名称","最新价","涨跌幅","涨跌额","成交量","成交额","振幅","最高","最低","今收","昨收",chr(12288)))

for row in rows:

print("{:8}\t{:16}\t{:8}\t{:8}\t{:8}\t{:8}"

"\t{:16}\t{:8}\t{:8}\t{:8}\t{:8}\t{:8}".format(row[0], row[1], row[2], row[3], row[4], row[5], row[6], row[7], row[8], row[9], row[10], row[11],chr(12288)))

class stock:

def __init__(self):

self.db = StockDB()

def getHTML(url):

try:

headers = {

"User-Agent": "Mozilla/5.0 (Windows; U; Windows NT 6.0 x64; en-US; rv:1.9pre) Gecko/2008072421 Minefield/3.0.2pre"}

r = requests.get(url, timeout=30, headers=headers)

r.raise_for_status()

r.encoding = r.apparent_encoding

return r.text

except Exception as e:

print(e)

def getStockData(self, html):

data = re.search(r'\[.*]', html).group()

stocks = re.findall(r'{.*?}', data)

stocks = [json.loads(x) for x in stocks]

att = {"股票代码": 'f12', "股票名称": 'f14', "最新报价": 'f2', "涨跌幅": 'f3', "涨跌额": 'f4', "成交量": 'f5', "成交额": 'f6',

"振幅": 'f7', "最高": 'f15', "最低": 'f16', "今开": 'f17', "昨收": 'f18'}

stockList = []

for stock in stocks:

ls = []

for i in att:

ls.append(stock[att[i]])

ls = tuple(ls)

stockList.append(ls)

self.db.insert(stockList)

def process(self):

self.db.openDB()

for page in range(7, 14):

url = "http://56.push2.eastmoney.com/api/qt/clist/get?cb=jQuery1124032040654482613706_1635234281838&pn="+ str(page) + "&pz=20&po=1&np=1&ut=bd1d9ddb04089700cf9c27f6f7426281&fltt=2&invt=2&fid=f3&fs=m:0+t:6,m:0+t:80,m:1+t:2,m:1+t:23&fields=f1,f2,f3,f4,f5,f6,f7,f8,f9,f10,f12,f13,f14,f15,f16,f17,f18,f20,f21,f23,f24,f25,f22,f11,f62,f128,f136,f115,f152&_=1635234281839"

html = stock.getHTML(url)

self.getStockData(html)

self.db.show()

self.db.closeDB()

def main():

s = stock()

s.process()

print("completed")

if __name__ =="__main__":

main()

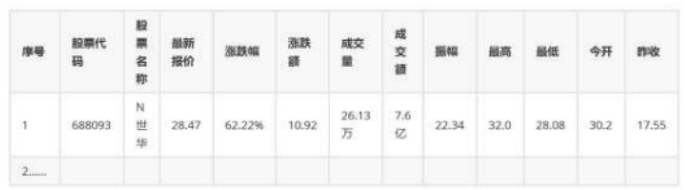

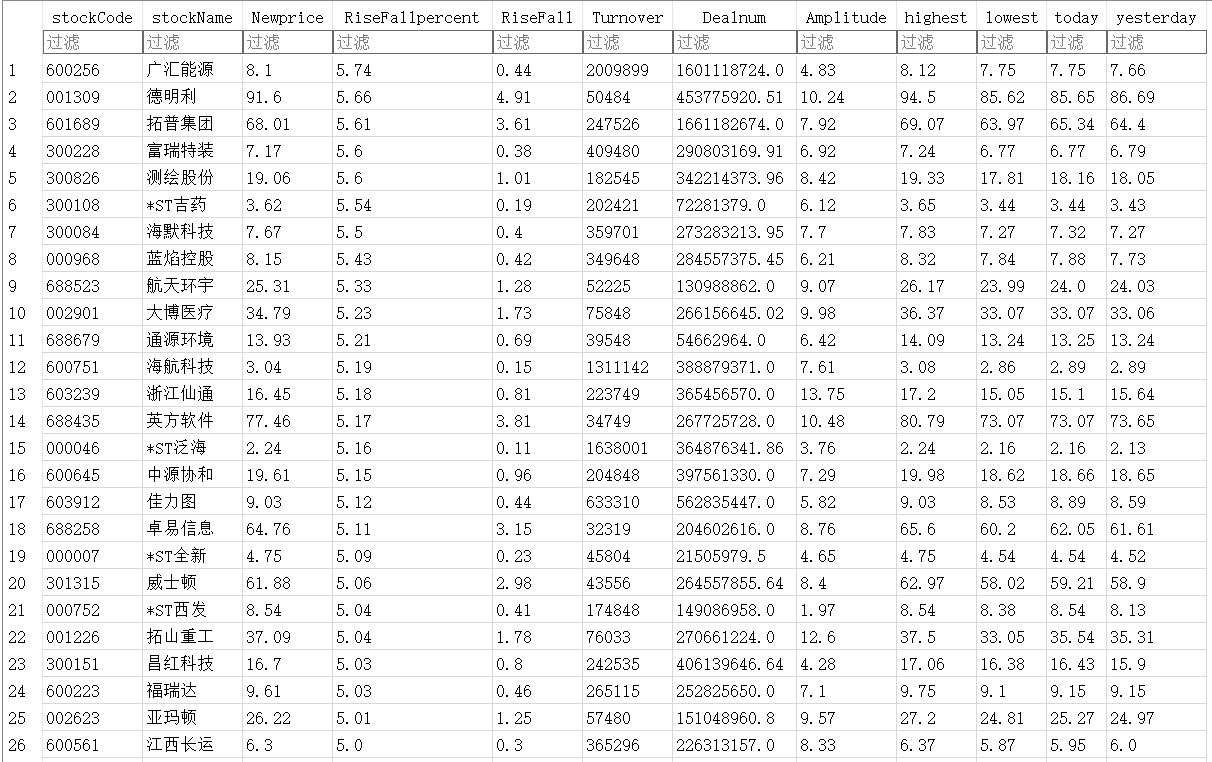

运行结果

数据库可视化

(2)心得体会

该代码主要是通过json对所需内容进行抓取,教程中还有更完善的即可抓取到不同板块的股票内容,本题我只抓取了沪深京A股,更深入的可以通过fs参数获取到不同股票的信息。最后则和上面相同,将其信息存入到数据库当中。

作业③

- 要求:爬取中国大学 2021 主榜(https://www.shanghairanking.cn/rankings/bcur/2021)所有院校信息,并存储在数据库中,同时将浏览器 F12 调试分析的过程录制 Gif 加入至博客中。

技巧:分析该网站的发包情况,分析获取数据的 api - 输出信息

- Gitee 文件夹链接为:https://gitee.com/zjy-w/crawl_project/tree/master/作业2/3

浏览器 F12 调试分析的过程录制 Gif如下:

(1)代码

import re

import requests

import sqlite3

def get_university_ranking():

province_mapping = {

'k': '江苏', 'n': '山东', 'o': '河南', 'p': '河北', 'q': '北京', 'r': '辽宁',

's': '陕西', 't': '四川', 'u': '广东', 'v': '湖北', 'w': '湖南', 'x': '浙江',

'y': '安徽', 'z': '江西', 'A': '黑龙江', 'B': '吉林', 'C': '上海', 'D': '福建',

'E': '山西', 'F': '云南', 'G': '广西', 'I': '贵州', 'J': '甘肃', 'K': '内蒙古',

'L': '重庆', 'M': '天津', 'N': '新疆', 'Y': '海南'

}

univ_category_mapping = {

'f': '综合', 'e': '理工', 'h': '师范', 'm': '农业', 'T': '林业'

}

url = 'https://www.shanghairanking.cn/_nuxt/static/1697106492/rankings/bcur/2021/payload.js'

header = {

"User-Agent": "Mozilla/5.0 (Windows; U; Windows NT 6.0 x64; en-US; rv:1.9pre) Gecko/2008072421 Minefield/3.0.2pre"

}

resp = requests.get(url, headers=header)

resp.raise_for_status()

resp.encoding = resp.apparent_encoding

obj = re.compile(

r'univNameCn:"(?P<univNameCn>.*?)",.*?'

r'univCategory:(?P<univCategory>.*?),.*?'

r'province:(?P<province>.*?),'

r'score:(?P<score>.*?),'

, re.S)

results = []

for idx, it in enumerate(obj.finditer(resp.text), start=1):

univNameCn = it.group('univNameCn')

univCategory = it.group('univCategory')

province = it.group('province')

score = it.group('score')

mapped_province = province_mapping.get(province, province)

mapped_category = univ_category_mapping.get(univCategory, univCategory)

results.append((idx, univNameCn, mapped_province, mapped_category, score))

return results

def save_to_db(results):

conn = sqlite3.connect('university.db')

c = conn.cursor()

c.execute('''CREATE TABLE IF NOT EXISTS university

(rank INTEGER, school_name TEXT, province TEXT, category TEXT, score REAL)''')

c.executemany('INSERT INTO university VALUES (?, ?, ?, ?, ?)', results)

conn.commit()

conn.close()

print("结果已保存到数据库university.db")

if __name__ == '__main__':

results = get_university_ranking()

tplt = "{0:^10}\t{1:^10}\t{2:^12}\t{3:^12}\t{4:^9}"

print(tplt.format("排名", "学校名称", "省份", "学校类型", "总分"))

for u in results:

rank = str(u[0])

school_name = u[1]

province = u[2]

category = u[3]

score = u[4]

print(tplt.format(u[0], u[1], u[2], u[3], u[4]))

save_to_db(results)

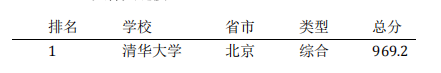

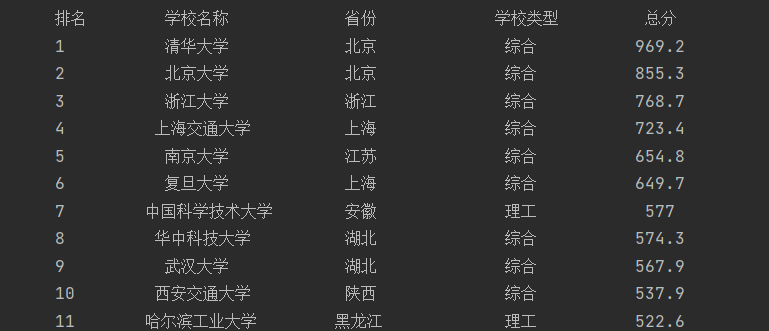

运行结果

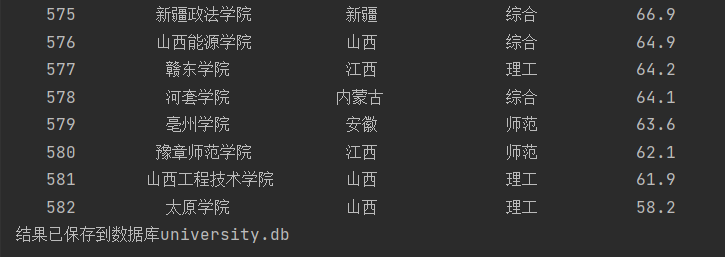

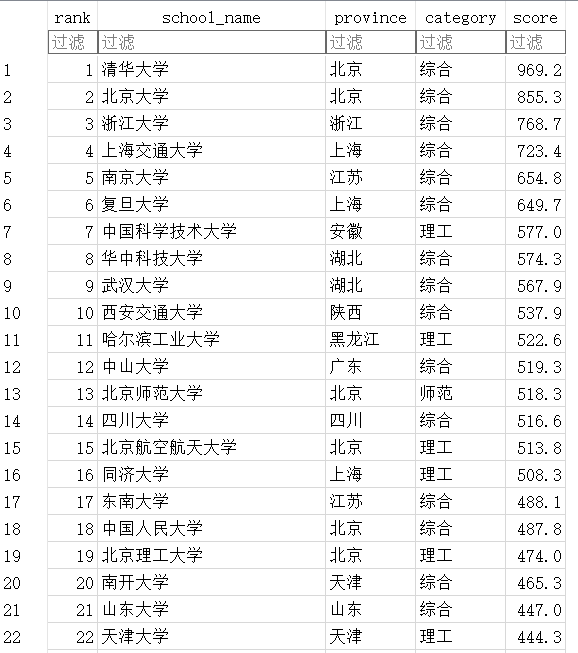

数据库可视化

(2)心得体会

这个作业其实前面类似的已经做过几次了,这次主要是在于最后是抓取了全部的大学排名并且保存为数据库。主要是通过F12发现payload.js中包含所有所需的参数,说明这个 JavaScript 文件保存了所有大学的信息,故确定爬取对象。后面则是较为普遍的使用正则进行提取。最后保存至数据库中。