I. Approaches to Containerized Architecture

1. The Origin and Evolution of Containers

Docker -> Docker Compose -> Docker Swarm -> Kubernetes

2. Solutions for Containerizing Microservices

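| Feature | Docker Swarm | Kubernetes |
|---|---|---|
| Installation and cluster setup | Easy to install; clustering is less powerful | Complex to install; clustering is very powerful |
| Graphical user interface | No official GUI; relies on third-party tools | Full-featured official Dashboard (deployed as an add-on) |
| Scalability | Highly scalable; scales faster than Kubernetes | Highly scalable, with fast scale-out |
| Autoscaling | Cannot autoscale | Can autoscale |
| Load balancing | Automatically balances traffic between containers in the cluster | Based on iptables/IPVS (rich set of scheduling algorithms) |
| Scale limits | 1,000 nodes, 50,000 containers | At most 5,000 nodes, 150,000 Pods in total, 300,000 containers in total, and 100 Pods per node |
| Core features | Manager-node high availability, task scheduling, service discovery, rollback, updates, secure communication, container HA, self-healing, configuration management, health checks, service scaling | Resource scheduling, service discovery, service orchestration, logical resource management, self-healing, security and configuration management, Job support, automatic rollback, internal DNS, health checks, scaling, load balancing, canary upgrades, application HA, disaster recovery |
| Design goal | Manage container clusters across hosts | Support distributed, service-oriented application architectures |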

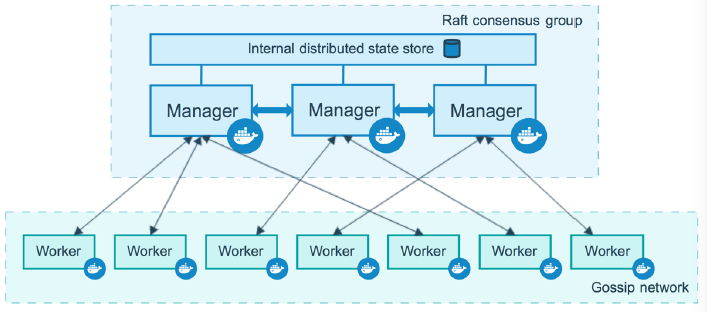

2.1 Docker Swarm Architecture

Manager nodes

- Maintain the cluster state

- Schedule services

- Expose the Swarm API to external clients

Worker nodes

- Execute tasks

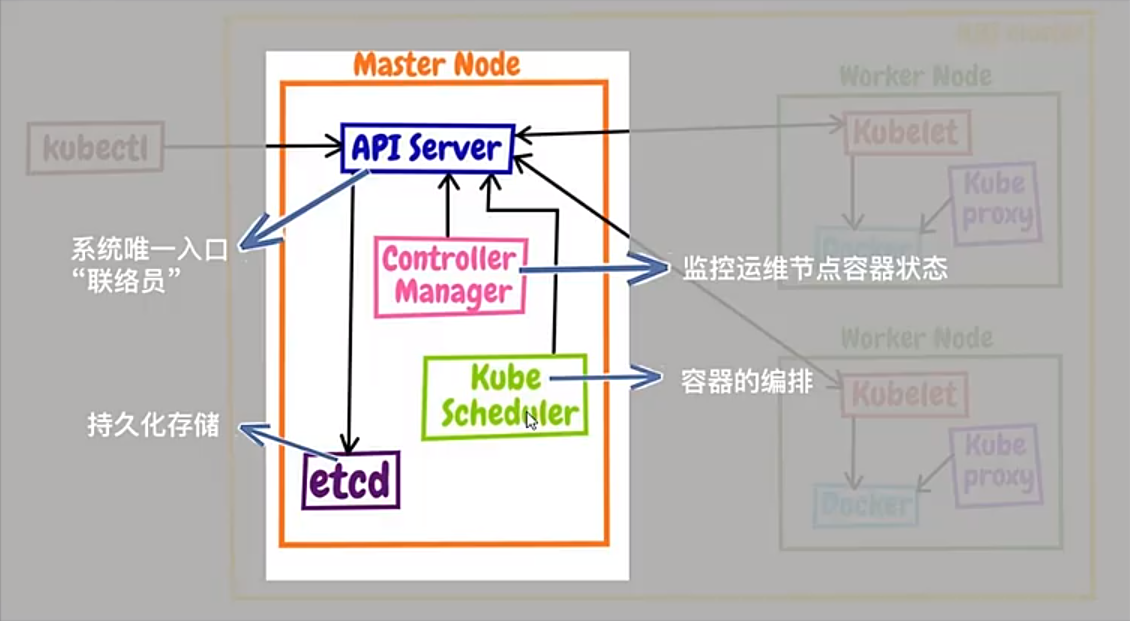

2.2 Kubernetes Architecture

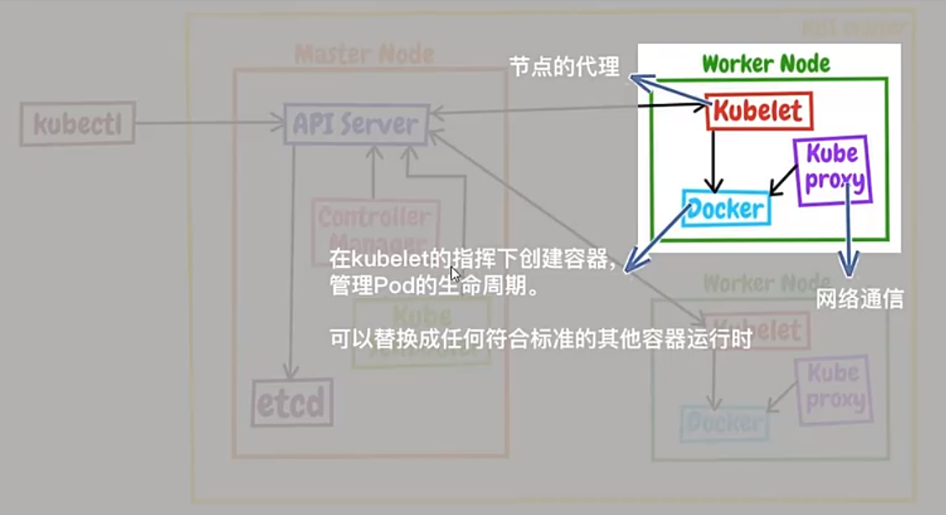

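- Master Node: the control node that schedules and manages the cluster. It consists of the API Server, Scheduler, Cluster State Store (etcd), and Controller Manager Server.

- Worker Node: the node that does the actual work, running the containers of business applications. It contains kubelet, kube-proxy, and the container runtime.

- etcd: stores the state of the entire cluster.

- apiserver: the single entry point for all resource operations; provides authentication, authorization, access control, API registration, and discovery.

- controller manager: maintains the cluster state, e.g. failure detection, autoscaling, and rolling updates.

- scheduler: handles resource scheduling, placing Pods onto suitable machines according to predefined scheduling policies.

- kubelet: maintains the lifecycle of the Pods and containers on its node, and also manages volumes (CSI) and networking (CNI).

- Container runtime: manages images and actually runs Pods and containers (CRI).

- kube-proxy: provides in-cluster service discovery and load balancing for Services.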

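3. Choosing a Deployment Method

kubeadm

- Simple and elegant

- Supports high availability

- Easy to upgrade

- Officially supported

Kubernetes binaries

- The mainstream approach in early production deployments

- Time-consuming to deploy

- Highly stable

Kubernetes-as-a-Service (public cloud offerings)

- Alibaba Cloud: ACK

- AWS: EKS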

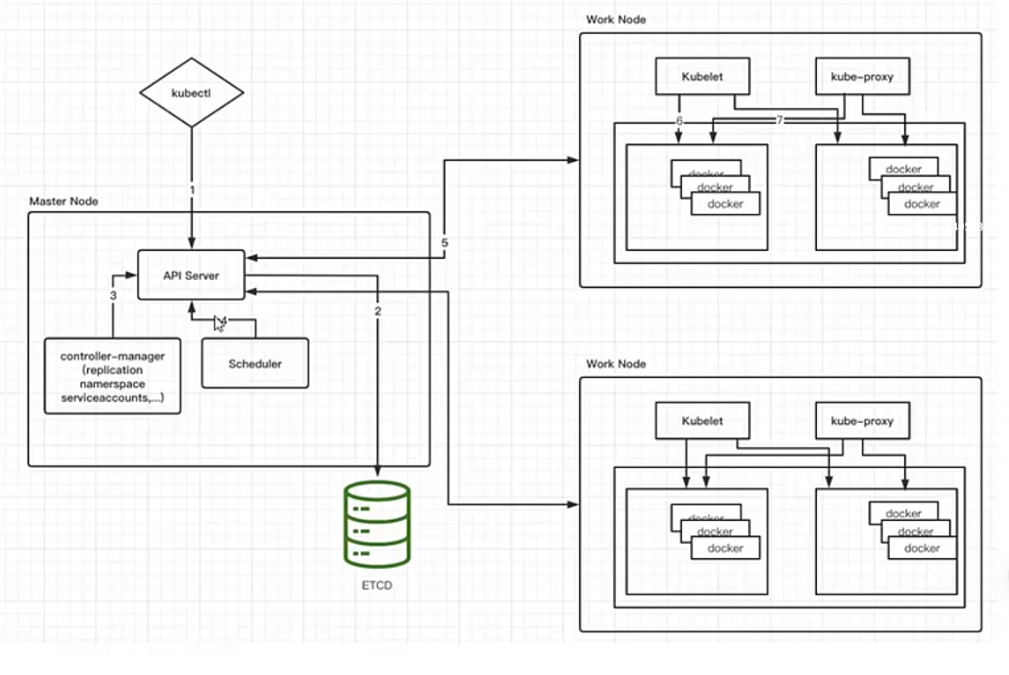

II. How Pod Creation Works

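- The user sends a request to create a Pod (here through a Deployment) to the apiserver, using kubectl or a web console.

- The apiserver authenticates and authorizes the request, validates it, and stores the object in etcd; the Deployment resource is now created and initialized.

- The controller-manager notices the new Deployment through its list-watch mechanism and adds it to an internal work queue. Seeing that the Deployment has no associated ReplicaSet or Pods, the Deployment controller creates a ReplicaSet, and the ReplicaSet controller then creates the Pods.

- The controller-manager writes the resulting Deployment, ReplicaSet, and Pod objects to etcd through the apiserver.

- The scheduler, also via list-watch, detects the new Pods that are not yet bound to a node. It runs its predicate (filtering) and priority (scoring) algorithms to choose the node each Pod will run on, and records the result in etcd through the apiserver.

- The kubelet on each node watches the apiserver for the Pods bound to its own node name and compares them with its internal cache. When it finds a new Pod, it calls the CNI plugin to create the Pod network, the CRI to start the containers, and CSI to mount the storage volumes, and then starts the Pod's containers.

- DNS names for the Pod's Service are served by CoreDNS, and kube-proxy adds iptables/IPVS rules for that Service, providing service discovery and load balancing.

- Each controller runs a control loop that continuously compares the current state of the Pods with the state the user declared. If they differ, the controller moves the Pod toward the desired state; if that is not possible, it deletes the Pod and creates a new one.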
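The control loop described in the last step can be illustrated with a small reconciliation function. This is a simplified model, not controller-manager code: `ReplicaSetState`, `reconcile`, and the dict-based Pod records with a `phase` field are hypothetical names chosen for the sketch.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class ReplicaSetState:
    """Hypothetical, simplified view of a ReplicaSet: desired count plus current Pods."""
    name: str
    desired: int
    pods: list = field(default_factory=list)  # each Pod: {"name": str, "phase": str}


_suffix = count(1)  # generates unique suffixes for newly created Pods


def reconcile(rs: ReplicaSetState) -> list:
    """One pass of a control loop: move the observed state toward the desired state.

    Returns the actions taken, e.g. ("delete", name) or ("create", name).
    """
    actions = []
    # A failed Pod cannot be repaired in place: delete it so it gets recreated below.
    for pod in [p for p in rs.pods if p["phase"] == "Failed"]:
        rs.pods.remove(pod)
        actions.append(("delete", pod["name"]))
    # Too few Pods: create new ones.
    while len(rs.pods) < rs.desired:
        name = f"{rs.name}-{next(_suffix)}"
        rs.pods.append({"name": name, "phase": "Pending"})
        actions.append(("create", name))
    # Too many Pods: delete the surplus.
    while len(rs.pods) > rs.desired:
        pod = rs.pods.pop()
        actions.append(("delete", pod["name"]))
    return actions
```

Calling `reconcile` repeatedly is harmless: once the observed and desired states agree it returns no actions, which is the property a control loop relies on.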

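III. Scheduler Scheduling Strategies (Part 1)

1. Introduction

The main job of the Kubernetes scheduler is to assign Pods to cluster nodes according to predefined policies. It has to balance several goals:

- Fairness: how to make sure every node gets a share of the workload?

- Efficient resource utilization: how to make the fullest use of all cluster resources?

- Efficiency: schedule large batches of Pods as quickly as possible.

- Flexibility: allow applications with special requirements to be scheduled onto specific nodes.

The scheduler runs as a standalone program. Once started, it continuously watches the apiserver for Pods whose spec.nodeName is empty, and for each such Pod it creates a binding that records which node the Pod should be placed on.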

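2. Scheduling Process

Scheduling consists of the following stages:

- First, nodes that do not meet the requirements are filtered out; this stage is called predicate.

- The remaining nodes are then ranked by priority; this stage is called priority.

- Finally, the node with the highest priority is selected. If any stage fails, an error is returned immediately.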
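The three stages can be sketched as a small pipeline. This is an illustrative model only: `schedule`, `SchedulingError`, and the dict-based node records are hypothetical, and breaking ties by node name is a simplification (the real scheduler picks randomly among equally scored nodes).

```python
from typing import Callable, Dict, List

Node = Dict[str, object]
Pod = Dict[str, object]
Predicate = Callable[[Pod, Node], bool]
Priority = Callable[[Pod, Node], int]


class SchedulingError(Exception):
    """Raised when no node survives the predicate (filtering) stage."""


def schedule(pod: Pod, nodes: List[Node],
             predicates: List[Predicate], priorities: List[Priority]) -> str:
    """Return the name of the node the Pod should be bound to."""
    # Stage 1, predicate: drop every node that fails any predicate.
    feasible = [node for node in nodes if all(pred(pod, node) for pred in predicates)]
    if not feasible:
        # Corresponds to the Pod staying Pending; the real scheduler retries later.
        raise SchedulingError(f"0/{len(nodes)} nodes are available")
    # Stage 2, priority: score each remaining node by summing all priority functions.
    scored = [(sum(prio(pod, node) for prio in priorities), node["name"]) for node in feasible]
    # Stage 3, select: highest score wins; ties go to the alphabetically first name.
    _, best = min(scored, key=lambda item: (-item[0], item[1]))
    return best
```

For example, with a predicate `lambda pod, node: node["free_cpu"] >= pod["cpu"]` and a priority that returns `node["free_cpu"]`, the Pod goes to the feasible node with the most free CPU.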

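The predicate stage uses a series of algorithms:

- PodFitsResources: whether the node's remaining resources are sufficient for the resources the Pod requests.

- PodFitsHost: if the Pod specifies a NodeName, whether the node's name matches it.

- PodFitsHostPorts: whether the ports already in use on the node conflict with the ports the Pod requests.

- PodSelectorMatches: filters out nodes that do not match the labels specified by the Pod.

- NoDiskConflict: the volumes already mounted on the node must not conflict with the volumes the Pod specifies, unless both are read-only.

If no node passes the predicate stage, the Pod stays in the Pending state and scheduling is retried until some node satisfies the conditions. If several nodes pass, the priority stage runs and ranks them by priority.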
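The predicates listed above can be modeled as plain functions over simplified node and Pod records. The record types and field names (`free_cpu_m`, `used_host_ports`, `mounted_volumes`, and so on) are assumptions made for this sketch; in recent Kubernetes releases these checks are implemented as filter plugins (for example NodeResourcesFit and NodePorts) rather than as the named predicates.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set


@dataclass
class NodeInfo:
    name: str
    labels: Dict[str, str]
    free_cpu_m: int                    # unrequested CPU, in millicores
    free_mem_mi: int                   # unrequested memory, in MiB
    used_host_ports: Set[int] = field(default_factory=set)
    mounted_volumes: Dict[str, bool] = field(default_factory=dict)  # volume id -> read-only?


@dataclass
class PodSpec:
    cpu_m: int
    mem_mi: int
    node_name: Optional[str] = None
    node_selector: Dict[str, str] = field(default_factory=dict)
    host_ports: Set[int] = field(default_factory=set)
    volumes: Dict[str, bool] = field(default_factory=dict)          # volume id -> read-only?


def pod_fits_resources(pod: PodSpec, node: NodeInfo) -> bool:
    return node.free_cpu_m >= pod.cpu_m and node.free_mem_mi >= pod.mem_mi


def pod_fits_host(pod: PodSpec, node: NodeInfo) -> bool:
    return pod.node_name is None or pod.node_name == node.name


def pod_fits_host_ports(pod: PodSpec, node: NodeInfo) -> bool:
    return not (pod.host_ports & node.used_host_ports)


def pod_selector_matches(pod: PodSpec, node: NodeInfo) -> bool:
    return all(node.labels.get(k) == v for k, v in pod.node_selector.items())


def no_disk_conflict(pod: PodSpec, node: NodeInfo) -> bool:
    # Sharing a volume is only allowed when both sides mount it read-only.
    return all(not (vol in node.mounted_volumes and not (ro and node.mounted_volumes[vol]))
               for vol, ro in pod.volumes.items())


PREDICATES = [pod_fits_resources, pod_fits_host, pod_fits_host_ports,
              pod_selector_matches, no_disk_conflict]


def feasible_nodes(pod: PodSpec, nodes: List[NodeInfo]) -> List[str]:
    # An empty result means the Pod stays Pending until some node qualifies.
    return [n.name for n in nodes if all(pred(pod, n) for pred in PREDICATES)]
```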

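3. Node Labels

Every node carries a number of labels by default. In day-to-day work a label acts as an identifier: a node's labels tell you what the node is mainly used for.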

3.1 Adding a Label to a Node

```shell
[root@master-1-230 ~]# kubectl get nodes
NAME STATUS ROLES AGE VERSION
master-1-230 Ready control-plane 7d10h v1.27.6
node-1-231 Ready <none> 7d10h v1.27.6
node-1-232 Ready <none> 7d10h v1.27.6
node-1-233 Ready <none> 6d1h v1.27.6
[root@master-1-230 ~]# kubectl get nodes --show-labels
NAME STATUS ROLES AGE VERSION LABELS
master-1-230 Ready control-plane 7d10h v1.27.6 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=master-1-230,kubernetes.io/os=linux,node-role.kubernetes.io/control-plane=,node.kubernetes.io/exclude-from-external-load-balancers=
node-1-231 Ready <none> 7d10h v1.27.6 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,ingress=true,kubernetes.io/arch=amd64,kubernetes.io/hostname=node-1-231,kubernetes.io/os=linux
node-1-232 Ready <none> 7d10h v1.27.6 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,ingress=true,kubernetes.io/arch=amd64,kubernetes.io/hostname=node-1-232,kubernetes.io/os=linux
node-1-233 Ready <none> 6d1h v1.27.6 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=node-1-233,kubernetes.io/os=linux
[root@master-1-230 ~]# kubectl label nodes node-1-231 apptype=core
node/node-1-231 labeled
[root@master-1-230 ~]# kubectl get nodes --show-labels
NAME STATUS ROLES AGE VERSION LABELS
master-1-230 Ready control-plane 7d10h v1.27.6 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=master-1-230,kubernetes.io/os=linux,node-role.kubernetes.io/control-plane=,node.kubernetes.io/exclude-from-external-load-balancers=
node-1-231 Ready <none> 7d10h v1.27.6 apptype=core,beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,ingress=true,kubernetes.io/arch=amd64,kubernetes.io/hostname=node-1-231,kubernetes.io/os=linux
node-1-232 Ready <none> 7d10h v1.27.6 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,ingress=true,kubernetes.io/arch=amd64,kubernetes.io/hostname=node-1-232,kubernetes.io/os=linux
node-1-233 Ready <none> 6d1h v1.27.6 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=node-1-233,kubernetes.io/os=linux
[root@master-1-230 ~]# kubectl label nodes node-1-231 apptype-
node/node-1-231 unlabeled
```

`kubectl label nodes <node> key=value` adds a label, and `key-` (with a trailing minus) removes it.

4. Scheduling Strategies

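4.1 nodeSelector

nodeSelector is the simplest recommended form of node selection constraint. Set the nodeSelector field to the node labels you want the target nodes to have; Kubernetes then schedules the Pod only onto nodes that carry every one of those labels.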
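The matching rule fits in a few lines. A minimal sketch, assuming labels are plain string dictionaries; `matches_node_selector` and `eligible_nodes` are hypothetical names, and `NODES` holds the worker-node labels from the `kubectl get nodes --show-labels` output in 3.1 (abbreviated, as they appear after `apptype=core` was added).

```python
from typing import Dict, List


def matches_node_selector(node_selector: Dict[str, str], node_labels: Dict[str, str]) -> bool:
    # Every key=value pair in nodeSelector must be present on the node (logical AND);
    # extra labels on the node are ignored.
    return all(node_labels.get(key) == value for key, value in node_selector.items())


def eligible_nodes(node_selector: Dict[str, str], nodes: Dict[str, Dict[str, str]]) -> List[str]:
    return [name for name, labels in nodes.items() if matches_node_selector(node_selector, labels)]


# Abbreviated worker-node labels from the transcript in 3.1.
NODES = {
    "node-1-231": {"apptype": "core", "ingress": "true", "kubernetes.io/hostname": "node-1-231"},
    "node-1-232": {"ingress": "true", "kubernetes.io/hostname": "node-1-232"},
    "node-1-233": {"kubernetes.io/hostname": "node-1-233"},
}
```

With `{"apptype": "core"}` only node-1-231 qualifies, which matches where the busybox Pods land in 4.1.2; adding a second key narrows the set further because all pairs must match.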

4.1.1 Without a nodeSelector

```yaml
# pod-demo.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: busybox
spec:
  replicas: 3
  selector:
    matchLabels:
      app: busybox
  template:
    metadata:
      labels:
        app: busybox
    spec:
      containers:
      - name: busybox
        image: busybox
        imagePullPolicy: IfNotPresent
        command:
        - /bin/sh
        - -c
        - sleep 3000
```

```shell
[root@master-1-230 1.3]# kubectl apply -f pod-demo.yaml
deployment.apps/busybox created
[root@master-1-230 ~]# kubectl get pod -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
busybox-7bb7774f46-qjn8v 1/1 Running 0 22s 10.244.29.4 node-1-232 <none> <none>
busybox-7bb7774f46-vtc75 1/1 Running 0 22s 10.244.154.6 node-1-233 <none> <none>
busybox-7bb7774f46-wv5dt 1/1 Running 0 22s 10.244.167.135 node-1-231 <none> <none>
testdp-85858fd689-bgn5s 1/1 Running 2 (139m ago) 7d1h 10.244.167.131 node-1-231 <none> <none>
```

The three busybox Pods are spread across three different nodes.

Delete the Deployment:

```shell
[root@master-1-230 1.3]# kubectl delete -f pod-demo.yaml
deployment.apps "busybox" deleted
```

4.1.2 Setting a nodeSelector and Observing the Result

```yaml
# pod-demo.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: busybox
spec:
  replicas: 3
  selector:
    matchLabels:
      app: busybox
  template:
    metadata:
      labels:
        app: busybox
    spec:
      nodeSelector:
        apptype: core   # only nodes labeled apptype=core
      containers:
      - name: busybox
        image: busybox
        imagePullPolicy: IfNotPresent
        command:
        - /bin/sh
        - -c
        - sleep 3000
```

```shell
[root@master-1-230 1.3]# kubectl apply -f pod-demo.yaml
deployment.apps/busybox created
[root@master-1-230 1.3]# kubectl get pod -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
busybox-7668d5bbd7-75j56 1/1 Running 0 36s 10.244.167.136 node-1-231 <none> <none>
busybox-7668d5bbd7-cm5hl 1/1 Running 0 36s 10.244.167.137 node-1-231 <none> <none>
busybox-7668d5bbd7-gzftw 1/1 Running 0 36s 10.244.167.138 node-1-231 <none> <none>
```

All busybox Pods now run on the node that carries the specified label (apptype=core).

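4.2 Affinity and Anti-Affinity

Benefits of affinity and anti-affinity:

- The affinity/anti-affinity language is more expressive: nodeSelector can only select nodes that carry all of a fixed set of labels.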

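Affinity rules come in two types:

- requiredDuringSchedulingIgnoredDuringExecution: the scheduler schedules the Pod only when the rule is satisfied. This works like nodeSelector, but with a more expressive syntax.

- preferredDuringSchedulingIgnoredDuringExecution: the scheduler tries to find a node that satisfies the rule. If no matching node is found, the scheduler still schedules the Pod onto another node.

NodeAffinity (node affinity):

- If the Pod's scheduling rule is a "soft requirement", the scheduler still schedules the Pod when no matching node can be found.

- If the Pod's scheduling rule is a "hard requirement" and no node satisfies it, the Pod is not scheduled and stays in the Pending state.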
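The difference between the hard and soft rules can be sketched as a filter followed by a weighted score. A simplified model under these assumptions: only the In operator is handled, the dict layout mirrors the YAML fields (nodeSelectorTerms as a list of matchExpressions lists, preferred terms with `weight` and `preference`), and `term_matches`/`pick_node` are hypothetical names. The real scheduler normalizes these weights and combines them with other scoring plugins.

```python
from typing import Dict, List, Optional

Labels = Dict[str, str]
Expression = Dict[str, object]  # {"key": ..., "operator": "In", "values": [...]}


def term_matches(expressions: List[Expression], labels: Labels) -> bool:
    # All matchExpressions inside one term must hold (logical AND).
    # Only the In operator is modeled, to keep the sketch short.
    return all(labels.get(e["key"]) in e["values"] for e in expressions)


def pick_node(nodes: Dict[str, Labels],
              required_terms: Optional[List[List[Expression]]],
              preferred: List[Dict[str, object]]) -> Optional[str]:
    # Hard rule: a node must satisfy at least one nodeSelectorTerm (logical OR).
    candidates = [name for name, labels in nodes.items()
                  if required_terms is None
                  or any(term_matches(term, labels) for term in required_terms)]
    if not candidates:
        return None  # hard rule cannot be met: the Pod stays Pending

    # Soft rule: add the weight of every preference the node satisfies.
    def score(name: str) -> int:
        return sum(p["weight"] for p in preferred
                   if term_matches(p["preference"]["matchExpressions"], nodes[name]))

    # max() keeps the first candidate on ties, so a zero score still yields a node.
    return max(candidates, key=score)
```

With the hostname rule `In [node-1-233]` as the hard term and `apptype In [core]` as a soft preference, the sketch returns node-1-233: the hard filter runs first, so the preference can only rank nodes that survived it.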

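PodAffinity (affinity) / PodAntiAffinity (anti-affinity):

- Pod affinity determines which Pods a Pod may be deployed with in the same topology domain.

- Pod anti-affinity determines which Pods a Pod must not be deployed with in the same topology domain.

A topology domain is the set of nodes that share the same value of the node label named by topologyKey; with kubernetes.io/hostname, every node forms its own domain.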
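Topology domains are easiest to see in code. This sketch assumes each node is a label dictionary and each running Pod is a (labels, node name) pair; `allowed_nodes` is a hypothetical helper, and only equality-based label selectors are modeled. It computes which nodes a new Pod may use under a required pod affinity or anti-affinity rule.

```python
from typing import Dict, List, Optional, Tuple

NodeLabels = Dict[str, Dict[str, str]]
RunningPod = Tuple[Dict[str, str], str]  # (pod labels, node name)


def allowed_nodes(nodes: NodeLabels, running: List[RunningPod], selector: Dict[str, str],
                  topology_key: str, anti: bool = False) -> List[str]:
    # A node's topology domain is the value of topology_key in its labels.
    domain: Dict[str, Optional[str]] = {n: labels.get(topology_key) for n, labels in nodes.items()}
    # Domains that already host at least one Pod matching the label selector.
    occupied = {domain[node] for pod_labels, node in running
                if all(pod_labels.get(k) == v for k, v in selector.items())
                and domain.get(node) is not None}
    if anti:
        # Anti-affinity: reject every node whose domain already hosts a matching Pod.
        return [n for n in nodes if domain[n] not in occupied]
    # Affinity: accept only nodes whose domain already hosts a matching Pod.
    return [n for n in nodes if domain[n] is not None and domain[n] in occupied]
```

With `topologyKey: kubernetes.io/hostname` and all busybox Pods on node-1-231, affinity allows only node-1-231 and anti-affinity allows every other node. If the nodes instead carried a shared zone label (a hypothetical `topology.kubernetes.io/zone` value on several nodes), affinity would admit every node in that zone.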

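The scheduling policies compare as follows:

| Policy | Labels matched on | Operators | Topology domain support | Scheduling target |
|---|---|---|---|---|
| nodeAffinity | Nodes | In, NotIn, Exists, DoesNotExist, Gt, Lt | No | Specific nodes |
| podAffinity | Pods | In, NotIn, Exists, DoesNotExist | Yes | Same topology domain as the specified Pods |
| podAntiAffinity | Pods | In, NotIn, Exists, DoesNotExist | Yes | A different topology domain from the specified Pods |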

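Kubernetes provides the following operators:

- In: the label's value is in the given list

- NotIn: the label's value is not in the given list

- Gt: the label's value is greater than the given value

- Lt: the label's value is less than the given value

- Exists: the label exists

- DoesNotExist: the label does not exist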
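The operator semantics can be written down as a single function. This is a sketch modeled on the rules above, assuming labels are a string dictionary; `expression_matches` and the `gpu-count` label used in the usage note are hypothetical. Two details worth noting: NotIn also matches nodes that lack the label entirely, and Gt/Lt take a single value and compare both sides as integers.

```python
from typing import Dict, List, Optional


def expression_matches(labels: Dict[str, str], key: str, operator: str,
                       values: Optional[List[str]] = None) -> bool:
    values = values or []
    present = key in labels
    if operator == "In":
        return present and labels[key] in values
    if operator == "NotIn":
        # A missing label counts as "not in the list".
        return not present or labels[key] not in values
    if operator == "Exists":
        return present
    if operator == "DoesNotExist":
        return not present
    if operator in ("Gt", "Lt"):
        # Gt/Lt take exactly one value; both sides must parse as integers.
        if not present or len(values) != 1:
            return False
        try:
            actual, bound = int(labels[key]), int(values[0])
        except ValueError:
            return False
        return actual > bound if operator == "Gt" else actual < bound
    raise ValueError(f"unknown operator: {operator}")
```

For a node labeled `apptype=core` and `gpu-count=4`, `expression_matches(labels, "gpu-count", "Gt", ["2"])` is true, while a Gt test against the non-numeric `apptype` label is false.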

Verification:

NodeAffinity:

```yaml
# pod-nodeaff-demo.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: affinity
  labels:
    app: affinity
spec:
  replicas: 1
  revisionHistoryLimit: 3
  selector:
    matchLabels:
      app: affinity
  template:
    metadata:
      labels:
        app: affinity
    spec:
      containers:
      - name: nginx
        image: nginx:1.7.9
        ports:
        - containerPort: 80
          name: nginxweb
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:  # hard rule
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/hostname
                operator: In
                values:
                - node-1-233
          preferredDuringSchedulingIgnoredDuringExecution:  # soft rule
          - weight: 1
            preference:
              matchExpressions:
              - key: apptype
                operator: In
                values:
                - core
```

```shell
[root@master-1-230 1.3]# kubectl apply -f pod-nodeaff-demo.yaml
deployment.apps/affinity created
[root@master-1-230 1.3]# kubectl get pod -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
affinity-7f586f85d4-7wpqs 1/1 Running 0 29s 10.244.154.7 node-1-233 <none> <none>
```

The hard rule limits the candidates to node-1-233, so the Pod runs there even though the soft rule prefers nodes labeled apptype=core.

podAffinity:

```yaml
# pod-podaff-demo.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: affinity
  labels:
    app: affinity
spec:
  replicas: 1
  revisionHistoryLimit: 15
  selector:
    matchLabels:
      app: affinity
  template:
    metadata:
      labels:
        app: affinity
    spec:
      containers:
      - name: nginx
        image: nginx:1.7.9
        ports:
        - containerPort: 80
          name: nginxweb
      affinity:
        podAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:  # hard rule
          - labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values:
                - busybox
            topologyKey: kubernetes.io/hostname
```

```shell
[root@master-1-230 1.3]# kubectl apply -f pod-podaff-demo.yaml
deployment.apps/affinity configured
[root@master-1-230 1.3]# kubectl get pod -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
affinity-747849f989-g86rg 1/1 Running 0 51s 10.244.167.142 node-1-231 <none> <none>
busybox-7668d5bbd7-4694l 1/1 Running 0 3m57s 10.244.167.139 node-1-231 <none> <none>
busybox-7668d5bbd7-dztrb 1/1 Running 0 3m57s 10.244.167.141 node-1-231 <none> <none>
busybox-7668d5bbd7-qpbmk 1/1 Running 0 3m57s 10.244.167.140 node-1-231 <none> <none>
testdp-85858fd689-pdh2q 1/1 Running 0 3m 10.244.29.5 node-1-232 <none> <none>
```

The affinity Pod is placed on node-1-231, the node where the busybox Pods are running.

podAntiAffinity:

```yaml
# pod-podantiaff-demo.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: affinity
  labels:
    app: affinity
spec:
  replicas: 1
  revisionHistoryLimit: 15
  selector:
    matchLabels:
      app: affinity
  template:
    metadata:
      labels:
        app: affinity
    spec:
      containers:
      - name: nginx
        image: nginx:1.7.9
        ports:
        - containerPort: 80
          name: nginxweb
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:  # hard rule
          - labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values:
                - busybox
            topologyKey: kubernetes.io/hostname
```

```shell
[root@master-1-230 1.3]# kubectl apply -f pod-podantiaff-demo.yaml
deployment.apps/affinity configured
[root@master-1-230 1.3]# kubectl get pod -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
affinity-564f689dc-9j4cw 1/1 Running 0 65s 10.244.154.8 node-1-233 <none> <none>
busybox-7668d5bbd7-4694l 1/1 Running 0 11m 10.244.167.139 node-1-231 <none> <none>
busybox-7668d5bbd7-dztrb 1/1 Running 0 11m 10.244.167.141 node-1-231 <none> <none>
busybox-7668d5bbd7-qpbmk 1/1 Running 0 11m 10.244.167.140 node-1-231 <none> <none>
```

This time the affinity Pod avoids node-1-231, where the busybox Pods are running, and lands on node-1-233.

Further experiments:

1. What if the hard node affinity cannot be satisfied?

```yaml
# not_pod-nodeaff-demo.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: affinity
  labels:
    app: affinity
spec:
  replicas: 1
  revisionHistoryLimit: 3
  selector:
    matchLabels:
      app: affinity
  template:
    metadata:
      labels:
        app: affinity
    spec:
      containers:
      - name: nginx
        image: nginx:1.7.9
        ports:
        - containerPort: 80
          name: nginxweb
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:  # hard rule
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/hostname
                operator: In
                values:
                - node-1-233111
```

```shell
[root@master-1-230 not]# kubectl apply -f not_pod-nodeaff-demo.yaml
deployment.apps/affinity created
[root@master-1-230 ~]# kubectl get pod
NAME READY STATUS RESTARTS AGE
affinity-59b9fdffd4-fcp29 0/1 Pending 0 7s
```


```shell
[root@master-1-230 ~]# kubectl get pod
NAME READY STATUS RESTARTS AGE
affinity-59b9fdffd4-fcp29 0/1 Pending 0 26s
[root@master-1-230 ~]# kubectl describe pod affinity-59b9fdffd4-fcp29
Name: affinity-59b9fdffd4-fcp29
Namespace: default
Priority: 0
Service Account: default
Node: <none>
Labels: app=affinity
        pod-template-hash=59b9fdffd4
Annotations: <none>
Status: Pending
IP:
IPs: <none>
Controlled By: ReplicaSet/affinity-59b9fdffd4
Containers:
  nginx:
    Image: nginx:1.7.9
    Port: 80/TCP
    Host Port: 0/TCP
    Environment: <none>
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-7g49m (ro)
Conditions:
  Type Status
  PodScheduled False
Volumes:
  kube-api-access-7g49m:
    Type: Projected (a volume that contains injected data from multiple sources)
    TokenExpirationSeconds: 3607
    ConfigMapName: kube-root-ca.crt
    ConfigMapOptional: <nil>
    DownwardAPI: true
QoS Class: BestEffort
Node-Selectors: <none>
Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
             node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
Events:
  Type Reason Age From Message
  ---- ------ ---- ---- -------
  Warning FailedScheduling 57s default-scheduler 0/4 nodes are available: 1 node(s) had untolerated taint {node-role.kubernetes.io/control-plane: }, 3 node(s) didn't match Pod's node affinity/selector. preemption: 0/4 nodes are available: 4 Preemption is not helpful for scheduling..
```

The Pod stays Pending: no node has the hostname node-1-233111, so all three worker nodes fail the hard node-affinity rule, and the control-plane node is excluded by its taint.

2. What if the soft node affinity cannot be satisfied?

```yaml
# not1_pod-nodeaff-demo.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: affinity
  labels:
    app: affinity
spec:
  replicas: 1
  revisionHistoryLimit: 3
  selector:
    matchLabels:
      app: affinity
  template:
    metadata:
      labels:
        app: affinity
    spec:
      containers:
      - name: nginx
        image: nginx:1.7.9
        ports:
        - containerPort: 80
          name: nginxweb
      affinity:
        nodeAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:  # soft rule
          - weight: 1
            preference:
              matchExpressions:
              - key: apptype
                operator: In
                values:
                - corenot
```

```shell
[root@master-1-230 not]# kubectl apply -f not1_pod-nodeaff-demo.yaml
deployment.apps/affinity created
[root@master-1-230 not]# kubectl get pod -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
affinity-7bc8469b66-4f49x 1/1 Running 0 19s 10.244.154.9 node-1-233 <none> <none>
```

No node carries apptype=corenot, yet the Pod is still scheduled and runs: an unsatisfied soft rule does not block scheduling.

3. What if the hard Pod affinity cannot be satisfied?

```yaml
# not_pod-podaff-demo.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: affinity
  labels:
    app: affinity
spec:
  replicas: 1
  revisionHistoryLimit: 15
  selector:
    matchLabels:
      app: affinity
  template:
    metadata:
      labels:
        app: affinity
    spec:
      containers:
      - name: nginx
        image: nginx:1.7.9
        ports:
        - containerPort: 80
          name: nginxweb
      affinity:
        podAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:  # hard rule
          - labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values:
                - busyboxnot
            topologyKey: kubernetes.io/hostname
```

```shell
[root@master-1-230 not]# kubectl get pod
No resources found in default namespace.
```


```shell
[root@master-1-230 not]# kubectl apply -f not_pod-podaff-demo.yaml
deployment.apps/affinity created
[root@master-1-230 not]# kubectl get pod
NAME READY STATUS RESTARTS AGE
affinity-5c66b5c66b-jp8sv 0/1 Pending 0 14s
[root@master-1-230 not]# kubectl describe pod affinity-5c66b5c66b-jp8sv
Name: affinity-5c66b5c66b-jp8sv
Namespace: default
Priority: 0
Service Account: default
Node: <none>
Labels: app=affinity
        pod-template-hash=5c66b5c66b
Annotations: <none>
Status: Pending
IP:
IPs: <none>
Controlled By: ReplicaSet/affinity-5c66b5c66b
Containers:
  nginx:
    Image: nginx:1.7.9
    Port: 80/TCP
    Host Port: 0/TCP
    Environment: <none>
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-9tfsn (ro)
Conditions:
  Type Status
  PodScheduled False
Volumes:
  kube-api-access-9tfsn:
    Type: Projected (a volume that contains injected data from multiple sources)
    TokenExpirationSeconds: 3607
    ConfigMapName: kube-root-ca.crt
    ConfigMapOptional: <nil>
    DownwardAPI: true
QoS Class: BestEffort
Node-Selectors: <none>
Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
             node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
Events:
  Type Reason Age From Message
  ---- ------ ---- ---- -------
  Warning FailedScheduling 39s default-scheduler 0/4 nodes are available: 1 node(s) had untolerated taint {node-role.kubernetes.io/control-plane: }, 3 node(s) didn't match pod affinity rules. preemption: 0/4 nodes are available: 4 Preemption is not helpful for scheduling..
```

The Pod stays Pending: no running Pod carries the label app=busyboxnot (the namespace was empty beforehand), so no node satisfies the hard Pod-affinity rule.

4. What if a hard Pod anti-affinity rule matches no Pods?

```yaml
# not1_pod-podantiaff-demo.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: affinity
  labels:
    app: affinity
spec:
  replicas: 1
  revisionHistoryLimit: 15
  selector:
    matchLabels:
      app: affinity
  template:
    metadata:
      labels:
        app: affinity
    spec:
      containers:
      - name: nginx
        image: nginx:1.7.9
        ports:
        - containerPort: 80
          name: nginxweb
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:  # hard rule
          - labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values:
                - busyboxnot1
            topologyKey: kubernetes.io/hostname
```

```shell
[root@master-1-230 not]# kubectl apply -f not1_pod-podantiaff-demo.yaml
deployment.apps/affinity created
[root@master-1-230 not]# kubectl get pod
NAME READY STATUS RESTARTS AGE
affinity-6cb4595d67-j48h8 1/1 Running 0 11s
```

The Pod runs: no Pod carries app=busyboxnot1, so the anti-affinity rule excludes no node.

IV. Scheduler Scheduling Strategies (Part 2)

1. Taints and Tolerations

nodeAffinity, whether hard or soft, attracts Pods to the desired nodes. Taints do the opposite: once a node is tainted, Pods cannot be scheduled onto it unless they explicitly tolerate the taint, so ordinary Pods stay off that node.

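Use cases:

- Many companies run GPU-based compute clusters. Ordinary Pods must not be scheduled onto GPU nodes, so the GPU nodes are tainted to keep those Pods away.

- Users want to reserve master nodes for Kubernetes system components. A cluster built with kubeadm taints the master node by default, so ordinary Pods are not scheduled onto it.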

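Taint format: key=value:effect

Each taint has a key and a value that label it (the value may be empty), plus an effect that describes what the taint does. Three effects are currently supported; each applies to Pods that do not tolerate the taint:

- NoSchedule: Kubernetes does not schedule Pods onto a node with this taint.

- PreferNoSchedule: Kubernetes tries to avoid scheduling Pods onto a node with this taint.

- NoExecute: Kubernetes does not schedule Pods onto a node with this taint, and also evicts Pods already running on that node.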
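How the three effects differ can be sketched as follows. A simplified model: `Taint`, `evaluate_node`, and `must_evict` are hypothetical names, the set of tolerated taints is assumed to be computed elsewhere, and details such as `tolerationSeconds` on NoExecute tolerations are left out.

```python
from dataclasses import dataclass
from typing import List, Set, Tuple


@dataclass(frozen=True)
class Taint:
    key: str
    value: str
    effect: str  # "NoSchedule", "PreferNoSchedule" or "NoExecute"


def evaluate_node(taints: List[Taint], tolerated: Set[Taint]) -> Tuple[bool, int]:
    """Return (schedulable, penalty) for a new Pod on a node with these taints."""
    schedulable, penalty = True, 0
    for taint in taints:
        if taint in tolerated:
            continue
        if taint.effect in ("NoSchedule", "NoExecute"):
            schedulable = False          # hard: the node is filtered out
        elif taint.effect == "PreferNoSchedule":
            penalty += 1                 # soft: the node is only scored lower
    return schedulable, penalty


def must_evict(taints: List[Taint], tolerated: Set[Taint]) -> bool:
    # Only NoExecute affects Pods that are already running on the node.
    return any(t.effect == "NoExecute" and t not in tolerated for t in taints)
```

Applied to the `devops=node-1-231:NoSchedule` taint used below, `evaluate_node` reports the node as unschedulable for a Pod without a matching toleration, while `must_evict` stays false, so Pods already running there are left alone.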

```shell
# Add a taint
[root@master-1-230 1.3]# kubectl taint nodes node-1-231 devops=node-1-231:NoSchedule
node/node-1-231 tainted

# View taints
[root@master-1-230 1.3]# kubectl describe nodes |grep -P "Name:|Taints"
Name: master-1-230
Taints: node-role.kubernetes.io/control-plane:NoSchedule
Name: node-1-231
Taints: devops=node-1-231:NoSchedule
Name: node-1-232
Taints: <none>
Name: node-1-233
Taints: <none>

# Remove a taint (note the trailing "-")
[root@master-1-230 1.3]# kubectl taint nodes node-1-231 devops=node-1-231:NoSchedule-
node/node-1-231 untainted
```

Experiment:

```yaml
# pod-taint-demo.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: taint
  labels:
    app: taint
spec:
  replicas: 5
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app: taint
  template:
    metadata:
      labels:
        app: taint
    spec:
      containers:
      - name: nginx
        image: nginx:1.7.9
        ports:
        - name: http
          containerPort: 80
      tolerations:
      - key: "devops"
        operator: "Exists"
        effect: "NoSchedule"
```

```shell
[root@master-1-230 1.3]# kubectl apply -f pod-taint-demo.yaml
deployment.apps/taint created
[root@master-1-230 1.3]# kubectl describe nodes |grep -P "Name:|Taints"
Name: master-1-230
Taints: node-role.kubernetes.io/control-plane:NoSchedule
Name: node-1-231
Taints: devops=node-1-231:NoSchedule
Name: node-1-232
Taints: <none>
Name: node-1-233
Taints: <none>
```


```shell
[root@master-1-230 1.3]# kubectl get pod -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
taint-6cf8784b77-68nnr 1/1 Running 0 73s 10.244.154.11 node-1-233 <none> <none>
taint-6cf8784b77-7sjd8 1/1 Running 0 73s 10.244.154.12 node-1-233 <none> <none>
taint-6cf8784b77-dnx62 1/1 Running 0 73s 10.244.167.144 node-1-231 <none> <none>
taint-6cf8784b77-sxfts 1/1 Running 0 73s 10.244.167.143 node-1-231 <none> <none>
taint-6cf8784b77-vcztj 1/1 Running 0 73s 10.244.29.6 node-1-232 <none> <none>
```

Because the Pods tolerate the devops taint, node-1-231 remains eligible, and two of the five replicas are placed on it.

Notes:

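In a toleration, key, value, and effect must match the taint set on the node:

- If operator is Exists, value can be omitted.

- If operator is Equal, the toleration's value must equal the taint's value for that key.

- If operator is not specified, it defaults to Equal.

Two special cases:

- An empty key combined with Exists matches every key and value, so the Pod tolerates every taint on every node.

- An empty effect matches every effect.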
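The matching rules above can be captured in one function. This is a sketch modeled on those rules; `Taint`, `Toleration`, `tolerates`, and `schedulable_despite` are hypothetical names. The API server only accepts an empty key together with operator Exists, so in practice the empty-key case never reaches the Equal branch.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Taint:
    key: str
    value: str
    effect: str  # NoSchedule, PreferNoSchedule or NoExecute


@dataclass(frozen=True)
class Toleration:
    key: str = ""        # empty key (with operator Exists) matches every taint key
    operator: str = ""   # an omitted operator behaves like "Equal"
    value: str = ""
    effect: str = ""     # empty effect matches every effect


def tolerates(tol: Toleration, taint: Taint) -> bool:
    # A non-empty effect must match exactly.
    if tol.effect and tol.effect != taint.effect:
        return False
    # A non-empty key must match exactly.
    if tol.key and tol.key != taint.key:
        return False
    if tol.operator in ("", "Equal"):
        return tol.value == taint.value   # Equal: the value must match as well
    if tol.operator == "Exists":
        return True                       # Exists: the value is ignored
    return False                          # unknown operators never match


def schedulable_despite(taints: Iterable[Taint], tolerations: Iterable[Toleration]) -> bool:
    # Every NoSchedule/NoExecute taint needs at least one matching toleration;
    # PreferNoSchedule only lowers the score, so it is skipped here.
    tols = list(tolerations)
    return all(any(tolerates(t, taint) for t in tols)
               for taint in taints if taint.effect in ("NoSchedule", "NoExecute"))
```

For the toleration in pod-taint-demo.yaml, `tolerates(Toleration(key="devops", operator="Exists", effect="NoSchedule"), Taint("devops", "node-1-231", "NoSchedule"))` is true, but the same toleration does not cover the control-plane taint, so those Pods still avoid master-1-230.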

4.4 Pinning a Pod to a Specific Node

Pod.spec.nodeName places a Pod directly onto the named node. It bypasses the scheduler's policies entirely, and the match is mandatory.

```yaml
# 6nodename.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: myweb
spec:
  selector:
    matchLabels:
      app: myweb
  replicas: 3
  template:
    metadata:
      labels:
        app: myweb
    spec:
      nodeName: node-1-231   # pin the Pods to this node
      containers:
      - name: myweb
        image: nginx
        ports:
        - containerPort: 80
```

```shell
[root@master-1-230 1.3]# kubectl apply -f 6nodename.yaml
deployment.apps/myweb created
```


```shell
[root@master-1-230 1.3]# kubectl get pod
NAME READY STATUS RESTARTS AGE
myweb-6dbb8cffc7-5bnwz 1/1 Running 0 50s
myweb-6dbb8cffc7-lnv9l 1/1 Running 0 50s
myweb-6dbb8cffc7-wqknf 1/1 Running 0 50s
taint-6cf8784b77-68nnr 1/1 Running 0 7m41s
taint-6cf8784b77-7sjd8 1/1 Running 0 7m41s
taint-6cf8784b77-dnx62 1/1 Running 0 7m41s
taint-6cf8784b77-sxfts 1/1 Running 0 7m41s
taint-6cf8784b77-vcztj 1/1 Running 0 7m41s
```

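V. Summary

nodeName: schedules a Pod onto a fixed node. Use case: verifying data on a particular node, reproducing a failure, and so on.

nodeSelector: schedules a Pod onto nodes that carry every specified label. Use case: cluster nodes dedicated to a particular department, business line, or testing.

podAffinity: determines which Pods a Pod may be deployed with in the same topology domain. Use case: upstream and downstream applications that exchange large amounts of data.

podAntiAffinity: determines which Pods a Pod must not be deployed with in the same topology domain. Use case: several compute-intensive applications that should not share a node.

nodeAffinity: lets an application be scheduled only onto specified nodes. Use case: core applications must run on core nodes.

Node anti-affinity (nodeAffinity with the NotIn or DoesNotExist operator): keeps an application off specified nodes. Use case: core nodes must not run ordinary applications.

taints: Pods cannot be scheduled onto a tainted node. Use case: GPU clusters, master nodes, and so on.

tolerations: Pods that carry a matching toleration can be scheduled onto the corresponding tainted nodes.

For GPU workloads, combine the two: give the GPU Pods a nodeAffinity rule so that they must be scheduled onto the designated GPU nodes (together with a toleration for those nodes' taint), and taint the GPU nodes so that no other Pods are scheduled there.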
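The GPU pattern combines both mechanisms, and a small model shows why each half is needed. Everything here is hypothetical: the node names, the `accelerator=gpu` label and taint, and the `can_schedule`/`placements` helpers. Tolerations are simplified to (key, effect) pairs with operator Exists, and only equality-based required node affinity is modeled.

```python
from typing import Dict, List

# Hypothetical cluster: one dedicated GPU node (labeled and tainted) and one ordinary node.
NODES: Dict[str, Dict] = {
    "gpu-node-1": {"labels": {"accelerator": "gpu"},
                   "taints": [("accelerator", "gpu", "NoSchedule")]},
    "cpu-node-1": {"labels": {}, "taints": []},
}


def can_schedule(pod: Dict, node: Dict) -> bool:
    # Taint check: every NoSchedule taint must be tolerated (toleration = (key, effect)).
    for key, _value, effect in node["taints"]:
        if effect == "NoSchedule" and (key, effect) not in pod["tolerations"]:
            return False
    # Required node affinity: every required label must be present with the given value.
    return all(node["labels"].get(k) == v for k, v in pod["required_labels"].items())


def placements(pod: Dict) -> List[str]:
    return [name for name, node in NODES.items() if can_schedule(pod, node)]
```

A GPU Pod with both the toleration and the required label can only land on `gpu-node-1`, and an ordinary Pod can only land on `cpu-node-1`. A Pod with the toleration but no affinity rule may land on either node: a toleration only permits a Pod to use the GPU node, it does not attract it there.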