Deploying YOLOV5.5-P5 (640) to OpenVINO <Part 1: Environment Setup and Performance Verification>

Deploying YOLOV5.5-P6 (1280) to OpenVINO <Part 2: Environment Setup and Performance Verification>

Environment:

- Windows 10 & VS2019

- openvino_2021.4.582 (the C++ SDK, not the Python package; the latest release as of 2021-11-19)

- yolov5.5 (other versions not tested)

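Searching the official OpenVINO forum turned up an article on deploying YOLO that is very detailed (PS: far better than the junk blog posts that Baidu returns). Link: https://www.intel.cn/content/www/cn/zh/search.html?ws=text#q=yolov5&t=Developers&sort=relevancy

Open the page and search for yolov5. I uploaded the document I downloaded to Baidu Cloud:

Link: https://pan.baidu.com/s/1dq5W5hSj69OEn9t95bVDfQ

Extraction code: ygia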

I. Installing OpenVINO 2021.4.582

For installation, see my blog post: https://www.cnblogs.com/winslam/p/15577630.html

II. Setting up the yolov5.5 environment

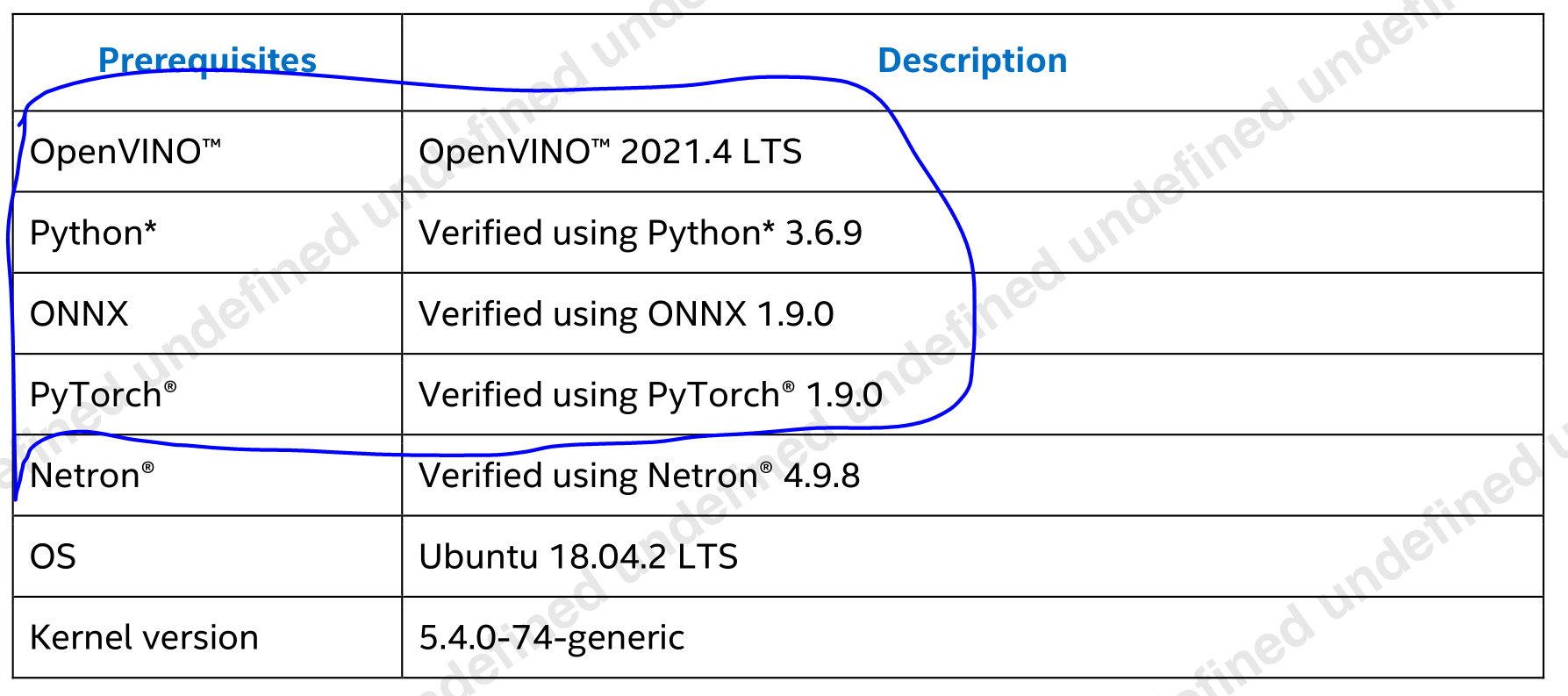

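The figure above shows the dependencies listed in the document. Install those pinned versions first, then install the other dependencies that yolov5.5 requires.

All of the installs below use the Tsinghua mirror.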

Create a Python 3.6.9 environment:

conda create -n yolov5.5 python==3.6.9

conda activate yolov5.5

Install openvino inside that environment (keep it as close as possible to the OpenVINO SDK version):

pip install openvino==2021.4.2

Install onnx:

pip install onnx==1.9.0

Install pytorch 1.9.0 and torchvision 0.10.0:

pip3 install torch==1.9.0+cu102 torchvision==0.10.0+cu102 -f https://download.pytorch.org/whl/cu102/torch_stable.html

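Download yolov5.5 (the link points to the v5.0 release tag):

Link: https://github.com/ultralytics/yolov5/releases/tag/v5.0

Scroll to the bottom of the page; both the source code and the models are there.

Install the remaining yolov5.5 dependencies (run this from the root of the extracted source tree, where requirements.txt lives):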

pip install -r requirements.txt
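Before moving on, it can help to confirm that the pinned packages actually landed in the active environment. The snippet below is a minimal sketch, not part of the original workflow: `check_versions` is a hypothetical helper, and it assumes each package exposes a `__version__` attribute (packages that do not are simply reported as installed).

```python
import importlib


def check_versions(expected):
    """Return {module_name: (status, found_version)} for each requested module.

    `expected` maps a module name to the version prefix we hope to find,
    or to None when we only care that the module can be imported.
    """
    report = {}
    for name, wanted in expected.items():
        try:
            module = importlib.import_module(name)
        except (ImportError, OSError):  # OSError: a native library failed to load
            report[name] = ("missing", None)
            continue
        found = getattr(module, "__version__", None)
        if wanted is None or found is None:
            status = "installed"
        elif str(found).startswith(wanted):  # "1.9.0+cu102" matches "1.9.0"
            status = "ok"
        else:
            status = "mismatch"
        report[name] = (status, found)
    return report


if __name__ == "__main__":
    # Versions copied from the install commands above.
    pinned = {"openvino": None, "onnx": "1.9.0", "torch": "1.9.0", "torchvision": "0.10.0"}
    for name, (status, found) in check_versions(pinned).items():
        print("{:12} {:10} {}".format(name, status, found))
```

Any row reported as `missing` or `mismatch` is worth fixing before exporting the model, since version drift between torch and onnx is a common reason for the export to fail.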

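III. Converting the .pt model to ONNX

Open the link: https://github.com/ultralytics/yolov5/releases/tag/v5.0

Scroll to the bottom and download the yolov5s.pt model, place it in the models directory of the yolov5 source tree, open export.py in PyCharm, and set the command-line arguments: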

--weights yolov5s.pt --img 640 --batch 1

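Open the generated yolov5s.onnx file in Netron (https://netron.app/) and search for the keyword Transpose to locate the network's three output layers: Conv_245, Conv_261, and Conv_277. They correspond to feature maps at three scales: 1×255×80×80, 1×255×40×40, and 1×255×20×20.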
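The three shapes follow from simple arithmetic, sketched below. The values are assumptions read off the inference script later in this post: 80 COCO classes, 3 anchors per scale, and strides of 8, 16, and 32 for a 640 input. `head_shapes` is a hypothetical helper used only for illustration.

```python
def head_shapes(img_size=640, num_classes=80, anchors_per_scale=3, strides=(8, 16, 32)):
    """Return the NCHW shape of each detection head output.

    Each anchor predicts 4 box values + 1 objectness score + one score per class,
    so the channel count is anchors_per_scale * (num_classes + 5).
    """
    channels = anchors_per_scale * (num_classes + 5)
    return [(1, channels, img_size // s, img_size // s) for s in strides]


print(head_shapes())
# [(1, 255, 80, 80), (1, 255, 40, 40), (1, 255, 20, 20)]
```

For a custom model with a different number of classes the channel count changes accordingly, for example 3 × (1 + 5) = 18 for a single-class detector.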

IV. Converting the model to IR (xml + bin)

Copy the ONNX model from the previous step to:

C:\Program Files (x86)\Intel\openvino_2021.4.582\deployment_tools\model_optimizer

Open CMD as administrator and run:

python mo.py --input_model yolov5s.onnx --model_name yolov5s -s 255 --reverse_input_channels --output Conv_245,Conv_261,Conv_277

This generates the xml and bin files.

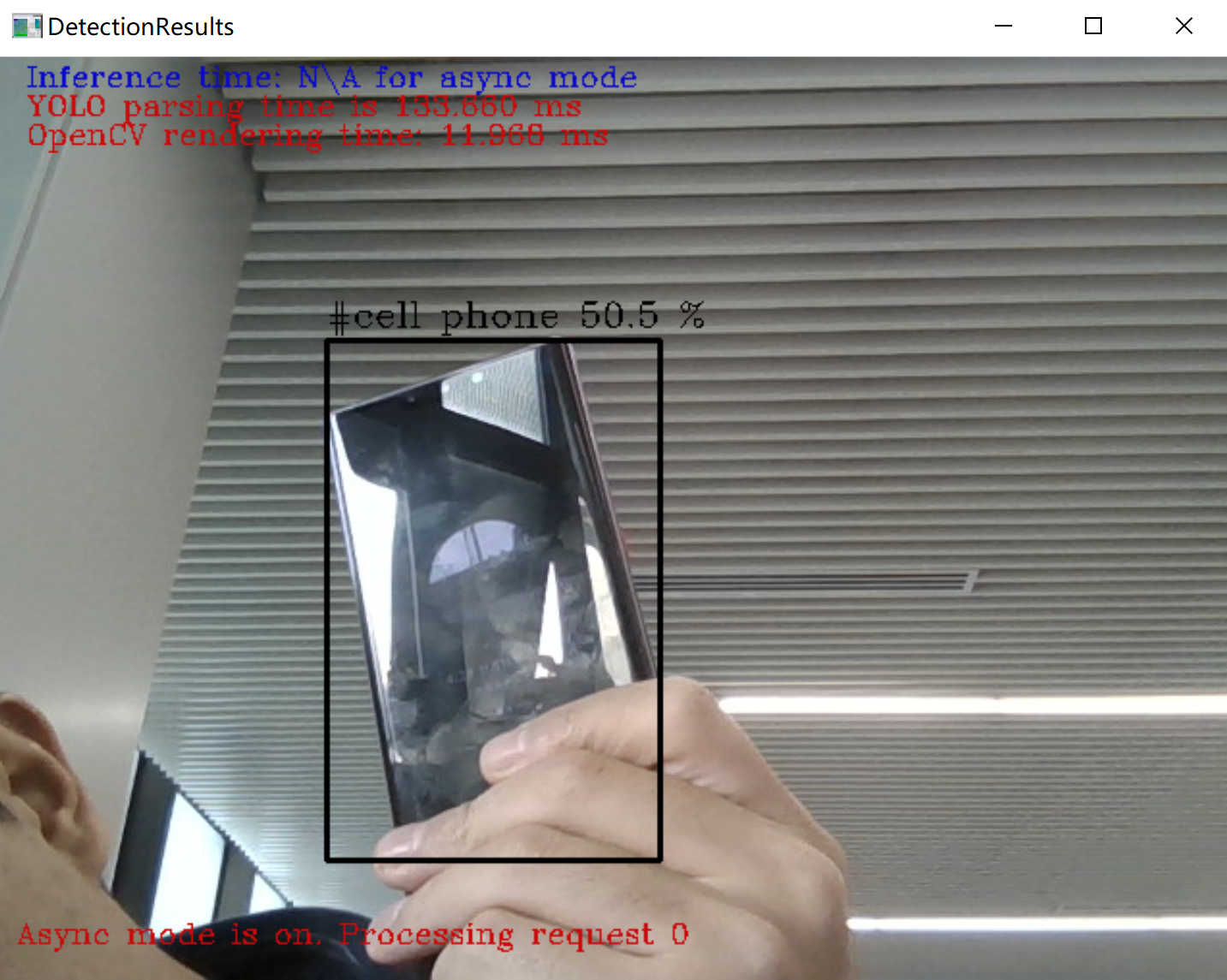
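Two flags in the mo.py command change what the IR expects as input: `-s 255` divides every input value by 255, and `--reverse_input_channels` swaps the channel order. The sketch below shows, for a single pixel, what the network effectively sees once both are baked into the IR. `as_seen_by_network` is a hypothetical helper written for illustration and is not part of OpenVINO.

```python
def as_seen_by_network(bgr_pixel, scale=255.0):
    """Mimic the preprocessing embedded in the IR for one pixel.

    bgr_pixel: (b, g, r) values in 0..255, as OpenCV delivers them.
    Returns the (r, g, b) values in 0..1 that reach the first layer.
    """
    b, g, r = bgr_pixel
    return (r / scale, g / scale, b / scale)


print(as_seen_by_network((255, 0, 51)))
# (0.2, 0.0, 1.0)
```

This is why the inference script can feed raw OpenCV frames to the network without normalizing them or converting BGR to RGB first.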

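V. Verifying the model

Copy the xml and bin files from the previous step to the yolov5.5 root directory and run the inference script.

Command-line arguments (-i cam reads from the camera, -d GPU selects the GPU plugin):

-m yolov5s.xml -i cam -at yolov5 --labels coco.names -d GPU

Inference script:

pytorch_YOLO_OpenVINO_demo.py:

(Reference: https://github.com/sammysun0711/pytorch_YOLO_OpenVINO_demo. I mirrored the project on my gitee: https://gitee.com/ruiyushi/pytorch_YOLO_OpenVINO_demo)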

    #!/usr/bin/env python
    """
     Copyright (C) 2018-2019 Intel Corporation
     Licensed under the Apache License, Version 2.0 (the "License");
     you may not use this file except in compliance with the License.
     You may obtain a copy of the License at
          http://www.apache.org/licenses/LICENSE-2.0
     Unless required by applicable law or agreed to in writing, software
     distributed under the License is distributed on an "AS IS" BASIS,
     WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
     See the License for the specific language governing permissions and
     limitations under the License.
    """
    from __future__ import print_function, division

    import logging
    import os
    import sys
    from argparse import ArgumentParser, SUPPRESS
    from math import exp as exp
    from time import time
    import numpy as np

    import ngraph
    import cv2
    from openvino.inference_engine import IENetwork, IECore

    logging.basicConfig(format="[ %(levelname)s ] %(message)s", level=logging.INFO, stream=sys.stdout)
    log = logging.getLogger()


    def build_argparser():
        parser = ArgumentParser(add_help=False)
        args = parser.add_argument_group('Options')
        args.add_argument('-h', '--help', action='help', default=SUPPRESS, help='Show this help message and exit.')
        args.add_argument("-m", "--model", help="Required. Path to an .xml file with a trained model.",
                          required=True, type=str)
        args.add_argument("-at", "--architecture_type", help='Required. Specify model\' architecture type.',
                          type=str, required=True,
                          choices=('yolov3', 'yolov4', 'yolov5', 'yolov4-p5', 'yolov4-p6', 'yolov4-p7'))
        args.add_argument("-i", "--input", help="Required. Path to an image/video file. (Specify 'cam' to work with "
                                                "camera)", required=True, type=str)
        args.add_argument("-l", "--cpu_extension",
                          help="Optional. Required for CPU custom layers. Absolute path to a shared library with "
                               "the kernels implementations.", type=str, default=None)
        args.add_argument("-d", "--device",
                          help="Optional. Specify the target device to infer on; CPU, GPU, FPGA, HDDL or MYRIAD is"
                               " acceptable. The sample will look for a suitable plugin for device specified. "
                               "Default value is CPU", default="CPU", type=str)
        args.add_argument("--labels", help="Optional. Labels mapping file", default=None, type=str)
        args.add_argument("-t", "--prob_threshold", help="Optional. Probability threshold for detections filtering",
                          default=0.5, type=float)
        args.add_argument("-iout", "--iou_threshold", help="Optional. Intersection over union threshold for overlapping "
                                                           "detections filtering", default=0.4, type=float)
        args.add_argument("-ni", "--number_iter", help="Optional. Number of inference iterations", default=1, type=int)
        args.add_argument("-pc", "--perf_counts", help="Optional. Report performance counters", default=False,
                          action="store_true")
        args.add_argument("-r", "--raw_output_message", help="Optional. Output inference results raw values showing",
                          default=False, action="store_true")
        args.add_argument("--no_show", help="Optional. Don't show output", action='store_true')
        return parser


    class YoloParams:
        # ------------------------------------------- Extracting layer parameters ------------------------------------------
        # Magic numbers are copied from yolo samples
        def __init__(self, param, side, yolo_type):
            self.coords = 4 if 'coords' not in param else int(param['coords'])
            self.classes = 80 if 'classes' not in param else int(param['classes'])
            self.side = side

            if yolo_type == 'yolov4':
                self.num = 3
                self.anchors = [12.0, 16.0, 19.0, 36.0, 40.0, 28.0, 36.0, 75.0, 76.0, 55.0, 72.0, 146.0, 142.0, 110.0,
                                192.0, 243.0,
                                459.0, 401.0]
            elif yolo_type == 'yolov4-p5':
                self.num = 4
                self.anchors = [13.0, 17.0, 31.0, 25.0, 24.0, 51.0, 61.0, 45.0, 48.0, 102.0, 119.0, 96.0, 97.0, 189.0,
                                217.0, 184.0,
                                171.0, 384.0, 324.0, 451.0, 616.0, 618.0, 800.0, 800.0]
            elif yolo_type == 'yolov4-p6':
                self.num = 4
                self.anchors = [13.0, 17.0, 31.0, 25.0, 24.0, 51.0, 61.0, 45.0, 61.0, 45.0, 48.0, 102.0, 119.0, 96.0, 97.0,
                                189.0,
                                97.0, 189.0, 217.0, 184.0, 171.0, 384.0, 324.0, 451.0, 324.0, 451.0, 545.0, 357.0, 616.0,
                                618.0, 1024.0, 1024.0]
            elif yolo_type == 'yolov4-p7':
                self.num = 5
                self.anchors = [13.0, 17.0, 22.0, 25.0, 27.0, 66.0, 55.0, 41.0, 57.0, 88.0, 112.0, 69.0, 69.0, 177.0, 136.0,
                                138.0,
                                136.0, 138.0, 287.0, 114.0, 134.0, 275.0, 268.0, 248.0, 268.0, 248.0, 232.0, 504.0, 445.0,
                                416.0, 640.0, 640.0,
                                812.0, 393.0, 477.0, 808.0, 1070.0, 908.0, 1408.0, 1408.0]
            else:
                # yolov3 / yolov5 default anchors: 3 anchors (w, h) per scale, 3 scales
                self.num = 3
                self.anchors = [10.0, 13.0, 16.0, 30.0, 33.0, 23.0, 30.0, 61.0, 62.0, 45.0, 59.0, 119.0, 116.0, 90.0, 156.0,
                                198.0, 373.0, 326.0]

        def log_params(self):
            params_to_print = {'classes': self.classes, 'num': self.num, 'coords': self.coords, 'anchors': self.anchors}
            [log.info("         {:8}: {}".format(param_name, param)) for param_name, param in params_to_print.items()]


    def letterbox(img, size=(640, 640), color=(114, 114, 114), auto=True, scaleFill=False, scaleup=True):
        # Resize image to a 32-pixel-multiple rectangle https://github.com/ultralytics/yolov3/issues/232
        shape = img.shape[:2]  # current shape [height, width]
        w, h = size

        # Scale ratio (new / old)
        r = min(h / shape[0], w / shape[1])
        if not scaleup:  # only scale down, do not scale up (for better test mAP)
            r = min(r, 1.0)

        # Compute padding
        ratio = r, r  # width, height ratios
        new_unpad = int(round(shape[1] * r)), int(round(shape[0] * r))
        dw, dh = w - new_unpad[0], h - new_unpad[1]  # wh padding
        if auto:  # minimum rectangle
            dw, dh = np.mod(dw, 64), np.mod(dh, 64)  # wh padding
        elif scaleFill:  # stretch
            dw, dh = 0.0, 0.0
            new_unpad = (w, h)
            ratio = w / shape[1], h / shape[0]  # width, height ratios

        dw /= 2  # divide padding into 2 sides
        dh /= 2

        if shape[::-1] != new_unpad:  # resize
            img = cv2.resize(img, new_unpad, interpolation=cv2.INTER_LINEAR)
        top, bottom = int(round(dh - 0.1)), int(round(dh + 0.1))
        left, right = int(round(dw - 0.1)), int(round(dw + 0.1))
        img = cv2.copyMakeBorder(img, top, bottom, left, right, cv2.BORDER_CONSTANT, value=color)  # add border

        # Pad once more so the result is exactly (h, w), the shape the network expects
        top2, bottom2, left2, right2 = 0, 0, 0, 0
        if img.shape[0] != h:
            top2 = (h - img.shape[0]) // 2
            bottom2 = top2
            img = cv2.copyMakeBorder(img, top2, bottom2, left2, right2, cv2.BORDER_CONSTANT, value=color)  # add border
        elif img.shape[1] != w:
            left2 = (w - img.shape[1]) // 2
            right2 = left2
            img = cv2.copyMakeBorder(img, top2, bottom2, left2, right2, cv2.BORDER_CONSTANT, value=color)  # add border
        return img


    def scale_bbox(x, y, height, width, class_id, confidence, im_h, im_w, resized_im_h=640, resized_im_w=640):
        gain = min(resized_im_w / im_w, resized_im_h / im_h)  # gain  = old / new
        pad = (resized_im_w - im_w * gain) / 2, (resized_im_h - im_h * gain) / 2  # wh padding
        x = int((x - pad[0]) / gain)
        y = int((y - pad[1]) / gain)

        w = int(width / gain)
        h = int(height / gain)

        xmin = max(0, int(x - w / 2))
        ymin = max(0, int(y - h / 2))
        xmax = min(im_w, int(xmin + w))
        ymax = min(im_h, int(ymin + h))
        # Method item() used here to convert NumPy types to native types for compatibility with functions, which don't
        # support Numpy types (e.g., cv2.rectangle doesn't support int64 in color parameter)
        return dict(xmin=xmin, xmax=xmax, ymin=ymin, ymax=ymax, class_id=class_id.item(), confidence=confidence.item())


    def entry_index(side, coord, classes, location, entry):
        side_power_2 = side ** 2
        n = location // side_power_2
        loc = location % side_power_2
        return int(side_power_2 * (n * (coord + classes + 1) + entry) + loc)


    def parse_yolo_region(blob, resized_image_shape, original_im_shape, params, threshold, yolo_type):
        # ------------------------------------------ Validating output parameters ------------------------------------------
        out_blob_n, out_blob_c, out_blob_h, out_blob_w = blob.shape
        predictions = 1.0 / (1.0 + np.exp(-blob))  # sigmoid over the whole output blob

        assert out_blob_w == out_blob_h, "Invalid size of output blob. It should be in NCHW layout and height should " \
                                         "be equal to width. Current height = {}, current width = {}" \
                                         "".format(out_blob_h, out_blob_w)

        # ------------------------------------------ Extracting layer parameters -------------------------------------------
        orig_im_h, orig_im_w = original_im_shape
        resized_image_h, resized_image_w = resized_image_shape
        objects = list()

        side_square = params.side[1] * params.side[0]

        # ------------------------------------------- Parsing YOLO Region output -------------------------------------------
        bbox_size = int(out_blob_c / params.num)  # 4+1+num_classes
        # print('bbox_size = ' + str(bbox_size))
        for row, col, n in np.ndindex(params.side[0], params.side[1], params.num):
            bbox = predictions[0, n * bbox_size:(n + 1) * bbox_size, row, col]

            x, y, width, height, object_probability = bbox[:5]
            class_probabilities = bbox[5:]
            if object_probability < threshold:
                continue
            # print('resized_image_w = ' + str(resized_image_w))
            # print('out_blob_w = ' + str(out_blob_w))

            x = (2 * x - 0.5 + col) * (resized_image_w / out_blob_w)
            y = (2 * y - 0.5 + row) * (resized_image_h / out_blob_h)
            # Pick the anchor group from the stride of this output.
            # (The original used the bitwise `&` here, which only worked by accident.)
            if int(resized_image_w / out_blob_w) == 8 and int(resized_image_h / out_blob_h) == 8:  # 80x80 at 640
                idx = 0
            elif int(resized_image_w / out_blob_w) == 16 and int(resized_image_h / out_blob_h) == 16:  # 40x40 at 640
                idx = 1
            elif int(resized_image_w / out_blob_w) == 32 and int(resized_image_h / out_blob_h) == 32:  # 20x20 at 640
                idx = 2
            elif int(resized_image_w / out_blob_w) == 64 and int(resized_image_h / out_blob_h) == 64:  # 10x10 at 640
                idx = 3
            elif int(resized_image_w / out_blob_w) == 128 and int(resized_image_h / out_blob_h) == 128:  # 5x5 at 640
                idx = 4

            if yolo_type == 'yolov4-p5' or yolo_type == 'yolov4-p6' or yolo_type == 'yolov4-p7':
                width = (2 * width) ** 2 * params.anchors[idx * 8 + 2 * n]
                height = (2 * height) ** 2 * params.anchors[idx * 8 + 2 * n + 1]
            else:
                width = (2 * width) ** 2 * params.anchors[idx * 6 + 2 * n]
                height = (2 * height) ** 2 * params.anchors[idx * 6 + 2 * n + 1]

            class_id = np.argmax(class_probabilities)
            confidence = class_probabilities[class_id]
            objects.append(scale_bbox(x=x, y=y, height=height, width=width, class_id=class_id, confidence=confidence,
                                      im_h=orig_im_h, im_w=orig_im_w, resized_im_h=resized_image_h,
                                      resized_im_w=resized_image_w))
        return objects


    def intersection_over_union(box_1, box_2):
        width_of_overlap_area = min(box_1['xmax'], box_2['xmax']) - max(box_1['xmin'], box_2['xmin'])
        height_of_overlap_area = min(box_1['ymax'], box_2['ymax']) - max(box_1['ymin'], box_2['ymin'])
        if width_of_overlap_area < 0 or height_of_overlap_area < 0:
            area_of_overlap = 0
        else:
            area_of_overlap = width_of_overlap_area * height_of_overlap_area
        box_1_area = (box_1['ymax'] - box_1['ymin']) * (box_1['xmax'] - box_1['xmin'])
        box_2_area = (box_2['ymax'] - box_2['ymin']) * (box_2['xmax'] - box_2['xmin'])
        area_of_union = box_1_area + box_2_area - area_of_overlap
        if area_of_union == 0:
            return 0
        return area_of_overlap / area_of_union


    def main():
        args = build_argparser().parse_args()

        model_xml = args.model
        model_bin = os.path.splitext(model_xml)[0] + ".bin"

        # ------------- 1. Plugin initialization for specified device and load extensions library if specified -------------
        log.info("Creating Inference Engine...")
        ie = IECore()
        if args.cpu_extension and 'CPU' in args.device:
            ie.add_extension(args.cpu_extension, "CPU")

        # -------------------- 2. Reading the IR generated by the Model Optimizer (.xml and .bin files) --------------------
        log.info("Loading network files:\n\t{}\n\t{}".format(model_xml, model_bin))
        net = IENetwork(model=model_xml, weights=model_bin)

        # ---------------------------------- 3. Load CPU extension for support specific layer ------------------------------
        # if "CPU" in args.device:
        #    supported_layers = ie.query_network(net, "CPU")
        #    not_supported_layers = [l for l in net.layers.keys() if l not in supported_layers]
        #    if len(not_supported_layers) != 0:
        #        log.error("Following layers are not supported by the plugin for specified device {}:\n {}".
        #                  format(args.device, ', '.join(not_supported_layers)))
        #        log.error("Please try to specify cpu extensions library path in sample's command line parameters using -l "
        #                  "or --cpu_extension command line argument")
        #        sys.exit(1)
        #
        # assert len(net.inputs.keys()) == 1, "Sample supports only YOLO V3 based single input topologies"

        # ---------------------------------------------- 4. Preparing inputs -----------------------------------------------
        log.info("Preparing inputs")
        input_blob = next(iter(net.inputs))

        # Default batch_size is 1
        net.batch_size = 1

        # Read and pre-process input images
        n, c, h, w = net.inputs[input_blob].shape

        ng_func = ngraph.function_from_cnn(net)
        yolo_layer_params = {}
        for node in ng_func.get_ordered_ops():
            layer_name = node.get_friendly_name()
            if layer_name not in net.outputs:
                continue
            shape = list(node.inputs()[0].get_source_output().get_node().shape)
            yolo_params = YoloParams(node._get_attributes(), shape[2:4], args.architecture_type)
            yolo_layer_params[layer_name] = (shape, yolo_params)

        if args.labels:
            with open(args.labels, 'r') as f:
                labels_map = [x.strip() for x in f]
        else:
            labels_map = None

        input_stream = 0 if args.input == "cam" else args.input

        is_async_mode = True
        cap = cv2.VideoCapture(input_stream)
        number_input_frames = int(cap.get(cv2.CAP_PROP_FRAME_COUNT))
        number_input_frames = 1 if number_input_frames != -1 and number_input_frames < 0 else number_input_frames

        wait_key_code = 1

        # Number of frames in picture is 1 and this will be read in cycle. Sync mode is default value for this case
        if number_input_frames != 1:
            ret, frame = cap.read()
        else:
            is_async_mode = False
            wait_key_code = 0

        # ----------------------------------------- 5. Loading model to the plugin -----------------------------------------
        log.info("Loading model to the plugin")
        exec_net = ie.load_network(network=net, num_requests=2, device_name=args.device)

        cur_request_id = 0
        next_request_id = 1
        render_time = 0
        parsing_time = 0

        # ----------------------------------------------- 6. Doing inference -----------------------------------------------
        log.info("Starting inference...")
        print("To close the application, press 'CTRL+C' here or switch to the output window and press ESC key")
        print("To switch between sync/async modes, press TAB key in the output window")
        while cap.isOpened():
            # Here is the first asynchronous point: in the Async mode, we capture frame to populate the NEXT infer request
            # in the regular mode, we capture frame to the CURRENT infer request
            if is_async_mode:
                ret, next_frame = cap.read()
            else:
                ret, frame = cap.read()

            if not ret:
                break

            if is_async_mode:
                request_id = next_request_id
                in_frame = letterbox(frame, (w, h))
            else:
                request_id = cur_request_id
                in_frame = letterbox(frame, (w, h))
            # resize input_frame to network size
            in_frame = in_frame.transpose((2, 0, 1))  # Change data layout from HWC to CHW
            in_frame = in_frame.reshape((n, c, h, w))

            # Start inference
            start_time = time()
            exec_net.start_async(request_id=request_id, inputs={input_blob: in_frame})

            # Collecting object detection results
            objects = list()
            if exec_net.requests[cur_request_id].wait(-1) == 0:
                det_time = time() - start_time
                output = exec_net.requests[cur_request_id].outputs
                start_time = time()

                for layer_name, out_blob in output.items():
                    # out_blob = out_blob.reshape(net.layers[layer_name].out_data[0].shape)
                    # YoloParams(net.layers[layer_name].params, out_blob.shape[2])
                    layer_params = yolo_layer_params[layer_name]
                    out_blob.shape = layer_params[0]
                    # log.info("Layer {} parameters: ".format(layer_name))
                    # layer_params.log_params()
                    objects += parse_yolo_region(out_blob, in_frame.shape[2:],
                                                 # in_frame.shape[2:], layer_params,
                                                 frame.shape[:-1], layer_params[1],
                                                 args.prob_threshold, args.architecture_type)
                parsing_time = time() - start_time

            # Filtering overlapping boxes with respect to the --iou_threshold CLI parameter
            objects = sorted(objects, key=lambda obj: obj['confidence'], reverse=True)
            for i in range(len(objects)):
                if objects[i]['confidence'] == 0:
                    continue
                for j in range(i + 1, len(objects)):
                    # if objects[i]['class_id'] != objects[j]['class_id']: # Only compare bounding box with same class id
                    #    continue
                    if intersection_over_union(objects[i], objects[j]) > args.iou_threshold:
                        objects[j]['confidence'] = 0

            # Drawing objects with respect to the --prob_threshold CLI parameter
            objects = [obj for obj in objects if obj['confidence'] >= args.prob_threshold]

            if len(objects) and args.raw_output_message:
                log.info("\nDetected boxes for batch {}:".format(1))
                log.info(" Class ID | Confidence | XMIN | YMIN | XMAX | YMAX | COLOR ")

            origin_im_size = frame.shape[:-1]
            for obj in objects:
                # Validation bbox of detected object
                if obj['xmax'] > origin_im_size[1] or obj['ymax'] > origin_im_size[0] or obj['xmin'] < 0 or obj['ymin'] < 0:
                    continue
                color = (int(max(255 - obj['class_id'] * 7.5, 0)),
                         max(255 - obj['class_id'] * 10, 0), max(255 - obj['class_id'] * 12.5, 0))
                # `>` (not `>=`) so that class_id is always a valid index into labels_map
                det_label = labels_map[obj['class_id']] if labels_map and len(labels_map) > obj['class_id'] else \
                    str(obj['class_id'])

                if args.raw_output_message:
                    log.info(
                        "{:^9} | {:10f} | {:4} | {:4} | {:4} | {:4} | {} ".format(det_label, obj['confidence'], obj['xmin'],
                                                                                  obj['ymin'], obj['xmax'], obj['ymax'],
                                                                                  color))

                cv2.rectangle(frame, (obj['xmin'], obj['ymin']), (obj['xmax'], obj['ymax']), color, 2)
                cv2.putText(frame,
                            "#" + det_label + ' ' + str(round(obj['confidence'] * 100, 1)) + ' %',
                            (obj['xmin'], obj['ymin'] - 7), cv2.FONT_HERSHEY_COMPLEX, 0.6, color, 1)

            # Draw performance stats over frame
            inf_time_message = "Inference time: N/A for async mode" if is_async_mode else \
                "Inference time: {:.3f} ms".format(det_time * 1e3)
            render_time_message = "OpenCV rendering time: {:.3f} ms".format(render_time * 1e3)
            async_mode_message = "Async mode is on. Processing request {}".format(cur_request_id) if is_async_mode else \
                "Async mode is off. Processing request {}".format(cur_request_id)
            parsing_message = "YOLO parsing time is {:.3f} ms".format(parsing_time * 1e3)

            cv2.putText(frame, inf_time_message, (15, 15), cv2.FONT_HERSHEY_COMPLEX, 0.5, (200, 10, 10), 1)
            cv2.putText(frame, render_time_message, (15, 45), cv2.FONT_HERSHEY_COMPLEX, 0.5, (10, 10, 200), 1)
            cv2.putText(frame, async_mode_message, (10, int(origin_im_size[0] - 20)), cv2.FONT_HERSHEY_COMPLEX, 0.5,
                        (10, 10, 200), 1)
            cv2.putText(frame, parsing_message, (15, 30), cv2.FONT_HERSHEY_COMPLEX, 0.5, (10, 10, 200), 1)

            start_time = time()
            if not args.no_show:
                cv2.imshow("DetectionResults", frame)
                cv2.imwrite("demo_result.png", frame)
            render_time = time() - start_time

            if is_async_mode:
                cur_request_id, next_request_id = next_request_id, cur_request_id
                frame = next_frame

            if not args.no_show:
                key = cv2.waitKey(wait_key_code)

                # ESC key
                if key == 27:
                    break
                # Tab key
                if key == 9:
                    exec_net.requests[cur_request_id].wait()
                    is_async_mode = not is_async_mode
                    log.info("Switched to {} mode".format("async" if is_async_mode else "sync"))

        cv2.destroyAllWindows()


    if __name__ == '__main__':
        sys.exit(main() or 0)
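The core of `parse_yolo_region` is the YOLOv5 box decoding: the sigmoid of each raw value is mapped to a centre offset in (-0.5, 1.5) around the grid cell and to a size factor in (0, 4) times the anchor. The following is a simplified, dependency-free sketch of that step for a single cell and anchor. `decode_cell` is a hypothetical helper, and the anchor list is the default (yolov3/yolov5) list copied from the script.

```python
from math import exp

# Default anchors from the script: (w, h) pairs, 3 anchors for each of 3 scales.
ANCHORS = [10.0, 13.0, 16.0, 30.0, 33.0, 23.0, 30.0, 61.0, 62.0, 45.0, 59.0, 119.0,
           116.0, 90.0, 156.0, 198.0, 373.0, 326.0]


def sigmoid(v):
    return 1.0 / (1.0 + exp(-v))


def decode_cell(raw, col, row, stride, scale_idx, anchor_idx):
    """Decode one raw (tx, ty, tw, th) prediction into a box in network-input pixels.

    stride is 8, 16 or 32 for a P5 model; scale_idx (0..2) selects the anchor group
    and anchor_idx (0..2) the anchor within that group.
    """
    tx, ty, tw, th = (sigmoid(v) for v in raw)
    cx = (2.0 * tx - 0.5 + col) * stride
    cy = (2.0 * ty - 0.5 + row) * stride
    w = (2.0 * tw) ** 2 * ANCHORS[scale_idx * 6 + 2 * anchor_idx]
    h = (2.0 * th) ** 2 * ANCHORS[scale_idx * 6 + 2 * anchor_idx + 1]
    return cx, cy, w, h


# All-zero logits give sigmoid = 0.5: the centre of the cell and exactly the anchor size.
print(decode_cell((0.0, 0.0, 0.0, 0.0), col=10, row=5, stride=8, scale_idx=0, anchor_idx=0))
# (84.0, 44.0, 10.0, 13.0)
```

The decoded box is still in the coordinates of the letterboxed 640×640 input; `scale_bbox` in the script then removes the padding and rescales it to the original frame.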

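VI. Speed comparison

Here is a result image from the yolov5m model; compare it with the image above for yourself.

The yolov5m, 5l, and 5x models are converted the same way as above: first to ONNX, then to IR. The IR conversion commands are: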

    # for 5s 5m
    python mo.py --input_model yolov5s.onnx --model_name yolov5s -s 255 --reverse_input_channels --output Conv_245,Conv_261,Conv_277
    python mo.py --input_model yolov5m.onnx --model_name yolov5m -s 255 --reverse_input_channels --output Conv_324,Conv_340,Conv_356

    # for 5l 5x
    python mo.py --input_model yolov5l.onnx --model_name yolov5l -s 255 --reverse_input_channels --output Conv_403,Conv_419,Conv_435
    python mo.py --input_model yolov5x.onnx --model_name yolov5x -s 255 --reverse_input_channels --output Conv_482,Conv_498,Conv_514

Notice that, within each model, the three output layer names form an arithmetic sequence with a common difference of 16.
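That regularity makes it easy to generate the conversion command from the first output node alone. The sketch below is a hypothetical helper (`mo_command` is not part of OpenVINO) that rebuilds the four commands above. It assumes the step of 16 holds, which was only observed for these four models with this export, so confirm the node names in Netron for any other model.

```python
# First output Conv node of each model, taken from the commands above.
FIRST_CONV = {"yolov5s": 245, "yolov5m": 324, "yolov5l": 403, "yolov5x": 482}


def mo_command(model, first_conv, step=16, heads=3):
    """Build the mo.py command line for a model whose output nodes are
    Conv_<first_conv>, Conv_<first_conv + step>, ... (assumed pattern)."""
    outputs = ",".join("Conv_{}".format(first_conv + i * step) for i in range(heads))
    return ("python mo.py --input_model {m}.onnx --model_name {m} -s 255 "
            "--reverse_input_channels --output {o}").format(m=model, o=outputs)


for name, first in FIRST_CONV.items():
    print(mo_command(name, first))
```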