初识Kubernetes

Kubernetes 是一个可移植、可扩展的开源平台,用于管理容器化的工作负载和服务,方便进行声明式配置和自动化。Kubernetes 拥有一个庞大且快速增长的生态系统,其服务、支持和工具的使用范围广泛。Kubernetes将一系列的主机看做是一个受管理的海量资源,这些海量资源组成了一个能够方便进行扩展的操作系统。而在Kubernetes中运行着的容器则可以视为是这个操作系统中运行的“进程”,通过Kubernetes这一中央协调器,解决了基于容器应用程序的调度、伸缩、访问负载均衡以及整个系统的管理和监控的问题。

Kubernetes组件

一组工作机器,称为 节点, 会运行容器化应用程序。每个集群至少有一个工作节点。

工作节点会托管 Pod ,而 Pod 就是作为应用负载的组件。 控制平面管理集群中的工作节点和 Pod。 在生产环境中,控制平面通常跨多台计算机运行, 一个集群通常运行多个节点,提供容错性和高可用性。

Kubernetes的核心组件主要由两部分组成:Master组件和Node组件,其中Matser组件提供了集群层面的管理功能,它们负责响应用户请求并且对集群资源进行统一的调度和管理。Node组件会运行在集群的所有节点上,它们负责管理和维护节点中运行的Pod,为Kubernetes集群提供运行时环境。

Master组件主要包括:

- kube-apiserver:负责对外暴露Kubernetes API;

- etcd:用于存储Kubernetes集群的所有数据;

- kube-scheduler: 负责为新创建的Pod选择可供其运行的节点;

- kube-controller-manager: 包含Node Controller,Deployment Controller,Endpoint Controller等等,通过与apiserver交互使相应的资源达到预期状态。

Node组件主要包括:

- kubelet:负责维护和管理节点上Pod的运行状态;

- kube-proxy:负责维护主机上的网络规则以及转发。

- Container Runtime:如Docker,rkt,runc等提供容器运行时环境

参考官方文档:https://kubernetes.io/zh-cn/docs/home/

Kubernetes部署Prometheus

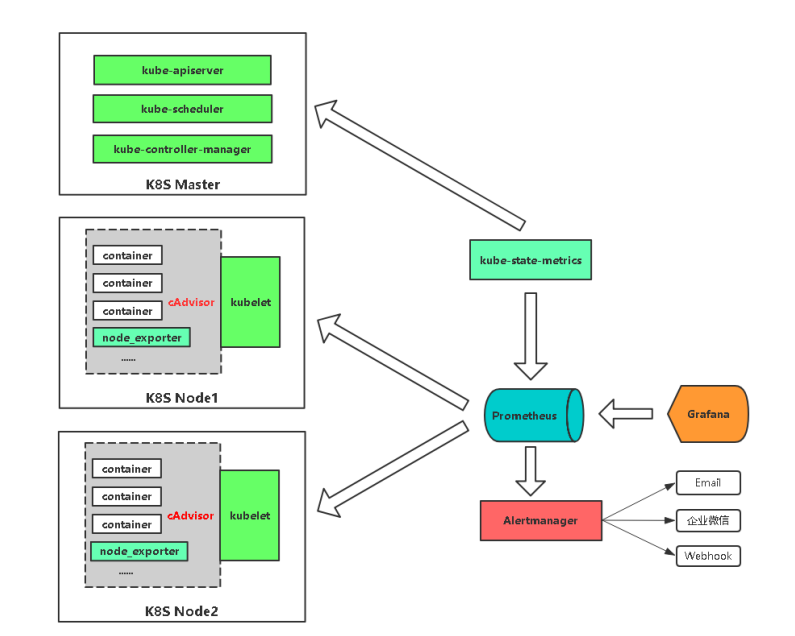

Kubernetes监控思路

kubernetes本身监控:

- Node资源利用率

- Node数量

- 每个Node运行Pod数量

- 资源对象状态

Pod监控:

- Pod总数量及每个控制器预期数量

- Pod状态

- 容器资源利用率:CPU、内存、网络

| 监控指标 | 具体实现 | 举例 |

|---|---|---|

| Pod性能 | cAdvisor | CPU、内存、网络 |

| Node性能 | node-exporter | CPU、内存、网络 |

| K8S资源对象 | kube-state-metrics | Pod、Deployment、Service |

- Pod

kubelet的节点使用cAdvisor提供的metrics接口获取该节点所有Pod和容器相关的性能指标数据。(不可访问)

- Node

使用node_exporter收集器采集节点资源利用率。

项目地址:https://github.com/prometheus/node_exporter

- K8s资源对象

kube-state-metrics采集了k8s中各种资源对象的状态信息。

项目地址:https://github.com/kubernetes/kube-state-metrics

在Kubernetes平台部署相关组件

prometheus-deployment.yaml # 部署Prometheus

prometheus-configmap.yaml # Prometheus配置文件,主要配置Kubernetes服务发现 prometheus-rules.yaml # Prometheus告警规则

grafana.yaml # 可视化展示

node-exporter.yml # 采集节点资源,通过DaemonSet方式部署,并声明让Prometheus收集 kube-state-metrics.yaml # 采集K8s资源,并声明让Prometheus收集

alertmanager-configmap.yaml # 配置文件,配置发件人和收件人

alertmanager-deployment.yaml # 部署Alertmanager告警组件

使用ConfigMaps管理应用配置

当使用Deployment管理和部署应用程序时,用户可以方便了对应用进行扩容或者缩容,从而产生多个Pod实例。为了能够统一管理这些Pod的配置信息,在Kubernetes中可以使用ConfigMaps资源定义和管理这些配置,并且通过环境变量或者文件系统挂载的方式让容器使用这些配置。

- 这里将使用ConfigMaps管理Prometheus的配置文件,创建prometheus-config.yml文件,并写入以下内容:

apiVersion: v1

kind: ConfigMap

metadata:

name: prometheus-config

namespace: monitor

data:

prometheus.yml: |

rule_files: # 引入报警规则文件

- /etc/config/rules/*.rules # 引入定义的警报规则

scrape_configs:

- job_name: prometheus

static_configs:

- targets:

- localhost:9090

# 创建监控任务kubernetes-apiservers,这里指定了服务发现模式为endpoints。Promtheus会查找当前集群中所有的endpoints配置,并通过relabel进行判断是否为apiserver对应的访问地址:

- job_name: kubernetes-apiservers

kubernetes_sd_configs:

- role: endpoints

relabel_configs:

- action: keep

regex: default;kubernetes;https

source_labels:

- __meta_kubernetes_namespace

- __meta_kubernetes_service_name

- __meta_kubernetes_endpoint_port_name

scheme: https

tls_config:

ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt

insecure_skip_verify: true

bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token

#在relabel_configs配置中第一步用于判断当前endpoints是否为kube-apiserver对用的地址。第二步,替换监控采集地址到kubernetes.default.svc:443即可。重新加载配置文件,重建Promthues实例,得到以下结果

- job_name: kubernetes-nodes-kubelet

kubernetes_sd_configs:

- role: node # 发现集群中的节点

relabel_configs:

# 将标签(.*)作为新标签名,原有值不变

- action: labelmap

regex: __meta_kubernetes_node_label_(.+)

scheme: https

tls_config:

ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt

insecure_skip_verify: true

bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token

# 各节点的kubelet组件中除了包含自身的监控指标信息以外,kubelet组件还内置了对cAdvisor的支持。cAdvisor能够获取当前节点上运行的所有容器的资源使用情况,通过访问kubelet的/metrics/cadvisor地址可以获取到cadvisor的监控指标,因此和获取kubelet监控指标类似,这里同样通过node模式自动发现所有的kubelet信息,并通过适当的relabel过程,修改监控采集任务的配置。 与采集kubelet自身监控指标相似,这里也有两种方式采集cadvisor中的监控指标:

# 直接访问kubelet的/metrics/cadvisor地址,需要跳过ca证书认证:

- job_name: kubernetes-nodes-cadvisor

kubernetes_sd_configs:

- role: node

relabel_configs:

# 将标签(.*)作为新标签名,原有值不变

- action: labelmap

regex: __meta_kubernetes_node_label_(.+)

# 实际访问指标接口 https://NodeIP:10250/metrics/cadvisor,这里替换默认指标URL路径

- target_label: __metrics_path__

replacement: /metrics/cadvisor

scheme: https

tls_config:

ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt

insecure_skip_verify: true

bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token

# 或者insecure_skip_verify: true 语句去掉,通过api-server提供的代理地址访问kubelet的/metrics/cadvisor地址

#添加 - target_label: __address__

# replacement: kubernetes.monitor.svc:443

#将这个修改为api入口 replacement: /aip/v1/nodes/${1}/proxy/metrics/cadvisor

- job_name: kubernetes-service-endpoints

kubernetes_sd_configs:

- role: endpoints # 从Service列表中的Endpoint发现Pod为目标

relabel_configs:

# Service没配置注解prometheus.io/scrape的不采集

- action: keep

regex: true

source_labels:

- __meta_kubernetes_service_annotation_prometheus_io_scrape

# 重命名采集目标协议

- action: replace

regex: (https?)

source_labels:

- __meta_kubernetes_service_annotation_prometheus_io_scheme

target_label: __scheme__

# 重命名采集目标指标URL路径

- action: replace

regex: (.+)

source_labels:

- __meta_kubernetes_service_annotation_prometheus_io_path

target_label: __metrics_path__

# 重命名采集目标地址

- action: replace

regex: ([^:]+)(?::\d+)?;(\d+)

replacement: $1:$2

source_labels:

- __address__

- __meta_kubernetes_service_annotation_prometheus_io_port

target_label: __address__

# 将K8s标签(.*)作为新标签名,原有值不变

- action: labelmap

regex: __meta_kubernetes_service_label_(.+)

# 生成命名空间标签

- action: replace

source_labels:

- __meta_kubernetes_namespace

target_label: kubernetes_namespace

# 生成Service名称标签

- action: replace

source_labels:

- __meta_kubernetes_service_name

target_label: kubernetes_name

# 为Prometheus创建监控采集任务kubernetes-pods

- job_name: kubernetes-pods

kubernetes_sd_configs:

- role: pod # 发现所有Pod为目标

# 重命名采集目标协议

relabel_configs:

- action: keep

regex: true

source_labels:

- __meta_kubernetes_pod_annotation_prometheus_io_scrape

# 重命名采集目标指标URL路径

- action: replace

regex: (.+)

source_labels:

- __meta_kubernetes_pod_annotation_prometheus_io_path

target_label: __metrics_path__

# 重命名采集目标地址

- action: replace

regex: ([^:]+)(?::\d+)?;(\d+)

replacement: $1:$2

source_labels:

- __address__

- __meta_kubernetes_pod_annotation_prometheus_io_port

target_label: __address__

# 将K8s标签(.*)作为新标签名,原有值不变

- action: labelmap

regex: __meta_kubernetes_pod_label_(.+)

# 生成命名空间标签

- action: replace

source_labels:

- __meta_kubernetes_namespace

target_label: kubernetes_namespace

# 生成Service名称标签

- action: replace

source_labels:

- __meta_kubernetes_pod_name

target_label: kubernetes_pod_name

# 通过以上relabel过程实现对Pod实例的过滤,以及采集任务地址替换,从而实现对特定Pod实例监控指标的采集。需要说明的是kubernetes-pods并不是只针对Node Exporter而言,对于用户任意部署的Pod实例,只要其提供了对Prometheus的支持,用户都可以通过为Pod添加注解的形式为其添加监控指标采集的支持。

alerting: # 在底部添加alerting配置。配置Prometheus与Alertmanager通信

alertmanagers:

- static_configs:

- targets: ["alertmanager:80"]

定义Prometheus警报规则并引入配置

- 这里将使用ConfigMaps管理Prometheus的告警规则,创建prometheus-rules.yml文件,并写入以下内容:

apiVersion: v1

kind: ConfigMap

metadata:

name: prometheus-rules

namespace: monitor

data:

general.rules: |

groups:

- name: general.rules # 定义这组告警的组名

rules:

- alert: InstanceDown # 警报名 可理解为警告的标题

expr: up == 0 # 判断某值的规则 up{instance="192.168.23.11:9090"}

for: 1m # 上面规则持续5分钟为0进行告警,5分钟内触发是pending状态

labels: # 定义警报标签

severity: error # 定义警报等级为 error

annotations: # 备注描述

summary: "Instance {{ $labels.instance }} 停止工作" # 警告中呈现的具体信息可以写在这里

description: "{{ $labels.instance }} job {{ $labels.job }} 已经停止5分钟以上."

node.rules: |

groups:

- name: node.rules

rules:

- alert: NodeFilesystemUsage

expr: |

100 - (node_filesystem_free{fstype=~"ext4|xfs"} /

node_filesystem_size{fstype=~"ext4|xfs"} * 100) > 80

for: 1m

labels:

severity: warning

annotations:

summary: "Instance {{ $labels.instance }} : {{ $labels.mountpoint }} 分区使用率过高"

description: "{{ $labels.instance }}: {{ $labels.mountpoint }} 分区使用大于80% (当前值: {{ $value }})"

- alert: NodeMemoryUsage

expr: |

100 - (node_memory_MemFree+node_memory_Cached+node_memory_Buffers) /

node_memory_MemTotal * 100 > 80

for: 1m

labels:

severity: warning

annotations:

summary: "Instance {{ $labels.instance }} 内存使用率过高"

description: "{{ $labels.instance }}内存使用大于80% (当前值: {{ $value }})"

- alert: NodeCPUUsage

expr: |

100 - (avg(irate(node_cpu_seconds_total{mode="idle"}[5m])) by (instance) * 100) > 60

for: 1m

labels:

severity: warning

annotations:

summary: "Instance {{ $labels.instance }} CPU使用率过高"

description: "{{ $labels.instance }}CPU使用大于60% (当前值: {{ $value }})"

- alert: KubeNodeNotReady

expr: |

kube_node_status_condition{condition="Ready",status="true"} == 0

for: 1m

labels:

severity: error

annotations:

message: '{{ $labels.node }} 已经有10多分钟没有准备好了.'

pod.rules: |

groups:

- name: pod.rules

rules:

- alert: PodCPUUsage

expr: |

sum(rate(container_cpu_usage_seconds_total{image!=""}[1m]) * 100) by (pod, namespace) > 80

for: 5m

labels:

severity: warning

annotations:

summary: "命名空间: {{ $labels.namespace }} | Pod名称: {{ $labels.pod }} CPU使用大于80% (当前值: {{ $value }})"

- alert: PodMemoryUsage

expr: |

sum(container_memory_rss{image!=""}) by(pod, namespace) /

sum(container_spec_memory_limit_bytes{image!=""}) by(pod, namespace) * 100 != +inf > 80

for: 5m

labels:

severity: warning

annotations:

summary: "命名空间: {{ $labels.namespace }} | Pod名称: {{ $labels.pod }} 内存使用大于80% (当前值: {{ $value }})"

- alert: PodNetworkReceive

expr: |

sum(rate(container_network_receive_bytes_total{image!="",name=~"^k8s_.*"}[5m]) /1000) by (pod,namespace) > 30000

for: 5m

labels:

severity: warning

annotations:

summary: "命名空间: {{ $labels.namespace }} | Pod名称: {{ $labels.pod }} 入口流量大于30MB/s (当前值: {{ $value }}K/s)"

- alert: PodNetworkTransmit

expr: |

sum(rate(container_network_transmit_bytes_total{image!="",name=~"^k8s_.*"}[5m]) /1000) by (pod,namespace) > 30000

for: 5m

labels:

severity: warning

annotations:

summary: "命名空间: {{ $labels.namespace }} | Pod名称: {{ $labels.pod }} 出口流量大于30MB/s (当前值: {{ $value }}/K/s)"

- alert: PodRestart

expr: |

sum(changes(kube_pod_container_status_restarts_total[1m])) by (pod,namespace) > 0

for: 1m

labels:

severity: warning

annotations:

summary: "命名空间: {{ $labels.namespace }} | Pod名称: {{ $labels.pod }} Pod重启 (当前值: {{ $value }})"

- alert: PodFailed

expr: |

sum(kube_pod_status_phase{phase="Failed"}) by (pod,namespace) > 0

for: 5s

labels:

severity: error

annotations:

summary: "命名空间: {{ $labels.namespace }} | Pod名称: {{ $labels.pod }} Pod状态Failed (当前值: {{ $value }})"

- alert: PodPending

expr: |

sum(kube_pod_status_phase{phase="Pending"}) by (pod,namespace) > 0

for: 1m

labels:

severity: error

annotations:

summary: "命名空间: {{ $labels.namespace }} | Pod名称: {{ $labels.pod }} Pod状态Pending (当前值: {{ $value }})"

使用Deployment部署Prometheus

ConfigMap资源创建成功后,我们就可以通过Volume挂载的方式,将Prometheus的配置文件挂载到容器中。 这里我们通过Deployment部署Prometheus Server实例,创建prometheus-deployment.yml文件,并写入以下内容:

apiVersion: apps/v1

kind: Deployment

metadata:

name: prometheus

namespace: monitor

labels:

k8s-app: prometheus

spec:

replicas: 1

selector:

matchLabels:

k8s-app: prometheus

template:

metadata:

labels:

k8s-app: prometheus

spec:

serviceAccountName: prometheus

initContainers:

- name: "init-chown-data"

image: "busybox:latest"

imagePullPolicy: "IfNotPresent"

command: ["chown", "-R", "65534:65534", "/data"]

volumeMounts:

- name: prometheus-data

mountPath: /data

subPath: ""

containers:

- name: prometheus-server-configmap-reload

image: "jimmidyson/configmap-reload:v0.1"

imagePullPolicy: "IfNotPresent"

args:

- --volume-dir=/etc/config

- --webhook-url=http://localhost:9090/-/reload

volumeMounts:

- name: config-volume

mountPath: /etc/config

readOnly: true

resources:

limits:

cpu: 10m

memory: 10Mi

requests:

cpu: 10m

memory: 10Mi

- name: prometheus-server

image: "prom/prometheus:v2.20.0"

imagePullPolicy: "IfNotPresent"

args:

- --config.file=/etc/config/prometheus.yml

- --storage.tsdb.path=/data

- --web.console.libraries=/etc/prometheus/console_libraries

- --web.console.templates=/etc/prometheus/consoles

- --web.enable-lifecycle

ports:

- containerPort: 9090

readinessProbe:

httpGet:

path: /-/ready

port: 9090

initialDelaySeconds: 30

timeoutSeconds: 30

livenessProbe:

httpGet:

path: /-/healthy

port: 9090

initialDelaySeconds: 30

timeoutSeconds: 30

resources:

limits:

cpu: 500m

memory: 1500Mi

requests:

cpu: 200m

memory: 1000Mi

volumeMounts:

- name: config-volume

mountPath: /etc/config

- name: prometheus-data

mountPath: /data

subPath: ""

- name: prometheus-rules

mountPath: /etc/config/rules

volumes:

- name: config-volume

configMap:

name: prometheus-config

- name: prometheus-rules

configMap:

name: prometheus-rules

- name: prometheus-data

persistentVolumeClaim:

claimName: prometheus

---

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: prometheus

namespace: monitor

spec:

storageClassName: "managed-nfs-storage"

accessModes:

- ReadWriteMany

resources:

requests:

storage: 10Gi

---

apiVersion: v1

kind: Service

metadata:

name: prometheus

namespace: monitor

spec:

type: NodePort

ports:

- name: http

port: 9090

protocol: TCP

targetPort: 9090

nodePort: 30090

selector:

k8s-app: prometheus

---

apiVersion: v1

kind: ServiceAccount

metadata:

name: prometheus

namespace: monitor

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

name: prometheus

rules:

- apiGroups:

- ""

resources:

- nodes

- nodes/metrics

- services

- endpoints

- pods

verbs:

- get

- list

- watch

- apiGroups:

- ""

resources:

- configmaps

verbs:

- get

- nonResourceURLs:

- "/metrics"

verbs:

- get

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: prometheus

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: prometheus

subjects:

- kind: ServiceAccount

name: prometheus

namespace: monitor

该文件中分别定义了Service、Deployment、PVC动态存储、ServiceAccount、ClusterRole、ClusterRoleBinding,Service类型为NodePort,这样我们可以通过虚拟机IP和端口访问到Prometheus实例。为了能够让Prometheus实例实现持久化存储,定义了PersistentVolumeClaim动态创建PV,将数据挂在到PV中。

为了能够让Prometheus能够访问收到认证保护的Kubernetes API,我们首先需要做的是,对Prometheus进行访问授权。在Kubernetes中主要使用基于角色的访问控制模型(Role-Based Access Control),用于管理Kubernetes下资源访问权限。首先我们需要在Kubernetes下定义角色(ClusterRole),并且为该角色赋予相应的访问权限。同时创建Prometheus所使用的账号(ServiceAccount),最后则是将该账号与角色进行绑定(ClusterRoleBinding)。其中需要注意的是ClusterRole是全局的,不需要指定命名空间。而ServiceAccount是属于特定命名空间的资源。

在完成角色权限以及用户的绑定之后,就可以指定Prometheus使用特定的ServiceAccount创建Pod实例。

使用NodeExporter监控集群资源使用情况

为了能够采集集群中各个节点的资源使用情况,我们需要在各节点中部署一个Node Exporter实例。在本章的“部署Prometheus”小节,我们使用了Kubernetes内置的控制器之一Deployment。Deployment能够确保Prometheus的Pod能够按照预期的状态在集群中运行,而Pod实例可能随机运行在任意节点上。而与Prometheus的部署不同的是,对于Node Exporter而言每个节点只需要运行一个唯一的实例,此时,就需要使用Kubernetes的另外一种控制器Daemonset。顾名思义,Daemonset的管理方式类似于操作系统中的守护进程。Daemonset会确保在集群中所有(也可以指定)节点上运行一个唯一的Pod实例。

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: node-exporter

namespace: monitor

labels:

k8s-app: node-exporter

spec:

selector:

matchLabels:

k8s-app: node-exporter

version: v0.15.2

template:

metadata:

labels:

k8s-app: node-exporter

version: v0.15.2

spec:

containers:

- name: prometheus-node-exporter

image: "prom/node-exporter:v0.15.2"

imagePullPolicy: "IfNotPresent"

args:

- --path.procfs=/host/proc

- --path.sysfs=/host/sys

ports:

- name: metrics

containerPort: 9100

hostPort: 9100

volumeMounts:

- name: proc

mountPath: /host/proc

readOnly: true

- name: sys

mountPath: /host/sys

readOnly: true

resources:

limits:

cpu: 10m

memory: 50Mi

requests:

cpu: 10m

memory: 50Mi

hostNetwork: true

hostPID: true

hostIPC: true

volumes:

- name: proc

hostPath:

path: /proc

- name: sys

hostPath:

path: /sys

- name: rootfs

hostPath:

path: /

- name: dev

hostPath:

path: /dev

---

apiVersion: v1

kind: Service

metadata:

name: node-exporter

namespace: monitor

annotations:

prometheus.io/scrape: "true"

spec:

clusterIP: None

ports:

- name: metrics

port: 9100

protocol: TCP

targetPort: 9100

selector:

k8s-app: node-exporter

由于Node Exporter需要能够访问宿主机,因此这里指定了hostNetwork和hostPID,让Pod实例能够以主机网络以及系统进程的形式运行。同时YAML文件中也创建了NodeExporter相应的Service。这样通过Service就可以访问到对应的NodeExporter实例。

使用Deployment部署kube-state-metrics采集k8s中各种资源对象的状态信息,具体方案如官网示例:https://github.com/kubernetes/kube-state-metrics/tree/main/examples/standard

apiVersion: apps/v1

kind: Deployment

metadata:

name: kube-state-metrics

namespace: monitor

labels:

k8s-app: kube-state-metrics

spec:

selector:

matchLabels:

k8s-app: kube-state-metrics

version: v1.3.0

replicas: 1

template:

metadata:

labels:

k8s-app: kube-state-metrics

version: v1.3.0

spec:

serviceAccountName: kube-state-metrics

containers:

- name: kube-state-metrics

image: lizhenliang/kube-state-metrics:v1.8.0

ports:

- name: http-metrics

containerPort: 8080

- name: telemetry

containerPort: 8081

readinessProbe:

httpGet:

path: /healthz

port: 8080

initialDelaySeconds: 5

timeoutSeconds: 5

- name: addon-resizer

image: lizhenliang/addon-resizer:1.8.6

resources:

limits:

cpu: 100m

memory: 30Mi

requests:

cpu: 100m

memory: 30Mi

env:

- name: MY_POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: MY_POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

volumeMounts:

- name: config-volume

mountPath: /etc/config

command:

- /pod_nanny

- --config-dir=/etc/config

- --container=kube-state-metrics

- --cpu=100m

- --extra-cpu=1m

- --memory=100Mi

- --extra-memory=2Mi

- --threshold=5

- --deployment=kube-state-metrics

volumes:

- name: config-volume

configMap:

name: kube-state-metrics-config

---

apiVersion: v1

kind: ConfigMap

metadata:

name: kube-state-metrics-config

namespace: monitor

data:

NannyConfiguration: |-

apiVersion: nannyconfig/v1alpha1

kind: NannyConfiguration

---

apiVersion: v1

kind: Service

metadata:

name: kube-state-metrics

namespace: monitor

annotations:

# 为了区分哪些Pod实例是可以供Prometheus进行采集的

prometheus.io/scrape: 'true' # 自动发现集群中的 Service

spec:

ports:

- name: http-metrics

port: 8080

targetPort: http-metrics

protocol: TCP

- name: telemetry

port: 8081

targetPort: telemetry

protocol: TCP

selector:

k8s-app: kube-state-metrics

---

apiVersion: v1

kind: ServiceAccount

metadata:

name: kube-state-metrics

namespace: monitor

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

name: kube-state-metrics

rules:

- apiGroups: [""]

resources:

- configmaps

- secrets

- nodes

- pods

- services

- resourcequotas

- replicationcontrollers

- limitranges

- persistentvolumeclaims

- persistentvolumes

- namespaces

- endpoints

verbs: ["list", "watch"]

- apiGroups: ["apps"]

resources:

- statefulsets

- daemonsets

- deployments

- replicasets

verbs: ["list", "watch"]

- apiGroups: ["batch"]

resources:

- cronjobs

- jobs

verbs: ["list", "watch"]

- apiGroups: ["autoscaling"]

resources:

- horizontalpodautoscalers

verbs: ["list", "watch"]

- apiGroups: ["networking.k8s.io", "extensions"]

resources:

- ingresses

verbs: ["list", "watch"]

- apiGroups: ["storage.k8s.io"]

resources:

- storageclasses

verbs: ["list", "watch"]

- apiGroups: ["certificates.k8s.io"]

resources:

- certificatesigningrequests

verbs: ["list", "watch"]

- apiGroups: ["policy"]

resources:

- poddisruptionbudgets

verbs: ["list", "watch"]

---

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:

name: kube-state-metrics-resizer

namespace: monitor

rules:

- apiGroups: [""]

resources:

- pods

verbs: ["get"]

- apiGroups: ["extensions","apps"]

resources:

- deployments

resourceNames: ["kube-state-metrics"]

verbs: ["get", "update"]

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: kube-state-metrics

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: kube-state-metrics

subjects:

- kind: ServiceAccount

name: kube-state-metrics

namespace: monitor

---

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

name: kube-state-metrics

namespace: monitor

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: kube-state-metrics-resizer

subjects:

- kind: ServiceAccount

name: kube-state-metrics

namespace: monitor

AlertManager

AlertManager处理由客户端应用程序(如Prometheus服务器)发送的警报。

它负责重复数据消除、分组,并将它们路由到正确的接收器集成(如电子邮件、PagerDuty或monitorGenie)。

它还负责消除和抑制警报。

通过翻译官方文档可以了解到,AlertManager是负责为Prometheus(本身不会发送警报)发送警报的工具.

AlertManager不是简单发送警报,可以消除重复警报,分组,抑制警报功能.并支持多接收器.

Prometheus->触发定义的警报规则->AlertManager->发送警报到指定通知渠道.

为了能让Prometheus发送警报,我们需要:

- 搭建AlertManager服务.

- 定义AlertManager通知配置.

- 定义Prometheus警报规则并引入.

- 测试警报.

- 定义通知模板.

定义AlertManager通知配置

apiVersion: v1

kind: ConfigMap

metadata:

name: alertmanager-config

namespace: monitor

data:

alertmanager.yml: |

global:

resolve_timeout: 5m # 处理超时时间,默认为5min

smtp_smarthost: 'smtp.163.com:25' # 邮箱smtp服务器代理

smtp_from: 'baojingtongzhi@163.com' # 发送邮箱名称

smtp_auth_username: 'baojingtongzhi@163.com' # 邮箱帐户

smtp_auth_password: 'NCKBJTSASSXMRQBM' # 邮箱授权码(注意是授权码,不知道自己查一下)

# 定义警报接收者信息

receivers:

- name: default-receiver # 警报

email_configs: # 邮箱配置

- to: "zhenliang369@163.com" # 接收警报的email配置

route:

group_interval: 1m # 重复发送警报的周期

group_wait: 10s # 组内等待时间,触发阈值后,XXs后发送本组警报

receiver: default-receiver # 发送警报的接收者的名称

repeat_interval: 1m # 每个组之前间隔时间(group_by设定的值划分的组)

搭建Alertmanager服务

apiVersion: apps/v1

kind: Deployment

metadata:

name: alertmanager

namespace: monitor

spec:

replicas: 1

selector:

matchLabels:

k8s-app: alertmanager

version: v0.14.0

template:

metadata:

labels:

k8s-app: alertmanager

version: v0.14.0

spec:

containers:

- name: prometheus-alertmanager

image: "prom/alertmanager:v0.14.0"

imagePullPolicy: "IfNotPresent"

args:

- --config.file=/etc/config/alertmanager.yml

- --storage.path=/data

- --web.external-url=/

ports:

- containerPort: 9093

readinessProbe:

httpGet:

path: /#/status

port: 9093

initialDelaySeconds: 30

timeoutSeconds: 30

volumeMounts:

- name: config-volume

mountPath: /etc/config

- name: storage-volume

mountPath: "/data"

subPath: ""

resources:

limits:

cpu: 10m

memory: 50Mi

requests:

cpu: 10m

memory: 50Mi

- name: prometheus-alertmanager-configmap-reload

image: "jimmidyson/configmap-reload:v0.1"

imagePullPolicy: "IfNotPresent"

args:

- --volume-dir=/etc/config

- --webhook-url=http://localhost:9093/-/reload

volumeMounts:

- name: config-volume

mountPath: /etc/config

readOnly: true

resources:

limits:

cpu: 10m

memory: 10Mi

requests:

cpu: 10m

memory: 10Mi

volumes:

- name: config-volume

configMap:

name: alertmanager-config

- name: storage-volume

persistentVolumeClaim:

claimName: alertmanager

---

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: alertmanager

namespace: monitor

spec:

storageClassName: managed-nfs-storage

accessModes:

- ReadWriteOnce

resources:

requests:

storage: "2Gi"

---

apiVersion: v1

kind: Service

metadata:

name: alertmanager

namespace: monitor

spec:

type: "NodePort"

ports:

- name: http

port: 80

protocol: TCP

targetPort: 9093

nodePort: 30093

selector:

k8s-app: alertmanager

访问IP:30093可以查看AlertManager的web界面(类似prometheus的web界面).

可视化视图工具grafana

参考官网示例:https://grafana.com/docs/grafana/latest/setup-grafana/installation/kubernetes/

n apiVersion: apps/v1

kind: Deployment

metadata:

name: grafana

namespace: monitor

spec:

replicas: 1

selector:

matchLabels:

app: grafana

template:

metadata:

labels:

app: grafana

spec:

containers:

- name: grafana

image: grafana/grafana:7.1.0

ports:

- containerPort: 3000

protocol: TCP

resources:

limits:

cpu: 100m

memory: 256Mi

requests:

cpu: 100m

memory: 256Mi

volumeMounts:

- name: grafana-data

mountPath: /var/lib/grafana

subPath: grafana

securityContext:

fsGroup: 472

runAsUser: 472

volumes:

- name: grafana-data

persistentVolumeClaim:

claimName: grafana

---

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: grafana

namespace: monitor

spec:

storageClassName: "managed-nfs-storage"

accessModes:

- ReadWriteMany

resources:

requests:

storage: 5Gi

---

apiVersion: v1

kind: Service

metadata:

name: grafana

namespace: monitor

spec:

type: NodePort

ports:

- port : 80

targetPort: 3000

nodePort: 30030

selector:

app: grafana