Problem description

The code is shown below. It is very simple: it only lists the paths under a directory.

DataLakeServiceClient dataLakeServiceClient = new DataLakeServiceClientBuilder().endpoint(blob).sasToken(sasToken).buildClient();

DataLakeFileSystemClient testFs = dataLakeServiceClient.getFileSystemClient("test");

DataLakeDirectoryClient testDir = testFs.getDirectoryClient("test");

testDir.listPaths().forEach(s -> System.out.println(s.getName()));

Error message

Exception in thread "main" com.azure.storage.file.datalake.models.DataLakeStorageException: If you are using a StorageSharedKeyCredential, and the server returned an error message that says 'Signature did not match', you can compare the string to sign with the one generated by the SDK. To log the string to sign, pass in the context key value pair 'Azure-Storage-Log-String-To-Sign': true to the appropriate method call.

If you are using a SAS token, and the server returned an error message that says 'Signature did not match', you can compare the string to sign with the one generated by the SDK. To log the string to sign, pass in the context key value pair 'Azure-Storage-Log-String-To-Sign': true to the appropriate generateSas method call.

Please remember to disable 'Azure-Storage-Log-String-To-Sign' before going to production as this string can potentially contain PII.

Status code 403, "{"error":{"code":"AuthenticationFailed","message":"Server failed to authenticate the request. Make sure the value of Authorization header is formed correctly including the signature.\nRequestId:b44da7b7-a01f-002d-70e3-a12a79000000\nTime:2023-06-18T12:48:32.9335073Z","detail":{"AuthenticationErrorDetail":"Signature fields not well formed."}}}"

at java.base/java.lang.invoke.MethodHandle.invokeWithArguments(MethodHandle.java:732)

at com.azure.core.implementation.http.rest.ResponseExceptionConstructorCache.invoke(ResponseExceptionConstructorCache.java:56)

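Investigation

Checking the SAS token

The SAS token was confirmed to be valid.

Checking the SDK and the storage account settings

The investigation found that the storage account does not have HNS (hierarchical namespace, i.e. ADLS Gen2) enabled, whereas the class DataLakeServiceClient and the package Azure File Data Lake client library for Java are both designed for ADLS Gen2. The SDK documentation also states explicitly that storage accounts without HNS enabled are not supported.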

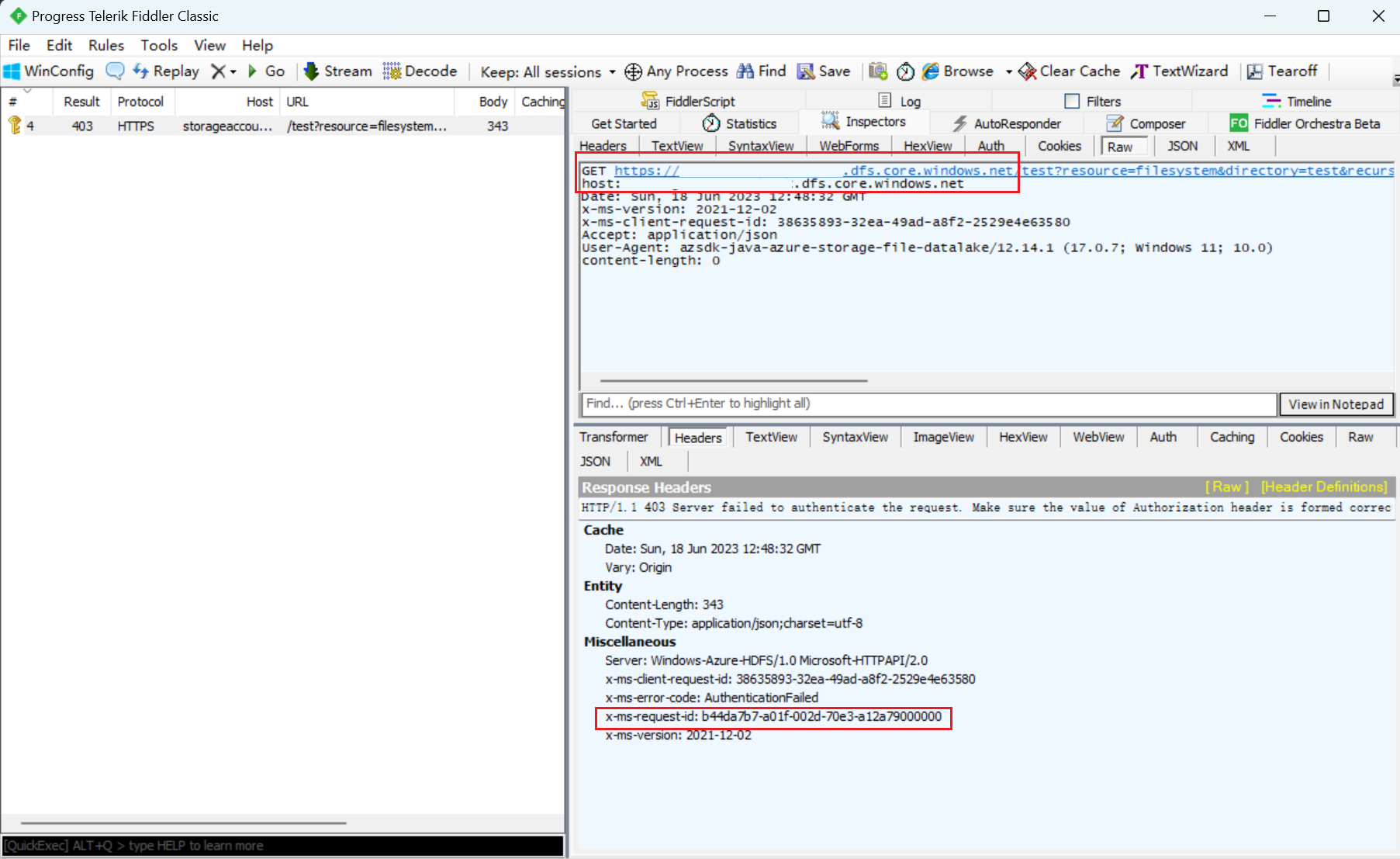

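Capturing the network requests

A cloud service SDK ultimately translates code into REST API calls, and ADLS and ordinary Blob storage use different REST APIs. Capturing the actual HTTP request is therefore enough to pinpoint the problem.

The capture shows that although the endpoint in the code is configured as https://account-name.blob.core.windows.net, the request the SDK actually sent went to account-name.dfs.core.windows.net.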
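To make that comparison concrete, here is a minimal sketch that classifies a URL by its host suffix, so the configured endpoint and the captured request can be checked against each other. It uses only the JDK. The class name EndpointCheck, the method serviceOf, and the path and query string of the captured URL are hypothetical, chosen for illustration; the capture described above only establishes the host. It is not how the SDK decides where to send requests.

```java
import java.net.URI;

public class EndpointCheck {

    /**
     * Returns "dfs", "blob", or "other" depending on the host suffix of the URL.
     * Hypothetical helper for reading a capture; not part of the Azure SDK.
     */
    public static String serviceOf(String url) {
        String host = URI.create(url).getHost();
        if (host == null) {
            return "other";
        }
        if (host.endsWith(".dfs.core.windows.net")) {
            return "dfs";   // ADLS Gen2 (Data Lake) REST API
        }
        if (host.endsWith(".blob.core.windows.net")) {
            return "blob";  // Blob REST API
        }
        return "other";
    }

    public static void main(String[] args) {
        // Endpoint as configured in the code under investigation.
        String configured = "https://account-name.blob.core.windows.net";
        // Assumed shape of the captured request; only the host comes from the capture.
        String captured = "https://account-name.dfs.core.windows.net/test?resource=filesystem&directory=test";

        String configuredService = serviceOf(configured);
        String capturedService = serviceOf(captured);
        System.out.println("configured endpoint targets: " + configuredService);
        System.out.println("captured request targets:    " + capturedService);

        if (!configuredService.equals(capturedService)) {
            System.out.println("Mismatch: the request went to a different service than the one configured.");
        }
    }
}
```

A mismatch like this suggests the client library in use does not match the kind of storage account being addressed.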

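How to capture JVM traffic

See the official Fiddler documentation: Configure a Java Application to Use Fiddler

Install the Fiddler certificate on the local machine, then configure the proxy directly in Java code.

Code that configures the JVM to use Fiddler as its proxy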

// Set fiddler as proxy server for JVM

System.setProperty("http.proxyHost", "127.0.0.1");

System.setProperty("https.proxyHost", "127.0.0.1");

System.setProperty("http.proxyPort", "8888");

System.setProperty("https.proxyPort", "8888");

// set trusted certificate store of JVM to Windows-ROOT

System.setProperty("javax.net.ssl.trustStoreType","Windows-ROOT");

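Conclusion

The error message does not reflect the actual cause. However, since the customer's use case is one the documentation explicitly lists as unsupported, this is to be expected. The fix is to use the correct classes; see the official sample on GitHub: Azure Blob Java SDK - ListContainersExample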