第二次作业

作业①:

要求:

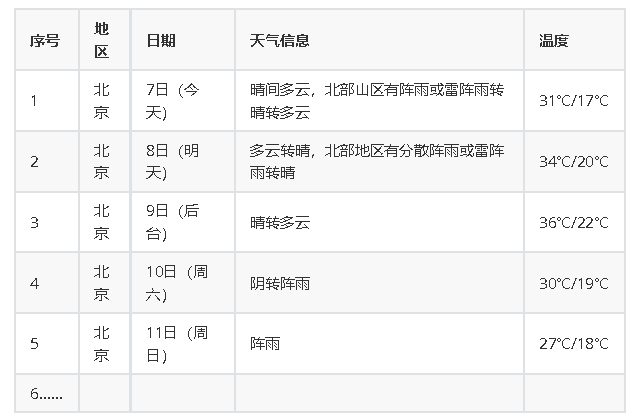

在中国气象网(http://www.weather.com.cn)给定城市集的7日天气预报,并保存在数据库。

输出信息:

Gitee文件夹链接:

https://gitee.com/yangxxin/crawl_project/tree/master/实践作业代码/第二次作业/题目一

代码如下:

from bs4 import BeautifulSoup

from bs4 import UnicodeDammit

import urllib.request

import sqlite3

class WeatherDB:

def openDB(self):

self.con=sqlite3.connect("weathers.db")

self.cursor=self.con.cursor()

try:

self.cursor.execute("create table weathers (wCity varchar(16),wDate varchar(16),wWeather varchar(64),wTemp varchar(32),constraint pk_weather primary key (wCity,wDate))")

except:

self.cursor.execute("delete from weathers")

def closeDB(self):

self.con.commit()

self.con.close()

def insert(self, city, date, weather, temp):

try:

self.cursor.execute("insert into weathers (wCity,wDate,wWeather,wTemp) values (?,?,?,?)",

(city, date, weather, temp))

except Exception as err:

print(err)

def show(self):

self.cursor.execute("select * from weathers")

rows = self.cursor.fetchall()

print("%-16s%-16s%-32s%-16s" % ("city", "date", "weather", "temp"))

for row in rows:

print("%-16s%-16s%-32s%-16s" % (row[0], row[1], row[2], row[3]))

class WeatherForecast:

def __init__(self):

self.headers = {

"User-Agent": "Mozilla/5.0 (Windows; U; Windows NT 6.0 x64; en-US; rv:1.9pre) Gecko/2008072421 Minefield/3.0.2pre"}

self.cityCode = {"北京": "101010100", "上海": "101020100", "广州": "101280101", "深圳": "101280601"}

def forecastCity(self, city):

if city not in self.cityCode.keys():

print(city + " code cannot be found")

return

url = "http://www.weather.com.cn/weather/" + self.cityCode[city] + ".shtml"

try:

req = urllib.request.Request(url, headers=self.headers)

data = urllib.request.urlopen(req)

data = data.read()

dammit = UnicodeDammit(data, ["utf-8", "gbk"])

data = dammit.unicode_markup

soup = BeautifulSoup(data, "lxml")

lis = soup.select("ul[class='t clearfix'] li")

for li in lis:

try:

date=li.select('h1')[0].text

weather=li.select('p[class="wea"]')[0].text

temp=li.select('p[class="tem"] span')[0].text+"/"+li.select('p[class="tem"] i')[0].text

print(city,date,weather,temp)

self.db.insert(city,date,weather,temp)

except Exception as err:

print(err)

except Exception as err:

print(err)

def process(self, cities):

self.db = WeatherDB()

self.db.openDB()

for city in cities:

self.forecastCity(city)

self.db.show()

self.db.closeDB()

ws = WeatherForecast()

ws.process(["北京", "上海", "广州", "深圳"])

print("completed")

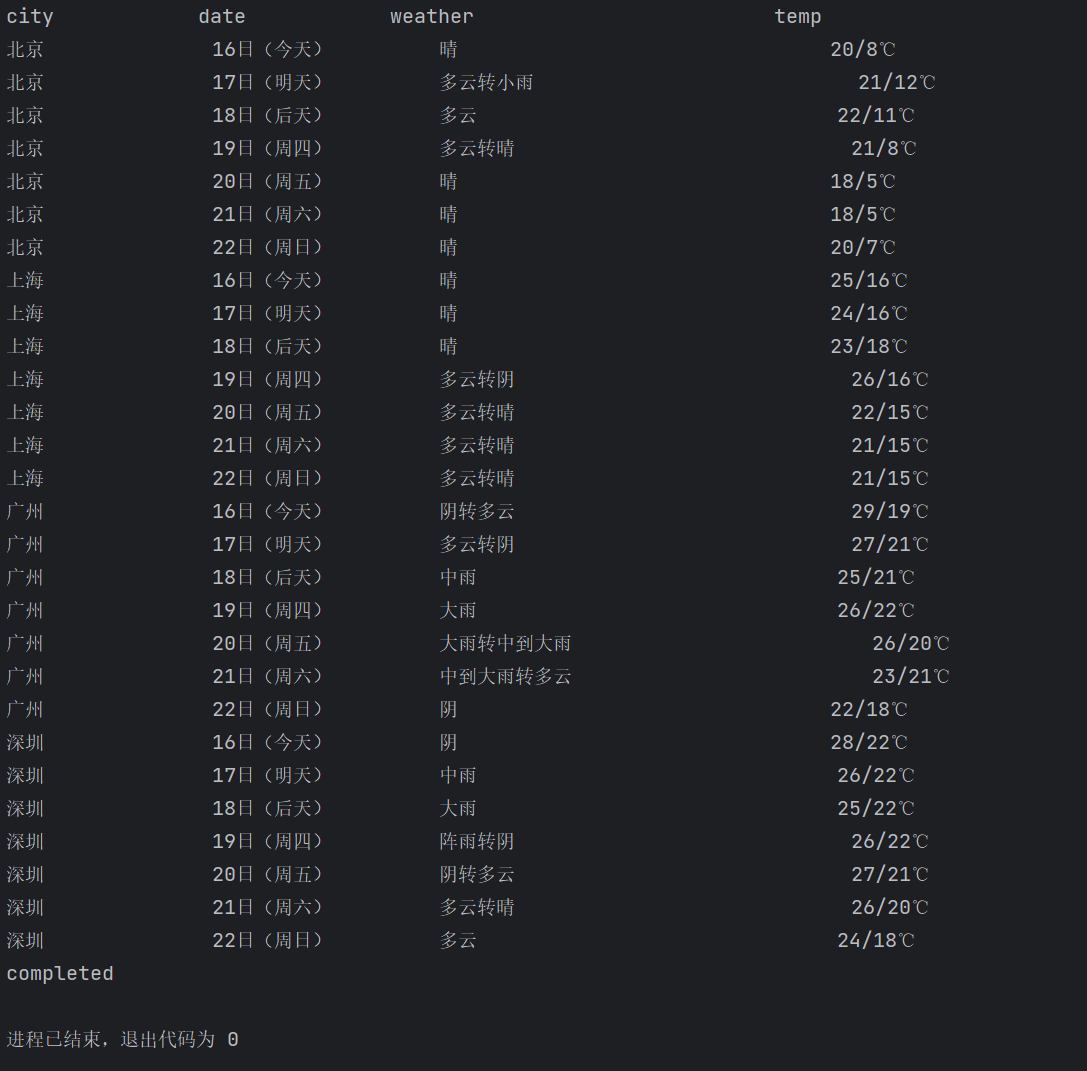

运行结果:

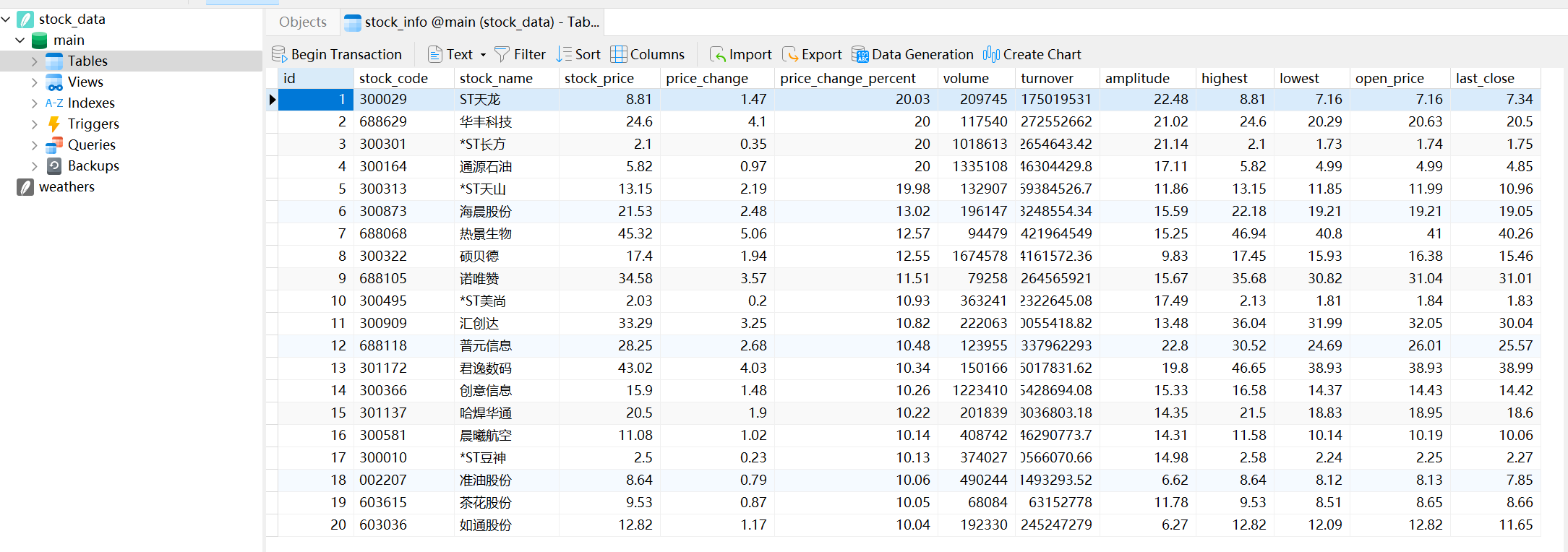

使用Navicat可视化数据库:

心得体会:

通过对实践例子的复现,对于bs库的使用有了更深的理解,并初步了解了如何用sqlite3将爬取数据存储到数据库中,并通过navicat可视化数据库。

作业②:

要求:

用requests和BeautifulSoup库方法定向爬取股票相关信息,并存储在数据库中。

候选网站:东方财富网:https://www.eastmoney.com/

新浪股票:http://finance.sina.com.cn/stock/

技巧:在谷歌浏览器中进入F12调试模式进行抓包,查找股票列表加载使用的url,并分析api返回的值,并根据所要求的参数可适当更改api的请求参数。根据URL可观察请求的参数f1、f2可获取不同的数值,根据情况可删减请求的参数。

参考链接:https://zhuanlan.zhihu.com/p/50099084

输出信息:

Gitee文件夹链接:

https://gitee.com/yangxxin/crawl_project/tree/master/实践作业代码/第二次作业/题目二

代码如下:

import requests

import re

import sqlite3

# 用get方法访问服务器并提取页面数据

def getHtml(page,cmd):

url = ("http://66.push2.eastmoney.com/api/qt/clist/get?cb=jQuery112409097606620255823_1696662149317&pn=1&pz=20&po=1&np="+str(page)+

"&ut=bd1d9ddb04089700cf9c27f6f7426281&fltt=2&invt=2&wbp2u=|0|0|0|web&"+cmd+

"&fields=f1,f2,f3,f4,f5,f6,f7,f8,f9,f10,f12,f13,f14,f15,f16,f17,f18,f20,f21,f23,f24,f25,f22,f11,f62,f128,f136,f115,f152&_=1696662149318")

r = requests.get(url)

pat = "\"diff\":\[(.*?)\]"

data = re.compile(pat, re.S).findall(r.text)

return data

def getOnePageStock(cmd, page):

# 提供的JSON数组

data = getHtml(page,cmd)

datas = data[0].split("},")

#分解每条股票

# 连接到SQLite数据库(如果不存在,则会创建一个新的数据库文件)

conn = sqlite3.connect('stock_data.db')

cursor = conn.cursor()

# 创建股票信息表

cursor.execute('''CREATE TABLE IF NOT EXISTS stock_info (

id INTEGER PRIMARY KEY,

stock_code TEXT,

stock_name TEXT,

stock_price REAL,

price_change REAL,

price_change_percent REAL,

volume INTEGER,

turnover REAL,

amplitude REAL,

highest REAL,

lowest REAL,

open_price REAL,

last_close REAL

)''')

# 解析JSON数组并将数据存储到数据库中

for item in datas:

# 使用字符串操作来提取键值对

stock_info = {}

pairs = item.split(',')

for pair in pairs:

key, value = pair.split(':')

key = key.strip('"')

value = value.strip('"')

stock_info[key] = value

# 提取需要的字段

stock_code = stock_info.get('f12', 'N/A')

stock_name = stock_info.get('f14', 'N/A')

stock_price = float(stock_info.get('f2', 0.0))

price_change_percent = float(stock_info.get('f3', 0.0))

price_change = float(stock_info.get('f4', 0.0))

volume = int(stock_info.get('f5', 0))

turnover = float(stock_info.get('f6', 0.0))

amplitude = float(stock_info.get('f7', 0.0))

highest = float(stock_info.get('f15', 0.0))

lowest = float(stock_info.get('f16', 0.0))

open_price = float(stock_info.get('f17', 0.0))

last_close = float(stock_info.get('f18', 0.0))

# 插入数据到数据库中

cursor.execute(

"INSERT INTO stock_info (stock_code, stock_name, stock_price, price_change_percent, price_change, volume, turnover, amplitude, highest, lowest, open_price, last_close) VALUES (?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?)",

(stock_code, stock_name, stock_price, price_change_percent, price_change, volume, turnover, amplitude, highest,

lowest, open_price, last_close))

conn.commit()

# 查询股票信息

cursor.execute("SELECT * FROM stock_info")

# 获取查询结果

stocks = cursor.fetchall()

# 获取查询结果的列名

columns = [desc[0] for desc in cursor.description]

# 打印列标签

print("\t".join(columns))

# 打印股票信息

for stock in stocks:

# 打印每行数据

print("\t".join(map(str, stock)))

# 关闭数据库连接

conn.close()

page = 1

getOnePageStock("fid=f3&fs=m:0+t:6,m:0+t:80,m:1+t:2,m:1+t:23,m:0+t:81+s:2048", page)

# cmd = {

# "沪深A股": "fid=f3&fs=m:0+t:6,m:0+t:80,m:1+t:2,m:1+t:23,m:0+t:81+s:2048",

# "上证A股": "fid=f3&fs=m:1+t:2,m:1+t:23"

# }

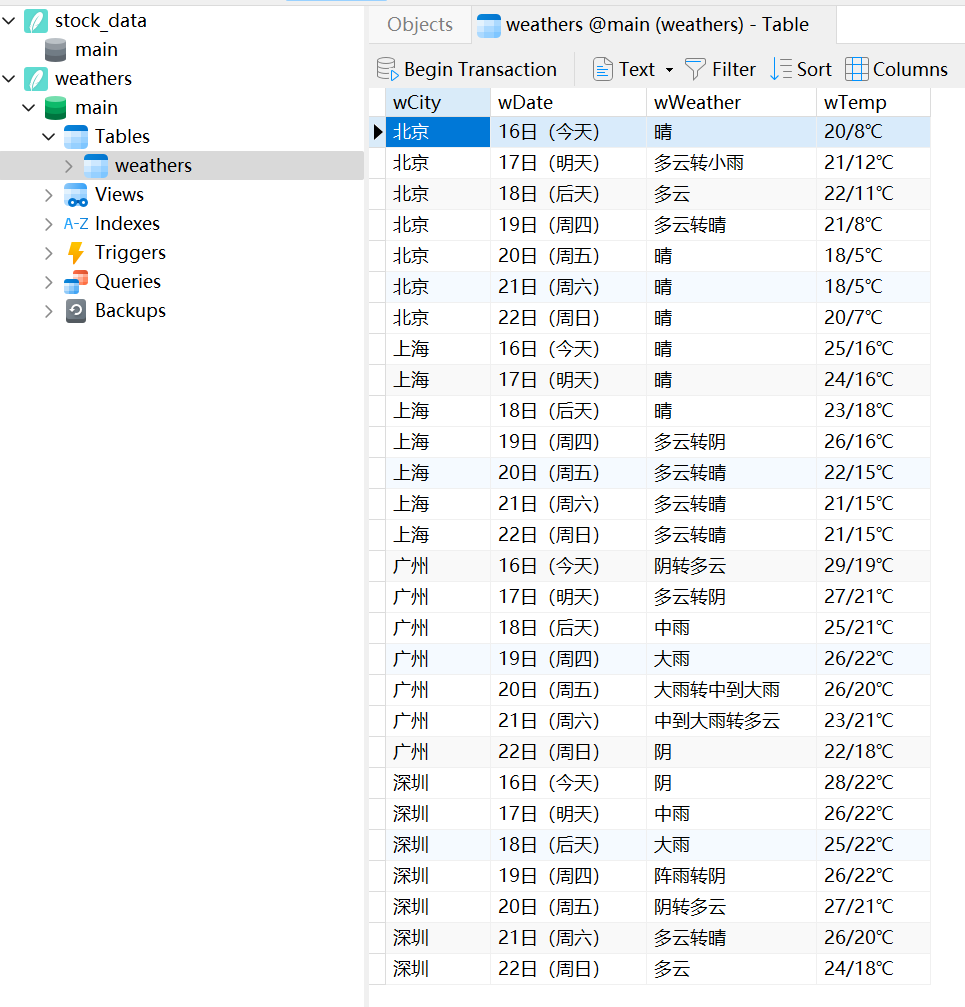

运行结果:

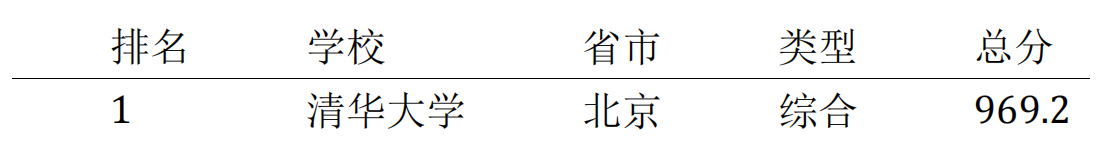

使用Navicat可视化数据库:

心得体会:

通过本题加深了对正则表达式的理解,对如何抓包有了基本的了解,对如何将数据存储到数据库并通过navicat可视化有了进一步的认知,明白了如何将数据库中的数据打印出来。

作业③:

要求:

爬取中国大学2021主榜(https://www.shanghairanking.cn/rankings/bcur/2021)所有院校信息,并存储在数据库中,同时将浏览器F12调试分析的过程录制Gif加入至博客中。

技巧:分析该网站的发包情况,分析获取数据的api

使用F12抓包的gif图片:

输出信息:

Gitee文件夹链接:

https://gitee.com/yangxxin/crawl_project/tree/master/实践作业代码/第二次作业/题目三

代码如下:

import requests

import re

import pandas as pd

import sqlite3

# 发送HTTP请求并获取响应

url = 'https://www.shanghairanking.cn/_nuxt/static/1697106492/rankings/bcur/2021/payload.js'

response = requests.get(url)

response.encoding = response.apparent_encoding

data_text = response.text

# 提取映射关系

def extract_mapping(data_text):

start_index = data_text.find("o") + 3

end_index = data_text.find(")")

key_list = data_text[start_index:end_index].split(',')

start_index_1 = data_text.rfind("}") + 1

end_index_1 = data_text.rfind(")") - 2

value_list = data_text[start_index_1:end_index_1].split(',')

mapper = dict(zip(key_list, value_list))

return mapper

mapper = extract_mapping(data_text)

# 使用正则表达式匹配学校名称和总分

name = re.findall(r'univNameCn:"(.*?)",', data_text)

score = re.findall(r',score:(.*?),', data_text)

province_new = [mapper[item] for item in re.findall(r'province:(.*?),', data_text)]

category_new = [mapper[item] for item in re.findall(r'univCategory:(.*?),', data_text)]

# 创建Pandas DataFrame

df = pd.DataFrame({

'排名': range(1, len(name) + 1),

'学校': name,

'省市': province_new,

'类型': category_new,

'总分': score

})

# 创建数据库连接

conn = sqlite3.connect('university_data.db')

# 创建表格(如果不存在)

cursor = conn.cursor()

cursor.execute('''CREATE TABLE IF NOT EXISTS universities

(排名 INTEGER, 学校 TEXT, 省市 TEXT, 类型 TEXT, 总分 REAL)''')

# 将DataFrame中的数据逐行插入数据库

for _, row in df.iterrows():

cursor.execute("INSERT INTO universities (排名, 学校, 省市, 类型, 总分) VALUES (?, ?, ?, ?, ?)",

(row["排名"], row["学校"], row["省市"], row["类型"], row["总分"]))

# 提交更改

conn.commit()

# 关闭连接

conn.close()

# 打印DataFrame

pd.set_option('display.max_columns', None) # 显示所有列

pd.set_option('display.expand_frame_repr', False) # 不自动换行

pd.set_option('display.max_rows', None) # 显示所有行

print(df)

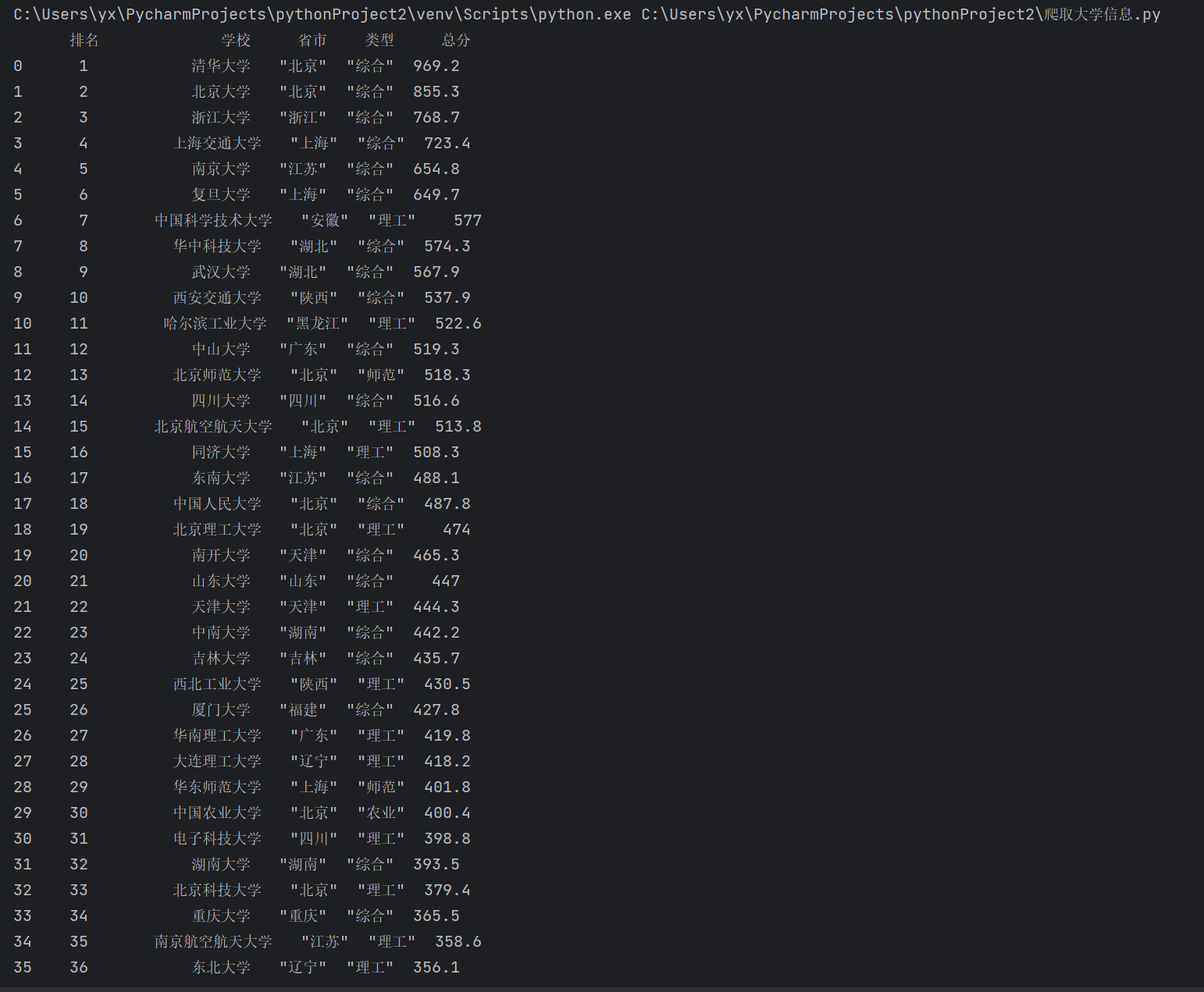

运行结果(部分):

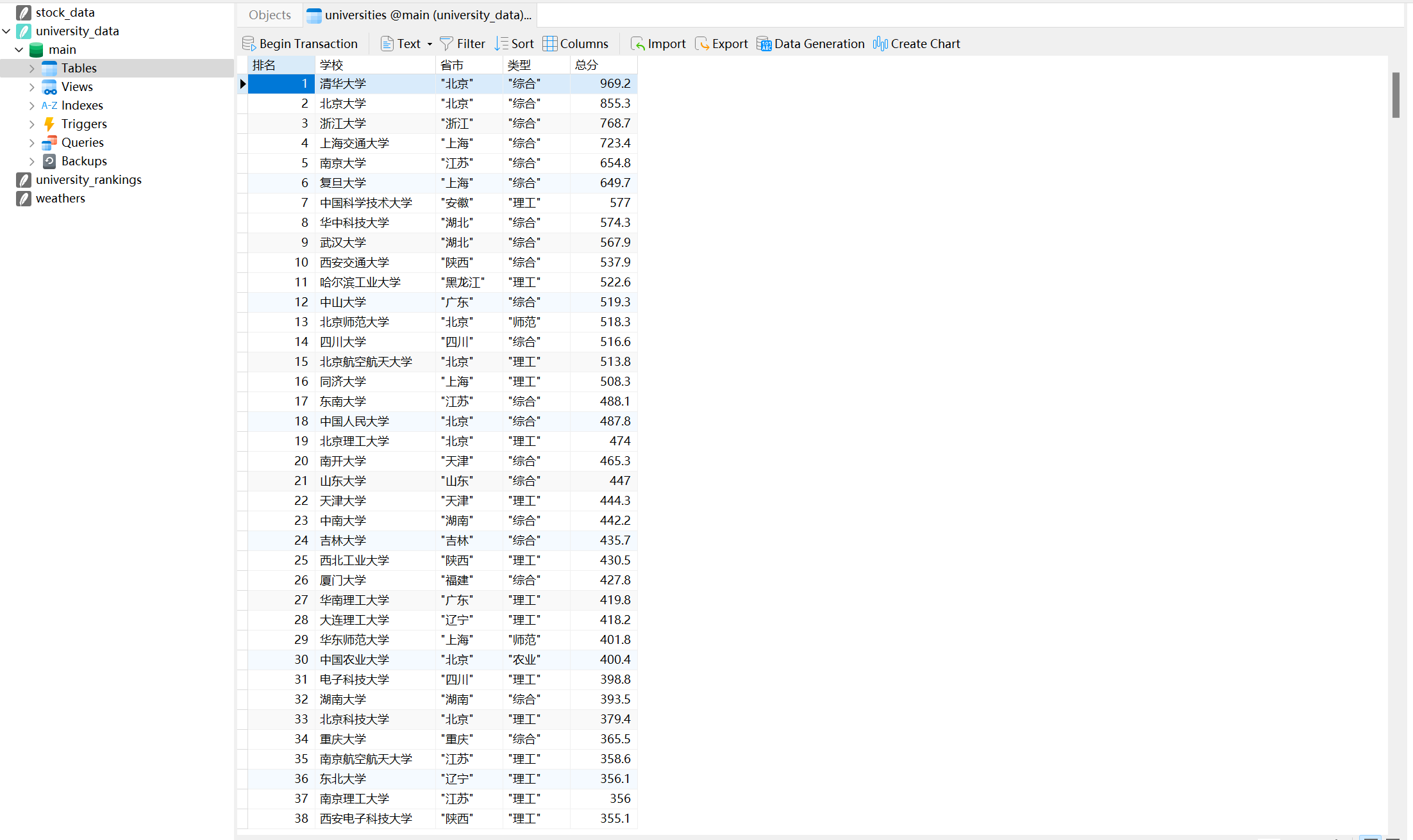

使用Navicat可视化数据库(部分,实际已经爬取所有数据):

心得体会:

通过本题基本掌握了如何将数据写入数据库并通过navicat可视化,对如何抓包有了更深的理解,熟悉了正则表达式的使用,进一步提升了爬虫程序的编写能力。