1.1 Checking the token expiration time

Bootstrap tokens are stored as Secrets in the kube-system namespace. List them with:

    kubectl get secret -n kube-system

Open the new.yaml file created earlier and look at the token it uses:

    [root@k8s-master01 ~]# cat new.yaml
    apiVersion: kubeadm.k8s.io/v1beta3
    bootstrapTokens:
    - groups:
      - system:bootstrappers:kubeadm:default-node-token
      token: 7t2weq.bjbawausm0jaxury    # this is the token value we are looking for
      ttl: 24h0m0s
      usages:
      - signing
      - authentication
    kind: InitConfiguration
    localAPIEndpoint:
      advertiseAddress: 192.168.1.8
      bindPort: 6443
    nodeRegistration:
      criSocket: /run/containerd/containerd.sock
      imagePullPolicy: IfNotPresent
      name: k8s-master01
      taints:
      - effect: NoSchedule
        key: node-role.kubernetes.io/master
    ---
    apiServer:
      certSANs:
      - 192.168.1.100
      timeoutForControlPlane: 4m0s
    apiVersion: kubeadm.k8s.io/v1beta3
    certificatesDir: /etc/kubernetes/pki
    clusterName: kubernetes
    controlPlaneEndpoint: 192.168.1.100:16443
    controllerManager: {}
    dns: {}
    etcd:
      local:
        dataDir: /var/lib/etcd
    imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
    kind: ClusterConfiguration
    kubernetesVersion: v1.23.17
    networking:
      dnsDomain: cluster.local
      podSubnet: 172.16.0.0/12
      serviceSubnet: 10.244.0.0/16
    scheduler: {}

Copy the token value above.

Filter the Secret list by the token ID (the part of the token before the dot):

    [root@k8s-master01 ~]# kubectl get secret -n kube-system | grep 7t2weq
    bootstrap-token-7t2weq    bootstrap.kubernetes.io/token    6    23m

Then view that Secret in YAML format:

    [root@k8s-master01 ~]# kubectl get secret -n kube-system bootstrap-token-7t2weq -oyaml
    apiVersion: v1
    data:
      auth-extra-groups: c3lzdGVtOmJvb3RzdHJhcHBlcnM6a3ViZWFkbTpkZWZhdWx0LW5vZGUtdG9rZW4=
      expiration: MjAyMy0wMy0zMFQwMToyNjoxMFo=    # this is the token expiration time
      token-id: N3Qyd2Vx
      token-secret: YmpiYXdhdXNtMGpheHVyeQ==
      usage-bootstrap-authentication: dHJ1ZQ==
      usage-bootstrap-signing: dHJ1ZQ==
    kind: Secret
    metadata:
      creationTimestamp: "2023-03-29T01:26:10Z"
      name: bootstrap-token-7t2weq
      namespace: kube-system
      resourceVersion: "352"
      uid: e1ee3360-1dc2-41d0-b86d-44591cd4b497
    type: bootstrap.kubernetes.io/token

Copy the encoded expiration value above.

Secret data is base64-encoded (not binary), so decode it to get the expiration time:

    [root@k8s-master01 ~]# echo "MjAyMy0wMy0zMFQwMToyNjoxMFo=" | base64 -d
    2023-03-30T01:26:10Z
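Every field under data in the Secret is encoded the same way, so the other fields can be decoded too. The following is a minimal sketch using the values printed above. The variable names are illustrative, and the time comparison assumes GNU date (as shipped with CentOS).

```shell
#!/usr/bin/env bash
# Base64 values copied from the Secret output above.
expiration_b64="MjAyMy0wMy0zMFQwMToyNjoxMFo="
token_id_b64="N3Qyd2Vx"

# Decode them. On a live cluster the first value could instead be read with:
#   kubectl get secret -n kube-system bootstrap-token-7t2weq -o jsonpath='{.data.expiration}'
expiration="$(printf '%s' "$expiration_b64" | base64 -d)"
token_id="$(printf '%s' "$token_id_b64" | base64 -d)"
echo "token-id:   $token_id"
echo "expiration: $expiration"

# Compare the expiry with the current time (GNU date assumed).
expiry_epoch="$(date -u -d "$expiration" +%s)"
now_epoch="$(date -u +%s)"
if [ "$now_epoch" -ge "$expiry_epoch" ]; then
  echo "token has expired"
else
  echo "token expires in $(( (expiry_epoch - now_epoch) / 60 )) minutes"
fi
```

On a control-plane node, kubeadm token list should report the same expiry without any manual decoding.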

1.2 Why Pod IPs come from different subnets

The command below shows that Pod IPs are not all in the same subnet: some Pods use the Pod subnet we defined, others use the host (node) network.

    [root@k8s-master01 ~]# kubectl get po -n kube-system -owide
    NAME                                       READY   STATUS    RESTARTS      AGE   IP               NODE           NOMINATED NODE   READINESS GATES
    calico-kube-controllers-6f6595874c-j9hdl   1/1     Running   0             62m   172.25.244.195   k8s-master01   <none>           <none>
    calico-node-2d5p7                          1/1     Running   0             62m   192.168.1.9      k8s-master02   <none>           <none>
    calico-node-7n5mw                          1/1     Running   0             62m   192.168.1.8      k8s-master01   <none>           <none>
    calico-node-9pdmh                          1/1     Running   0             62m   192.168.1.11     k8s-node01     <none>           <none>
    calico-node-b8sh2                          1/1     Running   0             62m   192.168.1.12     k8s-node02     <none>           <none>
    calico-node-chdsf                          1/1     Running   0             62m   192.168.1.10     k8s-master03   <none>           <none>
    calico-typha-6b6cf8cbdf-llhvd              1/1     Running   0             62m   192.168.1.12     k8s-node02     <none>           <none>
    coredns-65c54cc984-8t757                   1/1     Running   0             64m   172.25.244.194   k8s-master01   <none>           <none>
    coredns-65c54cc984-mf4nd                   1/1     Running   0             64m   172.25.244.193   k8s-master01   <none>           <none>
    etcd-k8s-master01                          1/1     Running   0             64m   192.168.1.8      k8s-master01   <none>           <none>
    etcd-k8s-master02                          1/1     Running   0             64m   192.168.1.9      k8s-master02   <none>           <none>
    etcd-k8s-master03                          1/1     Running   0             63m   192.168.1.10     k8s-master03   <none>           <none>
    kube-apiserver-k8s-master01                1/1     Running   0             64m   192.168.1.8      k8s-master01   <none>           <none>
    kube-apiserver-k8s-master02                1/1     Running   0             64m   192.168.1.9      k8s-master02   <none>           <none>
    kube-apiserver-k8s-master03                1/1     Running   1 (63m ago)   63m   192.168.1.10     k8s-master03   <none>           <none>
    kube-controller-manager-k8s-master01       1/1     Running   1 (63m ago)   64m   192.168.1.8      k8s-master01   <none>           <none>
    kube-controller-manager-k8s-master02       1/1     Running   0             64m   192.168.1.9      k8s-master02   <none>           <none>
    kube-controller-manager-k8s-master03       1/1     Running   0             63m   192.168.1.10     k8s-master03   <none>           <none>
    kube-proxy-5vp9t                           1/1     Running   0             50m   192.168.1.12     k8s-node02     <none>           <none>
    kube-proxy-65s6x                           1/1     Running   0             50m   192.168.1.11     k8s-node01     <none>           <none>
    kube-proxy-lzj8c                           1/1     Running   0             50m   192.168.1.10     k8s-master03   <none>           <none>
    kube-proxy-r56m7                           1/1     Running   0             50m   192.168.1.8      k8s-master01   <none>           <none>
    kube-proxy-sbdm2                           1/1     Running   0             50m   192.168.1.9      k8s-master02   <none>           <none>
    kube-scheduler-k8s-master01                1/1     Running   1 (63m ago)   64m   192.168.1.8      k8s-master01   <none>           <none>
    kube-scheduler-k8s-master02                1/1     Running   0             64m   192.168.1.9      k8s-master02   <none>           <none>
    kube-scheduler-k8s-master03                1/1     Running   0             63m   192.168.1.10     k8s-master03   <none>           <none>
    metrics-server-5cf8885b66-jpd6z            1/1     Running   0             59m   172.17.125.1     k8s-node01     <none>           <none>

Copy the name of one of the Pods that has a node IP.

Dump the Pod's YAML and filter for hostNetwork:

    [root@k8s-master01 ~]# kubectl get po calico-node-2d5p7 -n kube-system -oyaml | grep hostNetwo
      hostNetwork: true

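Note: a Pod with hostNetwork: true runs in the network namespace of its host. It therefore uses the host's IP address, and any port it listens on is opened directly on the host. For example, if such a Pod listens on port 9093, port 9093 is visible on the host. A Pod that does not use hostNetwork gets its address from the Pod network you defined (podSubnet). The mixed addresses are expected and have no negative effect.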

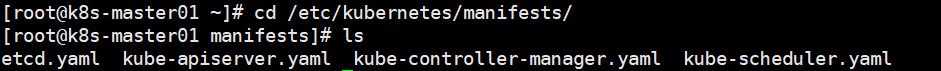

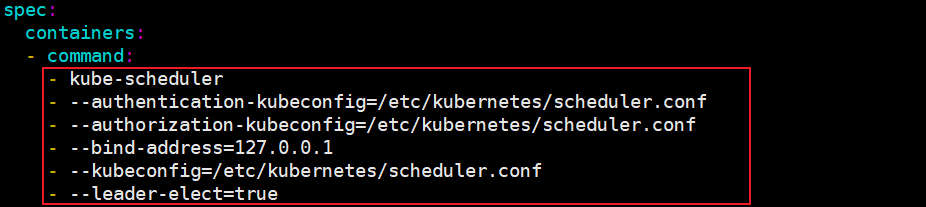
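The distinction can be spotted directly in the IP column. The sketch below classifies a few rows copied from the listing above by checking whether the Pod IP lies in the node subnet. It assumes the nodes use 192.168.1.0/24, as in this cluster, and the function name classify_pods is made up for the example.

```shell
#!/usr/bin/env bash
# A few rows copied from the `kubectl get po -n kube-system -owide` output above
# (columns: NAME READY STATUS RESTARTS AGE IP NODE). Rows whose RESTARTS value
# contains spaces, such as "1 (63m ago)", are left out to keep the field
# positions fixed.
sample='calico-kube-controllers-6f6595874c-j9hdl 1/1 Running 0 62m 172.25.244.195 k8s-master01
calico-node-2d5p7 1/1 Running 0 62m 192.168.1.9 k8s-master02
coredns-65c54cc984-8t757 1/1 Running 0 64m 172.25.244.194 k8s-master01
etcd-k8s-master01 1/1 Running 0 64m 192.168.1.8 k8s-master01
metrics-server-5cf8885b66-jpd6z 1/1 Running 0 59m 172.17.125.1 k8s-node01'

# Assumption: the nodes live in 192.168.1.0/24.
node_prefix="192.168.1."

classify_pods() {
  # Field 6 is the Pod IP; an address in the node subnet suggests hostNetwork: true.
  awk -v prefix="$node_prefix" '{
    kind = (index($6, prefix) == 1) ? "host-network" : "pod-network"
    print $1, $6, kind
  }'
}

result="$(printf '%s\n' "$sample" | classify_pods)"
printf '%s\n' "$result"
```

The IP prefix is only a heuristic. The authoritative value is the .spec.hostNetwork field of each Pod, which the grep shown earlier reads from the Pod's YAML.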

1.3 Changing component configuration

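By default, the manifests of the Kubernetes control-plane components are stored in /etc/kubernetes/manifests/.

To add or change a setting, add the flag under command in the corresponding manifest:

Then save and exit.

To make the change take effect, never run:

    kubectl create -f kube-apiserver.yaml

The kubelet watches this directory, detects the changed manifest, and recreates the Pod on its own. If you do not want to wait, restart the kubelet:

    systemctl restart kubelet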
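As an illustration of adding a flag under command, the sketch below edits a throwaway copy of a manifest rather than the real file. The trimmed manifest content and the example flag value are assumptions made for the demonstration. On a real node the file to edit would be /etc/kubernetes/manifests/kube-apiserver.yaml.

```shell
#!/usr/bin/env bash
# Work on a scratch copy so the sketch is safe to run anywhere.
workdir="$(mktemp -d)"
manifest="$workdir/kube-apiserver.yaml"

# Trimmed, illustrative stand-in for the real kube-apiserver manifest.
cat > "$manifest" <<'EOF'
spec:
  containers:
  - command:
    - kube-apiserver
    - --advertise-address=192.168.1.8
    - --secure-port=6443
    image: registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.23.17
    name: kube-apiserver
EOF

# Keep a backup, and keep it outside the manifests directory: the kubelet
# may try to load extra files it finds there as static Pods.
cp "$manifest" "$workdir/kube-apiserver.yaml.bak"

# Insert one flag directly below the binary name, reusing its indentation.
# The flag and its value are only an example (GNU sed assumed).
new_flag="--service-node-port-range=30000-50000"
sed -i "s|^\( *\)- kube-apiserver\$|&\n\1- ${new_flag}|" "$manifest"

cat "$manifest"
```

Matching the indentation of the existing list items matters, because the kubelet cannot load a manifest that is not valid YAML.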

1.4 Removing the taint from master nodes

You will notice that, apart from system components such as etcd, kube-apiserver, kube-controller-manager and kube-scheduler, the Pods we deploy ourselves only land on node01 and node02. This is because kubeadm automatically adds a taint to each master node, which tells the scheduler not to place ordinary Pods there.

    [root@k8s-master01 manifests]# kubectl get po -n kube-system -owide
    NAME                                       READY   STATUS    RESTARTS       AGE    IP               NODE           NOMINATED NODE   READINESS GATES
    calico-kube-controllers-6f6595874c-j9hdl   1/1     Running   0              149m   172.25.244.195   k8s-master01   <none>           <none>
    calico-node-2d5p7                          1/1     Running   0              149m   192.168.1.9      k8s-master02   <none>           <none>
    calico-node-7n5mw                          1/1     Running   0              149m   192.168.1.8      k8s-master01   <none>           <none>
    calico-node-9pdmh                          1/1     Running   0              149m   192.168.1.11     k8s-node01     <none>           <none>
    calico-node-b8sh2                          1/1     Running   0              149m   192.168.1.12     k8s-node02     <none>           <none>
    calico-node-chdsf                          1/1     Running   0              149m   192.168.1.10     k8s-master03   <none>           <none>
    calico-typha-6b6cf8cbdf-llhvd              1/1     Running   0              149m   192.168.1.12     k8s-node02     <none>           <none>
    coredns-65c54cc984-8t757                   1/1     Running   0              152m   172.25.244.194   k8s-master01   <none>           <none>
    coredns-65c54cc984-mf4nd                   1/1     Running   0              152m   172.25.244.193   k8s-master01   <none>           <none>
    etcd-k8s-master01                          1/1     Running   0              152m   192.168.1.8      k8s-master01   <none>           <none>
    etcd-k8s-master02                          1/1     Running   0              151m   192.168.1.9      k8s-master02   <none>           <none>
    etcd-k8s-master03                          1/1     Running   0              151m   192.168.1.10     k8s-master03   <none>           <none>
    kube-apiserver-k8s-master01                1/1     Running   0              152m   192.168.1.8      k8s-master01   <none>           <none>
    kube-apiserver-k8s-master02                1/1     Running   0              151m   192.168.1.9      k8s-master02   <none>           <none>
    kube-apiserver-k8s-master03                1/1     Running   1 (151m ago)   151m   192.168.1.10     k8s-master03   <none>           <none>
    kube-controller-manager-k8s-master01       1/1     Running   1 (151m ago)   152m   192.168.1.8      k8s-master01   <none>           <none>
    kube-controller-manager-k8s-master02       1/1     Running   0              151m   192.168.1.9      k8s-master02   <none>           <none>
    kube-controller-manager-k8s-master03       1/1     Running   0              151m   192.168.1.10     k8s-master03   <none>           <none>
    kube-proxy-5vp9t                           1/1     Running   0              137m   192.168.1.12     k8s-node02     <none>           <none>
    kube-proxy-65s6x                           1/1     Running   0              137m   192.168.1.11     k8s-node01     <none>           <none>
    kube-proxy-lzj8c                           1/1     Running   0              137m   192.168.1.10     k8s-master03   <none>           <none>
    kube-proxy-r56m7                           1/1     Running   0              137m   192.168.1.8      k8s-master01   <none>           <none>
    kube-proxy-sbdm2                           1/1     Running   0              137m   192.168.1.9      k8s-master02   <none>           <none>
    kube-scheduler-k8s-master01                1/1     Running   1 (151m ago)   152m   192.168.1.8      k8s-master01   <none>           <none>
    kube-scheduler-k8s-master02                1/1     Running   0              151m   192.168.1.9      k8s-master02   <none>           <none>
    kube-scheduler-k8s-master03                1/1     Running   0              150m   192.168.1.10     k8s-master03   <none>           <none>
    metrics-server-5cf8885b66-jpd6z            1/1     Running   0              146m   172.17.125.1     k8s-node01     <none>           <none>

First check which taints the master nodes have:

    [root@k8s-master01 manifests]# kubectl describe node -l node-role.kubernetes.io/master= | grep Taints
    Taints:             node-role.kubernetes.io/master:NoSchedule
    Taints:             node-role.kubernetes.io/master:NoSchedule
    Taints:             node-role.kubernetes.io/master:NoSchedule

To remove the taint from all master nodes (the trailing "-" means "remove"):

    kubectl taint node -l node-role.kubernetes.io/master node-role.kubernetes.io/master:NoSchedule-

To remove the taint from a single master node only:

    [root@k8s-master03 ~]# kubectl taint node k8s-master03 node-role.kubernetes.io/master:NoSchedule-
    node/k8s-master03 untainted

From now on, Pods can be scheduled onto the untainted master. Check the Pods again:

    [root@k8s-master01 manifests]# kubectl get po -n kube-system -owide
    NAME                                       READY   STATUS    RESTARTS       AGE    IP               NODE           NOMINATED NODE   READINESS GATES
    calico-kube-controllers-6f6595874c-j9hdl   1/1     Running   0              149m   172.25.244.195   k8s-master01   <none>           <none>
    calico-node-2d5p7                          1/1     Running   0              149m   192.168.1.9      k8s-master02   <none>           <none>
    calico-node-7n5mw                          1/1     Running   0              149m   192.168.1.8      k8s-master01   <none>           <none>
    calico-node-9pdmh                          1/1     Running   0              149m   192.168.1.11     k8s-node01     <none>           <none>
    calico-node-b8sh2                          1/1     Running   0              149m   192.168.1.12     k8s-node02     <none>           <none>
    calico-node-chdsf                          1/1     Running   0              149m   192.168.1.10     k8s-master03   <none>           <none>
    calico-typha-6b6cf8cbdf-llhvd              1/1     Running   0              149m   192.168.1.12     k8s-node02     <none>           <none>
    coredns-65c54cc984-8t757                   1/1     Running   0              152m   172.25.244.194   k8s-master01   <none>           <none>
    coredns-65c54cc984-mf4nd                   1/1     Running   0              152m   172.25.244.193   k8s-master01   <none>           <none>
    etcd-k8s-master01                          1/1     Running   0              152m   192.168.1.8      k8s-master01   <none>           <none>
    etcd-k8s-master02                          1/1     Running   0              151m   192.168.1.9      k8s-master02   <none>           <none>
    etcd-k8s-master03                          1/1     Running   0              151m   192.168.1.10     k8s-master03   <none>           <none>
    kube-apiserver-k8s-master01                1/1     Running   0              152m   192.168.1.8      k8s-master01   <none>           <none>
    kube-apiserver-k8s-master02                1/1     Running   0              151m   192.168.1.9      k8s-master02   <none>           <none>
    kube-apiserver-k8s-master03                1/1     Running   1 (151m ago)   151m   192.168.1.10     k8s-master03   <none>           <none>
    kube-controller-manager-k8s-master01       1/1     Running   1 (151m ago)   152m   192.168.1.8      k8s-master01   <none>           <none>
    kube-controller-manager-k8s-master02       1/1     Running   0              151m   192.168.1.9      k8s-master02   <none>           <none>
    kube-controller-manager-k8s-master03       1/1     Running   0              151m   192.168.1.10     k8s-master03   <none>           <none>
    kube-proxy-5vp9t                           1/1     Running   0              137m   192.168.1.12     k8s-node02     <none>           <none>
    kube-proxy-65s6x                           1/1     Running   0              137m   192.168.1.11     k8s-node01     <none>           <none>
    kube-proxy-lzj8c                           1/1     Running   0              137m   192.168.1.10     k8s-master03   <none>           <none>
    kube-proxy-r56m7                           1/1     Running   0              137m   192.168.1.8      k8s-master01   <none>           <none>
    kube-proxy-sbdm2                           1/1     Running   0              137m   192.168.1.9      k8s-master02   <none>           <none>
    kube-scheduler-k8s-master01                1/1     Running   1 (151m ago)   152m   192.168.1.8      k8s-master01   <none>           <none>
    kube-scheduler-k8s-master02                1/1     Running   0              151m   192.168.1.9      k8s-master02   <none>           <none>
    kube-scheduler-k8s-master03                1/1     Running   0              150m   192.168.1.10     k8s-master03   <none>           <none>
    metrics-server-5cf8885b66-jpd6z            1/1     Running   0              146m   172.17.125.1     k8s-node01     <none>           <none>

Note: when you untaint a single master, the name you pass is the Kubernetes node name, which is not necessarily the machine's hostname!

Take the node name from the NAME column of:

    [root@k8s-master03 ~]# kubectl get node
    NAME           STATUS   ROLES                  AGE    VERSION
    k8s-master01   Ready    control-plane,master   166m   v1.23.17
    k8s-master02   Ready    control-plane,master   165m   v1.23.17
    k8s-master03   Ready    control-plane,master   165m   v1.23.17
    k8s-node01     Ready    <none>                 164m   v1.23.17
    k8s-node02     Ready    <none>                 164m   v1.23.17
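To make the link between the NAME column and the untaint command concrete, the sketch below builds one command per master from the node list above. It only prints the commands and does not run them. The sample rows are copied from the output above, and the variable names are illustrative.

```shell
#!/usr/bin/env bash
# Rows copied from the `kubectl get node` output above (header removed).
nodes='k8s-master01 Ready control-plane,master 166m v1.23.17
k8s-master02 Ready control-plane,master 165m v1.23.17
k8s-master03 Ready control-plane,master 165m v1.23.17
k8s-node01 Ready <none> 164m v1.23.17
k8s-node02 Ready <none> 164m v1.23.17'

taint="node-role.kubernetes.io/master:NoSchedule"

# Print (do not run) one untaint command per node whose ROLES column
# contains "master". The trailing "-" tells kubectl to remove the taint.
untaint_cmds="$(printf '%s\n' "$nodes" |
  awk -v taint="$taint" '$3 ~ /(^|,)master(,|$)/ { print "kubectl taint node " $1 " " taint "-" }')"
printf '%s\n' "$untaint_cmds"
```

Printing first and running later makes it easy to review exactly which nodes would be affected.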

1.5 Verifying the cluster

List the Pods in all namespaces:

    [root@k8s-master01]# kubectl get po --all-namespaces
    NAMESPACE              NAME                                         READY   STATUS    RESTARTS       AGE
    kube-system            calico-kube-controllers-6f6595874c-j9hdl     1/1     Running   0              168m
    kube-system            calico-node-2d5p7                            1/1     Running   0              168m
    kube-system            calico-node-7n5mw                            1/1     Running   0              168m
    kube-system            calico-node-9pdmh                            1/1     Running   0              168m
    kube-system            calico-node-b8sh2                            1/1     Running   0              168m
    kube-system            calico-node-chdsf                            1/1     Running   0              168m
    kube-system            calico-typha-6b6cf8cbdf-llhvd                1/1     Running   0              168m
    kube-system            coredns-65c54cc984-8t757                     1/1     Running   0              171m
    kube-system            coredns-65c54cc984-mf4nd                     1/1     Running   0              171m
    kube-system            etcd-k8s-master01                            1/1     Running   0              171m
    kube-system            etcd-k8s-master02                            1/1     Running   0              170m
    kube-system            etcd-k8s-master03                            1/1     Running   0              170m
    kube-system            kube-apiserver-k8s-master01                  1/1     Running   0              171m
    kube-system            kube-apiserver-k8s-master02                  1/1     Running   0              170m
    kube-system            kube-apiserver-k8s-master03                  1/1     Running   1 (170m ago)   170m
    kube-system            kube-controller-manager-k8s-master01         1/1     Running   1 (170m ago)   171m
    kube-system            kube-controller-manager-k8s-master02         1/1     Running   0              170m
    kube-system            kube-controller-manager-k8s-master03         1/1     Running   0              170m
    kube-system            kube-proxy-5vp9t                             1/1     Running   0              156m
    kube-system            kube-proxy-65s6x                             1/1     Running   0              156m
    kube-system            kube-proxy-lzj8c                             1/1     Running   0              156m
    kube-system            kube-proxy-r56m7                             1/1     Running   0              156m
    kube-system            kube-proxy-sbdm2                             1/1     Running   0              156m
    kube-system            kube-scheduler-k8s-master01                  1/1     Running   1 (170m ago)   171m
    kube-system            kube-scheduler-k8s-master02                  1/1     Running   0              170m
    kube-system            kube-scheduler-k8s-master03                  1/1     Running   0              170m
    kube-system            metrics-server-5cf8885b66-jpd6z              1/1     Running   0              165m
    kubernetes-dashboard   dashboard-metrics-scraper-7fcdff5f4c-7q7wv   1/1     Running   0              164m
    kubernetes-dashboard   kubernetes-dashboard-85f59f8ff7-gws8d        1/1     Running   0              164m

View the resource usage (CPU, memory) of the Pods:

    [root@k8s-master01 ~]# kubectl top po -n kube-system
    NAME                                       CPU(cores)   MEMORY(bytes)
    calico-kube-controllers-6f6595874c-j9hdl   2m           32Mi
    calico-node-2d5p7                          25m          95Mi
    calico-node-7n5mw                          25m          93Mi
    calico-node-9pdmh                          28m          105Mi
    calico-node-b8sh2                          18m          112Mi
    calico-node-chdsf                          24m          108Mi
    calico-typha-6b6cf8cbdf-llhvd              2m           25Mi
    coredns-65c54cc984-8t757                   1m           17Mi
    coredns-65c54cc984-mf4nd                   1m           21Mi
    etcd-k8s-master01                          32m          92Mi
    etcd-k8s-master02                          28m          80Mi
    etcd-k8s-master03                          27m          85Mi
    kube-apiserver-k8s-master01                40m          252Mi
    kube-apiserver-k8s-master02                34m          252Mi
    kube-apiserver-k8s-master03                30m          195Mi
    kube-controller-manager-k8s-master01       12m          83Mi
    kube-controller-manager-k8s-master02       2m           44Mi
    kube-controller-manager-k8s-master03       1m           40Mi
    kube-proxy-5vp9t                           3m           15Mi
    kube-proxy-65s6x                           6m           20Mi
    kube-proxy-lzj8c                           7m           20Mi
    kube-proxy-r56m7                           4m           32Mi
    kube-proxy-sbdm2                           5m           21Mi
    kube-scheduler-k8s-master01                2m           34Mi
    kube-scheduler-k8s-master02                3m           40Mi
    kube-scheduler-k8s-master03                2m           33Mi
    metrics-server-5cf8885b66-jpd6z            2m           16Mi
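When the list is long, it helps to rank Pods by usage. The sketch below sorts a few rows copied from the output above. The helper name top_by is made up for the example, and the unit handling assumes every row uses m (millicores) and Mi, as in this output.

```shell
#!/usr/bin/env bash
# A few rows copied from the `kubectl top po -n kube-system` output above.
sample='calico-node-9pdmh 28m 105Mi
coredns-65c54cc984-8t757 1m 17Mi
etcd-k8s-master01 32m 92Mi
kube-apiserver-k8s-master01 40m 252Mi
kube-proxy-5vp9t 3m 15Mi'

# Strip the unit suffixes, then sort numerically, highest first,
# on the chosen column (2 = CPU in millicores, 3 = memory in Mi).
top_by() {
  local column="$1"
  awk '{ cpu = $2; sub(/m$/, "", cpu); mem = $3; sub(/Mi$/, "", mem); print $1, cpu, mem }' |
    sort -k"${column},${column}" -n -r
}

by_cpu="$(printf '%s\n' "$sample" | top_by 2)"
by_mem="$(printf '%s\n' "$sample" | top_by 3)"
echo "Highest CPU:    $(printf '%s\n' "$by_cpu" | head -n 1)"
echo "Highest memory: $(printf '%s\n' "$by_mem" | head -n 1)"
```

On a live cluster the same ordering should be available directly from kubectl top po with the --sort-by=cpu or --sort-by=memory option.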

Check the cluster's Services.

The first is the kubernetes Service, which fronts the API server:

    [root@k8s-master01 ~]# kubectl get svc
    NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
    kubernetes   ClusterIP   10.244.0.1   <none>        443/TCP   6h38m

The second is kube-dns, the DNS Service used by Pods:

    [root@k8s-master01 ~]# kubectl get svc -n kube-system
    NAME             TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)                  AGE
    calico-typha     ClusterIP   10.244.42.134   <none>        5473/TCP                 6h37m
    kube-dns         ClusterIP   10.244.0.10     <none>        53/UDP,53/TCP,9153/TCP   6h40m
    metrics-server   ClusterIP   10.244.225.26   <none>        443/TCP                  6h34m

Test whether these two IPs are reachable:

    [root@k8s-master01 ~]# telnet 10.244.0.1 443
    Trying 10.244.0.1...
    Connected to 10.244.0.1.
    Escape character is '^]'.
    [root@k8s-master01 ~]# telnet 10.244.0.10 53
    Trying 10.244.0.10...
    Connected to 10.244.0.10.
    Escape character is '^]'.
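If telnet is not installed, the same TCP check can be scripted. This is a minimal sketch that relies on bash's built-in /dev/tcp redirection and the coreutils timeout command. The function name check_port, the script name in the comment, and the 3-second limit are choices made for the example.

```shell
#!/usr/bin/env bash
# Return 0 if a TCP connection to host:port succeeds within 3 seconds.
check_port() {
  local host="$1" port="$2"
  timeout 3 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}

# Targets are passed as host:port arguments, for example the two Service
# addresses tested above (check_port.sh is a hypothetical file name):
#   ./check_port.sh 10.244.0.1:443 10.244.0.10:53
for target in "$@"; do
  if check_port "${target%:*}" "${target##*:}"; then
    echo "${target} reachable"
  else
    echo "${target} NOT reachable"
  fi
done
```

Like telnet, this only proves that the TCP port accepts connections. It does not check that the service behind it answers correctly.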

List the Pods in all namespaces, including their IP addresses:

    [root@k8s-master01 ~]# kubectl get po --all-namespaces -owide
    NAMESPACE              NAME                                         READY   STATUS    RESTARTS        AGE     IP               NODE           NOMINATED NODE   READINESS GATES
    kube-system            calico-kube-controllers-6f6595874c-j9hdl     1/1     Running   0               6h42m   172.25.244.195   k8s-master01   <none>           <none>
    kube-system            calico-node-2d5p7                            1/1     Running   0               6h42m   192.168.1.9      k8s-master02   <none>           <none>
    kube-system            calico-node-7n5mw                            1/1     Running   0               6h42m   192.168.1.8      k8s-master01   <none>           <none>
    kube-system            calico-node-9pdmh                            1/1     Running   0               6h42m   192.168.1.11     k8s-node01     <none>           <none>
    kube-system            calico-node-b8sh2                            1/1     Running   0               6h42m   192.168.1.12     k8s-node02     <none>           <none>
    kube-system            calico-node-chdsf                            1/1     Running   0               6h42m   192.168.1.10     k8s-master03   <none>           <none>
    kube-system            calico-typha-6b6cf8cbdf-llhvd                1/1     Running   0               6h42m   192.168.1.12     k8s-node02     <none>           <none>
    kube-system            coredns-65c54cc984-8t757                     1/1     Running   0               6h45m   172.25.244.194   k8s-master01   <none>           <none>
    kube-system            coredns-65c54cc984-mf4nd                     1/1     Running   0               6h45m   172.25.244.193   k8s-master01   <none>           <none>
    kube-system            etcd-k8s-master01                            1/1     Running   0               6h45m   192.168.1.8      k8s-master01   <none>           <none>
    kube-system            etcd-k8s-master02                            1/1     Running   0               6h44m   192.168.1.9      k8s-master02   <none>           <none>
    kube-system            etcd-k8s-master03                            1/1     Running   0               6h44m   192.168.1.10     k8s-master03   <none>           <none>
    kube-system            kube-apiserver-k8s-master01                  1/1     Running   0               6h45m   192.168.1.8      k8s-master01   <none>           <none>
    kube-system            kube-apiserver-k8s-master02                  1/1     Running   0               6h44m   192.168.1.9      k8s-master02   <none>           <none>
    kube-system            kube-apiserver-k8s-master03                  1/1     Running   1 (6h44m ago)   6h44m   192.168.1.10     k8s-master03   <none>           <none>
    kube-system            kube-controller-manager-k8s-master01         1/1     Running   1 (6h44m ago)   6h45m   192.168.1.8      k8s-master01   <none>           <none>
    kube-system            kube-controller-manager-k8s-master02         1/1     Running   0               6h44m   192.168.1.9      k8s-master02   <none>           <none>
    kube-system            kube-controller-manager-k8s-master03         1/1     Running   0               6h44m   192.168.1.10     k8s-master03   <none>           <none>
    kube-system            kube-proxy-5vp9t                             1/1     Running   0               6h30m   192.168.1.12     k8s-node02     <none>           <none>
    kube-system            kube-proxy-65s6x                             1/1     Running   0               6h30m   192.168.1.11     k8s-node01     <none>           <none>
    kube-system            kube-proxy-lzj8c                             1/1     Running   0               6h30m   192.168.1.10     k8s-master03   <none>           <none>
    kube-system            kube-proxy-r56m7                             1/1     Running   0               6h30m   192.168.1.8      k8s-master01   <none>           <none>
    kube-system            kube-proxy-sbdm2                             1/1     Running   0               6h30m   192.168.1.9      k8s-master02   <none>           <none>
    kube-system            kube-scheduler-k8s-master01                  1/1     Running   1 (6h44m ago)   6h45m   192.168.1.8      k8s-master01   <none>           <none>
    kube-system            kube-scheduler-k8s-master02                  1/1     Running   0               6h44m   192.168.1.9      k8s-master02   <none>           <none>
    kube-system            kube-scheduler-k8s-master03                  1/1     Running   0               6h43m   192.168.1.10     k8s-master03   <none>           <none>
    kube-system            metrics-server-5cf8885b66-jpd6z              1/1     Running   0               6h39m   172.17.125.1     k8s-node01     <none>           <none>
    kubernetes-dashboard   dashboard-metrics-scraper-7fcdff5f4c-7q7wv   1/1     Running   0               6h38m   172.27.14.193    k8s-node02     <none>           <none>
    kubernetes-dashboard   kubernetes-dashboard-85f59f8ff7-gws8d        1/1     Running   0               6h38m   172.17.125.2     k8s-node01     <none>           <none>

Verify that every node can ping a Pod running on a master node (here 172.25.244.195 on k8s-master01):

    [root@k8s-master01 ~]# ping 172.25.244.195
    PING 172.25.244.195 (172.25.244.195) 56(84) bytes of data.
    64 bytes from 172.25.244.195: icmp_seq=1 ttl=64 time=0.046 ms
    64 bytes from 172.25.244.195: icmp_seq=2 ttl=64 time=0.054 ms
    64 bytes from 172.25.244.195: icmp_seq=3 ttl=64 time=0.020 ms

    [root@k8s-master02 ~]# ping 172.25.244.195
    PING 172.25.244.195 (172.25.244.195) 56(84) bytes of data.
    64 bytes from 172.25.244.195: icmp_seq=1 ttl=63 time=0.696 ms
    64 bytes from 172.25.244.195: icmp_seq=2 ttl=63 time=0.307 ms
    64 bytes from 172.25.244.195: icmp_seq=3 ttl=63 time=0.127 ms

    [root@k8s-master03 ~]# ping 172.25.244.195
    PING 172.25.244.195 (172.25.244.195) 56(84) bytes of data.
    64 bytes from 172.25.244.195: icmp_seq=1 ttl=63 time=0.889 ms
    64 bytes from 172.25.244.195: icmp_seq=2 ttl=63 time=0.354 ms
    64 bytes from 172.25.244.195: icmp_seq=3 ttl=63 time=0.116 ms

    [root@k8s-node01 ~]# ping 172.25.244.195
    PING 172.25.244.195 (172.25.244.195) 56(84) bytes of data.
    64 bytes from 172.25.244.195: icmp_seq=1 ttl=63 time=1.14 ms
    64 bytes from 172.25.244.195: icmp_seq=2 ttl=63 time=0.366 ms
    64 bytes from 172.25.244.195: icmp_seq=3 ttl=63 time=0.202 ms

    [root@k8s-node02 ~]# ping 172.25.244.195
    PING 172.25.244.195 (172.25.244.195) 56(84) bytes of data.
    64 bytes from 172.25.244.195: icmp_seq=1 ttl=63 time=2.74 ms
    64 bytes from 172.25.244.195: icmp_seq=2 ttl=63 time=0.398 ms
    64 bytes from 172.25.244.195: icmp_seq=3 ttl=63 time=0.153 ms

Open a shell inside a Pod:

    [root@k8s-master01 ~]# kubectl exec -ti calico-node-chdsf -n kube-system -- sh
    Defaulted container "calico-node" out of: calico-node, upgrade-ipam (init), install-cni (init), flexvol-driver (init)
    sh-4.4#

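Edit the YAML of a resource in place, here the kubernetes-dashboard Service (!$ is bash history expansion for the last argument of the previous command):

    [root@k8s-master01 ~]# kubectl edit svc kubernetes-dashboard -n !$
    kubectl edit svc kubernetes-dashboard -n kubernetes-dashboard
    Edit cancelled, no changes made.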

1.6 kubectl commands in detail

Official reference: https://kubernetes.io/zh/docs/reference/kubectl/cheatsheet/

Create

List the nodes in the cluster:

    [root@k8s-master01 ~]# kubectl get node
    NAME           STATUS   ROLES                  AGE     VERSION
    k8s-master01   Ready    control-plane,master   7h23m   v1.23.17
    k8s-master02   Ready    control-plane,master   7h22m   v1.23.17
    k8s-master03   Ready    control-plane,master   7h22m   v1.23.17
    k8s-node01     Ready    <none>                 7h21m   v1.23.17
    k8s-node02     Ready    <none>                 7h22m   v1.23.17

List the nodes together with their IP addresses:

    [root@k8s-master01 ~]# kubectl get node -owide
    NAME           STATUS   ROLES                  AGE     VERSION    INTERNAL-IP    EXTERNAL-IP   OS-IMAGE                KERNEL-VERSION                CONTAINER-RUNTIME
    k8s-master01   Ready    control-plane,master   7h24m   v1.23.17   192.168.1.8    <none>        CentOS Linux 7 (Core)   4.19.12-1.el7.elrepo.x86_64   containerd://1.6.19
    k8s-master02   Ready    control-plane,master   7h23m   v1.23.17   192.168.1.9    <none>        CentOS Linux 7 (Core)   4.19.12-1.el7.elrepo.x86_64   containerd://1.6.19
    k8s-master03   Ready    control-plane,master   7h23m   v1.23.17   192.168.1.10   <none>        CentOS Linux 7 (Core)   4.19.12-1.el7.elrepo.x86_64   containerd://1.6.19
    k8s-node01     Ready    <none>                 7h22m   v1.23.17   192.168.1.11   <none>        CentOS Linux 7 (Core)   4.19.12-1.el7.elrepo.x86_64   containerd://1.6.19
    k8s-node02     Ready    <none>                 7h22m   v1.23.17   192.168.1.12   <none>        CentOS Linux 7 (Core)   4.19.12-1.el7.elrepo.x86_64   containerd

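If kubectl tab completion is not available, set it up as follows. The install command below is for Debian/Ubuntu. On CentOS hosts like the ones in this cluster, use yum install -y bash-completion instead.

    apt-get install bash-completion

Load completion into the current shell:

    source <(kubectl completion bash)

Make it permanent for future shells:

    echo "source <(kubectl completion bash)" >> ~/.bashrc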
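Running the echo line more than once appends the same line to ~/.bashrc each time. A guarded version avoids that. This is a minimal sketch: the function name enable_completion is made up, and it is demonstrated on a scratch file so that nothing in the home directory is touched.

```shell
#!/usr/bin/env bash
hook='source <(kubectl completion bash)'

# Append the completion hook to the given rc file unless it is already there.
enable_completion() {
  local rc_file="$1"
  touch "$rc_file"
  # -q quiet, -x whole-line match, -F fixed string (no regex interpretation).
  grep -qxF "$hook" "$rc_file" || echo "$hook" >> "$rc_file"
}

# Demonstration on a scratch file; for real use call: enable_completion ~/.bashrc
scratch_rc="$(mktemp)"
enable_completion "$scratch_rc"
enable_completion "$scratch_rc"   # the second call changes nothing
hook_count="$(grep -cxF "$hook" "$scratch_rc")"
echo "hook lines in scratch file: $hook_count"
```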

View the kubeconfig:

    [root@k8s-master01 ~]# kubectl config view
    apiVersion: v1
    clusters:
    - cluster:
        certificate-authority-data: DATA+OMITTED
        server: https://192.168.1.100:16443
      name: kubernetes
    contexts:
    - context:
        cluster: kubernetes
        user: kubernetes-admin
      name: kubernetes-admin@kubernetes
    current-context: kubernetes-admin@kubernetes
    kind: Config
    preferences: {}
    users:
    - name: kubernetes-admin
      user:
        client-certificate-data: REDACTED
        client-key-data: REDACTED

Switch to another cluster by selecting its context (here a context named hk8s):

    kubectl config use-context hk8s

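Create an application from a file:

    kubectl create -f dashboard.yaml

Several files can be created in one go, either comma-separated or with repeated -f flags:

    kubectl create -f dashboard.yaml,user.yaml

    kubectl create -f dashboard.yaml -f user.yaml

If the resource may already exist, use apply instead. It creates the resource or updates it, and does not fail with an "already exists" error:

    kubectl apply -f dashboard.yaml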

Create a Deployment directly from the command line:

    [root@k8s-master01 ~]# kubectl create deployment nginx --image=nginx
    deployment.apps/nginx created

List the Deployments:

    [root@k8s-master01 ~]# kubectl get deploy
    NAME    READY   UP-TO-DATE   AVAILABLE   AGE
    nginx   1/1     1            1           26s

View the nginx Deployment as YAML:

    [root@k8s-master01 ~]# kubectl get deploy nginx -oyaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      annotations:
        deployment.kubernetes.io/revision: "1"
      creationTimestamp: "2023-03-29T09:17:36Z"
      generation: 1
      labels:
        app: nginx
      name: nginx
      namespace: default
      resourceVersion: "52457"
      uid: 0dc4741b-c5b6-49f9-ad2f-af11effa7026
    spec:
      progressDeadlineSeconds: 600
      replicas: 1
      revisionHistoryLimit: 10
      selector:
        matchLabels:
          app: nginx
      strategy:
        rollingUpdate:
          maxSurge: 25%
          maxUnavailable: 25%
        type: RollingUpdate
      template:
        metadata:
          creationTimestamp: null
          labels:
            app: nginx
        spec:
          containers:
          - image: nginx
            imagePullPolicy: Always
            name: nginx
            resources: {}
            terminationMessagePath: /dev/termination-log
            terminationMessagePolicy: File
          dnsPolicy: ClusterFirst
          restartPolicy: Always
          schedulerName: default-scheduler
          securityContext: {}
          terminationGracePeriodSeconds: 30
    status:
      availableReplicas: 1
      conditions:
      - lastTransitionTime: "2023-03-29T09:17:55Z"
        lastUpdateTime: "2023-03-29T09:17:55Z"
        message: Deployment has minimum availability.
        reason: MinimumReplicasAvailable
        status: "True"
        type: Available
      - lastTransitionTime: "2023-03-29T09:17:36Z"
        lastUpdateTime: "2023-03-29T09:17:55Z"
        message: ReplicaSet "nginx-85b98978db" has successfully progressed.
        reason: NewReplicaSetAvailable
        status: "True"
        type: Progressing
      observedGeneration: 1
      readyReplicas: 1
      replicas: 1
      updatedReplicas: 1

Generate the YAML without creating the resource. The bare --dry-run flag still works here but is deprecated, as the warning shows:

    [root@k8s-master01 ~]# kubectl create deployment nginx --image=nginx --dry-run -oyaml
    W0329 17:25:49.903849   94990 helpers.go:622] --dry-run is deprecated and can be replaced with --dry-run=client.
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      creationTimestamp: null
      labels:
        app: nginx
      name: nginx
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: nginx
      strategy: {}
      template:
        metadata:
          creationTimestamp: null
          labels:
            app: nginx
        spec:
          containers:
          - image: nginx
            name: nginx
            resources: {}
    status: {}

The same with the recommended form, --dry-run=client:

    [root@k8s-master01 ~]# kubectl create deployment nginx2 --image=nginx --dry-run=client -oyaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      creationTimestamp: null
      labels:
        app: nginx2
      name: nginx2
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: nginx2
      strategy: {}
      template:
        metadata:
          creationTimestamp: null
          labels:
            app: nginx2
        spec:
          containers:
          - image: nginx
            name: nginx
            resources: {}
    status: {}

Generate the YAML without creating the resource, and write it to a file:

    kubectl create deployment nginx2 --image=nginx --dry-run=client -oyaml > nginx2-dp.yaml
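The point of writing the manifest to a file is that it can be adjusted before anything is created. The sketch below uses a scratch copy of the generated YAML shown above and changes two fields with sed. The replica count and image tag are illustrative values, and GNU sed is assumed.

```shell
#!/usr/bin/env bash
# Stand-in for the file produced by
#   kubectl create deployment nginx2 --image=nginx --dry-run=client -oyaml > nginx2-dp.yaml
# (content copied from the output shown above, written to a scratch directory).
workdir="$(mktemp -d)"
manifest="$workdir/nginx2-dp.yaml"
cat > "$manifest" <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  creationTimestamp: null
  labels:
    app: nginx2
  name: nginx2
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx2
  strategy: {}
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: nginx2
    spec:
      containers:
      - image: nginx
        name: nginx
        resources: {}
status: {}
EOF

# Adjust the generated manifest before creating anything:
# three replicas and a pinned image tag (both values are illustrative).
sed -i -e 's/^  replicas: 1$/  replicas: 3/' \
       -e 's/^      - image: nginx$/      - image: nginx:1.23/' "$manifest"

grep -E 'replicas:|image:' "$manifest"
# The edited file would then be applied with: kubectl apply -f nginx2-dp.yaml
```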

Delete

Delete the Deployment created above:

    [root@k8s-master01 ~]# kubectl delete deploy nginx
    deployment.apps "nginx" deleted

Delete the resources defined in a YAML file:

    [root@k8s-master01 ~]# kubectl delete -f user.yaml

Delete a Pod in a specific namespace:

    [root@k8s-master01 ~]# kubectl delete po dashboard-metrics-scraper-7fcdff5f4c-7q7wv -n kubernetes-dashboard
    pod "dashboard-metrics-scraper-7fcdff5f4c-7q7wv" deleted

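If the YAML file does not specify a namespace, you must pass the namespace when deleting as well. If the file already specifies it, there is no need to pass it again:

    [root@k8s-master01 ~]# kubectl delete -f user.yaml -n kubernetes-system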

View

List the Services:

    [root@k8s-master01 ~]# kubectl get services
    NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
    kubernetes   ClusterIP   10.244.0.1   <none>        443/TCP   8h

The resource name can be abbreviated:

    [root@k8s-master01 ~]# kubectl get svc
    NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
    kubernetes   ClusterIP   10.244.0.1   <none>        443/TCP   8h

List the Services in a specific namespace:

    [root@k8s-master01 ~]# kubectl get svc -n kube-system
    NAME             TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)                  AGE
    calico-typha     ClusterIP   10.244.42.134   <none>        5473/TCP                 8h
    kube-dns         ClusterIP   10.244.0.10     <none>        53/UDP,53/TCP,9153/TCP   8h
    metrics-server   ClusterIP   10.244.225.26   <none>        443/TCP                  8h

List the Services in all namespaces:

    [root@k8s-master01 ~]# kubectl get svc -A
    NAMESPACE              NAME                        TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)                  AGE
    default                kubernetes                  ClusterIP   10.244.0.1      <none>        443/TCP                  8h
    kube-system            calico-typha                ClusterIP   10.244.42.134   <none>        5473/TCP                 8h
    kube-system            kube-dns                    ClusterIP   10.244.0.10     <none>        53/UDP,53/TCP,9153/TCP   8h
    kube-system            metrics-server              ClusterIP   10.244.225.26   <none>        443/TCP                  8h
    kubernetes-dashboard   dashboard-metrics-scraper   ClusterIP   10.244.183.40   <none>        8000/TCP                 8h
    kubernetes-dashboard   kubernetes-dashboard        NodePort    10.244.3.158    <none>        443:30374/TCP            8h

List the Pods in all namespaces:

    [root@k8s-master01 ~]# kubectl get po -A
    NAMESPACE              NAME                                         READY   STATUS    RESTARTS     AGE
    kube-system            calico-kube-controllers-6f6595874c-j9hdl     1/1     Running   0            8h
    kube-system            calico-node-2d5p7                            1/1     Running   0            8h
    kube-system            calico-node-7n5mw                            1/1     Running   0            8h
    kube-system            calico-node-9pdmh                            1/1     Running   0            8h
    kube-system            calico-node-b8sh2                            1/1     Running   0            8h
    kube-system            calico-node-chdsf                            1/1     Running   0            8h
    kube-system            calico-typha-6b6cf8cbdf-llhvd                1/1     Running   0            8h
    kube-system            coredns-65c54cc984-8t757                     1/1     Running   0            8h
    kube-system            coredns-65c54cc984-mf4nd                     1/1     Running   0            8h
    kube-system            etcd-k8s-master01                            1/1     Running   0            8h
    kube-system            etcd-k8s-master02                            1/1     Running   0            8h
    kube-system            etcd-k8s-master03                            1/1     Running   0            8h
    kube-system            kube-apiserver-k8s-master01                  1/1     Running   0            8h
    kube-system            kube-apiserver-k8s-master02                  1/1     Running   0            8h
    kube-system            kube-apiserver-k8s-master03                  1/1     Running   1 (8h ago)   8h
    kube-system            kube-controller-manager-k8s-master01         1/1     Running   1 (8h ago)   8h
    kube-system            kube-controller-manager-k8s-master02         1/1     Running   0            8h
    kube-system            kube-controller-manager-k8s-master03         1/1     Running   0            8h
    kube-system            kube-proxy-5vp9t                             1/1     Running   0            8h
    kube-system            kube-proxy-65s6x                             1/1     Running   0            8h
    kube-system            kube-proxy-lzj8c                             1/1     Running   0            8h
    kube-system            kube-proxy-r56m7                             1/1     Running   0            8h
    kube-system            kube-proxy-sbdm2                             1/1     Running   0            8h
    kube-system            kube-scheduler-k8s-master01                  1/1     Running   1 (8h ago)   8h
    kube-system            kube-scheduler-k8s-master02                  1/1     Running   0            8h
    kube-system            kube-scheduler-k8s-master03                  1/1     Running   0            8h
    kube-system            metrics-server-5cf8885b66-jpd6z              1/1     Running   0            8h
    kubernetes-dashboard   dashboard-metrics-scraper-7fcdff5f4c-45zfn   1/1     Running   0            6m17s
    kubernetes-dashboard   kubernetes-dashboard-85f59f8ff7-gws8d        1/1     Running   0            8h

List the Pods in all namespaces together with their IP addresses:

    [root@k8s-master01 ~]# kubectl get po -A -owide
    NAMESPACE              NAME                                         READY   STATUS    RESTARTS     AGE     IP               NODE           NOMINATED NODE   READINESS GATES
    kube-system            calico-kube-controllers-6f6595874c-j9hdl     1/1     Running   0            8h      172.25.244.195   k8s-master01   <none>           <none>
    kube-system            calico-node-2d5p7                            1/1     Running   0            8h      192.168.1.9      k8s-master02   <none>           <none>
    kube-system            calico-node-7n5mw                            1/1     Running   0            8h      192.168.1.8      k8s-master01   <none>           <none>
    kube-system            calico-node-9pdmh                            1/1     Running   0            8h      192.168.1.11     k8s-node01     <none>           <none>
    kube-system            calico-node-b8sh2                            1/1     Running   0            8h      192.168.1.12     k8s-node02     <none>           <none>
    kube-system            calico-node-chdsf                            1/1     Running   0            8h      192.168.1.10     k8s-master03   <none>           <none>
    kube-system            calico-typha-6b6cf8cbdf-llhvd                1/1     Running   0            8h      192.168.1.12     k8s-node02     <none>           <none>
    kube-system            coredns-65c54cc984-8t757                     1/1     Running   0            8h      172.25.244.194   k8s-master01   <none>           <none>
    kube-system            coredns-65c54cc984-mf4nd                     1/1     Running   0            8h      172.25.244.193   k8s-master01   <none>           <none>
    kube-system            etcd-k8s-master01                            1/1     Running   0            8h      192.168.1.8      k8s-master01   <none>           <none>
    kube-system            etcd-k8s-master02                            1/1     Running   0            8h      192.168.1.9      k8s-master02   <none>           <none>
    kube-system            etcd-k8s-master03                            1/1     Running   0            8h      192.168.1.10     k8s-master03   <none>           <none>
    kube-system            kube-apiserver-k8s-master01                  1/1     Running   0            8h      192.168.1.8      k8s-master01   <none>           <none>
    kube-system            kube-apiserver-k8s-master02                  1/1     Running   0            8h      192.168.1.9      k8s-master02   <none>           <none>
    kube-system            kube-apiserver-k8s-master03                  1/1     Running   1 (8h ago)   8h      192.168.1.10     k8s-master03   <none>           <none>
    kube-system            kube-controller-manager-k8s-master01         1/1     Running   1 (8h ago)   8h      192.168.1.8      k8s-master01   <none>           <none>
    kube-system            kube-controller-manager-k8s-master02         1/1     Running   0            8h      192.168.1.9      k8s-master02   <none>           <none>
    kube-system            kube-controller-manager-k8s-master03         1/1     Running   0            8h      192.168.1.10     k8s-master03   <none>           <none>
    kube-system            kube-proxy-5vp9t                             1/1     Running   0            8h      192.168.1.12     k8s-node02     <none>           <none>
    kube-system            kube-proxy-65s6x                             1/1     Running   0            8h      192.168.1.11     k8s-node01     <none>           <none>
    kube-system            kube-proxy-lzj8c                             1/1     Running   0            8h      192.168.1.10     k8s-master03   <none>           <none>
    kube-system            kube-proxy-r56m7                             1/1     Running   0            8h      192.168.1.8      k8s-master01   <none>           <none>
    kube-system            kube-proxy-sbdm2                             1/1     Running   0            8h      192.168.1.9      k8s-master02   <none>           <none>
    kube-system            kube-scheduler-k8s-master01                  1/1     Running   1 (8h ago)   8h      192.168.1.8      k8s-master01   <none>           <none>
    kube-system            kube-scheduler-k8s-master02                  1/1     Running   0            8h      192.168.1.9      k8s-master02   <none>           <none>
    kube-system            kube-scheduler-k8s-master03                  1/1     Running   0            8h      192.168.1.10     k8s-master03   <none>           <none>
    kube-system            metrics-server-5cf8885b66-jpd6z              1/1     Running   0            8h      172.17.125.1     k8s-node01     <none>           <none>
    kubernetes-dashboard   dashboard-metrics-scraper-7fcdff5f4c-45zfn   1/1     Running   0            7m24s   172.17.125.4     k8s-node01     <none>           <none>
    kubernetes-dashboard   kubernetes-dashboard-85f59f8ff7-gws8d        1/1     Running   0            8h      172.17.125.2     k8s-node0

List the nodes together with their IP addresses:

    [root@k8s-master01 ~]# kubectl get node -owide
    NAME           STATUS   ROLES                  AGE   VERSION    INTERNAL-IP    EXTERNAL-IP   OS-IMAGE                KERNEL-VERSION                CONTAINER-RUNTIME
    k8s-master01   Ready    control-plane,master   8h    v1.23.17   192.168.1.8    <none>        CentOS Linux 7 (Core)   4.19.12-1.el7.elrepo.x86_64   containerd://1.6.19
    k8s-master02   Ready    control-plane,master   8h    v1.23.17   192.168.1.9    <none>        CentOS Linux 7 (Core)   4.19.12-1.el7.elrepo.x86_64   containerd://1.6.19
    k8s-master03   Ready    control-plane,master   8h    v1.23.17   192.168.1.10   <none>        CentOS Linux 7 (Core)   4.19.12-1.el7.elrepo.x86_64   containerd://1.6.19
    k8s-node01     Ready    <none>                 8h    v1.23.17   192.168.1.11   <none>        CentOS Linux 7 (Core)   4.19.12-1.el7.elrepo.x86_64   containerd://1.6.19
    k8s-node02     Ready    <none>                 8h    v1.23.17   192.168.1.12   <none>        CentOS Linux 7 (Core)   4.19.12-1.el7.elrepo.x86_64   container

List a resource that is not namespaced. ClusterRoles are cluster-scoped, so no namespace is given:

    [root@k8s-master01 ~]# kubectl get clusterrole
    NAME                                                                   CREATED AT
    admin                                                                  2023-03-29T01:25:52Z
    calico-kube-controllers                                                2023-03-29T01:28:32Z
    calico-node                                                            2023-03-29T01:28:32Z
    cluster-admin                                                          2023-03-29T01:25:52Z
    edit                                                                   2023-03-29T01:25:52Z
    kubeadm:get-nodes                                                      2023-03-29T01:26:10Z
    kubernetes-dashboard                                                   2023-03-29T01:32:49Z
    system:aggregate-to-admin                                              2023-03-29T01:25:52Z
    system:aggregate-to-edit                                               2023-03-29T01:25:52Z
    system:aggregate-to-view                                               2023-03-29T01:25:52Z
    system:aggregated-metrics-reader                                       2023-03-29T01:31:53Z
    system:auth-delegator                                                  2023-03-29T01:25:52Z
    system:basic-user                                                      2023-03-29T01:25:52Z
    system:certificates.k8s.io:certificatesigningrequests:nodeclient       2023-03-29T01:25:52Z
    system:certificates.k8s.io:certificatesigningrequests:selfnodeclient   2023-03-29T01:25:52Z
    system:certificates.k8s.io:kube-apiserver-client-approver              2023-03-29T01:25:52Z
    system:certificates.k8s.io:kube-apiserver-client-kubelet-approver      2023-03-29T01:25:52Z
    system:certificates.k8s.io:kubelet-serving-approver                    2023-03-29T01:25:52Z
    system:certificates.k8s.io:legacy-unknown-approver                     2023-03-29T01:25:52Z
    system:controller:attachdetach-controller                              2023-03-29T01:25:52Z
    system:controller:certificate-controller                               2023-03-29T01:25:52Z
    system:controller:clusterrole-aggregation-controller                   2023-03-29T01:25:52Z
    system:controller:cronjob-controller                                   2023-03-29T01:25:52Z
    system:controller:daemon-set-controller                                2023-03-29T01:25:52Z
    system:controller:deployment-controller                                2023-03-29T01:25:52Z
    system:controller:disruption-controller                                2023-03-29T01:25:52Z
    system:controller:endpoint-controller                                  2023-03-29T01:25:52Z
    system:controller:endpointslice-controller                             2023-03-29T01:25:52Z
    system:controller:endpointslicemirroring-controller                    2023-03-29T01:25:52Z
    system:controller:ephemeral-volume-controller                          2023-03-29T01:25:52Z
    system:controller:expand-controller                                    2023-03-29T01:25:52Z
    system:controller:generic-garbage-collector                            2023-03-29T01:25:52Z
    system:controller:horizontal-pod-autoscaler                            2023-03-29T01:25:52Z
    system:controller:job-controller                                       2023-03-29T01:25:52Z
    system:controller:namespace-controller                                 2023-03-29T01:25:52Z
    system:controller:node-controller                                      2023-03-29T01:25:52Z
    system:controller:persistent-volume-binder                             2023-03-29T01:25:52Z
    system:controller:pod-garbage-collector                                2023-03-29T01:25:52Z
    system:controller:pv-protection-controller                             2023-03-29T01:25:52Z
    system:controller:pvc-protection-controller                            2023-03-29T01:25:52Z
    system:controller:replicaset-controller                                2023-03-29T01:25:52Z
    system:controller:replication-controller                               2023-03-29T01:25:52Z
    system:controller:resourcequota-controller                             2023-03-29T01:25:52Z
    system:controller:root-ca-cert-publisher                               2023-03-29T01:25:52Z
    system:controller:route-controller                                     2023-03-29T01:25:52Z
    system:controller:service-account-controller                           2023-03-29T01:25:52Z
    system:controller:service-controller                                   2023-03-29T01:25:52Z
    system:controller:statefulset-controller                               2023-03-29T01:25:52Z
    system:controller:ttl-after-finished-controller                        2023-03-29T01:25:52Z
    system:controller:ttl-controller                                       2023-03-29T01:25:52Z
    system:coredns                                                         2023-03-29T01:26:10Z
    system:discovery                                                       2023-03-29T01:25:52Z
    system:heapster                                                        2023-03-29T01:25:52Z
    system:kube-aggregator                                                 2023-03-29T01:25:52Z
    system:kube-controller-manager                                         2023-03-29T01:25:52Z
    system:kube-dns                                                        2023-03-29T01:25:52Z
    system:kube-scheduler                                                  2023-03-29T01:25:52Z
    system:kubelet-api-admin                                               2023-03-29T01:25:52Z
    system:metrics-server                                                  2023-03-29T01:31:53Z
    system:monitoring                                                      2023-03-29T01:25:52Z
    system:node                                                            2023-03-29T01:25:52Z
    system:node-bootstrapper                                               2023-03-29T01:25:52Z
    system:node-problem-detector                                           2023-03-29T01:25:52Z
    system:node-proxier                                                    2023-03-29T01:25:52Z
    system:persistent-volume-provisioner                                   2023-03-29T01:25:52Z
    system:public-info-viewer                                              2023-03-29T01:25:52Z
    system:service-account-issuer-discovery                                2023-03-29T01:25:52Z
    system:volume-scheduler                                                2023-03-29T01:25:52Z
    view                                                                   2023-03-29T01:25:52Z

List the resource types that are namespaced (isolated per namespace):

[root@k8s-master01 ~]# kubectl api-resources --namespaced=true NAME SHORTNAMES APIVERSION NAMESPACED KIND bindings v1 true Binding configmaps cm v1 true ConfigMap endpoints ep v1 true Endpoints events ev v1 true Event limitranges limits v1 true LimitRange persistentvolumeclaims pvc v1 true PersistentVolumeClaim pods po v1 true Pod podtemplates v1 true PodTemplate replicationcontrollers rc v1 true ReplicationController resourcequotas quota v1 true ResourceQuota secrets v1 true Secret serviceaccounts sa v1 true ServiceAccount services svc v1 true Service controllerrevisions apps/v1 true ControllerRevision daemonsets ds apps/v1 true DaemonSet deployments deploy apps/v1 true Deployment replicasets rs apps/v1 true ReplicaSet statefulsets sts apps/v1 true StatefulSet localsubjectaccessreviews authorization.k8s.io/v1 true LocalSubjectAccessReview horizontalpodautoscalers hpa autoscaling/v2 true HorizontalPodAutoscaler cronjobs cj batch/v1 true CronJob jobs batch/v1 true Job leases coordination.k8s.io/v1 true Lease networkpolicies crd.projectcalico.org/v1 true NetworkPolicy networksets crd.projectcalico.org/v1 true NetworkSet endpointslices discovery.k8s.io/v1 true EndpointSlice events ev events.k8s.io/v1 true Event pods metrics.k8s.io/v1beta1 true PodMetrics ingresses ing networking.k8s.io/v1 true Ingress networkpolicies netpol networking.k8s.io/v1 true NetworkPolicy poddisruptionbudgets pdb policy/v1 true PodDisruptionBudget rolebindings rbac.authorization.k8s.io/v1 true RoleBinding roles rbac.authorization.k8s.io/v1 true Role csistoragecapacities storage.k8s.io/v1beta1 true CSIStorageCapacity

List the resource types that are not namespaced (cluster-scoped):

[root@k8s-master01 ~]# kubectl api-resources --namespaced=false NAME SHORTNAMES APIVERSION NAMESPACED KIND componentstatuses cs v1 false ComponentStatus namespaces ns v1 false Namespace nodes no v1 false Node persistentvolumes pv v1 false PersistentVolume mutatingwebhookconfigurations admissionregistration.k8s.io/v1 false MutatingWebhookConfiguration validatingwebhookconfigurations admissionregistration.k8s.io/v1 false ValidatingWebhookConfiguration customresourcedefinitions crd,crds apiextensions.k8s.io/v1 false CustomResourceDefinition apiservices apiregistration.k8s.io/v1 false APIService tokenreviews authentication.k8s.io/v1 false TokenReview selfsubjectaccessreviews authorization.k8s.io/v1 false SelfSubjectAccessReview selfsubjectrulesreviews authorization.k8s.io/v1 false SelfSubjectRulesReview subjectaccessreviews authorization.k8s.io/v1 false SubjectAccessReview certificatesigningrequests csr certificates.k8s.io/v1 false CertificateSigningRequest bgpconfigurations crd.projectcalico.org/v1 false BGPConfiguration bgppeers crd.projectcalico.org/v1 false BGPPeer blockaffinities crd.projectcalico.org/v1 false BlockAffinity caliconodestatuses crd.projectcalico.org/v1 false CalicoNodeStatus clusterinformations crd.projectcalico.org/v1 false ClusterInformation felixconfigurations crd.projectcalico.org/v1 false FelixConfiguration globalnetworkpolicies crd.projectcalico.org/v1 false GlobalNetworkPolicy globalnetworksets crd.projectcalico.org/v1 false GlobalNetworkSet hostendpoints crd.projectcalico.org/v1 false HostEndpoint ipamblocks crd.projectcalico.org/v1 false IPAMBlock ipamconfigs crd.projectcalico.org/v1 false IPAMConfig ipamhandles crd.projectcalico.org/v1 false IPAMHandle ippools crd.projectcalico.org/v1 false IPPool ipreservations crd.projectcalico.org/v1 false IPReservation kubecontrollersconfigurations crd.projectcalico.org/v1 false KubeControllersConfiguration flowschemas flowcontrol.apiserver.k8s.io/v1beta2 false FlowSchema prioritylevelconfigurations 
flowcontrol.apiserver.k8s.io/v1beta2 false PriorityLevelConfiguration nodes metrics.k8s.io/v1beta1 false NodeMetrics ingressclasses networking.k8s.io/v1 false IngressClass runtimeclasses node.k8s.io/v1 false RuntimeClass podsecuritypolicies psp policy/v1beta1 false PodSecurityPolicy clusterrolebindings rbac.authorization.k8s.io/v1 false ClusterRoleBinding clusterroles rbac.authorization.k8s.io/v1 false ClusterRole priorityclasses pc scheduling.k8s.io/v1 false PriorityClass csidrivers storage.k8s.io/v1 false CSIDriver csinodes storage.k8s.io/v1 false CSINode storageclasses sc storage.k8s.io/v1 false StorageClass volumeattachments storage.k8s.io/v1 false VolumeAt

Sort the output by a field, here the Service name:

[root@k8s-master01 ~]# kubectl get svc -n kube-system --sort-by=.metadata.name NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE calico-typha ClusterIP 10.244.42.134 <none> 5473/TCP 8h kube-dns ClusterIP 10.244.0.10 <none> 53/UDP,53/TCP,9153/TCP 8h metrics-server ClusterIP 10.244.225.26 <none> 443/TCP 8h

Sort Pods by name:

[root@k8s-master01 ~]# kubectl get po -n kube-system --sort-by=.metadata.name NAME READY STATUS RESTARTS AGE calico-kube-controllers-6f6595874c-j9hdl 1/1 Running 0 8h calico-node-2d5p7 1/1 Running 0 8h calico-node-7n5mw 1/1 Running 0 8h calico-node-9pdmh 1/1 Running 0 8h calico-node-b8sh2 1/1 Running 0 8h calico-node-chdsf 1/1 Running 0 8h calico-typha-6b6cf8cbdf-llhvd 1/1 Running 0 8h coredns-65c54cc984-8t757 1/1 Running 0 8h coredns-65c54cc984-mf4nd 1/1 Running 0 8h etcd-k8s-master01 1/1 Running 0 8h etcd-k8s-master02 1/1 Running 0 8h etcd-k8s-master03 1/1 Running 0 8h kube-apiserver-k8s-master01 1/1 Running 0 8h kube-apiserver-k8s-master02 1/1 Running 0 8h kube-apiserver-k8s-master03 1/1 Running 1 (8h ago) 8h kube-controller-manager-k8s-master01 1/1 Running 1 (8h ago) 8h kube-controller-manager-k8s-master02 1/1 Running 0 8h kube-controller-manager-k8s-master03 1/1 Running 0 8h kube-proxy-5vp9t 1/1 Running 0 8h kube-proxy-65s6x 1/1 Running 0 8h kube-proxy-lzj8c 1/1 Running 0 8h kube-proxy-r56m7 1/1 Running 0 8h kube-proxy-sbdm2 1/1 Running 0 8h kube-scheduler-k8s-master01 1/1 Running 1 (8h ago) 8h kube-scheduler-k8s-master02 1/1 Running 0 8h kube-scheduler-k8s-master03 1/1 Running 0 8h metrics-server-5cf8885b66-jpd6z 1/1 Running 0 8h

Sort Pods by restart count (of the first container in each Pod):

[root@k8s-master01 ~]# kubectl get pods -n kube-system --sort-by='.status.containerStatuses[0].restartCount' NAME READY STATUS RESTARTS AGE kube-apiserver-k8s-master01 1/1 Running 0 8h metrics-server-5cf8885b66-jpd6z 1/1 Running 0 8h calico-kube-controllers-6f6595874c-j9hdl 1/1 Running 0 8h calico-node-9pdmh 1/1 Running 0 8h calico-node-b8sh2 1/1 Running 0 8h calico-node-chdsf 1/1 Running 0 8h calico-typha-6b6cf8cbdf-llhvd 1/1 Running 0 8h coredns-65c54cc984-8t757 1/1 Running 0 8h coredns-65c54cc984-mf4nd 1/1 Running 0 8h etcd-k8s-master01 1/1 Running 0 8h etcd-k8s-master02 1/1 Running 0 8h etcd-k8s-master03 1/1 Running 0 8h calico-node-7n5mw 1/1 Running 0 8h calico-node-2d5p7 1/1 Running 0 8h kube-proxy-r56m7 1/1 Running 0 8h kube-apiserver-k8s-master02 1/1 Running 0 8h kube-controller-manager-k8s-master02 1/1 Running 0 8h kube-controller-manager-k8s-master03 1/1 Running 0 8h kube-proxy-5vp9t 1/1 Running 0 8h kube-proxy-65s6x 1/1 Running 0 8h kube-proxy-lzj8c 1/1 Running 0 8h kube-scheduler-k8s-master03 1/1 Running 0 8h kube-proxy-sbdm2 1/1 Running 0 8h kube-scheduler-k8s-master02 1/1 Running 0 8h kube-scheduler-k8s-master01 1/1 Running 1 (8h ago) 8h kube-apiserver-k8s-master03 1/1 Running 1 (8h ago) 8h kube-controller-manager-k8s-master01 1/1 Running 1 (8h ago) 8h

Show the labels on the Pods:

[root@k8s-master01 ~]# kubectl get po -n kube-system --show-labels NAME READY STATUS RESTARTS AGE LABELS calico-kube-controllers-6f6595874c-j9hdl 1/1 Running 0 8h k8s-app=calico-kube-controllers,pod-template-hash=6f6595874c calico-node-2d5p7 1/1 Running 0 8h controller-revision-hash=68587cbb67,k8s-app=calico-node,pod-template-generation=1 calico-node-7n5mw 1/1 Running 0 8h controller-revision-hash=68587cbb67,k8s-app=calico-node,pod-template-generation=1 calico-node-9pdmh 1/1 Running 0 8h controller-revision-hash=68587cbb67,k8s-app=calico-node,pod-template-generation=1 calico-node-b8sh2 1/1 Running 0 8h controller-revision-hash=68587cbb67,k8s-app=calico-node,pod-template-generation=1 calico-node-chdsf 1/1 Running 0 8h controller-revision-hash=68587cbb67,k8s-app=calico-node,pod-template-generation=1 calico-typha-6b6cf8cbdf-llhvd 1/1 Running 0 8h k8s-app=calico-typha,pod-template-hash=6b6cf8cbdf coredns-65c54cc984-8t757 1/1 Running 0 8h k8s-app=kube-dns,pod-template-hash=65c54cc984 coredns-65c54cc984-mf4nd 1/1 Running 0 8h k8s-app=kube-dns,pod-template-hash=65c54cc984 etcd-k8s-master01 1/1 Running 0 8h component=etcd,tier=control-plane etcd-k8s-master02 1/1 Running 0 8h component=etcd,tier=control-plane etcd-k8s-master03 1/1 Running 0 8h component=etcd,tier=control-plane kube-apiserver-k8s-master01 1/1 Running 0 8h component=kube-apiserver,tier=control-plane kube-apiserver-k8s-master02 1/1 Running 0 8h component=kube-apiserver,tier=control-plane kube-apiserver-k8s-master03 1/1 Running 1 (8h ago) 8h component=kube-apiserver,tier=control-plane kube-controller-manager-k8s-master01 1/1 Running 1 (8h ago) 8h component=kube-controller-manager,tier=control-plane kube-controller-manager-k8s-master02 1/1 Running 0 8h component=kube-controller-manager,tier=control-plane kube-controller-manager-k8s-master03 1/1 Running 0 8h component=kube-controller-manager,tier=control-plane kube-proxy-5vp9t 1/1 Running 0 8h 
controller-revision-hash=57cf69df67,k8s-app=kube-proxy,pod-template-generation=3 kube-proxy-65s6x 1/1 Running 0 8h controller-revision-hash=57cf69df67,k8s-app=kube-proxy,pod-template-generation=3 kube-proxy-lzj8c 1/1 Running 0 8h controller-revision-hash=57cf69df67,k8s-app=kube-proxy,pod-template-generation=3 kube-proxy-r56m7 1/1 Running 0 8h controller-revision-hash=57cf69df67,k8s-app=kube-proxy,pod-template-generation=3 kube-proxy-sbdm2 1/1 Running 0 8h controller-revision-hash=57cf69df67,k8s-app=kube-proxy,pod-template-generation=3 kube-scheduler-k8s-master01 1/1 Running 1 (8h ago) 8h component=kube-scheduler,tier=control-plane kube-scheduler-k8s-master02 1/1 Running 0 8h component=kube-scheduler,tier=control-plane kube-scheduler-k8s-master03 1/1 Running 0 8h component=kube-scheduler,tier=control-plane metrics-server-5cf8885b66-jpd6z 1/1 Running 0 8h k8s-app=metrics-server,pod-template-hash=5cf8885b66

List the Pods that carry the specified labels:

[root@k8s-master01 ~]# kubectl get po -n kube-system -l component=etcd,tier=control-plane NAME READY STATUS RESTARTS AGE etcd-k8s-master01 1/1 Running 0 8h etcd-k8s-master02 1/1 Running 0 8h etcd-k8s-master03 1/1 Running 0 8h

List the Pods whose component label is not etcd but which still have tier=control-plane:

[root@k8s-master01 ~]# kubectl get po -n kube-system -l component!=etcd,tier=control-plane NAME READY STATUS RESTARTS AGE kube-apiserver-k8s-master01 1/1 Running 0 8h kube-apiserver-k8s-master02 1/1 Running 0 8h kube-apiserver-k8s-master03 1/1 Running 1 (8h ago) 8h kube-controller-manager-k8s-master01 1/1 Running 1 (8h ago) 8h kube-controller-manager-k8s-master02 1/1 Running 0 8h kube-controller-manager-k8s-master03 1/1 Running 0 8h kube-scheduler-k8s-master01 1/1 Running 1 (8h ago) 8h kube-scheduler-k8s-master02 1/1 Running 0 8h kube-scheduler-k8s-master03 1/1 Running 0 8h

From the same label selection, keep only the Pods that are Running:

[root@k8s-master01 ~]# kubectl get po -n kube-system -l component!=etcd,tier=control-plane | grep Running kube-apiserver-k8s-master01 1/1 Running 0 8h kube-apiserver-k8s-master02 1/1 Running 0 8h kube-apiserver-k8s-master03 1/1 Running 1 (8h ago) 8h kube-controller-manager-k8s-master01 1/1 Running 1 (8h ago) 8h kube-controller-manager-k8s-master02 1/1 Running 0 8h kube-controller-manager-k8s-master03 1/1 Running 0 8h kube-scheduler-k8s-master01 1/1 Running 1 (8h ago) 8h kube-scheduler-k8s-master02 1/1 Running 0 8h kube-scheduler-k8s-master03 1/1 Running 0 8h
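Because grep Running matches the word anywhere in a line, a Pod whose name happened to contain "Running" would also pass the filter. A stricter variant compares only the STATUS column. The sketch below is a minimal illustration: the helper name running_only is hypothetical, and it assumes the default kubectl get po column order (NAME, READY, STATUS, ...). kubectl also accepts --field-selector=status.phase=Running, which filters by phase on the server side.

```shell
#!/usr/bin/env bash
# Hypothetical helper: keep the header row plus the rows whose third column
# (STATUS in the default `kubectl get po` layout) is exactly "Running".
running_only() {
  awk 'NR == 1 || $3 == "Running"'
}

# Intended use on a cluster (not executed here):
#   kubectl get po -n kube-system -l 'component!=etcd,tier=control-plane' | running_only
```

The header row is kept on purpose so the filtered table is still readable.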

Updating

Update a resource, here the image of a Deployment:

[root@k8s-master01 ~]# kubectl set image deploy nginx nginx=nginx:v2 deployment.apps/nginx image updated

Update a resource by editing its YAML in an editor:

[root@k8s-master01 ~]# kubectl edit deploy nginx

deployment.apps/nginx edited

In the default vi editor, hold Shift and press Z twice (ZZ) to save and quit.

Check whether the change took effect:

[root@k8s-master01 ~]# kubectl get deploy nginx -oyaml apiVersion: apps/v1 kind: Deployment metadata: annotations: deployment.kubernetes.io/revision: "3" creationTimestamp: "2023-03-29T10:09:36Z" generation: 3 labels: app: nginx name: nginx namespace: default resourceVersion: "58587" uid: 7d4b0fd1-e8fe-4563-847a-42445517f0ba spec: progressDeadlineSeconds: 600 replicas: 1 revisionHistoryLimit: 10 selector: matchLabels: app: nginx strategy: rollingUpdate: maxSurge: 25% maxUnavailable: 25% type: RollingUpdate template: metadata: creationTimestamp: null labels: app: nginx spec: containers: - image: nginx imagePullPolicy: Always name: nginx resources: {} terminationMessagePath: /dev/termination-log terminationMessagePolicy: File dnsPolicy: ClusterFirst restartPolicy: Always schedulerName: default-scheduler securityContext: {} terminationGracePeriodSeconds: 30 status: availableReplicas: 1 conditions: - lastTransitionTime: "2023-03-29T10:09:58Z" lastUpdateTime: "2023-03-29T10:09:58Z" message: Deployment has minimum availability. reason: MinimumReplicasAvailable status: "True" type: Available - lastTransitionTime: "2023-03-29T10:09:36Z" lastUpdateTime: "2023-03-29T10:13:21Z" message: ReplicaSet "nginx-85b98978db" has successfully progressed. reason: NewReplicaSetAvailable status: "True" type: Progressing observedGeneration: 3 readyReplicas: 1 replicas: 1 updatedReplicas: 1

View a Pod's logs (-f follows the log stream):

[root@k8s-master01 ~]# kubectl logs -f kube-proxy-sbdm2 -n kube-system I0329 01:40:48.699967 1 node.go:163] Successfully retrieved node IP: 192.168.1.9 I0329 01:40:48.700031 1 server_others.go:138] "Detected node IP" address="192.168.1.9" I0329 01:40:48.717647 1 server_others.go:269] "Using ipvs Proxier" I0329 01:40:48.717676 1 server_others.go:271] "Creating dualStackProxier for ipvs" I0329 01:40:48.717686 1 server_others.go:502] "Detect-local-mode set to ClusterCIDR, but no IPv6 cluster CIDR defined, , defaulting to no-op detect-local for IPv6" I0329 01:40:48.717956 1 proxier.go:435] "IPVS scheduler not specified, use rr by default" I0329 01:40:48.718061 1 proxier.go:435] "IPVS scheduler not specified, use rr by default" I0329 01:40:48.718073 1 ipset.go:113] "Ipset name truncated" ipSetName="KUBE-6-LOAD-BALANCER-SOURCE-CIDR" truncatedName="KUBE-6-LOAD-BALANCER-SOURCE-CID" I0329 01:40:48.718117 1 ipset.go:113] "Ipset name truncated" ipSetName="KUBE-6-NODE-PORT-LOCAL-SCTP-HASH" truncatedName="KUBE-6-NODE-PORT-LOCAL-SCTP-HAS" I0329 01:40:48.718280 1 server.go:656] "Version info" version="v1.23.17" I0329 01:40:48.720165 1 conntrack.go:52] "Setting nf_conntrack_max" nf_conntrack_max=131072 I0329 01:40:48.720627 1 config.go:317] "Starting service config controller" I0329 01:40:48.720654 1 shared_informer.go:240] Waiting for caches to sync for service config I0329 01:40:48.720676 1 config.go:226] "Starting endpoint slice config controller" I0329 01:40:48.720679 1 shared_informer.go:240] Waiting for caches to sync for endpoint slice config I0329 01:40:48.721079 1 config.go:444] "Starting node config controller" I0329 01:40:48.721085 1 shared_informer.go:240] Waiting for caches to sync for node config I0329 01:40:48.821198 1 shared_informer.go:247] Caches are synced for node config

View only the last 10 lines of a Pod's logs:

[root@k8s-master01 ~]# kubectl logs -f kube-proxy-sbdm2 -n kube-system --tail 10 I0329 01:40:48.720165 1 conntrack.go:52] "Setting nf_conntrack_max" nf_conntrack_max=131072 I0329 01:40:48.720627 1 config.go:317] "Starting service config controller" I0329 01:40:48.720654 1 shared_informer.go:240] Waiting for caches to sync for service config I0329 01:40:48.720676 1 config.go:226] "Starting endpoint slice config controller" I0329 01:40:48.720679 1 shared_informer.go:240] Waiting for caches to sync for endpoint slice config I0329 01:40:48.721079 1 config.go:444] "Starting node config controller" I0329 01:40:48.721085 1 shared_informer.go:240] Waiting for caches to sync for node config I0329 01:40:48.821198 1 shared_informer.go:247] Caches are synced for node config I0329 01:40:48.821225 1 shared_informer.go:247] Caches are synced for endpoint slice config I0329 01:40:48.821256 1 shared_informer.go:247] Caches are synced for service config

Without -f, the command prints the logs and exits instead of following them:

[root@k8s-master01 ~]# kubectl logs kube-proxy-sbdm2 -n kube-system --tail 10 I0329 01:40:48.720165 1 conntrack.go:52] "Setting nf_conntrack_max" nf_conntrack_max=131072 I0329 01:40:48.720627 1 config.go:317] "Starting service config controller" I0329 01:40:48.720654 1 shared_informer.go:240] Waiting for caches to sync for service config I0329 01:40:48.720676 1 config.go:226] "Starting endpoint slice config controller" I0329 01:40:48.720679 1 shared_informer.go:240] Waiting for caches to sync for endpoint slice config I0329 01:40:48.721079 1 config.go:444] "Starting node config controller" I0329 01:40:48.721085 1 shared_informer.go:240] Waiting for caches to sync for node config I0329 01:40:48.821198 1 shared_informer.go:247] Caches are synced for node config I0329 01:40:48.821225 1 shared_informer.go:247] Caches are synced for endpoint slice config I0329 01:40:48.821256 1 shared_informer.go:247] Caches are synced for service config

Troubleshooting with kubectl

Values of the Pod status field Phase

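| Status | Description |
| --- | --- |
| Pending | The Pod has been accepted by Kubernetes, but one or more containers have not been created yet. Use kubectl describe to see why the Pod is Pending. |
| Running | The Pod has been bound to a node and all of its containers have been created; at least one container is running, starting, or restarting. Use kubectl logs to view the Pod's logs. |
| Succeeded | All containers ran successfully and terminated, and will not be restarted. Use kubectl logs to view the Pod's logs. |
| Failed | All containers have terminated and at least one of them terminated in failure, that is, it exited with a non-zero status or was killed by the system. Use logs and describe to view the Pod's logs and status. |
| Unknown | The state of the Pod cannot be obtained, usually because of a communication problem. |
| ImagePullBackOff / ErrImagePull | The image pull failed, usually because the image does not exist, the network is unreachable, or registry login is required. Use describe to see the exact cause. |
| CrashLoopBackOff | The container failed to start. Use logs to see the exact cause, typically a wrong start command or a failing health check. |
| OOMKilled | The container ran out of memory, usually because its memory limit is too low or the program itself leaks memory. Use logs to view the program's startup logs. |
| Terminating | The Pod is being deleted. Use describe to view its status. |
| SysctlForbidden | The Pod sets custom kernel parameters, but the kubelet has not been configured to allow them or the parameters are not supported. Use describe to see the exact cause. |
| Completed | The main process in the container has exited; this status typically appears when a scheduled job finishes. Use logs to view the container's logs. |
| ContainerCreating | The Pod is being created, usually because the image is still downloading or because something is misconfigured. Use describe to see the exact cause. |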

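Note: the Pod Phase field only takes the values Pending, Running, Succeeded, Failed, and Unknown. The other entries are reasons that explain why a Pod is in one of those phases, and they can be seen with kubectl get po xxx -oyaml.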
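The distinction in the note can be sketched as a small check. The helper names is_pod_phase and describe_status are hypothetical; they only encode the five phase values listed above and treat everything else as a reason. On a cluster, the phase itself can be read with kubectl get po xxx -o jsonpath='{.status.phase}'.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only for the five values the Phase field can take.
is_pod_phase() {
  case "$1" in
    Pending|Running|Succeeded|Failed|Unknown) return 0 ;;
    *) return 1 ;;
  esac
}

# Hypothetical helper: label a STATUS value as a phase or as a reason.
describe_status() {
  if is_pod_phase "$1"; then
    echo "$1: phase"
  else
    echo "$1: reason"
  fi
}
```

For example, describe_status CrashLoopBackOff reports a reason, while describe_status Running reports a phase.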

Use describe to view a resource's details and events, here for the nginx Deployment:

[root@k8s-master01 ~]# kubectl describe deploy nginx Name: nginx Namespace: default CreationTimestamp: Wed, 29 Mar 2023 18:09:36 +0800 Labels: app=nginx Annotations: deployment.kubernetes.io/revision: 4 Selector: app=nginx Replicas: 1 desired | 1 updated | 1 total | 1 available | 0 unavailable StrategyType: RollingUpdate MinReadySeconds: 0 RollingUpdateStrategy: 25% max unavailable, 25% max surge Pod Template: Labels: app=nginx Containers: redis: Image: redis Port: <none> Host Port: <none> Environment: <none> Mounts: <none> Volumes: <none> Conditions: Type Status Reason ---- ------ ------ Available True MinimumReplicasAvailable Progressing True NewReplicaSetAvailable OldReplicaSets: <none> NewReplicaSet: nginx-fff7f756b (1/1 replicas created) Events: Type Reason Age From Message ---- ------ ---- ---- ------- Normal ScalingReplicaSet 48m deployment-controller Scaled up replica set nginx-85b98978db to 1 Normal ScalingReplicaSet 46m deployment-controller Scaled up replica set nginx-5c6c7747f8 to 1 Normal ScalingReplicaSet 44m deployment-controller Scaled down replica set nginx-5c6c7747f8 to 0 Normal ScalingReplicaSet 29m deployment-controller Scaled up replica set nginx-fff7f756b to 1 Normal ScalingReplicaSet 28m deployment-controller Scaled down replica set nginx-85b98978db to 0

If a Pod contains more than one container, specify the container name with -c to view its logs:

kubectl logs -f nginx-fff7f756b-fmnvn -c nginx

Run a single command inside a container:

kubectl exec nginx-fff7f756b-fmnvn -- ls

Open an interactive shell inside the container and run commands there:

[root@k8s-master01 ~]# kubectl exec -ti nginx-fff7f756b-fmnvn -- bash

root@nginx-fff7f756b-fmnvn:/data# cd /tmp/

Logs can be redirected to a file:

[root@k8s-master01 ~]# kubectl logs nginx-fff7f756b-fmnvn > /tmp/1

[root@k8s-master01 ~]# cat /tmp/1 1:C 29 Mar 2023 10:29:05.531 # oO0OoO0OoO0Oo Redis is starting oO0OoO0OoO0Oo 1:C 29 Mar 2023 10:29:05.531 # Redis version=7.0.10, bits=64, commit=00000000, modified=0, pid=1, just started 1:C 29 Mar 2023 10:29:05.531 # Warning: no config file specified, using the default config. In order to specify a config file use redis-server /path/to/redis.conf 1:M 29 Mar 2023 10:29:05.532 * monotonic clock: POSIX clock_gettime 1:M 29 Mar 2023 10:29:05.532 * Running mode=standalone, port=6379. 1:M 29 Mar 2023 10:29:05.532 # WARNING: The TCP backlog setting of 511 cannot be enforced because /proc/sys/net/core/somaxconn is set to the lower value of 128. 1:M 29 Mar 2023 10:29:05.532 # Server initialized 1:M 29 Mar 2023 10:29:05.533 * Ready to accept connections

View node resource usage:

[root@k8s-master01 ~]# kubectl top node NAME CPU(cores) CPU% MEMORY(bytes) MEMORY% k8s-master01 212m 10% 1312Mi 70% k8s-master02 143m 7% 1127Mi 60% k8s-master03 116m 5% 1097Mi 58% k8s-node01 59m 2% 904Mi 48% k8s-node02 55m 2% 918Mi 49%

View Pod resource usage:

[root@k8s-master01 ~]# kubectl top po

NAME CPU(cores) MEMORY(bytes)

nginx-fff7f756b-fmnvn 1m 3Mi

View Pod resource usage across all namespaces:

[root@k8s-master01 ~]# kubectl top po -A NAMESPACE NAME CPU(cores) MEMORY(bytes) default nginx-fff7f756b-fmnvn 2m 3Mi kube-system calico-kube-controllers-6f6595874c-j9hdl 2m 29Mi kube-system calico-node-2d5p7 25m 94Mi kube-system calico-node-7n5mw 25m 94Mi kube-system calico-node-9pdmh 24m 103Mi kube-system calico-node-b8sh2 20m 109Mi kube-system calico-node-chdsf 21m 115Mi kube-system calico-typha-6b6cf8cbdf-llhvd 2m 25Mi kube-system coredns-65c54cc984-8t757 1m 20Mi kube-system coredns-65c54cc984-mf4nd 1m 22Mi kube-system etcd-k8s-master01 31m 82Mi kube-system etcd-k8s-master02 25m 78Mi kube-system etcd-k8s-master03 25m 83Mi kube-system kube-apiserver-k8s-master01 36m 260Mi kube-system kube-apiserver-k8s-master02 35m 255Mi kube-system kube-apiserver-k8s-master03 31m 197Mi kube-system kube-controller-manager-k8s-master01 11m 83Mi kube-system kube-controller-manager-k8s-master02 2m 46Mi kube-system kube-controller-manager-k8s-master03 1m 40Mi kube-system kube-proxy-5vp9t 8m 17Mi kube-system kube-proxy-65s6x 6m 21Mi kube-system kube-proxy-lzj8c 5m 20Mi kube-system kube-proxy-r56m7 6m 28Mi kube-system kube-proxy-sbdm2 4m 21Mi kube-system kube-scheduler-k8s-master01 3m 33Mi kube-system kube-scheduler-k8s-master02 3m 42Mi kube-system kube-scheduler-k8s-master03 2m 34Mi kube-system metrics-server-5cf8885b66-jpd6z 3m 17Mi kubernetes-dashboard dashboard-metrics-scraper-7fcdff5f4c-45zfn 1m 9Mi kubernetes-dashboard kubernetes-dashboard-85f59f8ff7-gws8d 1m 16Mi

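Find the Pod with the highest resource usage in a given namespace (here, the one using the most memory) and write its name to a file: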

[root@k8s-master01 ~]# kubectl top po -n kube-system NAME CPU(cores) MEMORY(bytes) calico-kube-controllers-6f6595874c-j9hdl 1m 28Mi calico-node-2d5p7 20m 94Mi calico-node-7n5mw 18m 95Mi calico-node-9pdmh 27m 104Mi calico-node-b8sh2 27m 109Mi calico-node-chdsf 25m 114Mi calico-typha-6b6cf8cbdf-llhvd 2m 25Mi coredns-65c54cc984-8t757 1m 19Mi coredns-65c54cc984-mf4nd 1m 21Mi etcd-k8s-master01 35m 82Mi etcd-k8s-master02 26m 78Mi etcd-k8s-master03 26m 83Mi kube-apiserver-k8s-master01 32m 266Mi kube-apiserver-k8s-master02 34m 269Mi kube-apiserver-k8s-master03 34m 211Mi kube-controller-manager-k8s-master01 10m 81Mi kube-controller-manager-k8s-master02 2m 46Mi kube-controller-manager-k8s-master03 1m 40Mi kube-proxy-5vp9t 4m 17Mi kube-proxy-65s6x 10m 20Mi kube-proxy-lzj8c 4m 20Mi kube-proxy-r56m7 7m 28Mi kube-proxy-sbdm2 5m 21Mi kube-scheduler-k8s-master01 2m 30Mi kube-scheduler-k8s-master02 3m 42Mi kube-scheduler-k8s-master03 3m 34Mi metrics-server-5cf8885b66-jpd6z 3m 17Mi

[root@k8s-master01 ~]# echo "kube-apiserver-k8s-master02" > /tmp/xxx

[root@k8s-master01 ~]# cat /tmp/xxx

kube-apiserver-k8s-master02
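Picking the Pod by eye and typing its name into echo can be automated. The sketch below is one possible way to do it, not the only one: the helper name top_memory_pod is hypothetical, and it assumes every value in the MEMORY(bytes) column uses the Mi unit, as in the output above. kubectl top po also accepts --sort-by=memory (or cpu) if only the ordering is needed.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl top po` output on stdin and print the
# name of the Pod with the largest MEMORY(bytes) value.
# Assumption: all memory values end in "Mi".
top_memory_pod() {
  awk 'NR > 1 { mem = $3; sub(/Mi$/, "", mem); print mem, $1 }' \
    | sort -k1,1nr \
    | head -n 1 \
    | awk '{ print $2 }'
}

# Intended use on a cluster (not executed here):
#   kubectl top po -n kube-system | top_memory_pod > /tmp/xxx
```

The header row is skipped with NR > 1, and the numeric sort runs on the memory value with its unit stripped.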

1.7 Renewing kubeadm cluster certificates

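In production, upgrading the Kubernetes version about once a year is recommended, since a kubeadm control-plane upgrade also renews the certificates.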

1.7.1 Renewing certificates for one year

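In production, monitor certificate expiry so that you know in advance when the certificates will expire.

Check when the certificates expire: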

[root@k8s-master01 ~]# kubeadm certs check-expiration [check-expiration] Reading configuration from the cluster... [check-expiration] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml' CERTIFICATE EXPIRES RESIDUAL TIME CERTIFICATE AUTHORITY EXTERNALLY MANAGED admin.conf Mar 28, 2024 01:25 UTC 364d ca no apiserver Mar 28, 2024 01:25 UTC 364d ca no apiserver-etcd-client Mar 28, 2024 01:25 UTC 364d etcd-ca no apiserver-kubelet-client Mar 28, 2024 01:25 UTC 364d ca no controller-manager.conf Mar 28, 2024 01:25 UTC 364d ca no etcd-healthcheck-client Mar 28, 2024 01:25 UTC 364d etcd-ca no etcd-peer Mar 28, 2024 01:25 UTC 364d etcd-ca no etcd-server Mar 28, 2024 01:25 UTC 364d etcd-ca no front-proxy-client Mar 28, 2024 01:25 UTC 364d front-proxy-ca no scheduler.conf Mar 28, 2024 01:25 UTC 364d ca no CERTIFICATE AUTHORITY EXPIRES RESIDUAL TIME EXTERNALLY MANAGED ca Mar 26, 2033 01:25 UTC 9y no etcd-ca Mar 26, 2033 01:25 UTC 9y no front-proxy-ca Mar 26, 2033 01:25 UTC 9y no
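For the monitoring suggested above, the remaining lifetime can be computed from an expiry timestamp and compared with a threshold. The sketch below is only an illustration: the helper names days_between and check_expiry and the threshold are assumptions, and it relies on the -d option of GNU date. On a node, the expiry date of a certificate could be obtained with openssl x509 -enddate -noout -in /etc/kubernetes/pki/apiserver.crt.

```shell
#!/usr/bin/env bash
# Hypothetical helper: whole days from one UTC timestamp ($1) to another ($2).
# Assumption: GNU date, which accepts -d "YYYY-MM-DD hh:mm:ss".
days_between() {
  local from_s to_s
  from_s="$(date -u -d "$1" +%s)"
  to_s="$(date -u -d "$2" +%s)"
  echo $(( (to_s - from_s) / 86400 ))
}

# Hypothetical check: warn when fewer than $3 days remain between now ($1)
# and the expiry time ($2).
check_expiry() {
  local left
  left="$(days_between "$1" "$2")"
  if [ "$left" -lt "$3" ]; then
    echo "WARN: ${left}d left"
  else
    echo "OK: ${left}d left"
  fi
}
```

A cron job could call check_expiry with the current time, the expiry time, and a threshold such as 30 days, then alert on any WARN line.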

Back up the existing certificates first so they can be restored if the renewal fails:

[root@k8s-master01 ~]# cp -rp /etc/kubernetes/pki/ /opt/pki.bak

Renew the certificates:

[root@k8s-master01 ~]# kubeadm certs renew all [renew] Reading configuration from the cluster... [renew] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml' certificate embedded in the kubeconfig file for the admin to use and for kubeadm itself renewed certificate for serving the Kubernetes API renewed certificate the apiserver uses to access etcd renewed certificate for the API server to connect to kubelet renewed certificate embedded in the kubeconfig file for the controller manager to use renewed certificate for liveness probes to healthcheck etcd renewed certificate for etcd nodes to communicate with each other renewed certificate for serving etcd renewed certificate for the front proxy client renewed certificate embedded in the kubeconfig file for the scheduler manager to use renewed Done renewing certificates. You must restart the kube-apiserver, kube-controller-manager, kube-scheduler and etcd, so that they can use the new certificates.

Check that the nodes are healthy:

[root@k8s-master01 ~]# kubectl get node NAME STATUS ROLES AGE VERSION k8s-master01 Ready control-plane,master 9h v1.23.17 k8s-master02 Ready control-plane,master 9h v1.23.17 k8s-master03 Ready control-plane,master 9h v1.23.17 k8s-node01 Ready <none> 9h v1.23.17 k8s-node02 Ready <none> 9h v1.23.17

Check that the Pods are healthy:

[root@k8s-master01 ~]# kubectl get po NAME READY STATUS RESTARTS AGE nginx-fff7f756b-fmnvn 1/1 Running 0 54m

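Renewal rewrites the certificate files in place, as the new modification times show (the CA files and the sa key pair are left unchanged):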

[root@k8s-master01 ~]# ls /etc/kubernetes/pki/ -l total 56 -rw-r--r--. 1 root root 1302 Mar 29 19:20 apiserver.crt -rw-r--r--. 1 root root 1155 Mar 29 19:20 apiserver-etcd-client.crt -rw-------. 1 root root 1679 Mar 29 19:20 apiserver-etcd-client.key -rw-------. 1 root root 1679 Mar 29 19:20 apiserver.key -rw-r--r--. 1 root root 1164 Mar 29 19:20 apiserver-kubelet-client.crt -rw-------. 1 root root 1679 Mar 29 19:20 apiserver-kubelet-client.key -rw-r--r--. 1 root root 1099 Mar 29 09:25 ca.crt -rw-------. 1 root root 1679 Mar 29 09:25 ca.key drwxr-xr-x. 2 root root 162 Mar 29 09:25 etcd -rw-r--r--. 1 root root 1115 Mar 29 09:25 front-proxy-ca.crt -rw-------. 1 root root 1675 Mar 29 09:25 front-proxy-ca.key -rw-r--r--. 1 root root 1119 Mar 29 19:20 front-proxy-client.crt -rw-------. 1 root root 1675 Mar 29 19:20 front-proxy-client.key -rw-------. 1 root root 1679 Mar 29 09:25 sa.key -rw-------. 1 root root 451 Mar 29 09:25 sa.pub

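The cluster is not using the renewed certificates yet. The renew output above says that kube-apiserver, kube-controller-manager, kube-scheduler and etcd must be restarted to load them; here the kubelet is restarted: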

[root@k8s-master01 ~]# systemctl restart kubelet
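Restarting the kubelet does not by itself guarantee that the control-plane containers are recreated. In a kubeadm cluster these components run as static Pods, and a commonly used way to restart a static Pod is to move its manifest out of /etc/kubernetes/manifests for a short time and then move it back. The sketch below illustrates that approach under stated assumptions: the function name and the wait time are hypothetical, and it should be tried on one control-plane node at a time.

```shell
#!/usr/bin/env bash
# Hypothetical helper: temporarily move every *.yaml manifest out of a
# directory, wait, then move them back so the kubelet recreates the Pods.
#   $1 = manifest directory (kubeadm default: /etc/kubernetes/manifests)
#   $2 = seconds to wait (assumed value; it must be longer than the
#        kubelet's file check interval)
bounce_static_pods() {
  local dir="$1" wait_s="$2" hold
  hold="$(mktemp -d)" || return 1
  mv "$dir"/*.yaml "$hold"/ || return 1
  sleep "$wait_s"
  mv "$hold"/*.yaml "$dir"/ || return 1
  rmdir "$hold"
}

# Intended use on a control-plane node (not executed here):
#   bounce_static_pods /etc/kubernetes/manifests 30
```

Moving all manifests at once stops etcd and the API server on that node together, which is why the other control-plane nodes should stay up while this runs.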

1.7.2 Extending certificate validity to 99 years

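Extending the validity to 99 years requires rebuilding kubeadm from source, so the steps are tied to the kubeadm version in use.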

Check the kubeadm version:

[root@k8s-master01 ~]# kubeadm version kubeadm version: &version.Info{Major:"1", Minor:"23", GitVersion:"v1.23.17", GitCommit:"953be8927218ec8067e1af2641e540238ffd7576", GitTreeState:"clean", BuildDate:"2023-02-22T13:33:14Z", GoVersion:"go1.19.6", Compiler:"gc", Platform:"linux/amd64"}

Clone the Kubernetes source repository (here from a Gitee mirror):

[root@k8s-master01 ~]# git clone https://gitee.com/mirrors/kubernetes.git Cloning into 'kubernetes'... remote: Enumerating objects: 1427037, done. remote: Counting objects: 100% (14341/14341), done. remote: Compressing objects: 100% (8326/8326), done. remote: Total 1427037 (delta 9201), reused 8548 (delta 5365), pack-reused 1412696 Receiving objects: 100% (1427037/1427037), 911.55 MiB | 16.42 MiB/s, done. Resolving deltas: 100% (1039605/1039605), done. Checking out files: 100% (23746/23746), done.

Change into the cloned kubernetes directory and use git branch -a to list the branches:

[root@k8s-master01 kubernetes]# git branch -a * master remotes/origin/HEAD -> origin/master remotes/origin/feature-rate-limiting remotes/origin/feature-serverside-apply remotes/origin/feature-workload-ga remotes/origin/fix-aggregator-upgrade-transport remotes/origin/master remotes/origin/release-0.10 remotes/origin/release-0.12 remotes/origin/release-0.13 remotes/origin/release-0.14 remotes/origin/release-0.15 remotes/origin/release-0.16 remotes/origin/release-0.17 remotes/origin/release-0.18 remotes/origin/release-0.19 remotes/origin/release-0.20 remotes/origin/release-0.21 remotes/origin/release-0.4 remotes/origin/release-0.5 remotes/origin/release-0.6 remotes/origin/release-0.7 remotes/origin/release-0.8 remotes/origin/release-0.9 remotes/origin/release-1.0 remotes/origin/release-1.1 remotes/origin/release-1.10 remotes/origin/release-1.11 remotes/origin/release-1.12 remotes/origin/release-1.13 remotes/origin/release-1.14 remotes/origin/release-1.15 remotes/origin/release-1.16 remotes/origin/release-1.17 remotes/origin/release-1.18 remotes/origin/release-1.19 remotes/origin/release-1.2 remotes/origin/release-1.20 remotes/origin/release-1.21 remotes/origin/release-1.22 remotes/origin/release-1.23 remotes/origin/release-1.24 remotes/origin/release-1.25 remotes/origin/release-1.26 remotes/origin/release-1.27 remotes/origin/release-1.3 remotes/origin/release-1.4 remotes/origin/release-1.5 remotes/origin/release-1.6 remotes/origin/release-1.6.3 remotes/origin/release-1.7 remotes/origin/release-1.8 remotes/origin/release-1.9 remotes/origin/revert-85861-scheduler-perf-collect-data-items-from-metrics remotes/origin/revert-89421-disabled_for_large_cluster remotes/origin/revert-90400-1737 remotes/origin/revert-91380-revert-watch-capacity remotes/origin/revert-99386-conformance-on-release remotes/origin/revert-99393-reduceKubeletResourceWatch

Use git tag to list the released versions:

[root@k8s-master01 kubernetes]# git tag v0.10.0 v0.10.1 v0.11.0 v0.12.0 v0.12.1 v0.12.2 v0.13.0 v0.13.1 v0.13.1-dev v0.13.2 v0.14.0 v0.14.1 v0.14.2 v0.15.0 v0.16.0 v0.16.1 v0.16.2 v0.17.0 v0.17.1 v0.18.0 v0.18.1 v0.18.2 v0.19.0 v0.19.1 v0.19.2 v0.19.3 v0.2 v0.20.0 v0.20.1

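Check out the tag that matches your Kubernetes version:

If the checkout reports an error, delete the files in the directory named in the error message and try again.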

[root@k8s-master01 kubernetes]# git checkout v1.23.17 Checking out files: 100% (17024/17024), done. Note: checking out 'v1.23.17'. You are in 'detached HEAD' state. You can look around, make experimental changes and commit them, and you can discard any commits you make in this state without impacting any branches by performing another checkout. If you want to create a new branch to retain commits you create, you may do so (now or later) by using -b with the checkout command again. Example: git checkout -b new_branch_name HEAD is now at 953be89... Release commit for Kubernetes v1.23.17
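The tag passed to git checkout has to match the GitVersion reported by the installed kubeadm. Instead of copying it by hand, it can be extracted from the version output. The helper name kubeadm_git_version below is hypothetical and the sketch assumes the output format shown earlier; kubeadm version -o short prints the same value directly.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubeadm version` output on stdin and print
# the value of the GitVersion field, for example v1.23.17.
kubeadm_git_version() {
  sed -n 's/.*GitVersion:"\([^"]*\)".*/\1/p'
}

# Intended use inside the cloned repository (not executed here):
#   git checkout "$(kubeadm version | kubeadm_git_version)"
```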

Make sure Docker is running!

Start a container that provides a Golang environment, mounting the current directory at /go/src/:

[root@k8s-master01 kubernetes]# docker run -ti --rm -v `pwd`:/go/src/ registry.cn-beijing.aliyuncs.com/dotbalo/golang:kubeadm bash
Unable to find image 'registry.cn-beijing.aliyuncs.com/dotbalo/golang:kubeadm' locally
kubeadm: Pulling from dotbalo/golang
f606d8928ed3: Pull complete
47db815c6a45: Pull complete
bf4849400000: Pull complete
a572f7a256d3: Pull complete
643043c84a42: Pull complete
4bbfdffcd51b: Pull complete
7bacd2cea1ca: Pull complete
4ca1c8393efa: Pull complete
Digest: sha256:af620e3fb7f2a8ee5e070c2f5608cc6e1600ec98c94d7dd25778a67f1a0b792a
Status: Downloaded newer image for registry.cn-beijing.aliyuncs.com/dotbalo/golang:kubeadm

The following steps are performed inside the container!!!

Change to the source directory (the Kubernetes tree mounted from the host):

root@85165a2f7d91:/go# cd /go/src/

root@85165a2f7d91:/go/src#

Configure the Go module proxy and turn off the checksum database:

root@85165a2f7d91:/go/src# go env -w GOPROXY=https://goproxy.cn,direct
root@85165a2f7d91:/go/src# go env -w GOSUMDB=off

Find the certificate validity period in the kubeadm constants file:

root@85165a2f7d91:/go/src# grep "365" cmd/kubeadm/app/constants/constants.go
CertificateValidity = time.Hour * 24 * 365

Change the certificate validity period from 1 year to 100 years:

root@85165a2f7d91:/go/src# sed -i 's#365#365 * 100#g' cmd/kubeadm/app/constants/constants.go

Confirm that the change took effect:

root@85165a2f7d91:/go/src# grep "365" cmd/kubeadm/app/constants/constants.go
CertificateValidity = time.Hour * 24 * 365 * 100
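
The sed command above rewrites every 365 in the file, which works here because grep found a single match. The sketch below shows a more defensive variant that touches only the CertificateValidity line and is safe to run twice. It works on a temporary sample file rather than the real constants.go, and SomeOtherValue is a hypothetical line added purely for illustration. It also checks that 100 years still fits in Go's time.Duration, which is an int64 count of nanoseconds.

```shell
# Throwaway sample file; SomeOtherValue is a hypothetical line added only
# to show that an unrelated 365 is left alone.
tmp=$(mktemp)
cat > "$tmp" <<'EOF'
CertificateValidity = time.Hour * 24 * 365
SomeOtherValue = 365
EOF

# Match only the CertificateValidity assignment, anchored at end of line,
# so a second run finds nothing left to change.
pattern='s#\(CertificateValidity = time\.Hour \* 24 \* 365\)$#\1 * 100#'
sed -i "$pattern" "$tmp"
sed -i "$pattern" "$tmp"   # second run is a no-op

result=$(cat "$tmp")
rm -f "$tmp"
printf '%s\n' "$result"

# time.Duration in Go is an int64 count of nanoseconds; confirm that
# 100 years of 365 days stays below the int64 maximum (9223372036854775807).
ns=$(( 24 * 365 * 100 * 3600 * 1000000000 ))
echo "100 years = $ns ns"
```

The nanosecond total is roughly a third of the int64 range, so the 100-year value does not overflow. A much larger multiplier (beyond about 292 years) would.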

Create the output directory; the build writes the compiled binary into it:

root@85165a2f7d91:/go/src# mkdir -p _output/

Open up its permissions:

root@85165a2f7d91:/go/src# chmod 777 -R _output/

Build kubeadm. This can take a while, so be patient:

root@85165a2f7d91:/go/src# make WHAT=cmd/kubeadm

Check that the build produced the binary:

root@5592256d5bb3:/go/src# ls _output/bin/kubeadm

_output/bin/kubeadm

Copy the binary to ./kubeadm in the source root:

root@5592256d5bb3:/go/src# cp _output/bin/kubeadm ./kubeadm

Once you reach this point, exit the container!!!

exit

Back on the host, the kubernetes directory now contains the kubeadm binary. Run it to check the version:

[root@k8s-master01 kubernetes]# ls
api        CHANGELOG.md  code-of-conduct.md  go.mod  kubeadm     LICENSES  Makefile.generated_files  OWNERS_ALIASES  README.md          SUPPORT.md   vendor
build      cluster       CONTRIBUTING.md     go.sum  kubernetes  logo      _output                   pkg             SECURITY_CONTACTS  test
CHANGELOG  cmd           docs                hack    LICENSE     Makefile  OWNERS                    plugin          staging            third_party
[root@k8s-master01 kubernetes]# ./kubeadm version
kubeadm version: &version.Info{Major:"1", Minor:"23+", GitVersion:"v1.23.17-dirty", GitCommit:"953be8927218ec8067e1af2641e540238ffd7576", GitTreeState:"dirty", BuildDate:"2023-03-30T11:19:36Z", GoVersion:"go1.19.2", Compiler:"gc", Platform:"linux/amd64"}
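
The version string above reports GitVersion v1.23.17-dirty and GitTreeState dirty, presumably because constants.go was edited without committing the change. A small sketch for pulling those two fields out of the output is shown here; the sample line is copied from the output above, and on a real host it would come from ./kubeadm version instead.

```shell
# Sample line copied from the `./kubeadm version` output above.
out='kubeadm version: &version.Info{Major:"1", Minor:"23+", GitVersion:"v1.23.17-dirty", GitCommit:"953be8927218ec8067e1af2641e540238ffd7576", GitTreeState:"dirty", BuildDate:"2023-03-30T11:19:36Z", GoVersion:"go1.19.2", Compiler:"gc", Platform:"linux/amd64"}'

# Extract the quoted value that follows each field name.
git_version=$(printf '%s\n' "$out" | sed -n 's/.*GitVersion:"\([^"]*\)".*/\1/p')
tree_state=$(printf '%s\n' "$out" | sed -n 's/.*GitTreeState:"\([^"]*\)".*/\1/p')

echo "GitVersion:   $git_version"
echo "GitTreeState: $tree_state"

# A dirty tree state is what distinguishes the rebuilt binary from a stock release.
if [ "$tree_state" = "dirty" ]; then
    echo "this is a locally modified build"
fi
```

Checking for the dirty marker is a quick way to confirm that the binary being run is the rebuilt one and not the packaged kubeadm found on PATH.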

Copy the rebuilt kubeadm binary to /opt/:

[root@k8s-master01 kubernetes]# cp kubeadm /opt/

Now use this kubeadm to renew the certificates:

[root@k8s-master01 kubernetes]# /opt/kubeadm certs renew all
[renew] Reading configuration from the cluster...
[renew] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'
[renew] Error reading configuration from the Cluster. Falling back to default configuration

certificate embedded in the kubeconfig file for the admin to use and for kubeadm itself renewed
certificate for serving the Kubernetes API renewed
certificate the apiserver uses to access etcd renewed
certificate for the API server to connect to kubelet renewed
certificate embedded in the kubeconfig file for the controller manager to use renewed
certificate for liveness probes to healthcheck etcd renewed
certificate for etcd nodes to communicate with each other renewed
certificate for serving etcd renewed
certificate for the front proxy client renewed
certificate embedded in the kubeconfig file for the scheduler manager to use renewed

Done renewing certificates. You must restart the kube-apiserver, kube-controller-manager, kube-scheduler and etcd, so that they can use the new certificates.

Check the version of the kubeadm binary in /opt/:

[root@k8s-master01 kubernetes]# /opt/kubeadm version
kubeadm version: &version.Info{Major:"1", Minor:"23+", GitVersion:"v1.23.17-dirty", GitCommit:"953be8927218ec8067e1af2641e540238ffd7576", GitTreeState:"dirty", BuildDate:"2023-03-30T11:19:36Z", GoVersion:"go1.19.2", Compiler:"gc", Platform:"linux/amd64"}

To renew the certificates on the other master nodes, copy this kubeadm binary to them:

[root@k8s-master01 kubernetes]# scp /opt/kubeadm k8s-master02:/opt/
root@k8s-master02's password:
kubeadm                                       100%   42MB 135.1MB/s   00:00

[root@k8s-master01 kubernetes]# scp /opt/kubeadm k8s-master03:/opt/
root@k8s-master03's password:
kubeadm                                       100%   42MB 118.0MB/s   00:00

Run the same renewal command on the other two master nodes as well:

[root@k8s-master01 kubernetes]# /opt/kubeadm certs renew all
[renew] Reading configuration from the cluster...
[renew] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'
[renew] Error reading configuration from the Cluster. Falling back to default configuration

certificate embedded in the kubeconfig file for the admin to use and for kubeadm itself renewed
certificate for serving the Kubernetes API renewed
certificate the apiserver uses to access etcd renewed
certificate for the API server to connect to kubelet renewed
certificate embedded in the kubeconfig file for the controller manager to use renewed
certificate for liveness probes to healthcheck etcd renewed
certificate for etcd nodes to communicate with each other renewed
certificate for serving etcd renewed
certificate for the front proxy client renewed
certificate embedded in the kubeconfig file for the scheduler manager to use renewed

Done renewing certificates. You must restart the kube-apiserver, kube-controller-manager, kube-scheduler and etcd, so that they can use the new certificates.

On every master node, check that the certificates were renewed:

[root@k8s-master01 kubernetes]# kubeadm certs check-expiration
[check-expiration] Reading configuration from the cluster...
[check-expiration] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'
[check-expiration] Error reading configuration from the Cluster. Falling back to default configuration

CERTIFICATE                EXPIRES                  RESIDUAL TIME   CERTIFICATE AUTHORITY   EXTERNALLY MANAGED
admin.conf                 Mar 06, 2123 13:46 UTC   99y             ca                      no
apiserver                  Mar 06, 2123 13:46 UTC   99y             ca                      no
apiserver-etcd-client      Mar 06, 2123 13:46 UTC   99y             etcd-ca                 no
apiserver-kubelet-client   Mar 06, 2123 13:46 UTC   99y             ca                      no
controller-manager.conf    Mar 06, 2123 13:46 UTC   99y             ca                      no
etcd-healthcheck-client    Mar 06, 2123 13:46 UTC   99y             etcd-ca                 no
etcd-peer                  Mar 06, 2123 13:46 UTC   99y             etcd-ca                 no
etcd-server                Mar 06, 2123 13:46 UTC   99y             etcd-ca                 no
front-proxy-client         Mar 06, 2123 13:46 UTC   99y             front-proxy-ca          no
scheduler.conf             Mar 06, 2123 13:46 UTC   99y             ca                      no

CERTIFICATE AUTHORITY   EXPIRES                  RESIDUAL TIME   EXTERNALLY MANAGED
ca                      Mar 20, 2033 03:14 UTC   9y              no
etcd-ca                 Mar 20, 2033 03:14 UTC   9y              no
front-proxy-ca          Mar 20, 2033 03:14 UTC   9y              no
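
The expiry of Mar 06, 2123 can be reproduced by hand. The validity is 365 * 100 = 36500 days, which is 24 days short of 100 calendar years because of the leap days in between. The sketch below assumes GNU date and assumes the renewal ran at 2023-03-30 13:46 UTC, a time inferred from the EXPIRES column and the build date, not stated anywhere in the output.

```shell
# Assumption: renewal time inferred from the 13:46 UTC expiry shown above.
renewed_at="2023-03-30T13:46:00Z"

# Validity as patched into constants.go: 24h * 365 * 100, expressed in days.
validity_days=$(( 365 * 100 ))

# Convert to epoch seconds, add the validity, and format like kubeadm does.
start=$(date -u -d "$renewed_at" +%s)
expiry=$(LC_ALL=C date -u -d "@$(( start + validity_days * 86400 ))" '+%b %d, %Y %H:%M UTC')

echo "expected expiry: $expiry"
```

This also suggests why the RESIDUAL TIME column shows 99y and not 100y: by the time check-expiration runs, slightly less than 36500 days remain. Note that the CA certificates are not touched by the renewal and still expire in 2033.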

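Restart kubelet on every master node (the kubeadm output above also asks for kube-apiserver, kube-controller-manager, kube-scheduler and etcd to be restarted so that they pick up the new certificates):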

[root@k8s-master01 kubernetes]# systemctl restart kubelet