2023 Data Collection and Fusion Technology: Practice 3

Assignment 1:

- Requirements:

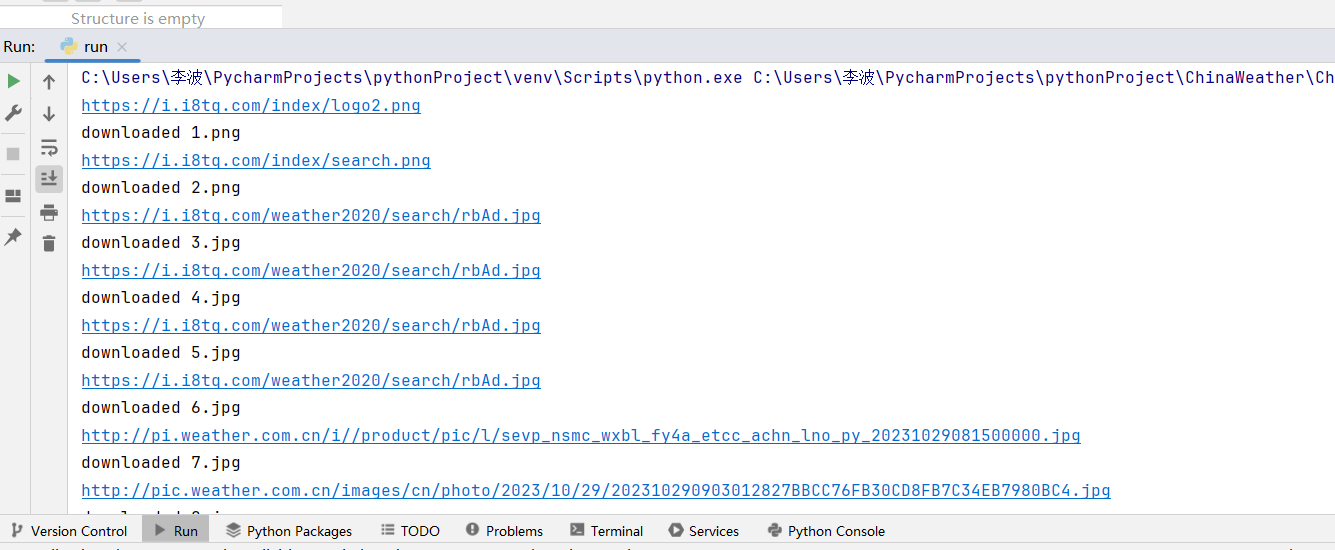

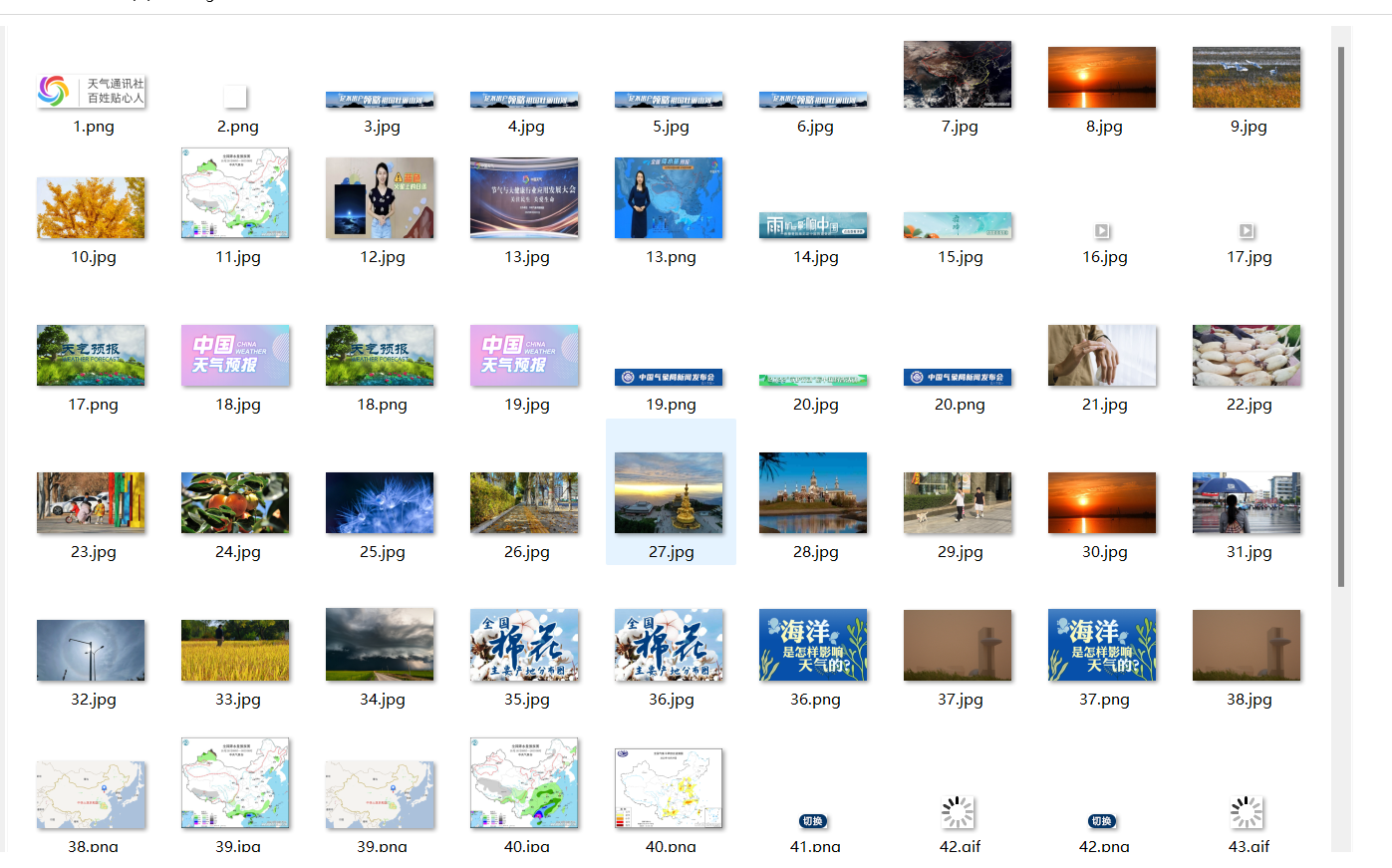

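Pick a website and crawl all of its images, for example China Weather (http://www.weather.com.cn). Implement the crawl with the Scrapy framework in both single-threaded and multi-threaded modes.

- Output:

Print the URL of each downloaded image to the console, store the downloaded images in the images subfolder, and provide screenshots.

Gitee folder link

1. Code, run results, and Gitee link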

**MySpider**

```python
import scrapy

from ..items import ChinaWeatherItem


class MySpider(scrapy.Spider):
    name = "mySpider"
    start_urls = ["http://www.weather.com.cn/"]

    def parse(self, response):
        try:
            data = response.body.decode()
            selector = scrapy.Selector(text=data)
            # Select the src address of every image element
            srcs = selector.xpath('//img/@src').extract()
            for src in srcs:
                # Resolve relative and protocol-relative addresses against the page URL
                src = response.urljoin(src)
                print(src)
                # Hand the extracted src over to the item pipeline
                item = ChinaWeatherItem()
                item['src'] = src
                yield item
        except Exception as err:
            print(err)
```

**items**

```python
import scrapy


class ChinaWeatherItem(scrapy.Item):
    # Address of one image found on the page
    src = scrapy.Field()
```

**pipelines**

```python
import urllib.request


class ChinaWeatherPipeline:
    count = 0
    headers = {
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/85.0.4183.121 Safari/537.36"}

    def process_item(self, item, spider):
        try:
            self.count += 1
            src = item['src']
            # Keep a three-letter extension such as ".jpg" or ".png" if the address ends with one
            if src[len(src) - 4] == ".":
                ext = src[len(src) - 4:]
            else:
                ext = ""
            req = urllib.request.Request(src, headers=self.headers)
            data = urllib.request.urlopen(req, timeout=100)
            data = data.read()
            # Write the downloaded image to the local folder
            with open("E:\\images\\" + str(self.count) + ext, "wb") as fobj:
                fobj.write(data)
            print("downloaded " + str(self.count) + ext)
        except Exception as err:
            print(err)
        return item
```
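The pipeline takes the extension from the last four characters of the address, which misses longer extensions such as `.jpeg` and addresses that carry a query string. The following is a minimal sketch of an alternative, not the code used in this assignment; the helper name `image_extension` and the example.com addresses are made up for illustration.

```python
import os
from urllib.parse import urlparse


def image_extension(src: str) -> str:
    """Return the file extension of an image URL, or "" if it has none."""
    # Look only at the path so that "?v=2" or "#top" is not treated as part of the name
    path = urlparse(src).path
    return os.path.splitext(path)[1]


if __name__ == "__main__":
    # Hypothetical addresses used only to show the behaviour
    for url in ("http://example.com/a/logo.png",
                "http://example.com/a/photo.jpeg?v=2",
                "http://example.com/a/banner"):
        print(url, "->", repr(image_extension(url)))
```

Inside `process_item`, the returned string could take the place of `ext` when building the output file name.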

**settings**

```python
ROBOTSTXT_OBEY = False

ITEM_PIPELINES = {
    'ChinaWeather.pipelines.ChinaWeatherPipeline': 300,
}

REQUEST_FINGERPRINTER_IMPLEMENTATION = "2.7"
TWISTED_REACTOR = "twisted.internet.asyncioreactor.AsyncioSelectorReactor"
FEED_EXPORT_ENCODING = "utf-8"
```
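The assignment asks for both a single-threaded and a multi-threaded crawl, but the settings above do not show how to switch between them. Scrapy schedules its own requests through an asynchronous event loop rather than user-managed threads, so one way to approximate the two modes is the `CONCURRENT_REQUESTS` setting. The fragment below is a sketch under that assumption, and the value 32 is an arbitrary choice. Note that the pipeline above downloads each image with a blocking `urllib` call, so this setting affects only the requests Scrapy itself issues.

```python
# settings.py (sketch): keep exactly one of the two assignments active

# "Single-threaded" style: at most one request in flight at a time
CONCURRENT_REQUESTS = 1

# "Multi-threaded" style: allow many requests in flight (Scrapy's default is 16)
# CONCURRENT_REQUESTS = 32
```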

**run**

```python
from scrapy import cmdline

cmdline.execute("scrapy crawl mySpider -s LOG_ENABLED=false".split())
```

Run results:

Gitee link:

https://gitee.com/li-bo-102102157/libo_project/commit/b118d34c9d95b59e9fa87a40a919d96df3cd0321

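2. Reflections

Reproducing this example with the Scrapy framework showed me what each module does and how the modules relate to one another.

Assignment 2: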

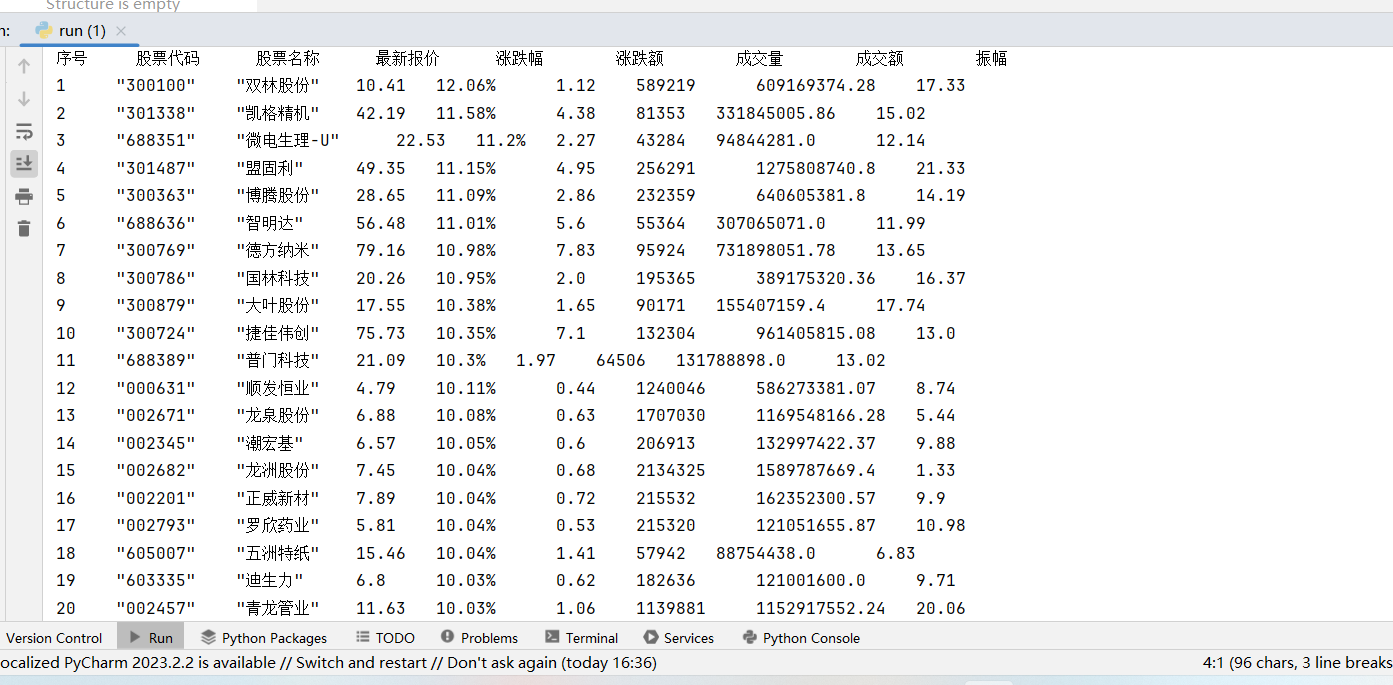

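- Requirements: Become proficient at serializing and outputting data with Scrapy's Item and Pipeline; crawl stock information using the Scrapy framework + XPath + MySQL storage approach.

- Candidate website: East Money (东方财富网): https://www.eastmoney.com/

- Output: store the data in a MySQL database and output it in the following format:

Column headers should use English names, for example id for the serial number and bStockNo for the stock code ……; students define and design them on their own.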

| No. | Stock code | Stock name | Latest price / Change (%) | Change | Volume | Amplitude | High | Low | Open | Prev. close |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 688093 | N世华 | 28.47 | 10.92 | 261.3K | 760M | 22.34 | 32.0 | 28.08 | 30.20 |
| 2 | ..... |

1. Code, run results, and Gitee link

**stocks**

```python
import re

import scrapy

from Dfstocks.items import DfstocksItem


class StocksSpider(scrapy.Spider):
    name = 'stocks'
    # allowed_domains = ['www.eastmoney.com']
    start_urls = [
        'http://99.push2.eastmoney.com/api/qt/clist/get?cb=jQuery112408165941736007347_1603177898272&pn=2&pz=20&po=1&np=1&ut=bd1d9ddb04089700cf9c27f6f7426281&fltt=2&invt=2&fid=f3&fs=m:0+t:6,m:0+t:13,m:0+t:80,m:1+t:2,m:1+t:23&fields=f1,f2,f3,f4,f5,f6,f7,f8,f9,f10,f12,f13,f14,f15,f16,f17,f18,f20,f21,f23,f24,f25,f22,f11,f62,f128,f136,f115,f152&_=1603177898382']

    def parse(self, response):
        req = response.text
        # Capture the contents of the "diff" array in the response
        pat = r'"diff":\[\{(.*?)\}\]'
        data = re.compile(pat, re.S).findall(req)
        datas = data[0].split('},{')  # Split the string into one record per stock
        print("No.\t\tStock code\t\tStock name\t\tLatest price\t\tChange (%)\t\tChange\t\tVolume\t\tTurnover\t\tAmplitude\t\t")
        for i in range(len(datas)):
            item = DfstocksItem()
            # Replace every "key": with a space so that only the values remain
            s = r'"(\w)+":'
            line = re.sub(s, " ", datas[i]).split(",")
            item["number"] = str(i + 1)
            item["f12"] = line[11]
            item["f14"] = line[13]
            item["f2"] = line[1]
            item["f3"] = line[2]
            item["f4"] = line[3]
            item["f5"] = line[4]
            item["f6"] = line[5]
            item["f7"] = line[6]
            print(item["number"] + "\t", item['f12'] + "\t", item['f14'] + "\t", item['f2'] + "\t", item['f3'] + "%\t",
                  item['f4'] + "\t", item['f5'] + "\t", str(item['f6']) + "\t", item['f7'])
            yield item
```
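The spider reads each value by its position after splitting on commas, which depends on the order of the fields in the response. Since the response is JSON wrapped in a callback, a possible alternative is to strip the wrapper and read the fields by key. The sketch below assumes the list sits under `data` → `diff`, which is inferred from the `"diff":[{` pattern the spider matches; the function name `parse_jsonp` and the English column names are made up for illustration, following the headers the spider prints.

```python
import json
import re

# Field codes used by the spider, mapped to the meanings of the columns it prints
FIELD_NAMES = {
    "f12": "code", "f14": "name", "f2": "latest_price", "f3": "change_percent",
    "f4": "change_amount", "f5": "volume", "f6": "turnover", "f7": "amplitude",
}


def parse_jsonp(text: str) -> list:
    """Strip the callback wrapper and return one dict per stock."""
    # Everything between the first "(" and the last ")" is the JSON payload
    match = re.search(r"\((.*)\)", text, re.S)
    if match is None:
        raise ValueError("not a JSONP response")
    payload = json.loads(match.group(1))
    rows = payload["data"]["diff"]  # assumed nesting, see the note above
    return [{name: row.get(code) for code, name in FIELD_NAMES.items()} for row in rows]


if __name__ == "__main__":
    # Hypothetical, heavily shortened response used only for illustration
    sample = 'cb({"data":{"diff":[{"f2":28.47,"f12":"688093","f14":"N世华"}]}});'
    print(parse_jsonp(sample))
```

Fields missing from a record come back as `None`, so a change in field order or a dropped field does not shift the other columns.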

**items**

```python
import scrapy


class DfstocksItem(scrapy.Item):
    number = scrapy.Field()  # serial number
    f12 = scrapy.Field()     # stock code
    f14 = scrapy.Field()     # stock name
    f2 = scrapy.Field()      # latest price
    f3 = scrapy.Field()      # change (%)
    f4 = scrapy.Field()      # change
    f5 = scrapy.Field()      # volume
    f6 = scrapy.Field()      # turnover
    f7 = scrapy.Field()      # amplitude
```

**settings**

```python
SPIDER_MODULES = ["Dfstocks.spiders"]
NEWSPIDER_MODULE = "Dfstocks.spiders"

ROBOTSTXT_OBEY = False

ITEM_PIPELINES = {
    'Dfstocks.pipelines.DfstocksPipeline': 300,
}
```

**pipelines**

```python
from itemadapter import ItemAdapter


class DfstocksPipeline:
    def process_item(self, item, spider):
        return item
```

**run**

```python
from scrapy import cmdline

cmdline.execute("scrapy crawl stocks -s LOG_ENABLED=false".split())
```

Run results:

Gitee link:

https://gitee.com/li-bo-102102157/libo_project/commit/6a02df31f7c62cdb9298bee0207ab76dda230687

2. Reflections

In my first few test runs nothing was printed. After searching online, I found that ROBOTSTXT_OBEY has to be set to False in the settings file.
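A likely explanation is that, with ROBOTSTXT_OBEY enabled, Scrapy drops requests that the site's robots.txt disallows, so `parse` is never called. The sketch below shows the kind of check involved using the standard library's `urllib.robotparser`; the robots.txt content and the example.com addresses are hypothetical and are not taken from the real site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that blocks every path under /api/
ROBOTS_TXT = """\
User-agent: *
Disallow: /api/
"""


def allowed(url: str, user_agent: str = "*") -> bool:
    """Return True if the hypothetical robots.txt above permits fetching url."""
    parser = RobotFileParser()
    parser.parse(ROBOTS_TXT.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    print(allowed("http://example.com/api/qt/clist/get"))  # blocked by the Disallow rule
    print(allowed("http://example.com/index.html"))        # no rule matches this path
```

Setting ROBOTSTXT_OBEY to False skips this check entirely, which is why the results started to appear.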

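Assignment 3:

- Requirements: Become proficient at serializing and outputting data with Scrapy's Item and Pipeline; crawl data from a foreign exchange website using the Scrapy framework + XPath + MySQL storage approach.

- Candidate website: Bank of China: https://www.boc.cn/sourcedb/whpj/

1. Code, run results, and Gitee link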

**currency**

```python
import scrapy
from bs4 import UnicodeDammit

from ..items import BankItem


class CurrencySpider(scrapy.Spider):
    name = 'currency'
    # allowed_domains = ['fx.cmbchina.com/hq/']
    start_urls = ['http://fx.cmbchina.com/hq//']

    def parse(self, response):
        i = 1
        try:
            # Detect the page encoding (utf-8 or gbk) before parsing
            dammit = UnicodeDammit(response.body, ["utf-8", "gbk"])
            data = dammit.unicode_markup
            selector = scrapy.Selector(text=data)
            trs = selector.xpath("//div[@id='realRateInfo']/table/tr")
            for tr in trs[1:]:  # The first tr is the table header, so skip it
                id = i
                Currency = tr.xpath("./td[@class='fontbold'][position()=1]/text()").extract_first()
                TSP = tr.xpath("./td[@class='numberright'][position()=1]/text()").extract_first()
                CSP = tr.xpath("./td[@class='numberright'][position()=2]/text()").extract_first()
                TBP = tr.xpath("./td[@class='numberright'][position()=3]/text()").extract_first()
                CBP = tr.xpath("./td[@class='numberright'][position()=4]/text()").extract_first()
                Time = tr.xpath("./td[@align='center'][position()=3]/text()").extract_first()
                item = BankItem()
                item["id"] = id
                item["Currency"] = Currency.strip() if Currency else ""
                item["TSP"] = TSP.strip() if TSP else ""
                item["CSP"] = CSP.strip() if CSP else ""
                item["TBP"] = TBP.strip() if TBP else ""
                item["CBP"] = CBP.strip() if CBP else ""
                item["Time"] = Time.strip() if Time else ""
                i += 1
                yield item
        except Exception as err:
            print(err)
```

**items**

```python
import scrapy


class BankItem(scrapy.Item):
    id = scrapy.Field()
    Currency = scrapy.Field()
    TSP = scrapy.Field()
    CSP = scrapy.Field()
    TBP = scrapy.Field()
    CBP = scrapy.Field()
    Time = scrapy.Field()
```

**pipelines**

```python
import pymysql
from itemadapter import ItemAdapter


class BankPipeline:
    def open_spider(self, spider):
        print("opened")
        try:
            self.con = pymysql.connect(host="127.0.0.1", port=3306, user="root", passwd="lb159357", db="bb",
                                       charset="utf8")
            self.cursor = self.con.cursor(pymysql.cursors.DictCursor)
            # Clear the rows left over from the previous run
            self.cursor.execute("delete from money")
            self.opened = True
            self.count = 1
        except Exception as err:
            print(err)
            self.opened = False

    def close_spider(self, spider):
        if self.opened:
            self.con.commit()
            self.con.close()
            self.opened = False
        print("closed")
        # print("Crawled", self.count, "books in total")

    def process_item(self, item, spider):
        try:
            print(item["id"])
            print(item["Currency"])
            print(item["TSP"])
            print(item["CSP"])
            print(item["TBP"])
            print(item["CBP"])
            print(item["Time"])
            print()
            if self.opened:
                # Pass the values as parameters so that pymysql escapes them
                self.cursor.execute(
                    "insert into money (id, Currency, TSP, CSP, TBP, CBP, Time) values (%s, %s, %s, "
                    "%s, %s, %s, %s)",
                    (item["id"], item["Currency"], item["TSP"], item["CSP"], item["TBP"], item["CBP"], item["Time"]))
        except Exception as err:
            print(err)
        return item
```
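The pipeline expects a `money` table to exist already and inserts each item with a parameterized statement. To try the same pattern without a MySQL server, the sketch below swaps in the standard library's `sqlite3` module with an in-memory database. This is a stand-in and not the storage used in this assignment: the column types are assumptions, the sample row is invented, and `sqlite3` writes placeholders as `?` where pymysql writes `%s`.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Create an in-memory database with a money table (column types are assumed)."""
    con = sqlite3.connect(":memory:")
    con.execute(
        "create table money (id integer, Currency text, TSP text, CSP text, "
        "TBP text, CBP text, Time text)")
    return con


def save_rate(con: sqlite3.Connection, item: dict) -> None:
    """Insert one item; the values travel as parameters, never as string concatenation."""
    con.execute(
        "insert into money (id, Currency, TSP, CSP, TBP, CBP, Time) values (?, ?, ?, ?, ?, ?, ?)",
        (item["id"], item["Currency"], item["TSP"], item["CSP"],
         item["TBP"], item["CBP"], item["Time"]))


if __name__ == "__main__":
    con = make_db()
    # Invented sample row, not real exchange-rate data
    save_rate(con, {"id": 1, "Currency": "USD", "TSP": "1.00", "CSP": "1.00",
                    "TBP": "1.00", "CBP": "1.00", "Time": "00:00:00"})
    con.commit()
    print(con.execute("select id, Currency from money").fetchall())
```

Keeping the SQL text and the values separate lets the driver handle quoting, which matters for currency names or timestamps that contain unexpected characters.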

**run**

```python
from scrapy import cmdline

cmdline.execute("scrapy crawl currency -s LOG_ENABLED=false".split())
```

Run results:

Gitee link:

https://gitee.com/li-bo-102102157/libo_project/commit/b7acfb7f75a2021fb086889d65de770e27ce8f32

2. Reflections

With the experience from the first two assignments, this one was easy to implement.