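I. About This Exercise

1. Environment

1. The exercise runs on an ECS cloud server.

2. This is a personal test environment; use caution before applying any of it in production.

3. The goal is to study Docker container resource management and deepen understanding of Docker containers.

2. Log in to the ECS cloud server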

II. Checking the Docker Environment

1. Check the Docker version

[root@ecs-7501 ~]# docker version

Client: Docker Engine - Community

Version: 20.10.21

API version: 1.41

Go version: go1.18.7

Git commit: baeda1f

Built: Tue Oct 25 18:04:24 2022

OS/Arch: linux/amd64

Context: default

Experimental: true

Server: Docker Engine - Community

Engine:

Version: 20.10.21

API version: 1.41 (minimum version 1.12)

Go version: go1.18.7

Git commit: 3056208

Built: Tue Oct 25 18:02:38 2022

OS/Arch: linux/amd64

Experimental: false

containerd:

Version: 1.6.10

GitCommit: 770bd0108c32f3fb5c73ae1264f7e503fe7b2661

runc:

Version: 1.1.4

GitCommit: v1.1.4-0-g5fd4c4d

docker-init:

Version: 0.19.0

GitCommit: de40ad0

2. Check the Docker service status

[root@ecs-7501 ~]# systemctl status docker

● docker.service - Docker Application Container Engine

Loaded: loaded (/usr/lib/systemd/system/docker.service; disabled; vendor preset: disabled)

Active: active (running) since Sun 2022-10-23 14:37:48 CST; 15s ago

Docs: https://docs.docker.com

Main PID: 1810 (dockerd)

Tasks: 7

Memory: 23.2M

CGroup: /system.slice/docker.service

└─1810 /usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock

Oct 23 14:37:48 ecs-7501 dockerd[1810]: time="2022-10-23T14:37:48.134923744+08:00" level=info msg="scheme \"unix\" not re...e=grpc

Oct 23 14:37:48 ecs-7501 dockerd[1810]: time="2022-10-23T14:37:48.134934583+08:00" level=info msg="ccResolverWrapper: sen...e=grpc

Oct 23 14:37:48 ecs-7501 dockerd[1810]: time="2022-10-23T14:37:48.134940773+08:00" level=info msg="ClientConn switching b...e=grpc

Oct 23 14:37:48 ecs-7501 dockerd[1810]: time="2022-10-23T14:37:48.161045570+08:00" level=info msg="Loading containers: start."

Oct 23 14:37:48 ecs-7501 dockerd[1810]: time="2022-10-23T14:37:48.276742555+08:00" level=info msg="Default bridge (docker...dress"

Oct 23 14:37:48 ecs-7501 dockerd[1810]: time="2022-10-23T14:37:48.315899127+08:00" level=info msg="Loading containers: done."

Oct 23 14:37:48 ecs-7501 dockerd[1810]: time="2022-10-23T14:37:48.329845594+08:00" level=info msg="Docker daemon" commit=....10.18

Oct 23 14:37:48 ecs-7501 dockerd[1810]: time="2022-10-23T14:37:48.329910922+08:00" level=info msg="Daemon has completed i...ation"

Oct 23 14:37:48 ecs-7501 dockerd[1810]: time="2022-10-23T14:37:48.352070554+08:00" level=info msg="API listen on /var/run....sock"

Oct 23 14:37:48 ecs-7501 systemd[1]: Started Docker Application Container Engine.

Hint: Some lines were ellipsized, use -l to show in full.

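III. Container Resource Limits

1. The stress container

The stress container packages the Linux stress-testing tool stress and can load-test CPU, memory, I/O, and other resources.

2. Run a stress container to exercise the container memory limit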

[root@ecs-7501 ~]# docker run -it -m 200M progrium/stress --vm 1 --vm-bytes 150M

stress: info: [1] dispatching hogs: 0 cpu, 0 io, 1 vm, 0 hdd

stress: dbug: [1] using backoff sleep of 3000us

stress: dbug: [1] --> hogvm worker 1 [7] forked

stress: dbug: [7] allocating 157286400 bytes ...

stress: dbug: [7] touching bytes in strides of 4096 bytes ...

stress: dbug: [7] freed 157286400 bytes

stress: dbug: [7] allocating 157286400 bytes ...

stress: dbug: [7] touching bytes in strides of 4096 bytes ...

stress: dbug: [7] freed 157286400 bytes

stress: dbug: [7] allocating 157286400 bytes ...

stress: dbug: [7] touching bytes in strides of 4096 bytes ...

stress: dbug: [7] freed 157286400 bytes

stress: dbug: [7] allocating 157286400 bytes ...

stress: dbug: [7] touching bytes in strides of 4096 bytes ...

stress: dbug: [7] freed 157286400 bytes

stress: dbug: [7] allocating 157286400 bytes ...

stress: dbug: [7] touching bytes in strides of 4096 bytes ...

stress: dbug: [7] freed 157286400 bytes

stress: dbug: [7] allocating 157286400 bytes ...

stress: dbug: [7] touching bytes in strides of 4096 bytes ...

stress: dbug: [7] freed 157286400 bytes

stress: dbug: [7] allocating 157286400 bytes ...

stress: dbug: [7] touching bytes in strides of 4096 bytes ...

stress: dbug: [7] freed 157286400 bytes

stress: dbug: [7] allocating 157286400 bytes ...

stress: dbug: [7] touching bytes in strides of 4096 bytes ...

stress: dbug: [7] freed 157286400 bytes

stress: dbug: [7] allocating 157286400 bytes ...

stress: dbug: [7] touching bytes in strides of 4096 bytes ...

stress: dbug: [7] freed 157286400 bytes

stress: dbug: [7] allocating 157286400 bytes ...

stress: dbug: [7] touching bytes in strides of 4096 bytes ...

stress: dbug: [7] freed 157286400 bytes

[root@ecs-7501 ~]# docker run -it -m 200M progrium/stress --vm 1 --vm-bytes 250M

stress: info: [1] dispatching hogs: 0 cpu, 0 io, 1 vm, 0 hdd

stress: dbug: [1] using backoff sleep of 3000us

stress: dbug: [1] --> hogvm worker 1 [7] forked

stress: dbug: [7] allocating 262144000 bytes ...

stress: dbug: [7] touching bytes in strides of 4096 bytes ...

stress: FAIL: [1] (416) <-- worker 7 got signal 9

stress: WARN: [1] (418) now reaping child worker processes

stress: FAIL: [1] (422) kill error: No such process

stress: FAIL: [1] (452) failed run completed in 0s
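The two runs above can be checked with a little arithmetic: stress reports 157286400 bytes for 150M and 262144000 bytes for 250M, and the second worker is killed with signal 9 once it passes the 200M limit. The sketch below is illustrative only. `to_bytes` and `fits_limit` are hypothetical helper names, and it assumes the `M` suffix means MiB (1048576 bytes), which matches the byte counts in the output.

```shell
#!/bin/sh
# Hypothetical helpers (not part of Docker or stress).

# Convert a size such as "150M" to bytes, treating M as MiB (1048576 bytes).
to_bytes() {
    echo $(( ${1%M} * 1048576 ))
}

# Print "fits" if the allocation is within the limit, otherwise "exceeds".
fits_limit() {
    if [ "$(to_bytes "$1")" -le "$(to_bytes "$2")" ]; then
        echo "fits"
    else
        echo "exceeds"
    fi
}

echo "150M = $(to_bytes 150M) bytes: $(fits_limit 150M 200M) the 200M limit"
echo "250M = $(to_bytes 250M) bytes: $(fits_limit 250M 200M) the 200M limit"
```

The comparison ignores the small amount of memory that stress itself uses, so it is only a rough check.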

3. Run a stress container to exercise the memory and swap limits

[root@ecs-7501 ~]# docker run -it -m 300M --memory-swap=400M progrium/stress --vm 2 --vm-bytes 100M

stress: info: [1] dispatching hogs: 0 cpu, 0 io, 2 vm, 0 hdd

stress: dbug: [1] using backoff sleep of 6000us

stress: dbug: [1] --> hogvm worker 2 [7] forked

stress: dbug: [1] using backoff sleep of 3000us

stress: dbug: [1] --> hogvm worker 1 [8] forked

stress: dbug: [8] allocating 104857600 bytes ...

stress: dbug: [8] touching bytes in strides of 4096 bytes ...

stress: dbug: [7] allocating 104857600 bytes ...

stress: dbug: [7] touching bytes in strides of 4096 bytes ...

stress: dbug: [7] freed 104857600 bytes

stress: dbug: [7] allocating 104857600 bytes ...

stress: dbug: [7] touching bytes in strides of 4096 bytes ...

stress: dbug: [8] freed 104857600 bytes

stress: dbug: [8] allocating 104857600 bytes ...

stress: dbug: [8] touching bytes in strides of 4096 bytes ...

stress: dbug: [7] freed 104857600 bytes

stress: dbug: [7] allocating 104857600 bytes ...

stress: dbug: [7] touching bytes in strides of 4096 bytes ...

stress: dbug: [8] freed 104857600 bytes

stress: dbug: [8] allocating 104857600 bytes ...

stress: dbug: [8] touching bytes in strides of 4096 bytes ...

stress: dbug: [7] freed 104857600 bytes

stress: dbug: [7] allocating 104857600 bytes ...

stress: dbug: [7] touching bytes in strides of 4096 bytes ...

stress: dbug: [8] freed 104857600 bytes

stress: dbug: [8] allocating 104857600 bytes ...

stress: dbug: [8] touching bytes in strides of 4096 bytes ...

stress: dbug: [8] freed 104857600 bytes

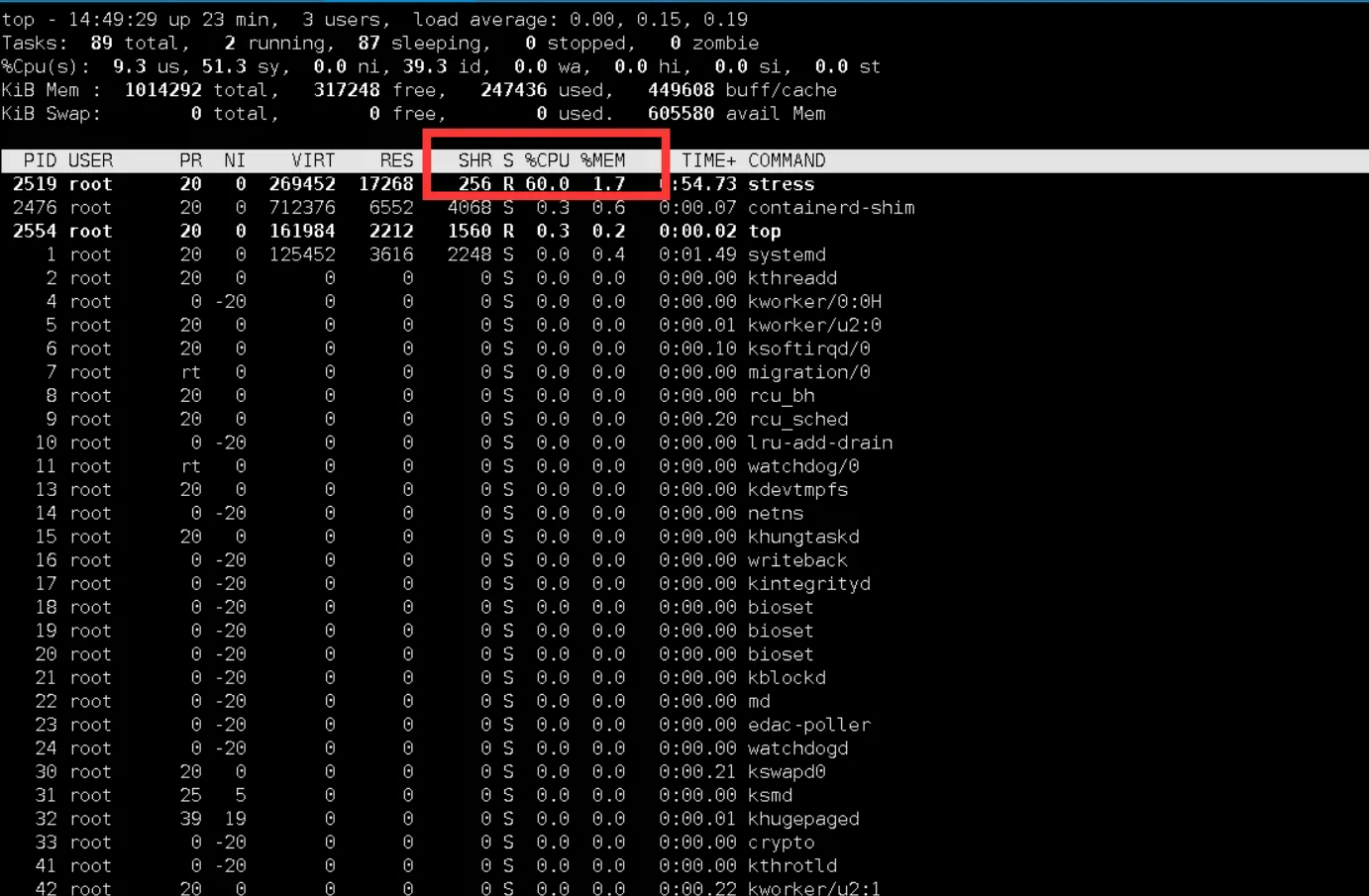
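In `docker run`, `--memory-swap` is the combined total of memory plus swap, so `-m 300M --memory-swap=400M` leaves 100M of swap. The two workers together ask for 200M, which stays under the limit, and this is why the run above keeps looping without being killed. A minimal sketch of that arithmetic, using a hypothetical helper name `swap_mib` and plain MiB integers:

```shell
#!/bin/sh
# Hypothetical helper: swap available to the container, in MiB.
# Arguments: the -m value and the --memory-swap value (memory + swap total).
swap_mib() {
    echo $(( $2 - $1 ))
}

memory=300       # -m 300M
memory_swap=400  # --memory-swap=400M
workers=2        # --vm 2
per_worker=100   # --vm-bytes 100M

echo "swap available: $(swap_mib "$memory" "$memory_swap")M"
echo "peak demand: $(( workers * per_worker ))M of ${memory_swap}M total"
```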

4. Run a stress container to exercise the CPU usage limit

① Run the test container

docker run -it --cpus=0.6 progrium/stress --vm 1

② Open a new terminal and check CPU usage

5. Run three stress containers to check CPU share weights

docker run -itd --cpu-shares 2048 progrium/stress --cpu 1

docker run -itd --cpu-shares 1024 progrium/stress --cpu 1

docker run -itd --cpu-shares 512 progrium/stress --cpu 1
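CPU shares are relative weights, so they only matter when containers compete for the same CPU. As a rough sketch, assuming all three containers contend for a single core, each one's expected slice is its share divided by the sum of all shares. `share_pct` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: expected CPU percentage for one container.
# First argument: this container's shares.
# Remaining arguments: shares of every contending container (including this one).
share_pct() {
    mine=$1
    shift
    total=0
    for w in "$@"; do
        total=$(( total + w ))
    done
    awk -v m="$mine" -v t="$total" 'BEGIN { printf "%.1f\n", m * 100 / t }'
}

for shares in 2048 1024 512; do
    echo "--cpu-shares $shares -> $(share_pct "$shares" 2048 1024 512)%"
done
```

On a host with enough idle cores, each single-threaded worker can still reach close to 100% of a core, because the weights are not hard caps.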

6. Run a test container to exercise the I/O limit

① Run a test container

[root@ecs-7501 ~]# docker run -it --device-write-bps /dev/vda:50MB centos

Unable to find image 'centos:latest' locally

latest: Pulling from library/centos

a1d0c7532777: Pull complete

Digest: sha256:a27fd8080b517143cbbbab9dfb7c8571c40d67d534bbdee55bd6c473f432b177

Status: Downloaded newer image for centos:latest

② Test disk write throughput

[root@ecs-7501 ~]# time dd if=/dev/zero of=test.out bs=1M count=200 oflag=direct

200+0 records in

200+0 records out

209715200 bytes (210 MB) copied, 1.258 s, 167 MB/s

real 0m1.261s

user 0m0.000s

sys 0m0.047s
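As a sanity check, a 200 MiB direct write throttled to 50 MiB/s should need roughly 4 seconds. The run above finished in 1.258 s at 167 MB/s, and its prompt is the host's (`ecs-7501`), so it appears to measure the unthrottled host rather than the container; repeating the same `dd` inside the container would be the way to see the limit take effect. The sketch below only does the arithmetic, and `min_seconds` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: minimum seconds needed to write SIZE (MiB)
# at a throttled RATE (MiB per second).
min_seconds() {
    awk -v size="$1" -v rate="$2" 'BEGIN { printf "%.1f\n", size / rate }'
}

echo "200 MiB at 50 MiB/s takes at least $(min_seconds 200 50) s"
```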

IV. Container cgroup Management

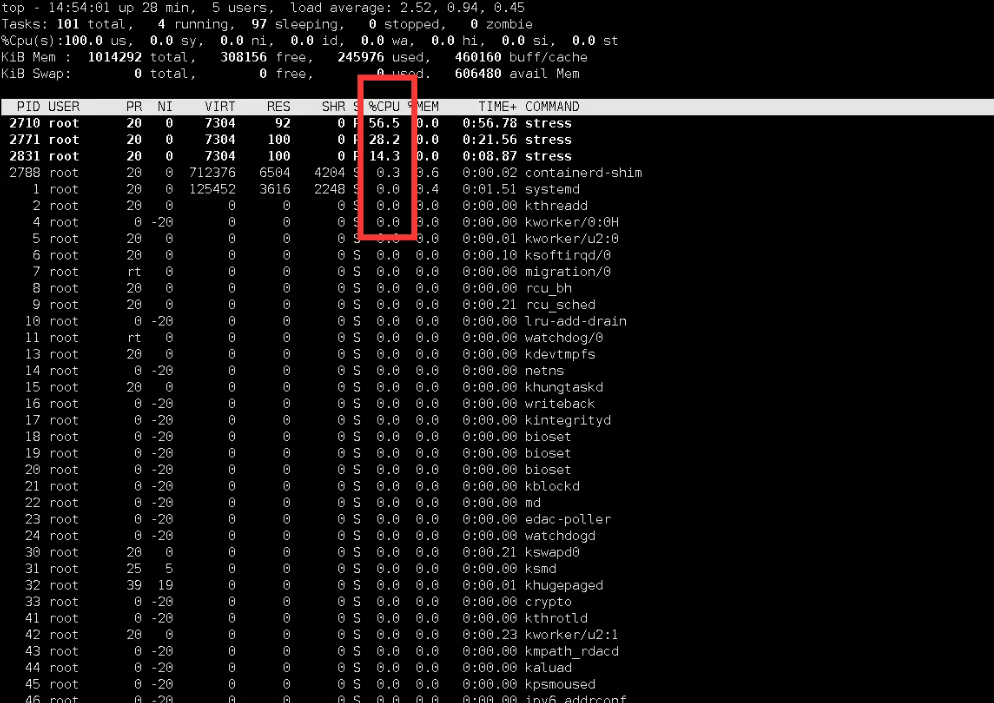

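1. Run a stress container and verify the cgroup settings for the memory limit

① Create the test container

Run a stress container with memory and swap limits.

docker run -itd -m 300M --memory-swap=400M progrium/stress --vm 2 --vm-bytes 100M

② Inspect the memory limit configuration files

(The cgroup memory subsystem lives at /sys/fs/cgroup/memory/docker/<full container ID>/.) The limits are stored in the memory.limit_in_bytes and memory.memsw.limit_in_bytes files.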

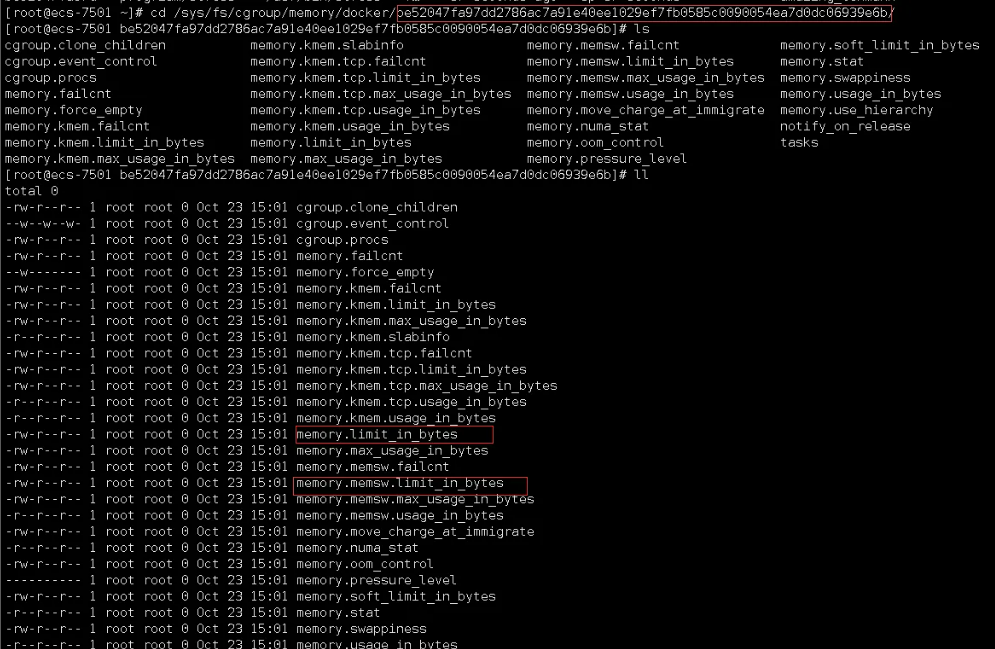
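The two files hold plain byte counts, so reading them back in MiB makes it easier to compare with the `-m 300M --memory-swap=400M` flags. This is a minimal sketch that assumes the cgroup v1 layout described above. `limit_mib` is a hypothetical helper, and `CONTAINER_ID` is a placeholder for the full ID printed by `docker run -d`.

```shell
#!/bin/sh
# Hypothetical helper: print a cgroup limit file's value in MiB,
# or "unreadable" if the file does not exist or cannot be read.
limit_mib() {
    if [ -r "$1" ]; then
        echo $(( $(cat "$1") / 1048576 ))
    else
        echo "unreadable"
    fi
}

# Assumption: the full container ID is passed as the first argument.
cid=${1:-CONTAINER_ID}
dir="/sys/fs/cgroup/memory/docker/$cid"
echo "memory limit (MiB):        $(limit_mib "$dir/memory.limit_in_bytes")"
echo "memory + swap limit (MiB): $(limit_mib "$dir/memory.memsw.limit_in_bytes")"
```

For the container started above, the values should correspond to 300 and 400.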

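2. Run a stress container and verify the cgroup settings for the CPU quota

① Run the test container

docker run -itd --cpus=0.7 progrium/stress --vm 1

② Inspect the CPU quota configuration files

Press Ctrl+C to exit. Using the container ID, query the cgroup cpu subsystem to verify the CPU quota.

(The cgroup cpu subsystem lives at /sys/fs/cgroup/cpu/docker/<full container ID>/.) The CPU quota is stored in the cpu.cfs_quota_us and cpu.cfs_period_us files.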

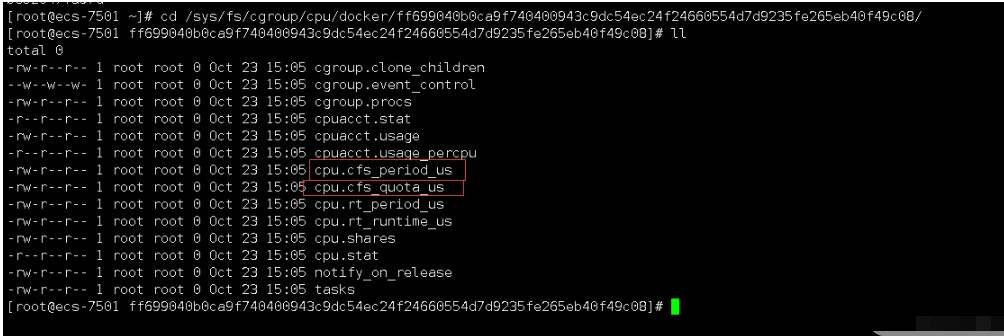

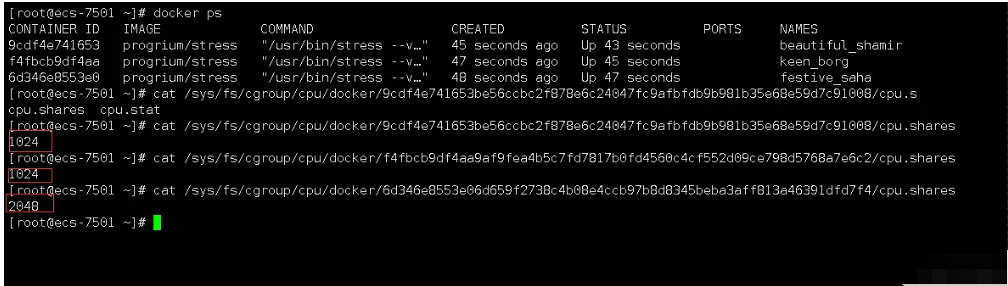
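The relationship between `--cpus` and the two files is a simple ratio: the quota is the `--cpus` value multiplied by the period. A minimal sketch, assuming Docker's default CFS period of 100000 microseconds; `cpus_to_quota` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: CFS quota in microseconds for a --cpus value.
# Second argument is the period; it defaults to 100000 us (assumed default).
cpus_to_quota() {
    awk -v c="$1" -v p="${2:-100000}" 'BEGIN { printf "%.0f\n", c * p }'
}

echo "--cpus=0.7 -> cpu.cfs_quota_us = $(cpus_to_quota 0.7)"
echo "--cpus=0.6 -> cpu.cfs_quota_us = $(cpus_to_quota 0.6)"
```

Dividing cpu.cfs_quota_us by cpu.cfs_period_us gives back the fraction of a CPU that the container may use.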

3. Run stress containers and verify the cgroup settings for CPU share weights

① Run three test containers

docker run -itd --cpu-shares 2048 progrium/stress --cpu 1

docker run -itd --cpu-shares 1024 progrium/stress --cpu 1

docker run -itd --cpu-shares 1024 progrium/stress --cpu 1

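② Check CPU usage with top

③ Inspect the CPU weight configuration file

Run the three stress containers in turn so that they compete for the host's CPU, each with its own CPU weight. Using each container ID, query the cgroup cpu subsystem to verify the CPU weight. (The cgroup cpu subsystem lives at /sys/fs/cgroup/cpu/docker/<full container ID>/.) The weight is stored in the cpu.shares file.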

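4. Run a test container and verify the cgroup settings for the I/O limit

① Run the test container

Run a test container with a write-bandwidth limit. Using the container ID, query the cgroup blkio subsystem to verify the limit. (The cgroup blkio subsystem lives at /sys/fs/cgroup/blkio/.) The write-bandwidth limit is stored in the blkio.throttle.write_bps_device file.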

[root@ecs-7501 ~]# docker run -it --device-write-bps /dev/vda:70MB centos

[root@253cb36d48a8 /]# cat /sys/fs/cgroup/blkio/blkio.throttle.write_bps_device

253:0 73400320

② Check the host's disks

[root@ecs-7501 ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

vda 253:0 0 40G 0 disk

└─vda1 253:1 0 40G 0 part /
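The line `253:0 73400320` reads as device number followed by bytes per second: 253:0 is the MAJ:MIN of vda in the lsblk output, and 73400320 bytes is 70 MiB, matching `--device-write-bps /dev/vda:70MB`. A small sketch that makes the conversion explicit; `describe_bps` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: turn "MAJ:MIN BYTES_PER_SEC" into "MAJ:MIN <n>MiB/s".
describe_bps() {
    echo "$1" | awk '{ printf "%s %dMiB/s\n", $1, $2 / 1048576 }'
}

describe_bps "253:0 73400320"
```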

V. Container Namespace Management

1. Create a test container and verify the hostname in both the container and the host

① Create the test container

[root@ecs-7501 ~]# docker run -d -t -h container centos

fa8e526d3355f49e1a2db0ec7864f6bcfd43e7044c575ca786e968e92c465181

② Check the hostname inside the container

[root@ecs-7501 ~]# docker exec -it fa8e /bin/bash

[root@container /]# hostname

container

③ Verify the host's hostname

[root@ecs-7501 ~]# hostname

ecs-7501
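The different hostnames come from the container running in its own UTS namespace. One way to see this, sketched below under the assumption of a Linux host with /proc mounted, is to compare the namespace links under /proc/<pid>/ns. `ns_of` and `compare_ns` are hypothetical helper names.

```shell
#!/bin/sh
# Hypothetical helper: print a process's namespace identifier
# (a string of the form "uts:[<inode>]"), or "unavailable" if the
# /proc/<pid>/ns link cannot be read.
ns_of() {
    readlink "/proc/$1/ns/$2" 2>/dev/null || echo "unavailable"
}

# Hypothetical helper: report whether two namespace identifiers match.
compare_ns() {
    if [ "$1" = "$2" ]; then
        echo "shared"
    else
        echo "isolated"
    fi
}

echo "this shell's UTS namespace: $(ns_of "$$" uts)"
```

On the host, the container's PID can be obtained with `docker inspect -f '{{.State.Pid}}' fa8e`; passing that PID and the shell's own PID through `ns_of` and then `compare_ns` would be expected to report `isolated` for the container created above.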

2. Verify container process information

① Enter the container

[root@ecs-7501 ~]# docker exec -it fa8e /bin/bash

[root@container /]#

② List the processes inside the container

[root@ecs-7501 ~]# docker exec -it fa8e /bin/bash

[root@container /]# ps

PID TTY TIME CMD

30 pts/1 00:00:00 bash

44 pts/1 00:00:00 ps

③ List the host's processes

3. Create a user inside the container

[root@container /]# ls

bin dev etc home lib lib64 lost+found media mnt opt proc root run sbin srv sys tmp usr var

[root@container /]# useradd container

[root@container /]# su - container

[container@container ~]$ id container

uid=1000(container) gid=1000(container) groups=1000(container)

[container@container ~]$ exit

logout

[root@container /]# exit

exit

[root@ecs-7501 ~]# id container

id: container: no such user