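The source code shown here is taken from FreeRTOS V9.0.0.

Queues

1. Overview

FreeRTOS queues support communication between tasks, and between tasks and interrupts. They are the primary inter-task communication mechanism in FreeRTOS.

Messages are passed through a queue by copy, not by reference: the bytes of the item are copied into the queue's own storage area.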
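The copy semantics can be illustrated with a small host-side model. This is only a sketch: `CopySlot` and the `copy_slot_*` functions are hypothetical names invented for this example, not FreeRTOS APIs, and the model holds a single `int`. It shows that the receiver gets the value as it was at the time of the send, even if the sender changes its variable afterwards.

```c
#include <assert.h>
#include <string.h>

/* Hypothetical one-slot model, not the FreeRTOS implementation. */
typedef struct {
    unsigned char storage[sizeof(int)]; /* the queue's own copy of the item */
    int           full;                 /* 1 when storage holds an item */
} CopySlot;

/* Copy the item's bytes into the slot, as a queue send does. */
static int copy_slot_send(CopySlot *slot, const int *item)
{
    if (slot->full) {
        return 0;
    }
    memcpy(slot->storage, item, sizeof *item);
    slot->full = 1;
    return 1;
}

/* Copy the stored bytes out into the caller's buffer. */
static int copy_slot_receive(CopySlot *slot, int *out)
{
    if (!slot->full) {
        return 0;
    }
    memcpy(out, slot->storage, sizeof *out);
    slot->full = 0;
    return 1;
}

/* Send a value, change the sender's variable, then receive. */
static int copy_slot_demo(void)
{
    CopySlot slot = { {0}, 0 };
    int value = 42;
    int received = 0;

    (void) copy_slot_send(&slot, &value);
    value = 7;                        /* the sender reuses its variable */
    (void) copy_slot_receive(&slot, &received);
    return received;                  /* the value at the time of the send */
}
```

Because the item is copied, the sender's variable may live on the stack and be reused immediately after the send returns.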

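Besides basic first-in, first-out behavior, a queue also supports writing an item to its front.

FreeRTOS builds on the queue to implement mutexes, binary semaphores, counting semaphores, and recursive mutexes.

2. API

// Create a queue dynamically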

QueueHandle_t xQueueCreate(UBaseType_t uxQueueLength, UBaseType_t uxItemSize);

// Create a queue statically

QueueHandle_t xQueueCreateStatic(UBaseType_t uxQueueLength, UBaseType_t uxItemSize, uint8_t *pucQueueStorageBuffer, StaticQueue_t *pxQueueBuffer);

// Delete a queue; its memory is freed only if it was created dynamically

void vQueueDelete(QueueHandle_t xQueue);

// Write an item to the back of the queue; if the queue is full, block for up to xTicksToWait ticks

BaseType_t xQueueSend(QueueHandle_t xQueue, const void *pvItemToQueue, TickType_t xTicksToWait);

BaseType_t xQueueSendToBack(QueueHandle_t xQueue, const void *pvItemToQueue, TickType_t xTicksToWait);

// Write an item to the back of the queue; may be called from an ISR and never blocks

BaseType_t xQueueSendToBackFromISR(QueueHandle_t xQueue, const void *pvItemToQueue,BaseType_t *pxHigherPriorityTaskWoken);

// Write an item to the front of the queue; if the queue is full, block for up to xTicksToWait ticks

BaseType_t xQueueSendToFront(QueueHandle_t xQueue, const void *pvItemToQueue,TickType_t xTicksToWait);

// Write an item to the front of the queue; may be called from an ISR and never blocks

BaseType_t xQueueSendToFrontFromISR(QueueHandle_t xQueue, const void *pvItemToQueue, BaseType_t *pxHigherPriorityTaskWoken);

// Read an item from the front of the queue, blocking for up to xTicksToWait ticks; the item is removed from the queue once it has been read

BaseType_t xQueueReceive(QueueHandle_t xQueue,void * const pvBuffer, TickType_t xTicksToWait);

// Read an item from the front of the queue; may be called from an ISR and never blocks; the item is removed from the queue once it has been read

BaseType_t xQueueReceiveFromISR(QueueHandle_t xQueue, void *pvBuffer, BaseType_t *pxTaskWoken);

3. Queue implementation

3.1 Queue types

/* For internal use only. These definitions *must* match those in queue.c. */

#define queueQUEUE_TYPE_BASE ( ( uint8_t ) 0U )

#define queueQUEUE_TYPE_SET ( ( uint8_t ) 0U )

#define queueQUEUE_TYPE_MUTEX ( ( uint8_t ) 1U )

#define queueQUEUE_TYPE_COUNTING_SEMAPHORE ( ( uint8_t ) 2U )

#define queueQUEUE_TYPE_BINARY_SEMAPHORE ( ( uint8_t ) 3U )

#define queueQUEUE_TYPE_RECURSIVE_MUTEX ( ( uint8_t ) 4U )

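In addition to ordinary queues, FreeRTOS implements mutexes, binary semaphores, counting semaphores, and recursive mutexes on top of the same structure.

3.2 Implementation differences between the types

- Ordinary queue: length non-zero, item size non-zero, type queueQUEUE_TYPE_BASE;

- Binary semaphore: length 1, item size 0, type queueQUEUE_TYPE_BINARY_SEMAPHORE;

- Counting semaphore: length non-zero, item size 0, type queueQUEUE_TYPE_COUNTING_SEMAPHORE;

- Mutex: length 1, item size 0, type queueQUEUE_TYPE_MUTEX;

- Recursive mutex: length 1, item size 0, type queueQUEUE_TYPE_RECURSIVE_MUTEX.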
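With an item size of 0 there is nothing to copy, so a semaphore built on a queue reduces to a bounded counter. The sketch below is a hypothetical host-side model (`SemModel`, `sem_model_give`, and `sem_model_take` are names invented here, and blocking is left out): the two fields play the roles of uxLength and uxMessagesWaiting.

```c
#include <assert.h>

/* Hypothetical model: a queue whose item size is 0 only tracks a count. */
typedef struct {
    unsigned length;   /* plays the role of uxLength */
    unsigned waiting;  /* plays the role of uxMessagesWaiting */
} SemModel;

/* "Give": like a non-blocking send; fails when the count is at its maximum. */
static int sem_model_give(SemModel *s)
{
    if (s->waiting >= s->length) {
        return 0;
    }
    s->waiting++;
    return 1;
}

/* "Take": like a non-blocking receive; fails when the count is 0. */
static int sem_model_take(SemModel *s)
{
    if (s->waiting == 0) {
        return 0;
    }
    s->waiting--;
    return 1;
}
```

A model with `length` 1 behaves like a binary semaphore; a larger `length` behaves like a counting semaphore. Mutex-specific behavior such as priority inheritance is not modeled.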

3.3 Queue data structure

typedef struct QueueDefinition

{

int8_t *pcHead; /*< Points to the beginning of the queue storage area. */

int8_t *pcTail; /*< Points to the byte at the end of the queue storage area. Once more byte is allocated than necessary to store the queue items, this is used as a marker. */

int8_t *pcWriteTo; /*< Points to the free next place in the storage area. */

union /* Use of a union is an exception to the coding standard to ensure two mutually exclusive structure members don't appear simultaneously (wasting RAM). */

{

int8_t *pcReadFrom; /*< Points to the last place that a queued item was read from when the structure is used as a queue. */

UBaseType_t uxRecursiveCallCount;/*< Maintains a count of the number of times a recursive mutex has been recursively 'taken' when the structure is used as a mutex. */

} u;

List_t xTasksWaitingToSend; /*< List of tasks that are blocked waiting to post onto this queue. Stored in priority order. */

List_t xTasksWaitingToReceive; /*< List of tasks that are blocked waiting to read from this queue. Stored in priority order. */

volatile UBaseType_t uxMessagesWaiting;/*< The number of items currently in the queue. */

UBaseType_t uxLength; /*< The length of the queue defined as the number of items it will hold, not the number of bytes. */

UBaseType_t uxItemSize; /*< The size of each items that the queue will hold. */

volatile int8_t cRxLock; /*< Stores the number of items received from the queue (removed from the queue) while the queue was locked. Set to queueUNLOCKED when the queue is not locked. */

volatile int8_t cTxLock; /*< Stores the number of items transmitted to the queue (added to the queue) while the queue was locked. Set to queueUNLOCKED when the queue is not locked. */

#if( ( configSUPPORT_STATIC_ALLOCATION == 1 ) && ( configSUPPORT_DYNAMIC_ALLOCATION == 1 ) )

uint8_t ucStaticallyAllocated; /*< Set to pdTRUE if the memory used by the queue was statically allocated to ensure no attempt is made to free the memory. */

#endif

#if ( configUSE_QUEUE_SETS == 1 )

struct QueueDefinition *pxQueueSetContainer;

#endif

#if ( configUSE_TRACE_FACILITY == 1 )

UBaseType_t uxQueueNumber;

uint8_t ucQueueType;

#endif

} xQUEUE;

/* The old xQUEUE name is maintained above then typedefed to the new Queue_t

name below to enable the use of older kernel aware debuggers. */

typedef xQUEUE Queue_t;

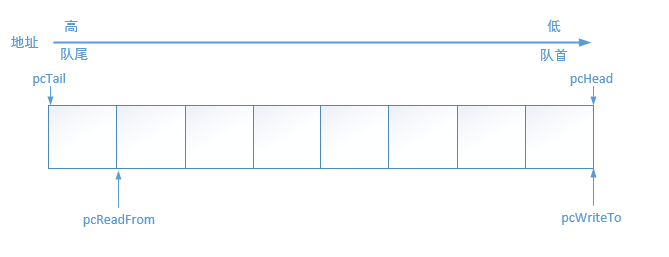

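The queue's storage area is one contiguous block of memory.

- pcHead: points to the start of the queue storage area;

- pcTail: points to the end of the queue storage area, that is, the byte just past the last item slot;

- pcWriteTo: points to the next free position to write to in the storage area;

- pcReadFrom: union member, used when the structure is a queue; points to the slot that was read most recently, so a read first advances it by one item and then copies from it;

- uxRecursiveCallCount: union member, used when the structure is a recursive mutex; counts how many times the mutex has been taken recursively;

- xTasksWaitingToSend: list of tasks blocked waiting to send to this queue because it is full;

- xTasksWaitingToReceive: list of tasks blocked waiting to receive from this queue because it is empty;

- uxMessagesWaiting: number of items currently in the queue;

- uxLength: capacity of the queue, in items;

- uxItemSize: size of each item in the queue, in bytes;

- cRxLock: while the queue is locked, counts the items received (removed) from the queue; the value queueUNLOCKED means the queue is not locked;

- cTxLock: while the queue is locked, counts the items sent (added) to the queue; the value queueUNLOCKED means the queue is not locked;

- ucStaticallyAllocated: set to pdTRUE when the queue's memory was statically allocated, so that no attempt is made to free it.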

3.4 Source code analysis

3.4.1 Creating a queue dynamically

3.4.1.1 Source

#if( configSUPPORT_DYNAMIC_ALLOCATION == 1 )

#define xQueueCreate( uxQueueLength, uxItemSize ) xQueueGenericCreate( ( uxQueueLength ), ( uxItemSize ), ( queueQUEUE_TYPE_BASE ) )

#endif

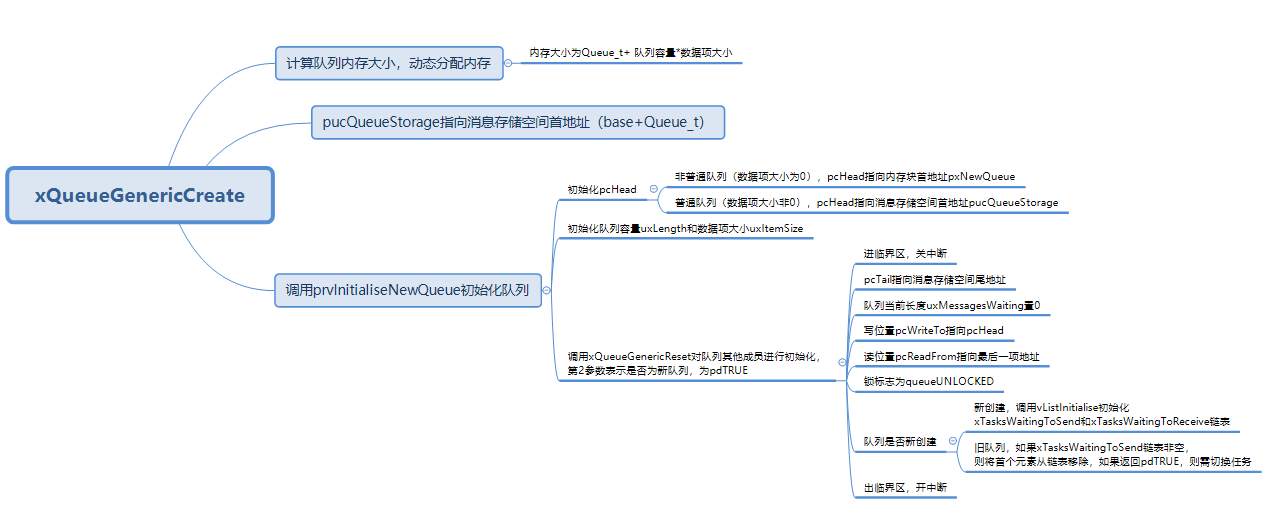

The xQueueCreate macro creates a queue dynamically. It expands to a call to xQueueGenericCreate with the queue type set to queueQUEUE_TYPE_BASE.

The source of xQueueGenericCreate is as follows:

#if( configSUPPORT_DYNAMIC_ALLOCATION == 1 )

QueueHandle_t xQueueGenericCreate( const UBaseType_t uxQueueLength, const UBaseType_t uxItemSize, const uint8_t ucQueueType )

{

Queue_t *pxNewQueue;

size_t xQueueSizeInBytes;

uint8_t *pucQueueStorage;

configASSERT( uxQueueLength > ( UBaseType_t ) 0 );

if( uxItemSize == ( UBaseType_t ) 0 )

{

/* There is not going to be a queue storage area. */

xQueueSizeInBytes = ( size_t ) 0;

}

else

{

/* Allocate enough space to hold the maximum number of items that

can be in the queue at any time. */

xQueueSizeInBytes = ( size_t ) ( uxQueueLength * uxItemSize ); /*lint !e961 MISRA exception as the casts are only redundant for some ports. */

}

pxNewQueue = ( Queue_t * ) pvPortMalloc( sizeof( Queue_t ) + xQueueSizeInBytes );

if( pxNewQueue != NULL )

{

/* Jump past the queue structure to find the location of the queue

storage area. */

pucQueueStorage = ( ( uint8_t * ) pxNewQueue ) + sizeof( Queue_t );

#if( configSUPPORT_STATIC_ALLOCATION == 1 )

{

/* Queues can be created either statically or dynamically, so

note this task was created dynamically in case it is later

deleted. */

pxNewQueue->ucStaticallyAllocated = pdFALSE;

}

#endif /* configSUPPORT_STATIC_ALLOCATION */

prvInitialiseNewQueue( uxQueueLength, uxItemSize, pucQueueStorage, ucQueueType, pxNewQueue );

}

return pxNewQueue;

}

#endif /* configSUPPORT_STATIC_ALLOCATION */

It calls prvInitialiseNewQueue to initialize the queue. The source is as follows:

static void prvInitialiseNewQueue( const UBaseType_t uxQueueLength, const UBaseType_t uxItemSize, uint8_t *pucQueueStorage, const uint8_t ucQueueType, Queue_t *pxNewQueue )

{

/* Remove compiler warnings about unused parameters should

configUSE_TRACE_FACILITY not be set to 1. */

( void ) ucQueueType;

if( uxItemSize == ( UBaseType_t ) 0 )

{

/* No RAM was allocated for the queue storage area, but PC head cannot

be set to NULL because NULL is used as a key to say the queue is used as

a mutex. Therefore just set pcHead to point to the queue as a benign

value that is known to be within the memory map. */

pxNewQueue->pcHead = ( int8_t * ) pxNewQueue;

}

else

{

/* Set the head to the start of the queue storage area. */

pxNewQueue->pcHead = ( int8_t * ) pucQueueStorage;

}

/* Initialise the queue members as described where the queue type is

defined. */

pxNewQueue->uxLength = uxQueueLength;

pxNewQueue->uxItemSize = uxItemSize;

( void ) xQueueGenericReset( pxNewQueue, pdTRUE );

#if ( configUSE_TRACE_FACILITY == 1 )

{

pxNewQueue->ucQueueType = ucQueueType;

}

#endif /* configUSE_TRACE_FACILITY */

#if( configUSE_QUEUE_SETS == 1 )

{

pxNewQueue->pxQueueSetContainer = NULL;

}

#endif /* configUSE_QUEUE_SETS */

traceQUEUE_CREATE( pxNewQueue );

}

Initialization calls xQueueGenericReset. The source is as follows:

BaseType_t xQueueGenericReset( QueueHandle_t xQueue, BaseType_t xNewQueue )

{

Queue_t * const pxQueue = ( Queue_t * ) xQueue;

configASSERT( pxQueue );

taskENTER_CRITICAL();

{

pxQueue->pcTail = pxQueue->pcHead + ( pxQueue->uxLength * pxQueue->uxItemSize );

pxQueue->uxMessagesWaiting = ( UBaseType_t ) 0U;

pxQueue->pcWriteTo = pxQueue->pcHead;

pxQueue->u.pcReadFrom = pxQueue->pcHead + ( ( pxQueue->uxLength - ( UBaseType_t ) 1U ) * pxQueue->uxItemSize );

pxQueue->cRxLock = queueUNLOCKED;

pxQueue->cTxLock = queueUNLOCKED;

if( xNewQueue == pdFALSE )

{

/* If there are tasks blocked waiting to read from the queue, then

the tasks will remain blocked as after this function exits the queue

will still be empty. If there are tasks blocked waiting to write to

the queue, then one should be unblocked as after this function exits

it will be possible to write to it. */

if( listLIST_IS_EMPTY( &( pxQueue->xTasksWaitingToSend ) ) == pdFALSE )

{

if( xTaskRemoveFromEventList( &( pxQueue->xTasksWaitingToSend ) ) != pdFALSE )

{

queueYIELD_IF_USING_PREEMPTION();

}

else

{

mtCOVERAGE_TEST_MARKER();

}

}

else

{

mtCOVERAGE_TEST_MARKER();

}

}

else

{

/* Ensure the event queues start in the correct state. */

vListInitialise( &( pxQueue->xTasksWaitingToSend ) );

vListInitialise( &( pxQueue->xTasksWaitingToReceive ) );

}

}

taskEXIT_CRITICAL();

/* A value is returned for calling semantic consistency with previous

versions. */

return pdPASS;

}

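3.4.1.2 Analysis

Notes on the call to xTaskRemoveFromEventList in xQueueGenericReset:

- xTasksWaitingToSend is an ordered list of tasks blocked on sending, sorted from highest to lowest priority;

- The tasks in xTasksWaitingToSend are in the Blocked state: each of them tried to send while the queue was full;

- A reset empties the queue, so it is no longer full. One blocked sender must therefore be removed from the event list and moved to the ready list, which is what xTaskRemoveFromEventList does;

- The return value indicates whether the unblocked task has a higher priority than the current task: pdTRUE means higher, pdFALSE means not higher. When pdTRUE is returned, the current task must yield so that the higher-priority task runs first.

After the reset, pcHead and pcWriteTo point to the start of the storage area, pcTail points just past its end, and u.pcReadFrom points to the last item slot, so that the first read wraps around to pcHead.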
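The pointer assignments made by xQueueGenericReset can be checked with a small host-side model. This is a sketch under assumptions: `ResetModel` and `reset_model` are hypothetical names, and offsets relative to pcHead are stored instead of real pointers.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical model: offsets from pcHead stand in for the kernel's pointers. */
typedef struct {
    size_t length;     /* uxLength: capacity in items */
    size_t item_size;  /* uxItemSize: bytes per item */
    size_t tail;       /* pcTail - pcHead */
    size_t write_to;   /* pcWriteTo - pcHead */
    size_t read_from;  /* u.pcReadFrom - pcHead */
    size_t waiting;    /* uxMessagesWaiting */
} ResetModel;

/* Mirror the assignments made inside xQueueGenericReset. */
static void reset_model(ResetModel *q, size_t length, size_t item_size)
{
    assert(length > 0);
    q->length    = length;
    q->item_size = item_size;
    q->tail      = length * item_size;        /* one past the last slot */
    q->write_to  = 0;                         /* first write goes to the head */
    q->read_from = (length - 1) * item_size;  /* last slot; first read wraps */
    q->waiting   = 0;
}
```

For example, a queue of 4 items of 8 bytes each ends up with `tail` at offset 32 and `read_from` at offset 24.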

3.4.2 Creating a queue statically

3.4.2.1 Source

#if( configSUPPORT_STATIC_ALLOCATION == 1 )

#define xQueueCreateStatic( uxQueueLength, uxItemSize, pucQueueStorage, pxQueueBuffer ) xQueueGenericCreateStatic( ( uxQueueLength ), ( uxItemSize ), ( pucQueueStorage ), ( pxQueueBuffer ), ( queueQUEUE_TYPE_BASE ) )

#endif /* configSUPPORT_STATIC_ALLOCATION */

The xQueueCreateStatic macro creates a queue statically. It expands to a call to xQueueGenericCreateStatic with the queue type set to queueQUEUE_TYPE_BASE.

#if( configSUPPORT_STATIC_ALLOCATION == 1 )

QueueHandle_t xQueueGenericCreateStatic( const UBaseType_t uxQueueLength, const UBaseType_t uxItemSize, uint8_t *pucQueueStorage, StaticQueue_t *pxStaticQueue, const uint8_t ucQueueType )

{

Queue_t *pxNewQueue;

configASSERT( uxQueueLength > ( UBaseType_t ) 0 );

/* The StaticQueue_t structure and the queue storage area must be

supplied. */

configASSERT( pxStaticQueue != NULL );

/* A queue storage area should be provided if the item size is not 0, and

should not be provided if the item size is 0. */

configASSERT( !( ( pucQueueStorage != NULL ) && ( uxItemSize == 0 ) ) );

configASSERT( !( ( pucQueueStorage == NULL ) && ( uxItemSize != 0 ) ) );

#if( configASSERT_DEFINED == 1 )

{

/* Sanity check that the size of the structure used to declare a

variable of type StaticQueue_t or StaticSemaphore_t equals the size of

the real queue and semaphore structures. */

volatile size_t xSize = sizeof( StaticQueue_t );

configASSERT( xSize == sizeof( Queue_t ) );

}

#endif /* configASSERT_DEFINED */

/* The address of a statically allocated queue was passed in, use it.

The address of a statically allocated storage area was also passed in

but is already set. */

pxNewQueue = ( Queue_t * ) pxStaticQueue; /*lint !e740 Unusual cast is ok as the structures are designed to have the same alignment, and the size is checked by an assert. */

if( pxNewQueue != NULL )

{

#if( configSUPPORT_DYNAMIC_ALLOCATION == 1 )

{

/* Queues can be allocated wither statically or dynamically, so

note this queue was allocated statically in case the queue is

later deleted. */

pxNewQueue->ucStaticallyAllocated = pdTRUE;

}

#endif /* configSUPPORT_DYNAMIC_ALLOCATION */

prvInitialiseNewQueue( uxQueueLength, uxItemSize, pucQueueStorage, ucQueueType, pxNewQueue );

}

return pxNewQueue;

}

#endif /* configSUPPORT_STATIC_ALLOCATION */

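3.4.2.2 Analysis

Unlike dynamic creation, static creation requires the caller to allocate the memory in advance and to pass in both the address of the item storage buffer and the address of the queue structure (a StaticQueue_t).

Notes on the assertions in xQueueGenericCreateStatic: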

configASSERT( !( ( pucQueueStorage != NULL ) && ( uxItemSize == 0 ) ) );

configASSERT( !( ( pucQueueStorage == NULL ) && ( uxItemSize != 0 ) ) );

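- For an ordinary queue, the arguments must satisfy: pucQueueStorage != NULL && uxItemSize != 0;

- For a mutex, binary semaphore, counting semaphore, or recursive mutex, the arguments must satisfy: pucQueueStorage == NULL && uxItemSize == 0.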
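The two assertions together say that a storage buffer is supplied if and only if the item size is non-zero. A minimal sketch of the same check as a plain function (`static_args_consistent` is a hypothetical helper, not part of FreeRTOS):

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical helper: returns 1 when the storage pointer and the item size
   agree, 0 when one of the two configASSERT conditions would fail. */
static int static_args_consistent(const void *storage, size_t item_size)
{
    if (storage != NULL && item_size == 0) {
        return 0;   /* buffer supplied but nothing to store */
    }
    if (storage == NULL && item_size != 0) {
        return 0;   /* items to store but no buffer */
    }
    return 1;
}
```

Only the combinations (buffer, non-zero size) and (NULL, zero size) pass.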

3.4.3 Deleting a queue

3.4.3.1 Source

void vQueueDelete( QueueHandle_t xQueue )

{

Queue_t * const pxQueue = ( Queue_t * ) xQueue;

configASSERT( pxQueue );

traceQUEUE_DELETE( pxQueue );

#if ( configQUEUE_REGISTRY_SIZE > 0 )

{

vQueueUnregisterQueue( pxQueue );

}

#endif

#if( ( configSUPPORT_DYNAMIC_ALLOCATION == 1 ) && ( configSUPPORT_STATIC_ALLOCATION == 0 ) )

{

/* The queue can only have been allocated dynamically - free it

again. */

vPortFree( pxQueue );

}

#elif( ( configSUPPORT_DYNAMIC_ALLOCATION == 1 ) && ( configSUPPORT_STATIC_ALLOCATION == 1 ) )

{

/* The queue could have been allocated statically or dynamically, so

check before attempting to free the memory. */

if( pxQueue->ucStaticallyAllocated == ( uint8_t ) pdFALSE )

{

vPortFree( pxQueue );

}

else

{

mtCOVERAGE_TEST_MARKER();

}

}

#else

{

/* The queue must have been statically allocated, so is not going to be

deleted. Avoid compiler warnings about the unused parameter. */

( void ) pxQueue;

}

#endif /* configSUPPORT_DYNAMIC_ALLOCATION */

}

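3.4.3.2 Analysis

For a dynamically created queue, deletion essentially comes down to freeing the memory. For a statically created queue, nothing is freed.

For an analysis of vPortFree, see Memory Management.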

3.4.4 Sending a message

3.4.4.1 xQueueGenericSend source

#define xQueueSendToFront( xQueue, pvItemToQueue, xTicksToWait ) xQueueGenericSend( ( xQueue ), ( pvItemToQueue ), ( xTicksToWait ), queueSEND_TO_FRONT )

#define xQueueSendToBack( xQueue, pvItemToQueue, xTicksToWait ) xQueueGenericSend( ( xQueue ), ( pvItemToQueue ), ( xTicksToWait ), queueSEND_TO_BACK )

#define xQueueSend( xQueue, pvItemToQueue, xTicksToWait ) xQueueGenericSend( ( xQueue ), ( pvItemToQueue ), ( xTicksToWait ), queueSEND_TO_BACK )

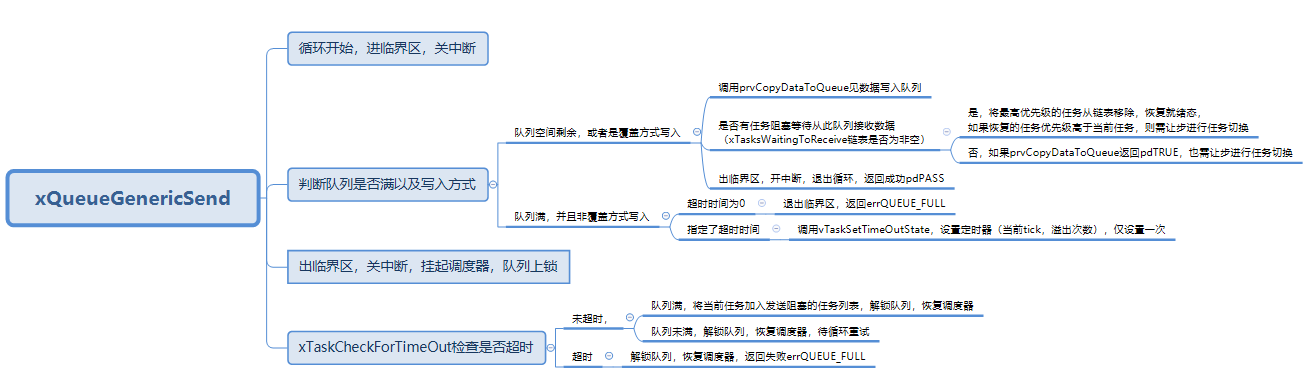

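FreeRTOS can send to either the back or the front of a queue. The three macros above implement this; each expands to a call to xQueueGenericSend, and the last argument selects the copy position: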

#define queueSEND_TO_BACK ( ( BaseType_t ) 0 )

#define queueSEND_TO_FRONT ( ( BaseType_t ) 1 )

#define queueOVERWRITE ( ( BaseType_t ) 2 )
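The third position, queueOVERWRITE, is only valid for a queue of length 1 (xQueueGenericSend asserts this) and writes even when the queue is full. The sketch below is a hypothetical host-side model of such a one-item queue (`MailboxModel` and the `mailbox_*` functions are names invented here); its count handling follows the overwrite branch of prvCopyDataToQueue.

```c
#include <assert.h>
#include <string.h>

/* Hypothetical model of a length-1 queue holding one int. */
typedef struct {
    unsigned char storage[sizeof(int)];
    unsigned      waiting;   /* plays the role of uxMessagesWaiting: 0 or 1 */
} MailboxModel;

/* Ordinary send: refuses to write when the single slot is occupied. */
static int mailbox_send(MailboxModel *m, const int *item)
{
    if (m->waiting >= 1) {
        return 0;
    }
    memcpy(m->storage, item, sizeof *item);
    m->waiting = 1;
    return 1;
}

/* Overwrite: always writes; the count ends at 1 either way. */
static int mailbox_overwrite(MailboxModel *m, const int *item)
{
    unsigned waiting = m->waiting;

    memcpy(m->storage, item, sizeof *item);
    if (waiting > 0) {
        --waiting;            /* an item is replaced, not added */
    }
    m->waiting = waiting + 1;
    return 1;
}

/* Copy the stored item out without removing it. */
static int mailbox_peek(const MailboxModel *m, int *out)
{
    if (m->waiting == 0) {
        return 0;
    }
    memcpy(out, m->storage, sizeof *out);
    return 1;
}
```

After a send of 1 followed by an overwrite with 2, the model still holds exactly one item, and that item is 2.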

xQueueGenericSend is implemented as follows:

BaseType_t xQueueGenericSend( QueueHandle_t xQueue, const void * const pvItemToQueue, TickType_t xTicksToWait, const BaseType_t xCopyPosition )

{

BaseType_t xEntryTimeSet = pdFALSE, xYieldRequired;

TimeOut_t xTimeOut;

Queue_t * const pxQueue = ( Queue_t * ) xQueue;

configASSERT( pxQueue );

configASSERT( !( ( pvItemToQueue == NULL ) && ( pxQueue->uxItemSize != ( UBaseType_t ) 0U ) ) );

configASSERT( !( ( xCopyPosition == queueOVERWRITE ) && ( pxQueue->uxLength != 1 ) ) );

#if ( ( INCLUDE_xTaskGetSchedulerState == 1 ) || ( configUSE_TIMERS == 1 ) )

{

configASSERT( !( ( xTaskGetSchedulerState() == taskSCHEDULER_SUSPENDED ) && ( xTicksToWait != 0 ) ) );

}

#endif

/* This function relaxes the coding standard somewhat to allow return

statements within the function itself. This is done in the interest

of execution time efficiency. */

for( ;; )

{

taskENTER_CRITICAL();

{

/* Is there room on the queue now? The running task must be the

highest priority task wanting to access the queue. If the head item

in the queue is to be overwritten then it does not matter if the

queue is full. */

if( ( pxQueue->uxMessagesWaiting < pxQueue->uxLength ) || ( xCopyPosition == queueOVERWRITE ) )

{

traceQUEUE_SEND( pxQueue );

xYieldRequired = prvCopyDataToQueue( pxQueue, pvItemToQueue, xCopyPosition );

#if ( configUSE_QUEUE_SETS == 1 )

{

if( pxQueue->pxQueueSetContainer != NULL )

{

if( prvNotifyQueueSetContainer( pxQueue, xCopyPosition ) != pdFALSE )

{

/* The queue is a member of a queue set, and posting

to the queue set caused a higher priority task to

unblock. A context switch is required. */

queueYIELD_IF_USING_PREEMPTION();

}

else

{

mtCOVERAGE_TEST_MARKER();

}

}

else

{

/* If there was a task waiting for data to arrive on the

queue then unblock it now. */

if( listLIST_IS_EMPTY( &( pxQueue->xTasksWaitingToReceive ) ) == pdFALSE )

{

if( xTaskRemoveFromEventList( &( pxQueue->xTasksWaitingToReceive ) ) != pdFALSE )

{

/* The unblocked task has a priority higher than

our own so yield immediately. Yes it is ok to

do this from within the critical section - the

kernel takes care of that. */

queueYIELD_IF_USING_PREEMPTION();

}

else

{

mtCOVERAGE_TEST_MARKER();

}

}

else if( xYieldRequired != pdFALSE )

{

/* This path is a special case that will only get

executed if the task was holding multiple mutexes

and the mutexes were given back in an order that is

different to that in which they were taken. */

queueYIELD_IF_USING_PREEMPTION();

}

else

{

mtCOVERAGE_TEST_MARKER();

}

}

}

#else /* configUSE_QUEUE_SETS */

{

/* If there was a task waiting for data to arrive on the

queue then unblock it now. */

if( listLIST_IS_EMPTY( &( pxQueue->xTasksWaitingToReceive ) ) == pdFALSE )

{

if( xTaskRemoveFromEventList( &( pxQueue->xTasksWaitingToReceive ) ) != pdFALSE )

{

/* The unblocked task has a priority higher than

our own so yield immediately. Yes it is ok to do

this from within the critical section - the kernel

takes care of that. */

queueYIELD_IF_USING_PREEMPTION();

}

else

{

mtCOVERAGE_TEST_MARKER();

}

}

else if( xYieldRequired != pdFALSE )

{

/* This path is a special case that will only get

executed if the task was holding multiple mutexes and

the mutexes were given back in an order that is

different to that in which they were taken. */

queueYIELD_IF_USING_PREEMPTION();

}

else

{

mtCOVERAGE_TEST_MARKER();

}

}

#endif /* configUSE_QUEUE_SETS */

taskEXIT_CRITICAL();

return pdPASS;

}

else

{

if( xTicksToWait == ( TickType_t ) 0 )

{

/* The queue was full and no block time is specified (or

the block time has expired) so leave now. */

taskEXIT_CRITICAL();

/* Return to the original privilege level before exiting

the function. */

traceQUEUE_SEND_FAILED( pxQueue );

return errQUEUE_FULL;

}

else if( xEntryTimeSet == pdFALSE )

{

/* The queue was full and a block time was specified so

configure the timeout structure. */

vTaskSetTimeOutState( &xTimeOut );

xEntryTimeSet = pdTRUE;

}

else

{

/* Entry time was already set. */

mtCOVERAGE_TEST_MARKER();

}

}

}

taskEXIT_CRITICAL();

/* Interrupts and other tasks can send to and receive from the queue

now the critical section has been exited. */

vTaskSuspendAll();

prvLockQueue( pxQueue );

/* Update the timeout state to see if it has expired yet. */

if( xTaskCheckForTimeOut( &xTimeOut, &xTicksToWait ) == pdFALSE )

{

if( prvIsQueueFull( pxQueue ) != pdFALSE )

{

traceBLOCKING_ON_QUEUE_SEND( pxQueue );

vTaskPlaceOnEventList( &( pxQueue->xTasksWaitingToSend ), xTicksToWait );

/* Unlocking the queue means queue events can effect the

event list. It is possible that interrupts occurring now

remove this task from the event list again - but as the

scheduler is suspended the task will go onto the pending

ready last instead of the actual ready list. */

prvUnlockQueue( pxQueue );

/* Resuming the scheduler will move tasks from the pending

ready list into the ready list - so it is feasible that this

task is already in a ready list before it yields - in which

case the yield will not cause a context switch unless there

is also a higher priority task in the pending ready list. */

if( xTaskResumeAll() == pdFALSE )

{

portYIELD_WITHIN_API();

}

}

else

{

/* Try again. */

prvUnlockQueue( pxQueue );

( void ) xTaskResumeAll();

}

}

else

{

/* The timeout has expired. */

prvUnlockQueue( pxQueue );

( void ) xTaskResumeAll();

traceQUEUE_SEND_FAILED( pxQueue );

return errQUEUE_FULL;

}

}

}

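3.4.4.2 xQueueGenericSend analysis

Questions:

- Q: Why are copying data into the queue and manipulating pxQueue->xTasksWaitingToReceive done inside a critical section with interrupts disabled, while the handling for a blocking send only suspends the scheduler and locks the queue?

A: The critical section prevents the task from being interrupted by an ISR or preempted by another task while it writes to the queue. ISRs also copy data into the queue and manipulate xTasksWaitingToReceive (see xQueueGenericSendFromISR), so without the critical section the data could be corrupted. The blocking path only needs to suspend the scheduler and lock the queue, because an ISR checks the lock state of the queue: while the queue is locked, the ISR does not touch xTasksWaitingToSend or xTasksWaitingToReceive. It only increments cTxLock or cRxLock and leaves the list updates to the task. Suspending the scheduler keeps other tasks from operating on the queue concurrently; locking the queue keeps ISRs away from the event lists.

- Q: When a send blocks and the queue stays full, does the loop not call vTaskPlaceOnEventList( &( pxQueue->xTasksWaitingToSend ), xTicksToWait ); repeatedly, adding the current task to the blocked list more than once?

A: No. Once the task has been placed on the event list and the scheduler has been resumed, the task does not run again until another task or an ISR removes it from the event list, or until its block time expires. By the time the loop reaches vTaskPlaceOnEventList again, the task has been rescheduled and is no longer on xTasksWaitingToSend, so it cannot be added twice.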
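The deferral described in the first answer can be sketched with a host-side model. Everything here is hypothetical (`LockModel` and the `model_*` functions are invented names), and the behavior is modeled on prvLockQueue and prvUnlockQueue, which this article does not list, so treat it as an assumption rather than the kernel's code. Only the cTxLock side is modeled.

```c
#include <assert.h>

#define MODEL_UNLOCKED          (-1)  /* same value as queueUNLOCKED */
#define MODEL_LOCKED_UNMODIFIED 0     /* same value as queueLOCKED_UNMODIFIED */

typedef struct {
    int tx_lock;            /* plays the role of cTxLock */
    int waiting_receivers;  /* tasks on xTasksWaitingToReceive */
    int woken;              /* receivers unblocked so far */
} LockModel;

/* Lock: mark the queue as locked if it is not locked already. */
static void model_lock(LockModel *q)
{
    if (q->tx_lock == MODEL_UNLOCKED) {
        q->tx_lock = MODEL_LOCKED_UNMODIFIED;
    }
}

/* ISR send: wake a receiver directly only when the queue is unlocked. */
static void model_send_from_isr(LockModel *q)
{
    if (q->tx_lock == MODEL_UNLOCKED) {
        if (q->waiting_receivers > 0) {
            q->waiting_receivers--;
            q->woken++;
        }
    } else {
        q->tx_lock++;   /* remember that an item arrived while locked */
    }
}

/* Unlock: perform the deferred wake-ups, then mark the queue unlocked. */
static void model_unlock(LockModel *q)
{
    while (q->tx_lock > MODEL_LOCKED_UNMODIFIED) {
        if (q->waiting_receivers == 0) {
            break;      /* nobody left to wake */
        }
        q->waiting_receivers--;
        q->woken++;
        q->tx_lock--;
    }
    q->tx_lock = MODEL_UNLOCKED;
}
```

While the model is locked, two ISR sends leave `woken` at 0 and raise `tx_lock` to 2; the two wake-ups happen only inside `model_unlock`.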

3.4.4.3 xQueueGenericSendFromISR source

#define xQueueSendToFrontFromISR( xQueue, pvItemToQueue, pxHigherPriorityTaskWoken ) xQueueGenericSendFromISR( ( xQueue ), ( pvItemToQueue ), ( pxHigherPriorityTaskWoken ), queueSEND_TO_FRONT )

#define xQueueSendToBackFromISR( xQueue, pvItemToQueue, pxHigherPriorityTaskWoken ) xQueueGenericSendFromISR( ( xQueue ), ( pvItemToQueue ), ( pxHigherPriorityTaskWoken ), queueSEND_TO_BACK )

#define xQueueSendFromISR( xQueue, pvItemToQueue, pxHigherPriorityTaskWoken ) xQueueGenericSendFromISR( ( xQueue ), ( pvItemToQueue ), ( pxHigherPriorityTaskWoken ), queueSEND_TO_BACK )

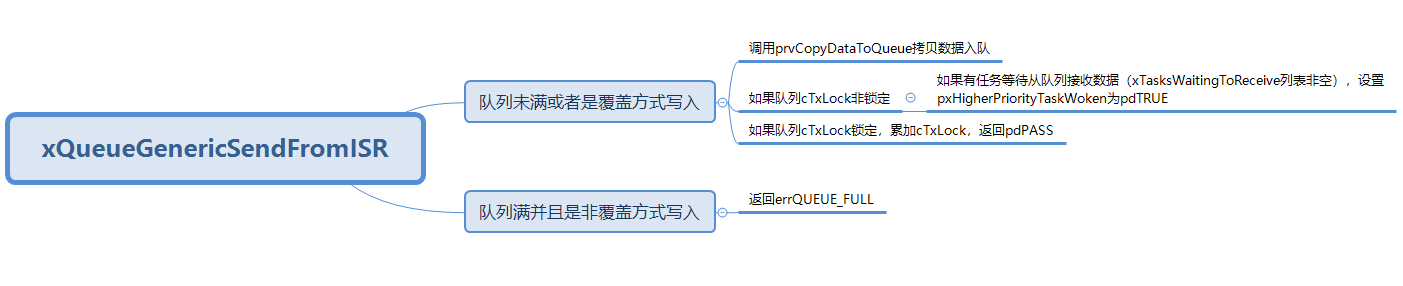

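The send functions intended for interrupt service routines carry the FromISR suffix. They also support sending to the back and to the front through the three macros above, each of which expands to a call to xQueueGenericSendFromISR.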

BaseType_t xQueueGenericSendFromISR( QueueHandle_t xQueue, const void * const pvItemToQueue, BaseType_t * const pxHigherPriorityTaskWoken, const BaseType_t xCopyPosition )

{

BaseType_t xReturn;

UBaseType_t uxSavedInterruptStatus;

Queue_t * const pxQueue = ( Queue_t * ) xQueue;

configASSERT( pxQueue );

configASSERT( !( ( pvItemToQueue == NULL ) && ( pxQueue->uxItemSize != ( UBaseType_t ) 0U ) ) );

configASSERT( !( ( xCopyPosition == queueOVERWRITE ) && ( pxQueue->uxLength != 1 ) ) );

/* RTOS ports that support interrupt nesting have the concept of a maximum

system call (or maximum API call) interrupt priority. Interrupts that are

above the maximum system call priority are kept permanently enabled, even

when the RTOS kernel is in a critical section, but cannot make any calls to

FreeRTOS API functions. If configASSERT() is defined in FreeRTOSConfig.h

then portASSERT_IF_INTERRUPT_PRIORITY_INVALID() will result in an assertion

failure if a FreeRTOS API function is called from an interrupt that has been

assigned a priority above the configured maximum system call priority.

Only FreeRTOS functions that end in FromISR can be called from interrupts

that have been assigned a priority at or (logically) below the maximum

system call interrupt priority. FreeRTOS maintains a separate interrupt

safe API to ensure interrupt entry is as fast and as simple as possible.

More information (albeit Cortex-M specific) is provided on the following

link: http://www.freertos.org/RTOS-Cortex-M3-M4.html */

portASSERT_IF_INTERRUPT_PRIORITY_INVALID();

/* Similar to xQueueGenericSend, except without blocking if there is no room

in the queue. Also don't directly wake a task that was blocked on a queue

read, instead return a flag to say whether a context switch is required or

not (i.e. has a task with a higher priority than us been woken by this

post). */

uxSavedInterruptStatus = portSET_INTERRUPT_MASK_FROM_ISR();

{

if( ( pxQueue->uxMessagesWaiting < pxQueue->uxLength ) || ( xCopyPosition == queueOVERWRITE ) )

{

const int8_t cTxLock = pxQueue->cTxLock;

traceQUEUE_SEND_FROM_ISR( pxQueue );

/* Semaphores use xQueueGiveFromISR(), so pxQueue will not be a

semaphore or mutex. That means prvCopyDataToQueue() cannot result

in a task disinheriting a priority and prvCopyDataToQueue() can be

called here even though the disinherit function does not check if

the scheduler is suspended before accessing the ready lists. */

( void ) prvCopyDataToQueue( pxQueue, pvItemToQueue, xCopyPosition );

/* The event list is not altered if the queue is locked. This will

be done when the queue is unlocked later. */

if( cTxLock == queueUNLOCKED )

{

#if ( configUSE_QUEUE_SETS == 1 )

{

if( pxQueue->pxQueueSetContainer != NULL )

{

if( prvNotifyQueueSetContainer( pxQueue, xCopyPosition ) != pdFALSE )

{

/* The queue is a member of a queue set, and posting

to the queue set caused a higher priority task to

unblock. A context switch is required. */

if( pxHigherPriorityTaskWoken != NULL )

{

*pxHigherPriorityTaskWoken = pdTRUE;

}

else

{

mtCOVERAGE_TEST_MARKER();

}

}

else

{

mtCOVERAGE_TEST_MARKER();

}

}

else

{

if( listLIST_IS_EMPTY( &( pxQueue->xTasksWaitingToReceive ) ) == pdFALSE )

{

if( xTaskRemoveFromEventList( &( pxQueue->xTasksWaitingToReceive ) ) != pdFALSE )

{

/* The task waiting has a higher priority so

record that a context switch is required. */

if( pxHigherPriorityTaskWoken != NULL )

{

*pxHigherPriorityTaskWoken = pdTRUE;

}

else

{

mtCOVERAGE_TEST_MARKER();

}

}

else

{

mtCOVERAGE_TEST_MARKER();

}

}

else

{

mtCOVERAGE_TEST_MARKER();

}

}

}

#else /* configUSE_QUEUE_SETS */

{

if( listLIST_IS_EMPTY( &( pxQueue->xTasksWaitingToReceive ) ) == pdFALSE )

{

if( xTaskRemoveFromEventList( &( pxQueue->xTasksWaitingToReceive ) ) != pdFALSE )

{

/* The task waiting has a higher priority so record that a

context switch is required. */

if( pxHigherPriorityTaskWoken != NULL )

{

*pxHigherPriorityTaskWoken = pdTRUE;

}

else

{

mtCOVERAGE_TEST_MARKER();

}

}

else

{

mtCOVERAGE_TEST_MARKER();

}

}

else

{

mtCOVERAGE_TEST_MARKER();

}

}

#endif /* configUSE_QUEUE_SETS */

}

else

{

/* Increment the lock count so the task that unlocks the queue

knows that data was posted while it was locked. */

pxQueue->cTxLock = ( int8_t ) ( cTxLock + 1 );

}

xReturn = pdPASS;

}

else

{

traceQUEUE_SEND_FROM_ISR_FAILED( pxQueue );

xReturn = errQUEUE_FULL;

}

}

portCLEAR_INTERRUPT_MASK_FROM_ISR( uxSavedInterruptStatus );

return xReturn;

}

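3.4.4.4 xQueueGenericSendFromISR analysis

xQueueGenericSendFromISR is the function to use inside an ISR. It differs from xQueueGenericSend as follows:

- It cannot block, so it has no timeout parameter;

- When data is written to the queue and a task is blocked waiting to receive from it, the function does not switch tasks itself. It sets *pxHigherPriorityTaskWoken to pdTRUE if a higher-priority task was unblocked and leaves the context switch to the caller;

- When the queue is locked (cTxLock is not queueUNLOCKED), it does not touch the list of tasks blocked on receive. It increments cTxLock instead, and the list update is deferred until the queue is unlocked in prvUnlockQueue.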

3.4.4.5 prvCopyDataToQueue source

static BaseType_t prvCopyDataToQueue( Queue_t * const pxQueue, const void *pvItemToQueue, const BaseType_t xPosition )

{

BaseType_t xReturn = pdFALSE;

UBaseType_t uxMessagesWaiting;

/* This function is called from a critical section. */

uxMessagesWaiting = pxQueue->uxMessagesWaiting;

if( pxQueue->uxItemSize == ( UBaseType_t ) 0 )

{

#if ( configUSE_MUTEXES == 1 )

{

if( pxQueue->uxQueueType == queueQUEUE_IS_MUTEX )

{

/* The mutex is no longer being held. */

xReturn = xTaskPriorityDisinherit( ( void * ) pxQueue->pxMutexHolder );

pxQueue->pxMutexHolder = NULL;

}

else

{

mtCOVERAGE_TEST_MARKER();

}

}

#endif /* configUSE_MUTEXES */

}

else if( xPosition == queueSEND_TO_BACK )

{

( void ) memcpy( ( void * ) pxQueue->pcWriteTo, pvItemToQueue, ( size_t ) pxQueue->uxItemSize ); /*lint !e961 !e418 MISRA exception as the casts are only redundant for some ports, plus previous logic ensures a null pointer can only be passed to memcpy() if the copy size is 0. */

pxQueue->pcWriteTo += pxQueue->uxItemSize;

if( pxQueue->pcWriteTo >= pxQueue->pcTail ) /*lint !e946 MISRA exception justified as comparison of pointers is the cleanest solution. */

{

pxQueue->pcWriteTo = pxQueue->pcHead;

}

else

{

mtCOVERAGE_TEST_MARKER();

}

}

else

{

( void ) memcpy( ( void * ) pxQueue->u.pcReadFrom, pvItemToQueue, ( size_t ) pxQueue->uxItemSize ); /*lint !e961 MISRA exception as the casts are only redundant for some ports. */

pxQueue->u.pcReadFrom -= pxQueue->uxItemSize;

if( pxQueue->u.pcReadFrom < pxQueue->pcHead ) /*lint !e946 MISRA exception justified as comparison of pointers is the cleanest solution. */

{

pxQueue->u.pcReadFrom = ( pxQueue->pcTail - pxQueue->uxItemSize );

}

else

{

mtCOVERAGE_TEST_MARKER();

}

if( xPosition == queueOVERWRITE )

{

if( uxMessagesWaiting > ( UBaseType_t ) 0 )

{

/* An item is not being added but overwritten, so subtract

one from the recorded number of items in the queue so when

one is added again below the number of recorded items remains

correct. */

--uxMessagesWaiting;

}

else

{

mtCOVERAGE_TEST_MARKER();

}

}

else

{

mtCOVERAGE_TEST_MARKER();

}

}

pxQueue->uxMessagesWaiting = uxMessagesWaiting + 1;

return xReturn;

}

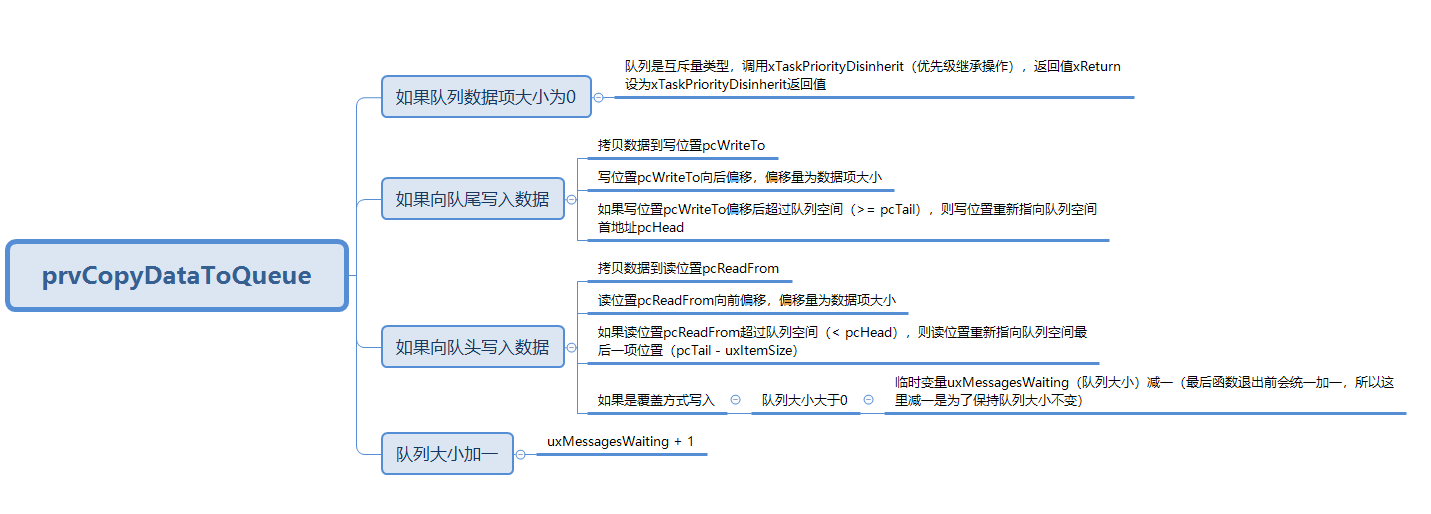

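3.4.4.6 prvCopyDataToQueue analysis

prvCopyDataToQueue copies an item into the queue. It handles three cases. If uxItemSize is 0, no data is copied, and for a mutex the priority inheritance of the holder is undone. For queueSEND_TO_BACK, the item is copied to pcWriteTo, which then advances by one item and wraps back to pcHead when it reaches pcTail. Otherwise (queueSEND_TO_FRONT or queueOVERWRITE), the item is copied to u.pcReadFrom, which then moves back by one item and wraps to the last slot when it falls below pcHead. Finally uxMessagesWaiting is incremented; when a non-empty queue is overwritten, the count is decremented first so that it stays unchanged.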
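The pointer movement can be tried out on a host with a small ring-buffer model. This is a sketch under assumptions: `RingModel` and the `ring_*` functions are hypothetical names, offsets stand in for the kernel's pointers, items are plain `int` values, and the receive side is modeled on prvCopyDataFromQueue, which this article does not list.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define RING_LENGTH 3

/* Hypothetical model: offsets into storage stand in for the kernel's pointers. */
typedef struct {
    unsigned char storage[RING_LENGTH * sizeof(int)];
    size_t tail;       /* pcTail - pcHead */
    size_t write_to;   /* pcWriteTo - pcHead */
    size_t read_from;  /* u.pcReadFrom - pcHead */
    size_t waiting;    /* uxMessagesWaiting */
} RingModel;

static void ring_init(RingModel *q)
{
    q->tail      = sizeof q->storage;
    q->write_to  = 0;
    q->read_from = q->tail - sizeof(int);   /* last slot */
    q->waiting   = 0;
}

/* queueSEND_TO_BACK: write at write_to, then advance with wrap-around. */
static int ring_send_back(RingModel *q, int item)
{
    if (q->waiting == RING_LENGTH) return 0;
    memcpy(q->storage + q->write_to, &item, sizeof item);
    q->write_to += sizeof item;
    if (q->write_to >= q->tail) q->write_to = 0;
    q->waiting++;
    return 1;
}

/* queueSEND_TO_FRONT: write at read_from, then step back with wrap-around. */
static int ring_send_front(RingModel *q, int item)
{
    if (q->waiting == RING_LENGTH) return 0;
    memcpy(q->storage + q->read_from, &item, sizeof item);
    if (q->read_from == 0) q->read_from = q->tail - sizeof item;
    else                   q->read_from -= sizeof item;
    q->waiting++;
    return 1;
}

/* Receive: advance read_from first (with wrap-around), then copy out. */
static int ring_receive(RingModel *q, int *out)
{
    if (q->waiting == 0) return 0;
    q->read_from += sizeof *out;
    if (q->read_from >= q->tail) q->read_from = 0;
    memcpy(out, q->storage + q->read_from, sizeof *out);
    q->waiting--;
    return 1;
}
```

Sending 1 and 2 to the back and then 0 to the front yields 0, 1, 2 on receive: the front write lands in the slot that the next read will reach first.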

3.4.5 Receiving a message

3.4.5.1 xQueueReceive source

#define xQueueReceive( xQueue, pvBuffer, xTicksToWait ) xQueueGenericReceive( ( xQueue ), ( pvBuffer ), ( xTicksToWait ), pdFALSE )

The xQueueReceive macro expands to a call to xQueueGenericReceive.

BaseType_t xQueueGenericReceive( QueueHandle_t xQueue, void * const pvBuffer, TickType_t xTicksToWait, const BaseType_t xJustPeeking )

{

BaseType_t xEntryTimeSet = pdFALSE;

TimeOut_t xTimeOut;

int8_t *pcOriginalReadPosition;

Queue_t * const pxQueue = ( Queue_t * ) xQueue;

configASSERT( pxQueue );

configASSERT( !( ( pvBuffer == NULL ) && ( pxQueue->uxItemSize != ( UBaseType_t ) 0U ) ) );

#if ( ( INCLUDE_xTaskGetSchedulerState == 1 ) || ( configUSE_TIMERS == 1 ) )

{

configASSERT( !( ( xTaskGetSchedulerState() == taskSCHEDULER_SUSPENDED ) && ( xTicksToWait != 0 ) ) );

}

#endif

/* This function relaxes the coding standard somewhat to allow return

statements within the function itself. This is done in the interest

of execution time efficiency. */

for( ;; )

{

taskENTER_CRITICAL();

{

const UBaseType_t uxMessagesWaiting = pxQueue->uxMessagesWaiting;

/* Is there data in the queue now? To be running the calling task

must be the highest priority task wanting to access the queue. */

if( uxMessagesWaiting > ( UBaseType_t ) 0 )

{

/* Remember the read position in case the queue is only being

peeked. */

pcOriginalReadPosition = pxQueue->u.pcReadFrom;

prvCopyDataFromQueue( pxQueue, pvBuffer );

if( xJustPeeking == pdFALSE )

{

traceQUEUE_RECEIVE( pxQueue );

/* Actually removing data, not just peeking. */

pxQueue->uxMessagesWaiting = uxMessagesWaiting - 1;

#if ( configUSE_MUTEXES == 1 )

{

if( pxQueue->uxQueueType == queueQUEUE_IS_MUTEX )

{

/* Record the information required to implement

priority inheritance should it become necessary. */

pxQueue->pxMutexHolder = ( int8_t * ) pvTaskIncrementMutexHeldCount(); /*lint !e961 Cast is not redundant as TaskHandle_t is a typedef. */

}

else

{

mtCOVERAGE_TEST_MARKER();

}

}

#endif /* configUSE_MUTEXES */

if( listLIST_IS_EMPTY( &( pxQueue->xTasksWaitingToSend ) ) == pdFALSE )

{

if( xTaskRemoveFromEventList( &( pxQueue->xTasksWaitingToSend ) ) != pdFALSE )

{

queueYIELD_IF_USING_PREEMPTION();

}

else

{

mtCOVERAGE_TEST_MARKER();

}

}

else

{

mtCOVERAGE_TEST_MARKER();

}

}

else

{

traceQUEUE_PEEK( pxQueue );

/* The data is not being removed, so reset the read

pointer. */

pxQueue->u.pcReadFrom = pcOriginalReadPosition;

/* The data is being left in the queue, so see if there are

any other tasks waiting for the data. */

if( listLIST_IS_EMPTY( &( pxQueue->xTasksWaitingToReceive ) ) == pdFALSE )

{

if( xTaskRemoveFromEventList( &( pxQueue->xTasksWaitingToReceive ) ) != pdFALSE )

{

/* The task waiting has a higher priority than this task. */

queueYIELD_IF_USING_PREEMPTION();

}

else

{

mtCOVERAGE_TEST_MARKER();

}

}

else

{

mtCOVERAGE_TEST_MARKER();

}

}

taskEXIT_CRITICAL();

return pdPASS;

}

else

{

if( xTicksToWait == ( TickType_t ) 0 )

{

/* The queue was empty and no block time is specified (or

the block time has expired) so leave now. */

taskEXIT_CRITICAL();

traceQUEUE_RECEIVE_FAILED( pxQueue );

return errQUEUE_EMPTY;

}

else if( xEntryTimeSet == pdFALSE )

{

/* The queue was empty and a block time was specified so

configure the timeout structure. */

vTaskSetTimeOutState( &xTimeOut );

xEntryTimeSet = pdTRUE;

}

else

{

/* Entry time was already set. */

mtCOVERAGE_TEST_MARKER();

}

}

}

taskEXIT_CRITICAL();

/* Interrupts and other tasks can send to and receive from the queue

now the critical section has been exited. */

vTaskSuspendAll();

prvLockQueue( pxQueue );

/* Update the timeout state to see if it has expired yet. */

if( xTaskCheckForTimeOut( &xTimeOut, &xTicksToWait ) == pdFALSE )

{

if( prvIsQueueEmpty( pxQueue ) != pdFALSE )

{

traceBLOCKING_ON_QUEUE_RECEIVE( pxQueue );

#if ( configUSE_MUTEXES == 1 )

{

if( pxQueue->uxQueueType == queueQUEUE_IS_MUTEX )

{

taskENTER_CRITICAL();

{

vTaskPriorityInherit( ( void * ) pxQueue->pxMutexHolder );

}

taskEXIT_CRITICAL();

}

else

{

mtCOVERAGE_TEST_MARKER();

}

}

#endif

vTaskPlaceOnEventList( &( pxQueue->xTasksWaitingToReceive ), xTicksToWait );

prvUnlockQueue( pxQueue );

if( xTaskResumeAll() == pdFALSE )

{

portYIELD_WITHIN_API();

}

else

{

mtCOVERAGE_TEST_MARKER();

}

}

else

{

/* Try again. */

prvUnlockQueue( pxQueue );

( void ) xTaskResumeAll();

}

}

else

{

prvUnlockQueue( pxQueue );

( void ) xTaskResumeAll();

if( prvIsQueueEmpty( pxQueue ) != pdFALSE )

{

traceQUEUE_RECEIVE_FAILED( pxQueue );

return errQUEUE_EMPTY;

}

else

{

mtCOVERAGE_TEST_MARKER();

}

}

}

}

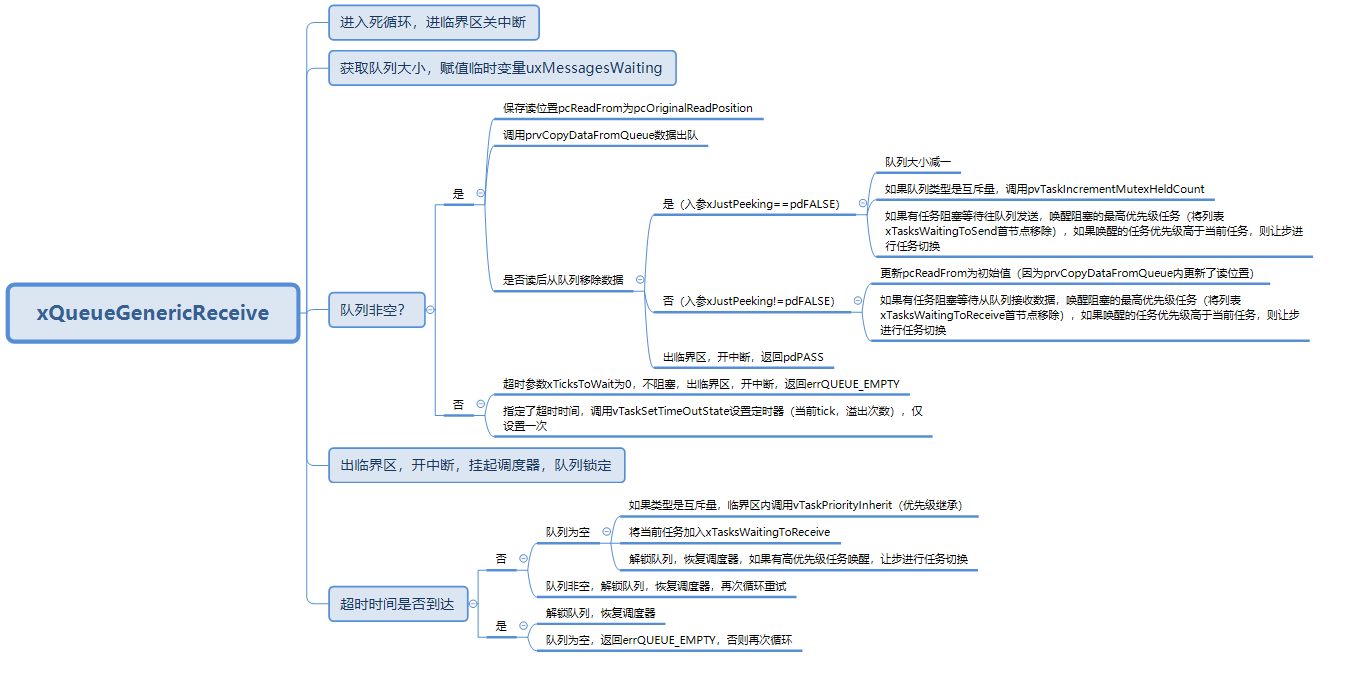

3.4.5.2 xQueueReceive analysis

3.4.5.3 xQueueReceiveFromISR source code

BaseType_t xQueueReceiveFromISR( QueueHandle_t xQueue, void * const pvBuffer, BaseType_t * const pxHigherPriorityTaskWoken )

{

BaseType_t xReturn;

UBaseType_t uxSavedInterruptStatus;

Queue_t * const pxQueue = ( Queue_t * ) xQueue;

configASSERT( pxQueue );

configASSERT( !( ( pvBuffer == NULL ) && ( pxQueue->uxItemSize != ( UBaseType_t ) 0U ) ) );

/* RTOS ports that support interrupt nesting have the concept of a maximum

system call (or maximum API call) interrupt priority. Interrupts that are

above the maximum system call priority are kept permanently enabled, even

when the RTOS kernel is in a critical section, but cannot make any calls to

FreeRTOS API functions. If configASSERT() is defined in FreeRTOSConfig.h

then portASSERT_IF_INTERRUPT_PRIORITY_INVALID() will result in an assertion

failure if a FreeRTOS API function is called from an interrupt that has been

assigned a priority above the configured maximum system call priority.

Only FreeRTOS functions that end in FromISR can be called from interrupts

that have been assigned a priority at or (logically) below the maximum

system call interrupt priority. FreeRTOS maintains a separate interrupt

safe API to ensure interrupt entry is as fast and as simple as possible.

More information (albeit Cortex-M specific) is provided on the following

link: http://www.freertos.org/RTOS-Cortex-M3-M4.html */

portASSERT_IF_INTERRUPT_PRIORITY_INVALID();

uxSavedInterruptStatus = portSET_INTERRUPT_MASK_FROM_ISR();

{

const UBaseType_t uxMessagesWaiting = pxQueue->uxMessagesWaiting;

/* Cannot block in an ISR, so check there is data available. */

if( uxMessagesWaiting > ( UBaseType_t ) 0 )

{

const int8_t cRxLock = pxQueue->cRxLock;

traceQUEUE_RECEIVE_FROM_ISR( pxQueue );

prvCopyDataFromQueue( pxQueue, pvBuffer );

pxQueue->uxMessagesWaiting = uxMessagesWaiting - 1;

/* If the queue is locked the event list will not be modified.

Instead update the lock count so the task that unlocks the queue

will know that an ISR has removed data while the queue was

locked. */

if( cRxLock == queueUNLOCKED )

{

if( listLIST_IS_EMPTY( &( pxQueue->xTasksWaitingToSend ) ) == pdFALSE )

{

if( xTaskRemoveFromEventList( &( pxQueue->xTasksWaitingToSend ) ) != pdFALSE )

{

/* The task waiting has a higher priority than us so

force a context switch. */

if( pxHigherPriorityTaskWoken != NULL )

{

*pxHigherPriorityTaskWoken = pdTRUE;

}

else

{

mtCOVERAGE_TEST_MARKER();

}

}

else

{

mtCOVERAGE_TEST_MARKER();

}

}

else

{

mtCOVERAGE_TEST_MARKER();

}

}

else

{

/* Increment the lock count so the task that unlocks the queue

knows that data was removed while it was locked. */

pxQueue->cRxLock = ( int8_t ) ( cRxLock + 1 );

}

xReturn = pdPASS;

}

else

{

xReturn = pdFAIL;

traceQUEUE_RECEIVE_FROM_ISR_FAILED( pxQueue );

}

}

portCLEAR_INTERRUPT_MASK_FROM_ISR( uxSavedInterruptStatus );

return xReturn;

}

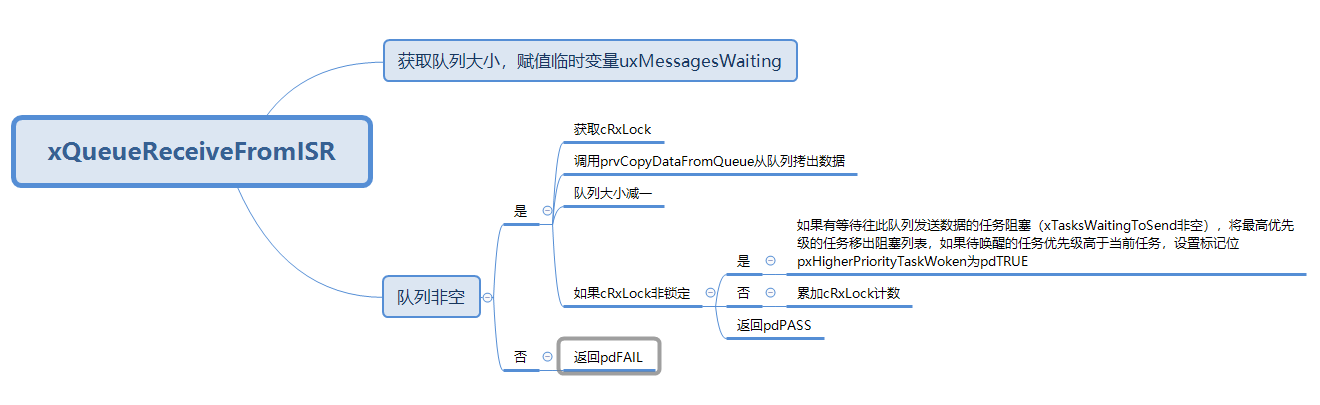

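3.4.5.4 xQueueReceiveFromISR analysis

xQueueReceiveFromISR is the receive interface for use inside interrupt service routines. It differs from xQueueGenericReceive in the following ways:

- It must not block, so it takes no timeout parameter.

- When an item has been copied out of the queue and a task is blocked waiting to send to that queue, the function does not switch tasks itself. If the unblocked task has a higher priority than the interrupted task, it sets *pxHigherPriorityTaskWoken to pdTRUE and leaves the context switch to the caller.

- While the queue is locked (cRxLock is not queueUNLOCKED), it does not touch the xTasksWaitingToSend event list and increments cRxLock instead. The list is processed later, when prvUnlockQueue unlocks the queue.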
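The three differences can be sketched with a small stand-alone model. This is not FreeRTOS code: IsrModelQueue, model_receive_from_isr and the plain integer counters are hypothetical stand-ins for the real queue structure and event lists. Only the branch structure follows the source above.

```c
#include <assert.h>
#include <stddef.h>

#define MODEL_UNLOCKED (-1) /* same value as queueUNLOCKED in queue.c */

/* Hypothetical model: counters stand in for the real lists and storage. */
typedef struct {
    int items;                  /* stands in for uxMessagesWaiting */
    int cRxLock;                /* MODEL_UNLOCKED, or removals counted while locked */
    int sendersWaiting;         /* length of the xTasksWaitingToSend list */
    int senderIsHigherPriority; /* would the woken sender outrank the running task? */
} IsrModelQueue;

/* Returns 1 if an item was taken, 0 if the queue was empty. Never blocks. */
static int model_receive_from_isr(IsrModelQueue *q, int *pxHigherPriorityTaskWoken)
{
    assert(q != NULL);
    if (q->items == 0) {
        return 0; /* an ISR cannot wait, so fail immediately */
    }
    q->items--;
    if (q->cRxLock == MODEL_UNLOCKED) {
        if (q->sendersWaiting > 0) {
            q->sendersWaiting--; /* unblock one waiting sender */
            if (q->senderIsHigherPriority && pxHigherPriorityTaskWoken != NULL) {
                *pxHigherPriorityTaskWoken = 1; /* only record it; no switch here */
            }
        }
    } else {
        q->cRxLock++; /* leave the list alone; the unlock step handles it later */
    }
    return 1;
}
```

With an unlocked queue the call wakes a sender and sets the flag. With a locked queue the same call only increments cRxLock and leaves the waiting list untouched.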

3.4.5.5 prvCopyDataFromQueue source code

static void prvCopyDataFromQueue( Queue_t * const pxQueue, void * const pvBuffer )

{

if( pxQueue->uxItemSize != ( UBaseType_t ) 0 )

{

pxQueue->u.pcReadFrom += pxQueue->uxItemSize;

if( pxQueue->u.pcReadFrom >= pxQueue->pcTail ) /*lint !e946 MISRA exception justified as use of the relational operator is the cleanest solutions. */

{

pxQueue->u.pcReadFrom = pxQueue->pcHead;

}

else

{

mtCOVERAGE_TEST_MARKER();

}

( void ) memcpy( ( void * ) pvBuffer, ( void * ) pxQueue->u.pcReadFrom, ( size_t ) pxQueue->uxItemSize ); /*lint !e961 !e418 MISRA exception as the casts are only redundant for some ports. Also previous logic ensures a null pointer can only be passed to memcpy() when the count is 0. */

}

}

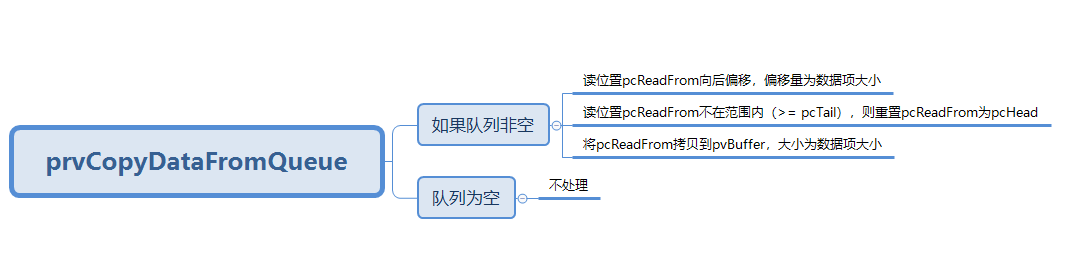

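3.4.5.6 prvCopyDataFromQueue analysis

prvCopyDataFromQueue copies one item out of the queue storage into the caller's buffer. It first advances pcReadFrom by one item, wraps it back to pcHead if it has reached pcTail, and then copies uxItemSize bytes from pcReadFrom. It does not change uxMessagesWaiting; the caller decides whether the item is actually removed.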

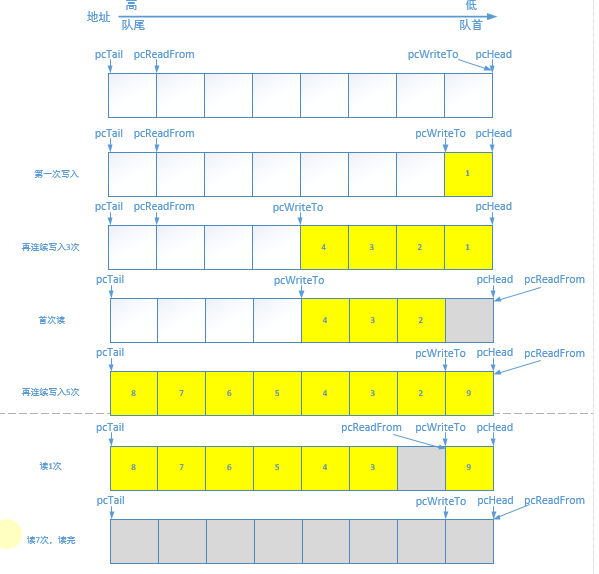

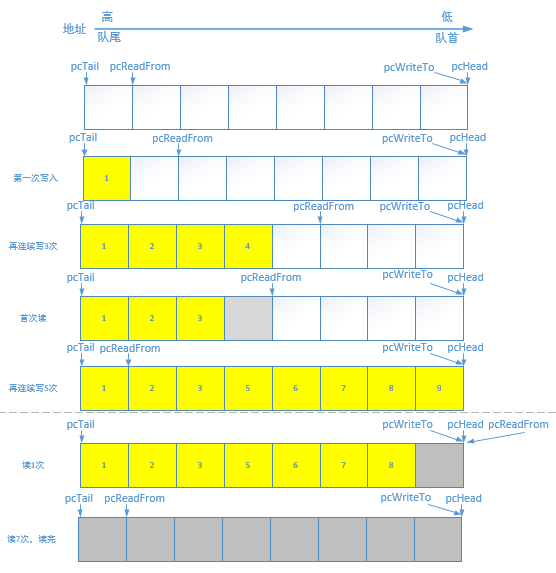

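3.4.6 Enqueue and dequeue illustrated (ordinary queue)

queueSEND_TO_BACK:

- A write copies the item to pcWriteTo first, then advances pcWriteTo.

- When pcWriteTo reaches the end of the storage it wraps back to the head; it never rests on pcTail.

- A read advances pcReadFrom first, then copies from pcReadFrom.

- When pcReadFrom reaches the end of the storage it wraps back to the head; it never rests on pcTail.

queueSEND_TO_FRONT:

queueSEND_TO_FRONT operates on the pcReadFrom pointer, not on pcWriteTo.

- A write copies the item to pcReadFrom first, then moves pcReadFrom back by one item.

- When pcReadFrom moves past the head it wraps to the last item slot of the storage; it never rests on pcTail.

- A read advances pcReadFrom first, then copies from pcReadFrom.

- When pcReadFrom reaches the end of the storage it wraps back to the head; it never rests on pcTail.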
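The pointer rules listed above can be tried out in a minimal stand-alone model. ModelQueue and the model_* functions are hypothetical names, the item size is fixed at one byte, and there is no locking or blocking. Only the pointer arithmetic is meant to mirror the queue source.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical, simplified model of the queue storage pointers. */
typedef struct {
    uint8_t  storage[4];  /* room for 4 one-byte items */
    uint8_t *pcHead;      /* first byte of the storage */
    uint8_t *pcTail;      /* one past the last byte of the storage */
    uint8_t *pcWriteTo;   /* next free slot for send-to-back */
    uint8_t *pcReadFrom;  /* slot of the most recently read item */
    size_t   uxItemSize;
    size_t   uxLength;
    size_t   uxMessagesWaiting;
} ModelQueue;

static void model_init(ModelQueue *q)
{
    q->uxItemSize = 1;
    q->uxLength = sizeof q->storage;
    q->pcHead = q->storage;
    q->pcTail = q->pcHead + q->uxLength * q->uxItemSize;
    q->pcWriteTo = q->pcHead;
    /* Start on the last slot so that the first read wraps to pcHead. */
    q->pcReadFrom = q->pcHead + (q->uxLength - 1) * q->uxItemSize;
    q->uxMessagesWaiting = 0;
}

static int model_send_to_back(ModelQueue *q, const void *item)
{
    if (q->uxMessagesWaiting == q->uxLength) return 0;
    memcpy(q->pcWriteTo, item, q->uxItemSize);   /* write first ... */
    q->pcWriteTo += q->uxItemSize;               /* ... then advance */
    if (q->pcWriteTo >= q->pcTail) q->pcWriteTo = q->pcHead;
    q->uxMessagesWaiting++;
    return 1;
}

static int model_send_to_front(ModelQueue *q, const void *item)
{
    if (q->uxMessagesWaiting == q->uxLength) return 0;
    memcpy(q->pcReadFrom, item, q->uxItemSize);  /* write first ... */
    /* ... then step back. The wrap is tested before stepping so that the
       model never forms a pointer below the start of the array. */
    if (q->pcReadFrom == q->pcHead) q->pcReadFrom = q->pcTail - q->uxItemSize;
    else q->pcReadFrom -= q->uxItemSize;
    q->uxMessagesWaiting++;
    return 1;
}

static int model_receive(ModelQueue *q, void *out)
{
    if (q->uxMessagesWaiting == 0) return 0;
    q->pcReadFrom += q->uxItemSize;              /* advance first ... */
    if (q->pcReadFrom >= q->pcTail) q->pcReadFrom = q->pcHead;
    memcpy(out, q->pcReadFrom, q->uxItemSize);   /* ... then copy out */
    q->uxMessagesWaiting--;
    return 1;
}

/* Sends 'A' and 'B' to the back, 'Z' to the front, then drains the queue. */
static void model_demo(char out[4])
{
    ModelQueue q;
    model_init(&q);
    model_send_to_back(&q, "A");
    model_send_to_back(&q, "B");
    model_send_to_front(&q, "Z");
    for (int i = 0; i < 3; i++) model_receive(&q, &out[i]);
    out[3] = '\0';
}
```

In model_demo the item sent to the front is read before the two items sent to the back, so the buffer ends up holding "ZAB".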

3.4.7 Locking and unlocking a queue

3.4.7.1 prvLockQueue source code

/*

* Macro to mark a queue as locked. Locking a queue prevents an ISR from

* accessing the queue event lists.

*/

#define prvLockQueue( pxQueue ) \

taskENTER_CRITICAL(); \

{ \

if( ( pxQueue )->cRxLock == queueUNLOCKED ) \

{ \

( pxQueue )->cRxLock = queueLOCKED_UNMODIFIED; \

} \

if( ( pxQueue )->cTxLock == queueUNLOCKED ) \

{ \

( pxQueue )->cTxLock = queueLOCKED_UNMODIFIED; \

} \

} \

taskEXIT_CRITICAL()

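3.4.7.2 prvLockQueue analysis

prvLockQueue is implemented as a macro. Its body is a critical section: interrupts are masked before the lock fields are changed and unmasked afterwards. Locking only sets a lock field to queueLOCKED_UNMODIFIED, and only if that field is currently queueUNLOCKED. There are two lock fields, cTxLock and cRxLock, one for the send direction and one for the receive direction.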

3.4.7.3 prvUnlockQueue source code

static void prvUnlockQueue( Queue_t * const pxQueue )

{

/* THIS FUNCTION MUST BE CALLED WITH THE SCHEDULER SUSPENDED. */

/* The lock counts contains the number of extra data items placed or

removed from the queue while the queue was locked. When a queue is

locked items can be added or removed, but the event lists cannot be

updated. */

taskENTER_CRITICAL();

{

int8_t cTxLock = pxQueue->cTxLock;

/* See if data was added to the queue while it was locked. */

while( cTxLock > queueLOCKED_UNMODIFIED )

{

/* Data was posted while the queue was locked. Are any tasks

blocked waiting for data to become available? */

#if ( configUSE_QUEUE_SETS == 1 )

{

if( pxQueue->pxQueueSetContainer != NULL )

{

if( prvNotifyQueueSetContainer( pxQueue, queueSEND_TO_BACK ) != pdFALSE )

{

/* The queue is a member of a queue set, and posting to

the queue set caused a higher priority task to unblock.

A context switch is required. */

vTaskMissedYield();

}

else

{

mtCOVERAGE_TEST_MARKER();

}

}

else

{

/* Tasks that are removed from the event list will get

added to the pending ready list as the scheduler is still

suspended. */

if( listLIST_IS_EMPTY( &( pxQueue->xTasksWaitingToReceive ) ) == pdFALSE )

{

if( xTaskRemoveFromEventList( &( pxQueue->xTasksWaitingToReceive ) ) != pdFALSE )

{

/* The task waiting has a higher priority so record that a

context switch is required. */

vTaskMissedYield();

}

else

{

mtCOVERAGE_TEST_MARKER();

}

}

else

{

break;

}

}

}

#else /* configUSE_QUEUE_SETS */

{

/* Tasks that are removed from the event list will get added to

the pending ready list as the scheduler is still suspended. */

if( listLIST_IS_EMPTY( &( pxQueue->xTasksWaitingToReceive ) ) == pdFALSE )

{

if( xTaskRemoveFromEventList( &( pxQueue->xTasksWaitingToReceive ) ) != pdFALSE )

{

/* The task waiting has a higher priority so record that

a context switch is required. */

vTaskMissedYield();

}

else

{

mtCOVERAGE_TEST_MARKER();

}

}

else

{

break;

}

}

#endif /* configUSE_QUEUE_SETS */

--cTxLock;

}

pxQueue->cTxLock = queueUNLOCKED;

}

taskEXIT_CRITICAL();

/* Do the same for the Rx lock. */

taskENTER_CRITICAL();

{

int8_t cRxLock = pxQueue->cRxLock;

while( cRxLock > queueLOCKED_UNMODIFIED )

{

if( listLIST_IS_EMPTY( &( pxQueue->xTasksWaitingToSend ) ) == pdFALSE )

{

if( xTaskRemoveFromEventList( &( pxQueue->xTasksWaitingToSend ) ) != pdFALSE )

{

vTaskMissedYield();

}

else

{

mtCOVERAGE_TEST_MARKER();

}

--cRxLock;

}

else

{

break;

}

}

pxQueue->cRxLock = queueUNLOCKED;

}

taskEXIT_CRITICAL();

}

3.4.7.4 prvUnlockQueue analysis
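As a rough illustration of the source above: while a queue is locked, ISRs only count their sends and receives in cTxLock and cRxLock. prvUnlockQueue then wakes at most one waiting task per counted item and stops early once the relevant event list is empty. The sketch below models just that bookkeeping. LockModelQueue, model_lock and model_unlock are hypothetical names, the event lists are reduced to counters, and the queue-set branch and vTaskMissedYield() are left out.

```c
#include <assert.h>

#define MODEL_UNLOCKED          (-1) /* same value as queueUNLOCKED */
#define MODEL_LOCKED_UNMODIFIED (0)  /* same value as queueLOCKED_UNMODIFIED */

/* Hypothetical model: the event lists are reduced to simple counters. */
typedef struct {
    int cTxLock;          /* items sent by ISRs while locked */
    int cRxLock;          /* items received by ISRs while locked */
    int receiversWaiting; /* length of xTasksWaitingToReceive */
    int sendersWaiting;   /* length of xTasksWaitingToSend */
} LockModelQueue;

static void model_lock(LockModelQueue *q)
{
    if (q->cRxLock == MODEL_UNLOCKED) q->cRxLock = MODEL_LOCKED_UNMODIFIED;
    if (q->cTxLock == MODEL_UNLOCKED) q->cTxLock = MODEL_LOCKED_UNMODIFIED;
}

/* Returns how many tasks were taken off the two event lists. */
static int model_unlock(LockModelQueue *q)
{
    int woken = 0;

    /* One receiver may be woken for every item an ISR sent while locked. */
    int cTxLock = q->cTxLock;
    while (cTxLock > MODEL_LOCKED_UNMODIFIED) {
        if (q->receiversWaiting == 0) break; /* nobody left to wake */
        q->receiversWaiting--;
        woken++;
        --cTxLock;
    }
    q->cTxLock = MODEL_UNLOCKED;

    /* One sender may be woken for every item an ISR received while locked. */
    int cRxLock = q->cRxLock;
    while (cRxLock > MODEL_LOCKED_UNMODIFIED) {
        if (q->sendersWaiting == 0) break;
        q->sendersWaiting--;
        woken++;
        --cRxLock;
    }
    q->cRxLock = MODEL_UNLOCKED;

    return woken;
}
```

For example, if two sends and two receives were counted while the queue was locked, with one receiver and three senders waiting, model_unlock wakes the single receiver and two of the senders, then resets both lock fields to MODEL_UNLOCKED.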

3.4.8 Checking whether a queue is empty

static BaseType_t prvIsQueueEmpty( const Queue_t *pxQueue )

{

BaseType_t xReturn;

taskENTER_CRITICAL();

{

if( pxQueue->uxMessagesWaiting == ( UBaseType_t ) 0 )

{

xReturn = pdTRUE;

}

else

{

xReturn = pdFALSE;

}

}

taskEXIT_CRITICAL();

return xReturn;

}

/*-----------------------------------------------------------*/

BaseType_t xQueueIsQueueEmptyFromISR( const QueueHandle_t xQueue )

{

BaseType_t xReturn;

configASSERT( xQueue );

if( ( ( Queue_t * ) xQueue )->uxMessagesWaiting == ( UBaseType_t ) 0 )

{

xReturn = pdTRUE;

}

else

{

xReturn = pdFALSE;

}

return xReturn;

} /*lint !e818 xQueue could not be pointer to const because it is a typedef. */

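Both functions check whether uxMessagesWaiting is 0. uxMessagesWaiting is the number of items currently held in the queue. prvIsQueueEmpty enters a critical section (interrupts masked) before the check and leaves it afterwards, so that neither an interrupt nor a higher-priority task can preempt the check.

xQueueIsQueueEmptyFromISR is called from interrupt service routines and does not need critical-section protection.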

3.4.9 Checking whether a queue is full

static BaseType_t prvIsQueueFull( const Queue_t *pxQueue )

{

BaseType_t xReturn;

taskENTER_CRITICAL();

{

if( pxQueue->uxMessagesWaiting == pxQueue->uxLength )

{

xReturn = pdTRUE;

}

else

{

xReturn = pdFALSE;

}

}

taskEXIT_CRITICAL();

return xReturn;

}

/*-----------------------------------------------------------*/

BaseType_t xQueueIsQueueFullFromISR( const QueueHandle_t xQueue )

{

BaseType_t xReturn;

configASSERT( xQueue );

if( ( ( Queue_t * ) xQueue )->uxMessagesWaiting == ( ( Queue_t * ) xQueue )->uxLength )

{

xReturn = pdTRUE;

}

else

{

xReturn = pdFALSE;

}

return xReturn;

} /*lint !e818 xQueue could not be pointer to const because it is a typedef. */

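Both functions check whether uxMessagesWaiting equals uxLength. uxMessagesWaiting is the number of items currently held in the queue, and uxLength is the queue's capacity.

prvIsQueueFull enters a critical section (interrupts masked) before the check and leaves it afterwards, so that neither an interrupt nor a higher-priority task can preempt the check.

xQueueIsQueueFullFromISR is called from interrupt service routines and does not need critical-section protection.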

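3.5 Why the FromISR functions do not switch tasks themselves

Why do the "FromISR" functions only record a flag instead of switching tasks internally? The main reason is efficiency: an ISR may call "FromISR" functions several times, and switching tasks inside each call would waste time. The solution is:

- Record inside the "FromISR" function whether a switch is needed.

- Perform the task switch once, just before the ISR returns.

Not switching tasks inside the API, and only recording the need in "pxHigherPriorityTaskWoken", has several benefits:

- Efficiency: unnecessary task switches are avoided.

- A more controllable ISR: interrupts arrive at arbitrary times, and switching tasks inside the API could make problems harder to reason about.

- Portability.

- The tick interrupt calls vApplicationTickHook(), which runs in ISR context and may only use "FromISR" functions.

If you do not need the pxHigherPriorityTaskWoken result when calling a "FromISR" function, you can pass NULL.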
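The "record now, switch once at the end" pattern can be sketched as a stand-alone model. Nothing here is FreeRTOS code: model_give_from_isr stands in for any "FromISR" call, model_yield_from_isr stands in for the port-specific yield macro (portYIELD_FROM_ISR on many ports), and g_yield_requests is a hypothetical counter used only to make the effect visible.

```c
#include <assert.h>
#include <stddef.h>

static int g_yield_requests; /* how many context switches were requested */

/* Hypothetical stand-in for a "FromISR" call: it reports a wake-up only
   through the flag and never switches tasks itself. */
static void model_give_from_isr(int wakesHigherPriorityTask,
                                int *pxHigherPriorityTaskWoken)
{
    if (wakesHigherPriorityTask && pxHigherPriorityTaskWoken != NULL) {
        *pxHigherPriorityTaskWoken = 1;
    }
}

/* Hypothetical stand-in for the port's yield-from-ISR macro. */
static void model_yield_from_isr(int xHigherPriorityTaskWoken)
{
    if (xHigherPriorityTaskWoken) {
        g_yield_requests++;
    }
}

/* Model ISR: makes `calls` FromISR-style calls, then yields at most once.
   Returns the number of context switches requested. */
static int model_isr(int calls)
{
    int xHigherPriorityTaskWoken = 0; /* must start cleared */
    g_yield_requests = 0;

    for (int i = 0; i < calls; i++) {
        model_give_from_isr(1, &xHigherPriorityTaskWoken);
    }

    /* A single decision just before the ISR returns. */
    model_yield_from_isr(xHigherPriorityTaskWoken);
    return g_yield_requests;
}
```

However many calls the model ISR makes, at most one switch is requested, and none is requested when no call sets the flag.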

References

百问网 (100ask), "FreeRTOS入门与工程实践-基于STM32F103", Chapter 17: Interrupt Management