Preface

This post shows how to implement a custom post-process, Dual Blur, by adding a RenderFeature.

For the basics of RenderFeature, see this post: https://www.cnblogs.com/chenglixue/p/17816447.html

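Introduction to Dual Blur

毛神's write-up on the ten major blur algorithms already covers them in great detail, so this post does not go deep into the theory and only outlines the idea.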

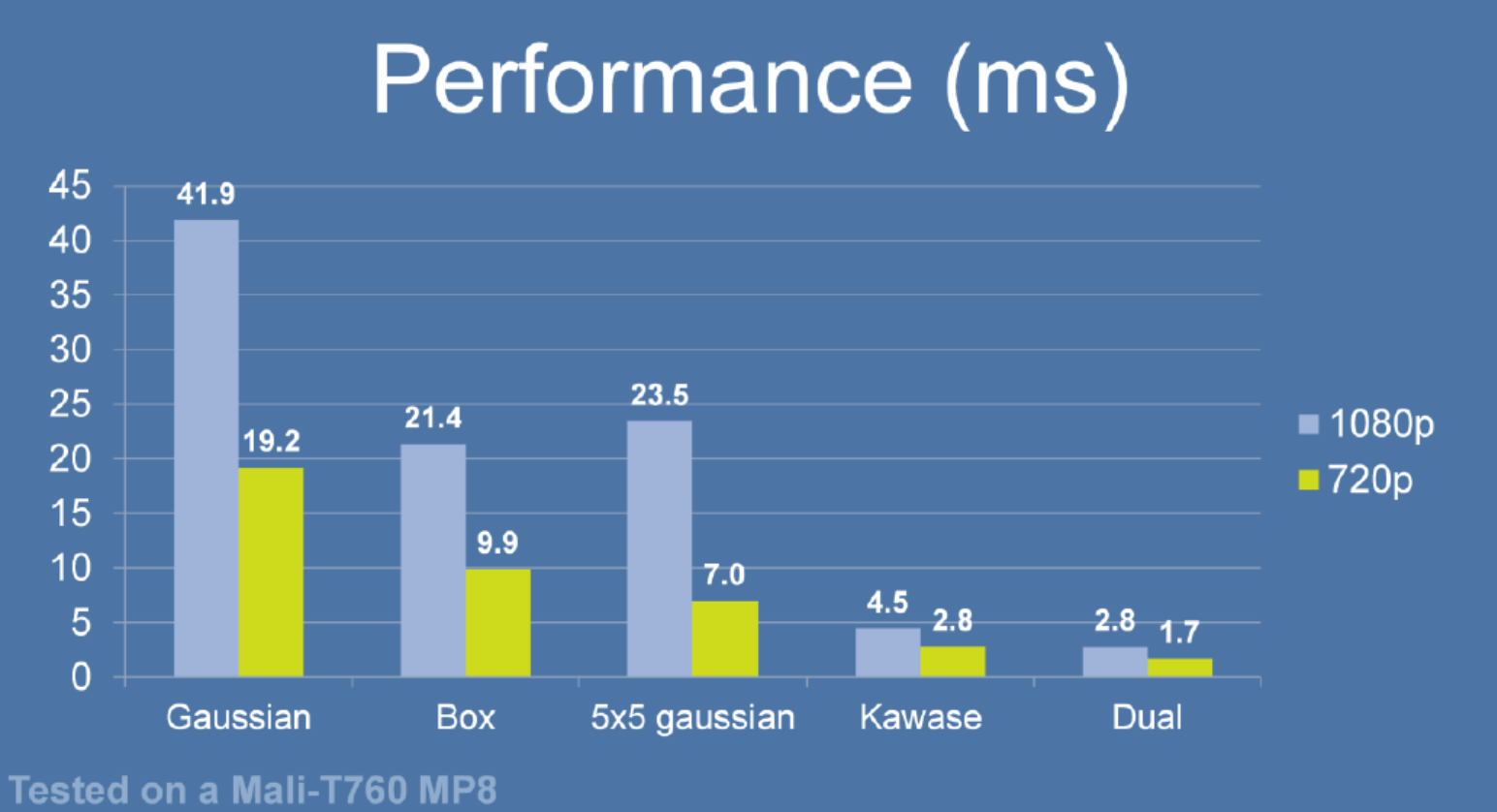

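Idea: Dual Blur is a blur algorithm derived from Kawase Blur that uses two different blur kernels, one for down-sampling and one for up-sampling. Unlike Kawase Blur, whose blits keep the RT at the same size, Dual Blur also down-samples and up-samples the RT as it blits.

Because the intermediate RTs shrink at every down-sample step, each pass touches far fewer pixels and needs less memory and bandwidth than a full-resolution blur, which is why Dual Blur is among the best-performing ways to get a wide blur.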
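As a rough worked estimate of that saving (a sketch that assumes each level halves both dimensions and ignores integer rounding): if level 0 has $N$ pixels, level $k$ has about $N / 4^k$ pixels, so the total number of pixels written across $n$ down-sample levels is bounded by a geometric series.

$$
\sum_{k=0}^{n-1} \frac{N}{4^{k}} \;=\; \frac{4N}{3}\left(1 - 4^{-n}\right) \;<\; \frac{4N}{3}
$$

So no matter how many levels are used, the whole down-sample chain costs less than about 1.33 full-size passes, and the up-sample chain is bounded the same way.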

Shader

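Approach

- Down-sample: as in Kawase Blur, sample 5 pixels, but the center (target) pixel has weight 1/2 and each of the 4 corner pixels has weight 1/8

- Up-sample: sample 8 pixels; the 4 near ones have weight 1/6 each and the 4 far ones have weight 1/12 each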
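A quick check on the weights listed above: both kernels sum to 1, so neither pass brightens or darkens the image.

$$
\text{down-sample: } \frac{1}{2} + 4 \cdot \frac{1}{8} = 1
\qquad\qquad
\text{up-sample: } 4 \cdot \frac{1}{6} + 4 \cdot \frac{1}{12} = \frac{2}{3} + \frac{1}{3} = 1
$$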

Implementation

The UV offsets are computed in the VS so the PS has less work to do.

    Shader "Custom/PP_DualBlur"
    {
        Properties
        {
            _MainTex("Main Tex", 2D) = "white" {}
            _BlurIntensity("Blur Intensity", Range(0, 10)) = 1
        }

        SubShader
        {
            Tags { "RenderPipeline" = "UniversalPipeline" }
            Cull Off
            ZWrite Off
            ZTest Always

            HLSLINCLUDE
            #include "Packages/com.unity.render-pipelines.universal/ShaderLibrary/Core.hlsl"

            CBUFFER_START(UnityPerMaterial)
            half _BlurIntensity;
            float4 _MainTex_TexelSize;
            CBUFFER_END

            TEXTURE2D(_MainTex);
            SAMPLER(sampler_MainTex);

            struct VSInput
            {
                float4 positionL : POSITION;
                float2 uv : TEXCOORD0;
            };

            struct PSInput
            {
                float4 positionH : SV_POSITION;
                float2 uv : TEXCOORD0;
                float4 uv01 : TEXCOORD1;
                float4 uv23 : TEXCOORD2;
                float4 uv45 : TEXCOORD3;
                float4 uv67 : TEXCOORD4;
            };
            ENDHLSL

            Pass
            {
                Name "Down Sample"

                HLSLPROGRAM
                #pragma vertex VS
                #pragma fragment PS

                PSInput VS(VSInput vsInput)
                {
                    // Zero-initialize: uv45 and uv67 are unused in this pass
                    PSInput vsOutput = (PSInput)0;
                    vsOutput.positionH = TransformObjectToHClip(vsInput.positionL.xyz);

                    // On D3D-style platforms with anti-aliasing enabled, _MainTex_TexelSize.y
                    // becomes negative; flip v (1 - v) or the image ends up upside down
                    #ifdef UNITY_UV_STARTS_AT_TOP
                    if (_MainTex_TexelSize.y < 0)
                        vsInput.uv.y = 1 - vsInput.uv.y;
                    #endif

                    float2 offset = _MainTex_TexelSize.xy * _BlurIntensity;
                    vsOutput.uv = vsInput.uv;
                    // four corner taps
                    vsOutput.uv01.xy = vsInput.uv + float2(1.f, 1.f) * offset;
                    vsOutput.uv01.zw = vsInput.uv + float2(-1.f, -1.f) * offset;
                    vsOutput.uv23.xy = vsInput.uv + float2(1.f, -1.f) * offset;
                    vsOutput.uv23.zw = vsInput.uv + float2(-1.f, 1.f) * offset;
                    return vsOutput;
                }

                float4 PS(PSInput psInput) : SV_TARGET
                {
                    // center weight 1/2, each corner weight 1/8
                    float4 outputColor = 0.f;
                    outputColor += SAMPLE_TEXTURE2D(_MainTex, sampler_MainTex, psInput.uv.xy) * 0.5;
                    outputColor += SAMPLE_TEXTURE2D(_MainTex, sampler_MainTex, psInput.uv01.xy) * 0.125;
                    outputColor += SAMPLE_TEXTURE2D(_MainTex, sampler_MainTex, psInput.uv01.zw) * 0.125;
                    outputColor += SAMPLE_TEXTURE2D(_MainTex, sampler_MainTex, psInput.uv23.xy) * 0.125;
                    outputColor += SAMPLE_TEXTURE2D(_MainTex, sampler_MainTex, psInput.uv23.zw) * 0.125;
                    return outputColor;
                }
                ENDHLSL
            }

            Pass
            {
                Name "Up Sample"

                HLSLPROGRAM
                #pragma vertex VS
                #pragma fragment PS

                PSInput VS(VSInput vsInput)
                {
                    PSInput vsOutput = (PSInput)0;
                    vsOutput.positionH = TransformObjectToHClip(vsInput.positionL.xyz);

                    #ifdef UNITY_UV_STARTS_AT_TOP
                    if (_MainTex_TexelSize.y < 0.f)
                        vsInput.uv.y = 1 - vsInput.uv.y;
                    #endif

                    float2 offset = _MainTex_TexelSize.xy * _BlurIntensity;
                    vsOutput.uv = vsInput.uv;
                    // far taps along the axes, weight 1/12 each
                    vsOutput.uv01.xy = vsInput.uv + float2(0, 1) * offset;
                    vsOutput.uv01.zw = vsInput.uv + float2(0, -1) * offset;
                    vsOutput.uv23.xy = vsInput.uv + float2(1, 0) * offset;
                    vsOutput.uv23.zw = vsInput.uv + float2(-1, 0) * offset;
                    // near diagonal taps at half the offset, weight 1/6 each
                    vsOutput.uv45.xy = vsInput.uv + float2(1, 1) * 0.5 * offset;
                    vsOutput.uv45.zw = vsInput.uv + float2(-1, -1) * 0.5 * offset;
                    vsOutput.uv67.xy = vsInput.uv + float2(1, -1) * 0.5 * offset;
                    vsOutput.uv67.zw = vsInput.uv + float2(-1, 1) * 0.5 * offset;
                    return vsOutput;
                }

                float4 PS(PSInput psInput) : SV_TARGET
                {
                    float4 outputColor = 0.f;
                    outputColor += SAMPLE_TEXTURE2D(_MainTex, sampler_MainTex, psInput.uv01.xy) * (1.0 / 12.0);
                    outputColor += SAMPLE_TEXTURE2D(_MainTex, sampler_MainTex, psInput.uv01.zw) * (1.0 / 12.0);
                    outputColor += SAMPLE_TEXTURE2D(_MainTex, sampler_MainTex, psInput.uv23.xy) * (1.0 / 12.0);
                    outputColor += SAMPLE_TEXTURE2D(_MainTex, sampler_MainTex, psInput.uv23.zw) * (1.0 / 12.0);
                    outputColor += SAMPLE_TEXTURE2D(_MainTex, sampler_MainTex, psInput.uv45.xy) * (1.0 / 6.0);
                    outputColor += SAMPLE_TEXTURE2D(_MainTex, sampler_MainTex, psInput.uv45.zw) * (1.0 / 6.0);
                    outputColor += SAMPLE_TEXTURE2D(_MainTex, sampler_MainTex, psInput.uv67.xy) * (1.0 / 6.0);
                    outputColor += SAMPLE_TEXTURE2D(_MainTex, sampler_MainTex, psInput.uv67.zw) * (1.0 / 6.0);
                    return outputColor;
                }
                ENDHLSL
            }
        }
    }

RenderFeature

Implementation

    using UnityEngine;
    using UnityEngine.Rendering.Universal;

    public class DualBlurRenderFeature : ScriptableRendererFeature
    {
        // Settings shown in the render feature inspector
        [System.Serializable]
        public class PassSetting
        {
            // profiler tag will show up in frame debugger
            public readonly string m_ProfilerTag = "Dual Blur Pass";

            // Where the pass is injected in the frame
            public RenderPassEvent m_passEvent = RenderPassEvent.AfterRenderingTransparents;

            // Resolution divisor for the first blur level
            [Range(1, 5)]
            public int m_Downsample = 1;

            // Number of down-sample levels
            [Range(1, 5)]
            public int m_PassLoop = 2;

            // Blur strength
            [Range(0, 10)]
            public float m_BlurIntensity = 5;
        }

        public PassSetting m_Setting = new PassSetting();
        DualBlurRenderPass m_DualBlurPass;

        // Initialization
        public override void Create()
        {
            m_DualBlurPass = new DualBlurRenderPass(m_Setting);
        }

        // Here you can inject one or multiple render passes in the renderer.
        // This method is called when setting up the renderer once per-camera.
        public override void AddRenderPasses(ScriptableRenderer renderer, ref RenderingData renderingData)
        {
            // can queue up multiple passes after each other
            renderer.EnqueuePass(m_DualBlurPass);
        }
    }

These are all familiar pieces by now, so they should need no introduction.

RenderPass

Implementation

As before, the RenderPass is where the real work happens.

    using System;
    using UnityEngine;
    using UnityEngine.Rendering;
    using UnityEngine.Rendering.Universal;

    class DualBlurRenderPass : ScriptableRenderPass
    {
        // Stores the pass settings
        private DualBlurRenderFeature.PassSetting m_passSetting;

        private RenderTargetIdentifier m_TargetBuffer;

        private Material m_Material;

        static class ShaderIDs
        {
            // An int ID performs better than a string name because the lookup is done once up front
            internal static readonly int m_BlurIntensityProperty = Shader.PropertyToID("_BlurIntensity");
        }

        // Shader IDs of the down-sample and up-sample RT for one level
        struct BlurLevelShaderIDs
        {
            internal int downLevelID;
            internal int upLevelID;
        }

        static int maxBlurLevel = 16;
        private BlurLevelShaderIDs[] blurLevel;

        // Creates the material and sets its properties
        public DualBlurRenderPass(DualBlurRenderFeature.PassSetting passSetting)
        {
            this.m_passSetting = passSetting;

            renderPassEvent = m_passSetting.m_passEvent;

            if (m_Material == null) m_Material = CoreUtils.CreateEngineMaterial("Custom/PP_DualBlur");

            // Set the material properties from the pass settings
            m_Material.SetFloat(ShaderIDs.m_BlurIntensityProperty, m_passSetting.m_BlurIntensity);
        }

        // Gets called by the renderer before executing the pass.
        // Can be used to configure render targets and their clearing state.
        // Can be used to create temporary render target textures.
        // If this method is not overridden, the render pass will render to the active camera render target.
        public override void OnCameraSetup(CommandBuffer cmd, ref RenderingData renderingData)
        {
            // Grab the color buffer from the renderer camera color target
            m_TargetBuffer = renderingData.cameraData.renderer.cameraColorTarget;

            // The IDs never change, so build the table only once:
            // 16 down level IDs and 16 up level IDs
            if (blurLevel == null)
            {
                blurLevel = new BlurLevelShaderIDs[maxBlurLevel];
                for (int t = 0; t < maxBlurLevel; ++t)
                {
                    blurLevel[t] = new BlurLevelShaderIDs
                    {
                        downLevelID = Shader.PropertyToID("_BlurMipDown" + t),
                        upLevelID = Shader.PropertyToID("_BlurMipUp" + t)
                    };
                }
            }
        }

        // The actual execution of the pass. This is where custom rendering occurs
        public override void Execute(ScriptableRenderContext context, ref RenderingData renderingData)
        {
            // Grab a command buffer. We put the actual execution of the pass inside of a profiling scope
            CommandBuffer cmd = CommandBufferPool.Get();

            // camera target descriptor will be used when creating a temporary render texture
            RenderTextureDescriptor descriptor = renderingData.cameraData.cameraTargetDescriptor;
            // Initial down-sample of the first level
            descriptor.width /= m_passSetting.m_Downsample;
            descriptor.height /= m_passSetting.m_Downsample;
            // The temporary render textures need no depth buffer
            descriptor.depthBufferBits = 0;

            using (new ProfilingScope(cmd, new ProfilingSampler(m_passSetting.m_ProfilerTag)))
            {
                // The camera color target is the input of the first down-sample
                RenderTargetIdentifier lastDown = m_TargetBuffer;

                // Down-sample: same approach as before, render into temporary RTs
                for (int i = 0; i < m_passSetting.m_PassLoop; ++i)
                {
                    // Create the down and up temporary RTs for this level
                    int midDown = blurLevel[i].downLevelID;
                    int midUp = blurLevel[i].upLevelID;
                    cmd.GetTemporaryRT(midDown, descriptor, FilterMode.Bilinear);
                    cmd.GetTemporaryRT(midUp, descriptor, FilterMode.Bilinear);
                    // down sample
                    cmd.Blit(lastDown, midDown, m_Material, 0);
                    // The result becomes the input of the next iteration
                    lastDown = midDown;

                    // Halve the resolution for the next level
                    descriptor.width = Mathf.Max(descriptor.width / 2, 1);
                    descriptor.height = Mathf.Max(descriptor.height / 2, 1);
                }

                // Up-sample: start from the last (smallest) down-sample RT
                int lastUp = blurLevel[m_passSetting.m_PassLoop - 1].downLevelID;
                // The smallest level is already in lastUp, so start one level above it.
                // Note: the loop stops at i > 0, so the level 0 up RT is never written;
                // the final blit below up-samples straight into the camera target.
                for (int i = m_passSetting.m_PassLoop - 2; i > 0; --i)
                {
                    int midUp = blurLevel[i].upLevelID;
                    cmd.Blit(lastUp, midUp, m_Material, 1);
                    lastUp = midUp;
                }

                // Write the final up-sample result to the output RT
                cmd.Blit(lastUp, m_TargetBuffer, m_Material, 1);
            }

            // Execute the command buffer and release it
            context.ExecuteCommandBuffer(cmd);
            CommandBufferPool.Release(cmd);
        }

        // Called when the camera has finished rendering
        // release/cleanup any allocated resources that were created by this pass
        public override void OnCameraCleanup(CommandBuffer cmd)
        {
            if (cmd == null) throw new ArgumentNullException(nameof(cmd));

            // The temporary render textures were created in Execute, so release them here to avoid a leak
            for (int i = 0; i < m_passSetting.m_PassLoop; ++i)
            {
                cmd.ReleaseTemporaryRT(blurLevel[i].downLevelID);
                cmd.ReleaseTemporaryRT(blurLevel[i].upLevelID);
            }
        }
    }

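Unlike the earlier implementations, this one adds a struct, BlurLevelShaderIDs, to store the down-sample and up-sample IDs. Why not keep them in the ShaderIDs class used before? Because each down-sample step lowers the RT resolution, and the resolution of a temporary RT cannot change once GetTemporaryRT() has allocated it, so every level needs its own RT and therefore its own ID.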
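To make the per-level sizes concrete, here is a minimal sketch that repeats the descriptor arithmetic from Execute() outside Unity. BlurPyramidSketch and LevelSizes are hypothetical names introduced only for this illustration, and Math.Max stands in for Mathf.Max so the snippet runs as plain C#.

```csharp
using System;
using System.Collections.Generic;

static class BlurPyramidSketch
{
    // Hypothetical helper (not part of the original pass): returns the size of
    // each down-sample level, using the same integer arithmetic as Execute().
    public static List<(int Width, int Height)> LevelSizes(
        int cameraWidth, int cameraHeight, int downsample, int passLoop)
    {
        var sizes = new List<(int Width, int Height)>();

        // Initial division by m_Downsample
        int width = cameraWidth / downsample;
        int height = cameraHeight / downsample;

        for (int i = 0; i < passLoop; ++i)
        {
            // Level i is allocated at the current size...
            sizes.Add((width, height));
            // ...and the next level is half as large, clamped to 1 pixel
            width = Math.Max(width / 2, 1);
            height = Math.Max(height / 2, 1);
        }
        return sizes;
    }

    static void Main()
    {
        // Prints one line per level: 1920 x 1080, 960 x 540, 480 x 270
        foreach (var (w, h) in LevelSizes(1920, 1080, 1, 3))
            Console.WriteLine($"{w} x {h}");
    }
}
```

Each entry needs its own temporary RT, which is why the pass keeps one pair of IDs per level instead of a single fixed ID.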

效果

-

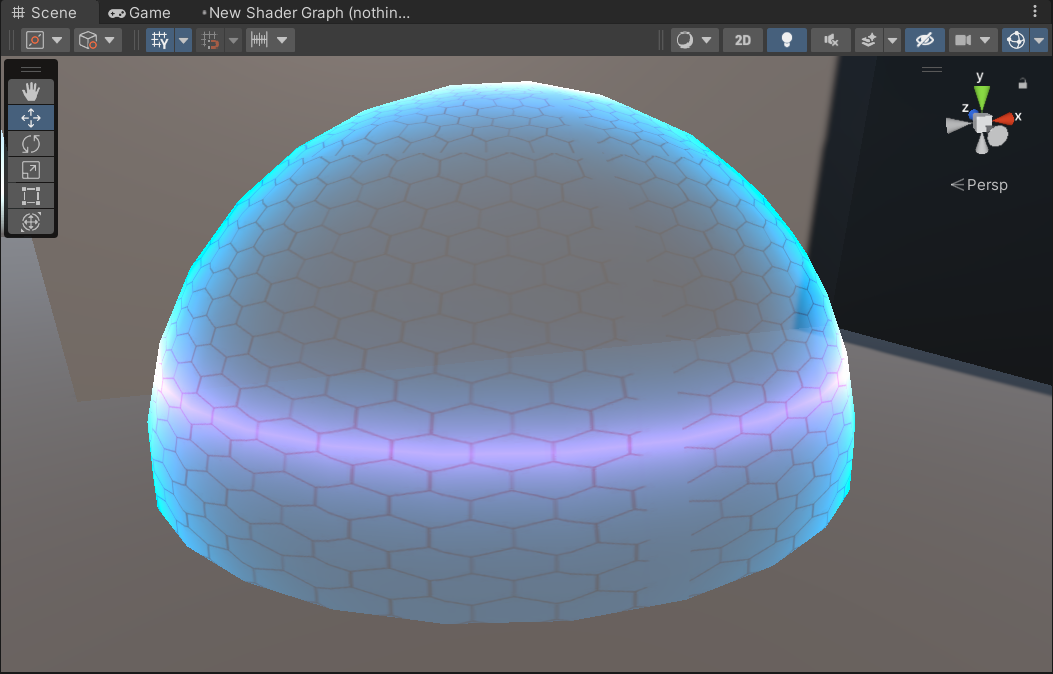

实现前

-

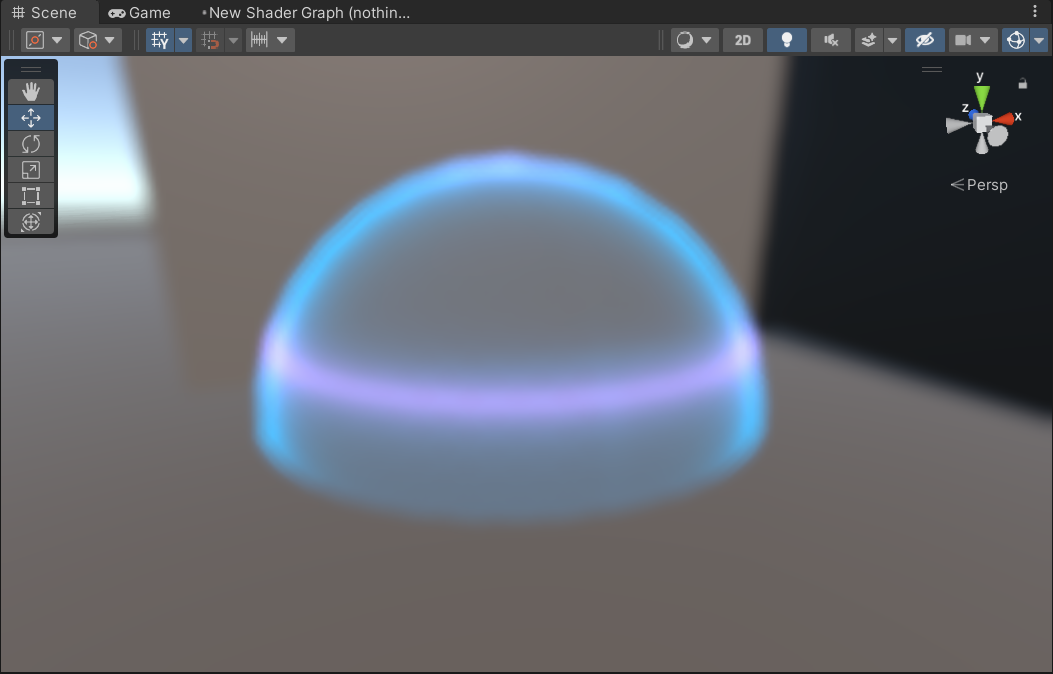

实现后

References

https://zhuanlan.zhihu.com/p/125744132

https://docs.unity3d.com/cn/2021.3/Manual/SL-PlatformDifferences.html

https://www.bilibili.com/read/cv6597443/?spm_id_from=333.999.0.0