① Auto-throttle algorithm

    """
    17. Auto-throttle algorithm
        from scrapy.extensions.throttle import AutoThrottle  # scrapy.contrib.throttle in old Scrapy releases
        Auto-throttle setup
        1. Read the minimum delay: DOWNLOAD_DELAY
        2. Read the maximum delay: AUTOTHROTTLE_MAX_DELAY
        3. Set the initial download delay: AUTOTHROTTLE_START_DELAY
        4. When a response has been downloaded, read its latency, i.e. the time
           between opening the connection and receiving the response headers
        5. Used for the calculation ... AUTOTHROTTLE_TARGET_CONCURRENCY
            target_delay = latency / self.target_concurrency
            new_delay = (slot.delay + target_delay) / 2.0  # slot.delay is the previous delay
            new_delay = max(target_delay, new_delay)
            new_delay = min(max(self.mindelay, new_delay), self.maxdelay)
            slot.delay = new_delay
    """

    # Enable auto-throttling
    # AUTOTHROTTLE_ENABLED = True
    # The initial download delay
    # AUTOTHROTTLE_START_DELAY = 5
    # The maximum download delay to be set in case of high latencies
    # AUTOTHROTTLE_MAX_DELAY = 10
    # The average number of requests Scrapy should be sending in parallel to each remote server
    # (a per-server concurrency target, not a per-second rate)
    # AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0

    # Enable showing throttling stats for every response received:
    # AUTOTHROTTLE_DEBUG = True
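
The update rule in step 5 can be tried out in isolation. The following is a minimal sketch, not Scrapy code: `next_delay` and its parameter names are made up for illustration, and the status check mirrors the one in `_adjust_delay` in the source listing.

```python
def next_delay(old_delay, latency, target_concurrency,
               min_delay, max_delay, status=200):
    """Return the next download delay (seconds) for one downloader slot.

    Hypothetical helper that mirrors the arithmetic of
    AutoThrottle._adjust_delay without needing a crawler.
    """
    # A server that needs `latency` seconds per response can keep N requests
    # in flight if one request is sent every latency / N seconds.
    target_delay = latency / target_concurrency

    # Move halfway from the previous delay towards the target ...
    new_delay = (old_delay + target_delay) / 2.0
    # ... but jump straight to the target when the server got slower.
    new_delay = max(target_delay, new_delay)
    # Clamp into [min_delay, max_delay].
    new_delay = min(max(min_delay, new_delay), max_delay)

    # Non-200 responses are usually small and fast, so they are not
    # allowed to lower the delay.
    if status != 200 and new_delay <= old_delay:
        return old_delay
    return new_delay


if __name__ == "__main__":
    delay = 5.0  # AUTOTHROTTLE_START_DELAY
    for latency in (0.5, 0.5, 0.5, 3.0):
        delay = next_delay(delay, latency, 1.0, 0.0, 10.0)
        print("latency=%.1fs -> delay=%.4fs" % (latency, delay))
```

With a steady latency the delay halves its distance to the target on every response, while a single slow response raises it to the target immediately.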

    import logging

    from scrapy.exceptions import NotConfigured
    from scrapy import signals

    logger = logging.getLogger(__name__)


    class AutoThrottle(object):

        def __init__(self, crawler):
            self.crawler = crawler
            if not crawler.settings.getbool('AUTOTHROTTLE_ENABLED'):
                raise NotConfigured

            self.debug = crawler.settings.getbool("AUTOTHROTTLE_DEBUG")
            self.target_concurrency = crawler.settings.getfloat("AUTOTHROTTLE_TARGET_CONCURRENCY")
            crawler.signals.connect(self._spider_opened, signal=signals.spider_opened)
            crawler.signals.connect(self._response_downloaded, signal=signals.response_downloaded)

        @classmethod
        def from_crawler(cls, crawler):
            return cls(crawler)

        def _spider_opened(self, spider):
            self.mindelay = self._min_delay(spider)
            self.maxdelay = self._max_delay(spider)
            spider.download_delay = self._start_delay(spider)

        def _min_delay(self, spider):
            s = self.crawler.settings
            return getattr(spider, 'download_delay', s.getfloat('DOWNLOAD_DELAY'))

        def _max_delay(self, spider):
            return self.crawler.settings.getfloat('AUTOTHROTTLE_MAX_DELAY')

        def _start_delay(self, spider):
            return max(self.mindelay, self.crawler.settings.getfloat('AUTOTHROTTLE_START_DELAY'))

        def _response_downloaded(self, response, request, spider):
            key, slot = self._get_slot(request, spider)
            latency = request.meta.get('download_latency')
            if latency is None or slot is None:
                return

            olddelay = slot.delay
            self._adjust_delay(slot, latency, response)
            if self.debug:
                diff = slot.delay - olddelay
                size = len(response.body)
                conc = len(slot.transferring)
                logger.info(
                    "slot: %(slot)s | conc:%(concurrency)2d | "
                    "delay:%(delay)5d ms (%(delaydiff)+d) | "
                    "latency:%(latency)5d ms | size:%(size)6d bytes",
                    {
                        'slot': key, 'concurrency': conc,
                        'delay': slot.delay * 1000, 'delaydiff': diff * 1000,
                        'latency': latency * 1000, 'size': size
                    },
                    extra={'spider': spider}
                )

        def _get_slot(self, request, spider):
            key = request.meta.get('download_slot')
            return key, self.crawler.engine.downloader.slots.get(key)

        def _adjust_delay(self, slot, latency, response):
            """Define delay adjustment policy"""

            # If a server needs `latency` seconds to respond then
            # we should send a request each `latency/N` seconds
            # to have N requests processed in parallel
            target_delay = latency / self.target_concurrency

            # Adjust the delay to make it closer to target_delay
            new_delay = (slot.delay + target_delay) / 2.0

            # If target delay is bigger than old delay, then use it instead of mean.
            # It works better with problematic sites.
            new_delay = max(target_delay, new_delay)

            # Make sure self.mindelay <= new_delay <= self.maxdelay
            new_delay = min(max(self.mindelay, new_delay), self.maxdelay)

            # Don't adjust delay if response status != 200 and new delay is smaller
            # than old one, as error pages (and redirections) are usually small and
            # so tend to reduce latency, thus provoking a positive feedback by
            # reducing delay instead of increase.
            if response.status != 200 and new_delay <= slot.delay:
                return

            slot.delay = new_delay

Supplement:

    from scrapy.downloadermiddlewares.robotstxt import RobotsTxtMiddleware  # robots.txt crawling rules (scrapy.contrib.downloadermiddleware.robotstxt in old releases)
    from scrapy.core.downloader.webclient import ScrapyHTTPClientFactory  # where the request-to-response time (latency) is measured
    # request.meta['download_latency'] = self.headers_time - self.start_time

② Enabling the cache

    """
    Purpose: cache requests that have already been sent, together with their responses, for later reuse

    from scrapy.downloadermiddlewares.httpcache import HttpCacheMiddleware
    from scrapy.extensions.httpcache import DummyPolicy
    from scrapy.extensions.httpcache import RFC2616Policy
    from scrapy.extensions.httpcache import FilesystemCacheStorage
    """
    # Enable and configure HTTP caching (disabled by default)
    # See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings

    from scrapy.downloadermiddlewares.httpcache import HttpCacheMiddleware
    # Whether to enable caching
    # HTTPCACHE_ENABLED = True

    # Cache policy ①
    from scrapy.extensions.httpcache import DummyPolicy
    # Cache policy: cache every request; a repeated request is served straight from the cache
    # HTTPCACHE_POLICY = "scrapy.extensions.httpcache.DummyPolicy"

    # Cache policy ②: cache according to HTTP response headers such as Cache-Control and Last-Modified -->> commonly used
    from scrapy.extensions.httpcache import RFC2616Policy

    # Cache expiration time in seconds (0 means cached entries never expire)
    # HTTPCACHE_EXPIRATION_SECS = 0

    # HTTP status codes that are never cached
    # HTTPCACHE_IGNORE_HTTP_CODES = []

    # Directory where the cache is stored
    # HTTPCACHE_DIR = 'httpcache'

    # Cache storage backend
    # HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'  # a custom storage class also works as long as it follows the File.. format
    # File-based storage backend -->> commonly used
    from scrapy.extensions.httpcache import FilesystemCacheStorage  # see the source: responses are saved to files

    from email.utils import formatdate
    from twisted.internet import defer
    from twisted.internet.error import TimeoutError, DNSLookupError, \
        ConnectionRefusedError, ConnectionDone, ConnectError, \
        ConnectionLost, TCPTimedOutError
    from twisted.web.client import ResponseFailed
    from scrapy import signals
    from scrapy.exceptions import NotConfigured, IgnoreRequest
    from scrapy.utils.misc import load_object


    class HttpCacheMiddleware(object):

        DOWNLOAD_EXCEPTIONS = (defer.TimeoutError, TimeoutError, DNSLookupError,
                               ConnectionRefusedError, ConnectionDone, ConnectError,
                               ConnectionLost, TCPTimedOutError, ResponseFailed,
                               IOError)

        def __init__(self, settings, stats):
            if not settings.getbool('HTTPCACHE_ENABLED'):
                raise NotConfigured
            self.policy = load_object(settings['HTTPCACHE_POLICY'])(settings)
            self.storage = load_object(settings['HTTPCACHE_STORAGE'])(settings)
            self.ignore_missing = settings.getbool('HTTPCACHE_IGNORE_MISSING')
            self.stats = stats

        @classmethod
        def from_crawler(cls, crawler):
            o = cls(crawler.settings, crawler.stats)
            crawler.signals.connect(o.spider_opened, signal=signals.spider_opened)
            crawler.signals.connect(o.spider_closed, signal=signals.spider_closed)
            return o

        def spider_opened(self, spider):
            self.storage.open_spider(spider)

        def spider_closed(self, spider):
            self.storage.close_spider(spider)

        def process_request(self, request, spider):
            if request.meta.get('dont_cache', False):
                return

            # Skip uncacheable requests
            if not self.policy.should_cache_request(request):
                request.meta['_dont_cache'] = True  # flag as uncacheable
                return

            # Look for cached response and check if expired
            cachedresponse = self.storage.retrieve_response(spider, request)
            if cachedresponse is None:
                self.stats.inc_value('httpcache/miss', spider=spider)
                if self.ignore_missing:
                    self.stats.inc_value('httpcache/ignore', spider=spider)
                    raise IgnoreRequest("Ignored request not in cache: %s" % request)
                return  # first time request

            # Return cached response only if not expired
            cachedresponse.flags.append('cached')
            if self.policy.is_cached_response_fresh(cachedresponse, request):
                self.stats.inc_value('httpcache/hit', spider=spider)
                return cachedresponse

            # Keep a reference to cached response to avoid a second cache lookup on
            # process_response hook
            request.meta['cached_response'] = cachedresponse

        def process_response(self, request, response, spider):
            if request.meta.get('dont_cache', False):
                return response

            # Skip cached responses and uncacheable requests
            if 'cached' in response.flags or '_dont_cache' in request.meta:
                request.meta.pop('_dont_cache', None)
                return response

            # RFC2616 requires origin server to set Date header,
            # https://www.w3.org/Protocols/rfc2616/rfc2616-sec14.html#sec14.18
            if 'Date' not in response.headers:
                response.headers['Date'] = formatdate(usegmt=1)

            # Do not validate first-hand responses
            cachedresponse = request.meta.pop('cached_response', None)
            if cachedresponse is None:
                self.stats.inc_value('httpcache/firsthand', spider=spider)
                self._cache_response(spider, response, request, cachedresponse)
                return response

            if self.policy.is_cached_response_valid(cachedresponse, response, request):
                self.stats.inc_value('httpcache/revalidate', spider=spider)
                return cachedresponse

            self.stats.inc_value('httpcache/invalidate', spider=spider)
            self._cache_response(spider, response, request, cachedresponse)
            return response

        def process_exception(self, request, exception, spider):
            cachedresponse = request.meta.pop('cached_response', None)
            if cachedresponse is not None and isinstance(exception, self.DOWNLOAD_EXCEPTIONS):
                self.stats.inc_value('httpcache/errorrecovery', spider=spider)
                return cachedresponse

        def _cache_response(self, spider, response, request, cachedresponse):
            if self.policy.should_cache_response(response, request):
                self.stats.inc_value('httpcache/store', spider=spider)
                self.storage.store_response(spider, request, response)
            else:
                self.stats.inc_value('httpcache/uncacheable', spider=spider)

    # Excerpt from scrapy.extensions.httpcache; module-level imports omitted
    class DummyPolicy(object):

        def __init__(self, settings):
            self.ignore_schemes = settings.getlist('HTTPCACHE_IGNORE_SCHEMES')
            self.ignore_http_codes = [int(x) for x in settings.getlist('HTTPCACHE_IGNORE_HTTP_CODES')]

        def should_cache_request(self, request):
            return urlparse_cached(request).scheme not in self.ignore_schemes

        def should_cache_response(self, response, request):
            return response.status not in self.ignore_http_codes

        def is_cached_response_fresh(self, response, request):
            return True

        def is_cached_response_valid(self, cachedresponse, response, request):
            return True
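
To see how the middleware and a policy such as `DummyPolicy` cooperate, the flow can be reduced to a few lines of plain Python. This is only a sketch under simplifying assumptions: `TinyCache`, `AlwaysFreshPolicy`, and the `download` callable are hypothetical stand-ins, responses are `(status, body)` tuples, the cache key is the URL, and revalidation is left out.

```python
class AlwaysFreshPolicy:
    """Stand-in for DummyPolicy: cached entries never go stale."""

    def __init__(self, ignore_http_codes=()):
        self.ignore_http_codes = set(ignore_http_codes)

    def should_cache_response(self, status):
        return status not in self.ignore_http_codes

    def is_cached_response_fresh(self, cached):
        return True


class TinyCache:
    """In-memory imitation of the process_request / process_response flow."""

    def __init__(self, policy, download):
        self.policy = policy
        self.download = download  # callable: url -> (status, body)
        self.store = {}
        self.stats = {"hit": 0, "miss": 0, "store": 0, "uncacheable": 0}

    def fetch(self, url):
        # process_request: serve from the cache when a fresh entry exists.
        cached = self.store.get(url)
        if cached is None:
            self.stats["miss"] += 1
        elif self.policy.is_cached_response_fresh(cached):
            self.stats["hit"] += 1
            return cached

        # Otherwise the request goes out to the network.
        response = self.download(url)

        # process_response: store the result if the policy allows it.
        if self.policy.should_cache_response(response[0]):
            self.store[url] = response
            self.stats["store"] += 1
        else:
            self.stats["uncacheable"] += 1
        return response
```

A status code listed in `ignore_http_codes` (the counterpart of `HTTPCACHE_IGNORE_HTTP_CODES`) is downloaded again on every request, while every other response is fetched once and then served from the store.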

    # Excerpt from scrapy.extensions.httpcache; module-level imports omitted
    class RFC2616Policy(object):

        MAXAGE = 3600 * 24 * 365  # one year

        def __init__(self, settings):
            self.always_store = settings.getbool('HTTPCACHE_ALWAYS_STORE')
            self.ignore_schemes = settings.getlist('HTTPCACHE_IGNORE_SCHEMES')
            self.ignore_response_cache_controls = [to_bytes(cc) for cc in
                settings.getlist('HTTPCACHE_IGNORE_RESPONSE_CACHE_CONTROLS')]
            self._cc_parsed = WeakKeyDictionary()

        def _parse_cachecontrol(self, r):
            if r not in self._cc_parsed:
                cch = r.headers.get(b'Cache-Control', b'')
                parsed = parse_cachecontrol(cch)
                if isinstance(r, Response):
                    for key in self.ignore_response_cache_controls:
                        parsed.pop(key, None)
                self._cc_parsed[r] = parsed
            return self._cc_parsed[r]

        def should_cache_request(self, request):
            if urlparse_cached(request).scheme in self.ignore_schemes:
                return False
            cc = self._parse_cachecontrol(request)
            # obey user-agent directive "Cache-Control: no-store"
            if b'no-store' in cc:
                return False
            # Any other is eligible for caching
            return True

        def should_cache_response(self, response, request):
            # What is cacheable - https://www.w3.org/Protocols/rfc2616/rfc2616-sec13.html#sec14.9.1
            # Response cacheability - https://www.w3.org/Protocols/rfc2616/rfc2616-sec13.html#sec13.4
            # Status code 206 is not included because cache can not deal with partial contents
            cc = self._parse_cachecontrol(response)
            # obey directive "Cache-Control: no-store"
            if b'no-store' in cc:
                return False
            # Never cache 304 (Not Modified) responses
            elif response.status == 304:
                return False
            # Cache unconditionally if configured to do so
            elif self.always_store:
                return True
            # Any hint on response expiration is good
            elif b'max-age' in cc or b'Expires' in response.headers:
                return True
            # Firefox fallbacks this statuses to one year expiration if none is set
            elif response.status in (300, 301, 308):
                return True
            # Other statuses without expiration requires at least one validator
            elif response.status in (200, 203, 401):
                return b'Last-Modified' in response.headers or b'ETag' in response.headers
            # Any other is probably not eligible for caching
            # Makes no sense to cache responses that does not contain expiration
            # info and can not be revalidated
            else:
                return False

        def is_cached_response_fresh(self, cachedresponse, request):
            cc = self._parse_cachecontrol(cachedresponse)
            ccreq = self._parse_cachecontrol(request)
            if b'no-cache' in cc or b'no-cache' in ccreq:
                return False

            now = time()
            freshnesslifetime = self._compute_freshness_lifetime(cachedresponse, request, now)
            currentage = self._compute_current_age(cachedresponse, request, now)

            reqmaxage = self._get_max_age(ccreq)
            if reqmaxage is not None:
                freshnesslifetime = min(freshnesslifetime, reqmaxage)

            if currentage < freshnesslifetime:
                return True

            if b'max-stale' in ccreq and b'must-revalidate' not in cc:
                # From RFC2616: "Indicates that the client is willing to
                # accept a response that has exceeded its expiration time.
                # If max-stale is assigned a value, then the client is
                # willing to accept a response that has exceeded its
                # expiration time by no more than the specified number of
                # seconds. If no value is assigned to max-stale, then the
                # client is willing to accept a stale response of any age."
                staleage = ccreq[b'max-stale']
                if staleage is None:
                    return True

                try:
                    if currentage < freshnesslifetime + max(0, int(staleage)):
                        return True
                except ValueError:
                    pass

            # Cached response is stale, try to set validators if any
            self._set_conditional_validators(request, cachedresponse)
            return False

        def is_cached_response_valid(self, cachedresponse, response, request):
            # Use the cached response if the new response is a server error,
            # as long as the old response didn't specify must-revalidate.
            if response.status >= 500:
                cc = self._parse_cachecontrol(cachedresponse)
                if b'must-revalidate' not in cc:
                    return True

            # Use the cached response if the server says it hasn't changed.
            return response.status == 304

        def _set_conditional_validators(self, request, cachedresponse):
            if b'Last-Modified' in cachedresponse.headers:
                request.headers[b'If-Modified-Since'] = cachedresponse.headers[b'Last-Modified']

            if b'ETag' in cachedresponse.headers:
                request.headers[b'If-None-Match'] = cachedresponse.headers[b'ETag']

        def _get_max_age(self, cc):
            try:
                return max(0, int(cc[b'max-age']))
            except (KeyError, ValueError):
                return None

        def _compute_freshness_lifetime(self, response, request, now):
            # Reference nsHttpResponseHead::ComputeFreshnessLifetime
            # https://dxr.mozilla.org/mozilla-central/source/netwerk/protocol/http/nsHttpResponseHead.cpp#706
            cc = self._parse_cachecontrol(response)
            maxage = self._get_max_age(cc)
            if maxage is not None:
                return maxage

            # Parse date header or synthesize it if none exists
            date = rfc1123_to_epoch(response.headers.get(b'Date')) or now

            # Try HTTP/1.0 Expires header
            if b'Expires' in response.headers:
                expires = rfc1123_to_epoch(response.headers[b'Expires'])
                # When parsing Expires header fails RFC 2616 section 14.21 says we
                # should treat this as an expiration time in the past.
                return max(0, expires - date) if expires else 0

            # Fallback to heuristic using last-modified header
            # This is not in RFC but on Firefox caching implementation
            lastmodified = rfc1123_to_epoch(response.headers.get(b'Last-Modified'))
            if lastmodified and lastmodified <= date:
                return (date - lastmodified) / 10

            # This request can be cached indefinitely
            if response.status in (300, 301, 308):
                return self.MAXAGE

            # Insufficient information to compute freshness lifetime
            return 0

        def _compute_current_age(self, response, request, now):
            # Reference nsHttpResponseHead::ComputeCurrentAge
            # https://dxr.mozilla.org/mozilla-central/source/netwerk/protocol/http/nsHttpResponseHead.cpp#658
            currentage = 0
            # If Date header is not set we assume it is a fast connection, and
            # clock is in sync with the server
            date = rfc1123_to_epoch(response.headers.get(b'Date')) or now
            if now > date:
                currentage = now - date

            if b'Age' in response.headers:
                try:
                    age = int(response.headers[b'Age'])
                    currentage = max(currentage, age)
                except ValueError:
                    pass

            return currentage
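
The order of checks in `_compute_freshness_lifetime` is easier to follow on plain data. The sketch below reproduces that order with the standard library only; `to_epoch`, `parse_max_age`, and `freshness_lifetime` are illustrative names, headers are assumed to be a plain `dict` of strings rather than Scrapy's `Headers`, and the Cache-Control parsing is deliberately simplified.

```python
from email.utils import mktime_tz, parsedate_tz


def to_epoch(value):
    """Parse an RFC 1123 date string into epoch seconds, or None on failure."""
    try:
        return mktime_tz(parsedate_tz(value))
    except (TypeError, ValueError):
        return None


def parse_max_age(cache_control):
    """Return max-age from a Cache-Control string, or None if absent/invalid."""
    for directive in cache_control.split(","):
        name, _, value = directive.strip().partition("=")
        if name.lower() == "max-age":
            try:
                return max(0, int(value))
            except ValueError:
                return None
    return None


def freshness_lifetime(headers, status, now, max_age_cap=3600 * 24 * 365):
    """Seconds a response may be served from cache without revalidation."""
    # 1. An explicit max-age wins.
    max_age = parse_max_age(headers.get("Cache-Control", ""))
    if max_age is not None:
        return max_age

    # 2. Expires minus Date; an unparsable Expires counts as already expired.
    date = to_epoch(headers.get("Date")) or now
    if "Expires" in headers:
        expires = to_epoch(headers["Expires"])
        return max(0, expires - date) if expires else 0

    # 3. Heuristic: 10% of the time since the last modification.
    last_modified = to_epoch(headers.get("Last-Modified"))
    if last_modified and last_modified <= date:
        return (date - last_modified) / 10

    # 4. Redirect-like statuses are treated as long-lived.
    if status in (300, 301, 308):
        return max_age_cap
    return 0
```

For example, a page whose `Last-Modified` is ten days older than its `Date` is considered fresh for one day, which is the Firefox-style heuristic the source comments mention.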

    # Excerpt from scrapy.extensions.httpcache; module-level imports omitted
    class FilesystemCacheStorage(object):

        def __init__(self, settings):
            self.cachedir = data_path(settings['HTTPCACHE_DIR'])
            self.expiration_secs = settings.getint('HTTPCACHE_EXPIRATION_SECS')
            self.use_gzip = settings.getbool('HTTPCACHE_GZIP')
            self._open = gzip.open if self.use_gzip else open

        def open_spider(self, spider):
            logger.debug("Using filesystem cache storage in %(cachedir)s" % {'cachedir': self.cachedir},
                         extra={'spider': spider})

        def close_spider(self, spider):
            pass

        def retrieve_response(self, spider, request):
            """Return response if present in cache, or None otherwise."""
            metadata = self._read_meta(spider, request)
            if metadata is None:
                return  # not cached
            rpath = self._get_request_path(spider, request)
            with self._open(os.path.join(rpath, 'response_body'), 'rb') as f:
                body = f.read()
            with self._open(os.path.join(rpath, 'response_headers'), 'rb') as f:
                rawheaders = f.read()
            url = metadata.get('response_url')
            status = metadata['status']
            headers = Headers(headers_raw_to_dict(rawheaders))
            respcls = responsetypes.from_args(headers=headers, url=url)
            response = respcls(url=url, headers=headers, status=status, body=body)
            return response

        def store_response(self, spider, request, response):
            """Store the given response in the cache."""
            rpath = self._get_request_path(spider, request)
            if not os.path.exists(rpath):
                os.makedirs(rpath)
            metadata = {
                'url': request.url,
                'method': request.method,
                'status': response.status,
                'response_url': response.url,
                'timestamp': time(),
            }
            with self._open(os.path.join(rpath, 'meta'), 'wb') as f:
                f.write(to_bytes(repr(metadata)))
            with self._open(os.path.join(rpath, 'pickled_meta'), 'wb') as f:
                pickle.dump(metadata, f, protocol=2)
            with self._open(os.path.join(rpath, 'response_headers'), 'wb') as f:
                f.write(headers_dict_to_raw(response.headers))
            with self._open(os.path.join(rpath, 'response_body'), 'wb') as f:
                f.write(response.body)
            with self._open(os.path.join(rpath, 'request_headers'), 'wb') as f:
                f.write(headers_dict_to_raw(request.headers))
            with self._open(os.path.join(rpath, 'request_body'), 'wb') as f:
                f.write(request.body)

        def _get_request_path(self, spider, request):
            key = request_fingerprint(request)
            return os.path.join(self.cachedir, spider.name, key[0:2], key)

        def _read_meta(self, spider, request):
            rpath = self._get_request_path(spider, request)
            metapath = os.path.join(rpath, 'pickled_meta')
            if not os.path.exists(metapath):
                return  # not found
            mtime = os.stat(metapath).st_mtime
            if 0 < self.expiration_secs < time() - mtime:
                return  # expired
            with self._open(metapath, 'rb') as f:
                return pickle.load(f)
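
Two details of `FilesystemCacheStorage` are worth isolating: the directory layout produced by `_get_request_path` and the expiration test in `_read_meta`. The sketch below is an approximation, not the library code: `fake_fingerprint` is a hypothetical stand-in that hashes only the method and URL, whereas Scrapy's `request_fingerprint` takes more of the request into account.

```python
import hashlib
import os
import time


def fake_fingerprint(method, url):
    """Hypothetical stand-in for request_fingerprint: a 40-char hex digest."""
    return hashlib.sha1(("%s %s" % (method, url)).encode("utf-8")).hexdigest()


def cache_dir_for(cachedir, spider_name, fingerprint):
    """Mirror _get_request_path: <cachedir>/<spider>/<first 2 hex chars>/<fingerprint>."""
    return os.path.join(cachedir, spider_name, fingerprint[0:2], fingerprint)


def is_expired(mtime, expiration_secs, now=None):
    """Mirror the check in _read_meta.

    expiration_secs == 0 disables expiration, because the chained
    comparison 0 < expiration_secs is then False.
    """
    now = time.time() if now is None else now
    return 0 < expiration_secs < now - mtime


if __name__ == "__main__":
    fp = fake_fingerprint("GET", "http://example.com/")
    print(cache_dir_for("httpcache", "myspider", fp))
    print(is_expired(mtime=100, expiration_secs=60, now=200))
```

The two-character prefix directory keeps any single folder from accumulating one entry per cached request.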

③ Proxies

1. Default proxy:

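    The default proxy is configured through environment variables
    from scrapy.downloadermiddlewares.httpproxy import HttpProxyMiddleware  # scrapy.contrib.downloadermiddleware.httpproxy in old releases

    Option 1: use the default middleware
        os.environ
        {
            http_proxy:http://root:woshiniba@192.168.11.11:9999/
            https_proxy:http://192.168.11.11:9999/
        }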

    # Excerpt from the HttpProxyMiddleware source; module-level imports omitted
    class HttpProxyMiddleware(object):

        def __init__(self, auth_encoding='latin-1'):
            self.auth_encoding = auth_encoding
            self.proxies = {}
            for type, url in getproxies().items():
                self.proxies[type] = self._get_proxy(url, type)

        @classmethod
        def from_crawler(cls, crawler):
            if not crawler.settings.getbool('HTTPPROXY_ENABLED'):
                raise NotConfigured
            auth_encoding = crawler.settings.get('HTTPPROXY_AUTH_ENCODING')
            return cls(auth_encoding)

        def _basic_auth_header(self, username, password):
            user_pass = to_bytes(
                '%s:%s' % (unquote(username), unquote(password)),
                encoding=self.auth_encoding)
            return base64.b64encode(user_pass).strip()

        def _get_proxy(self, url, orig_type):
            proxy_type, user, password, hostport = _parse_proxy(url)
            proxy_url = urlunparse((proxy_type or orig_type, hostport, '', '', '', ''))

            if user:
                creds = self._basic_auth_header(user, password)
            else:
                creds = None

            return creds, proxy_url

        def process_request(self, request, spider):
            # ignore if proxy is already set
            if 'proxy' in request.meta:
                if request.meta['proxy'] is None:
                    return
                # extract credentials if present
                creds, proxy_url = self._get_proxy(request.meta['proxy'], '')
                request.meta['proxy'] = proxy_url
                if creds and not request.headers.get('Proxy-Authorization'):
                    request.headers['Proxy-Authorization'] = b'Basic ' + creds
                return
            elif not self.proxies:
                return

            parsed = urlparse_cached(request)
            scheme = parsed.scheme

            # 'no_proxy' is only supported by http schemes
            if scheme in ('http', 'https') and proxy_bypass(parsed.hostname):
                return

            if scheme in self.proxies:
                self._set_proxy(request, scheme)

        def _set_proxy(self, request, scheme):
            creds, proxy = self.proxies[scheme]
            request.meta['proxy'] = proxy
            if creds:
                request.headers['Proxy-Authorization'] = b'Basic ' + creds

Analysis:

    # from six.moves.urllib.request import getproxies, proxy_bypass
    # In the source, getproxies() is roughly equivalent to reading os.environ (the environment variables)
    # def _set_proxy(self, request, scheme):
    #     creds, proxy = self.proxies[scheme]
    #     request.meta['proxy'] = proxy  # the key step
    #     if creds:
    #         request.headers['Proxy-Authorization'] = b'Basic ' + creds  # the key step; can be left out when no username/password is needed
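
What `_get_proxy` does to a proxy URL that embeds credentials can be reproduced with the standard library. This is a minimal sketch, assuming Python 3: `split_proxy` is a hypothetical helper, and it uses `urllib.parse.urlsplit` where the middleware relies on `_parse_proxy`.

```python
import base64
from urllib.parse import unquote, urlsplit


def split_proxy(url, auth_encoding="latin-1"):
    """Split a proxy URL into (base64 credentials or None, bare proxy URL)."""
    parts = urlsplit(url)
    hostport = parts.hostname
    if parts.port is not None:
        hostport = "%s:%d" % (parts.hostname, parts.port)
    proxy_url = "%s://%s" % (parts.scheme, hostport)

    if parts.username is None:
        return None, proxy_url

    # Basic auth is base64("user:password"); percent-escapes are decoded first.
    user_pass = "%s:%s" % (unquote(parts.username), unquote(parts.password or ""))
    creds = base64.b64encode(user_pass.encode(auth_encoding))
    return creds, proxy_url


if __name__ == "__main__":
    creds, proxy_url = split_proxy("http://root:woshiniba@192.168.11.11:9999/")
    print(proxy_url)            # what ends up in request.meta['proxy']
    print(b"Basic " + creds)    # what ends up in the Proxy-Authorization header
```

The credentials are stripped out of the URL and travel only in the `Proxy-Authorization` header, which is why a proxy without a username yields no header at all.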

2. Custom HttpProxyMiddleware class:

    import base64
    import random


    def to_bytes(text, encoding=None, errors='strict'):
        if isinstance(text, bytes):
            return text
        if not isinstance(text, str):
            raise TypeError('to_bytes must receive a str or bytes '
                            'object, got %s' % type(text).__name__)
        if encoding is None:
            encoding = 'utf-8'
        return text.encode(encoding, errors)


    class ProxyMiddleware(object):
        def process_request(self, request, spider):
            PROXIES = [
                {'ip_port': '111.11.228.75:80', 'user_pass': ''},
                {'ip_port': '120.198.243.22:80', 'user_pass': ''},
                {'ip_port': '111.8.60.9:8123', 'user_pass': ''},
                {'ip_port': '101.71.27.120:80', 'user_pass': ''},
                {'ip_port': '122.96.59.104:80', 'user_pass': ''},
                {'ip_port': '122.224.249.122:8088', 'user_pass': ''},
            ]
            proxy = random.choice(PROXIES)
            # Pick one proxy at random for every request
            request.meta['proxy'] = "http://%s" % proxy['ip_port']
            if proxy['user_pass']:
                # user_pass has the form "user:password"; an empty string means no authentication
                encoded_user_pass = base64.b64encode(to_bytes(proxy['user_pass']))
                request.headers['Proxy-Authorization'] = b'Basic ' + encoded_user_pass
                print("**************ProxyMiddleware have pass************" + proxy['ip_port'])
            else:
                print("**************ProxyMiddleware no pass************" + proxy['ip_port'])


    # settings.py
    DOWNLOADER_MIDDLEWARES = {
        'step8_king.middlewares.ProxyMiddleware': 500,
    }

④ HTTPS access

There are two cases when accessing a site over HTTPS:

1. The target site uses a trusted certificate (supported by default)

    DOWNLOADER_HTTPCLIENTFACTORY = "scrapy.core.downloader.webclient.ScrapyHTTPClientFactory"
    DOWNLOADER_CLIENTCONTEXTFACTORY = "scrapy.core.downloader.contextfactory.ScrapyClientContextFactory"

    # Excerpt from scrapy.core.downloader.contextfactory; module-level imports omitted
    class ScrapyClientContextFactory(BrowserLikePolicyForHTTPS):
        """
        Non-peer-certificate verifying HTTPS context factory

        Default OpenSSL method is TLS_METHOD (also called SSLv23_METHOD)
        which allows TLS protocol negotiation

        'A TLS/SSL connection established with [this method] may
         understand the SSLv3, TLSv1, TLSv1.1 and TLSv1.2 protocols.'
        """

        def __init__(self, method=SSL.SSLv23_METHOD, *args, **kwargs):
            super(ScrapyClientContextFactory, self).__init__(*args, **kwargs)
            self._ssl_method = method

        def getCertificateOptions(self):
            # setting verify=True will require you to provide CAs
            # to verify against; in other words: it's not that simple

            # backward-compatible SSL/TLS method:
            #
            # * this will respect `method` attribute in often recommended
            #   `ScrapyClientContextFactory` subclass
            #   (https://github.com/scrapy/scrapy/issues/1429#issuecomment-131782133)
            #
            # * getattr() for `_ssl_method` attribute for context factories
            #   not calling super(..., self).__init__
            return CertificateOptions(verify=False,
                        method=getattr(self, 'method',
                                       getattr(self, '_ssl_method', None)),
                        fixBrokenPeers=True,
                        acceptableCiphers=DEFAULT_CIPHERS)

        # kept for old-style HTTP/1.0 downloader context twisted calls,
        # e.g. connectSSL()
        def getContext(self, hostname=None, port=None):
            return self.getCertificateOptions().getContext()

        def creatorForNetloc(self, hostname, port):
            return ScrapyClientTLSOptions(hostname.decode("ascii"), self.getContext())

Source code analysis:

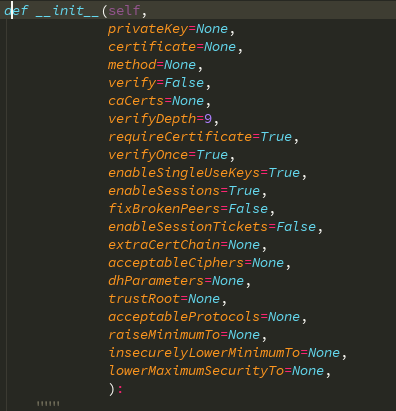

    # def getCertificateOptions(self):
    #     return CertificateOptions
    #         privateKey=None,
    #         certificate=None,
    # Custom certificate -->> subclass ScrapyClientContextFactory, override getCertificateOptions, and pass the two extra arguments (privateKey, certificate) to CertificateOptions

2. The target site uses a custom certificate

    # settings.py
    DOWNLOADER_HTTPCLIENTFACTORY = "scrapy.core.downloader.webclient.ScrapyHTTPClientFactory"
    DOWNLOADER_CLIENTCONTEXTFACTORY = "step8_king.https.MySSLFactory"

    # https.py
    from OpenSSL import crypto
    from scrapy.core.downloader.contextfactory import ScrapyClientContextFactory
    from twisted.internet.ssl import (optionsForClientTLS, CertificateOptions, PrivateCertificate)

    class MySSLFactory(ScrapyClientContextFactory):
        def getCertificateOptions(self):
            # Load the client private key and certificate from PEM files
            with open('/Users/wupeiqi/client.key.unsecure', mode='r') as f:
                v1 = crypto.load_privatekey(crypto.FILETYPE_PEM, f.read())
            with open('/Users/wupeiqi/client.pem', mode='r') as f:
                v2 = crypto.load_certificate(crypto.FILETYPE_PEM, f.read())
            return CertificateOptions(
                privateKey=v1,  # PKey object
                certificate=v2,  # X509 object
                verify=False,
                method=getattr(self, 'method', getattr(self, '_ssl_method', None))
            )

    # Other:
    #     Related classes
    #         scrapy.core.downloader.handlers.http.HttpDownloadHandler
    #         scrapy.core.downloader.webclient.ScrapyHTTPClientFactory
    #         scrapy.core.downloader.contextfactory.ScrapyClientContextFactory
    #     Related settings
    #         DOWNLOADER_HTTPCLIENTFACTORY
    #         DOWNLOADER_CLIENTCONTEXTFACTORY

For details, see: https://www.cnblogs.com/wupeiqi/articles/6229292.html