I've been bitten by this problem twice now, so here is the fix, along with a rundown of what each of the paths involved is for.

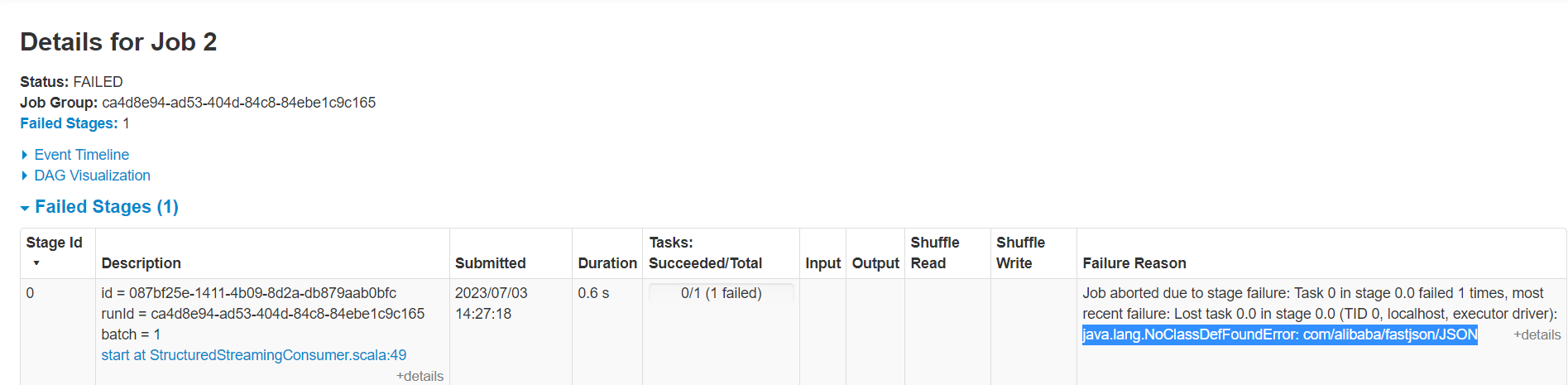

Symptom

After a Spark job is submitted to YARN with spark-submit, it fails with a NoClassDefFoundError.

Analysis

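A ClassNotFoundException or NoClassDefFoundError almost always comes down to one of two causes: either the jar on the classpath is the wrong version, or the jar containing the class is not on the classpath at all.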

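So the fix is to put the jar in the right place and make sure that location is on the classpath of the JVMs that actually run the job (on YARN, those are containers on the cluster nodes, not the machine you submit from).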
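Before moving jars around, it helps to confirm which jar (if any) on a node actually provides the missing class. A minimal sketch, assuming `unzip` is installed on the node; `class_to_entry` and `find_class_in_jars` are hypothetical helper names, not part of Hadoop or Spark:

```bash
# Hypothetical helper: turn a fully qualified class name into the entry
# name it has inside a jar, e.g. com.mysql.jdbc.Driver -> com/mysql/jdbc/Driver.class
class_to_entry() {
  printf '%s.class\n' "$(printf '%s' "$1" | tr '.' '/')"
}

# Hypothetical helper: print every jar under DIR that contains CLASS.
# Assumes `unzip` is on PATH; `unzip -Z1` lists one entry name per line.
find_class_in_jars() {
  local dir=$1 entry
  entry=$(class_to_entry "$2")
  find "$dir" -type f -name '*.jar' 2>/dev/null | while IFS= read -r jar; do
    if unzip -Z1 "$jar" 2>/dev/null | grep -qxF "$entry"; then
      printf '%s\n' "$jar"
    fi
  done
}
```

For example, `find_class_in_jars /usr/hdp/current com.mysql.jdbc.Driver`. No output means the jar is missing on that node; several hits from jars of different versions point to the version-conflict case instead.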

Solution

There are two ways to do this.

1. Pass the jar paths at submit time with --conf "spark.executor.extraClassPath=/path/to/jar", for example:

```bash
# Note: spark-submit expects the application jar (the primary resource) as a
# positional argument after the options; it does not appear in this command.
/usr/hdp/2.6.4.0-91/spark/bin/spark-submit \
  --master yarn \
  --deploy-mode cluster \
  --class com.test.main.SparkCompute \
  --executor-memory "25g" \
  --driver-memory "4g" \
  --executor-cores "5" \
  --num-executors "4" \
  --conf "spark.executor.extraClassPath=/usr/hdp/current/hadoop-yarn-client/lib/ojdbc6-11.2.0.3.0.jar:/usr/hdp/current/hadoop-yarn-client/lib/mysql-connector-java-5.1.27.jar:/usr/hdp/current/hive-server2/lib/hive-hbase-handler.jar:/usr/hdp/current/hbase-client/lib/guava-12.0.1.jar:/usr/hdp/current/hbase-client/lib/hbase-client.jar:/usr/hdp/current/hbase-client/lib/hbase-common.jar:/usr/hdp/current/hbase-client/lib/hbase-protocol.jar:/usr/hdp/current/hbase-client/lib/hbase-server.jar" \
  --conf "spark.executor.extraJavaOptions=-server -XX:+UseG1GC -XX:MaxGCPauseMillis=50" \
  --principal test001@COM \
  --jars test.jar
```
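Typing the colon-separated extraClassPath value by hand is error-prone. A small sketch that builds it from a list of jars and warns about any path missing on the current host; `join_classpath` and `missing_jars` are hypothetical helpers. The check only covers the local node: Spark does not ship extraClassPath entries, so each jar must exist at the same path on every node that runs an executor.

```bash
# Hypothetical helper: join jar paths with ':' for spark.executor.extraClassPath.
join_classpath() {
  local IFS=':'
  printf '%s' "$*"
}

# Hypothetical helper: report any jar that does not exist on this host.
missing_jars() {
  local jar
  for jar in "$@"; do
    [ -e "$jar" ] || printf 'missing: %s\n' "$jar"
  done
}

# Jar list taken from the command above (shortened for the example).
set -- \
  /usr/hdp/current/hadoop-yarn-client/lib/ojdbc6-11.2.0.3.0.jar \
  /usr/hdp/current/hadoop-yarn-client/lib/mysql-connector-java-5.1.27.jar \
  /usr/hdp/current/hbase-client/lib/hbase-client.jar
missing_jars "$@"
EXTRA_CP=$(join_classpath "$@")
printf '%s\n' "$EXTRA_CP"
```

The result can then be passed as `--conf "spark.executor.extraClassPath=${EXTRA_CP}"`.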

2. Create a dedicated directory for extra jars and add it to both the MapReduce and the Spark classpaths.

For MapReduce, edit $HADOOP_HOME/etc/hadoop/mapred-site.xml and append the new directory to the mapreduce.application.classpath property:

```xml
<property>
  <name>mapreduce.application.classpath</name>
  <value>$HADOOP_CLIENT_CONF_DIR,$PWD/mr-framework/*,$MR2_CLASSPATH,/opt/cloudera/parcels/CDH/lib/hadoop/lib_userjob/*</value>
</property>
```
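The directory itself has to exist and contain the jars. A minimal sketch of staging jars into it on one node; `stage_user_jars` is a hypothetical helper, and the source jar path in the usage line is a placeholder:

```bash
# Hypothetical helper: create TARGET_DIR if needed and copy the given jars into it.
# Stops at the first failure so a half-staged directory is easy to notice.
stage_user_jars() {
  local target_dir=$1 jar
  shift
  mkdir -p "$target_dir" || return 1
  for jar in "$@"; do
    cp -p "$jar" "$target_dir/" || return 1
  done
}
```

For example, `stage_user_jars /opt/cloudera/parcels/CDH/lib/hadoop/lib_userjob /path/to/extra-lib.jar`. Because the classpath entry ends in `/*`, the JVM expands it to every `.jar` in the directory when it starts, so jars copied in later are picked up by containers launched afterwards.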

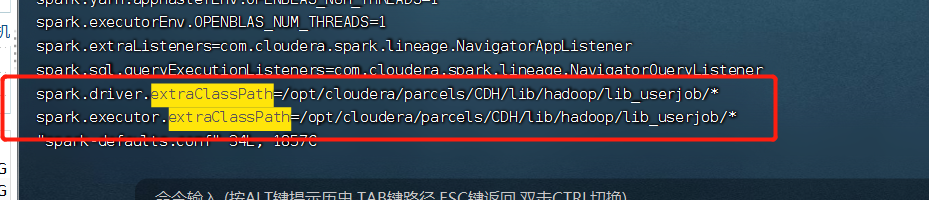

For Spark, edit $SPARK_HOME/conf/spark-defaults.conf and add:

```properties
spark.driver.extraClassPath=/opt/cloudera/parcels/CDH/lib/hadoop/lib_userjob/*
spark.executor.extraClassPath=/opt/cloudera/parcels/CDH/lib/hadoop/lib_userjob/*
```
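After editing (and distributing) spark-defaults.conf, it can be worth checking what value a node will actually read for a key. A minimal sketch; `conf_value` is a hypothetical helper that accepts both the `key=value` form used above and the whitespace-separated `key value` form, skips `#` comments, and prints the last matching entry (later entries override earlier ones):

```bash
# Hypothetical helper: print the value of KEY from a spark-defaults.conf-style FILE.
# Usage: conf_value FILE KEY
conf_value() {
  awk -v k="$2" '
    /^[ \t]*#/ { next }                 # skip comment lines
    {
      line = $0
      sub(/^[ \t]+/, "", line)          # drop leading whitespace
      if (!match(line, /[ \t=]/)) next  # need a separator after the key
      if (substr(line, 1, RSTART - 1) != k) next
      val = substr(line, RSTART)
      sub(/^[ \t]*=?[ \t]*/, "", val)   # drop the separator
      found = val; seen = 1             # later entries override earlier ones
    }
    END { if (seen) print found }
  ' "$1"
}
```

For example, `conf_value "$SPARK_HOME/conf/spark-defaults.conf" spark.executor.extraClassPath`.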

Notes

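- When you point the Spark config at an extra jar directory, remember to distribute it to every node: the directory and its jars must exist at the same path on each machine.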

- YARN also has a yarn.application.classpath setting. Leave it alone: in my tests, changing it had no effect whatsoever.
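The distribution step from the first note can be scripted. A minimal sketch, assuming passwordless SSH and `rsync` on every node; `distribute` and the host names are hypothetical, and setting `DRY_RUN=1` prints the rsync commands instead of running them:

```bash
# Hypothetical helper: copy SRC (a directory or a single file) to the same path on each HOST.
# Usage: distribute SRC HOST...
distribute() {
  local src=$1 host
  shift
  # For a directory, a trailing slash makes rsync copy its contents into the
  # remote directory instead of nesting a second copy inside it.
  [ -d "$src" ] && src="${src%/}/"
  for host in "$@"; do
    if [ -n "${DRY_RUN:-}" ]; then
      printf 'rsync -a %s %s:%s\n' "$src" "$host" "$src"
    else
      rsync -a "$src" "$host:$src" || return 1
    fi
  done
}
```

For example, `distribute /opt/cloudera/parcels/CDH/lib/hadoop/lib_userjob node1 node2 node3`, and likewise `distribute "$SPARK_HOME/conf/spark-defaults.conf" node1 node2 node3` if jobs are submitted from more than one host.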