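Ceph covers too much ground for one post, so this article collects my study notes on RBD only. Jianshu and other blogs already document this thoroughly, but the material is scattered, so I am recording my own experiments here for later reference. Thanks to everyone who shared their work.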

1. Creating the RBD pool and enabling the rbd application

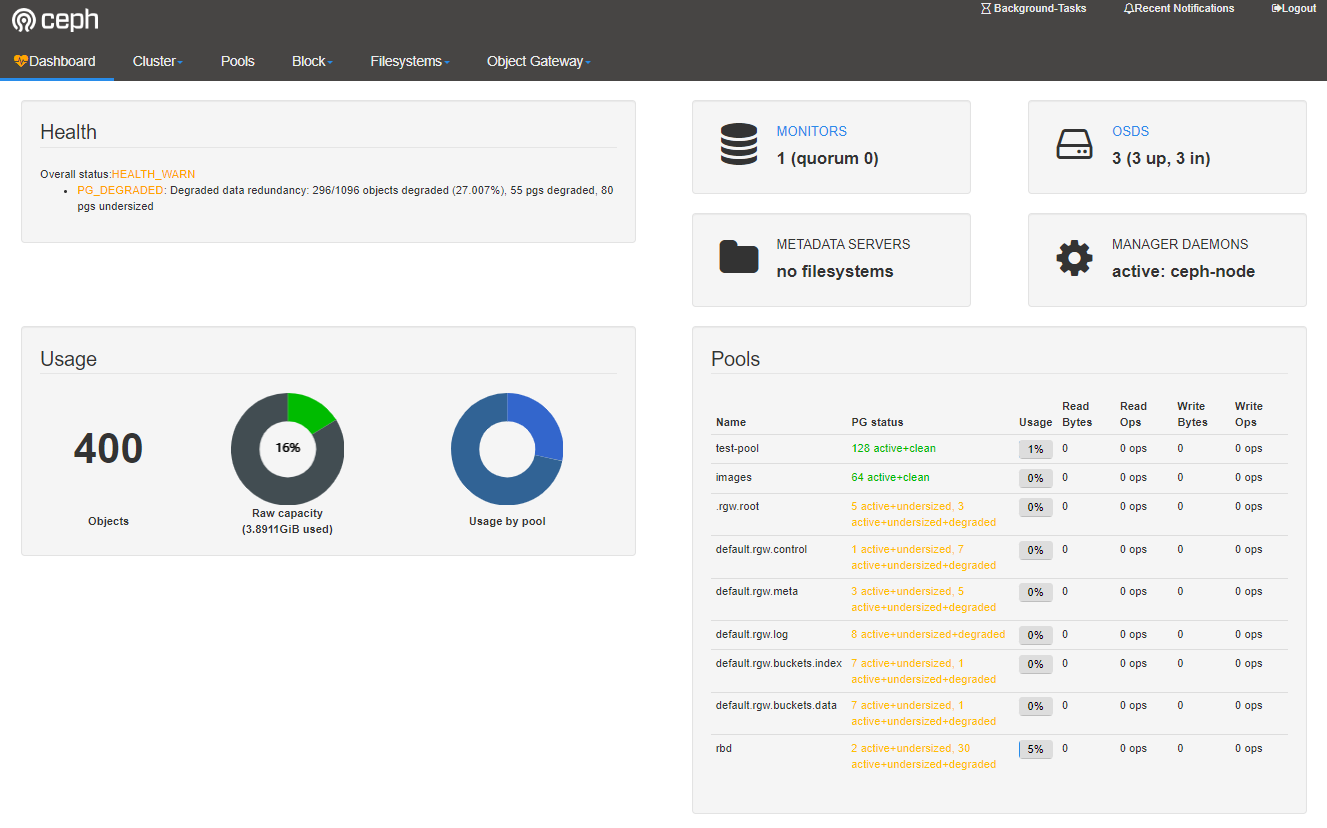

    [cephadmin@ceph-node ~]$ ceph osd pool create rbd 32 32
    pool 'rbd' created
    [cephadmin@ceph-node ~]$ ceph osd pool application enable rbd rbd
    enabled application 'rbd' on pool 'rbd'
    [cephadmin@ceph-node ~]$ ceph osd pool ls detail
    pool 1 'test-pool' replicated size 2 min_size 2 crush_rule 0 object_hash rjenkins pg_num 128 pgp_num 128 last_change 43 flags hashpspool max_bytes 214748364800 max_objects 50000 stripe_width 0 application rgw
    pool 2 'images' replicated size 2 min_size 2 crush_rule 0 object_hash rjenkins pg_num 64 pgp_num 64 last_change 41 flags hashpspool stripe_width 0
    pool 3 '.rgw.root' replicated size 3 min_size 2 crush_rule 0 object_hash rjenkins pg_num 8 pgp_num 8 last_change 53 owner 18446744073709551615 flags hashpspool stripe_width 0 application rgw
    pool 4 'default.rgw.control' replicated size 3 min_size 2 crush_rule 0 object_hash rjenkins pg_num 8 pgp_num 8 last_change 56 owner 18446744073709551615 flags hashpspool stripe_width 0 application rgw
    pool 5 'default.rgw.meta' replicated size 3 min_size 2 crush_rule 0 object_hash rjenkins pg_num 8 pgp_num 8 last_change 58 owner 18446744073709551615 flags hashpspool stripe_width 0 application rgw
    pool 6 'default.rgw.log' replicated size 3 min_size 2 crush_rule 0 object_hash rjenkins pg_num 8 pgp_num 8 last_change 60 owner 18446744073709551615 flags hashpspool stripe_width 0 application rgw
    pool 7 'default.rgw.buckets.index' replicated size 3 min_size 2 crush_rule 0 object_hash rjenkins pg_num 8 pgp_num 8 last_change 70 owner 18446744073709551615 flags hashpspool stripe_width 0 application rgw
    pool 8 'default.rgw.buckets.data' replicated size 3 min_size 2 crush_rule 0 object_hash rjenkins pg_num 8 pgp_num 8 last_change 73 owner 18446744073709551615 flags hashpspool stripe_width 0 application rgw
    pool 9 'rbd' replicated size 3 min_size 2 crush_rule 0 object_hash rjenkins pg_num 32 pgp_num 32 last_change 85 flags hashpspool stripe_width 0 application rbd
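The two commands in this step can be wrapped in a small helper so that pool creation and application tagging always happen together. This is only a sketch: the function names `run` and `create_rbd_pool` and the `DRY_RUN` variable are hypothetical names introduced here, and only the two `ceph osd pool` invocations come from the transcript. By default it prints the commands instead of running them.

```shell
#!/bin/sh
# Hypothetical helper: print the command when DRY_RUN=1 (the default),
# execute it only when DRY_RUN=0.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# create_rbd_pool POOL PG_NUM
# Creates a pool and tags it with the 'rbd' application.
create_rbd_pool() {
    pool=$1
    pg_num=$2
    # pg_num and pgp_num get the same value, as in the transcript (32 32).
    run ceph osd pool create "$pool" "$pg_num" "$pg_num" &&
    run ceph osd pool application enable "$pool" rbd
}

create_rbd_pool rbd 32
```

Running it with `DRY_RUN=0` on a node that has admin credentials would execute the same two commands shown in the transcript.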

2. Block device operations

    rbd create --size 10240 image01
    [cephadmin@ceph-node ~]$ rbd ls
    image01
    [cephadmin@ceph-node ~]$ rbd info image01
    rbd image 'image01':
        size 10 GiB in 2560 objects
        order 22 (4 MiB objects)
        id: 1218f6b8b4567
        block_name_prefix: rbd_data.1218f6b8b4567
        format: 2
        features: layering, exclusive-lock, object-map, fast-diff, deep-flatten
        op_features:
        flags:
        create_timestamp: Tue Aug 15 11:23:05 2023
    [cephadmin@ceph-node ~]$ rbd feature disable image01 exclusive-lock, object-map, fast-diff, deep-flatten
    [root@ceph-node1 ~]# rbd map image01
    /dev/rbd0
    [root@ceph-node1 ~]# rbd showmapped
    id pool image   snap device
    0  rbd  image01 -    /dev/rbd0
    [root@ceph-node1 ~]# mkfs.xfs /dev/rbd0
    Discarding blocks...Done.
    meta-data=/dev/rbd0              isize=512    agcount=16, agsize=163840 blks
             =                       sectsz=512   attr=2, projid32bit=1
             =                       crc=1        finobt=0, sparse=0
    data     =                       bsize=4096   blocks=2621440, imaxpct=25
             =                       sunit=1024   swidth=1024 blks
    naming   =version 2              bsize=4096   ascii-ci=0 ftype=1
    log      =internal log           bsize=4096   blocks=2560, version=2
             =                       sectsz=512   sunit=8 blks, lazy-count=1
    realtime =none                   extsz=4096   blocks=0, rtextents=0
    [root@ceph-node1 ~]# mount /dev/rbd0 /mnt
    [root@ceph-node1 ~]# cd /mnt
    [root@ceph-node1 mnt]# ls
    [root@ceph-node1 mnt]# df -TH|grep rbd0
    /dev/rbd0      xfs        11G   35M   11G   1% /mnt
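The numbers reported by `rbd info` and `mkfs.xfs` can be cross-checked with a little arithmetic. The sketch below uses only values that appear in the transcript; the variable names are my own.

```shell
#!/bin/sh
size_mib=10240                        # value passed to `rbd create --size`
order=22                              # "order 22" from `rbd info`

object_bytes=$((1 << order))          # 2^22 bytes per object
object_mib=$((object_bytes / 1024 / 1024))
objects=$((size_mib / object_mib))    # how many objects cover the image
echo "object size: ${object_mib} MiB, objects: ${objects}"

# mkfs.xfs reported blocks=2621440 with bsize=4096
fs_bytes=$((2621440 * 4096))
echo "filesystem size: $((fs_bytes / 1024 / 1024 / 1024)) GiB"
```

The results line up with the transcript: 4 MiB objects, 2560 of them, and a filesystem that spans the full 10 GiB image.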

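I ran the map and mount steps on a different OSD node, which requires copying ceph.conf and ceph.client.admin.keyring into that node's /etc/ceph directory.

On a node that is not part of the Ceph cluster, the ceph-common package must be installed as well.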
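Before running `rbd map` on a client node, it can help to check that these prerequisites are in place. The following is a minimal sketch: the function name `missing_ceph_files` is hypothetical, and the two file names and the ceph-common package are the ones mentioned above.

```shell
#!/bin/sh
# missing_ceph_files DIR
# Prints one line for each required client file that is absent from DIR.
missing_ceph_files() {
    dir=${1:-/etc/ceph}
    for f in ceph.conf ceph.client.admin.keyring; do
        if [ ! -f "$dir/$f" ]; then
            echo "missing: $dir/$f"
        fi
    done
}

missing=$(missing_ceph_files /etc/ceph)
if [ -n "$missing" ]; then
    echo "copy these files from a cluster node first:"
    echo "$missing"
fi

# The rbd CLI is shipped in ceph-common.
if ! command -v rbd >/dev/null 2>&1; then
    echo "rbd command not found: install the ceph-common package"
fi
```

The script only reports problems and changes nothing, so it is safe to run on any node.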

3. Writing a file to the block device

    [root@ceph-node1 mnt]# dd if=/dev/zero of=/mnt/rbd_test bs=1M count=300
    300+0 records in
    300+0 records out
    314572800 bytes (315 MB) copied, 1.54531 s, 204 MB/s
    [root@ceph-node1 mnt]# ll
    total 307200
    -rw-r--r-- 1 root root 314572800 Aug 15 11:47 rbd_test
    [root@ceph-node1 mnt]# ceph df
    GLOBAL:
        SIZE       AVAIL      RAW USED     %RAW USED
        24 GiB     20 GiB      3.9 GiB         16.22
    POOLS:
        NAME                          ID     USED        %USED     MAX AVAIL     OBJECTS
        test-pool                     1      124 MiB      1.30       9.2 GiB         104
        images                        2          0 B         0       9.2 GiB           0
        .rgw.root                     3      1.1 KiB         0       6.2 GiB           4
        default.rgw.control           4          0 B         0       6.2 GiB           8
        default.rgw.meta              5      1.3 KiB         0       6.2 GiB           8
        default.rgw.log               6          0 B         0       6.2 GiB         175
        default.rgw.buckets.index     7          0 B         0       6.2 GiB           1
        default.rgw.buckets.data      8      3.1 KiB         0       6.2 GiB           1
        rbd                           9      314 MiB      3.21       6.2 GiB          99

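I noticed something that looked odd at first: each of my OSDs has only 8 GB of total space, yet creating a 10 GB RBD block device succeeded. The likely explanation is that RBD images are thin-provisioned, so space is consumed only as data is actually written. One open question remains: when the OSDs run short of space, can the capacity be expanded?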
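The gap between provisioned and consumed space can be made concrete with the figures from the `ceph df` output. This is a rough sketch under stated assumptions: the variable names are mine, and the usable-capacity estimate simply divides raw capacity by the replica count, ignoring overhead and the other pools.

```shell
#!/bin/sh
provisioned_mib=10240   # rbd create --size 10240
used_mib=314            # USED for pool 'rbd' in `ceph df`
raw_gib=24              # GLOBAL SIZE in `ceph df`
replicas=3              # pool 'rbd' is "replicated size 3"

# Rough upper bound on data the pool could hold (assumption: no overhead,
# no competing pools).
usable_mib=$((raw_gib * 1024 / replicas))

if [ "$provisioned_mib" -gt "$usable_mib" ]; then
    echo "over-provisioned: ${provisioned_mib} MiB promised, at most ${usable_mib} MiB available"
fi
echo "allocated so far: ${used_mib} MiB, about $((used_mib * 100 / provisioned_mib))% of the image size"
```

On a live cluster, `rbd du image01` reports the provisioned and used size of the image directly. As for the open question, raw capacity grows when more OSDs are added to the cluster.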

References

https://www.jianshu.com/p/712e58d36a77

https://www.cnblogs.com/zhrx/p/16098075.html