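```python
import requests

url = "https://www.sogou.com/"  # replace with the URL of the site you want to visit

for i in range(20):
    # A timeout keeps the loop from hanging if the server stops responding.
    response = requests.get(url, timeout=10)
    print(f"Request {i + 1}:")
    print("Status code:", response.status_code)
    print("Text:", response.text)
    print("Text length:", len(response.text))        # characters in the decoded str
    print("Content length:", len(response.content))  # bytes in the raw body
    print()
```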

Response attributes from crawling the Sogou homepage

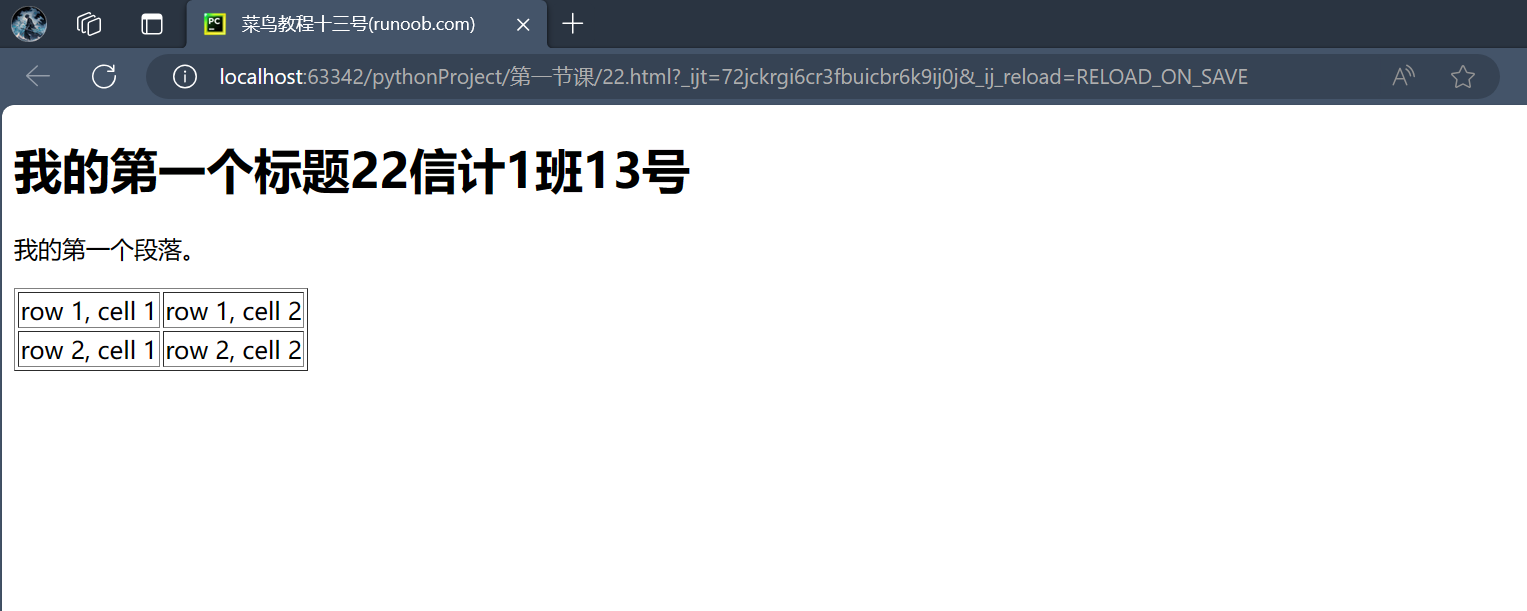
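The script prints two different lengths because `response.text` is a decoded `str`, while `response.content` is the raw `bytes` body. The following is a minimal offline sketch of that difference; the helper name `text_and_content_lengths` and the sample string are illustrative assumptions, not part of the original script, and UTF-8 is assumed as the encoding.

```python
def text_and_content_lengths(body, encoding="utf-8"):
    """Return (number of characters, number of bytes) for a raw body.

    `body` stands in for response.content; decoding it mimics response.text.
    """
    text = body.decode(encoding)
    return len(text), len(body)


# Hypothetical sample body: four Chinese characters encoded as UTF-8.
sample = "搜狗搜索".encode("utf-8")
chars, raw = text_and_content_lengths(sample)
print(chars, raw)  # 4 12: each of these characters takes 3 bytes in UTF-8
```

For pages that are mostly ASCII the two numbers are close; the gap grows with the share of multi-byte characters.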

```python
from bs4 import BeautifulSoup

html_doc = """<!DOCTYPE html>
<html>
<head>
<meta charset="utf-8">
<title>菜鸟教程十三号(runoob.com)</title>
</head>
<body>
    <h1>我的第一个标题</h1>
    <p id="first">我的第一个段落。</p>
</body>
    <table border="1">
        <tr>
            <td>row 1, cell 1</td>
            <td>row 1, cell 2</td>
        </tr>
        <tr>
            <td>row 2, cell 1</td>
            <td>row 2, cell 2</td>
        </tr>
    </table>
</html>"""

soup = BeautifulSoup(html_doc, 'html.parser')

# Print the <head> element.
print(soup.head)

# Print the <body> element.
body = soup.body
print(body)

# Find the tag whose id is "first".
first_tag = soup.find(id="first")
print(first_tag)

# Keep only characters in the CJK Unified Ideographs range (U+4E00 to U+9FFF).
chinese_chars = [char for char in soup.text if '\u4e00' <= char <= '\u9fff']
print(''.join(chinese_chars))
```

Parsing with the bs4 library

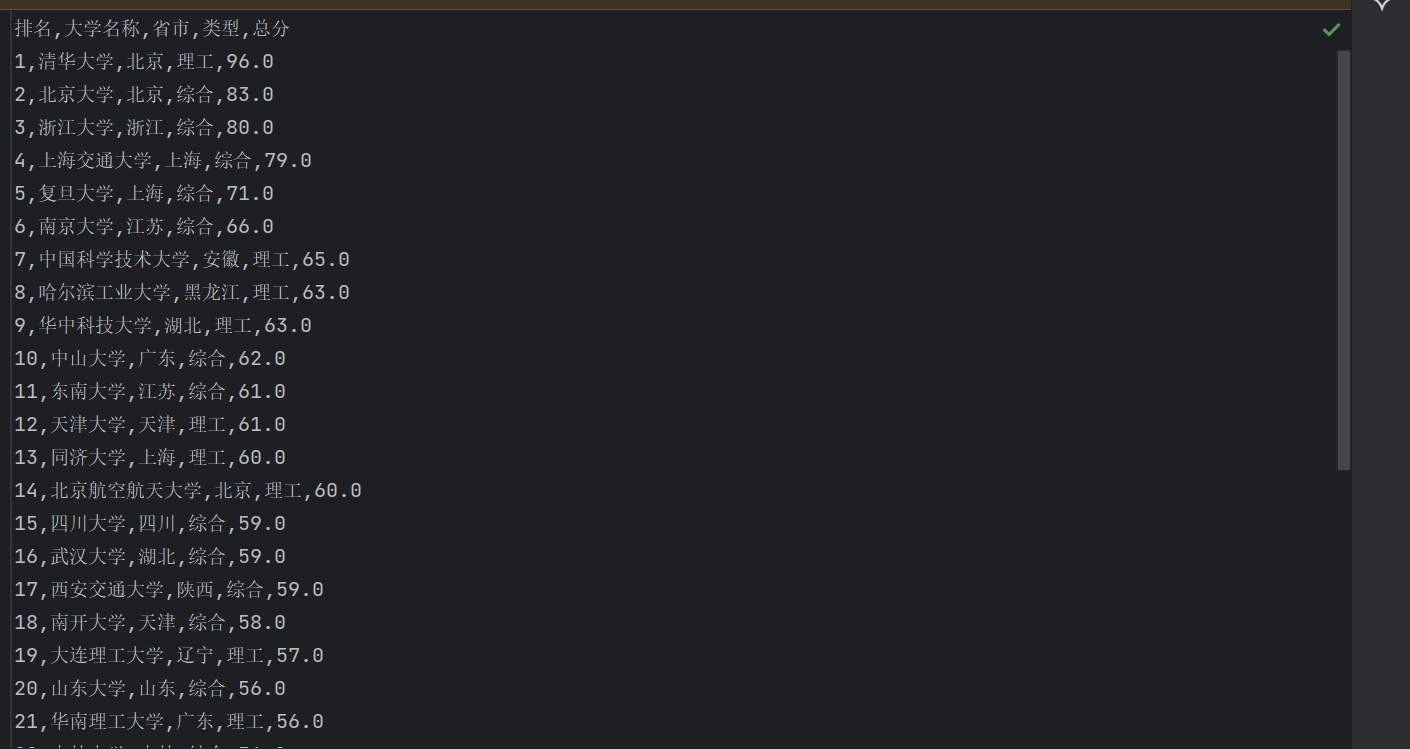
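The last step of the bs4 script filters the page text by Unicode range. The sketch below isolates that filter so it can be tried without bs4; the function name `extract_chinese` is a hypothetical helper, and the range U+4E00 to U+9FFF is the one used in the script, which covers the basic CJK ideographs but not punctuation such as `。`.

```python
def extract_chinese(text):
    """Return only the characters of `text` in the range U+4E00 to U+9FFF."""
    return "".join(ch for ch in text if "\u4e00" <= ch <= "\u9fff")


# The title string from the sample HTML above.
title = "菜鸟教程十三号(runoob.com)"
print(extract_chinese(title))  # 菜鸟教程十三号
```

Because the comparison is a plain range check, Latin letters, digits, and both ASCII and full-width punctuation are all dropped.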

```python
import csv

import requests
from bs4 import BeautifulSoup


def getHTMLText(url):
    """Fetch a page and return its decoded text, or None on failure."""
    try:
        r = requests.get(url, timeout=30)
        r.raise_for_status()
        r.encoding = r.apparent_encoding
        return r.text
    except requests.RequestException:
        print("Crawl failed")
        return None


def fillUnivList(ulist, html):
    """Append [rank, name, province/city, type, score] rows to ulist."""
    soup = BeautifulSoup(html, 'html.parser')
    table = soup.find('table', class_='rk-table')
    if table is None:
        print("Ranking table not found")
        return
    tbody = table.find('tbody')
    if tbody is None:
        print("<tbody> tag not found")
        return
    for tr in tbody.find_all('tr'):
        tds = tr.find_all('td')
        if len(tds) < 5:  # skip incomplete rows
            continue
        name_link = tds[1].find('a')
        if name_link is None:  # skip rows without a university link
            continue
        # .text is always a str, whereas .string is None for nested tags.
        ulist.append([
            tds[0].text.strip(),
            name_link.text.strip(),
            tds[2].text.strip(),
            tds[3].text.strip(),
            tds[4].text.strip(),
        ])


def printUnivList(ulist, num):
    """Write the first num rows to a CSV file and print them."""
    file_name = "大学排行.csv"
    with open(file_name, 'w', newline='', encoding='utf-8') as f:
        writer = csv.writer(f)
        writer.writerow(["Rank", "University", "Province/City", "Type", "Score"])
        # Slicing avoids an IndexError when fewer than num rows were scraped.
        for u in ulist[:num]:
            writer.writerow(u)
            print(f"Rank: {u[0]}\tUniversity: {u[1]}\tProvince/City: {u[2]}\tType: {u[3]}\tScore: {u[4]}")


def main():
    ulist = []
    url = 'https://www.shanghairanking.cn/rankings/bcur/201611'
    html = getHTMLText(url)
    if html is not None:
        fillUnivList(ulist, html)
        printUnivList(ulist, 30)


if __name__ == "__main__":
    main()
```

Crawling the 2016 university rankings
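The CSV step in `printUnivList` can be exercised without touching the network. The sketch below writes to an in-memory buffer instead of a file; `write_top_rows`, the English header, and the two sample rows are placeholders invented for illustration, not real ranking data. It also shows one way to cope with a scrape that returns fewer rows than requested.

```python
import csv
import io

HEADER = ["Rank", "University", "Province/City", "Type", "Score"]


def write_top_rows(rows, num, out):
    """Write the header plus at most `num` rows to `out`; return the row count."""
    writer = csv.writer(out)
    writer.writerow(HEADER)
    top = rows[:num]  # slicing never raises, even when len(rows) < num
    writer.writerows(top)
    return len(top)


# Placeholder rows, not real ranking data.
sample_rows = [
    ["1", "University A", "Province X", "Comprehensive", "95.0"],
    ["2", "University B", "Province Y", "Engineering", "90.5"],
]

buffer = io.StringIO()
written = write_top_rows(sample_rows, 30, buffer)
print(written)  # 2, although 30 rows were requested
print(buffer.getvalue())
```

Passing an open file object instead of the `StringIO` buffer gives the same output on disk; opening that file with `newline=''`, as the script does, keeps the `csv` module from writing blank lines between rows on Windows.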