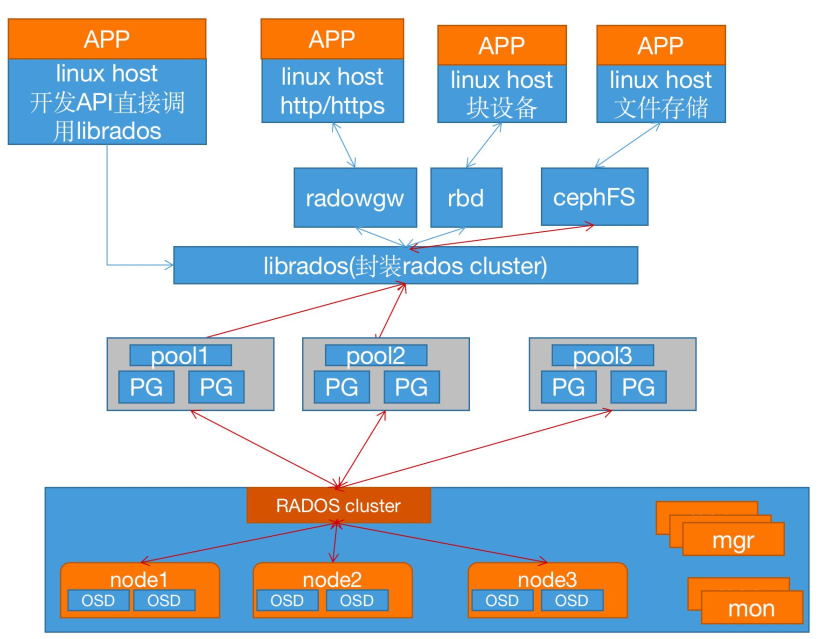

1.梳理ceph的组件关系

1.1 ceph介绍

Ceph是一个开源的分布式存储系统,同时支持对象存储、块设备、文件系统。

ceph支持EB(1EB=1,000,000,00OGB)级别的数据存储, ceph把每一个待管理的数据流(文件等数据)切分为一到多个固定大小(默认4兆)的对象数据,并以其为原子单元(原子是构成元素的最小单元)完成数据的读写。

ceph 的底层存储服务是由多个存储主机(host)组成的存储集群,该集群也被称之为RADOS(reliable automatic distributed object store)存储集群,即可靠的、自动化的、分布式的对象存储系统。

librados是 RADOS存储集群的API,支持C/C++/JAVApython/ruby/php/go等编程语言客户端。

1.2 ceph集群的组成部分

https://docs.ceph.com/en/latest/start/intro/

LIBRADOS、RADOSGW、RBD和Ceph FS 统称为Ceph 客户端接口,RADOSGW、RBD、Ceph FS是基于LIBRADOS提供的多编程语言接口开发的。

一个ceph集群的组成部分:

- 若干的Ceph OSD(对象存储守护程序)

- 至少需要一个 Ceph Monitors 监视器(1,3,5,7...)

- 两个或以上的Ceph 管理器managers

- 运行Ceph 文件系统客户端时还需要高可用的Ceph Metadata Server(文件系统元数据服务器)

- RADOS cluster:由多台host存储服务器组成的ceph集群

- OSD(Object Storage Daemon):每台存储服务器的磁盘组成的存储空间

- Mon(Monitor)::ceph的监视器,维护OSD和PG的集群状态,一个ceph集群至少要有一个mon,可以是一三五七等等这样的奇数个。

- Mgr(Manager):负责跟踪运行时指标和Ceph集群的当前状态,包括存储利用率,当前性能指标和系统负载等.

1.3 Monitor(ceph-mon)ceph监视器

在一个主机上运行的一个守护进程,用于维护集群状态的映射(maintains maps of the cluster state),比如ceph集群中有多少存储池、每个存储池有多少PG以及存储池和PG的映射关系等,monitor map,manager map,the OSD map,the MDS map,and the CRUSH map,这些映射是ceph守护程序相互协调所需的关键集群状态,此外监视器还负责管理守护程序和客户端之间的身份验证(认证使用cephX协议),通常至少需要三个监视器才能实现冗余和高可用性。

1.4 Managers(ceph-mgr)

在一个主机上运行的一个守护进程,Ceph Manager守护程序(ceph-mgr)负责跟踪运行时指标和Ceph集群的当前状态,包括存储利用率,当前性能指标和系统负载。CephManager守护程序还托管基于python的模块来管理和公开Ceph集群信息,包括基于Web的Ceph仪表板和REST API,高可用性通常至少需要两个管理器。

1.5 Ceph OSDs(对象存储守护程序ceph-osd)

提供存储数据,操作系统上的一个磁盘就是一个OSD守护程序,OSD用于处理ceph集群数据复制,恢复,重新平衡,并通过检查其他Ceph OSD守护程序的心跳来向Ceph监视器和管理器提供一些监视信息。通常至少需要3个Ceph OSD才能实现冗余和高可用性。

1.6 MDS(ceph元数据服务器ceph-mds)

代表ceph文件系统(NFS/CIFS)存储元数据,即Ceph块设备和Ceph对象存储不使用MDS

1.7 Ceph 的管理节点

- ceph的常用管理接口是一组命令行工具程序,例如rados、ceph、rbd等命令,ceph管理员可以从某个特定的ceph-mon节点执行管理操作

- 推荐使用部署专用的管理节点对ceph进行配置管理、升级与后期维护,方便后期权限管理,管理节点的权限只对管理人员开放,可以避免一些不必要的误操作的发生.

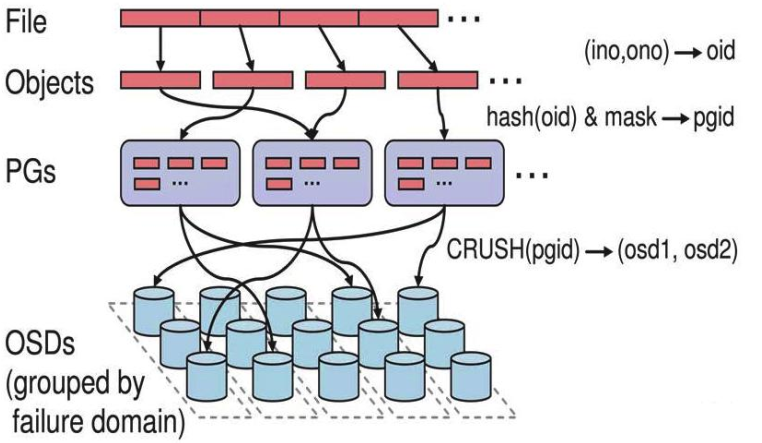

1.8 ceph逻辑组织架构

- Pool: 存储池、分区,存储池的大小取决于底层的存储空间。

- PG(placement group):一个 pool 内部可以有多个PG存在, pool和PG都是抽象的逻辑概念,一个pool中有多少个PG可以通过公式计算。

- OSD(Object Storage Daemon,对象存储设备):每一块磁盘都是一个 osd, 一个主机由一个或多个 osd 组成.

- ceph 集群部署好之后,要先创建存储池才能向ceph写入数据,文件在向ceph保存之前要先进行一致性hash计算,计算后会把文件保存在某个对应的PG的,此文件一定属于某个pool的一个PG,在通过PG保存在OSD上。数据对象在写到主 OSD 之后再同步对从OSD以实现数据的高可用。

存储文件过程:

-

第一步:计算文件到对象的映射

计算文件到对象的映射,假如file为客户端要读写的文件,得到oid(object id) = ino + onoino: inode number (INO),File的元数据序列号,File的唯一id

ono:object number(ONO),File 切分产生的某个object的序号,默认以4M切分一个块大小 -

第二步:通过hash 算法计算出文件对应的 pool中的PG

通过一致性HASH计算Object到PG, Object -> PG 映射hash(oid)& mask-> pgid -

第三步:通过CRUSH 把对象映射到PG中的OSD

通过CRUSH算法计算PG到OSD,PG ->OSD 映射:[CRUSH(pgid)->(osd1,osd2,osd3)]在线进制转换: https://tool.oschina.net/hexconvert

64-1=63(0~63=累计64个)

1100100=100(对象的 hash值) 100&64=36,200&64=8

0111111=63(PG总数)

——————————

0100100=36(与运算结果)

-

第四步:PG中的主OSD将对象写入到硬盘

-

第五步:主OSD将数据同步给备份OSD,并等待备份OSD返回确认

-

第六步:主OSD将写入完成返回给客户端

2.基于ceph-deploy部署ceph 16.2.x 单节点mon和mgr环境

https://docs.ceph.com/en/latest/releases/

2.1 环境准备

操作系统推荐:https://docs.ceph.com/en/latest/start/os-recommendations/

2.1.1 Ceph分布式存储集群规划原则/目标

2.1.2 服务器硬件选型

官方硬件推荐:https://docs.ceph.com/en/latest/start/hardware-recommendations/

-

monitor 、 mgr、 radosgw

4C/8G-16G(小型,专用虚拟机)8C/16G-32G(中型,专用虚拟机)

16C-32C/32G-64G(大型/超大型,专用物理机)

-

MDS(相对配置更高一个等级)

8C/8G-16G(小型,专用虚拟机)16C/16G-32G(中型,专用虚拟机)

32C-64C/64G-96G(大型、超大型,物理机)

-

OSD 节点CPU

每个OSD进程至少有一个CPU核心或以上,比如服务器一共2颗CPU每个12核心24线程,那么服务器总计有48核心CPU,这样最多最多最多可以放48块磁盘.

(物理CPU数量*每颗CPU核心)/OSD磁盘数量=X/每OSD CPU核心>=1核心CPU比如:(2颗*每颗24核心)/24 OSD磁盘数量=2/每 OSD CPU核心>=1核心CPU

-

OSD节点内存

OSD硬盘空间在2T或以内的时候每个硬盘2G内存,4T的空间每个OSD磁盘4G内存,即大约每1T的磁盘空间(最少)分配1G的内存空间做数据读写缓存.

(总内存/OSD磁盘总空间)=X>1G内存

比如:(总内存128G/36T磁盘总空间)=3G/每T>1G内存

2.1.3 集群环境

操作系统为:Ubuntu20.04;网卡为:双网卡,集群地址与公共网络地址

1个部署节点:

10.0.0.50/192.168.10.50

3台mon(2c4g)监视服务器:

10.0.0.51/192.168.10.51

10.0.0.52/192.168.10.52

10.0.0.53/192.168.10.53

2台mgr(2c4g)管理服务器:

10.0.0.54/192.168.10.54

10.0.0.55/192.168.10.55

4台node(2c4g)存储服务器,外接5块硬盘:

10.0.0.56/192.168.10.56

10.0.0.57/192.168.10.57

10.0.0.58/192.168.10.58

10.0.0.59/192.168.10.59

2.2 安装ceph-deploy节点

注意:

Ubuntu20.04 默认python3.8因python3.8去掉了一些函数,所以在用

sudo apt-get install ceph-deploy 安装ceph-deploy版本是 2.0.1用ceph-deploy 2.0.1安装

ceph-deploy new hostName时会报module 'platform' has no attribute 'linux_distribution' 错误!ceph-deploy 源码现版本已到2.1. 0,可以

git clone https://github.com/ceph/ceph-deploy.git 下载源码来安装

2.2.1 安装ceph-deploy 2.1.0

方法一:pip安装

[root@ceph-deploy ~]#apt update

[root@ceph-deploy ~]#apt install python3-pip sshpass ansible -y

[root@ceph-deploy ~]#pip3 install git+https://github.com/ceph/ceph-deploy.git

方法二:源码安装

若网络不好,无法git clone安装时,可通过下载源码方式安装

[root@ceph-deploy ~]#apt update

[root@ceph-deploy ~]#apt install python3-pip sshpass ansible -y

[root@ceph-deploy ~]#cd /opt/

# 可通过浏览器方式下载,上传至服务器

[root@ceph-deploy opt]#wget https://github.com/ceph/ceph-deploy/archive/refs/tags/v2.1.0.zip

[root@ceph-deploy opt]#unzip ceph-deploy-2.1.0.zip

[root@ceph-deploy opt]#cd ceph-deploy-2.1.0/

[root@ceph-deploy ceph-deploy-2.1.0]#python3 setup.py install

# 若提示remoto版本不符合要求导致无法安装ceph-deploy时,先可以通过清华源安装升级remoto,再执行python3 setup.py install

# remoto错误提示:pkg_resources.DistributionNotFound: The 'remoto>=1.1.4' distribution was not found and is required by ceph-deploy

pip install -i https://pypi.tuna.tsinghua.edu.cn/simple remoto

查看版本

[root@ceph-deploy ~]#ceph-deploy --version

2.1.0

2.2.2 系统环境初始化

- 设置ansible免密登录

配置ansible主机清单

[root@ceph-deploy ~]#cat /etc/ansible/hosts

[ceph]

10.0.0.50

10.0.0.51

10.0.0.52

10.0.0.53

10.0.0.54

10.0.0.55

10.0.0.56

10.0.0.57

10.0.0.58

10.0.0.59

设置免密登录

# 生成密钥

ssh-keygen

# 推送密钥至主机

#!/bin/bash

for i in {50..59};

do

sshpass -p '123456' ssh-copy-id -o "StrictHostKeyChecking=no" -i /root/.ssh/id_rsa.pub 10.0.0.$i;

done

-

时间同步

-

关闭selinux和防火墙(CentOS)

-

配置主机域名解析或通过DNS解析

ansible 'ceph' -m shell -a "echo '10.0.0.50 ceph-deploy' >>/etc/hosts" ansible 'ceph' -m shell -a "echo '10.0.0.51 ceph-mon1' >>/etc/hosts" ansible 'ceph' -m shell -a "echo '10.0.0.52 ceph-mon2' >>/etc/hosts" ansible 'ceph' -m shell -a "echo '10.0.0.53 ceph-mon3' >>/etc/hosts" ansible 'ceph' -m shell -a "echo '10.0.0.54 ceph-mgr1' >>/etc/hosts" ansible 'ceph' -m shell -a "echo '10.0.0.55 ceph-mgr2' >>/etc/hosts" ansible 'ceph' -m shell -a "echo '10.0.0.56 ceph-node1' >>/etc/hosts" ansible 'ceph' -m shell -a "echo '10.0.0.57 ceph-node2' >>/etc/hosts" ansible 'ceph' -m shell -a "echo '10.0.0.58 ceph-node3' >>/etc/hosts" ansible 'ceph' -m shell -a "echo '10.0.0.59 ceph-node4' >>/etc/hosts" # 也可所有节点直接编辑 cat >>/etc/hosts <<EOF 10.0.0.50 ceph-deploy 10.0.0.51 ceph-mon1 10.0.0.52 ceph-mon2 10.0.0.53 ceph-mon3 10.0.0.54 ceph-mgr1 10.0.0.55 ceph-mgr2 10.0.0.56 ceph-node1 10.0.0.57 ceph-node2 10.0.0.58 ceph-node3 10.0.0.59 ceph-node4 EOF

-

设置系统镜像源

cat >> /etc/apt/sources.list <<EOF # 清华源 deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal main restricted universe multiverse # deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal main restricted universe multiverse deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-updates main restricted universe multiverse # deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-updates main restricted universe multiverse deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-backports main restricted universe multiverse # deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-backports main restricted universe multiverse deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-security main restricted universe multiverse # deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-security main restricted universe multiverse EOF # 更新 apt update -

配置ceph镜像源

# 支持https镜像仓库源 ansible 'ceph' -m shell -a "apt install -y apt-transport-https ca-certificates curl software-properties-common" # 导入key ansible 'ceph' -m shell -a "wget -q -O- 'https://mirrors.tuna.tsinghua.edu.cn/ceph/keys/release.asc' | apt-key add -" # 添加ceph镜像源 ansible 'ceph' -m shell -a "echo 'deb https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific/ focal main' >> /etc/apt/sources.list && apt update" -

创建普通用户cephadmin

推荐使用指定的普通用户部署和运行ceph 集群,普通用户只要能以非交互方式执行sudo命令执行一些特权命令即可,新版的ceph-deploy可以指定包含root的在内只要可以执行sudo命令的用户,不过仍然推荐使用普通用户,ceph集群安装完成后会自动创建ceph用户(ceph集群畎认会使用ceph用户运行各服务进程,如ceph-osd等),因此推荐使用除了ceph 用户之外的比如cephuser、cephadmin这样的普通用户去部署和管理ceph集群。

cephadmin仅用于通过ceph-deploy部署和管理ceph集群的时候使用,比如首次初始化集群和部署集群、添加节点、删除节点等,ceph 集群在node节点、mgr等节点会使用ceph用户启动服务进程。

在包含ceph-deploy节点的存储节点、mon节点和 mgr节点等创建cephadmin 用户。

# 创建cephadmin用户

ansible 'ceph' -m shell -a "groupadd -r -g 2088 cephadmin && useradd -r -m -s /bin/bash -u 2088 -g 2088 cephadmin && echo 'cephadmin:123456'|chpasswd"

# 允许cephadmin用户以sodu执行特权命令

ansible 'ceph' -m shell -a "echo 'cephadmin ALL=(ALL) NOPASSWD: ALL' >> /etc/sudoers"

验证测试

# 用户创建测试

[root@ceph-deploy ~]#ansible 'ceph' -m shell -a "id cephadmin"

10.0.0.52 | CHANGED | rc=0 >>

uid=2088(cephadmin) gid=2088(cephadmin) groups=2088(cephadmin)

10.0.0.54 | CHANGED | rc=0 >>

uid=2088(cephadmin) gid=2088(cephadmin) groups=2088(cephadmin)

10.0.0.53 | CHANGED | rc=0 >>

uid=2088(cephadmin) gid=2088(cephadmin) groups=2088(cephadmin)

10.0.0.51 | CHANGED | rc=0 >>

uid=2088(cephadmin) gid=2088(cephadmin) groups=2088(cephadmin)

10.0.0.50 | CHANGED | rc=0 >>

uid=2088(cephadmin) gid=2088(cephadmin) groups=2088(cephadmin)

10.0.0.55 | CHANGED | rc=0 >>

uid=2088(cephadmin) gid=2088(cephadmin) groups=2088(cephadmin)

10.0.0.57 | CHANGED | rc=0 >>

uid=2088(cephadmin) gid=2088(cephadmin) groups=2088(cephadmin)

10.0.0.56 | CHANGED | rc=0 >>

uid=2088(cephadmin) gid=2088(cephadmin) groups=2088(cephadmin)

10.0.0.58 | CHANGED | rc=0 >>

uid=2088(cephadmin) gid=2088(cephadmin) groups=2088(cephadmin)

10.0.0.59 | CHANGED | rc=0 >>

uid=2088(cephadmin) gid=2088(cephadmin) groups=2088(cephadmin)

- 配置cephadmin免密登录在deploy节点以cephadmin用户配置秘钥分发

[root@ceph-deploy ~]#su - cephadmin

cephadmin@ceph-deploy:~$ ssh-keygen

ssh-copy-id cephadmin@10.0.0.50

ssh-copy-id cephadmin@10.0.0.51

ssh-copy-id cephadmin@10.0.0.52

ssh-copy-id cephadmin@10.0.0.53

ssh-copy-id cephadmin@10.0.0.54

ssh-copy-id cephadmin@10.0.0.55

ssh-copy-id cephadmin@10.0.0.56

ssh-copy-id cephadmin@10.0.0.57

ssh-copy-id cephadmin@10.0.0.58

ssh-copy-id cephadmin@10.0.0.59

[root@ceph-deploy ~]#su - cephadmin

cephadmin@ceph-deploy:~$ ssh-keygen

# 执行以下脚本

cephadmin@ceph-deploy:~$ cat ssh-keygen-cephadmin-push.sh

#!/bin/bash

for i in {50..59};

do

sshpass -p '123456' ssh-copy-id -o "StrictHostKeyChecking=no" -i /home/cephadmin/.ssh/id_rsa.pub cephadmin@10.0.0.$i;

done

2.3 初始化mon节点

2.3.1 各节点安装python2.7

cephadmin@ceph-deploy:~$ sudo ansible 'ceph' -m shell -a 'apt install -y python2.7'

cephadmin@ceph-deploy:~$ sudo ansible 'ceph' -m shell -a "sudo ln -sv /usr/bin/python2.7 /usr/bin/python2"

2.3.2 在管理节点初始化mon1节点

cephadmin@ceph-deploy:~$ sudo mkdir -p /data/ceph-cluster

cephadmin@ceph-deploy:~$ sudo chown -R cephadmin:cephadmin /data/

cephadmin@ceph-deploy:~$ cd /data/ceph-cluster

cephadmin@ceph-deploy:/data/ceph-cluster$ ceph-deploy new --cluster-network 192.168.10.0/24 --public-network 10.0.0.0/24 ceph-mon1

验证初始化

cephadmin@ceph-deploy:/data/ceph-cluster$ ll

total 12

drwxr-xr-x 2 cephadmin cephadmin 75 Sep 21 12:25 ./

drwxr-xr-x 3 cephadmin cephadmin 26 Sep 21 12:12 ../

-rw-rw-r-- 1 cephadmin cephadmin 3424 Sep 21 12:25 ceph-deploy-ceph.log # 自动生成的配置文件

-rw-rw-r-- 1 cephadmin cephadmin 259 Sep 21 12:25 ceph.conf # 初始化日志

-rw------- 1 cephadmin cephadmin 73 Sep 21 12:25 ceph.mon.keyring # 用于ceph mon节点内部通讯认证的秘钥环文件

# ceph配置文件

cephadmin@ceph-deploy:/data/ceph-cluster$ cat ceph.conf

[global]

fsid = 28820ae5-8747-4c53-827b-219361781ada # ceph集群ID

public_network = 10.0.0.0/24 # 公网地址

cluster_network = 192.168.10.0/24 # 集群内部地址

mon_initial_members = ceph-mon1 # mon服务器,可以添加多个mon节点,用逗号做分割

mon_host = 10.0.0.51

auth_cluster_required = cephx

auth_service_required = cephx

auth_client_required = cephx

2.3.3 ceph-deploy用法

ceph-deploy -h

usage: ceph-deploy [-h] [-v | -q] [--version] [--username USERNAME] [--overwrite-conf] [--ceph-conf CEPH_CONF] COMMAND ...

Easy Ceph deployment

-^-

/ \

|O o| ceph-deploy v2.1.0

).-.(

'/|||\`

| '|` |

'|`

Full documentation can be found at: http://ceph.com/ceph-deploy/docs

optional arguments:

-h, --help show this help message and exit

-v, --verbose be more verbose

-q, --quiet be less verbose

--version the current installed version of ceph-deploy

--username USERNAME the username to connect to the remote host

--overwrite-conf overwrite an existing conf file on remote host (if present)

--ceph-conf CEPH_CONF

use (or reuse) a given ceph.conf file

commands:

COMMAND description

new 开始部署一个新的ceph集群,并生成cluster.conf集群配置文件和keyring认证文件

install 在远程主机上安装ceph相关软件包

mds 管理MGR守护程序(ceph-mgr,Ceph Manager DaemonCeph管理器守护程序)

mgr 管理MDS守护程序(Ceph Metadata Server,ceph源数据服务器)

mon 管理MON守护程序(ceph-mon,ceph 监视器)

rgw 管理RGW守护程序(RADOSGW,对象存储网关)

gatherkeys 从指定获取提供新节点的验证 keys,这些 keys会在添加新的MON/OSD/MD加入的时候使用

disk 管理远程主机磁盘。

osd 在远程主机准备数据磁盘,即将指定远程主机的指定磁盘添加到ceph集群作为osd使用

admin 推送ceph 集群配置文件和 client.admin 认证文件到远程主机

config 将ceph.conf 配置文件推送到远程主机或从远程主机拷贝

repo 远程主机仓库管理

purge 删除远端主机的安装包和所有数据

purgedata 从/var/lib/ceph 删除ceph 数据,会删除/etc/ceph 下的内容

uninstall 从远端主机删除安装包

forgetkeys 从本地主机删除所有的验证 keyring,包括client.admin, monitor, bootstrap等认证文件

pkg 管理远端主机的安装包

See 'ceph-deploy <command> --help' for help on a specific command

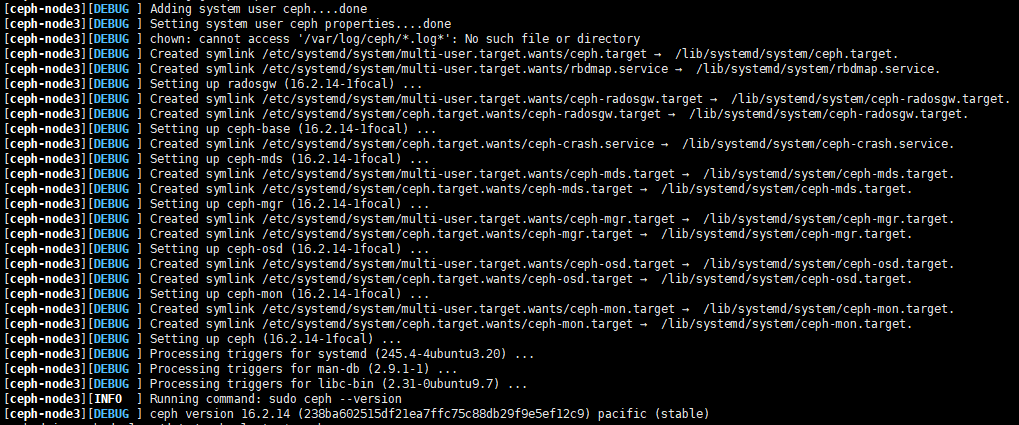

2.4 初始化node节点

初始化存储节点等于在存储节点安装了ceph 及 ceph-rodsgw安装包,但是使用默认的官方仓库会因为网络原因导致初始化超时,因此各存储节点推荐修改ceph 仓库为阿里或者清华等国内的镜像源。检查各节点是否配置清华ceph镜像源(Ubuntu20.04,ceph16)

cephadmin@ceph-deploy:/data/ceph-cluster$ cat /etc/apt/sources.list

# 清华源

deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal main restricted universe multiverse

# deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal main restricted universe multiverse

deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-updates main restricted universe multiverse

# deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-updates main restricted universe multiverse

deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-backports main restricted universe multiverse

# deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-backports main restricted universe multiverse

deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-security main restricted universe multiverse

# deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-security main restricted universe multiverse

deb https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific/ focal main # ceph源

先初始化三个node节点

ceph-deploy install --no-adjust-repos --nogpgcheck ceph-node1 ceph-node2 ceph-node3

初始化完成

2.5 安装ceph-mon服务

在mon节点安装组件ceph-mon服务,并通过初始化mon节点,mon节点HA还可以后期横向扩容

2.5.1 安装ceph-mon

cephadmin@ceph-mon1:/$ apt-cache madison ceph-mon

...

cephadmin@ceph-mon1:/$ sudo apt install -y ceph-mon

cephadmin@ceph-mon2:/$ sudo apt install -y ceph-mon

cephadmin@ceph-mon3:/$ sudo apt install -y ceph-mon

2.5.2 ceph集群添加ceph-mon服务

cephadmin@ceph-deploy:/data/ceph-cluster$ ceph-deploy mon create-initial

2.5.3 验证mon节点

验证在mon定节点已经自动安装并启动了ceph-mon服务,并且后期在ceph-deploy节点初始化目录会生成一些bootstrap ceph mds/mgr/osd/rgw等服务的 keyring认证文件,这些初始化文件拥有对ceph 集群的最高权限,所以一定要保存好.

cephadmin@ceph-mon1:/$ ps -ef|grep ceph-mon

ceph 21579 1 0 02:58 ? 00:00:00 /usr/bin/ceph-mon -f --cluster ceph --id ceph-mon1 --setuser ceph --setgroup ceph

cephadm+ 22131 16106 0 02:59 pts/0 00:00:00 grep --color=auto ceph-mon

2.6 分发admin秘钥

在ceph-deploy节点把配置文件和admin密钥拷贝至Ceph集群需要执行ceph管理命令的节点,从而不需要后期通过ceph命令对ceph集群进行管理配置的时候每次都需要指定ceph-mon节点地址和 ceph.client.admin.keyring 文件,另外各ceph-mon节点也需要同步ceph 的集群配置文件与认证文件.

2.6.1 安装ceph-common

如果在ceph-deploy节点管理集群,需先安装ceph公共组件

cephadmin@ceph-deploy:/data/ceph-cluster$ sudo apt install -y ceph-common

说明:node节点在初始时已经完成安装

2.6.2分发秘钥给node节点

ceph-deploy admin ceph-node1 ceph-node2 ceph-node3

2.6.3 ceph node节点验证秘钥

node节点验证key文件

[root@ceph-node1 ~]#ls -l /etc/ceph/

total 12

-rw------- 1 root root 151 Sep 21 03:07 ceph.client.admin.keyring

-rw-r--r-- 1 root root 259 Sep 21 03:07 ceph.conf

-rw-r--r-- 1 root root 92 Aug 30 00:38 rbdmap

-rw------- 1 root root 0 Sep 21 03:07 tmplu8ns4a4

认证文件的属主和属组为了安全考虑,默认设置为root用户和root组,如果需要ceph用户也能执行ceph命令,就需要对ceph用户进行授权

# 安装acl,设置授权

[root@ceph-node1 ~]apt install acl -y

[root@ceph-node1 ~]#setfacl -m u:cephadmin:rw /etc/ceph/ceph.client.admin.keyring

[root@ceph-node2 ~]apt install acl -y

[root@ceph-node2 ~]#setfacl -m u:cephadmin:rw /etc/ceph/ceph.client.admin.keyring

[root@ceph-node3 ~]apt install acl -y

[root@ceph-node3 ~]#setfacl -m u:cephadmin:rw /etc/ceph/ceph.client.admin.keyring

2.7 部署mgr节点

2.7.1 mgr1节点安装ceph-mgr

cephadmin@ceph-mgr1:~$ sudo apt install -y ceph-mgr

2.7.2 初始化ceph-mgr1

cephadmin@ceph-deploy:/data/ceph-cluster$ ceph-deploy mgr create ceph-mgr1

2.7.3 验证ceph-mgr节点

cephadmin@ceph-mgr1:~$ ps -ef|grep ceph-mgr

ceph 24863 1 10 03:18 ? 00:00:03 /usr/bin/ceph-mgr -f --cluster ceph --id ceph-mgr1 --setuser ceph --setgroup ceph

cephadm+ 25079 18705 0 03:18 pts/0 00:00:00 grep --color=auto ceph-mgr

2.8 ceph-deploy管理ceph集群

# deploy节点安装,ceph-common用来执行ceph管理命令

cephadmin@ceph-deploy:/data/ceph-cluster$ apt install ceph-common -y

# 分发秘钥给自己

cephadmin@ceph-deploy:/data/ceph-cluster$ ceph-deploy admin ceph-deploy

# 授权cephadmin用户

cephadmin@ceph-deploy:/data/ceph-cluster$ sudo apt install acl -y

cephadmin@ceph-deploy:/data/ceph-cluster$ sudo setfacl -m u:cephadmin:rw /etc/ceph/ceph.client.admin.keyring

验证

cephadmin@ceph-deploy:/data/ceph-cluster$ ceph -s

cluster:

id: 28820ae5-8747-4c53-827b-219361781ada

health: HEALTH_WARN

mon is allowing insecure global_id reclaim # 需要禁用非安全模式通信

OSD count 0 < osd_pool_default_size 3 # 集群OSD数量小于3

services:

mon: 1 daemons, quorum ceph-mon1 (age 23m)

mgr: ceph-mgr1(active, since 4m)

osd: 0 osds: 0 up, 0 in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 0 B used, 0 B / 0 B avail

pgs:

# 禁用非安全模式通信

cephadmin@ceph-deploy:/data/ceph-cluster$ ceph config set mon auth_allow_insecure_global_id_reclaim false

cephadmin@ceph-deploy:/data/ceph-cluster$ ceph -s

cluster:

id: 28820ae5-8747-4c53-827b-219361781ada

health: HEALTH_WARN

OSD count 0 < osd_pool_default_size 3

services:

mon: 1 daemons, quorum ceph-mon1 (age 26m)

mgr: ceph-mgr1(active, since 6m)

osd: 0 osds: 0 up, 0 in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 0 B used, 0 B / 0 B avail

pgs:

# 查看集群版本

cephadmin@ceph-deploy:/data/ceph-cluster$ ceph versions

{

"mon": {

"ceph version 16.2.14 (238ba602515df21ea7ffc75c88db29f9e5ef12c9) pacific (stable)": 1

},

"mgr": {

"ceph version 16.2.14 (238ba602515df21ea7ffc75c88db29f9e5ef12c9) pacific (stable)": 1

},

"osd": {},

"mds": {},

"overall": {

"ceph version 16.2.14 (238ba602515df21ea7ffc75c88db29f9e5ef12c9) pacific (stable)": 2

}

}

2.9 初始化存储节点

OSD节点安装运行环境

cephadmin@ceph-deploy:/data/ceph-cluster$ ceph-deploy install --release pacific ceph-node1

cephadmin@ceph-deploy:/data/ceph-cluster$ ceph-deploy install --release pacific ceph-node2

cephadmin@ceph-deploy:/data/ceph-cluster$ ceph-deploy install --release pacific ceph-node3

使用ceph-deploy disk zap擦除各ceph node的ceph数据磁盘

ceph-deploy disk zap ceph-node1 /dev/sdb

ceph-deploy disk zap ceph-node1 /dev/sdc

ceph-deploy disk zap ceph-node1 /dev/sdd

ceph-deploy disk zap ceph-node1 /dev/sde

ceph-deploy disk zap ceph-node1 /dev/sdf

ceph-deploy disk zap ceph-node2 /dev/sdb

ceph-deploy disk zap ceph-node2 /dev/sdc

ceph-deploy disk zap ceph-node2 /dev/sdd

ceph-deploy disk zap ceph-node2 /dev/sde

ceph-deploy disk zap ceph-node2 /dev/sdf

ceph-deploy disk zap ceph-node3 /dev/sdb

ceph-deploy disk zap ceph-node3 /dev/sdc

ceph-deploy disk zap ceph-node3 /dev/sdd

ceph-deploy disk zap ceph-node3 /dev/sde

ceph-deploy disk zap ceph-node3 /dev/sdf

擦除过程

cephadmin@ceph-deploy:/data/ceph-cluster$ ceph-deploy osd create ceph-node3 --data /dev/sdf

[ceph_deploy.conf][DEBUG ] found configuration file at: /home/cephadmin/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.1.0): /usr/local/bin/ceph-deploy osd create ceph-node3 --data /dev/sdf

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] subcommand : create

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf object at 0x7f8f6fb6da90>

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.cli][INFO ] func : <function osd at 0x7f8f6fc150d0>

[ceph_deploy.cli][INFO ] data : /dev/sdf

[ceph_deploy.cli][INFO ] journal : None

[ceph_deploy.cli][INFO ] zap_disk : False

[ceph_deploy.cli][INFO ] fs_type : xfs

[ceph_deploy.cli][INFO ] dmcrypt : False

[ceph_deploy.cli][INFO ] dmcrypt_key_dir : /etc/ceph/dmcrypt-keys

[ceph_deploy.cli][INFO ] filestore : None

[ceph_deploy.cli][INFO ] bluestore : None

[ceph_deploy.cli][INFO ] block_db : None

[ceph_deploy.cli][INFO ] block_wal : None

[ceph_deploy.cli][INFO ] host : ceph-node3

[ceph_deploy.cli][INFO ] debug : False

[ceph_deploy.osd][DEBUG ] Creating OSD on cluster ceph with data device /dev/sdf

[ceph-node3][DEBUG ] connection detected need for sudo

[ceph-node3][DEBUG ] connected to host: ceph-node3

[ceph_deploy.osd][INFO ] Distro info: ubuntu 20.04 focal

[ceph_deploy.osd][DEBUG ] Deploying osd to ceph-node3

[ceph-node3][INFO ] Running command: sudo /usr/sbin/ceph-volume --cluster ceph lvm create --bluestore --data /dev/sdf

[ceph-node3][WARNIN] Running command: /usr/bin/ceph-authtool --gen-print-key

[ceph-node3][WARNIN] Running command: /usr/bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring -i - osd new 2ca3d89a-2b5c-45ff-94a4-c87960f94533

[ceph-node3][WARNIN] Running command: vgcreate --force --yes ceph-bcbe326f-53cc-48ea-bc0c-ab3d70422761 /dev/sdf

[ceph-node3][WARNIN] stdout: Physical volume "/dev/sdf" successfully created.

2.10 添加OSD

2.10.1 数据分类保存方式

Data: 即ceph保存的对象数据

Block: rocks DB数据即元数据

block: 数据库的wal日志

2.10.2 添加OSD

ceph-deploy osd create ceph-node1 --data /dev/sdb

ceph-deploy osd create ceph-node1 --data /dev/sdc

ceph-deploy osd create ceph-node1 --data /dev/sdd

ceph-deploy osd create ceph-node1 --data /dev/sde

ceph-deploy osd create ceph-node1 --data /dev/sdf

ceph-deploy osd create ceph-node2 --data /dev/sdb

ceph-deploy osd create ceph-node2 --data /dev/sdc

ceph-deploy osd create ceph-node2 --data /dev/sdd

ceph-deploy osd create ceph-node2 --data /dev/sde

ceph-deploy osd create ceph-node2 --data /dev/sdf

ceph-deploy osd create ceph-node3 --data /dev/sdb

ceph-deploy osd create ceph-node3 --data /dev/sdc

ceph-deploy osd create ceph-node3 --data /dev/sdd

ceph-deploy osd create ceph-node3 --data /dev/sde

ceph-deploy osd create ceph-node3 --data /dev/sdf

添加OSD过程

cephadmin@ceph-deploy:/data/ceph-cluster$ ceph-deploy osd create ceph-node3 --data /dev/sdf

[ceph_deploy.conf][DEBUG ] found configuration file at: /home/cephadmin/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.1.0): /usr/local/bin/ceph-deploy osd create ceph-node3 --data /dev/sdf

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] subcommand : create

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf object at 0x7fac27ef1dc0>

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.cli][INFO ] func : <function osd at 0x7fac27f1f0d0>

[ceph_deploy.cli][INFO ] data : /dev/sdf

[ceph_deploy.cli][INFO ] journal : None

[ceph_deploy.cli][INFO ] zap_disk : False

[ceph_deploy.cli][INFO ] fs_type : xfs

[ceph_deploy.cli][INFO ] dmcrypt : False

[ceph_deploy.cli][INFO ] dmcrypt_key_dir : /etc/ceph/dmcrypt-keys

[ceph_deploy.cli][INFO ] filestore : None

[ceph_deploy.cli][INFO ] bluestore : None

[ceph_deploy.cli][INFO ] block_db : None

[ceph_deploy.cli][INFO ] block_wal : None

[ceph_deploy.cli][INFO ] host : ceph-node3

[ceph_deploy.cli][INFO ] debug : False

[ceph_deploy.osd][DEBUG ] Creating OSD on cluster ceph with data device /dev/sdf

[ceph-node3][DEBUG ] connection detected need for sudo

[ceph-node3][DEBUG ] connected to host: ceph-node3

[ceph_deploy.osd][INFO ] Distro info: ubuntu 20.04 focal

[ceph_deploy.osd][DEBUG ] Deploying osd to ceph-node3

[ceph-node3][INFO ] Running command: sudo /usr/sbin/ceph-volume --cluster ceph lvm create --bluestore --data /dev/sdf

[ceph-node3][WARNIN] --> Device /dev/sdf is already prepared

[ceph-node3][INFO ] checking OSD status...

[ceph-node3][INFO ] Running command: sudo /bin/ceph --cluster=ceph osd stat --format=json

[ceph_deploy.osd][DEBUG ] Host ceph-node3 is now ready for osd use.

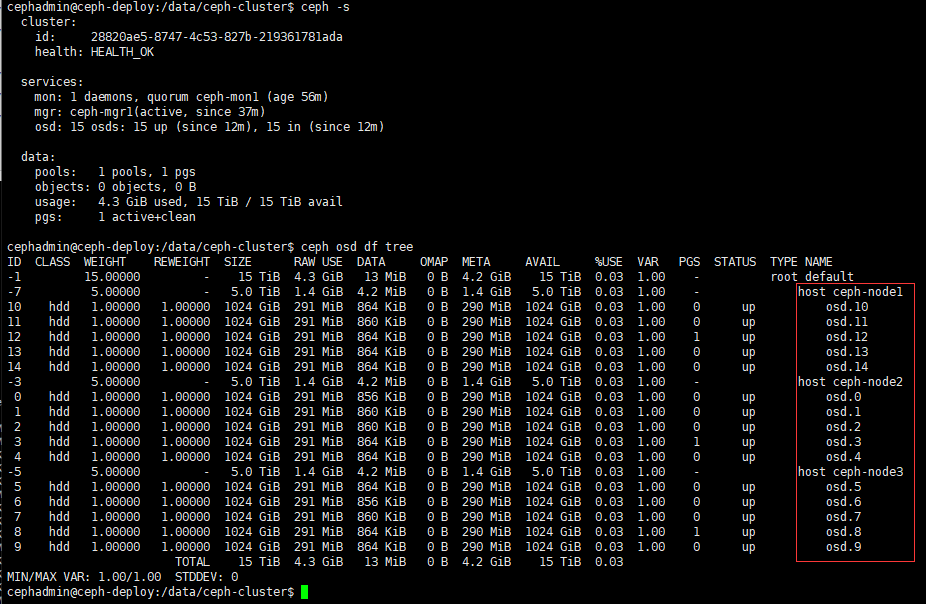

查看集群

cephadmin@ceph-deploy:/data/ceph-cluster$ ceph -s

cluster:

id: 28820ae5-8747-4c53-827b-219361781ada

health: HEALTH_OK

services:

mon: 1 daemons, quorum ceph-mon1 (age 47m)

mgr: ceph-mgr1(active, since 28m)

osd: 15 osds: 15 up (since 3m), 15 in (since 3m)

data:

pools: 1 pools, 1 pgs

objects: 0 objects, 0 B

usage: 4.3 GiB used, 15 TiB / 15 TiB avail

pgs: 1 active+clean

2.10.3 设置OSD服务自启动

默认就已经为自启动,node节点添加完成后,可以重启服务测试node服务器重启后,OSD是否会自动启动

ceph-node1

# 查看osd

[root@ceph-node1 ~]#ps -ef|grep osd

ceph 64063 1 0 03:42 ? 00:00:02 /usr/bin/ceph-osd -f --cluster ceph --id 10 --setuser ceph --setgroup ceph

ceph 66020 1 0 03:42 ? 00:00:02 /usr/bin/ceph-osd -f --cluster ceph --id 11 --setuser ceph --setgroup ceph

ceph 67976 1 0 03:42 ? 00:00:01 /usr/bin/ceph-osd -f --cluster ceph --id 12 --setuser ceph --setgroup ceph

ceph 69927 1 0 03:42 ? 00:00:01 /usr/bin/ceph-osd -f --cluster ceph --id 13 --setuser ceph --setgroup ceph

ceph 71885 1 0 03:42 ? 00:00:01 /usr/bin/ceph-osd -f --cluster ceph --id 14 --setuser ceph --setgroup ceph

# 设置开机自启动

systemctl enable ceph-osd@10 ceph-osd@11 ceph-osd@12 ceph-osd@13 ceph-osd@14

ceph-node2

[root@ceph-node2 ~]#ps -ef|grep osd

ceph 61919 1 0 03:37 ? 00:00:03 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph

ceph 63909 1 0 03:37 ? 00:00:03 /usr/bin/ceph-osd -f --cluster ceph --id 1 --setuser ceph --setgroup ceph

ceph 65861 1 0 03:37 ? 00:00:03 /usr/bin/ceph-osd -f --cluster ceph --id 2 --setuser ceph --setgroup ceph

ceph 67849 1 0 03:37 ? 00:00:03 /usr/bin/ceph-osd -f --cluster ceph --id 3 --setuser ceph --setgroup ceph

ceph 69789 1 0 03:38 ? 00:00:03 /usr/bin/ceph-osd -f --cluster ceph --id 4 --setuser ceph --setgroup cep

systemctl enable ceph-osd@0 ceph-osd@1 ceph-osd@2 ceph-osd@3 ceph-osd@4

ceph-node3

[root@ceph-node3 ~]#ps -ef|grep osd

ceph 140933 1 0 03:38 ? 00:00:04 /usr/bin/ceph-osd -f --cluster ceph --id 5 --setuser ceph --setgroup ceph

ceph 142908 1 1 03:39 ? 00:00:05 /usr/bin/ceph-osd -f --cluster ceph --id 6 --setuser ceph --setgroup ceph

ceph 144855 1 0 03:39 ? 00:00:03 /usr/bin/ceph-osd -f --cluster ceph --id 7 --setuser ceph --setgroup ceph

ceph 146814 1 0 03:39 ? 00:00:03 /usr/bin/ceph-osd -f --cluster ceph --id 8 --setuser ceph --setgroup ceph

ceph 148754 1 0 03:39 ? 00:00:03 /usr/bin/ceph-osd -f --cluster ceph --id 9 --setuser ceph --setgroup ceph

systemctl enable ceph-osd@5 ceph-osd@6 ceph-osd@7 ceph-osd@8 ceph-osd@9

查看集群osd状态

可看到node上osd的id

2.11 从RADOS移除OSD

Ceph集群中的一个OSD是一个node 节点的服务进程且对应于一个物理磁盘设备,是一个专用的守护进程.在某OSD设备出现故障,或管理员出于管理之需确实要移除特定的OSD设备时,需要先停止相关的守护进程,而后再进行移除操作。

移除步骤如下:

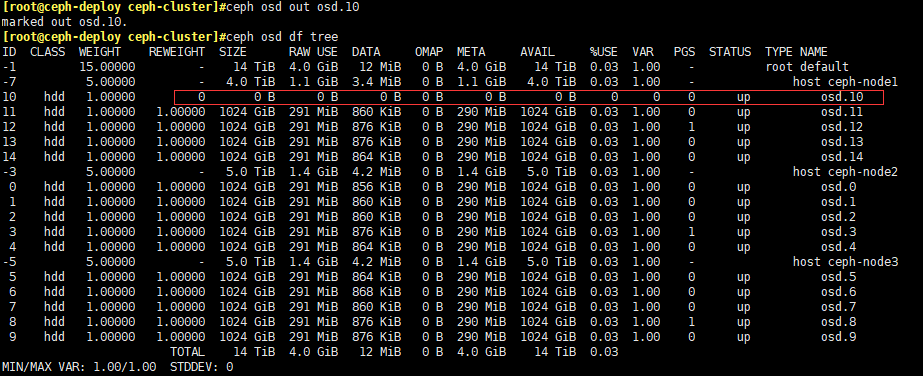

2.11.1 停用设备

[root@ceph-deploy ceph-cluster]#ceph osd out osd.10

磁盘显示为0,状态是up

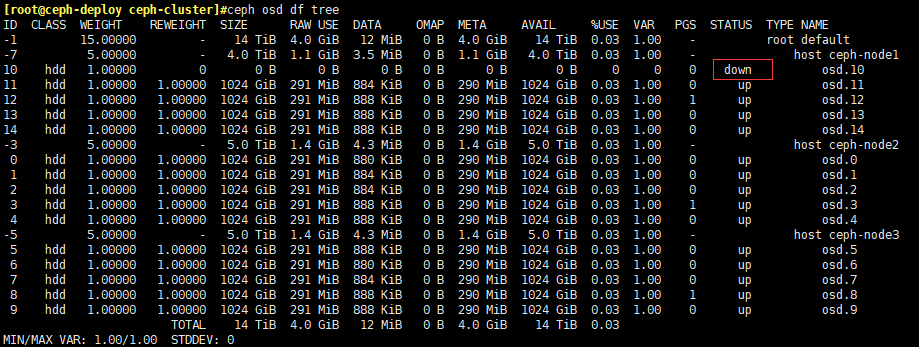

2.11.2 停止进程

[root@ceph-node1 ~]#systemctl stop ceph-osd@10.service

状态变为down

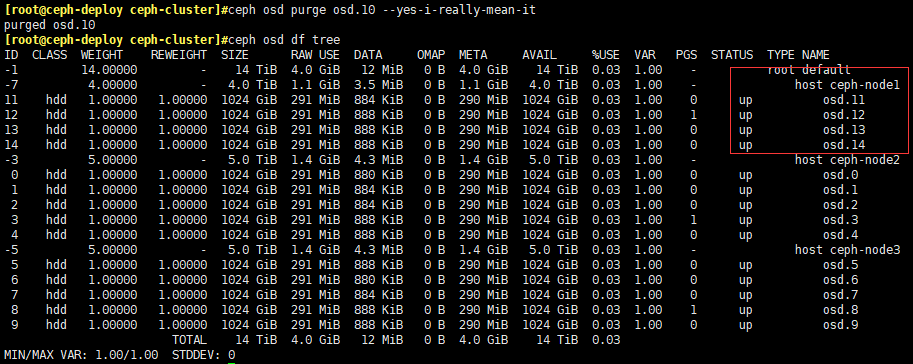

2.11.3 移除设备

[root@ceph-deploy ceph-cluster]#ceph osd purge osd.10 --yes-i-really-mean-it

磁盘信息移除

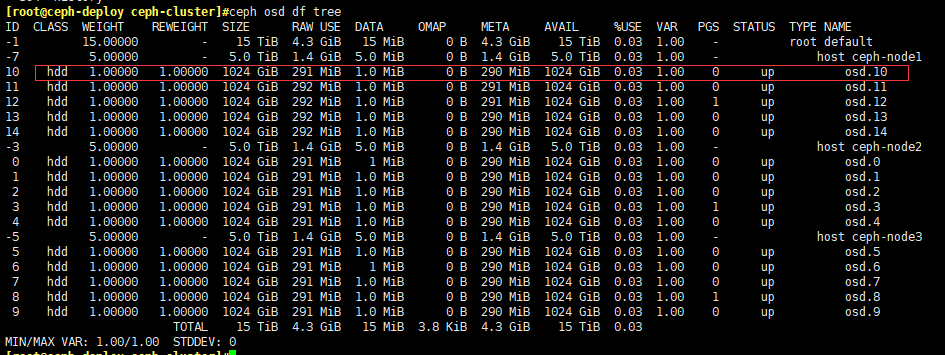

2.12 重新将原磁盘添加回集群

- 清空已删除磁盘中的内容

[root@ceph-node1 ~]# wipefs -af /dev/sdb

- 擦除硬盘

cephadmin@ceph-deploy:/data/ceph-cluster$ ceph-deploy disk zap ceph-node1 /dev/sdb

- 添加osd

cephadmin@ceph-deploy:/data/ceph-cluster$ ceph-deploy osd create ceph-node1 --data /dev/sdb

- 设置开机自启

[root@ceph-node1 ~]#systemctl enable ceph-osd@10

验证osd重新加回集群

3. 扩展ceph集群实现高可用

3.1 扩展mon节点

ceph-mon是原生具备自选举以实现高可用机制的ceph服务,节点数量通常为奇数。

mon节点安装服务

cephadmin@ceph-mon2:~$ sudo apt install -y ceph-mon

cephadmin@ceph-mon3:~$ sudo apt install -y ceph-mon

mon节点添加至集群

cephadmin@ceph-deploy:/data/ceph-cluster$ ceph-deploy mon add ceph-mon2

cephadmin@ceph-deploy:/data/ceph-cluster$ ceph-deploy mon add ceph-mon2

# 若出现错误,[ERROR ] RuntimeError: config file /etc/ceph/ceph.conf exists with different content

ceph-deploy --overwrite-conf mon add ceph-mon2

ceph-deploy --overwrite-conf mon add ceph-mon3

验证ceph-mon状态

# 以json格式查看

cephadmin@ceph-deploy:/data/ceph-cluster$ ceph quorum_status --format json-pretty

{

"election_epoch": 12,

"quorum": [

0,

1,

2

],

"quorum_names": [

"ceph-mon1",

"ceph-mon2",

"ceph-mon3"

],

"quorum_leader_name": "ceph-mon1", # 当前leader

"quorum_age": 32,

"features": {

"quorum_con": "4540138314316775423",

"quorum_mon": [

"kraken",

"luminous",

"mimic",

"osdmap-prune",

"nautilus",

"octopus",

"pacific",

"elector-pinging"

]

},

"monmap": {

"epoch": 3,

"fsid": "28820ae5-8747-4c53-827b-219361781ada",

"modified": "2023-09-20T20:46:48.910442Z",

"created": "2023-09-20T18:58:33.478584Z",

"min_mon_release": 16,

"min_mon_release_name": "pacific",

"election_strategy": 1,

"disallowed_leaders: ": "",

"stretch_mode": false,

"tiebreaker_mon": "",

"removed_ranks: ": "",

"features": {

"persistent": [

"kraken",

"luminous",

"mimic",

"osdmap-prune",

"nautilus",

"octopus",

"pacific",

"elector-pinging"

],

"optional": []

},

"mons": [

{

"rank": 0,

"name": "ceph-mon1",

"public_addrs": {

"addrvec": [

{

"type": "v2",

"addr": "10.0.0.51:3300",

"nonce": 0

},

{

"type": "v1",

"addr": "10.0.0.51:6789",

"nonce": 0

}

]

},

"addr": "10.0.0.51:6789/0",

"public_addr": "10.0.0.51:6789/0",

"priority": 0,

"weight": 0,

"crush_location": "{}"

},

{

"rank": 1,

"name": "ceph-mon2",

"public_addrs": {

"addrvec": [

{

"type": "v2",

"addr": "10.0.0.52:3300",

"nonce": 0

},

{

"type": "v1",

"addr": "10.0.0.52:6789",

"nonce": 0

}

]

},

"addr": "10.0.0.52:6789/0",

"public_addr": "10.0.0.52:6789/0",

"priority": 0,

"weight": 0,

"crush_location": "{}"

},

{

"rank": 2, # 节点等级

"name": "ceph-mon3", # 节点名称

"public_addrs": {

"addrvec": [

{

"type": "v2",

"addr": "10.0.0.53:3300",

"nonce": 0

},

{

"type": "v1",

"addr": "10.0.0.53:6789",

"nonce": 0

}

]

},

"addr": "10.0.0.53:6789/0", # 监听地址

"public_addr": "10.0.0.53:6789/0",

"priority": 0,

"weight": 0,

"crush_location": "{}"

}

]

}

}

验证集群状态

cephadmin@ceph-deploy:/data/ceph-cluster$ ceph -s

cluster:

id: 28820ae5-8747-4c53-827b-219361781ada

health: HEALTH_OK

services:

mon: 3 daemons, quorum ceph-mon1,ceph-mon2,ceph-mon3 (age 6m) # 3个monjied

mgr: ceph-mgr1(active, since 94m)

osd: 15 osds: 15 up (since 19m), 15 in (since 20m)

data:

pools: 1 pools, 1 pgs

objects: 0 objects, 0 B

usage: 4.3 GiB used, 15 TiB / 15 TiB avail

pgs: 1 active+clean

3.2 扩展mgr节点

mgr2节点安装ceph-mgr服务

cephadmin@ceph-mgr2:~$ sudo apt install -y ceph-mgr

添加mgr2至集群

# 添加至集群

cephadmin@ceph-deploy:/data/ceph-cluster$ ceph-deploy mgr create ceph-mgr2

# 分发秘钥给ceph-mgr2节点

cephadmin@ceph-deploy:/data/ceph-cluster$ ceph-deploy admin ceph-mgr2

验证mgr节点状态

cephadmin@ceph-deploy:/data/ceph-cluster$ ceph -s

cluster:

id: 28820ae5-8747-4c53-827b-219361781ada

health: HEALTH_OK

services:

mon: 3 daemons, quorum ceph-mon1,ceph-mon2,ceph-mon3 (age 11m)

mgr: ceph-mgr1(active, since 100m), standbys: ceph-mgr2 # mgr服务一主一备,mgr2为备

osd: 15 osds: 15 up (since 25m), 15 in (since 25m)

data:

pools: 1 pools, 1 pgs

objects: 0 objects, 0 B

usage: 4.3 GiB used, 15 TiB / 15 TiB avail

pgs: 1 active+clean

3.3 添加node节点和磁盘

添加node4至集群

-

添加apt镜像仓库、更新仓库

[root@ceph-node4 ~]#cat /etc/apt/sources.list ... deb https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific/ focal main # 添加该ceph镜像源 [root@ceph-node4 ~]# apt update -

初始化node4

cephadmin@ceph-deploy:/data/ceph-cluster$ ceph-deploy install --no-adjust-repos --nogpgcheck ceph-node4 -

deploy节点对node节点执行安装ceph 基本运行环境

cephadmin@ceph-deploy:/data/ceph-cluster$ ceph-deploy install --release pacific ceph-node4 -

擦除node节点数据磁盘

cephadmin@ceph-deploy:/data/ceph-cluster$ ceph-deploy disk zap ceph-node4 /dev/sdb ceph-deploy disk zap ceph-node4 /dev/sdc ceph-deploy disk zap ceph-node4 /dev/sdd ceph-deploy disk zap ceph-node4 /dev/sde ceph-deploy disk zap ceph-node4 /dev/sdf -

添加OSD

ceph-deploy osd create ceph-node4 --data /dev/sdb ceph-deploy osd create ceph-node4 --data /dev/sdc ceph-deploy osd create ceph-node4 --data /dev/sdd ceph-deploy osd create ceph-node4 --data /dev/sde ceph-deploy osd create ceph-node4 --data /dev/sdf -

设置开机自启动

[root@ceph-node4 ~]#ps -ef |grep osd ceph 78013 1 1 05:13 ? 00:00:00 /usr/bin/ceph-osd -f --cluster ceph --id 15 --setuser ceph --setgroup ceph ceph 79956 1 1 05:13 ? 00:00:00 /usr/bin/ceph-osd -f --cluster ceph --id 16 --setuser ceph --setgroup ceph ceph 81892 1 1 05:13 ? 00:00:00 /usr/bin/ceph-osd -f --cluster ceph --id 17 --setuser ceph --setgroup ceph ceph 83824 1 1 05:14 ? 00:00:00 /usr/bin/ceph-osd -f --cluster ceph --id 18 --setuser ceph --setgroup ceph ceph 85770 1 2 05:14 ? 00:00:00 /usr/bin/ceph-osd -f --cluster ceph --id 19 --setuser ceph --setgroup ceph root 86344 68292 0 05:14 pts/0 00:00:00 grep --color=auto osd [root@ceph-node4 ~]#systemctl enable ceph-osd@15 ceph-osd@16 ceph-osd@17 ceph-osd@18 ceph-osd@19 -

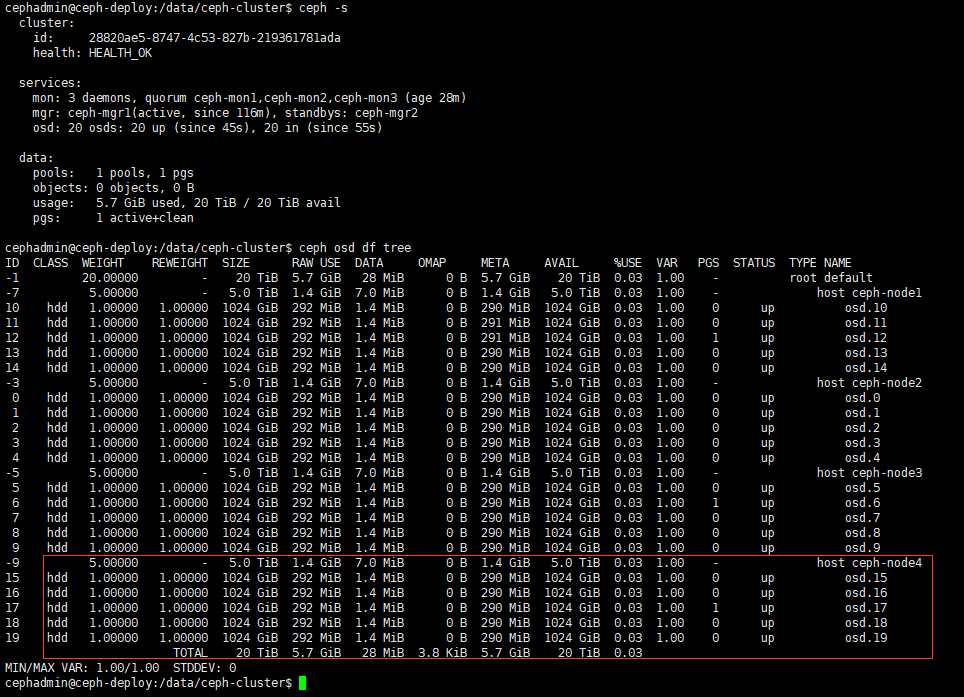

验证集群

3.4 同步ceph-mon扩容节点信息

修改ceph.conf配置

cephadmin@ceph-deploy:/data/ceph-cluster$ cat ceph.conf

[global]

fsid = 28820ae5-8747-4c53-827b-219361781ada

public_network = 10.0.0.0/24

cluster_network = 192.168.10.0/24

mon_initial_members = ceph-mon1,ceph-mon2,ceph-mon3

mon_host = 10.0.0.51,10.0.0.52,10.0.0.53

auth_cluster_required = cephx

auth_service_required = cephx

auth_client_required = cephx

同步配置文件到各节点/etc/ceph/ceph.conf配置文件

cephadmin@ceph-deploy:/data/ceph-cluster$ ceph-deploy --overwrite-conf config push ceph-deploy ceph-mon{1,2,3} ceph-mgr{1,2} ceph-node{1,2,3,4}

[ceph_deploy.conf][DEBUG ] found configuration file at: /home/cephadmin/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.1.0): /usr/local/bin/ceph-deploy --overwrite-conf config push ceph-deploy ceph-mon1 ceph-mon2 ceph-mon3 ceph-mgr1 ceph-mgr2 ceph-node1 ceph-node2 ceph-node3 ceph-node4

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] overwrite_conf : True

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] subcommand : push

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf object at 0x7f7f24bc1d60>

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.cli][INFO ] func : <function config at 0x7f7f24c8a550>

[ceph_deploy.cli][INFO ] client : ['ceph-deploy', 'ceph-mon1', 'ceph-mon2', 'ceph-mon3', 'ceph-mgr1', 'ceph-mgr2', 'ceph-node1', 'ceph-node2', 'ceph-node3', 'ceph-node4']

[ceph_deploy.config][DEBUG ] Pushing config to ceph-deploy

[ceph-deploy][DEBUG ] connection detected need for sudo

[ceph-deploy][DEBUG ] connected to host: ceph-deploy

[ceph_deploy.config][DEBUG ] Pushing config to ceph-mon1

[ceph-mon1][DEBUG ] connection detected need for sudo

[ceph-mon1][DEBUG ] connected to host: ceph-mon1

[ceph_deploy.config][DEBUG ] Pushing config to ceph-mon2

[ceph-mon2][DEBUG ] connection detected need for sudo

[ceph-mon2][DEBUG ] connected to host: ceph-mon2

[ceph_deploy.config][DEBUG ] Pushing config to ceph-mon3

[ceph-mon3][DEBUG ] connection detected need for sudo

[ceph-mon3][DEBUG ] connected to host: ceph-mon3

[ceph_deploy.config][DEBUG ] Pushing config to ceph-mgr1

[ceph-mgr1][DEBUG ] connection detected need for sudo

[ceph-mgr1][DEBUG ] connected to host: ceph-mgr1

[ceph_deploy.config][DEBUG ] Pushing config to ceph-mgr2

[ceph-mgr2][DEBUG ] connection detected need for sudo

[ceph-mgr2][DEBUG ] connected to host: ceph-mgr2

[ceph_deploy.config][DEBUG ] Pushing config to ceph-node1

[ceph-node1][DEBUG ] connection detected need for sudo

[ceph-node1][DEBUG ] connected to host: ceph-node1

[ceph_deploy.config][DEBUG ] Pushing config to ceph-node2

[ceph-node2][DEBUG ] connection detected need for sudo

[ceph-node2][DEBUG ] connected to host: ceph-node2

[ceph_deploy.config][DEBUG ] Pushing config to ceph-node3

[ceph-node3][DEBUG ] connection detected need for sudo

[ceph-node3][DEBUG ] connected to host: ceph-node3

[ceph_deploy.config][DEBUG ] Pushing config to ceph-node4

[ceph-node4][DEBUG ] connection detected need for sudo

[ceph-node4][DEBUG ] connected to host: ceph-node4

节点验证

# deploy节点

cephadmin@ceph-deploy:/data/ceph-cluster$ cat /etc/ceph/ceph.conf

[global]

fsid = 28820ae5-8747-4c53-827b-219361781ada

public_network = 10.0.0.0/24

cluster_network = 192.168.10.0/24

mon_initial_members = ceph-mon1,ceph-mon2,ceph-mon3

mon_host = 10.0.0.51,10.0.0.52,10.0.0.53

auth_cluster_required = cephx

auth_service_required = cephx

auth_client_required = cephx

# mgr2节点

cephadmin@ceph-mgr2:~$ cat /etc/ceph/ceph.conf

[global]

fsid = 28820ae5-8747-4c53-827b-219361781ada

public_network = 10.0.0.0/24

cluster_network = 192.168.10.0/24

mon_initial_members = ceph-mon1,ceph-mon2,ceph-mon3

mon_host = 10.0.0.51,10.0.0.52,10.0.0.53

auth_cluster_required = cephx

auth_service_required = cephx

auth_client_required = cephx

# node4节点

[root@ceph-node4 ~]#cat /etc/ceph/ceph.conf

[global]

fsid = 28820ae5-8747-4c53-827b-219361781ada

public_network = 10.0.0.0/24

cluster_network = 192.168.10.0/24

mon_initial_members = ceph-mon1,ceph-mon2,ceph-mon3

mon_host = 10.0.0.51,10.0.0.52,10.0.0.53

auth_cluster_required = cephx

auth_service_required = cephx

auth_client_required = cephx

4. ceph集群数据上传、下载测试

存取数据时,客户端必须首先连接至RADOS集群上某存储池,然后根据对象名称由相关的CRUSH规则完成数据对象寻址.于是,为了测试集群的数据存取功能,这里首先创建一个用于测试的存储池mypool,并设定其PG数量为32个。

4.1 创建pool

ceph osd pool create mypool 32 32

查看pg组合关系

[root@ceph-deploy ceph-cluster]#ceph osd pool ls

device_health_metrics

mypool

[root@ceph-deploy ceph-cluster]#ceph pg ls-by-pool mypool |awk '{print $1,$2,$15}'

PG OBJECTS ACTING

2.0 0 [8,10,3]p8 # 同一份数据存放在磁盘8/10/3中,8为主进行读写

2.1 0 [15,0,13]p15

2.2 0 [5,1,15]p5

2.3 0 [17,5,14]p17

2.4 0 [1,12,18]p1

2.5 0 [12,4,8]p12

2.6 0 [1,13,19]p1

2.7 0 [6,17,2]p6

2.8 0 [16,13,0]p16

2.9 0 [4,9,19]p4

2.a 0 [11,4,18]p11

2.b 0 [13,7,17]p13

2.c 0 [12,0,5]p12

2.d 0 [12,19,3]p12

2.e 0 [2,13,19]p2

2.f 0 [11,17,8]p11

2.10 0 [15,13,0]p15

2.11 0 [16,6,1]p16

2.12 0 [10,3,9]p10

2.13 0 [17,6,3]p17

2.14 0 [8,13,17]p8

2.15 0 [19,1,11]p19

2.16 0 [8,12,17]p8

2.17 0 [6,14,2]p6

2.18 0 [18,9,12]p18

2.19 0 [3,6,13]p3

2.1a 0 [6,14,2]p6

2.1b 0 [11,7,17]p11

2.1c 0 [10,7,1]p10

2.1d 0 [15,10,7]p15

2.1e 0 [3,13,15]p3

2.1f 0 [4,7,14]p4

* NOTE: afterwards

说明:若查找数字OSD序号所在ceph-node节点位置,可通过查看node节点上的osd进程得到

4.2 上传文件

rados put msg1 /var/log/syslog --pool=mypool

列出文件

[root@ceph-deploy ceph-cluster]# rados ls --pool=mypool

msg1

文件信息

[root@ceph-deploy ceph-cluster]#ceph osd map mypool msg1

osdmap e113 pool 'mypool' (2) object 'msg1' -> pg 2.c833d430 (2.10) -> up ([15,13,0], p15) acting ([15,13,0], p15)

4.3 下载文件

rados get msg1 --pool=mypool /opt/my.txt

示例:

[root@ceph-deploy ceph-cluster]#rados get msg1 --pool=mypool /opt/my.txt

[root@ceph-deploy ceph-cluster]#ll /opt/

total 1060

drwxr-xr-x 2 root root 20 Sep 18 04:15 ./

drwxr-xr-x 19 root root 275 Sep 18 00:38 ../

-rw-r--r-- 1 root root 1082695 Sep 18 04:15 my.txt

[root@ceph-deploy ceph-cluster]#head /opt/my.txt

Sep 18 00:00:21 ceph-deploy rsyslogd: [origin software="rsyslogd" swVersion="8.2001.0" x-pid="847" x-info="https://www.rsyslog.com"] rsyslogd was HUPed

Sep 18 00:00:26 ceph-deploy multipathd[672]: sda: add missing path

Sep 18 00:00:26 ceph-deploy multipathd[672]: sda: failed to get udev uid: Invalid argument

Sep 18 00:00:26 ceph-deploy multipathd[672]: sda: failed to get sysfs uid: Invalid argument

Sep 18 00:00:26 ceph-deploy multipathd[672]: sda: failed to get sgio uid: No such file or directory

Sep 18 00:00:31 ceph-deploy multipathd[672]: sda: add missing path

Sep 18 00:00:31 ceph-deploy multipathd[672]: sda: failed to get udev uid: Invalid argument

Sep 18 00:00:31 ceph-deploy multipathd[672]: sda: failed to get sysfs uid: Invalid argument

Sep 18 00:00:31 ceph-deploy multipathd[672]: sda: failed to get sgio uid: No such file or directory

Sep 18 00:00:36 ceph-deploy multipathd[672]: sda: add missing pat

4.4 修改文件

rados put msg1 /etc/passwd --pool=mypool

示例:

[root@ceph-deploy ceph-cluster]#rados put msg1 /etc/passwd --pool=mypool

[root@ceph-deploy ceph-cluster]#rados get msg1 --pool=mypool /opt/2.txt

[root@ceph-deploy ceph-cluster]#tail /opt/2.txt

usbmux:x:111:46:usbmux daemon,,,:/var/lib/usbmux:/usr/sbin/nologin

sshd:x:112:65534::/run/sshd:/usr/sbin/nologin

systemd-coredump:x:999:999:systemd Core Dumper:/:/usr/sbin/nologin

sc:x:1000:1000:sc:/home/sc:/bin/bash

lxd:x:998:100::/var/snap/lxd/common/lxd:/bin/false

_chrony:x:113:117:Chrony daemon,,,:/var/lib/chrony:/usr/sbin/nologin

_rpc:x:114:65534::/run/rpcbind:/usr/sbin/nologin

statd:x:115:65534::/var/lib/nfs:/usr/sbin/nologin

cephadmin:x:2088:2088::/home/cephadmin:/bin/bash

ceph:x:64045:64045:Ceph storage service:/var/lib/ceph:/usr/sbin/nologin

4.5 删除文件

rados rm msg1 --pool=mypool

示例

[root@ceph-deploy ceph-cluster]#rados rm msg1 --pool=mypool

[root@ceph-deploy ceph-cluster]#rados ls --pool=mypool