1. Install VirtualBox

Version: VirtualBox-6.1.42

2. Create the virtual machine and install CentOS 7

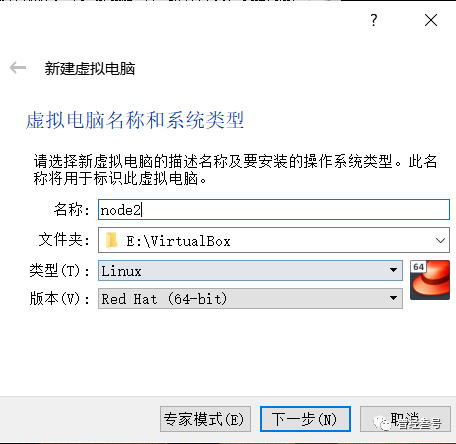

Create a new virtual machine:

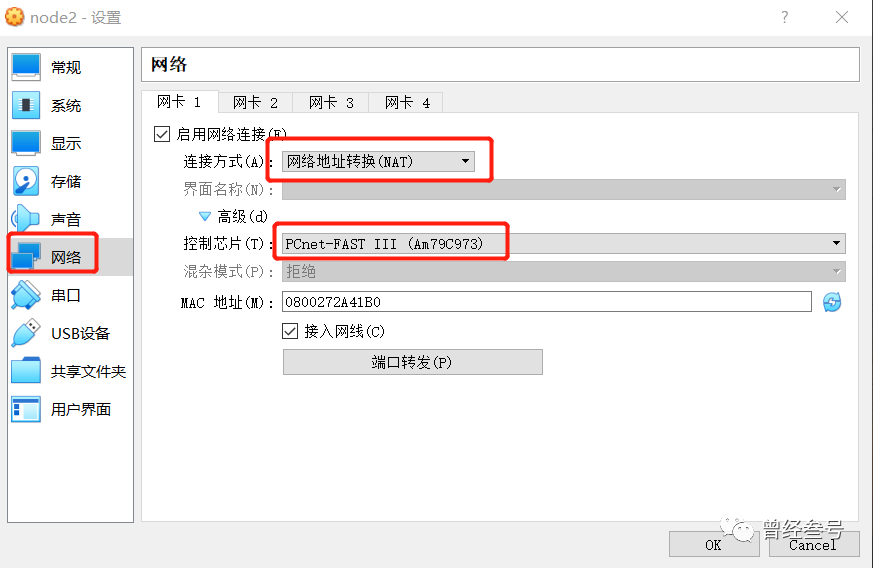

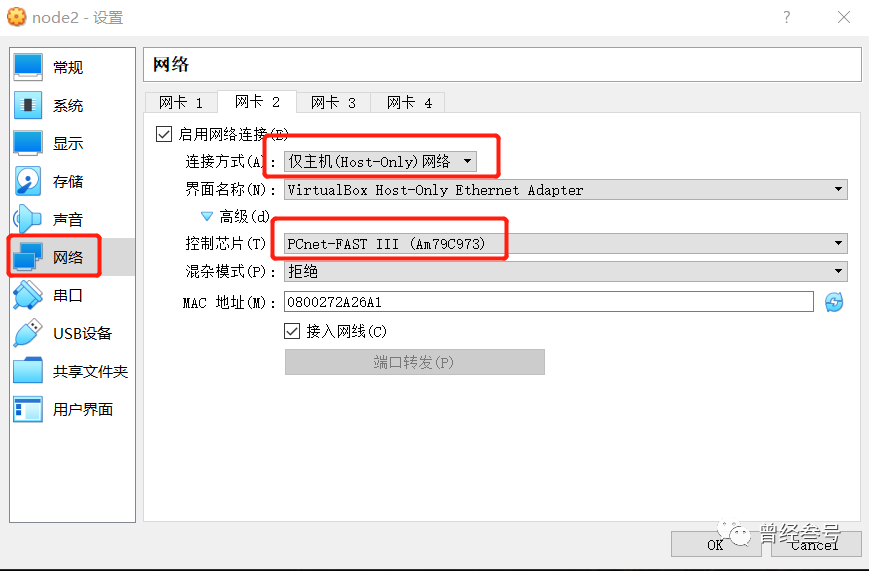

Choose the network settings:

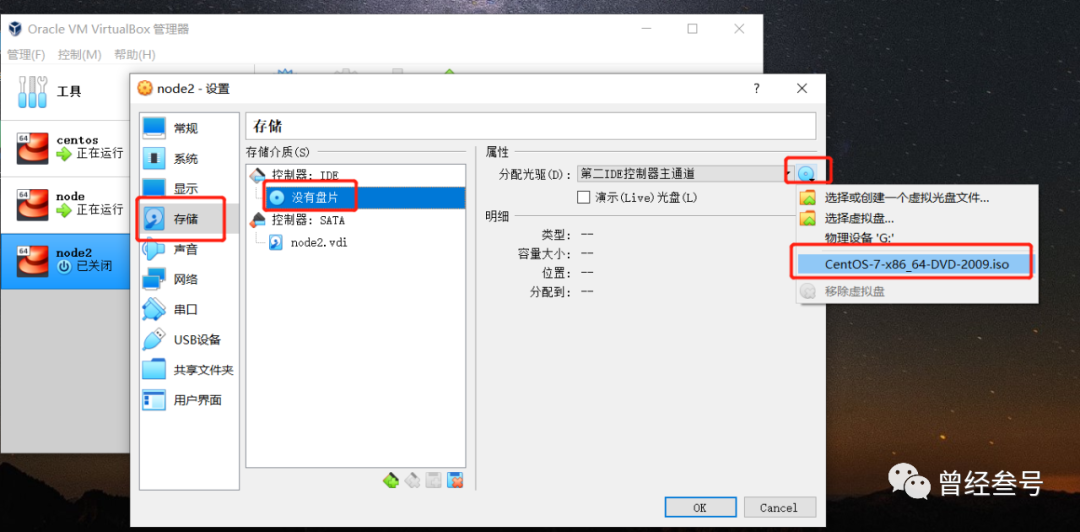

Install the CentOS 7 operating system:

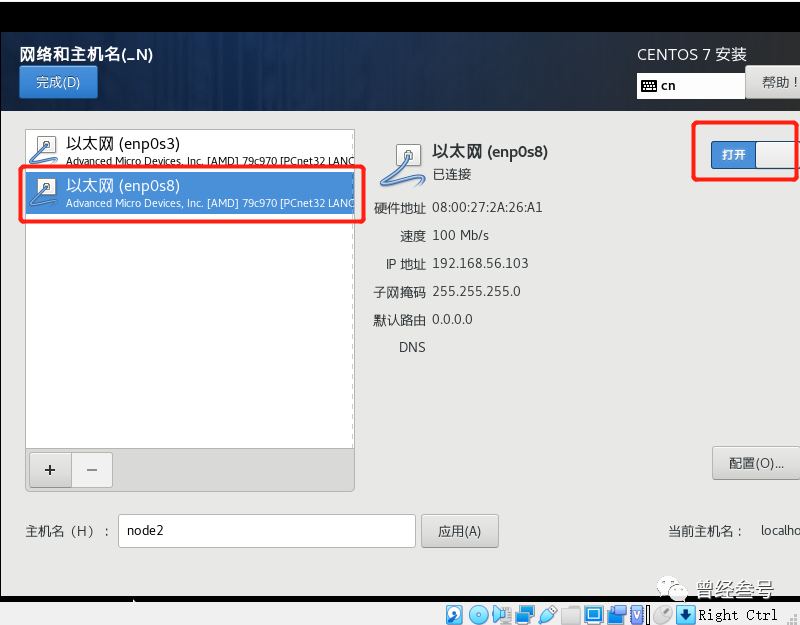

Configure the network during installation.

After CentOS is installed, edit the parameters of the host-only network adapter (enp0s8):

    cd /etc/sysconfig/network-scripts/
    vim ifcfg-enp0s8

The file contents are as follows (BOOTPROTO=static together with IPADDR, NETMASK and GATEWAY assigns a fixed address; ONBOOT=yes brings the interface up at boot):

    TYPE=Ethernet
    PROXY_METHOD=none
    BROWSER_ONLY=no
    BOOTPROTO=static
    DEFROUTE=yes
    IPV4_FAILURE_FATAL=no
    IPV6INIT=yes
    IPV6_AUTOCONF=yes
    IPV6_DEFROUTE=yes
    IPV6_FAILURE_FATAL=no
    IPV6_ADDR_GEN_MODE=stable-privacy
    NAME=enp0s8
    UUID=6160c659-6e3d-4525-9628-dcb6cb9a946a
    DEVICE=enp0s8
    ONBOOT=yes
    IPV6_PRIVACY=on
    IPADDR=192.168.56.8
    NETMASK=255.255.255.0
    GATEWAY=192.168.56.1
    ZONE=public
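The host table in step 4 gives the two VMs different host-only addresses (192.168.56.7 for k8s-master, 192.168.56.8 for k8s-node1), so this file needs a different IPADDR on each VM. A minimal sketch, assuming the ifcfg file already exists; `set_static_ip` is a hypothetical helper, not part of CentOS:

```shell
# Hypothetical helper: set the IPADDR line of an existing ifcfg file.
# Replaces the line if present, otherwise appends it.
# Usage: set_static_ip <ifcfg-file> <ip-address>
set_static_ip() {
    local file="$1" ip="$2"
    if grep -q '^IPADDR=' "$file"; then
        # Overwrite the existing address
        sed -i "s/^IPADDR=.*/IPADDR=${ip}/" "$file"
    else
        # No address configured yet: add one
        echo "IPADDR=${ip}" >> "$file"
    fi
}
```

For example, on k8s-master run `set_static_ip /etc/sysconfig/network-scripts/ifcfg-enp0s8 192.168.56.7`, then reboot (or run `systemctl restart network`) so the new address takes effect.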

After a reboot, the host machine can connect to the VM over SSH, and the VM's network configuration is complete.

3. Install Docker

Follow https://yeasy.gitbook.io/docker_practice/install/centos

4. Install Kubernetes (k8s)

| Hostname | IP | Role | OS version |
| --- | --- | --- | --- |
| k8s-master | 192.168.56.7 | master | CentOS Linux release 7.9.2009 (Core) |
| k8s-node1 | 192.168.56.8 | worker | CentOS Linux release 7.9.2009 (Core) |

4.1 Configure the hostnames (and their name resolution) on both servers
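This step lists no commands. A minimal sketch, assuming "configure the hostnames" means giving each machine the hostname from the table and mapping both names in /etc/hosts; `add_host_entry` is a hypothetical helper:

```shell
# Hypothetical helper: append "<ip> <hostname>" to a hosts file unless a
# non-comment line already lists that exact hostname.
# Usage: add_host_entry <hosts-file> <ip> <hostname>
add_host_entry() {
    local file="$1" ip="$2" name="$3"
    # awk exits 0 if the hostname appears as an alias field (2nd field onward)
    if ! awk -v n="$name" '
            /^[[:space:]]*#/ { next }
            { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit !found }' "$file"; then
        echo "${ip} ${name}" >> "$file"
    fi
}
```

On the master run `hostnamectl set-hostname k8s-master` (k8s-node1 on the worker), then on both machines run `add_host_entry /etc/hosts 192.168.56.7 k8s-master` and `add_host_entry /etc/hosts 192.168.56.8 k8s-node1`. Running it twice does not add duplicate lines.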

4.2 Disable the firewall

    systemctl stop firewalld
    systemctl disable firewalld

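4.3 Disable SELinux

    # Switch to permissive mode immediately (no reboot needed)
    setenforce 0
    # Disable SELinux permanently; takes effect after the next reboot
    sed -i "s/SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config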
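The sed above only matches SELINUX=enforcing, so a config that is already set to permissive is left unchanged. A variant that sets the mode whatever its current value, as a sketch; `disable_selinux_config` is a hypothetical helper:

```shell
# Hypothetical helper: force SELINUX=disabled in an SELinux config file.
# Matches any current value (enforcing or permissive); SELINUXTYPE= is untouched.
# Usage: disable_selinux_config [config-file]
disable_selinux_config() {
    local file="${1:-/etc/selinux/config}"
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$file"
}
```

After `setenforce 0`, `getenforce` prints Permissive; after a reboot with the config changed it prints Disabled.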

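4.4 Disable swap

    # Turn off all swap devices now (kubelet refuses to start with swap enabled by default)
    swapoff -a
    # Back up fstab; "yes |" answers the overwrite prompt if cp is aliased to cp -i
    yes | cp /etc/fstab /etc/fstab_bak
    # Drop the swap entries so swap stays off after a reboot
    grep -v swap /etc/fstab_bak > /etc/fstab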
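To confirm swap will stay off after a reboot, you can list any swap entries left in fstab. A sketch; `fstab_swap_entries` is a hypothetical helper that treats a non-comment line as a swap entry when its third field (the filesystem type) is swap:

```shell
# Hypothetical helper: print active (non-comment) fstab lines whose
# filesystem type (3rd field) is "swap". No output means no swap at boot.
# Usage: fstab_swap_entries [fstab-file]
fstab_swap_entries() {
    local file="${1:-/etc/fstab}"
    awk '$1 !~ /^#/ && $3 == "swap"' "$file"
}
```

After the commands above, `fstab_swap_entries` should print nothing, and the Swap row of `free -m` should show a total of 0.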

4.5 Install nfs-utils and wget

    yum install -y nfs-utils
    yum install -y wget

4.6 Edit /etc/sysctl.conf

Create a sys_config.sh script with the following contents. Each setting is updated in place if it already exists in /etc/sysctl.conf and appended otherwise, so running the script again does not create duplicate lines:

    #!/bin/bash
    # The net.bridge.* keys only exist once the br_netfilter module is loaded
    modprobe br_netfilter

    # Update a key in /etc/sysctl.conf if it is already there, otherwise append it
    set_sysctl() {
        local key="$1" value="$2" conf=/etc/sysctl.conf
        if grep -q "^${key}" "$conf"; then
            sed -i "s#^${key}.*#${key} = ${value}#g" "$conf"
        else
            echo "${key} = ${value}" >> "$conf"
        fi
    }

    set_sysctl net.ipv4.ip_forward 1
    set_sysctl net.bridge.bridge-nf-call-ip6tables 1
    set_sysctl net.bridge.bridge-nf-call-iptables 1
    set_sysctl net.ipv6.conf.all.disable_ipv6 1
    set_sysctl net.ipv6.conf.default.disable_ipv6 1
    set_sysctl net.ipv6.conf.lo.disable_ipv6 1
    set_sysctl net.ipv6.conf.all.forwarding 1

    # Apply the settings
    sysctl -p

Run the script:

    chmod a+x sys_config.sh
    ./sys_config.sh
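To check the result, compare what the file assigns with the live kernel value (for example, `sysctl -n net.ipv4.ip_forward` should print 1). A sketch of the file side; `sysctl_conf_value` is a hypothetical helper:

```shell
# Hypothetical helper: print the value a sysctl.conf-style file assigns to a key.
# If the key appears several times, the last assignment wins, matching the
# order in which "sysctl -p" applies the lines. Prints an empty line if unset.
# Usage: sysctl_conf_value <key> [file]
sysctl_conf_value() {
    local key="$1" file="${2:-/etc/sysctl.conf}"
    awk -F'=' -v k="$key" '
        /^[[:space:]]*[#;]/ { next }   # skip comment lines
        {
            name = $1; val = $2
            gsub(/[[:space:]]/, "", name)
            gsub(/[[:space:]]/, "", val)
            if (name == k) v = val
        }
        END { print v }' "$file"
}
```

For instance, `sysctl_conf_value net.bridge.bridge-nf-call-iptables` should print 1, the same value `sysctl -n net.bridge.bridge-nf-call-iptables` reports.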

4.7 Configure the Kubernetes yum repository

Create k8s_yum.sh:

    vim k8s_yum.sh

with the following contents:

    cat <<EOF > /etc/yum.repos.d/kubernetes.repo
    [kubernetes]
    name=Kubernetes
    baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
    enabled=1
    gpgcheck=0
    repo_gpgcheck=0
    gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
    EOF

Then make it executable and run it:

    chmod a+x ./k8s_yum.sh
    ./k8s_yum.sh

4.8 Install kubelet, kubeadm and kubectl on every machine in the cluster

    yum install -y kubelet-1.18.20 kubeadm-1.18.20 kubectl-1.18.20

4.9 Change the Docker cgroup driver to systemd

    # Rewrite dockerd's start command to add --exec-opt native.cgroupdriver=systemd
    sed -i "s#^ExecStart=/usr/bin/dockerd.*#ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock --exec-opt native.cgroupdriver=systemd#g" /usr/lib/systemd/system/docker.service

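4.10 Start kubelet

    # Reload unit files so the edited docker.service is picked up
    systemctl daemon-reload
    # Restart Docker so the systemd cgroup driver takes effect
    systemctl restart docker
    systemctl enable kubelet && systemctl start kubelet

Until kubeadm init (on the master) or kubeadm join (on the worker) has run, kubelet restarts every few seconds; this is expected.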
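Once Docker has restarted, you can confirm that the cgroup driver change from step 4.9 took effect. A minimal sketch; `cgroup_driver` is a hypothetical helper that only parses the "Cgroup Driver" line printed by `docker info`:

```shell
# Hypothetical helper: read "docker info" output on stdin and print the value
# of its "Cgroup Driver" line (for example systemd or cgroupfs).
cgroup_driver() {
    awk -F': ' '/Cgroup Driver/ { print $2 }'
}
```

Run `docker info 2>/dev/null | cgroup_driver`; it should print systemd. If it prints cgroupfs, the ExecStart edit was not applied.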

4.11 Initialize the master

    kubeadm init \
      --apiserver-advertise-address=192.168.56.7 \
      --image-repository registry.aliyuncs.com/google_containers \
      --kubernetes-version v1.18.20 \
      --service-cidr=10.96.0.0/12 \
      --pod-network-cidr=10.244.0.0/16

--pod-network-cidr=10.244.0.0/16 matches the default network in flannel's kube-flannel.yml, which is installed in step 4.13.

Then set up kubectl for the current user:

    mkdir -p $HOME/.kube
    sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    sudo chown $(id -u):$(id -g) $HOME/.kube/config
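The same settings can also be kept in a kubeadm configuration file, which lets you pre-pull the control-plane images from the Aliyun mirror before running init. A sketch assuming the kubeadm.k8s.io/v1beta2 config API used by kubeadm v1.18; the helper `write_kubeadm_config` and the file name kubeadm-config.yaml are hypothetical:

```shell
# Hypothetical helper: write a kubeadm v1beta2 config file equivalent to the
# "kubeadm init" flags used for the master.
# Usage: write_kubeadm_config <output-file>
write_kubeadm_config() {
    cat > "$1" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta2
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.56.7
---
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.18.20
imageRepository: registry.aliyuncs.com/google_containers
networking:
  serviceSubnet: 10.96.0.0/12
  podSubnet: 10.244.0.0/16
EOF
}
```

Usage: `write_kubeadm_config kubeadm-config.yaml`, then `kubeadm config images pull --config kubeadm-config.yaml`, then `kubeadm init --config kubeadm-config.yaml`. Use the file instead of the flags, not together with them; kubeadm refuses to mix --config with most of those flags.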

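4.12 Join the worker node to the cluster, as instructed in the kubeadm init output

On k8s-node1, run the join command printed by kubeadm init:

    kubeadm join 192.168.56.7:6443 --token fhspoz.m0lztzh3tcnoarkc \
      --discovery-token-ca-cert-hash sha256:5ee31f032672162d355219fc3f6923ae87fa27ddb7c831b1766fd6db2dea37b5

If you have lost the token (tokens expire after 24 hours by default), regenerate the full join command on the master:

    kubeadm token create --print-join-command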

4.13 Install flannel on the master

    wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
    kubectl apply -f kube-flannel.yml

Note: if nodes in the cluster stay in the NotReady state, check flannel's network interface setting.

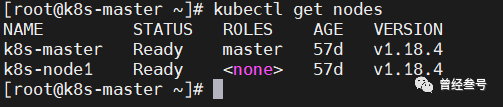
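One common adjustment, assuming the "network interface setting" above refers to flanneld's interface selection: by default flanneld uses the interface holding the default route, which on a VirtualBox VM may be the NAT adapter whose address (10.0.2.15 by default) is identical on every VM. flanneld's `--iface` flag pins it to the host-only NIC enp0s8. A sketch; `set_flannel_iface` is a hypothetical helper that edits the downloaded manifest (GNU sed assumed):

```shell
# Hypothetical helper: insert "- --iface=<nic>" right after each
# "- --kube-subnet-mgr" line of kube-flannel.yml, reusing its indentation.
# Usage: set_flannel_iface <manifest> <nic>
set_flannel_iface() {
    local manifest="$1" nic="$2"
    # Do nothing if an --iface flag is already configured
    if grep -q -- '--iface=' "$manifest"; then
        return 0
    fi
    # GNU sed: "\n" in the replacement starts a new line, "\1" is the indent
    sed -i "s/^\( *\)- --kube-subnet-mgr\$/&\n\1- --iface=${nic}/" "$manifest"
}
```

Run `set_flannel_iface kube-flannel.yml enp0s8`, then `kubectl apply -f kube-flannel.yml` again.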

4.14 Check the node status
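On the master, `kubectl get nodes` lists each node with its STATUS; both k8s-master and k8s-node1 should eventually show Ready. To script the check, a small sketch; `not_ready_nodes` is a hypothetical helper:

```shell
# Hypothetical helper: read "kubectl get nodes --no-headers" output on stdin
# and print the name of every node whose STATUS is not Ready
# ("Ready,SchedulingDisabled" still counts as Ready).
not_ready_nodes() {
    awk '$2 !~ /^Ready(,|$)/ { print $1 }'
}
```

`kubectl get nodes --no-headers | not_ready_nodes` prints nothing once every node is Ready. To block until then instead, `kubectl wait --for=condition=Ready node --all --timeout=300s` does the waiting for you.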