一、基于Ceph的存储解决方案上

1、Kubernetes使用Rook部署Ceph存储集群

Rook https://rook.io 是一个自管理分布式存储编排系统,可为K8S提供便利的存储解决方案

Rook本身不提供存储,而是在kubernetes和存储系统之间提供适配层,简化存储系统的部署与维护工作。

为什么要使用Rook?

- Ceph官方推荐使用Rook进行部署管理

- 通过原生的Kubernetes机制和数据存储交互,

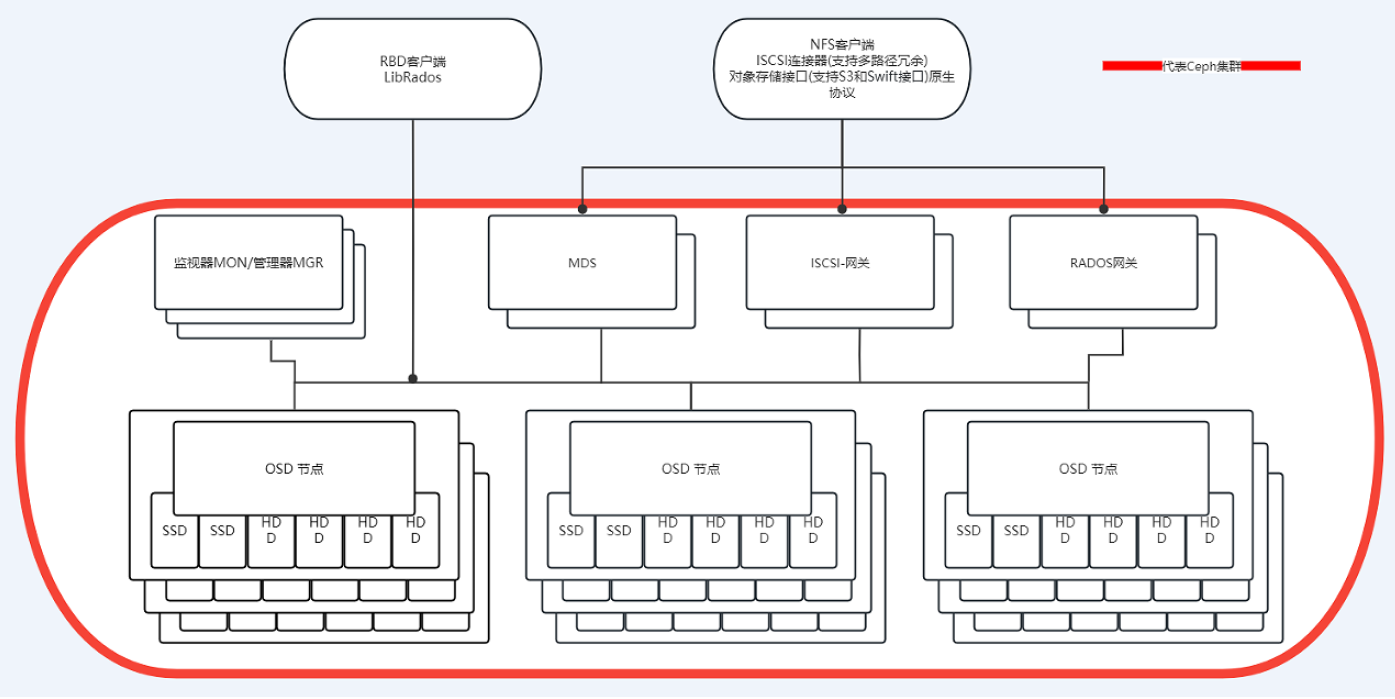

2、Ceph介绍

Ceph是一款为优秀的性能、可靠性和可扩展性而设计的统一的、分布式文件系统

Ceph支持三种存储

- 块存储(RDB):可直接作为磁盘挂载

- 文件系统(CephF):兼容的网络文件系统CephFS,专注高性能、大容量存储

- 对象存储(RADOSGW):提供RESTful接口,也提供多种编程语言绑定。兼容SE、Swift(OpenStack的对象存储)

2.1 核心组件

Ceph主要有三个核心组件

- OSD:用于集群所有数据与对象的存储,处理集群数据的负载、恢复、回填、再均衡、并向其他osd守护进程发送心跳,然后向Monitor提供一些监控信息

- Monitor:监控整个集群的状态,管理集群客户端认证与授权,保证集群数据的一致性

- MDS:负载保存we年系统的元数据,管理目录结构。对象存储和块设备不需要元数据服务

3、安装Ceph集群

Rook支持K8S v1.19+版本,CPU架构amd64、x86或arm64

通过Rook安装ceph集群必须满足以下先决条件

- 至少3个节点、并且全部可以调度Pod,满足Ceph副本高可用要求

- 已部署好K8S集群

- OSD节点没各节点至少有一块裸设备(Raw devices,未分区未系统格式化)

3.1Ceph在K8S部署

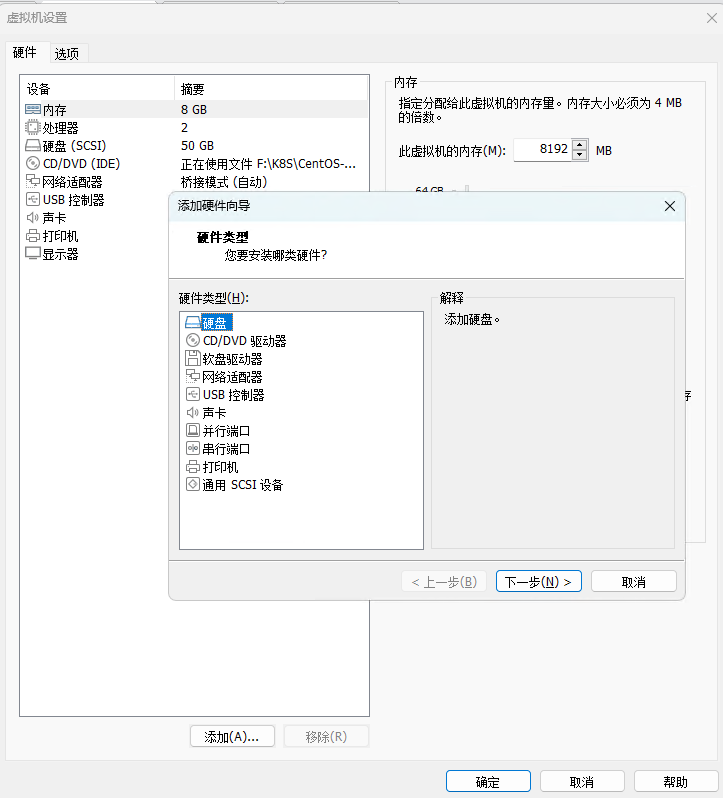

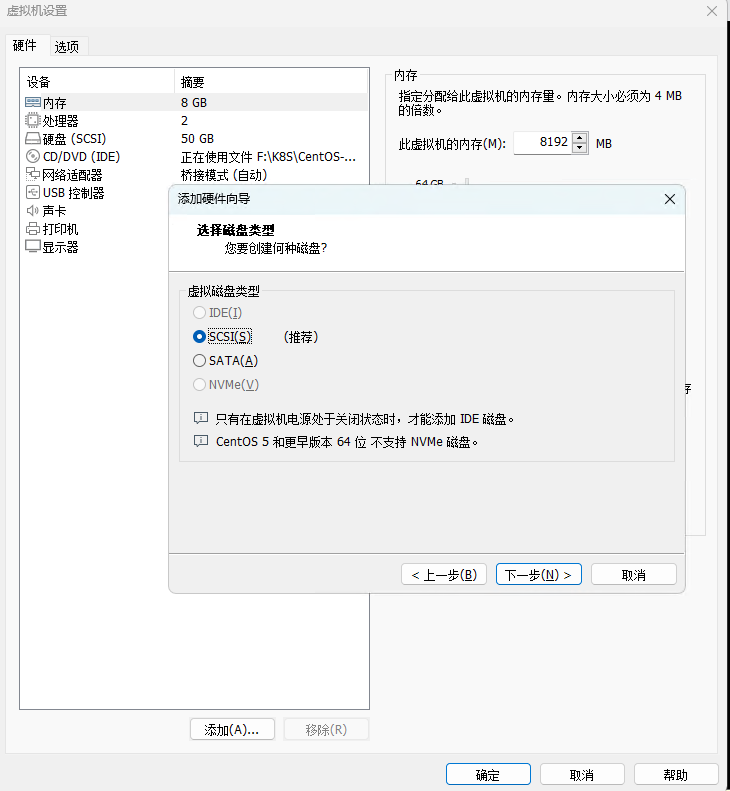

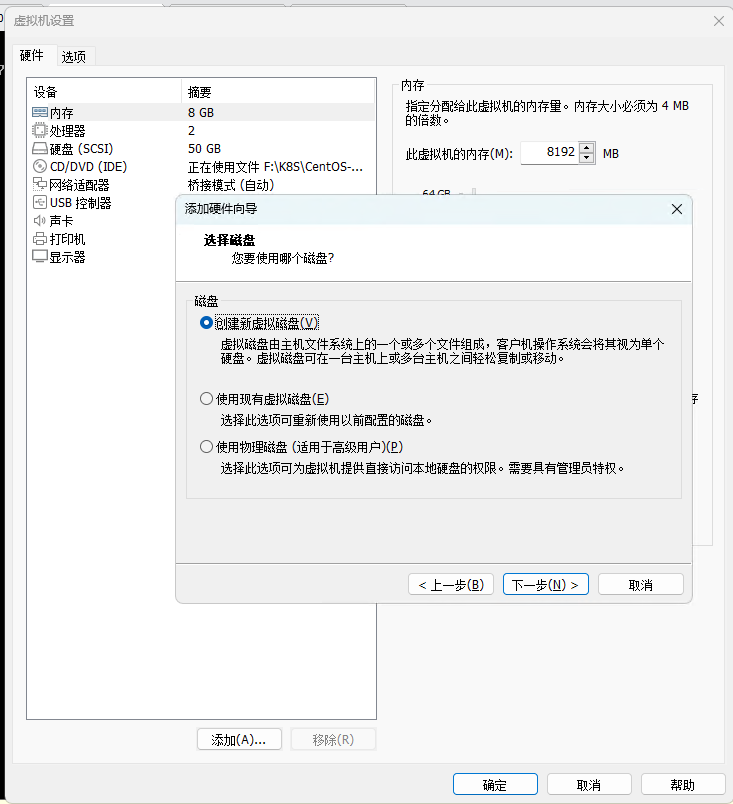

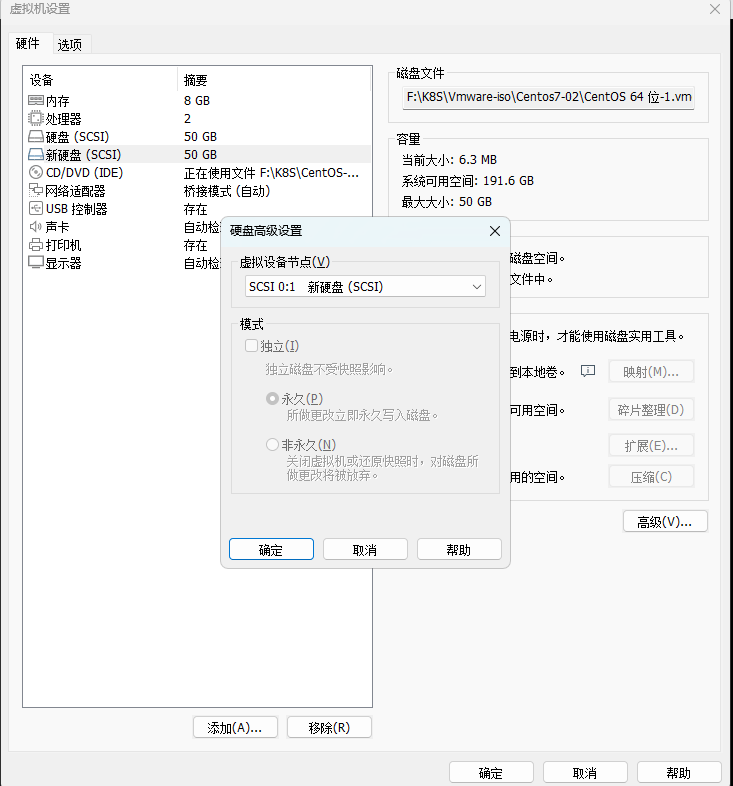

为K8S集群的每个工作节点添加一块额外的未格式化磁盘(裸设备),具体操作见下图

将新增的磁盘设置为独立模式(模拟公有云厂商提供的独立云磁盘),启动k8s集群,在node节点使用以下命令检查磁盘是否满足Ceph部署要求

# lsblk -f

[root@node-1-231 ~]# lsblk -f

NAME FSTYPE LABEL UUID MOUNTPOINT

sda

├─sda1 xfs ea9cfeb1-5d17-4cfd-8023-1d2db4e5ec4d /boot

└─sda2 LVM2_member eWjchz-pMad-Fhb2-kyJr-BMwp-aTpd-Mkkzkk

├─centos-root xfs fec91ee5-2ef1-49f3-8c13-65fc7b58518a /

└─centos-swap swap eac66189-d0c0-41dd-9262-9e46e0572202

sdb

sr0 iso9660 CentOS 7 x86_64 2020-11-03-14-55-29-00

[root@node-1-232 ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 50G 0 disk

├─sda1 8:1 0 1G 0 part /boot

└─sda2 8:2 0 49G 0 part

├─centos-root 253:0 0 47G 0 lvm /

└─centos-swap 253:1 0 2G 0 lvm

sdb 8:16 0 50G 0 disk

sr0 11:0 1 973M 0 rom

您在 /var/spool/mail/root 中有新邮件

[root@node-1-232 ~]# lsblk -f

NAME FSTYPE LABEL UUID MOUNTPOINT

sda

├─sda1 xfs 5e7ffc85-45bc-4f5a-bb18-defc8064f0a3 /boot

└─sda2 LVM2_member SozvYr-Il0h-TGMA-SZIV-riiA-ZLFo-cH0zcP

├─centos-root xfs 974b0d0e-2e7e-4483-bcad-a55ed5f71fc6 /

└─centos-swap swap 6cb8ab0e-63c6-4186-9bc9-07bcd3cae8bb

sdb

sr0 iso9660 CentOS 7 x86_64 2020-11-03-14-55-29-00

[root@node-1-233 ~]# lsblk -f

NAME FSTYPE LABEL UUID MOUNTPOINT

sda

├─sda1 xfs fcabb174-6e36-4990-b6fe-425a2d67c6ad /boot

└─sda2 LVM2_member tPeLVy-DViM-Ww2D-5pac-iVM7-KBLB-jC7ZfP

├─centos-root xfs 90a807fd-3c20-47f6-b438-5e340c150195 /

└─centos-swap swap e148f1f3-0cfb-41c1-9d12-2a40824c99ca

sdb

sr0 iso9660 CentOS 7 x86_64 2020-11-03-14-55-29-00 lsblk -f输出中的sdb磁盘就是我们工作节点新添加的裸设备(FSTYPE 为空)。可以分配给Ceph使用

修改Rook CSI驱动注册路径

注意:rook csi渠道挂载的路径是挂载到kubelet配置的--root-dir参数指定的目录下,根据实际的--root-dir参数修改rook csi的kubelet路径地址,如果与实际kubelet的--root-dir路径不一致,会导致挂载存储时报错

默认安装路径:/var/lib/kubelet/ ,基本不用修改,如果非默认需要更改

vim rook/deploy/examples

ROOK_CSI_KUBELET_DIR_PATH在k8s集群中企业Rook准入控制器。该准入控制器在身份认证和授权之后并在持久化对象之前,拦截发往K8S API Server的请求以进行认证。在安装Rook之前,使用以下命令在K8S集群中安装Rook准入控制器

kubectl apply -f https://github.com/cert-manager/cert-manager/releases/download/v1.13.2/cert-manager.yamlwget https://github.com/cert-manager/cert-manager/releases/download/v1.13.2/cert-manager.yaml

root@master-1-230 2.3]# kubectl apply -f cert-manager.yaml

namespace/cert-manager created

customresourcedefinition.apiextensions.k8s.io/certificaterequests.cert-manager.io created

customresourcedefinition.apiextensions.k8s.io/certificates.cert-manager.io created

customresourcedefinition.apiextensions.k8s.io/challenges.acme.cert-manager.io created

customresourcedefinition.apiextensions.k8s.io/clusterissuers.cert-manager.io created

customresourcedefinition.apiextensions.k8s.io/issuers.cert-manager.io created

customresourcedefinition.apiextensions.k8s.io/orders.acme.cert-manager.io created

serviceaccount/cert-manager-cainjector created

serviceaccount/cert-manager created

serviceaccount/cert-manager-webhook created

configmap/cert-manager created

configmap/cert-manager-webhook created

clusterrole.rbac.authorization.k8s.io/cert-manager-cainjector created

clusterrole.rbac.authorization.k8s.io/cert-manager-controller-issuers created

clusterrole.rbac.authorization.k8s.io/cert-manager-controller-clusterissuers created

clusterrole.rbac.authorization.k8s.io/cert-manager-controller-certificates created

clusterrole.rbac.authorization.k8s.io/cert-manager-controller-orders created

clusterrole.rbac.authorization.k8s.io/cert-manager-controller-challenges created

clusterrole.rbac.authorization.k8s.io/cert-manager-controller-ingress-shim created

clusterrole.rbac.authorization.k8s.io/cert-manager-cluster-view created

clusterrole.rbac.authorization.k8s.io/cert-manager-view created

clusterrole.rbac.authorization.k8s.io/cert-manager-edit created

clusterrole.rbac.authorization.k8s.io/cert-manager-controller-approve:cert-manager-io created

clusterrole.rbac.authorization.k8s.io/cert-manager-controller-certificatesigningrequests created

clusterrole.rbac.authorization.k8s.io/cert-manager-webhook:subjectaccessreviews created

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-cainjector created

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-controller-issuers created

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-controller-clusterissuers created

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-controller-certificates created

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-controller-orders created

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-controller-challenges created

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-controller-ingress-shim created

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-controller-approve:cert-manager-io created

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-controller-certificatesigningrequests created

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-webhook:subjectaccessreviews created

role.rbac.authorization.k8s.io/cert-manager-cainjector:leaderelection created

role.rbac.authorization.k8s.io/cert-manager:leaderelection created

role.rbac.authorization.k8s.io/cert-manager-webhook:dynamic-serving created

rolebinding.rbac.authorization.k8s.io/cert-manager-cainjector:leaderelection created

rolebinding.rbac.authorization.k8s.io/cert-manager:leaderelection created

rolebinding.rbac.authorization.k8s.io/cert-manager-webhook:dynamic-serving created

service/cert-manager created

service/cert-manager-webhook created

deployment.apps/cert-manager-cainjector created

deployment.apps/cert-manager created

deployment.apps/cert-manager-webhook created

mutatingwebhookconfiguration.admissionregistration.k8s.io/cert-manager-webhook created

validatingwebhookconfiguration.admissionregistration.k8s.io/cert-manager-webhook created检查需要安装Ceph的node节点是否安装LVM2

#yum install lvm2 -y

# yum list installed|grep lvm2

Repodata is over 2 weeks old. Install yum-cron? Or run: yum makecache fast

lvm2.x86_64 7:2.02.187-6.el7_9.5 @updates

lvm2-libs.x86_64 7:2.02.187-6.el7_9.5 @updates Ceph需要一个带有RBD模块的Linux内核。在K8S集群的节点运行 lsmod |grep rbd 检查,如果该命令返回为空,当前系统没有加载RBD模块。

#将RBD模块加载命令放到开机加载项

cat > /etc/sysconfig/modules/rbd.modules << EOF

#!/bin/bash

modprobe rbd

EOF

#为脚本添加可执行权限

chmod +x /etc/sysconfig/modules/rbd.modules

#查看RBD模块是否加载成功

# lsmod |grep rbd

rbd 83733 0

libceph 306750 1 rbd

二、基于Ceph的存储解决方案中

3.2 使用Rook在K8S集群部署Ceph存储集群

使用Rook官方提供的示例部署组件清单(mainifests)部署一个Ceph集群

使用git从github(https://github.com/rook/rook.git) clone太慢,改为gitee导入

使用git将部署清单示例下载到本地(使用gitee仓库)

[root@master-1-230 2.3]# git clone --single-branch --branch v1.12.8 https://gitee.com/ikubernetesi/rook.git

正克隆到 'rook'...

remote: Enumerating objects: 92664, done.

remote: Counting objects: 100% (92664/92664), done.

remote: Compressing objects: 100% (26041/26041), done.

remote: Total 92664 (delta 65003), reused 92443 (delta 64848), pack-reused 0

接收对象中: 100% (92664/92664), 49.01 MiB | 7.46 MiB/s, done.

处理 delta 中: 100% (65003/65003), done.

Note: checking out 'aa3eab85caba76b3a1b854aadb0b7e3faa8a43cb'.

You are in 'detached HEAD' state. You can look around, make experimental

changes and commit them, and you can discard any commits you make in this

state without impacting any branches by performing another checkout.

If you want to create a new branch to retain commits you create, you may

do so (now or later) by using -b with the checkout command again. Example:

git checkout -b new_branch_name

#进入到本地部署组件清单示例目录

cd rook/deploy/examples#执行以下命令将Rook和Ceph相关CRD资源和通用资源创建到K8S集群

# kubectl apply -f crds.yaml -f common.yaml

[root@master-1-230 examples]# kubectl apply -f crds.yaml -f common.yaml

customresourcedefinition.apiextensions.k8s.io/cephblockpoolradosnamespaces.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephblockpools.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephbucketnotifications.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephbuckettopics.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephclients.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephclusters.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephcosidrivers.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephfilesystemmirrors.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephfilesystems.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephfilesystemsubvolumegroups.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephnfses.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephobjectrealms.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephobjectstores.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephobjectstoreusers.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephobjectzonegroups.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephobjectzones.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephrbdmirrors.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/objectbucketclaims.objectbucket.io created

customresourcedefinition.apiextensions.k8s.io/objectbuckets.objectbucket.io created

namespace/rook-ceph created

clusterrole.rbac.authorization.k8s.io/cephfs-csi-nodeplugin created

clusterrole.rbac.authorization.k8s.io/cephfs-external-provisioner-runner created

clusterrole.rbac.authorization.k8s.io/objectstorage-provisioner-role created

clusterrole.rbac.authorization.k8s.io/rbd-csi-nodeplugin created

clusterrole.rbac.authorization.k8s.io/rbd-external-provisioner-runner created

clusterrole.rbac.authorization.k8s.io/rook-ceph-cluster-mgmt created

clusterrole.rbac.authorization.k8s.io/rook-ceph-global created

clusterrole.rbac.authorization.k8s.io/rook-ceph-mgr-cluster created

clusterrole.rbac.authorization.k8s.io/rook-ceph-mgr-system created

clusterrole.rbac.authorization.k8s.io/rook-ceph-object-bucket created

clusterrole.rbac.authorization.k8s.io/rook-ceph-osd created

clusterrole.rbac.authorization.k8s.io/rook-ceph-system created

clusterrolebinding.rbac.authorization.k8s.io/cephfs-csi-nodeplugin-role created

clusterrolebinding.rbac.authorization.k8s.io/cephfs-csi-provisioner-role created

clusterrolebinding.rbac.authorization.k8s.io/objectstorage-provisioner-role-binding created

clusterrolebinding.rbac.authorization.k8s.io/rbd-csi-nodeplugin created

clusterrolebinding.rbac.authorization.k8s.io/rbd-csi-provisioner-role created

clusterrolebinding.rbac.authorization.k8s.io/rook-ceph-global created

clusterrolebinding.rbac.authorization.k8s.io/rook-ceph-mgr-cluster created

clusterrolebinding.rbac.authorization.k8s.io/rook-ceph-object-bucket created

clusterrolebinding.rbac.authorization.k8s.io/rook-ceph-osd created

clusterrolebinding.rbac.authorization.k8s.io/rook-ceph-system created

role.rbac.authorization.k8s.io/cephfs-external-provisioner-cfg created

role.rbac.authorization.k8s.io/rbd-csi-nodeplugin created

role.rbac.authorization.k8s.io/rbd-external-provisioner-cfg created

role.rbac.authorization.k8s.io/rook-ceph-cmd-reporter created

role.rbac.authorization.k8s.io/rook-ceph-mgr created

role.rbac.authorization.k8s.io/rook-ceph-osd created

role.rbac.authorization.k8s.io/rook-ceph-purge-osd created

role.rbac.authorization.k8s.io/rook-ceph-rgw created

role.rbac.authorization.k8s.io/rook-ceph-system created

rolebinding.rbac.authorization.k8s.io/cephfs-csi-provisioner-role-cfg created

rolebinding.rbac.authorization.k8s.io/rbd-csi-nodeplugin-role-cfg created

rolebinding.rbac.authorization.k8s.io/rbd-csi-provisioner-role-cfg created

rolebinding.rbac.authorization.k8s.io/rook-ceph-cluster-mgmt created

rolebinding.rbac.authorization.k8s.io/rook-ceph-cmd-reporter created

rolebinding.rbac.authorization.k8s.io/rook-ceph-mgr created

rolebinding.rbac.authorization.k8s.io/rook-ceph-mgr-system created

rolebinding.rbac.authorization.k8s.io/rook-ceph-osd created

rolebinding.rbac.authorization.k8s.io/rook-ceph-purge-osd created

rolebinding.rbac.authorization.k8s.io/rook-ceph-rgw created

rolebinding.rbac.authorization.k8s.io/rook-ceph-system created

serviceaccount/objectstorage-provisioner created

serviceaccount/rook-ceph-cmd-reporter created

serviceaccount/rook-ceph-mgr created

serviceaccount/rook-ceph-osd created

serviceaccount/rook-ceph-purge-osd created

serviceaccount/rook-ceph-rgw created

serviceaccount/rook-ceph-system created

serviceaccount/rook-csi-cephfs-plugin-sa created

serviceaccount/rook-csi-cephfs-provisioner-sa created

serviceaccount/rook-csi-rbd-plugin-sa created

serviceaccount/rook-csi-rbd-provisioner-sa created部署Rook Operator组件,该组件为Rook与Kubernetes 交互的组件,整个集群只需要一个副本。Rook Operator 的配置在Ceph集群安装后不能修改,否则Rook会删除Ceph集群并重建。修改operator.yaml的配置

vim operator.yaml

#需配置镜像加速

# these images to the desired release of the CSI driver.

110 # ROOK_CSI_CEPH_IMAGE: "quay.io/cephcsi/cephcsi:v3.9.0"

111 # ROOK_CSI_REGISTRAR_IMAGE: "registry.k8s.io/sig-storage/csi-node-driver-registrar:v2.8.0"

112 # ROOK_CSI_RESIZER_IMAGE: "registry.k8s.io/sig-storage/csi-resizer:v1.8.0"

113 # ROOK_CSI_PROVISIONER_IMAGE: "registry.k8s.io/sig-storage/csi-provisioner:v3.5.0"

114 # ROOK_CSI_SNAPSHOTTER_IMAGE: "registry.k8s.io/sig-storage/csi-snapshotter:v6.2.2"

115 # ROOK_CSI_ATTACHER_IMAGE: "registry.k8s.io/sig-storage/csi-attacher:v4.3.0"

#生产环境一般将裸设备自动发现开关设置true

498 ROOK_ENABLE_DISCOVERY_DAEMON: "true"

#打开CephCSI提供者的节点(node)亲和性(去掉前面的注释即可,会同时作用于CephFS和RBD提供者,如果分开这两者的调度,打开后面专用的节点亲和性)

184 CSI_PROVISIONER_NODE_AFFINITY: "role=storage-node; storage=rook-ceph"

#如果CephFS和RBD提供者的调度亲和性要分开,则在上面的基础上继续打开他们专用的开关

209 #CSI_RBD_PROVISIONER_NODE_AFFINITY: "role=rbd-node"

226 #CSI_CEPHFS_PROVISIONER_NODE_AFFINITY: "role=cephfs-node"

#打开CephCSI插件的节点(node)亲和性(去掉前面的注释即可,会同时作用于CephFS和RBD提供者,如果分开这两者的调度,打开后面专用的节点亲和性)

196 CSI_PLUGIN_NODE_AFFINITY: "role=storage-node; storage=rook-ceph"

#如果CephFS和RBD提供者的调度亲和性要分开,则在上面的基础上继续打开他们专用的开关

217 # CSI_RBD_PLUGIN_NODE_AFFINITY: "role=rbd-node"

234 # CSI_CEPHFS_PLUGIN_NODE_AFFINITY: "role=cephfs-node"

#生产环境一般打开裸设备自动发现守护进程

ROOK_ENABLE_DISCOVERY_DAEMON: "true"

#同时打开发现代理的节点亲和性环境变量修改完成后,根据节点标签亲和性设置,

[root@master-1-230 ~]# kubectl label nodes node-1-231 node-1-232 node-1-233 role=storage-node

node/node-1-231 labeled

node/node-1-232 labeled

node/node-1-233 labeled

[root@master-1-230 ~]# kubectl label nodes node-1-231 node-1-232 node-1-233 storage=rook-ceph

node/node-1-231 labeled

node/node-1-232 labeled

node/node-1-233 labeled修改完成后,在master节点部署Rook Ceph Operator

cat operator.yaml

[root@master-1-230 examples]# cat operator.yaml

#################################################################################################################

# The deployment for the rook operator

# Contains the common settings for most Kubernetes deployments.

# For example, to create the rook-ceph cluster:

# kubectl create -f crds.yaml -f common.yaml -f operator.yaml

# kubectl create -f cluster.yaml

#

# Also see other operator sample files for variations of operator.yaml:

# - operator-openshift.yaml: Common settings for running in OpenShift

###############################################################################################################

# Rook Ceph Operator Config ConfigMap

# Use this ConfigMap to override Rook-Ceph Operator configurations.

# NOTE! Precedence will be given to this config if the same Env Var config also exists in the

# Operator Deployment.

# To move a configuration(s) from the Operator Deployment to this ConfigMap, add the config

# here. It is recommended to then remove it from the Deployment to eliminate any future confusion.

kind: ConfigMap

apiVersion: v1

metadata:

name: rook-ceph-operator-config

# should be in the namespace of the operator

namespace: rook-ceph # namespace:operator

data:

# The logging level for the operator: ERROR | WARNING | INFO | DEBUG

ROOK_LOG_LEVEL: "INFO"

# Allow using loop devices for osds in test clusters.

ROOK_CEPH_ALLOW_LOOP_DEVICES: "false"

# Enable the CSI driver.

# To run the non-default version of the CSI driver, see the override-able image properties in operator.yaml

ROOK_CSI_ENABLE_CEPHFS: "true"

# Enable the default version of the CSI RBD driver. To start another version of the CSI driver, see image properties below.

ROOK_CSI_ENABLE_RBD: "true"

# Enable the CSI NFS driver. To start another version of the CSI driver, see image properties below.

ROOK_CSI_ENABLE_NFS: "false"

ROOK_CSI_ENABLE_GRPC_METRICS: "false"

# Set to true to enable Ceph CSI pvc encryption support.

CSI_ENABLE_ENCRYPTION: "false"

# Set to true to enable host networking for CSI CephFS and RBD nodeplugins. This may be necessary

# in some network configurations where the SDN does not provide access to an external cluster or

# there is significant drop in read/write performance.

# CSI_ENABLE_HOST_NETWORK: "true"

# Set to true to enable adding volume metadata on the CephFS subvolume and RBD images.

# Not all users might be interested in getting volume/snapshot details as metadata on CephFS subvolume and RBD images.

# Hence enable metadata is false by default.

# CSI_ENABLE_METADATA: "true"

# cluster name identifier to set as metadata on the CephFS subvolume and RBD images. This will be useful in cases

# like for example, when two container orchestrator clusters (Kubernetes/OCP) are using a single ceph cluster.

# CSI_CLUSTER_NAME: "my-prod-cluster"

# Set logging level for cephCSI containers maintained by the cephCSI.

# Supported values from 0 to 5. 0 for general useful logs, 5 for trace level verbosity.

# CSI_LOG_LEVEL: "0"

# Set logging level for Kubernetes-csi sidecar containers.

# Supported values from 0 to 5. 0 for general useful logs (the default), 5 for trace level verbosity.

# CSI_SIDECAR_LOG_LEVEL: "0"

# Set replicas for csi provisioner deployment.

CSI_PROVISIONER_REPLICAS: "2"

# OMAP generator will generate the omap mapping between the PV name and the RBD image.

# CSI_ENABLE_OMAP_GENERATOR need to be enabled when we are using rbd mirroring feature.

# By default OMAP generator sidecar is deployed with CSI provisioner pod, to disable

# it set it to false.

# CSI_ENABLE_OMAP_GENERATOR: "false"

# set to false to disable deployment of snapshotter container in CephFS provisioner pod.

CSI_ENABLE_CEPHFS_SNAPSHOTTER: "true"

# set to false to disable deployment of snapshotter container in NFS provisioner pod.

CSI_ENABLE_NFS_SNAPSHOTTER: "true"

# set to false to disable deployment of snapshotter container in RBD provisioner pod.

CSI_ENABLE_RBD_SNAPSHOTTER: "true"

# Enable cephfs kernel driver instead of ceph-fuse.

# If you disable the kernel client, your application may be disrupted during upgrade.

# See the upgrade guide: https://rook.io/docs/rook/latest/ceph-upgrade.html

# NOTE! cephfs quota is not supported in kernel version < 4.17

CSI_FORCE_CEPHFS_KERNEL_CLIENT: "true"

# (Optional) policy for modifying a volume's ownership or permissions when the RBD PVC is being mounted.

# supported values are documented at https://kubernetes-csi.github.io/docs/support-fsgroup.html

CSI_RBD_FSGROUPPOLICY: "File"

# (Optional) policy for modifying a volume's ownership or permissions when the CephFS PVC is being mounted.

# supported values are documented at https://kubernetes-csi.github.io/docs/support-fsgroup.html

CSI_CEPHFS_FSGROUPPOLICY: "File"

# (Optional) policy for modifying a volume's ownership or permissions when the NFS PVC is being mounted.

# supported values are documented at https://kubernetes-csi.github.io/docs/support-fsgroup.html

CSI_NFS_FSGROUPPOLICY: "File"

# (Optional) Allow starting unsupported ceph-csi image

ROOK_CSI_ALLOW_UNSUPPORTED_VERSION: "false"

# (Optional) control the host mount of /etc/selinux for csi plugin pods.

CSI_PLUGIN_ENABLE_SELINUX_HOST_MOUNT: "false"

# The default version of CSI supported by Rook will be started. To change the version

# of the CSI driver to something other than what is officially supported, change

# these images to the desired release of the CSI driver.

# ROOK_CSI_CEPH_IMAGE: "quay.io/cephcsi/cephcsi:v3.9.0"

# ROOK_CSI_REGISTRAR_IMAGE: "registry.k8s.io/sig-storage/csi-node-driver-registrar:v2.8.0"

# ROOK_CSI_RESIZER_IMAGE: "registry.k8s.io/sig-storage/csi-resizer:v1.8.0"

# ROOK_CSI_PROVISIONER_IMAGE: "registry.k8s.io/sig-storage/csi-provisioner:v3.5.0"

# ROOK_CSI_SNAPSHOTTER_IMAGE: "registry.k8s.io/sig-storage/csi-snapshotter:v6.2.2"

# ROOK_CSI_ATTACHER_IMAGE: "registry.k8s.io/sig-storage/csi-attacher:v4.3.0"

# To indicate the image pull policy to be applied to all the containers in the csi driver pods.

# ROOK_CSI_IMAGE_PULL_POLICY: "IfNotPresent"

# (Optional) set user created priorityclassName for csi plugin pods.

CSI_PLUGIN_PRIORITY_CLASSNAME: "system-node-critical"

# (Optional) set user created priorityclassName for csi provisioner pods.

CSI_PROVISIONER_PRIORITY_CLASSNAME: "system-cluster-critical"

# CSI CephFS plugin daemonset update strategy, supported values are OnDelete and RollingUpdate.

# Default value is RollingUpdate.

# CSI_CEPHFS_PLUGIN_UPDATE_STRATEGY: "OnDelete"

# A maxUnavailable parameter of CSI cephFS plugin daemonset update strategy.

# Default value is 1.

# CSI_CEPHFS_PLUGIN_UPDATE_STRATEGY_MAX_UNAVAILABLE: "1"

# CSI RBD plugin daemonset update strategy, supported values are OnDelete and RollingUpdate.

# Default value is RollingUpdate.

# CSI_RBD_PLUGIN_UPDATE_STRATEGY: "OnDelete"

# A maxUnavailable parameter of CSI RBD plugin daemonset update strategy.

# Default value is 1.

# CSI_RBD_PLUGIN_UPDATE_STRATEGY_MAX_UNAVAILABLE: "1"

# CSI NFS plugin daemonset update strategy, supported values are OnDelete and RollingUpdate.

# Default value is RollingUpdate.

# CSI_NFS_PLUGIN_UPDATE_STRATEGY: "OnDelete"

# kubelet directory path, if kubelet configured to use other than /var/lib/kubelet path.

# ROOK_CSI_KUBELET_DIR_PATH: "/var/lib/kubelet"

# Labels to add to the CSI CephFS Deployments and DaemonSets Pods.

# ROOK_CSI_CEPHFS_POD_LABELS: "key1=value1,key2=value2"

# Labels to add to the CSI RBD Deployments and DaemonSets Pods.

# ROOK_CSI_RBD_POD_LABELS: "key1=value1,key2=value2"

# Labels to add to the CSI NFS Deployments and DaemonSets Pods.

# ROOK_CSI_NFS_POD_LABELS: "key1=value1,key2=value2"

# (Optional) CephCSI CephFS plugin Volumes

# CSI_CEPHFS_PLUGIN_VOLUME: |

# - name: lib-modules

# hostPath:

# path: /run/current-system/kernel-modules/lib/modules/

# - name: host-nix

# hostPath:

# path: /nix

# (Optional) CephCSI CephFS plugin Volume mounts

# CSI_CEPHFS_PLUGIN_VOLUME_MOUNT: |

# - name: host-nix

# mountPath: /nix

# readOnly: true

# (Optional) CephCSI RBD plugin Volumes

# CSI_RBD_PLUGIN_VOLUME: |

# - name: lib-modules

# hostPath:

# path: /run/current-system/kernel-modules/lib/modules/

# - name: host-nix

# hostPath:

# path: /nix

# (Optional) CephCSI RBD plugin Volume mounts

# CSI_RBD_PLUGIN_VOLUME_MOUNT: |

# - name: host-nix

# mountPath: /nix

# readOnly: true

# (Optional) CephCSI provisioner NodeAffinity (applied to both CephFS and RBD provisioner).

CSI_PROVISIONER_NODE_AFFINITY: "role=storage-node; storage=rook-ceph"

# (Optional) CephCSI provisioner tolerations list(applied to both CephFS and RBD provisioner).

# Put here list of taints you want to tolerate in YAML format.

# CSI provisioner would be best to start on the same nodes as other ceph daemons.

# CSI_PROVISIONER_TOLERATIONS: |

# - effect: NoSchedule

# key: node-role.kubernetes.io/control-plane

# operator: Exists

# - effect: NoExecute

# key: node-role.kubernetes.io/etcd

# operator: Exists

# (Optional) CephCSI plugin NodeAffinity (applied to both CephFS and RBD plugin).

CSI_PLUGIN_NODE_AFFINITY: "role=storage-node; storage=rook-ceph"

# (Optional) CephCSI plugin tolerations list(applied to both CephFS and RBD plugin).

# Put here list of taints you want to tolerate in YAML format.

# CSI plugins need to be started on all the nodes where the clients need to mount the storage.

# CSI_PLUGIN_TOLERATIONS: |

# - effect: NoSchedule

# key: node-role.kubernetes.io/control-plane

# operator: Exists

# - effect: NoExecute

# key: node-role.kubernetes.io/etcd

# operator: Exists

# (Optional) CephCSI RBD provisioner NodeAffinity (if specified, overrides CSI_PROVISIONER_NODE_AFFINITY).

# CSI_RBD_PROVISIONER_NODE_AFFINITY: "role=rbd-node"

# (Optional) CephCSI RBD provisioner tolerations list(if specified, overrides CSI_PROVISIONER_TOLERATIONS).

# Put here list of taints you want to tolerate in YAML format.

# CSI provisioner would be best to start on the same nodes as other ceph daemons.

# CSI_RBD_PROVISIONER_TOLERATIONS: |

# - key: node.rook.io/rbd

# operator: Exists

# (Optional) CephCSI RBD plugin NodeAffinity (if specified, overrides CSI_PLUGIN_NODE_AFFINITY).

# CSI_RBD_PLUGIN_NODE_AFFINITY: "role=rbd-node"

# (Optional) CephCSI RBD plugin tolerations list(if specified, overrides CSI_PLUGIN_TOLERATIONS).

# Put here list of taints you want to tolerate in YAML format.

# CSI plugins need to be started on all the nodes where the clients need to mount the storage.

# CSI_RBD_PLUGIN_TOLERATIONS: |

# - key: node.rook.io/rbd

# operator: Exists

# (Optional) CephCSI CephFS provisioner NodeAffinity (if specified, overrides CSI_PROVISIONER_NODE_AFFINITY).

# CSI_CEPHFS_PROVISIONER_NODE_AFFINITY: "role=cephfs-node"

# (Optional) CephCSI CephFS provisioner tolerations list(if specified, overrides CSI_PROVISIONER_TOLERATIONS).

# Put here list of taints you want to tolerate in YAML format.

# CSI provisioner would be best to start on the same nodes as other ceph daemons.

# CSI_CEPHFS_PROVISIONER_TOLERATIONS: |

# - key: node.rook.io/cephfs

# operator: Exists

# (Optional) CephCSI CephFS plugin NodeAffinity (if specified, overrides CSI_PLUGIN_NODE_AFFINITY).

# CSI_CEPHFS_PLUGIN_NODE_AFFINITY: "role=cephfs-node"

# NOTE: Support for defining NodeAffinity for operators other than "In" and "Exists" requires the user to input a

# valid v1.NodeAffinity JSON or YAML string. For example, the following is valid YAML v1.NodeAffinity:

# CSI_CEPHFS_PLUGIN_NODE_AFFINITY: |

# requiredDuringSchedulingIgnoredDuringExecution:

# nodeSelectorTerms:

# - matchExpressions:

# - key: myKey

# operator: DoesNotExist

# (Optional) CephCSI CephFS plugin tolerations list(if specified, overrides CSI_PLUGIN_TOLERATIONS).

# Put here list of taints you want to tolerate in YAML format.

# CSI plugins need to be started on all the nodes where the clients need to mount the storage.

# CSI_CEPHFS_PLUGIN_TOLERATIONS: |

# - key: node.rook.io/cephfs

# operator: Exists

# (Optional) CephCSI NFS provisioner NodeAffinity (overrides CSI_PROVISIONER_NODE_AFFINITY).

# CSI_NFS_PROVISIONER_NODE_AFFINITY: "role=nfs-node"

# (Optional) CephCSI NFS provisioner tolerations list (overrides CSI_PROVISIONER_TOLERATIONS).

# Put here list of taints you want to tolerate in YAML format.

# CSI provisioner would be best to start on the same nodes as other ceph daemons.

# CSI_NFS_PROVISIONER_TOLERATIONS: |

# - key: node.rook.io/nfs

# operator: Exists

# (Optional) CephCSI NFS plugin NodeAffinity (overrides CSI_PLUGIN_NODE_AFFINITY).

# CSI_NFS_PLUGIN_NODE_AFFINITY: "role=nfs-node"

# (Optional) CephCSI NFS plugin tolerations list (overrides CSI_PLUGIN_TOLERATIONS).

# Put here list of taints you want to tolerate in YAML format.

# CSI plugins need to be started on all the nodes where the clients need to mount the storage.

# CSI_NFS_PLUGIN_TOLERATIONS: |

# - key: node.rook.io/nfs

# operator: Exists

# (Optional) CEPH CSI RBD provisioner resource requirement list, Put here list of resource

# requests and limits you want to apply for provisioner pod

#CSI_RBD_PROVISIONER_RESOURCE: |

# - name : csi-provisioner

# resource:

# requests:

# memory: 128Mi

# cpu: 100m

# limits:

# memory: 256Mi

# cpu: 200m

# - name : csi-resizer

# resource:

# requests:

# memory: 128Mi

# cpu: 100m

# limits:

# memory: 256Mi

# cpu: 200m

# - name : csi-attacher

# resource:

# requests:

# memory: 128Mi

# cpu: 100m

# limits:

# memory: 256Mi

# cpu: 200m

# - name : csi-snapshotter

# resource:

# requests:

# memory: 128Mi

# cpu: 100m

# limits:

# memory: 256Mi

# cpu: 200m

# - name : csi-rbdplugin

# resource:

# requests:

# memory: 512Mi

# cpu: 250m

# limits:

# memory: 1Gi

# cpu: 500m

# - name : csi-omap-generator

# resource:

# requests:

# memory: 512Mi

# cpu: 250m

# limits:

# memory: 1Gi

# cpu: 500m

# - name : liveness-prometheus

# resource:

# requests:

# memory: 128Mi

# cpu: 50m

# limits:

# memory: 256Mi

# cpu: 100m

# (Optional) CEPH CSI RBD plugin resource requirement list, Put here list of resource

# requests and limits you want to apply for plugin pod

#CSI_RBD_PLUGIN_RESOURCE: |

# - name : driver-registrar

# resource:

# requests:

# memory: 128Mi

# cpu: 50m

# limits:

# memory: 256Mi

# cpu: 100m

# - name : csi-rbdplugin

# resource:

# requests:

# memory: 512Mi

# cpu: 250m

# limits:

# memory: 1Gi

# cpu: 500m

# - name : liveness-prometheus

# resource:

# requests:

# memory: 128Mi

# cpu: 50m

# limits:

# memory: 256Mi

# cpu: 100m

# (Optional) CEPH CSI CephFS provisioner resource requirement list, Put here list of resource

# requests and limits you want to apply for provisioner pod

#CSI_CEPHFS_PROVISIONER_RESOURCE: |

# - name : csi-provisioner

# resource:

# requests:

# memory: 128Mi

# cpu: 100m

# limits:

# memory: 256Mi

# cpu: 200m

# - name : csi-resizer

# resource:

# requests:

# memory: 128Mi

# cpu: 100m

# limits:

# memory: 256Mi

# cpu: 200m

# - name : csi-attacher

# resource:

# requests:

# memory: 128Mi

# cpu: 100m

# limits:

# memory: 256Mi

# cpu: 200m

# - name : csi-snapshotter

# resource:

# requests:

# memory: 128Mi

# cpu: 100m

# limits:

# memory: 256Mi

# cpu: 200m

# - name : csi-cephfsplugin

# resource:

# requests:

# memory: 512Mi

# cpu: 250m

# limits:

# memory: 1Gi

# cpu: 500m

# - name : liveness-prometheus

# resource:

# requests:

# memory: 128Mi

# cpu: 50m

# limits:

# memory: 256Mi

# cpu: 100m

# (Optional) CEPH CSI CephFS plugin resource requirement list, Put here list of resource

# requests and limits you want to apply for plugin pod

#CSI_CEPHFS_PLUGIN_RESOURCE: |

# - name : driver-registrar

# resource:

# requests:

# memory: 128Mi

# cpu: 50m

# limits:

# memory: 256Mi

# cpu: 100m

# - name : csi-cephfsplugin

# resource:

# requests:

# memory: 512Mi

# cpu: 250m

# limits:

# memory: 1Gi

# cpu: 500m

# - name : liveness-prometheus

# resource:

# requests:

# memory: 128Mi

# cpu: 50m

# limits:

# memory: 256Mi

# cpu: 100m

# (Optional) CEPH CSI NFS provisioner resource requirement list, Put here list of resource

# requests and limits you want to apply for provisioner pod

# CSI_NFS_PROVISIONER_RESOURCE: |

# - name : csi-provisioner

# resource:

# requests:

# memory: 128Mi

# cpu: 100m

# limits:

# memory: 256Mi

# cpu: 200m

# - name : csi-nfsplugin

# resource:

# requests:

# memory: 512Mi

# cpu: 250m

# limits:

# memory: 1Gi

# cpu: 500m

# - name : csi-attacher

# resource:

# requests:

# memory: 128Mi

# cpu: 100m

# limits:

# memory: 256Mi

# cpu: 200m

# (Optional) CEPH CSI NFS plugin resource requirement list, Put here list of resource

# requests and limits you want to apply for plugin pod

# CSI_NFS_PLUGIN_RESOURCE: |

# - name : driver-registrar

# resource:

# requests:

# memory: 128Mi

# cpu: 50m

# limits:

# memory: 256Mi

# cpu: 100m

# - name : csi-nfsplugin

# resource:

# requests:

# memory: 512Mi

# cpu: 250m

# limits:

# memory: 1Gi

# cpu: 500m

# Configure CSI Ceph FS grpc and liveness metrics port

# Set to true to enable Ceph CSI liveness container.

CSI_ENABLE_LIVENESS: "false"

# CSI_CEPHFS_GRPC_METRICS_PORT: "9091"

# CSI_CEPHFS_LIVENESS_METRICS_PORT: "9081"

# Configure CSI RBD grpc and liveness metrics port

# CSI_RBD_GRPC_METRICS_PORT: "9090"

# CSI_RBD_LIVENESS_METRICS_PORT: "9080"

# CSIADDONS_PORT: "9070"

# Set CephFS Kernel mount options to use https://docs.ceph.com/en/latest/man/8/mount.ceph/#options

# Set to "ms_mode=secure" when connections.encrypted is enabled in CephCluster CR

# CSI_CEPHFS_KERNEL_MOUNT_OPTIONS: "ms_mode=secure"

# Whether the OBC provisioner should watch on the operator namespace or not, if not the namespace of the cluster will be used

ROOK_OBC_WATCH_OPERATOR_NAMESPACE: "true"

# Whether to start the discovery daemon to watch for raw storage devices on nodes in the cluster.

# This daemon does not need to run if you are only going to create your OSDs based on StorageClassDeviceSets with PVCs.

ROOK_ENABLE_DISCOVERY_DAEMON: "true"

# The timeout value (in seconds) of Ceph commands. It should be >= 1. If this variable is not set or is an invalid value, it's default to 15.

ROOK_CEPH_COMMANDS_TIMEOUT_SECONDS: "15"

# Enable the csi addons sidecar.

CSI_ENABLE_CSIADDONS: "false"

# Enable watch for faster recovery from rbd rwo node loss

ROOK_WATCH_FOR_NODE_FAILURE: "true"

# ROOK_CSIADDONS_IMAGE: "quay.io/csiaddons/k8s-sidecar:v0.7.0"

# The CSI GRPC timeout value (in seconds). It should be >= 120. If this variable is not set or is an invalid value, it's default to 150.

CSI_GRPC_TIMEOUT_SECONDS: "150"

ROOK_DISABLE_ADMISSION_CONTROLLER: "true"

# Enable topology based provisioning.

CSI_ENABLE_TOPOLOGY: "false"

# Domain labels define which node labels to use as domains

# for CSI nodeplugins to advertise their domains

# NOTE: the value here serves as an example and needs to be

# updated with node labels that define domains of interest

# CSI_TOPOLOGY_DOMAIN_LABELS: "kubernetes.io/hostname,topology.kubernetes.io/zone,topology.rook.io/rack"

# Enable read affinity for RBD volumes. Recommended to

# set to true if running kernel 5.8 or newer.

CSI_ENABLE_READ_AFFINITY: "false"

# CRUSH location labels define which node labels to use

# as CRUSH location. This should correspond to the values set in

# the CRUSH map.

# Defaults to all the labels mentioned in

# https://rook.io/docs/rook/latest/CRDs/Cluster/ceph-cluster-crd/#osd-topology

# CSI_CRUSH_LOCATION_LABELS: "kubernetes.io/hostname,topology.kubernetes.io/zone,topology.rook.io/rack"

# Whether to skip any attach operation altogether for CephCSI PVCs.

# See more details [here](https://kubernetes-csi.github.io/docs/skip-attach.html#skip-attach-with-csi-driver-object).

# If set to false it skips the volume attachments and makes the creation of pods using the CephCSI PVC fast.

# **WARNING** It's highly discouraged to use this for RWO volumes. for RBD PVC it can cause data corruption,

# csi-addons operations like Reclaimspace and PVC Keyrotation will also not be supported if set to false

# since we'll have no VolumeAttachments to determine which node the PVC is mounted on.

# Refer to this [issue](https://github.com/kubernetes/kubernetes/issues/103305) for more details.

CSI_CEPHFS_ATTACH_REQUIRED: "true"

CSI_RBD_ATTACH_REQUIRED: "true"

CSI_NFS_ATTACH_REQUIRED: "true"

# Rook Discover toleration. Will tolerate all taints with all keys.

# (Optional) Rook Discover tolerations list. Put here list of taints you want to tolerate in YAML format.

# DISCOVER_TOLERATIONS: |

# - effect: NoSchedule

# key: node-role.kubernetes.io/control-plane

# operator: Exists

# - effect: NoExecute

# key: node-role.kubernetes.io/etcd

# operator: Exists

# (Optional) Rook Discover priority class name to set on the pod(s)

# DISCOVER_PRIORITY_CLASS_NAME: "<PriorityClassName>"

# (Optional) Discover Agent NodeAffinity.

DISCOVER_AGENT_NODE_AFFINITY: |

requiredDuringSchedulingIgnoredDuringExecution:

nodeSelectorTerms:

- matchExpressions:

- key: role

operator: In

values:

- storage-node

- key: storage

operator: In

values:

- rook-ceph

# (Optional) Discover Agent Pod Labels.

# DISCOVER_AGENT_POD_LABELS: "key1=value1,key2=value2"

# Disable automatic orchestration when new devices are discovered

ROOK_DISABLE_DEVICE_HOTPLUG: "false"

# The duration between discovering devices in the rook-discover daemonset.

ROOK_DISCOVER_DEVICES_INTERVAL: "60m"

# DISCOVER_DAEMON_RESOURCES: |

# - name: DISCOVER_DAEMON_RESOURCES

# resources:

# limits:

# cpu: 500m

# memory: 512Mi

# requests:

# cpu: 100m

# memory: 128Mi

---

# OLM: BEGIN OPERATOR DEPLOYMENT

apiVersion: apps/v1

kind: Deployment

metadata:

name: rook-ceph-operator

namespace: rook-ceph # namespace:operator

labels:

operator: rook

storage-backend: ceph

app.kubernetes.io/name: rook-ceph

app.kubernetes.io/instance: rook-ceph

app.kubernetes.io/component: rook-ceph-operator

app.kubernetes.io/part-of: rook-ceph-operator

spec:

selector:

matchLabels:

app: rook-ceph-operator

strategy:

type: Recreate

replicas: 1

template:

metadata:

labels:

app: rook-ceph-operator

spec:

serviceAccountName: rook-ceph-system

containers:

- name: rook-ceph-operator

#image: rook/ceph:v1.12.8

image: docker.io/rook/ceph:v1.12.8

args: ["ceph", "operator"]

securityContext:

runAsNonRoot: true

runAsUser: 2016

runAsGroup: 2016

capabilities:

drop: ["ALL"]

volumeMounts:

- mountPath: /var/lib/rook

name: rook-config

- mountPath: /etc/ceph

name: default-config-dir

- mountPath: /etc/webhook

name: webhook-cert

ports:

- containerPort: 9443

name: https-webhook

protocol: TCP

env:

# If the operator should only watch for cluster CRDs in the same namespace, set this to "true".

# If this is not set to true, the operator will watch for cluster CRDs in all namespaces.

- name: ROOK_CURRENT_NAMESPACE_ONLY

value: "false"

# Whether to start pods as privileged that mount a host path, which includes the Ceph mon and osd pods.

# Set this to true if SELinux is enabled (e.g. OpenShift) to workaround the anyuid issues.

# For more details see https://github.com/rook/rook/issues/1314#issuecomment-355799641

- name: ROOK_HOSTPATH_REQUIRES_PRIVILEGED

value: "false"

# Provide customised regex as the values using comma. For eg. regex for rbd based volume, value will be like "(?i)rbd[0-9]+".

# In case of more than one regex, use comma to separate between them.

# Default regex will be "(?i)dm-[0-9]+,(?i)rbd[0-9]+,(?i)nbd[0-9]+"

# Add regex expression after putting a comma to blacklist a disk

# If value is empty, the default regex will be used.

- name: DISCOVER_DAEMON_UDEV_BLACKLIST

value: "(?i)dm-[0-9]+,(?i)rbd[0-9]+,(?i)nbd[0-9]+"

# Time to wait until the node controller will move Rook pods to other

# nodes after detecting an unreachable node.

# Pods affected by this setting are:

# mgr, rbd, mds, rgw, nfs, PVC based mons and osds, and ceph toolbox

# The value used in this variable replaces the default value of 300 secs

# added automatically by k8s as Toleration for

# <node.kubernetes.io/unreachable>

# The total amount of time to reschedule Rook pods in healthy nodes

# before detecting a <not ready node> condition will be the sum of:

# --> node-monitor-grace-period: 40 seconds (k8s kube-controller-manager flag)

# --> ROOK_UNREACHABLE_NODE_TOLERATION_SECONDS: 5 seconds

- name: ROOK_UNREACHABLE_NODE_TOLERATION_SECONDS

value: "5"

# The name of the node to pass with the downward API

- name: NODE_NAME

valueFrom:

fieldRef:

fieldPath: spec.nodeName

# The pod name to pass with the downward API

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

# The pod namespace to pass with the downward API

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

# Recommended resource requests and limits, if desired

#resources:

# limits:

# cpu: 500m

# memory: 512Mi

# requests:

# cpu: 100m

# memory: 128Mi

# Uncomment it to run lib bucket provisioner in multithreaded mode

#- name: LIB_BUCKET_PROVISIONER_THREADS

# value: "5"

# Uncomment it to run rook operator on the host network

#hostNetwork: true

volumes:

- name: rook-config

emptyDir: {}

- name: default-config-dir

emptyDir: {}

- name: webhook-cert

emptyDir: {}

# OLM: END OPERATOR DEPLOYMENT[root@master-1-230 examples]# kubectl apply -f operator.yaml

configmap/rook-ceph-operator-config created

deployment.apps/rook-ceph-operator created

[root@master-1-230 examples]# kubectl get pod -n rook-ceph

NAME READY STATUS RESTARTS AGE

rook-ceph-operator-9864d576b-mdj9r 1/1 Running 0 2m7s

rook-discover-7ngcr 1/1 Running 0 2m6s

rook-discover-dh2tx 1/1 Running 0 2m6s

rook-discover-hpx98 1/1 Running 0 2m6s检查rook-ceph-operator相关pod都运行正常,修改 rook/deploy/examples/cluster.yaml 文件

cat cluster.yaml

[root@master-1-230 examples]# cat cluster.yaml

#################################################################################################################

# Define the settings for the rook-ceph cluster with common settings for a production cluster.

# All nodes with available raw devices will be used for the Ceph cluster. At least three nodes are required

# in this example. See the documentation for more details on storage settings available.

# For example, to create the cluster:

# kubectl create -f crds.yaml -f common.yaml -f operator.yaml

# kubectl create -f cluster.yaml

#################################################################################################################

apiVersion: ceph.rook.io/v1

kind: CephCluster

metadata:

name: rook-ceph

namespace: rook-ceph # namespace:cluster

spec:

cephVersion:

# The container image used to launch the Ceph daemon pods (mon, mgr, osd, mds, rgw).

# v16 is Pacific, and v17 is Quincy.

# RECOMMENDATION: In production, use a specific version tag instead of the general v17 flag, which pulls the latest release and could result in different

# versions running within the cluster. See tags available at https://hub.docker.com/r/ceph/ceph/tags/.

# If you want to be more precise, you can always use a timestamp tag such quay.io/ceph/ceph:v17.2.6-20230410

# This tag might not contain a new Ceph version, just security fixes from the underlying operating system, which will reduce vulnerabilities

image: quay.io/ceph/ceph:v17.2.6

# Whether to allow unsupported versions of Ceph. Currently `pacific`, `quincy`, and `reef` are supported.

# Future versions such as `squid` (v19) would require this to be set to `true`.

# Do not set to true in production.

allowUnsupported: false

# The path on the host where configuration files will be persisted. Must be specified.

# Important: if you reinstall the cluster, make sure you delete this directory from each host or else the mons will fail to start on the new cluster.

# In Minikube, the '/data' directory is configured to persist across reboots. Use "/data/rook" in Minikube environment.

dataDirHostPath: /var/lib/rook

# Whether or not upgrade should continue even if a check fails

# This means Ceph's status could be degraded and we don't recommend upgrading but you might decide otherwise

# Use at your OWN risk

# To understand Rook's upgrade process of Ceph, read https://rook.io/docs/rook/latest/ceph-upgrade.html#ceph-version-upgrades

skipUpgradeChecks: false

# Whether or not continue if PGs are not clean during an upgrade

continueUpgradeAfterChecksEvenIfNotHealthy: false

# WaitTimeoutForHealthyOSDInMinutes defines the time (in minutes) the operator would wait before an OSD can be stopped for upgrade or restart.

# If the timeout exceeds and OSD is not ok to stop, then the operator would skip upgrade for the current OSD and proceed with the next one

# if `continueUpgradeAfterChecksEvenIfNotHealthy` is `false`. If `continueUpgradeAfterChecksEvenIfNotHealthy` is `true`, then operator would

# continue with the upgrade of an OSD even if its not ok to stop after the timeout. This timeout won't be applied if `skipUpgradeChecks` is `true`.

# The default wait timeout is 10 minutes.

waitTimeoutForHealthyOSDInMinutes: 10

mon:

# Set the number of mons to be started. Generally recommended to be 3.

# For highest availability, an odd number of mons should be specified.

count: 3

# The mons should be on unique nodes. For production, at least 3 nodes are recommended for this reason.

# Mons should only be allowed on the same node for test environments where data loss is acceptable.

allowMultiplePerNode: false

mgr:

# When higher availability of the mgr is needed, increase the count to 2.

# In that case, one mgr will be active and one in standby. When Ceph updates which

# mgr is active, Rook will update the mgr services to match the active mgr.

count: 2

allowMultiplePerNode: false

modules:

# Several modules should not need to be included in this list. The "dashboard" and "monitoring" modules

# are already enabled by other settings in the cluster CR.

- name: pg_autoscaler

enabled: true

# enable the ceph dashboard for viewing cluster status

dashboard:

enabled: true

# serve the dashboard under a subpath (useful when you are accessing the dashboard via a reverse proxy)

# urlPrefix: /ceph-dashboard

# serve the dashboard at the given port.

# port: 8443

# serve the dashboard using SSL

ssl: true

# The url of the Prometheus instance

# prometheusEndpoint: <protocol>://<prometheus-host>:<port>

# Whether SSL should be verified if the Prometheus server is using https

# prometheusEndpointSSLVerify: false

# enable prometheus alerting for cluster

monitoring:

# requires Prometheus to be pre-installed

enabled: false

# Whether to disable the metrics reported by Ceph. If false, the prometheus mgr module and Ceph exporter are enabled.

# If true, the prometheus mgr module and Ceph exporter are both disabled. Default is false.

metricsDisabled: false

network:

connections:

# Whether to encrypt the data in transit across the wire to prevent eavesdropping the data on the network.

# The default is false. When encryption is enabled, all communication between clients and Ceph daemons, or between Ceph daemons will be encrypted.

# When encryption is not enabled, clients still establish a strong initial authentication and data integrity is still validated with a crc check.

# IMPORTANT: Encryption requires the 5.11 kernel for the latest nbd and cephfs drivers. Alternatively for testing only,

# you can set the "mounter: rbd-nbd" in the rbd storage class, or "mounter: fuse" in the cephfs storage class.

# The nbd and fuse drivers are *not* recommended in production since restarting the csi driver pod will disconnect the volumes.

encryption:

enabled: false

# Whether to compress the data in transit across the wire. The default is false.

# Requires Ceph Quincy (v17) or newer. Also see the kernel requirements above for encryption.

compression:

enabled: false

# Whether to require communication over msgr2. If true, the msgr v1 port (6789) will be disabled

# and clients will be required to connect to the Ceph cluster with the v2 port (3300).

# Requires a kernel that supports msgr v2 (kernel 5.11 or CentOS 8.4 or newer).

requireMsgr2: false

# enable host networking

#provider: host

# enable the Multus network provider

#provider: multus

#selectors:

# The selector keys are required to be `public` and `cluster`.

# Based on the configuration, the operator will do the following:

# 1. if only the `public` selector key is specified both public_network and cluster_network Ceph settings will listen on that interface

# 2. if both `public` and `cluster` selector keys are specified the first one will point to 'public_network' flag and the second one to 'cluster_network'

#

# In order to work, each selector value must match a NetworkAttachmentDefinition object in Multus

#

# public: public-conf --> NetworkAttachmentDefinition object name in Multus

# cluster: cluster-conf --> NetworkAttachmentDefinition object name in Multus

# Provide internet protocol version. IPv6, IPv4 or empty string are valid options. Empty string would mean IPv4

#ipFamily: "IPv6"

# Ceph daemons to listen on both IPv4 and Ipv6 networks

#dualStack: false

# Enable multiClusterService to export the mon and OSD services to peer cluster.

# This is useful to support RBD mirroring between two clusters having overlapping CIDRs.

# Ensure that peer clusters are connected using an MCS API compatible application, like Globalnet Submariner.

#multiClusterService:

# enabled: false

# enable the crash collector for ceph daemon crash collection

crashCollector:

disable: false

# Uncomment daysToRetain to prune ceph crash entries older than the

# specified number of days.

#daysToRetain: 30

# enable log collector, daemons will log on files and rotate

logCollector:

enabled: true

periodicity: daily # one of: hourly, daily, weekly, monthly

maxLogSize: 500M # SUFFIX may be 'M' or 'G'. Must be at least 1M.

# automate [data cleanup process](https://github.com/rook/rook/blob/master/Documentation/Storage-Configuration/ceph-teardown.md#delete-the-data-on-hosts) in cluster destruction.

cleanupPolicy:

# Since cluster cleanup is destructive to data, confirmation is required.

# To destroy all Rook data on hosts during uninstall, confirmation must be set to "yes-really-destroy-data".

# This value should only be set when the cluster is about to be deleted. After the confirmation is set,

# Rook will immediately stop configuring the cluster and only wait for the delete command.

# If the empty string is set, Rook will not destroy any data on hosts during uninstall.

confirmation: ""

# sanitizeDisks represents settings for sanitizing OSD disks on cluster deletion

sanitizeDisks:

# method indicates if the entire disk should be sanitized or simply ceph's metadata

# in both case, re-install is possible

# possible choices are 'complete' or 'quick' (default)

method: quick

# dataSource indicate where to get random bytes from to write on the disk

# possible choices are 'zero' (default) or 'random'

# using random sources will consume entropy from the system and will take much more time then the zero source

dataSource: zero

# iteration overwrite N times instead of the default (1)

# takes an integer value

iteration: 1

# allowUninstallWithVolumes defines how the uninstall should be performed

# If set to true, cephCluster deletion does not wait for the PVs to be deleted.

allowUninstallWithVolumes: false

# To control where various services will be scheduled by kubernetes, use the placement configuration sections below.

# The example under 'all' would have all services scheduled on kubernetes nodes labeled with 'role=storage-node' and

# tolerate taints with a key of 'storage-node'.

placement:

all:

nodeAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

nodeSelectorTerms:

- matchExpressions:

- key: role

operator: In

values:

- storage-node

# podAffinity:

# podAntiAffinity:

# topologySpreadConstraints:

# tolerations:

# - key: storage-node

# operator: Exists

# The above placement information can also be specified for mon, osd, and mgr components

# mon:

# Monitor deployments may contain an anti-affinity rule for avoiding monitor

# collocation on the same node. This is a required rule when host network is used

# or when AllowMultiplePerNode is false. Otherwise this anti-affinity rule is a

# preferred rule with weight: 50.

# osd:

# prepareosd:

# mgr:

# cleanup:

annotations:

# all:

# mon:

# osd:

# cleanup:

# prepareosd:

# clusterMetadata annotations will be applied to only `rook-ceph-mon-endpoints` configmap and the `rook-ceph-mon` and `rook-ceph-admin-keyring` secrets.

# And clusterMetadata annotations will not be merged with `all` annotations.

# clusterMetadata:

# kubed.appscode.com/sync: "true"

# If no mgr annotations are set, prometheus scrape annotations will be set by default.

# mgr:

labels:

# all:

# mon:

# osd:

# cleanup:

# mgr:

# prepareosd:

# monitoring is a list of key-value pairs. It is injected into all the monitoring resources created by operator.

# These labels can be passed as LabelSelector to Prometheus

# monitoring:

# crashcollector:

resources:

#The requests and limits set here, allow the mgr pod to use half of one CPU core and 1 gigabyte of memory

# mgr:

# limits:

# cpu: "500m"

# memory: "1024Mi"

# requests:

# cpu: "500m"

# memory: "1024Mi"

osd:

limits:

cpu: "800m"

memory: "2048Mi"

requests:

cpu: "800m"

memory: "2048Mi"

# The above example requests/limits can also be added to the other components

# mon:

# osd:

# For OSD it also is a possible to specify requests/limits based on device class

# osd-hdd:

# osd-ssd:

# osd-nvme:

# prepareosd:

# mgr-sidecar:

# crashcollector:

# logcollector:

# cleanup:

# exporter:

# The option to automatically remove OSDs that are out and are safe to destroy.

removeOSDsIfOutAndSafeToRemove: false

priorityClassNames:

#all: rook-ceph-default-priority-class

mon: system-node-critical

osd: system-node-critical

mgr: system-cluster-critical

#crashcollector: rook-ceph-crashcollector-priority-class

storage: # cluster level storage configuration and selection

useAllNodes: false

useAllDevices: false

#deviceFilter:

config:

# crushRoot: "custom-root" # specify a non-default root label for the CRUSH map

# metadataDevice: "md0" # specify a non-rotational storage so ceph-volume will use it as block db device of bluestore.

# databaseSizeMB: "1024" # uncomment if the disks are smaller than 100 GB

# osdsPerDevice: "1" # this value can be overridden at the node or device level

# encryptedDevice: "true" # the default value for this option is "false"

# Individual nodes and their config can be specified as well, but 'useAllNodes' above must be set to false. Then, only the named

# nodes below will be used as storage resources. Each node's 'name' field should match their 'kubernetes.io/hostname' label.

nodes:

- name: "node-1-231"

devices: # specific devices to use for storage can be specified for each node

- name: "sdb"

- name: "node-1-231"

devices: # specific devices to use for storage can be specified for each node

- name: "sdb"

- name: "node-1-231"

devices: # specific devices to use for storage can be specified for each node

- name: "sdb"

# - name: "nvme01" # multiple osds can be created on high performance devices

# config:

# osdsPerDevice: "5"

# - name: "/dev/disk/by-id/ata-ST4000DM004-XXXX" # devices can be specified using full udev paths

# config: # configuration can be specified at the node level which overrides the cluster level config

# - name: "172.17.4.301"

# deviceFilter: "^sd."

# when onlyApplyOSDPlacement is false, will merge both placement.All() and placement.osd

onlyApplyOSDPlacement: false

# Time for which an OSD pod will sleep before restarting, if it stopped due to flapping

# flappingRestartIntervalHours: 24

# The section for configuring management of daemon disruptions during upgrade or fencing.

disruptionManagement:

# If true, the operator will create and manage PodDisruptionBudgets for OSD, Mon, RGW, and MDS daemons. OSD PDBs are managed dynamically

# via the strategy outlined in the [design](https://github.com/rook/rook/blob/master/design/ceph/ceph-managed-disruptionbudgets.md). The operator will

# block eviction of OSDs by default and unblock them safely when drains are detected.

managePodBudgets: true

# A duration in minutes that determines how long an entire failureDomain like `region/zone/host` will be held in `noout` (in addition to the

# default DOWN/OUT interval) when it is draining. This is only relevant when `managePodBudgets` is `true`. The default value is `30` minutes.

osdMaintenanceTimeout: 30

# A duration in minutes that the operator will wait for the placement groups to become healthy (active+clean) after a drain was completed and OSDs came back up.

# Operator will continue with the next drain if the timeout exceeds. It only works if `managePodBudgets` is `true`.

# No values or 0 means that the operator will wait until the placement groups are healthy before unblocking the next drain.

pgHealthCheckTimeout: 0

# healthChecks

# Valid values for daemons are 'mon', 'osd', 'status'

healthCheck:

daemonHealth:

mon:

disabled: false

interval: 45s

osd:

disabled: false

interval: 60s

status:

disabled: false

interval: 60s

# Change pod liveness probe timing or threshold values. Works for all mon,mgr,osd daemons.

livenessProbe:

mon:

disabled: false

mgr:

disabled: false

osd:

disabled: false

# Change pod startup probe timing or threshold values. Works for all mon,mgr,osd daemons.

startupProbe:

mon:

disabled: false

mgr:

disabled: false

osd:

disabled: false主要修改集群osd资源限制。

resources:

#The requests and limits set here, allow the mgr pod to use half of one CPU core and 1 gigabyte of memory

# mgr:

# limits:

# cpu: "500m"

# memory: "1024Mi"

# requests:

# cpu: "500m"

# memory: "1024Mi"

osd:

limits:

cpu: "800m"

memory: "2048Mi"

requests:

cpu: "800m"

memory: "2048Mi"确保工作节点打上标签,部署yaml文件

[root@master-1-230 examples]# kubectl get nodes --show-labels

NAME STATUS ROLES AGE VERSION LABELS

master-1-230 Ready control-plane 15d v1.27.6 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=master-1-230,kubernetes.io/os=linux,node-role.kubernetes.io/control-plane=,node.kubernetes.io/exclude-from-external-load-balancers=

node-1-231 Ready <none> 15d v1.27.6 apptype=core,beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,ingress=true,kubernetes.io/arch=amd64,kubernetes.io/hostname=node-1-231,kubernetes.io/os=linux,role=storage-node,route-reflector=true,storage=rook-ceph

node-1-232 Ready <none> 15d v1.27.6 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,ingress=true,kubernetes.io/arch=amd64,kubernetes.io/hostname=node-1-232,kubernetes.io/os=linux,role=storage-node,route-reflector=true,storage=rook-ceph

node-1-233 Ready <none> 14d v1.27.6 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=node-1-233,kubernetes.io/os=linux,role=storage-node,storage=rook-ceph

[root@master-1-230 examples]# kubectl apply -f cluster.yaml

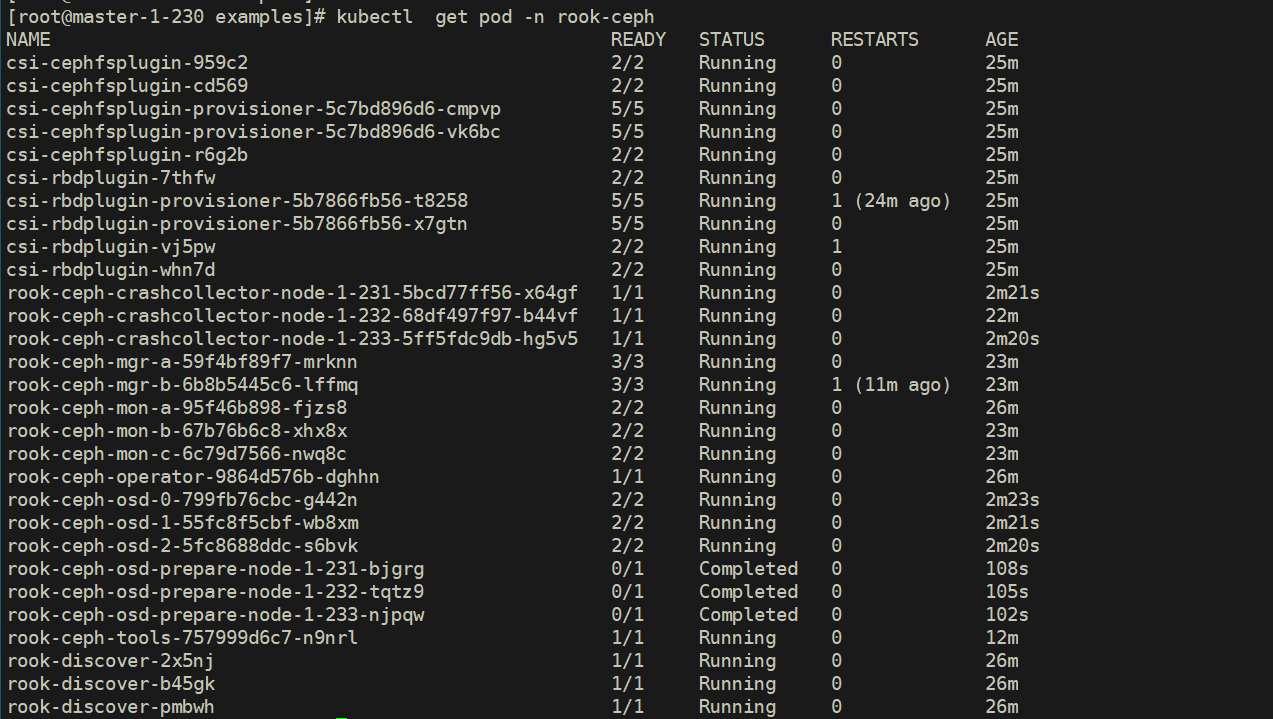

cephcluster.ceph.rook.io/rook-ceph created查看最终Pod的状态

没有发现以rook-ceph-osd-prepare pod。

[root@master-1-230 examples]# kubectl get pod -n rook-ceph

NAME READY STATUS RESTARTS AGE

csi-cephfsplugin-959c2 2/2 Running 0 25m

csi-cephfsplugin-cd569 2/2 Running 0 25m

csi-cephfsplugin-provisioner-5c7bd896d6-cmpvp 5/5 Running 0 25m

csi-cephfsplugin-provisioner-5c7bd896d6-vk6bc 5/5 Running 0 25m

csi-cephfsplugin-r6g2b 2/2 Running 0 25m

csi-rbdplugin-7thfw 2/2 Running 0 25m

csi-rbdplugin-provisioner-5b7866fb56-t8258 5/5 Running 1 (24m ago) 25m

csi-rbdplugin-provisioner-5b7866fb56-x7gtn 5/5 Running 0 25m

csi-rbdplugin-vj5pw 2/2 Running 1 25m

csi-rbdplugin-whn7d 2/2 Running 0 25m

rook-ceph-crashcollector-node-1-231-5bcd77ff56-x64gf 1/1 Running 0 2m21s

rook-ceph-crashcollector-node-1-232-68df497f97-b44vf 1/1 Running 0 22m

rook-ceph-crashcollector-node-1-233-5ff5fdc9db-hg5v5 1/1 Running 0 2m20s

rook-ceph-mgr-a-59f4bf89f7-mrknn 3/3 Running 0 23m

rook-ceph-mgr-b-6b8b5445c6-lffmq 3/3 Running 1 (11m ago) 23m

rook-ceph-mon-a-95f46b898-fjzs8 2/2 Running 0 26m

rook-ceph-mon-b-67b76b6c8-xhx8x 2/2 Running 0 23m

rook-ceph-mon-c-6c79d7566-nwq8c 2/2 Running 0 23m

rook-ceph-operator-9864d576b-dghhn 1/1 Running 0 26m

rook-ceph-osd-0-799fb76cbc-g442n 2/2 Running 0 2m23s

rook-ceph-osd-1-55fc8f5cbf-wb8xm 2/2 Running 0 2m21s

rook-ceph-osd-2-5fc8688ddc-s6bvk 2/2 Running 0 2m20s

rook-ceph-osd-prepare-node-1-231-bjgrg 0/1 Completed 0 108s

rook-ceph-osd-prepare-node-1-232-tqtz9 0/1 Completed 0 105s

rook-ceph-osd-prepare-node-1-233-njpqw 0/1 Completed 0 102s

rook-ceph-tools-757999d6c7-n9nrl 1/1 Running 0 12m

rook-discover-2x5nj 1/1 Running 0 26m

rook-discover-b45gk 1/1 Running 0 26m

rook-discover-pmbwh 1/1 Running 0 26m检查原因:

1、检查 Rook Operator 状态: 首先,请确保 Rook Operator 正在运行。运行以下命令检查 Rook Operator 的 Pod 是否正在运行:

[root@master-1-230 examples]# kubectl get pods -n rook-ceph -l app=rook-ceph-operator

NAME READY STATUS RESTARTS AGE

rook-ceph-operator-9864d576b-mdj9r 1/1 Running 0 10m2、检查集群状态: 使用以下命令检查 Rook Ceph 集群的状态:

[root@master-1-230 examples]# kubectl get cephclusters -n rook-ceph

NAME DATADIRHOSTPATH MONCOUNT AGE PHASE MESSAGE HEALTH EXTERNAL FSID

rook-ceph /var/lib/rook 3 7m38s Progressing Configuring Ceph Mons

[root@master-1-230 examples]# kubectl get cephclusters -n rook-ceph

NAME DATADIRHOSTPATH MONCOUNT AGE PHASE MESSAGE HEALTH EXTERNAL FSID

rook-ceph /var/lib/rook 3 14m Progressing Configuring Ceph OSDs

[root@master-1-230 examples]# kubectl get cephclusters -n rook-ceph

NAME DATADIRHOSTPATH MONCOUNT AGE PHASE MESSAGE HEALTH EXTERNAL FSID

rook-ceph /var/lib/rook 3 17m Progressing Configuring Ceph OSDs HEALTH_OK 29ac7b3c-9f78-4d1a-9874-838ba78d423a

[root@master-1-230 examples]# kubectl get cephclusters -n rook-ceph

NAME DATADIRHOSTPATH MONCOUNT AGE PHASE MESSAGE HEALTH EXTERNAL FSID

rook-ceph /var/lib/rook 3 35m Ready Cluster created successfully HEALTH_WARN 29ac7b3c-9f78-4d1a-9874-838ba78d423a在 Rook 部署 Ceph 时,Ceph 集群的状态有几个不同的阶段,主要包括以下几个阶段:

-

Pending(等待): 这个阶段表示 Rook 正在等待执行操作,通常是等待 Operator 的进一步指示或等待 Kubernetes 资源的创建。

-

Progressing(进行中): 这个阶段表示正在进行一些操作,比如配置 Monitors、创建 OSD 等。在这个阶段,Rook 正在自动执行配置任务,以确保 Ceph 集群的正常运行。

-

Ready(就绪): 当 Ceph 集群的所有组件都正常启动和运行时,状态将变为 "Ready"。这意味着整个 Ceph 集群已经成功初始化,并且可以开始使用。

在Rook中,Ceph集群的"Configuring Ceph OSDs"阶段表示Rook正在配置Ceph OSD(Object Storage Daemon)节点。这个阶段涉及一系列操作,以确保每个OSD节点都正确初始化并准备好加入Ceph集群。以下是此阶段可能涉及的一些操作:

-

OSD初始化: Rook会负责在每个节点上初始化OSD。这包括创建文件系统、挂载Ceph数据目录等。

-

Ceph OSD进程启动: 一旦OSD初始化完成,Rook会启动Ceph OSD进程。这将使节点成为Ceph集群的一部分,负责存储和管理数据。

-

OSD池的配置: Rook可能还涉及配置Ceph中的OSD池,以确保适当的数据分布和存储策略。

以上是pod完成后的状态,以rook-ceph-osd-prepare开头的pod 用于自动感知集群挂载硬盘,前面收到指定节点,所有这个不起作用。osd-01、osd-02、osd-03容器存在且正常,上述pod均正常启动,视为集群安装成功。

如果没有启动osd-01 这3个pod,需要检查日志

# Get the prepare pods in the cluster

[root@master-1-230 examples]# kubectl -n rook-ceph get pod -l app=rook-ceph-osd-prepare

NAME READY STATUS RESTARTS AGE

rook-ceph-osd-prepare-node-1-231-bjgrg 0/1 Completed 0 2m50s

rook-ceph-osd-prepare-node-1-232-tqtz9 0/1 Completed 0 2m47s

rook-ceph-osd-prepare-node-1-233-njpqw 0/1 Completed 0 2m44s

# view the logs for the node of interest in the "provision" container

[root@master-1-230 examples]# kubectl -n rook-ceph logs rook-ceph-osd-prepare-node-1-231-bjgrg provision

2023-11-27 12:46:59.288379 I | cephcmd: desired devices to configure osds: [{Name:sdb OSDsPerDevice:1 MetadataDevice: DatabaseSizeMB:0 DeviceClass: InitialWeight: IsFilter:false IsDevicePathFilter:false}]

2023-11-27 12:46:59.289162 I | rookcmd: starting Rook v1.12.8 with arguments '/rook/rook ceph osd provision'

2023-11-27 12:46:59.289171 I | rookcmd: flag values: --cluster-id=7760cd16-0f50-452b-817e-b2a264ef3d34, --cluster-name=rook-ceph, --data-device-filter=, --data-device-path-filter=, --data-devices=[{"id":"sdb","storeConfig":{"osdsPerDevice":1}}], --encrypted-device=false, --force-format=false, --help=false, --location=, --log-level=DEBUG, --metadata-device=, --node-name=node-1-231, --osd-crush-device-class=, --osd-crush-initial-weight=, --osd-database-size=0, --osd-store-type=bluestore, --osd-wal-size=576, --osds-per-device=1, --pvc-backed-osd=false, --replace-osd=-1

2023-11-27 12:46:59.289176 I | ceph-spec: parsing mon endpoints: b=10.107.141.230:6789,c=10.111.113.147:6789,a=10.109.192.103:6789

2023-11-27 12:46:59.298174 I | op-osd: CRUSH location=root=default host=node-1-231

2023-11-27 12:46:59.298189 I | cephcmd: crush location of osd: root=default host=node-1-231

2023-11-27 12:46:59.298201 D | exec: Running command: dmsetup version

2023-11-27 12:46:59.303187 I | cephosd: Library version: 1.02.181-RHEL8 (2021-10-20)参考:https://rook.github.io/docs/rook/latest-release/Troubleshooting/ceph-common-issues/#investigation_4

4、安装扩展

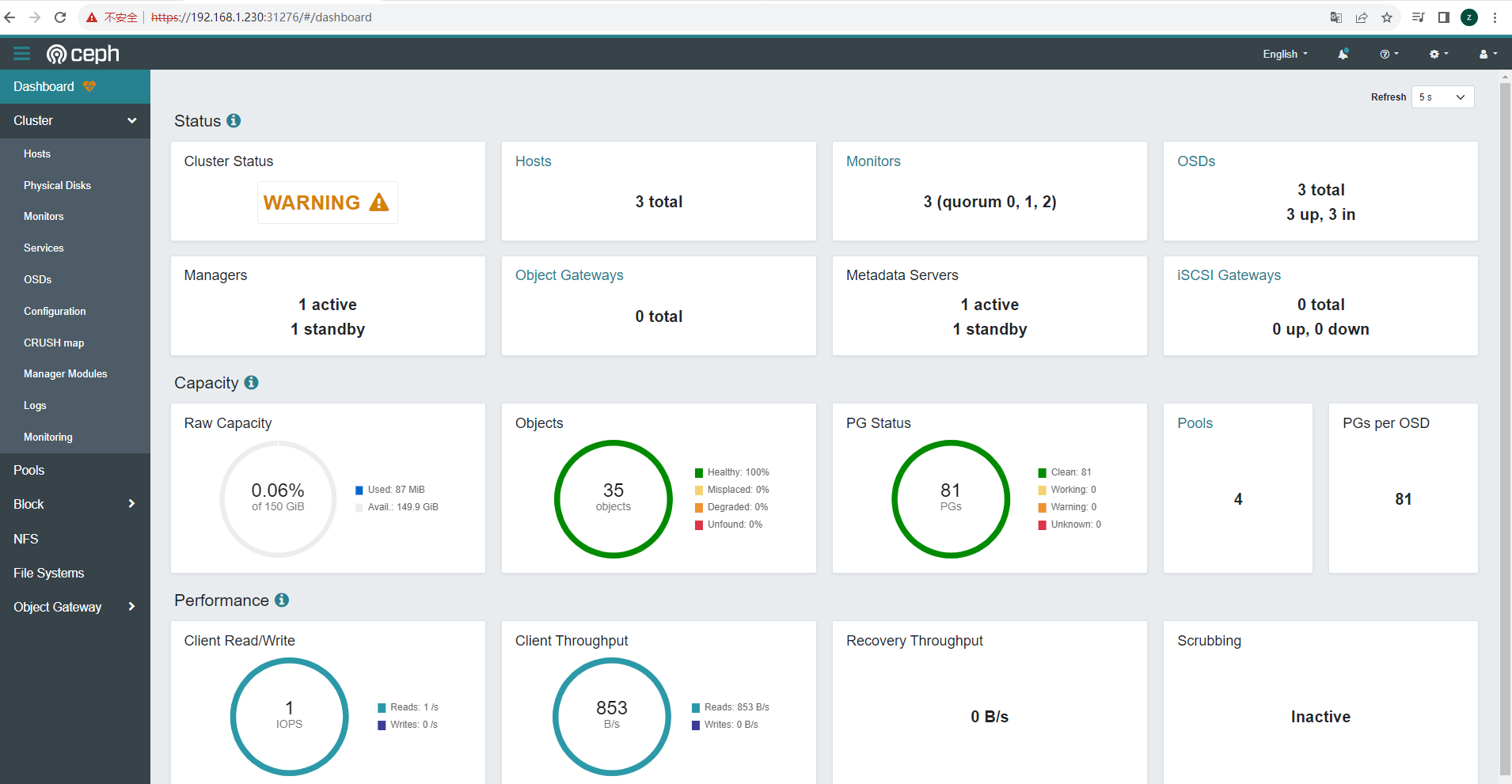

4.1、部署Ceph dashboard

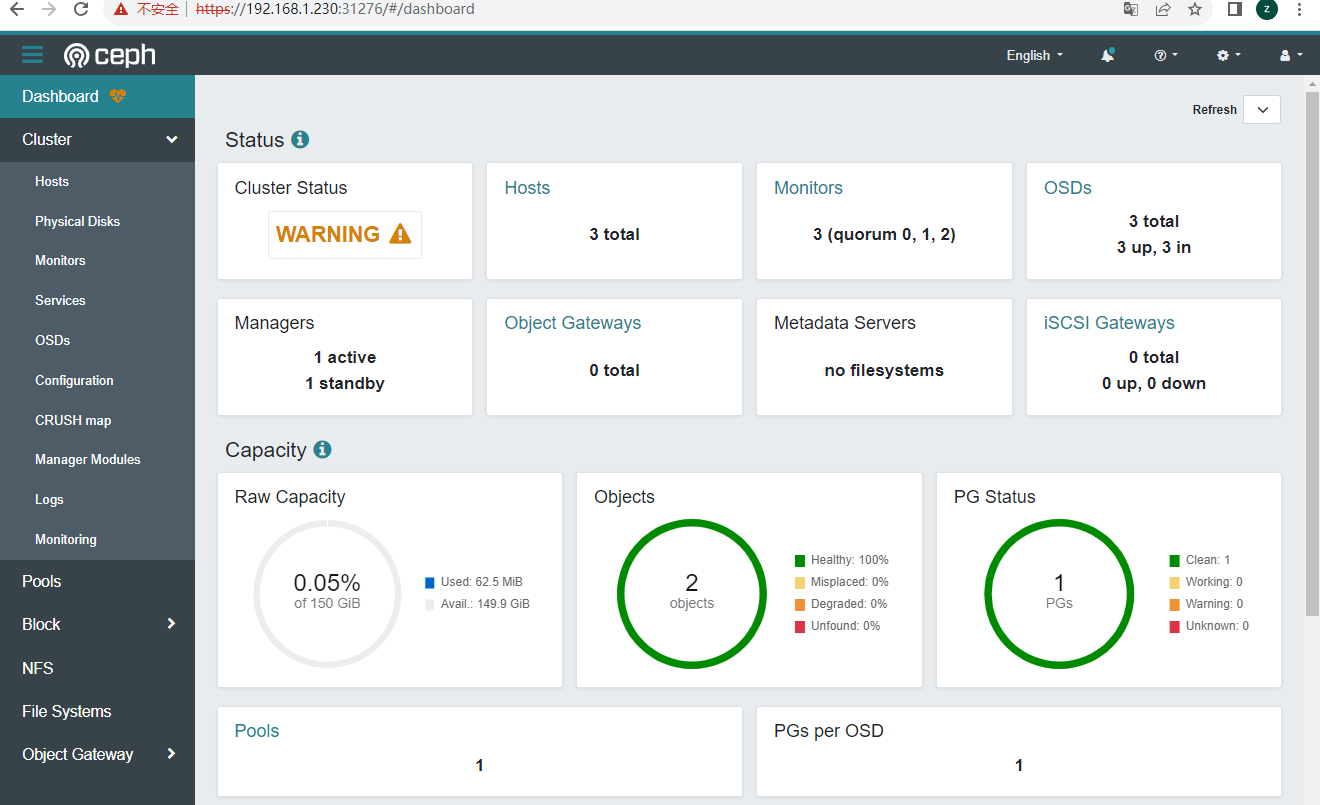

Ceph Dashnnoard 是一个内置的基于web的管理和监视应用程序,它是开源Ceph发行版的一部分。通过Dashboard可以获取Ceph集群的各种基本状态信息。

cd rook/deploy/examples

[root@master-1-230 examples]# kubectl apply -f dashboard-external-https.yaml

service/rook-ceph-mgr-dashboard-external-https created创建NodePort类型可以被外部访问

[root@master-1-230 examples]# kubectl get svc -n rook-ceph|grep dashboard

rook-ceph-mgr-dashboard ClusterIP 10.104.211.115 <none> 8443/TCP 7m55s

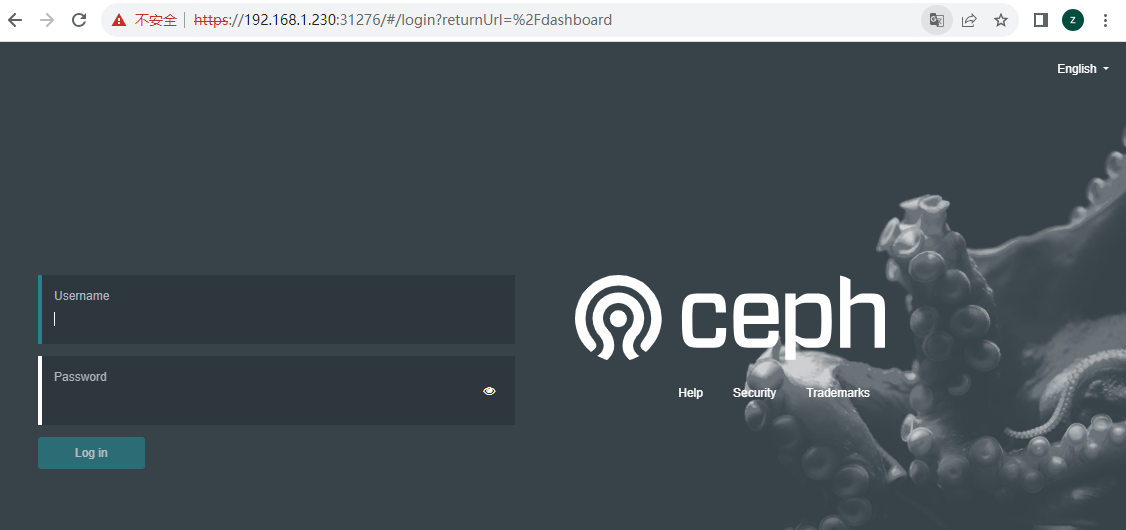

rook-ceph-mgr-dashboard-external-https NodePort 10.96.206.45 <none> 8443:31276/TCP 7s浏览器访问:https://192.168.1.230:31276/

Rook创建一个默认admin,并在运行Rook的命名空间生产一个名为rook-ceph-dashboard-admin-password的Secret,通过下面命令获取密码

[root@master-1-230 examples]# kubectl -n rook-ceph get secret rook-ceph-dashboard-password -o jsonpath="{['data']['password']}"|base64 --decode && echo

TK,hG.8@;uMa7>}o67)4

4.2 Rook 工具箱是一个包含用于Rook调试和测试的常用工具

cd rook/deploy/examples

[root@master-1-230 examples]# kubectl apply -f toolbox.yaml -n rook-ceph

deployment.apps/rook-ceph-tools created进入容器rook-ceph-tools 运行 :ceph -s

[root@master-1-230 ~]# kubectl exec -it `kubectl get pods -n rook-ceph|grep rook-ceph-tools|awk '{print $1}'` -n rook-ceph -- bash

bash-4.4$ ceph -s

cluster:

id: 1d32b39f-eaa1-43f0-a3f7-1b9ac1aa2865

health: HEALTH_WARN

2 daemons have recently crashed

services:

mon: 3 daemons, quorum a,b,c (age 25m)

mgr: a(active, since 5m), standbys: b

osd: 3 osds: 3 up (since 5m), 3 in (since 5m)

data:

pools: 1 pools, 1 pgs

objects: 2 objects, 449 KiB

usage: 62 MiB used, 150 GiB / 150 GiB avail

pgs: 1 active+clean

总结:

通过Rook在K8S集群中部署ceph服务,这种方式可以直接在生产环境使用。

- 线上生产环境如有公有云不建议自建CRPH集群

- 部署搭建只是第一步,后续维护及优化才是重点

三、基于Ceph的存储解决方案下

3、基于RBD/CephFS的StorageClass

3.1、部署RBD SrorageClass

Ceph可以同时提供对象存储RADOSGW、块存储RBD、文件系统存储CephFS。

RBD即RADOS Block Device 的简称,RBD块存储是最稳定且最常用的存储类型。

RBD块设备北路磁盘可以被挂载

RBD块设备具有快照、多副本、克隆和一致性等特性,数据以条带化的方式存储在Ceph集群的多个OSD中。注意:RBD只支持ReadWriteOnece存储类型!

1)创建StorageClass

cd rook/deploy/examples/csi/rbd

[root@master-1-230 rbd]# kubectl apply -f storageclass.yaml

cephblockpool.ceph.rook.io/replicapool created

storageclass.storage.k8s.io/rook-ceph-block created2)检查pool安装情况

[root@master-1-230 rbd]# kubectl -n rook-ceph exec -it $(kubectl -n rook-ceph get pod -l "app=rook-ceph-tools" -o jsonpath='{.items[0].metadata.name}') -- bash

bash-4.4$ ceph osd lspools

1 .mgr

2 replicapool

bash-4.4$ 3)查看StorageClass

[root@master-1-230 2.4]# kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

nfs-client k8s-sigs.io/nfs-subdir-external-provisioner Delete Immediate false 46h

nfs-storageclass k8s-sigs.io/nfs-subdir-external-provisioner Retain Immediate false 46h

rook-ceph-block rook-ceph.rbd.csi.ceph.com Delete Immediate true 2m45s4)将Ceph设置为默认存储卷

[root@master-1-230 rbd]# kubectl patch storageclass rook-ceph-block -p '{"metadata":{"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'

storageclass.storage.k8s.io/rook-ceph-block patched修改完成后再检查StorageClass状态(有default表识)

[root@master-1-230 2.4]# kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

nfs-client k8s-sigs.io/nfs-subdir-external-provisioner Delete Immediate false 46h

nfs-storageclass k8s-sigs.io/nfs-subdir-external-provisioner Retain Immediate false 46h

rook-ceph-block (default) rook-ceph.rbd.csi.ceph.com Delete Immediate true 7m49s5)测试验证

创建pvc指定storageClassName为rook-ceph-block

[root@master-1-230 2.4]# cat rbd-pvc.yml

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: my-mysql-data

spec:

accessModes:

- ReadWriteOnce

resources:

requests:

storage: 2Gi

storageClassName: rook-ceph-block

[root@master-1-230 2.4]# kubectl apply -f rbd-pvc.yml

persistentvolumeclaim/my-mysql-data created[root@master-1-230 2.4]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pvc-20a64f91-4637-4bf8-b33f-27597536f44f 1Gi RWX Retain Bound default/nginx-storage-test-pvc-nginx-storage-stat-1 nfs-storageclass 46h

pvc-5cb97247-3811-477c-9b29-5100456d244f 500Mi RWX Retain Bound default/test-pvc nfs-storageclass 46h

pvc-62ff0d61-f674-4fbb-843c-de8d314e0243 1Gi RWO Retain Bound default/www-nfs-web-0 nfs-storageclass 34h

pvc-68d96576-f53a-43b0-9ff7-ac95ca8c401b 500Mi RWX Retain Bound default/test-pvc01 nfs-storageclass 34h

pvc-6e7f4303-5969-42a2-89a8-46d02f91c6f8 2Gi RWO Delete Bound default/my-mysql-data rook-ceph-block 57s

pvc-d76f65f2-0637-404f-8d08-5f174a07630a 1Gi RWX Retain Bound default/nginx-storage-test-pvc-nginx-storage-stat-2 nfs-storageclass 46h

pvc-f1106903-53db-490b-ba5f-f5981b431063 1Gi RWX Retain Bound default/nginx-storage-test-pvc-nginx-storage-stat-0 nfs-storageclass 46h

[root@master-1-230 2.4]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

my-mysql-data Bound pvc-6e7f4303-5969-42a2-89a8-46d02f91c6f8 2Gi RWO rook-ceph-block 59s

nginx-storage-test-pvc-nginx-storage-stat-0 Bound pvc-f1106903-53db-490b-ba5f-f5981b431063 1Gi RWX nfs-storageclass 46h

nginx-storage-test-pvc-nginx-storage-stat-1 Bound pvc-20a64f91-4637-4bf8-b33f-27597536f44f 1Gi RWX nfs-storageclass 46h

nginx-storage-test-pvc-nginx-storage-stat-2 Bound pvc-d76f65f2-0637-404f-8d08-5f174a07630a 1Gi RWX nfs-storageclass 46h

test-pvc Bound pvc-5cb97247-3811-477c-9b29-5100456d244f 500Mi RWX nfs-storageclass 46h

test-pvc01 Bound pvc-68d96576-f53a-43b0-9ff7-ac95ca8c401b 500Mi RWX nfs-storageclass 34h

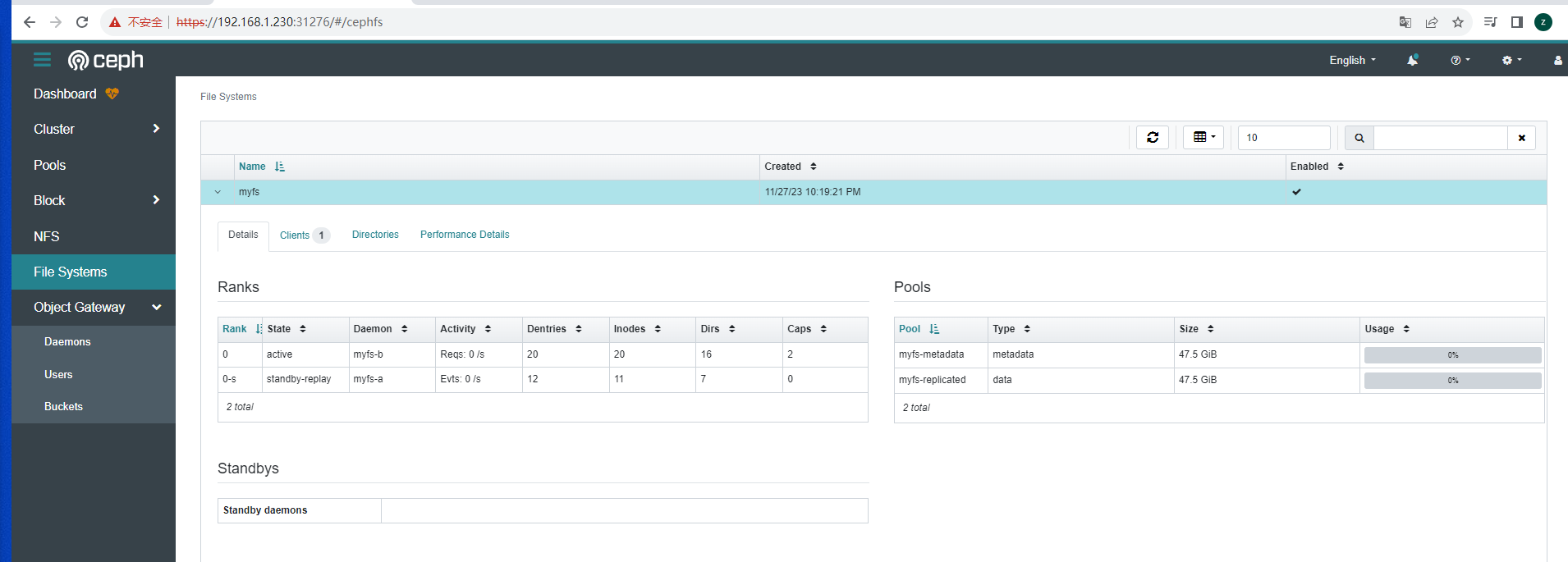

www-nfs-web-0 Bound pvc-62ff0d61-f674-4fbb-843c-de8d314e0243 1Gi RWO nfs-storageclass 34h3.2 部署CephFS StorageClass

Ceph允许用户挂载一个兼容posix的共享目录到多个主机,该存储和NFS共享存储以及CIFS共享目录类似

创建文件系统

cd rook/deploy/examples

[root@master-1-230 examples]# kubectl apply -f filesystem.yaml

cephfilesystem.ceph.rook.io/myfs created确认文件系统启动

[root@master-1-230 examples]# kubectl -n rook-ceph get pod -l app=rook-ceph-mds

NAME READY STATUS RESTARTS AGE

rook-ceph-mds-myfs-a-59ff4c8cbb-tdfnk 2/2 Running 0 85s

rook-ceph-mds-myfs-b-57db4d84fb-zsrvz 2/2 Running 0 84s查看文件系统详细状态

[root@master-1-230 ~]# kubectl exec -it `kubectl get pods -n rook-ceph|grep rook-ceph-tools|awk '{print $1}'` -n rook-ceph -- bash

bash-4.4$ ceph status

cluster:

id: 1d32b39f-eaa1-43f0-a3f7-1b9ac1aa2865

health: HEALTH_WARN

2 daemons have recently crashed

1 mgr modules have recently crashed

services:

mon: 3 daemons, quorum a,b,c (age 42m)

mgr: a(active, since 40m), standbys: b

mds: 1/1 daemons up, 1 hot standby

osd: 3 osds: 3 up (since 42m), 3 in (since 95m)

data:

volumes: 1/1 healthy

pools: 4 pools, 81 pgs

objects: 33 objects, 498 KiB

usage: 82 MiB used, 150 GiB / 150 GiB avail

pgs: 81 active+clean

io:

client: 853 B/s rd, 1 op/s rd, 0 op/s wr

bash-4.4$

1)创建StorageClass

cd rook/deploy/examples/csi/cephfs

[root@master-1-230 cephfs]# kubectl apply -f storageclass.yaml

storageclass.storage.k8s.io/rook-cephfs created2) 查看StorageClass

[root@master-1-230 cephfs]# kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

nfs-client k8s-sigs.io/nfs-subdir-external-provisioner Delete Immediate false 46h

nfs-storageclass k8s-sigs.io/nfs-subdir-external-provisioner Retain Immediate false 46h

rook-ceph-block (default) rook-ceph.rbd.csi.ceph.com Delete Immediate true 13m

rook-cephfs rook-ceph.cephfs.csi.ceph.com Delete Immediate true 32sceph的使用和rbd一样,指定storageClassName即可,续要注意的是rbd只支持ReadWriteOnce,cephfs能够支持ReadWriteMany

3)测试验证

[root@master-1-230 2.4]# cat cephfs-pvc.yml

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: redis-data-pvc

spec:

accessModes:

#- ReadWriteOnce

- ReadWriteMany

resources:

requests:

storage: 2Gi

storageClassName: rook-cephfs

[root@master-1-230 2.4]# kubectl apply -f cephfs-pvc.yml