作业①

实验内容

要求

指定一个网站,爬取这个网站中的所有的所有图片,例如:中国气象网(http://www.weather.com.cn)。使用scrapy框架分别实现单线程和多线程的方式爬取。

–务必控制总页数(学号尾数2位)、总下载的图片数量(尾数后3位)等限制爬取的措施。

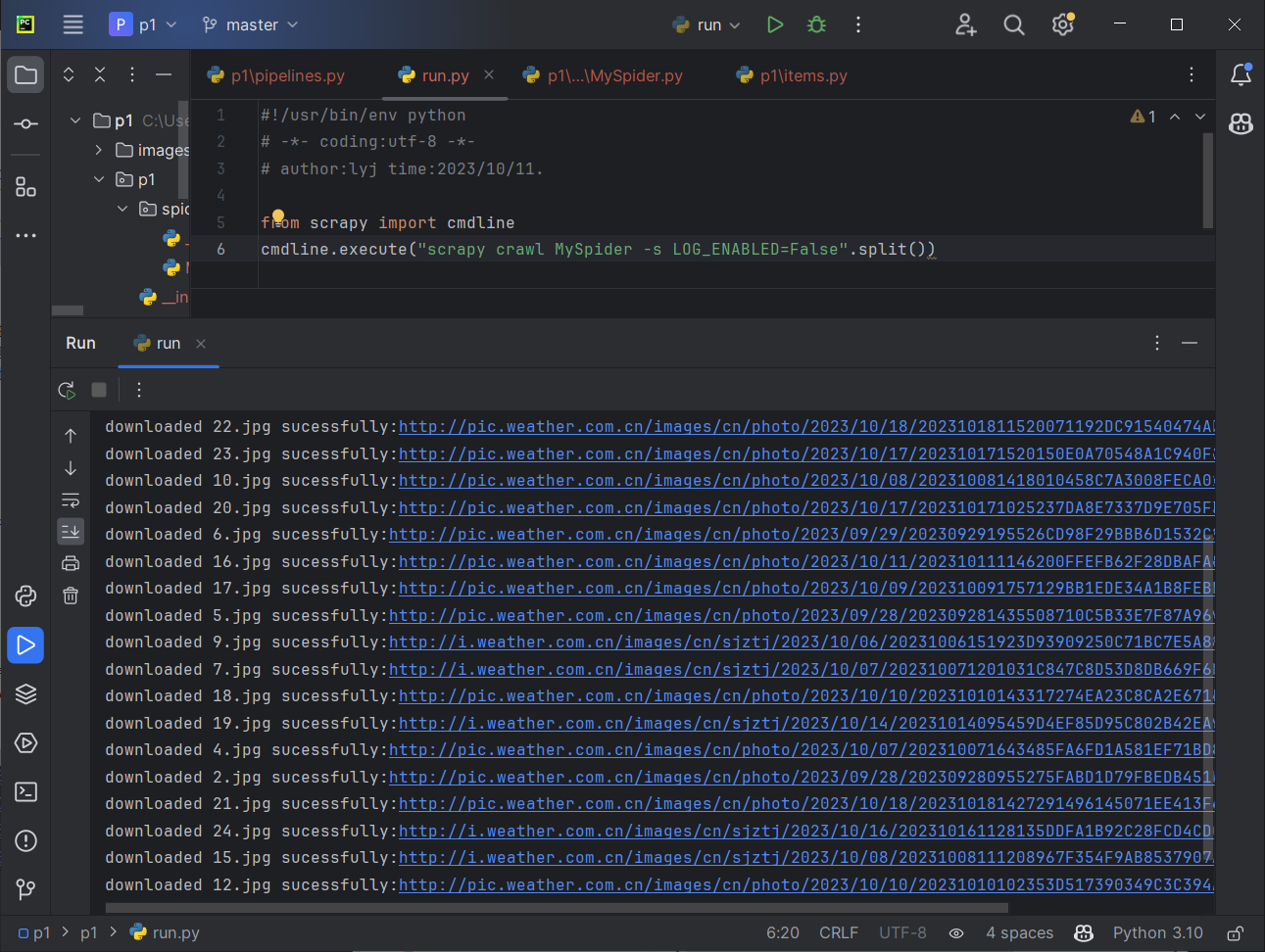

输出信息

将下载的Url信息在控制台输出,并将下载的图片存储在images子文件中,并给出截图。

码云文件夹链接

代码

MySpider:

import scrapy

from p1.items import P1Item

class MySpider(scrapy.Spider):

name = "MySpider"

def start_requests(self):

imagesUrl = 'http://p.weather.com.cn/zrds/index.shtml'

yield scrapy.Request(url=imagesUrl, callback=self.parse)

def parse(self, response, **kwargs):

data = response.body.decode(response.encoding)

selector = scrapy.Selector(text=data)

imgsUrl = selector.xpath('//div[@class="oi"]/div[@class="bt"]/a/@href').extract()

for x in imgsUrl:

yield scrapy.Request(url=x, callback=self.parse1)

def parse1(self, response):

item = P1Item()

data = response.body.decode(response.encoding)

selector = scrapy.Selector(text=data)

item["url"] = selector.xpath('//div[@class="buttons"]/span/img/@src').extract()

# print(item["url"])

yield item

pipeline:

#

# Define your item pipelines here

#

# Don't forget to add your pipeline to the ITEM_PIPELINES setting

# See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html

# useful for handling different item types with a single interface

import urllib.request

headers = {"User-Agent": "Mozilla/5.0 (Windows; U; Windows NT 6.0 x64;"

" en-US; rv:1.9pre) Gecko/2008072421 Minefield/3.0.2pre"}

count = 1

class P1Pipeline:

def process_item(self, item, spider):

global count

for url in item["url"]:

# print(url)

if count <= 135:

path = '../images/' + str(count) + '.jpg'

# 将URL表示的网络对象复制到本地文件

urllib.request.urlretrieve(url, path)

print("downloaded " + str(count) + ".jpg" + ' sucessfully' + ':' + url)

count+=1

return item

# 多线程

# import time

# import urllib.request

# import threading

# from random import random

#

# headers = {"User-Agent": "Mozilla/5.0 (Windows; U; Windows NT 6.0 x64;"

# " en-US; rv:1.9pre) Gecko/2008072421 Minefield/3.0.2pre"}

# count = 1

# class P1Pipeline:

# def process_item(self, item, spider):

# global count

# global threads

# for url in item["url"]:

# count += 1

# T = threading.Thread(target=self.download, args=(url, count))

# T.setDaemon(False)

# T.start()

# threads.append(T)

# time.sleep(random.uniform(0.03, 0.06))

# if count > 135:

# break

# return item

# def download(self,url,count):

# path = '../images/' + str(count) + '.jpg'

# # 将URL表示的网络对象复制到本地文件

# print("downloaded " + str(count) + ".jpg" + ' sucessfully' + ':' + url)

# urllib.request.urlretrieve(url, path)

item:

# Define here the models for your scraped items

#

# See documentation in:

# https://docs.scrapy.org/en/latest/topics/items.html

import scrapy

class P1Item(scrapy.Item):

# define the fields for your item here like:

url = scrapy.Field()

pass

别忘了setting:

结果

单进程

多进程

保存都一样

实验心得

进一步学习了使用Scrapy框架进行网站爬取,又一次进行

作业②

实验内容

要求

熟练掌握 scrapy 中 Item、Pipeline 数据的序列化输出方法;使用scrapy框架+Xpath+MySQL数据库存储技术路线爬取股票相关信息。

候选网站:东方财富网:https://www.eastmoney.com/

输出信息

MySQL数据库存储和输出格式如下:

表头英文命名例如:序号id,股票代码:bStockNo……,由同学们自行定义设计。

| 序号 | 股票代码 | 股票名称 | 最新报价 | 涨跌幅 | 涨跌额 | 成交量 | 振幅 | 最高 | 最低 | 今开 | 昨收 |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | 688093 | N世华 | 28.47 | 10.92 | 26.13万 | 7.6亿 | 22.34 | 32.0 | 28.08 | 30.20 | 17.55 |

代码

MySpider:

import sqlite3

import scrapy

import json

from P2.items import P2Item

class mySpider(scrapy.Spider):

name = 'mySpider'

start_urls = ["http://88.push2.eastmoney.com/api/qt/clist/get?cb=jQuery112407291657687027506_1696662230139&pn=1&pz=20&po=1&np=1&ut=bd1d9ddb04089700cf9c27f6f7426281&fltt=2&invt=2&wbp2u=|0|0|0|web&fid=f3&fs=m:0+t:6,m:0+t:80,m:1+t:2,m:1+t:23,m:0+t:81+s:2048&fields=f1,f2,f3,f4,f5,f6,f7,f8,f9,f10,f12,f13,f14,f15,f16,f17,f18,f20,f21,f23,f24,f25,f22,f11,f62,f128,f136,f115,f152&_=1696662230140"]

#start_urls = ["http://quote.eastmoney.com/center/gridlist.html#hs_a_board"]

# 'http://88.push2.eastmoney.com/api/qt/clist/get?cb=jQuery112407291657687027506_1696662230139&pn=1&pz=20&po=1&np=1&ut=bd1d9ddb04089700cf9c27f6f7426281&fltt=2&invt=2&wbp2u=|0|0|0|web&fid=f3&fs=m:0+t:6,m:0+t:80,m:1+t:2,m:1+t:23,m:0+t:81+s:2048&fields=f1,f2,f3,f4,f5,f6,f7,f8,f9,f10,f12,f13,f14,f15,f16,f17,f18,f20,f21,f23,f24,f25,f22,f11,f62,f128,f136,f115,f152&_=1696662230140'

def parse(self, response):

# 调用body_as_unicode()是为了能处理unicode编码的数据

count = 0

insertDB = stockDB()

result = response.text

result = result.replace('''jQuery112407291657687027506_1696662230139(''',"").replace(');','')#气死我了,最外层的“);”要去掉,不然一直报错。搞了好久

result = json.loads(result)

for f in result['data']['diff']:

count += 1

item = P2Item()

item["i"] = str(count)#序号

item["f12"] = f['f12']#股票代码

item["f14"] = f['f14']#股票名称

item["f2"] = f['f2']#最新价

item["f3"] = f['f3']#涨跌幅

item["f4"] = f['f4']#涨跌额

item["f5"] = f['f5']#成交量

item["f6"] = f['f6']#成交额

item["f7"] = f['f7']#振幅

item["f8"] = f["f8"]#最高

item["f9"] = f["f9"]#最低

item["f10"] = f["f10"]#今开

item["f11"] = f["f11"]#昨收

insertDB.openDB()

insertDB.insert(item['i'], item['f12'], item['f14'], item['f2'], item['f3'], item['f4'], item['f5'], item['f6'],

item['f7'],item['f8'],item['f9'],item['f10'],item['f11'])

insertDB.closeDB()

yield item

print("ok")

class stockDB:

# 开启

def openDB(self):

self.con = sqlite3.connect("stocks.db")

self.cursor = self.con.cursor()

try:

self.cursor.execute("create table stocks (Num varchar(16),"

" Code varchar(16),names varchar(16),"

"Price varchar(16),"

"Quote_change varchar(16),"

"Updownnumber varchar(16),"

"Volume varchar(16),"

"Turnover varchar(16),"

"Swing varchar(16),"

"Highest varchar(16),"

"Lowest varchar(16),"

"Today varchar(16),"

"Yesday varchar(16))")

except:

self.cursor.execute("delete from stocks")

# 关闭

def closeDB(self):

self.con.commit()

self.con.close()

# 插入数据

def insert(self,Num,Code,names,Price,Quote_change,Updownnumber,Volume,Turnover,Swing,Highest,Lowest,Today,Yesday):

try:

self.cursor.execute("insert into stocks(Num,Code,names,Price,Quote_change,Updownnumber,Volume,Turnover,Swing,Highest,Lowest,Today,Yesday)"

" values (?,?,?,?,?,?,?,?,?,?,?,?,?)",

(Num,Code,names,Price,Quote_change,Updownnumber,Volume,Turnover,Swing,Highest,Lowest,Today,Yesday))

except Exception as err:

print(err)

pipeline:

# Define your item pipelines here

#

# Don't forget to add your pipeline to the ITEM_PIPELINES setting

# See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html

# useful for handling different item types with a single interface

from itemadapter import ItemAdapter

from openpyxl import Workbook

class P2Pipeline:

wb = Workbook()

ws = wb.active # 激活工作表

ws.append(["序号", "代码", "名称", "最新价(元)", "涨跌幅", "跌涨额(元)", "成交量", "成交额(元)", "振幅", "最高", "最低", "今开", "昨收"]) # 设置表头

def process_item(self, item, spider):

line = [item['i'], item['f12'], item['f14'], item['f2'], item['f3'], item['f4'], item['f5'], item['f6'],

item['f7'],item['f8'],item['f9'],item['f10'],item['f11']] # 把数据中每一项整理出来

self.ws.append(line) # 将数据以行的形式添加到xlsx中

self.wb.save(r'C:\Users\白炎\Desktop\数据采集技术\实践课\3\2\stocks.xlsx') # 保存xlsx文件

# print("写入成功")

print("{:4}\t{:8}\t{:8}\t{:8}\t{:8}\t{:8}\t{:8}\t{:16}\t{:8}\t{:8}\t{:8}\t{:8}\t{:8}".format(item['i'], item['f12'], item['f14'], item['f2'], item['f3'], item['f4'], item['f5'], item['f6'],

item['f7'],item['f8'],item['f9'],item['f10'],item['f11']))

return item

item:

# Define here the models for your scraped items

#

# See documentation in:

# https://docs.scrapy.org/en/latest/topics/items.html

import scrapy

class P2Item(scrapy.Item):

i = scrapy.Field()

f12 = scrapy.Field()

f14 = scrapy.Field()

f2 = scrapy.Field()

f3 = scrapy.Field()

f4 = scrapy.Field()

f5 = scrapy.Field()

f6 = scrapy.Field()

f7 = scrapy.Field()

f8 = scrapy.Field()

f9 = scrapy.Field()

f10 = scrapy.Field()

f11 = scrapy.Field()

pass

setting:

ROBOTSTXT_OBEY = False

ITEM_PIPELINES = {

"P2.pipelines.P2Pipeline": 300,

}

结果

作业③

实验内容

要求

熟练掌握 scrapy 中 Item、Pipeline 数据的序列化输出方法;使用scrapy框架+Xpath+MySQL数据库存储技术路线爬取外汇网站数据。

候选网站:中国银行网:https://www.boc.cn/sourcedb/whpj/

输出信息

| Currency | TBP | CBP | TSP | CSP | Time |

|---|---|---|---|---|---|

| 阿联酋迪拉姆 | 198.58 | 192.31 | 199.98 | 206.59 | 11:27:14 |

码云文件夹链接

MySpider:

#!/usr/bin/env python

# -*- coding:utf-8 -*-

# author:lyj time:2023/10/19.

import re

import sqlite3

import time

import pandas as pd

import scrapy

from items import P3Item

class Work3Spider(scrapy.Spider):

name = 'work3'

# allowed_domains = ['www.boc.cn']

start_urls = ['https://www.boc.cn/sourcedb/whpj/']

def parse(self, response):

insertDB = moneyDB()

data = response.body.decode()

selector=scrapy.Selector(text=data)

data_lists = selector.xpath('//table[@align="left"]//tr')

for data_list in data_lists:

if data_list != []:

datas = re.findall('<td>(.*?)</td>',data_list.extract())

# print(datas)

if datas!=[]:

# print(datas[0])

item = P3Item()

item['name'] = datas[0]

item['price1'] = datas[1]

item['price2'] = datas[2]

item['price3'] = datas[3]

item['price4'] = datas[4]

item['price5'] = datas[5]

item['date'] = datas[6]

# print(item)

insertDB.openDB()

insertDB.insert(item['name'], item['price1'], item['price2'], item['price3'], item['price4'], item['price5'], item['date'])

insertDB.closeDB()

#

yield item

#货币名称 现汇买入价 现钞买入价 现汇卖出价 现钞卖出价 中行折算价 发布日期

# name'], item['price1'], item['price2'], item['price3'], item['price4'], item['price5'], item['date

class moneyDB:

# 开启

def openDB(self):

self.con = sqlite3.connect("money.db")

self.cursor = self.con.cursor()

try:

self.cursor.execute("create table money (name varchar(16),"

"price1 float(16),price2 float(16),"

"price3 float(16),"

"price4 float(16),"

"price5 float(16),"

"date date(16))")

except:

self.cursor.execute("delete from money")

# 关闭

def closeDB(self):

self.con.commit()

self.con.close()

# 插入数据

def insert(self,name,price1,price2,price3,price4,price5,date):

try:

self.cursor.execute("insert into money(name,price1,price2,price3,price4,price5,date)"

" values (?,?,?,?,?,?,?)",

(name,price1,price2,price3,price4,price5,date))

except Exception as err:

print(err)

pipeline:

# Define your item pipelines here

#

# Don't forget to add your pipeline to the ITEM_PIPELINES setting

# See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html

# useful for handling different item types with a single interface

from itemadapter import ItemAdapter

from openpyxl import Workbook

class P3Pipeline:

wb = Workbook()

ws = wb.active # 激活工作表

ws.append(

['货币名称', '现汇买入价', '现钞买入价', '现汇卖出价', '现钞卖出价', '中行折算价', '发布日期']) # 设置表头

print('%-10s%-10s%-10s%-10s%-10s%-10s%-10s' % (

'货币名称', '现汇买入价', '现钞买入价', '现汇卖出价', '现钞卖出价', '中行折算价', '发布日期'))

def process_item(self, item, spider):

print('%-10s%-13s%-13s%-13s%-13s%-13s%-10s' % (item['name'],item['price1'],item['price2'],item['price3'],item['price4'],item['price5'],item['date']))

line = [item['name'],item['price1'],item['price2'],item['price3'],item['price4'],item['price5'],item['date']] # 把数据中每一项整理出来

self.ws.append(line) # 将数据以行的形式添加到xlsx中

self.wb.save(r'C:\Users\白炎\Desktop\数据采集技术\实践课\3\3\money.xlsx') # 保存xlsx文件

return item

item:

# Define here the models for your scraped items

#

# See documentation in:

# https://docs.scrapy.org/en/latest/topics/items.html

import scrapy

class P3Item(scrapy.Item):

# define the fields for your item here like:

# name = scrapy.Field()

name = scrapy.Field()

price1 = scrapy.Field()

price2 = scrapy.Field()

price3 = scrapy.Field()

price4 = scrapy.Field()

price5 = scrapy.Field()

date = scrapy.Field()

pass

setting:

BOT_NAME = "p3"

USER_AGENT = 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/117.0.0.0 Safari/537.36 Edg/117.0.2045.47'

SPIDER_MODULES = ["p3.spiders"]

NEWSPIDER_MODULE = "p3.spiders"

LOG_LEVEL = 'ERROR'