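Assignment ①

Requirements

- Become proficient with Selenium for locating HTML elements, scraping Ajax-loaded page data, and waiting for HTML elements.

- Use the Selenium framework + MySQL storage approach to scrape stock data from three boards: Shanghai & Shenzhen A-shares (沪深A股), Shanghai A-shares (上证A股), and Shenzhen A-shares (深证A股).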
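The first requirement mentions waiting for HTML elements, while the scripts in this report rely on fixed `time.sleep(3)` pauses. Selenium's explicit wait, `WebDriverWait(driver, timeout).until(condition)`, instead polls a condition (every 0.5 s by default) until it returns a truthy value or the timeout expires. The stdlib-only sketch below mimics that polling loop so the idea can be tried without a browser; `wait_until` and `rows_loaded` are hypothetical helpers, not part of Selenium.

```python
import time


def wait_until(condition, timeout=10.0, poll=0.5):
    """Call condition() every `poll` seconds until it returns a truthy value.

    Hypothetical helper mimicking the polling loop of Selenium's
    WebDriverWait.until(); raises TimeoutError once `timeout` has passed.
    """
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result  # hand back the truthy value, as until() does
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} s")
        time.sleep(poll)


# Usage: a condition that becomes true on the third poll,
# standing in for "the Ajax table has rendered its rows".
calls = {"n": 0}


def rows_loaded():
    calls["n"] += 1
    return ["row"] * 20 if calls["n"] >= 3 else []


rows = wait_until(rows_loaded, timeout=2.0, poll=0.01)
print(len(rows), calls["n"])  # 20 3
```

With Selenium itself the equivalent would be `WebDriverWait(driver, 10).until(EC.presence_of_all_elements_located((By.CLASS_NAME, "odd")))`, importing `WebDriverWait` from `selenium.webdriver.support.ui` and `expected_conditions as EC` from `selenium.webdriver.support`. Unlike a fixed sleep, it returns as soon as the rows exist.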

Candidate website

Output:

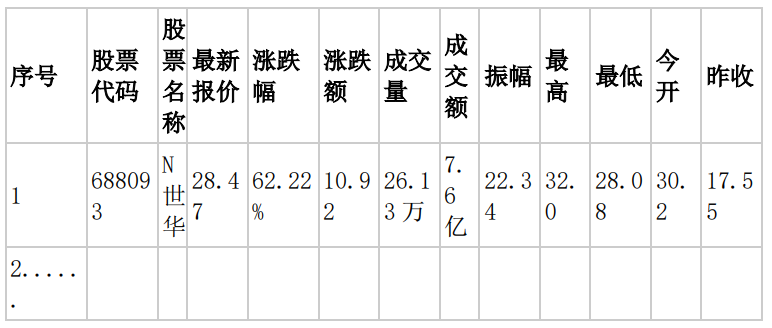

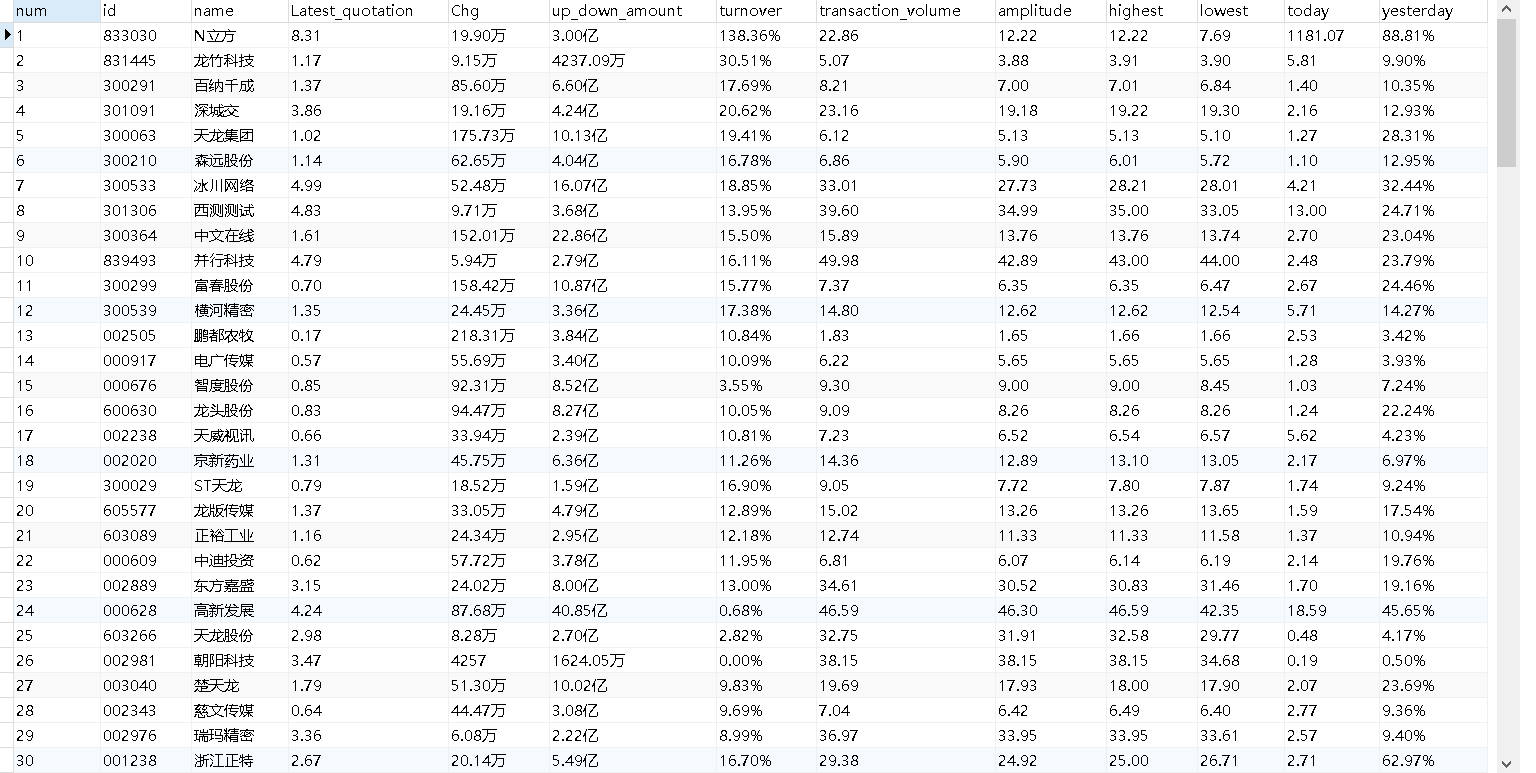

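- MySQL storage and output format. Column headers should use English names, e.g. 序号 (serial number) → id, 股票代码 (stock code) → bStockNo……; students design the headers themselves.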
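The column names used by the `INSERT` statement in StockSelenium.py already follow this English-naming rule, but the report does not show the table definition. A minimal sketch of a matching schema follows. It keeps the Chinese-to-English header mapping in one place and stores the Chinese name as a column comment. The column types (every value kept as `VARCHAR` text) and the extra auto-increment `id` key are assumptions, not the author's schema.

```python
# Chinese header -> English column name, matching the columns inserted by
# StockSelenium.py. Every value is assumed to be stored as scraped text.
STOCK_COLUMNS = {
    "序号": "count",
    "代码": "num",
    "名称": "name",
    "最新价": "value",
    "涨跌幅": "Quote_change",
    "涨跌额": "Ups_and_downs",
    "成交量": "Volume",
    "成交额": "Turnover",
    "振幅": "amplitude",
    "最高": "highest",
    "最低": "lowest",
    "今开": "today_begin",
    "昨收": "last_day",
}


def create_table_sql(table, columns, width=32):
    """Build a hypothetical CREATE TABLE statement with an auto-increment id."""
    body = ["  id INT AUTO_INCREMENT PRIMARY KEY"]
    for zh, en in columns.items():
        # Backticks protect names such as `value`; the comment keeps the Chinese header
        body.append(f"  `{en}` VARCHAR({width}) COMMENT '{zh}'")
    return (f"CREATE TABLE IF NOT EXISTS `{table}` (\n"
            + ",\n".join(body)
            + "\n) DEFAULT CHARSET=utf8;")


print(create_table_sql("stock", STOCK_COLUMNS))
```

Because `id` is auto-generated, the script's `INSERT` with an explicit column list works against this table unchanged.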

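Approach

- I previously handled this site by intercepting its JSON requests. Selenium can scrape the rendered page directly, so there is no need to trace the page's data flow to find the URL of the JSON file.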

Code

StockSelenium.py

```python
import time

import pymysql
from selenium import webdriver
from selenium.common.exceptions import WebDriverException
from selenium.webdriver.chrome.service import Service
from selenium.webdriver.common.by import By


class StockSelenium:
    def __init__(self, max_pages=5):
        self.max_pages = max_pages  # stop condition; the original recursed without end
        # Options must be built before the driver and passed to it, otherwise they are ignored
        option = webdriver.ChromeOptions()
        option.binary_location = r"C:\Program Files\Google\Chrome\Application\chrome.exe"
        option.add_argument("--headless")  # headless mode: no browser window pops up
        option.add_argument("--disable-gpu")
        # Selenium 4: the chromedriver path goes through a Service object
        service = Service(r"C:\Users\zmk\Desktop\PyCharm Community Edition 2023.2.1\chromedriver-win64\chromedriver.exe")
        self.driver = webdriver.Chrome(service=service, options=option)
        self.driver.get("http://quote.eastmoney.com/center/gridlist.html")

    def getInfo(self):
        for page in range(1, self.max_pages + 1):
            print("Page", page)
            self.StockInfo()
            if page == self.max_pages:
                break
            try:
                # Turn the page by typing the next page number into the pager and clicking GO
                keyInput = self.driver.find_element(By.CLASS_NAME, "paginate_input")
                keyInput.clear()
                keyInput.send_keys(str(page + 1))
                self.driver.find_element(By.CLASS_NAME, "paginte_go").click()
                time.sleep(3)  # give the Ajax table time to refresh
            except WebDriverException as err:
                print("err", err)
                break

    def StockInfo(self):
        # Rows alternate class="odd"/"even"; one selector returns them all in page order
        # and avoids the IndexError of pairing odds[i] with evens[i] when the counts differ
        for row in self.driver.find_elements(By.CSS_SELECTOR, ".odd, .even"):
            self.StockDetailInfo(row)

    def StockDetailInfo(self, elem):
        tds = elem.find_elements(By.TAG_NAME, "td")
        count = tds[0].text          # row number
        num = tds[1].text            # stock code
        name = tds[2].text           # stock name
        value = tds[4].text          # latest price (tds[3] is not stored)
        Quote_change = tds[5].text   # change (%)
        Ups_and_downs = tds[6].text  # change (amount)
        Volume = tds[7].text         # volume
        Turnover = tds[8].text       # turnover
        amplitude = tds[9].text      # amplitude
        highest = tds[10].text       # high
        lowest = tds[11].text        # low
        today_begin = tds[12].text   # today's open
        last_day = tds[13].text      # previous close
        self.writeMySQL(count, num, name, value, Quote_change, Ups_and_downs, Volume,
                        Turnover, amplitude, highest, lowest, today_begin, last_day)

    def initDatabase(self):
        try:
            # database="Stock" already selects the schema, so no "use Stock" is needed
            self.con = pymysql.connect(host="127.0.0.1", port=3307, user="root",
                                       password="********", database="Stock", charset="utf8")
            self.cursor = self.con.cursor()
            print("init DB over")
        except pymysql.MySQLError as err:
            print("init err", err)
            raise  # without a connection there is nowhere to write

    def writeMySQL(self, count, num, name, value, Quote_change, Ups_and_downs, Volume,
                   Turnover, amplitude, highest, lowest, today_begin, last_day):
        row = (count, num, name, value, Quote_change, Ups_and_downs, Volume,
               Turnover, amplitude, highest, lowest, today_begin, last_day)
        try:
            print(*row)
            # Parameterized query: pymysql escapes each %s value
            self.cursor.execute(
                "INSERT INTO stock (count, num, name, value, Quote_change, Ups_and_downs, Volume, "
                "Turnover, amplitude, highest, lowest, today_begin, last_day) "
                "VALUES (%s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s)",
                row)
            self.con.commit()
        except pymysql.MySQLError as err:
            self.con.rollback()
            print(err)


if __name__ == "__main__":
    spider = StockSelenium()
    try:
        spider.initDatabase()
        spider.getInfo()
    finally:
        spider.driver.quit()  # always release the browser
        if hasattr(spider, "con"):
            spider.con.close()
```
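The script stores every cell exactly as the page displays it. If numeric columns are preferred in MySQL, the text has to be normalized first. A minimal sketch, assuming cells look like `12.34万`, `5.67亿`, `-2.34%` or a bare `-` placeholder. 万 (10^4) and 亿 (10^8) are the standard Chinese magnitude units, but the exact formats on the site are an assumption. `to_number` is a hypothetical helper.

```python
from decimal import Decimal, InvalidOperation

# Chinese magnitude suffixes: 万 = 10^4, 亿 = 10^8
UNITS = {"万": Decimal(10) ** 4, "亿": Decimal(10) ** 8}


def to_number(text):
    """Convert a scraped cell such as '5.67亿' or '-2.34%' to a Decimal.

    Percentages are returned as the number in front of the % sign.
    Returns None for placeholders like '-' or empty cells.
    """
    s = text.strip().rstrip("%")
    factor = Decimal(1)
    if s and s[-1] in UNITS:
        factor = UNITS[s[-1]]
        s = s[:-1]
    try:
        return Decimal(s) * factor
    except InvalidOperation:  # '-' or '' cannot be parsed
        return None


print(to_number("12.34万"), to_number("-2.34%"), to_number("-"))
```

`Decimal` avoids the rounding noise that `float` would add to prices before they reach a `DECIMAL` column.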

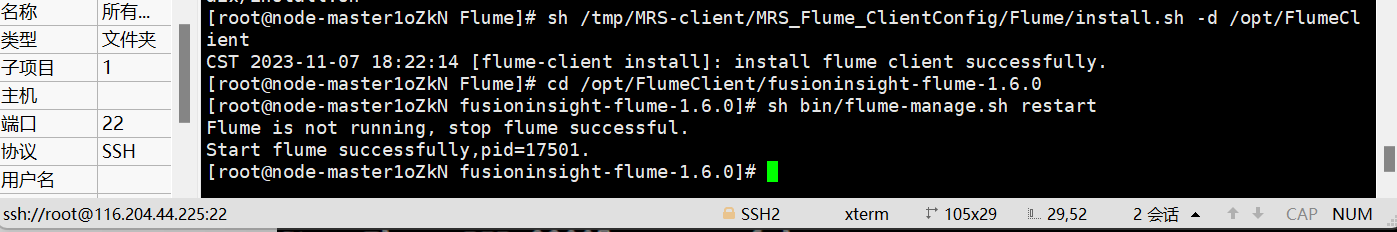

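Run screenshot

Reflections

- Page turning ran into some problems, so I simply turned pages by entering the page number instead.

- Also, data can only be scraped during trading hours, and the site must not be scraped too frequently, or TIME OUT errors occur easily, possibly because of restrictions imposed by the site itself.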
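Both observations can also be handled in code. A minimal sketch with two hypothetical helpers. `is_trading_time` checks the regular A-share continuous-trading sessions (09:30 to 11:30 and 13:00 to 15:00, Monday to Friday; exchange holidays are not handled). `retry` re-runs a flaky call with exponentially growing pauses, which spaces out requests after a TIME OUT. In the scripts, the call handed to `retry` would be something like the page-jump step; that wiring is an assumption.

```python
import time
from datetime import datetime, time as dtime

# Regular continuous-trading sessions of the A-share market (Beijing time).
SESSIONS = [(dtime(9, 30), dtime(11, 30)), (dtime(13, 0), dtime(15, 0))]


def is_trading_time(now):
    """True if `now` (naive Beijing-time datetime) falls in a session on a weekday.

    Exchange holidays are not handled.
    """
    if now.weekday() >= 5:  # Saturday=5, Sunday=6
        return False
    t = now.time()
    return any(start <= t <= end for start, end in SESSIONS)


def retry(fn, attempts=3, base_delay=1.0, exceptions=(Exception,)):
    """Call fn(); after failure k, wait base_delay * 2**k seconds and try again."""
    for k in range(attempts):
        try:
            return fn()
        except exceptions:
            if k == attempts - 1:
                raise  # give up after the last attempt
            time.sleep(base_delay * 2 ** k)


# Usage: a call that times out twice before succeeding.
attempts_made = []


def flaky_page_jump():
    attempts_made.append(1)
    if len(attempts_made) < 3:
        raise TimeoutError("TIME OUT")
    return "page loaded"


result = retry(flaky_page_jump, attempts=3, base_delay=0.01)
print(result, len(attempts_made))  # page loaded 3
print(is_trading_time(datetime(2023, 11, 1, 10, 0)))  # Wednesday 10:00 -> True
```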

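Assignment ②

Requirements

- Become proficient with Selenium for locating HTML elements, simulating user login, scraping Ajax-loaded page data, and waiting for HTML elements.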

Candidate website

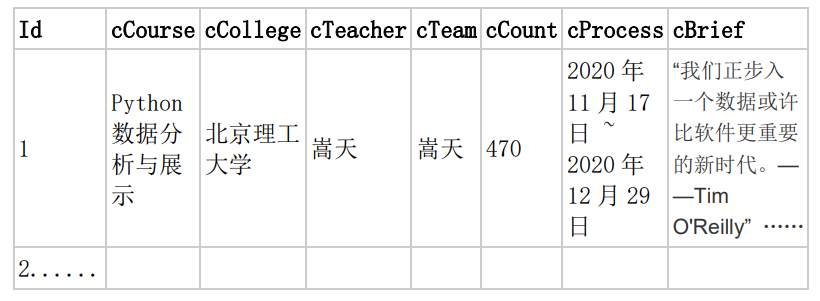

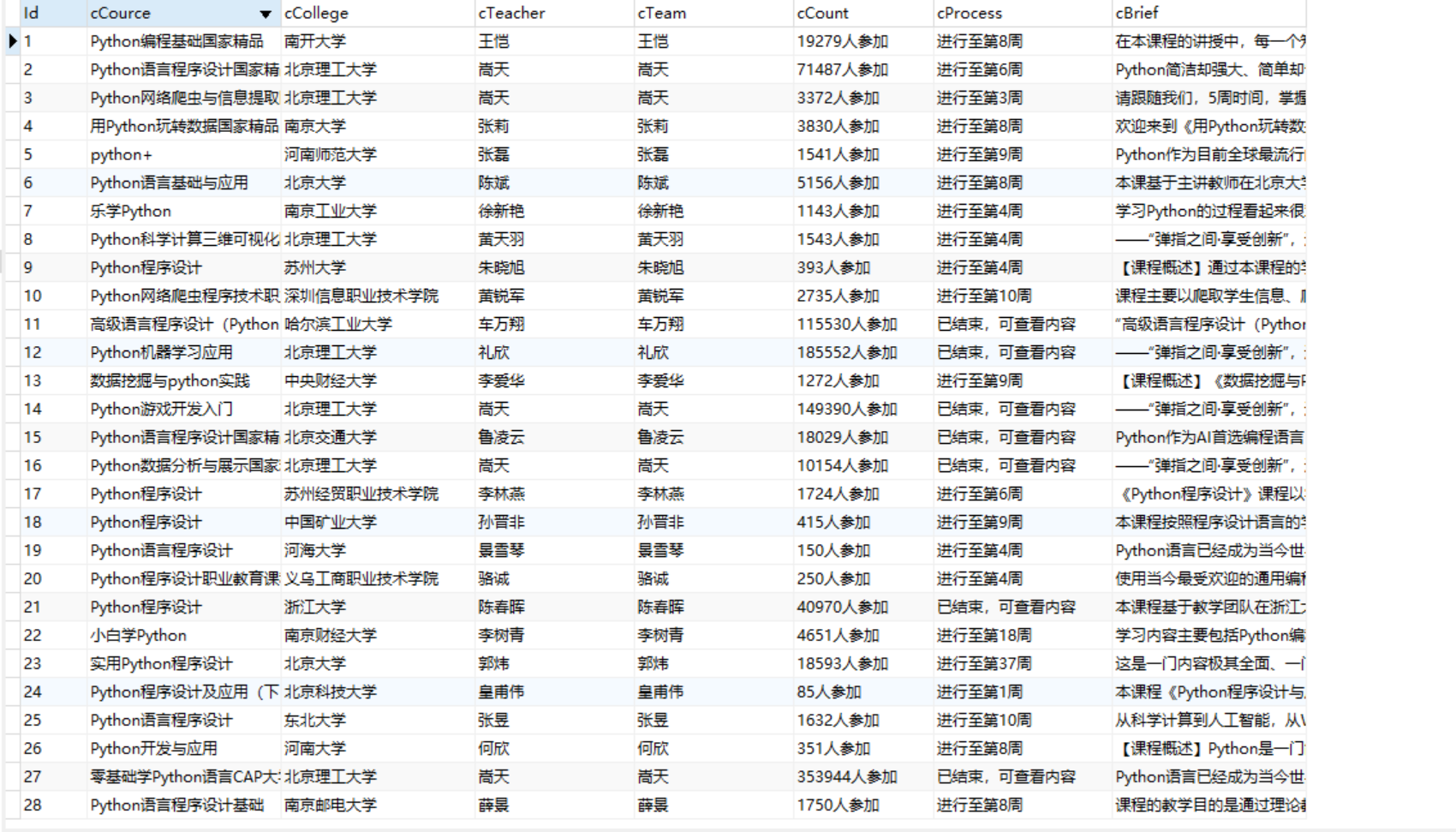

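Output:

- MySQL storage and output format

Approach

- Because the MOOC platform has too many courses, I chose to scrape only the National Quality Courses (国家精品课程).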

Code

MoocSelenium.py

```python
import time

import pymysql
from selenium import webdriver
from selenium.common.exceptions import NoSuchElementException, WebDriverException
from selenium.webdriver.common.by import By
from selenium.webdriver.edge.service import Service


class MoocSelenium:
    # XPath prefix of the full course list (the page's second part, not the recommendations)
    LIST = "//div[@class='_1gBJC']/div/div//div[@class='_1Bfx4']/div"

    def __init__(self, max_pages=5):
        self.count = 1
        self.max_pages = max_pages  # stop condition; the original recursed without end
        # Raw string: "\P", "\M" etc. in a normal string are invalid escape sequences
        service = Service(r"C:\Program Files (x86)\Microsoft\Edge\Application\msedgedriver.exe")
        self.driver = webdriver.Edge(service=service)
        self.driver.get("https://www.icourse163.org/channel/2001.htm")
        self.driver.maximize_window()

    def getInfo(self):
        while self.count <= self.max_pages:
            print("Page", self.count)
            self.count += 1
            courses = self.driver.find_elements(
                By.XPATH, "//div[@class='_1gBJC']//div[@class='_2mbYw']//div[@class='_3KiL7']")
            titles = self.driver.find_elements(By.XPATH, self.LIST + "//h3")  # course title
            schools = self.driver.find_elements(By.XPATH, self.LIST + "//p")  # school
            teachers = self.driver.find_elements(By.XPATH, self.LIST + "//div[@class='_1Zkj9']")  # teacher
            # zip stops at the shortest list instead of raising IndexError
            for course, title, school, teacher in zip(courses, titles, schools, teachers):
                # Read the card texts before leaving the catalog tab
                row = (title.text, school.text, teacher.text)
                webdriver.ActionChains(self.driver).move_to_element(course).click(course).perform()
                time.sleep(3)
                # The detail page opens in a new tab: switch to it, read, close, switch back
                self.driver.switch_to.window(self.driver.window_handles[-1])
                self.writeMySQL(*row, self.getCourseInfo())
                self.driver.close()
                self.driver.switch_to.window(self.driver.window_handles[0])
            if not self.nextPage():
                break

    def getCourseInfo(self):
        # Return the course intro, or "" when the detail page has none
        try:
            return self.driver.find_element(By.CLASS_NAME, "course-heading-intro_intro").text
        except NoSuchElementException:
            print("err: no intro")
            return ""

    def nextPage(self):
        # Click the last link in the pager (the next-page button); report success
        try:
            self.driver.find_element(
                By.XPATH, "//div[@class='_1lKzE']//a[@class='_3YiUU '][last()]").click()
            time.sleep(3)
            return True
        except WebDriverException as err:
            print("err", err)
            return False

    def initDatabase(self):
        try:
            self.con = pymysql.connect(host="127.0.0.1", port=3307, user="root",
                                       password="********", database="Mooc", charset="utf8")
            self.cursor = self.con.cursor()
            print("init DB over")
        except pymysql.MySQLError as err:
            print("init err", err)
            raise

    def writeMySQL(self, title, school, teacher, note):
        try:
            print(title, school, teacher, note)
            self.cursor.execute(
                "INSERT INTO mooc (title, school, teacher, note) VALUES (%s, %s, %s, %s)",
                (title, school, teacher, note))
            self.con.commit()
        except pymysql.MySQLError as err:
            self.con.rollback()
            print(err)


if __name__ == "__main__":
    spider = MoocSelenium()  # the original instantiated an undefined Spider()
    try:
        spider.initDatabase()
        spider.getInfo()
    finally:
        spider.driver.quit()
```
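MoocSelenium.py collects titles, schools and teachers with three independent XPath queries and pairs them by position, which silently misaligns every later row if one card lacks, say, a teacher element. A sturdier pattern is to locate each course card once and search inside it with relative paths (in Selenium, `card.find_element(By.XPATH, ".//h3")`). The sketch below demonstrates the pattern offline with the stdlib `xml.etree.ElementTree`, whose limited XPath subset supports these paths. The HTML fragment is made up: only the class names come from the script, and the nesting is an assumption.

```python
import xml.etree.ElementTree as ET

# Made-up fragment: two course cards, the second one missing its teacher.
PAGE = """
<div class="_1gBJC">
  <div class="_3KiL7">
    <h3>Course A</h3><p>School A</p><div class="_1Zkj9">Teacher A</div>
  </div>
  <div class="_3KiL7">
    <h3>Course B</h3><p>School B</p>
  </div>
</div>
"""


def text_or_empty(card, path):
    """Text of the first match of `path` inside `card`, or '' if absent."""
    node = card.find(path)
    return node.text.strip() if node is not None and node.text else ""


def parse_cards(html):
    root = ET.fromstring(html.strip())
    rows = []
    # Locate each card once, then query relative to it so the fields stay aligned.
    for card in root.iterfind(".//div[@class='_3KiL7']"):
        rows.append((
            text_or_empty(card, ".//h3"),
            text_or_empty(card, ".//p"),
            text_or_empty(card, ".//div[@class='_1Zkj9']"),
        ))
    return rows


print(parse_cards(PAGE))
# [('Course A', 'School A', 'Teacher A'), ('Course B', 'School B', '')]
```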

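Run screenshot

Reflections

- The page has two parts: recommended courses and the full course list. The target is all courses, so XPath selects the course information in the second part, and click() turns the pages.

- This one was somewhat difficult, mainly because the course details are not stored on the catalog page: each course's detail page has to be opened by clicking, which involves opening, switching, and closing windows. The main code for this part is: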

```python
webdriver.ActionChains(self.driver).move_to_element(course).click(course).perform()
time.sleep(3)
# The detail page opens in a new tab: switch to the newest window handle
handles = self.driver.window_handles
self.driver.switch_to.window(handles[-1])
note = self.driver.find_element(By.CLASS_NAME, "course-heading-intro_intro")
self.writeMySQL(title, school, teacher, note.text)
# Close the detail tab and return to the catalog tab
self.driver.close()
handles = self.driver.window_handles
self.driver.switch_to.window(handles[0])
```
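This open/switch/close sequence must also run when reading the detail page raises an exception. Otherwise the driver stays on the detail tab and every later lookup on the catalog page fails. A minimal sketch, assuming a Selenium-style driver object and, like the original `handles[1]`, that the new tab is the last handle: the hypothetical context manager `in_new_window` switches to that tab and, in a `finally` block, closes it and returns to the original window. `window_handles`, `current_window_handle`, `switch_to.window()` and `close()` are real WebDriver members. `FakeDriver` is a stub that only exists so the example runs without a browser.

```python
from contextlib import contextmanager


@contextmanager
def in_new_window(driver):
    """Switch to the most recently opened window, then close it and switch back."""
    original = driver.current_window_handle
    driver.switch_to.window(driver.window_handles[-1])
    try:
        yield driver
    finally:
        driver.close()  # closes the current (detail) window
        driver.switch_to.window(original)


class _SwitchTo:
    def __init__(self, drv):
        self._drv = drv

    def window(self, handle):
        self._drv.current_window_handle = handle


class FakeDriver:
    """Minimal stand-in for a WebDriver with two open tabs."""

    def __init__(self):
        self.window_handles = ["catalog", "detail"]
        self.current_window_handle = "catalog"
        self.switch_to = _SwitchTo(self)

    def close(self):
        self.window_handles.remove(self.current_window_handle)


drv = FakeDriver()
try:
    with in_new_window(drv) as d:
        seen = d.current_window_handle  # "detail"
        raise RuntimeError("intro element missing")
except RuntimeError:
    pass
print(seen, drv.current_window_handle, drv.window_handles)
# detail catalog ['catalog']
```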

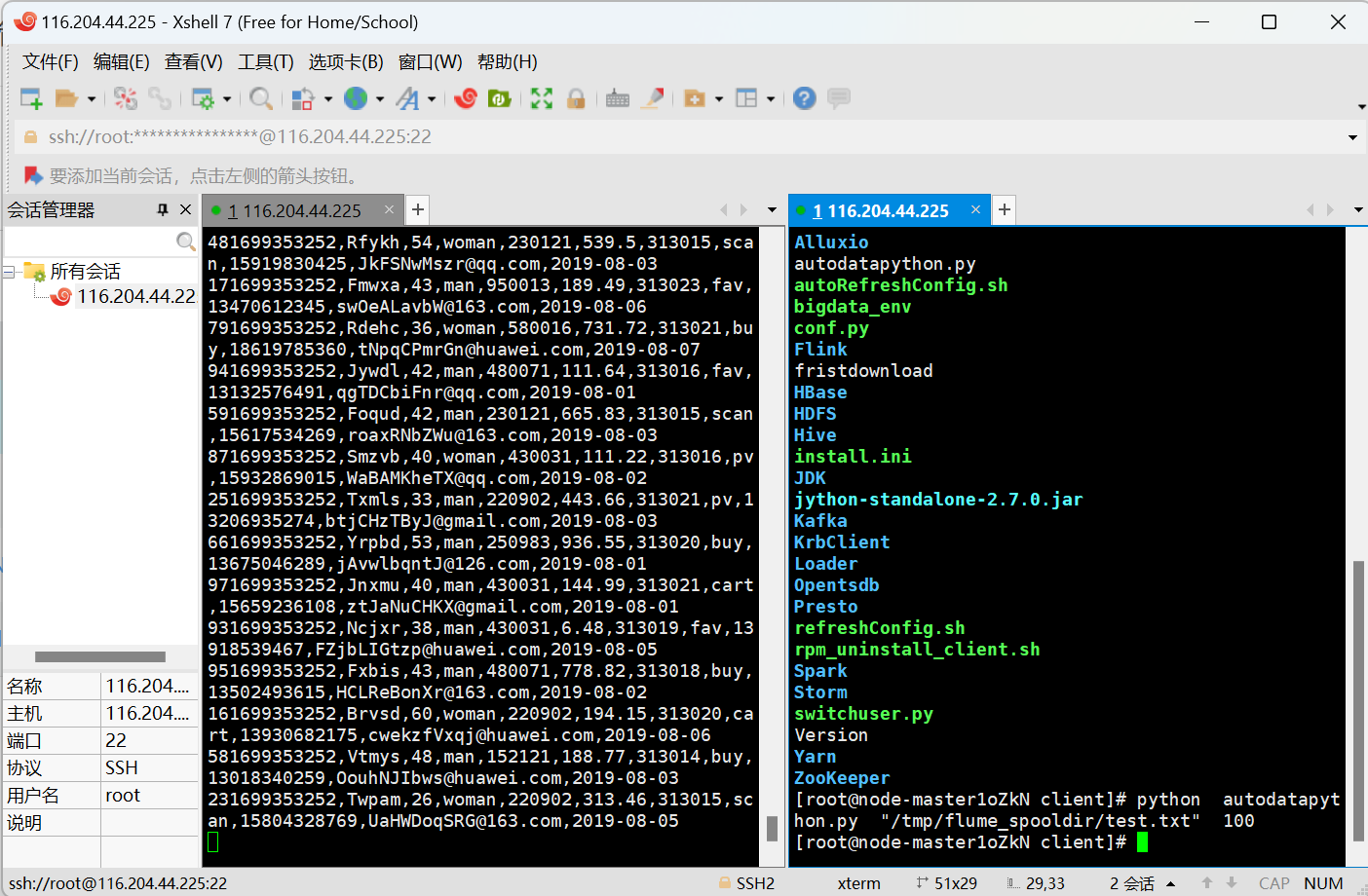

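Assignment ③

Requirements

- Master the relevant big-data services and get familiar with using Xshell.

- Complete the tasks in the document 华为云_大数据实时分析处理实验手册-Flume 日志采集实验(部分)v2.docx (Huawei Cloud real-time big-data analysis lab manual: Flume log collection lab, partial, v2), i.e. the 5 tasks below; see that document for the detailed steps.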

Environment setup

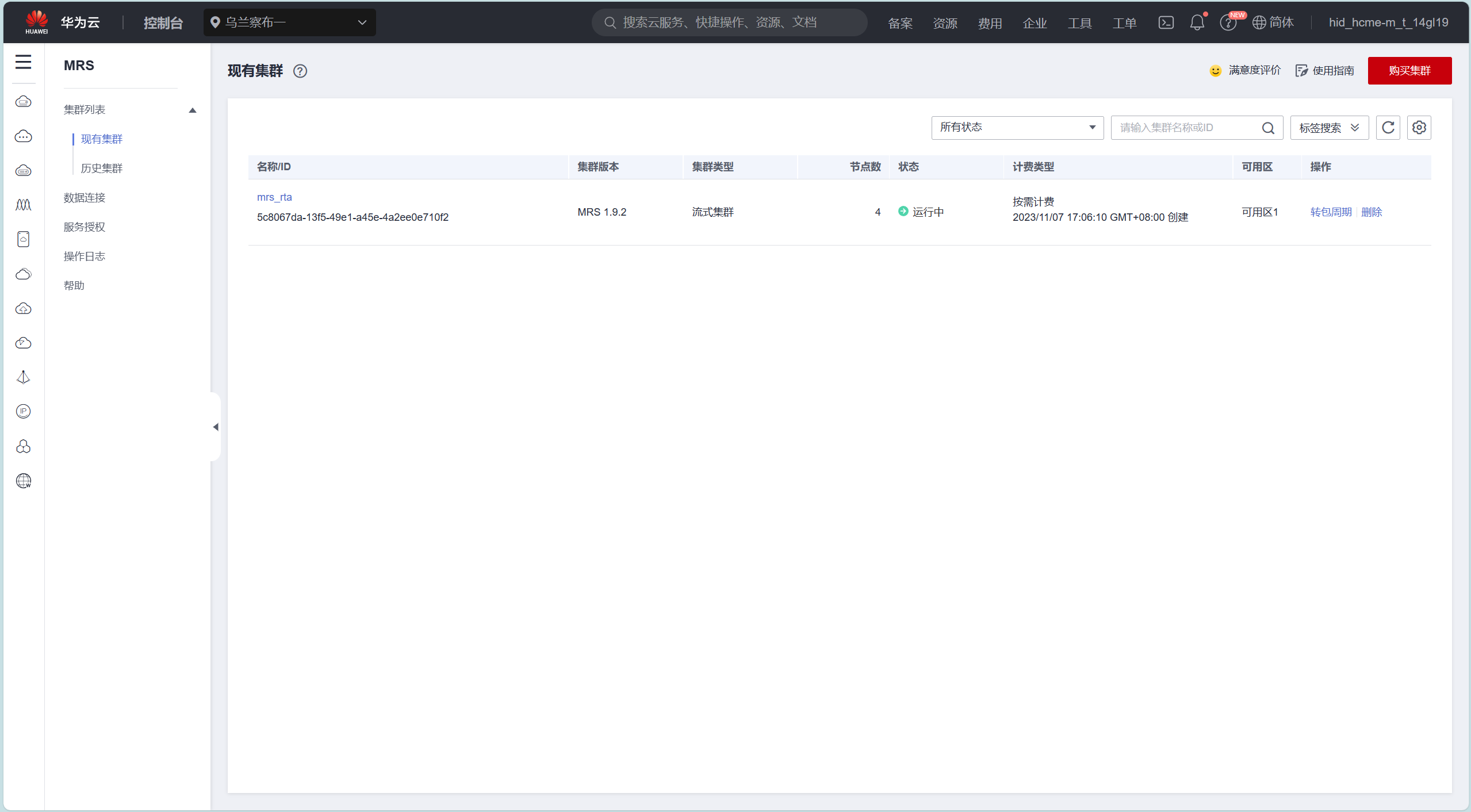

- Task 1: Enable MapReduce Service (MRS)

Real-time analysis development in practice

-

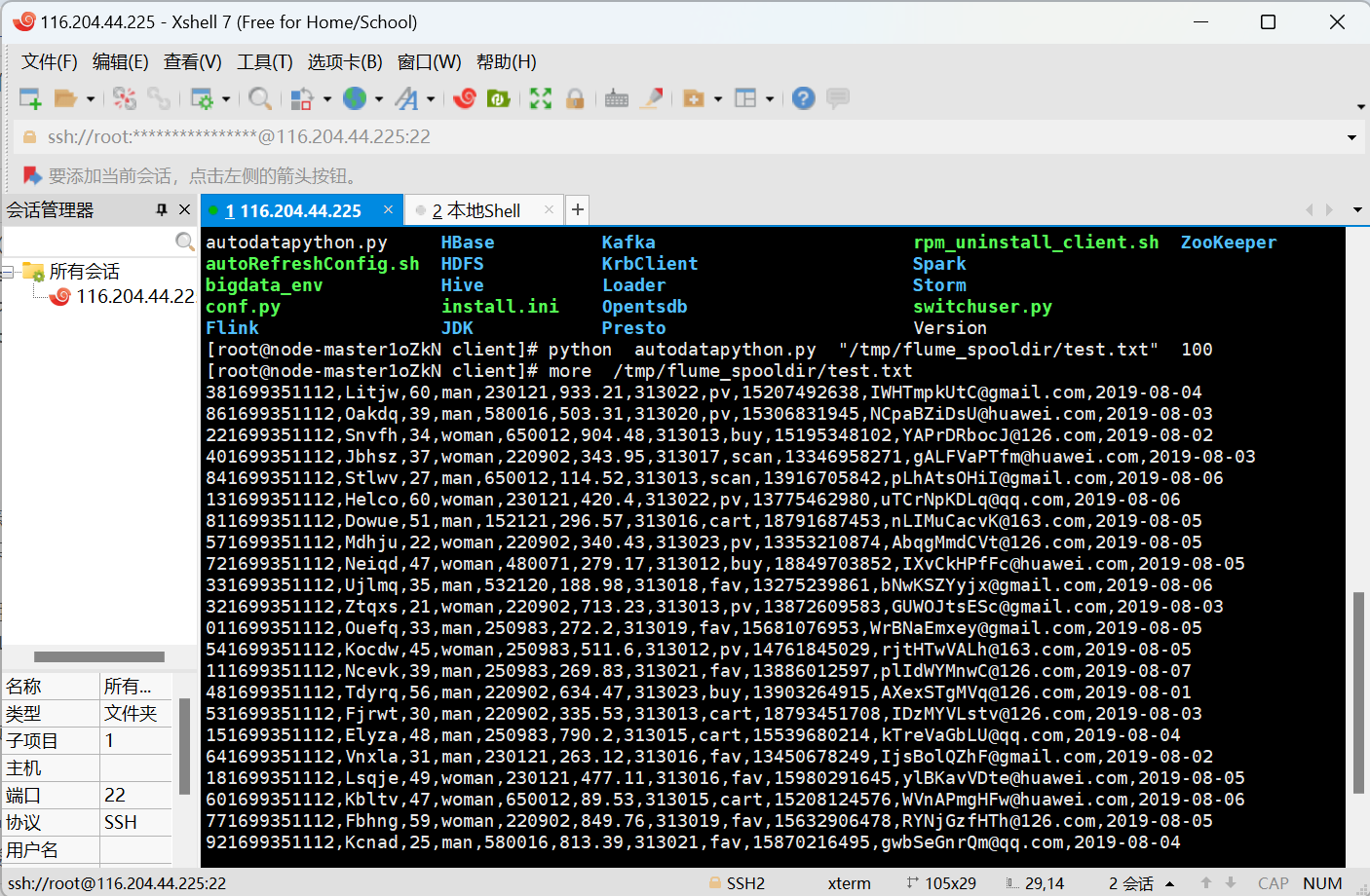

任务一:Python 脚本生成测试数据
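The lab manual's own script is not reproduced in this report. As a rough illustration of what such a generator does, the sketch below appends timestamped, comma-separated fake records to a log file that a Flume source could then tail. The record fields (`timestamp,user,action,amount`), the user and action values, and the file location are all made up for illustration.

```python
import os
import random
import tempfile
from datetime import datetime, timedelta

# Hypothetical record fields; the lab manual's real format is not shown here.
USERS = ["u001", "u002", "u003"]
ACTIONS = ["view", "click", "buy"]


def make_records(n, start, seed=0):
    """Return n fake log lines of the form timestamp,user,action,amount."""
    rng = random.Random(seed)  # seeded so the output is reproducible
    lines = []
    for i in range(n):
        ts = start + timedelta(seconds=i)  # one record per second
        amount = rng.randint(1, 100)
        lines.append(f"{ts:%Y-%m-%d %H:%M:%S},{rng.choice(USERS)},{rng.choice(ACTIONS)},{amount}")
    return lines


def append_log(path, lines):
    """Append lines to a log file, one record per line."""
    with open(path, "a", encoding="utf-8") as f:
        f.write("\n".join(lines) + "\n")


fd, path = tempfile.mkstemp(suffix=".log")  # fresh file for the demo
os.close(fd)
records = make_records(5, datetime(2023, 11, 1, 9, 30))
append_log(path, records)
print(records[0].split(",")[0])  # 2023-11-01 09:30:00
```

Appending, rather than rewriting, the file matters here: a tailing source only picks up new lines added to the end.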

-

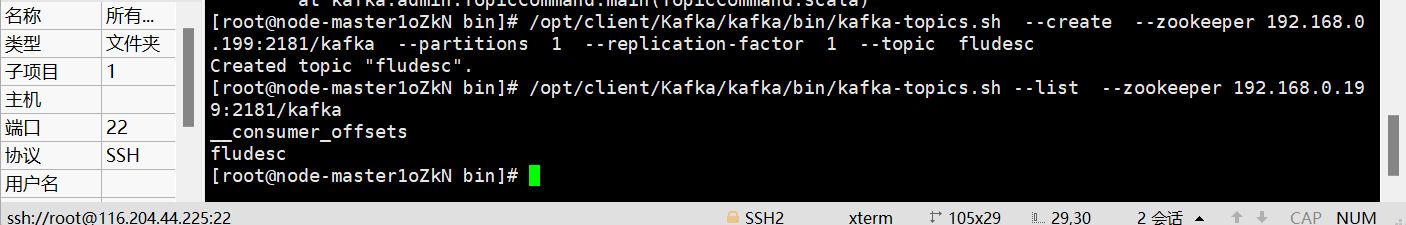

任务二:配置 Kafka

-

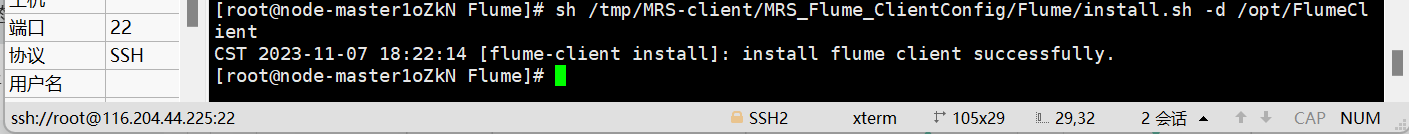

任务三: 安装 Flume 客户端

- Task 4: Configure Flume to collect data
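A Flume agent is configured as a Java-properties file that wires a source, a channel and a sink together. The manual's actual configuration is not shown in this report, so the sketch below only illustrates that structure. It renders a hypothetical agent that follows the test-data log with a `TAILDIR` source, buffers events in a `memory` channel, and publishes them to Kafka through `org.apache.flume.sink.kafka.KafkaSink`. The agent name, paths, broker address and topic are placeholders. The property keys follow the Apache Flume user guide (Flume 1.7 and later) and should be checked against the Flume version shipped with MRS.

```python
def flume_agent_config(agent, log_file, brokers, topic):
    """Render a hypothetical TAILDIR -> memory -> Kafka agent as properties text."""
    props = {
        f"{agent}.sources": "r1",
        f"{agent}.channels": "c1",
        f"{agent}.sinks": "k1",
        # Source: follow the log file, remembering read offsets in positionFile
        f"{agent}.sources.r1.type": "TAILDIR",
        f"{agent}.sources.r1.positionFile": "/tmp/flume/taildir_position.json",
        f"{agent}.sources.r1.filegroups": "f1",
        f"{agent}.sources.r1.filegroups.f1": log_file,
        f"{agent}.sources.r1.channels": "c1",  # a source may feed several channels
        # Channel: in-memory buffer between source and sink
        f"{agent}.channels.c1.type": "memory",
        f"{agent}.channels.c1.capacity": "1000",
        f"{agent}.channels.c1.transactionCapacity": "100",
        # Sink: publish each event to a Kafka topic
        f"{agent}.sinks.k1.type": "org.apache.flume.sink.kafka.KafkaSink",
        f"{agent}.sinks.k1.kafka.bootstrap.servers": brokers,
        f"{agent}.sinks.k1.kafka.topic": topic,
        f"{agent}.sinks.k1.channel": "c1",  # a sink drains exactly one channel
    }
    return "\n".join(f"{k} = {v}" for k, v in props.items())


print(flume_agent_config("client", "/tmp/test_data.log", "192.168.0.1:9092", "test_topic"))
```

Note the asymmetry the comments point out: a source lists `channels` (plural), while a sink names a single `channel`.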

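Reflections

- Learned to use MRS (MapReduce Service) on Huawei's public cloud, and learned what Flume does and how its environment is set up.

- Following the teacher's tutorial, I completed everything smoothly even though the software versions had changed.

- I recommend downloading Xshell 7 and Xftp 7 yourself from the official website.

Download link

Choosing the free license is enough.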