Convolution Layers

The Convolution Operation

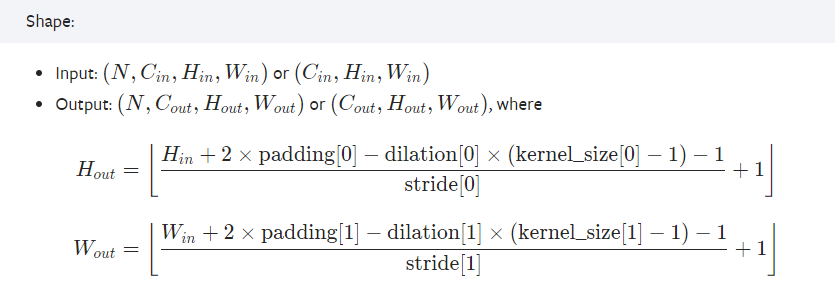

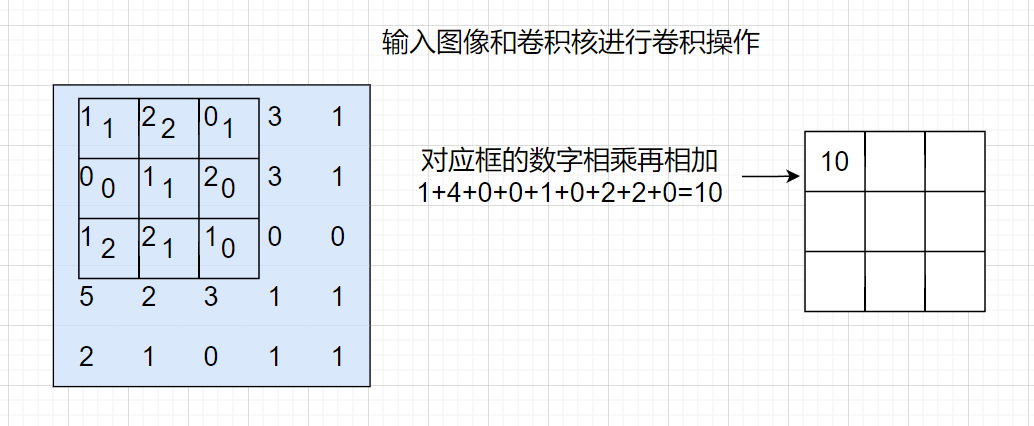

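The PyTorch documentation describes the common convolution functions (conv1d, conv2d, conv3d and others) in detail. They differ in how many spatial dimensions they slide over:

- Conv1d works on one-dimensional inputs such as sequences.
- Conv2d works on two-dimensional inputs such as images.
- Conv3d works on three-dimensional inputs such as video or volumetric data.

Conv2d is by far the most used in image processing, so conv2d is used below to illustrate the convolution operation (torch.nn.Conv2d and torch.nn.functional.conv2d).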
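The sketch below makes the one-dimensional case concrete. It is a minimal pure-Python version of what Conv1d computes for a single channel, with stride 1 and no padding. The function name `conv1d` and the sample numbers are illustrative assumptions, not PyTorch's implementation. Like PyTorch, it slides the kernel without flipping it, which is strictly speaking cross-correlation.

```python
def conv1d(signal, kernel):
    """Single-channel 1-D cross-correlation, stride 1, no padding (illustrative sketch)."""
    k = len(kernel)
    # Slide the kernel along the signal; at each position, multiply element-wise and sum.
    return [
        sum(signal[i + j] * kernel[j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]


print(conv1d([1, 2, 0, 3, 1], [1, 0, -1]))  # [1, -1, -1]
```

The PyTorch layers take batched, channel-first inputs:

- Conv1d expects (N, C, L).
- Conv2d expects (N, C, H, W).
- Conv3d expects (N, C, D, H, W).

The 2-D version below works the same way, except that the window slides over rows and columns.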

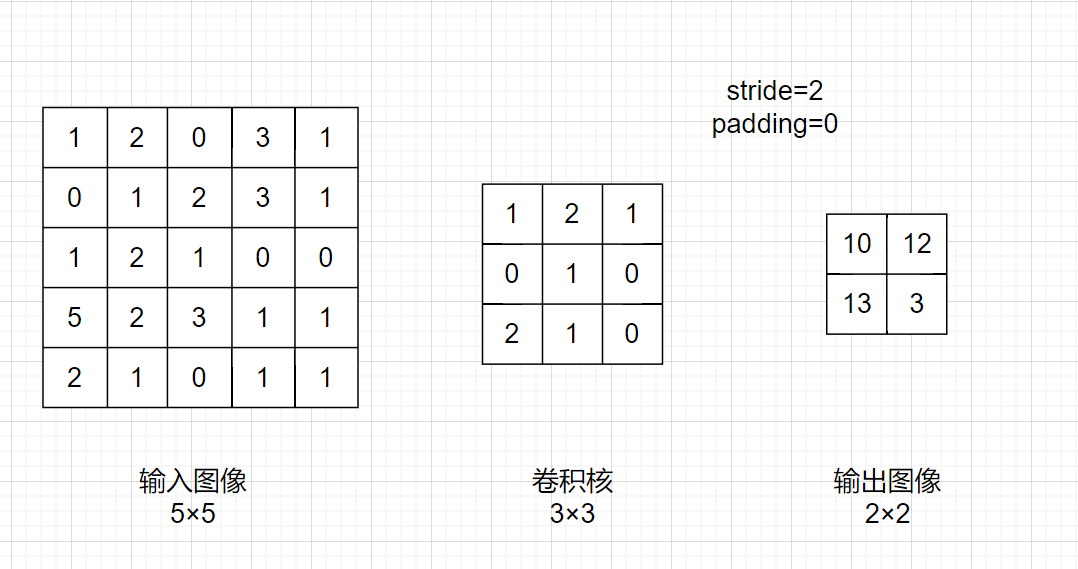

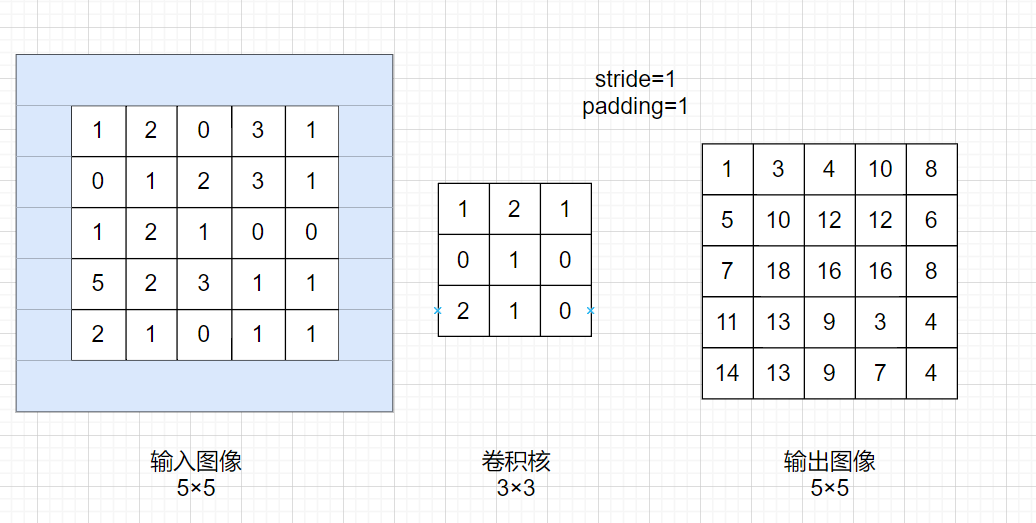

What stride and padding mean

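```python
import torch
import torch.nn.functional as F

# Use a floating-point dtype: depending on the PyTorch version,
# conv2d may raise an error for integer (Long) tensors
input = torch.tensor([[1, 2, 0, 3, 1],
                      [0, 1, 2, 3, 1],
                      [1, 2, 1, 0, 0],
                      [5, 2, 3, 1, 1],
                      [2, 1, 0, 1, 1]], dtype=torch.float32)

kernel = torch.tensor([[1, 2, 1],
                       [0, 1, 0],
                       [2, 1, 0]], dtype=torch.float32)

# conv2d expects the input to have shape (minibatch, in_channels, H, W)
# and the kernel (weight) to have shape (out_channels, in_channels / groups, kH, kW),
# so both tensors need to be reshaped
input = torch.reshape(input, (1, 1, 5, 5))
kernel = torch.reshape(kernel, (1, 1, 3, 3))

print(input.shape)   # torch.Size([1, 1, 5, 5])
print(kernel.shape)  # torch.Size([1, 1, 3, 3])

output = F.conv2d(input, kernel, stride=1)
print(output)   # values: [[10, 12, 12], [18, 16, 16], [13, 9, 3]]

output2 = F.conv2d(input, kernel, stride=2)
print(output2)  # values: [[10, 12], [13, 3]]

output3 = F.conv2d(input, kernel, stride=1, padding=1)
print(output3)  # 5×5 result: zero padding keeps the spatial size
```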
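The following pure-Python sketch re-implements single-channel conv2d with nested loops, which shows exactly what stride and padding do. It needs no PyTorch. The helper name `conv2d` is hypothetical. It assumes one input channel, one output channel, no bias and zero padding, as in the example above. Under those assumptions it reproduces the values F.conv2d returns for that example.

```python
def conv2d(image, kernel, stride=1, padding=0):
    """Single-channel 2-D cross-correlation with zero padding (a sketch, not F.conv2d itself)."""
    h, w = len(image), len(image[0])
    # Zero-pad the image by `padding` rows/columns on every side.
    padded = [[0] * (w + 2 * padding) for _ in range(h + 2 * padding)]
    for r in range(h):
        for c in range(w):
            padded[r + padding][c + padding] = image[r][c]

    kh, kw = len(kernel), len(kernel[0])
    out_h = (h + 2 * padding - kh) // stride + 1
    out_w = (w + 2 * padding - kw) // stride + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # The window's top-left corner moves `stride` cells per step.
            top, left = i * stride, j * stride
            # Multiply the window by the kernel element-wise and sum the products.
            row.append(sum(padded[top + a][left + b] * kernel[a][b]
                           for a in range(kh) for b in range(kw)))
        out.append(row)
    return out


image = [[1, 2, 0, 3, 1],
         [0, 1, 2, 3, 1],
         [1, 2, 1, 0, 0],
         [5, 2, 3, 1, 1],
         [2, 1, 0, 1, 1]]
kernel = [[1, 2, 1],
          [0, 1, 0],
          [2, 1, 0]]

print(conv2d(image, kernel, stride=1))             # [[10, 12, 12], [18, 16, 16], [13, 9, 3]]
print(conv2d(image, kernel, stride=2))             # [[10, 12], [13, 3]]
print(conv2d(image, kernel, stride=1, padding=1))  # 5×5, centre 3×3 equals the stride-1 result
```

With stride=2, the stride-1 output is simply sampled every other position. With padding=1, the border of zeros lets the kernel centre visit every original pixel, so the output stays 5×5.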

Using convolution layers in a neural network

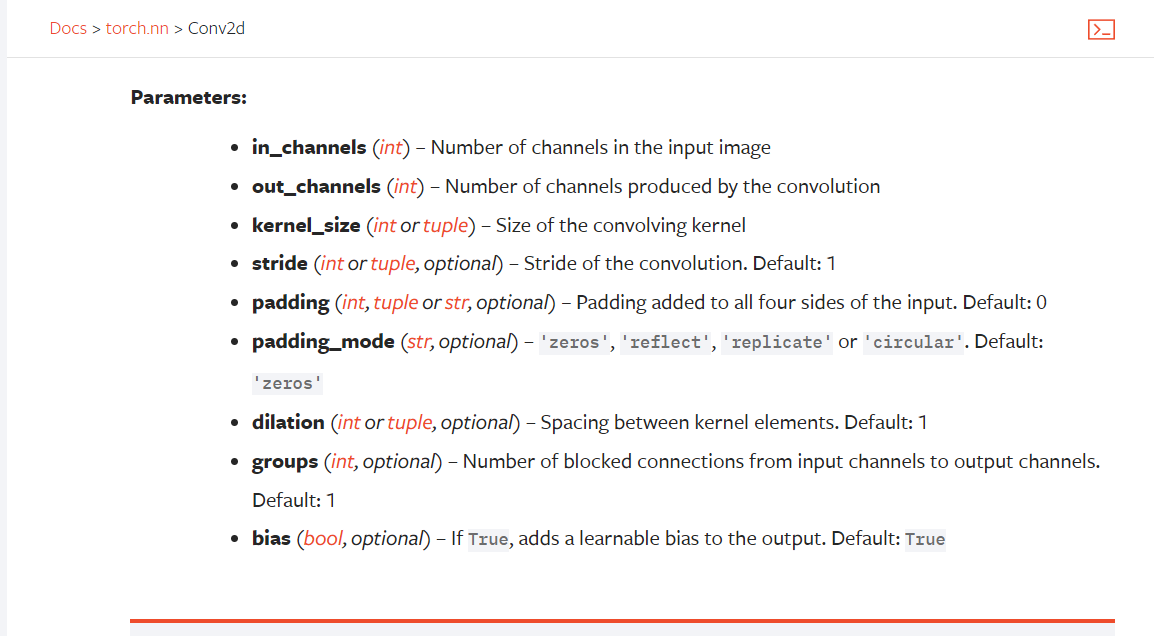

torch.nn.Conv2d(in_channels, out_channels, kernel_size, stride=1, padding=0, dilation=1, groups=1, bias=True, padding_mode='zeros', device=None, dtype=None)

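Notes (the most commonly used parameters are the first five above):

kernel_size: the size of the convolution kernel. A value of 3 means a 3×3 kernel; a tuple such as (1, 2) means a 1×2 kernel (height × width).

dilation: the spacing between kernel elements (dilated, or atrous, convolution); default 1. See the reference example.

groups: controls the connections between input and output channels. The input and output channels are split into `groups` groups that are convolved independently. The default is 1, meaning every output channel is connected to every input channel.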
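The effect of kernel_size, dilation and groups can be summarised in two small formulas, shown here as a plain-Python illustration. The helper names are hypothetical. The sketch assumes two documented facts:

- nn.Conv2d stores its weight with shape (out_channels, in_channels / groups, kH, kW).
- A kernel of size k with dilation d spans d × (k − 1) + 1 input positions along each axis.

```python
def effective_kernel_size(kernel_size, dilation=1):
    """Number of input positions a dilated kernel spans along one axis."""
    return dilation * (kernel_size - 1) + 1


def conv2d_weight_shape(in_channels, out_channels, kernel_size, groups=1):
    """Shape of the Conv2d weight tensor: (out_channels, in_channels // groups, kH, kW)."""
    if isinstance(kernel_size, int):
        kernel_size = (kernel_size, kernel_size)  # an int k means a k×k kernel
    if in_channels % groups or out_channels % groups:
        raise ValueError("in_channels and out_channels must be divisible by groups")
    return (out_channels, in_channels // groups, *kernel_size)


print(effective_kernel_size(3, dilation=2))         # 5: a 3×3 kernel with dilation 2 covers 5×5
print(conv2d_weight_shape(3, 6, 3))                 # (6, 3, 3, 3)
print(conv2d_weight_shape(4, 8, (1, 2), groups=2))  # (8, 2, 1, 2)
```

With groups=2, each output channel only sees half of the input channels, which is why the second dimension of the weight shrinks.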

Code implementation

Using the CIFAR10 dataset as an example:

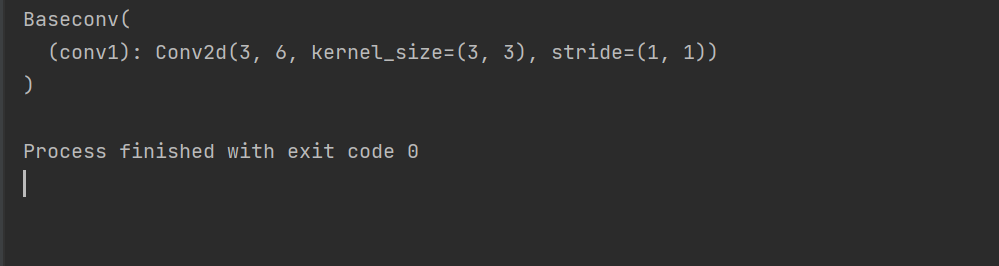

1. Display the basic information of the convolution layer

```python
import torch
import torchvision
from torch import nn
from torch.nn import Conv2d
from torch.utils.data import DataLoader

dataset = torchvision.datasets.CIFAR10("./dataset2", train=False,
                                       transform=torchvision.transforms.ToTensor(),
                                       download=True)
dataloader = DataLoader(dataset, batch_size=64)


class Baseconv(nn.Module):
    def __init__(self):
        super(Baseconv, self).__init__()
        self.conv1 = Conv2d(in_channels=3, out_channels=6, kernel_size=3, stride=1, padding=0)

    def forward(self, x):
        x = self.conv1(x)
        return x


baseconv = Baseconv()
print(baseconv)
# The output shows the layer's parameters: input channels, output channels,
# kernel size, and stride (one step at a time, both horizontally and vertically)
```

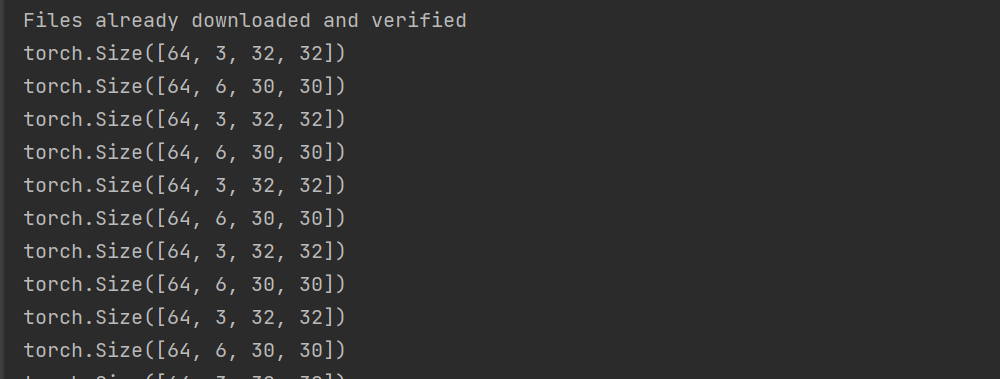
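The number of learnable parameters in the layer printed above can be checked by hand. The weight has shape (6, 3, 3, 3), and there is one bias per output channel. The helper below is a hypothetical plain-Python sketch that assumes the default bias=True. In PyTorch, the same number can be obtained with `sum(p.numel() for p in baseconv.parameters())`.

```python
def conv2d_param_count(in_channels, out_channels, kernel_size, groups=1, bias=True):
    """Learnable parameters of a Conv2d layer: weight elements plus optional bias."""
    if isinstance(kernel_size, int):
        kernel_size = (kernel_size, kernel_size)
    kh, kw = kernel_size
    weights = out_channels * (in_channels // groups) * kh * kw
    return weights + (out_channels if bias else 0)


# Baseconv.conv1 is Conv2d(in_channels=3, out_channels=6, kernel_size=3)
print(conv2d_param_count(3, 6, 3))              # 168 = 6*3*3*3 weights + 6 biases
print(conv2d_param_count(3, 6, 3, bias=False))  # 162
```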

2. Display the shapes of the input and output images

```python
import torch
import torchvision
from torch import nn
from torch.nn import Conv2d
from torch.utils.data import DataLoader

dataset = torchvision.datasets.CIFAR10("./dataset2", train=False,
                                       transform=torchvision.transforms.ToTensor(),
                                       download=True)
dataloader = DataLoader(dataset, batch_size=64)


class Baseconv(nn.Module):
    def __init__(self):
        super(Baseconv, self).__init__()
        self.conv1 = Conv2d(in_channels=3, out_channels=6, kernel_size=3, stride=1, padding=0)

    def forward(self, x):
        x = self.conv1(x)
        return x


baseconv = Baseconv()

for data in dataloader:
    imgs, targets = data
    output = baseconv(imgs)
    print(imgs.shape)
    print(output.shape)

# Input: batch size 64 (the final batch holds the remaining 16 images), 3 channels, 32×32
# Output: batch size 64, 6 channels, 30×30
```

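How the output size is calculated. For non-square inputs, compute H and W separately; W uses the same formula. This is the formula given in the Conv2d documentation:

$$H_{out} = \left\lfloor \frac{H_{in} + 2 \times \text{padding} - \text{dilation} \times (\text{kernel\_size} - 1) - 1}{\text{stride}} \right\rfloor + 1$$

With dilation = 1, this reduces to $\lfloor (H_{in} + 2 \times \text{padding} - \text{kernel\_size}) / \text{stride} \rfloor + 1$. For this example:

$$30 = \frac{32 + 2 \times 0 - 3}{1} + 1$$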
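The size formula is easy to get wrong by hand, so here is a small plain-Python helper that applies it along one axis. The name `conv_output_size` is hypothetical. It uses floor division, as in the Conv2d documentation's formula. The checks reproduce the sizes from this section: 32 → 30 for CIFAR10, plus the 3×3, 2×2 and 5×5 outputs of the earlier stride/padding example.

```python
def conv_output_size(size, kernel_size, stride=1, padding=0, dilation=1):
    """Output length along one spatial axis (apply separately to H and W)."""
    return (size + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1


print(conv_output_size(32, 3))              # 30, the CIFAR10 case above
print(conv_output_size(5, 3, stride=1))     # 3
print(conv_output_size(5, 3, stride=2))     # 2
print(conv_output_size(5, 3, padding=1))    # 5
print(conv_output_size(32, 3, dilation=2))  # 28
```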

3. View the images before and after convolution with TensorBoard

```python
import torch
import torchvision
from torch import nn
from torch.nn import Conv2d
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

dataset = torchvision.datasets.CIFAR10("./dataset2", train=False,
                                       transform=torchvision.transforms.ToTensor(),
                                       download=True)
dataloader = DataLoader(dataset, batch_size=64)


class Baseconv(nn.Module):
    def __init__(self):
        super(Baseconv, self).__init__()
        self.conv1 = Conv2d(in_channels=3, out_channels=6, kernel_size=3, stride=1, padding=0)

    def forward(self, x):
        x = self.conv1(x)
        return x


baseconv = Baseconv()
writer = SummaryWriter("logs")

step = 0
for data in dataloader:
    imgs, targets = data
    output = baseconv(imgs)
    # imgs: torch.Size([64, 3, 32, 32])
    writer.add_images("Conv2d_input", imgs, step)
    # After convolution there are 6 channels, which cannot be displayed as an image,
    # so reshape to 3 channels (a rough trick, not a rigorous operation):
    # torch.Size([64, 6, 30, 30]) -> [xxx, 3, 30, 30]
    output = torch.reshape(output, (-1, 3, 30, 30))  # -1: infer the batch size from the other dimensions
    # Note: add_images (plural), because this is a batch of images
    writer.add_images("Conv2d_output", output, step)
    step = step + 1

writer.close()
```
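The reshape trick changes the batch dimension. Six channels become three, so each batch of 64 images turns into 128 three-channel "images". The plain-Python sketch below works out the batch sizes TensorBoard receives, under two assumptions:

- The dataset is the 10,000-image CIFAR10 test split.
- DataLoader uses its default drop_last=False, so the final batch holds the remaining 16 images.

The helper name `reshaped_batch` is hypothetical.

```python
def reshaped_batch(batch, channels=6, new_channels=3):
    """Batch size after reshaping (batch, channels, H, W) -> (-1, new_channels, H, W)."""
    total = batch * channels  # H and W are unchanged, so only the channels matter
    if total % new_channels:
        raise ValueError("channels cannot be regrouped evenly")
    return total // new_channels


num_images, batch_size = 10000, 64  # CIFAR10 test split, DataLoader(batch_size=64)
full, last = divmod(num_images, batch_size)
batch_sizes = [batch_size] * full + ([last] if last else [])

print(len(batch_sizes), batch_sizes[-1])       # 157 16
print(reshaped_batch(64), reshaped_batch(16))  # 128 32
```

The images shown under "Conv2d_output" are therefore not one-to-one with the inputs. Each input image contributes two 3-channel pictures built from its 6 feature maps.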

Computing input and output sizes