代码

import requests

from bs4 import BeautifulSoup

from multiprocessing import Pool

import sqlite3

import time

from tqdm import tqdm

your_cookie="your_cookie"

headers = {

'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.7',

'Accept-Encoding': 'gzip, deflate, br',

'Accept-Language': 'zh-CN,zh;q=0.9,en;q=0.8,en-GB;q=0.7,en-US;q=0.6',

'Cache-Control': 'max-age=0',

# 'Referer': 'https://news.cnblogs.com/n/104394/',

'Sec-Ch-Ua': '"Microsoft Edge";v="117", "Not;A=Brand";v="8", "Chromium";v="117"',

'Sec-Ch-Ua-Mobile': '?0',

'Sec-Ch-Ua-Platform': '"Windows"',

'Sec-Fetch-Dest': 'document',

'Sec-Fetch-Mode': 'navigate',

'Sec-Fetch-Site': 'same-origin',

'Sec-Fetch-User': '?1',

'Upgrade-Insecure-Requests': '1',

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/117.0.0.0 Safari/537.36 Edg/117.0.2045.31',

'Cookie' : your_cookie

}

def get_news_info(news_id):

url = f"https://news.cnblogs.com/n/{news_id}/"

response = requests.get(url, headers=headers)

soup = BeautifulSoup(response.text, 'html.parser')

news_title_div = soup.find('div', {'id': 'news_title'})

news_info_div = soup.find('div', {'id': 'news_info'})

if news_title_div and news_title_div.a:

title = news_title_div.a.text

else:

title = 'Not Found'

if news_info_div:

# print(f"{str(news_info_div)=}")

time_span = news_info_div.find('span', {'class': 'time'})

view_span = news_info_div.find('span', {'class': 'view' ,'id': 'News_TotalView'})

if time_span:

time_text = time_span.text.strip()

# \.split(' ')[1]

else:

time_text = 'Not Found'

if view_span:

view_text = view_span.text

else:

view_text = 'Not Found'

else:

time_text = 'Not Found'

view_text = 'Not Found'

news_body = 'Not Found'

news_body_div=soup.find('div', {'id': 'news_body'})

if news_body_div:

news_body = str(news_body_div)

else:

news_body = 'Not Found'

return {

'news_id' : news_id,

'title': title,

'time': time_text,

'views': view_text,

'news_body': news_body,

'url': url,

}

def save_to_db(news_info,filename):

conn = sqlite3.connect(f'news_{filename}.db')

c = conn.cursor()

c.execute('''CREATE TABLE IF NOT EXISTS news

(news_id INT PRIMARY KEY, title TEXT, time TEXT, views TEXT, news_body TEXT, url TEXT)''')

c.execute('''INSERT INTO news VALUES (?,?,?,?,?,?)''',

(news_info['news_id'], news_info['title'], news_info['time'], news_info['views'],news_info['news_body'], news_info['url']))

conn.commit()

conn.close()

if __name__ == '__main__':

with Pool(20) as p: # Number of parallel processes

new_id_start=75995-500

new_id_end=75995

news_ids = list(range(new_id_start,new_id_end ))

start = time.time()

for news_info in tqdm(p.imap_unordered(get_news_info, news_ids), total=len(news_ids)):

save_to_db(news_info=news_info,filename=f"{new_id_start}~{new_id_end}")

end = time.time()

print(f'Time taken: {end - start} seconds')

## https://zzk.cnblogs.com/s?Keywords=facebook&datetimerange=Customer&from=2010-10-01&to=2010-11-01 也可以用博客园的找找看

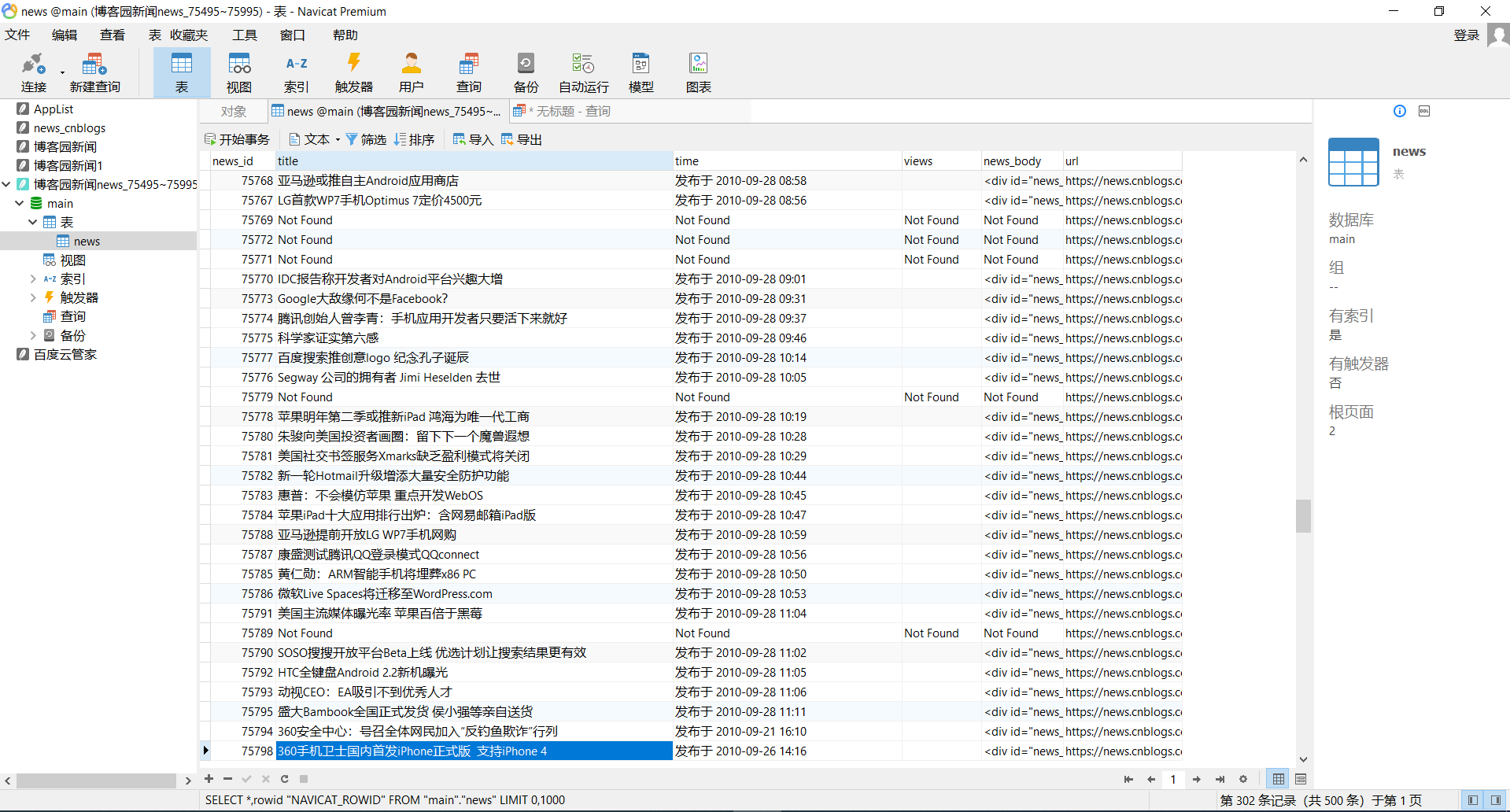

结果展示

后面可以考虑:洗数据,数据挖掘之类的。