Sogou Web Page

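    import requests

    url = "https://www.sogou.com"

    # Request the page 20 times and report the status and size of each response.
    for _ in range(20):
        response = requests.get(url, timeout=30)
        print(f"Status code: {response.status_code}")
        text_length = len(response.text)        # decoded str, counted in characters
        content_length = len(response.content)  # raw payload, counted in bytes
        print(f"Content of the text attribute: {response.text}")
        print(f"Length of the text attribute: {text_length} characters")
        print(f"Length of the content attribute: {content_length} bytes")
        print("-" * 30)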

Output

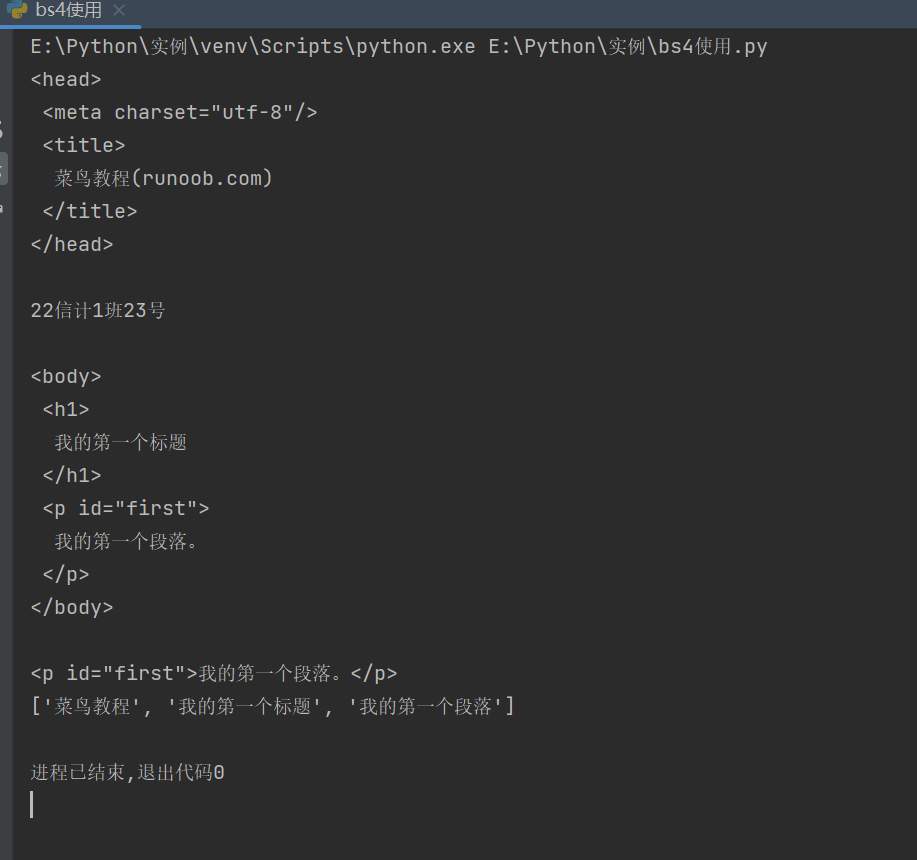
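
The two lengths reported by the script differ because `response.text` is a decoded `str` (counted in characters) while `response.content` is the raw `bytes` payload. The following is a minimal offline sketch of the same effect. It assumes a made-up sample string, and the helper name `text_and_byte_lengths` is hypothetical, not part of `requests`.

```python
def text_and_byte_lengths(text: str, encoding: str = "utf-8") -> tuple[int, int]:
    """Return (number of characters, number of bytes after encoding)."""
    encoded = text.encode(encoding)
    return len(text), len(encoded)


# Illustrative sample: two Chinese characters, one space, five ASCII letters.
sample = "搜狗 Sogou"
chars, size = text_and_byte_lengths(sample)
print(f"{chars} characters, {size} bytes in UTF-8")

# The byte count depends on the encoding; GBK uses two bytes per Chinese character.
print(text_and_byte_lengths(sample, "gbk"))
```

Each Chinese character takes three bytes in UTF-8, so a page with Chinese text will typically report more bytes than characters.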

Using bs4 (BeautifulSoup)

    from bs4 import BeautifulSoup
    import re

    text = """
    <!DOCTYPE html>
    <html>
    <head>
    <meta charset="utf-8">
    <title>菜鸟教程(runoob.com)</title>
    </head>
    <body>
        <h1>我的第一个标题</h1>
        <p id="first">我的第一个段落。</p>
    </body>
        <table border="1">
        <tr>
            <td>row 1, cell 1</td>
            <td>row 1, cell 2</td>
        </tr>
        <tr>
            <td>row 2, cell 1</td>
            <td>row 2, cell 2</td>
        </tr>
    </table>
    </html>
    """

    # Create the BeautifulSoup object.
    soup = BeautifulSoup(text, features="html.parser")

    # Print the head tag, then the student identifier (class and the last two digits of the student ID).
    print(soup.head.prettify())
    print("22信计1班23号\n")

    # Get the body tag.
    print(soup.body.prettify())

    # Get the element whose id is "first".
    first_p = soup.find(id="first")
    print(first_p)

    # Extract and print the runs of Chinese characters.
    pattern = re.compile(r'[\u4e00-\u9fff]+')
    chinese_chars = pattern.findall(text)
    print(chinese_chars)

Output

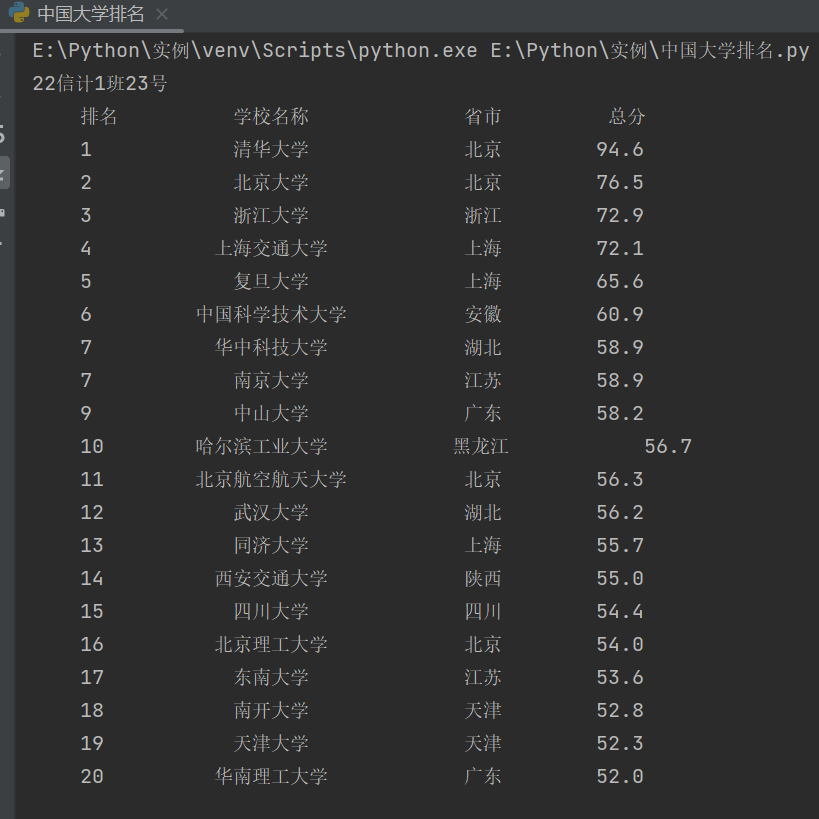
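
The regular expression in the script matches only the CJK Unified Ideographs range `\u4e00-\u9fff`, so Chinese punctuation and ASCII text are left out of the result. The sketch below illustrates this on a shortened sample. The helper name `chinese_runs` is hypothetical and the sample string is an abbreviated version of the HTML used above.

```python
import re

# CJK Unified Ideographs block; punctuation such as "。" (U+3002) lies outside it.
CHINESE_RUN = re.compile(r"[\u4e00-\u9fff]+")


def chinese_runs(markup: str) -> list[str]:
    """Return every maximal run of Chinese characters found in the markup."""
    return CHINESE_RUN.findall(markup)


sample = '<title>菜鸟教程(runoob.com)</title><p id="first">我的第一个段落。</p>'
print(chinese_runs(sample))  # ['菜鸟教程', '我的第一个段落']
```

The full stop "。" and the Latin text `runoob.com` do not appear in the output, which is why the result is a list of separate runs rather than the original sentences.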

Chinese University Rankings

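    import csv

    import requests
    from bs4 import BeautifulSoup

    all_univ = []


    def get_html_text(url):
        """Return the page text, or an empty string if the request fails."""
        try:
            r = requests.get(url, timeout=30)
            r.raise_for_status()
            r.encoding = 'utf-8'
            return r.text
        except requests.RequestException:
            return ""


    def fill_univ_list(soup):
        """Collect rank, Chinese name, province/city and total score from each table row."""
        for tr in soup.find_all('tr'):
            ltd = tr.find_all('td')
            if len(ltd) < 5:
                continue
            name = ltd[1].find('a', class_='name-cn')
            if name is None:
                continue
            # get_text() still works when a cell has several children,
            # a case in which .string returns None.
            all_univ.append([
                ltd[0].get_text(strip=True),
                name.get_text(strip=True),
                ltd[2].get_text(strip=True),
                ltd[4].get_text(strip=True),
            ])


    def print_univ_list(num):
        """Print the first num universities and write them to a CSV file."""
        file_name = "大学排行.csv"
        # Columns: rank, university name, province/city, total score.
        header = ["排名", "学校名称", "省市", "总分"]
        # chr(12288) is the full-width space, used as the fill character for the name column.
        row_format = "{0:^10}\t{1:{4}^10}\t{2:^10}\t{3:^10}"
        print(row_format.format(*header, chr(12288)))
        with open(file_name, 'w', newline='', encoding='utf-8') as f:
            writer = csv.writer(f)
            writer.writerow(header)
            # Slicing avoids an IndexError when fewer than num rows were scraped.
            for u in all_univ[:num]:
                writer.writerow(u)
                print(row_format.format(u[0], u[1], u[2], u[3], chr(12288)))


    def main(num):
        url = "https://www.shanghairanking.cn/rankings/bcur/201911.html"
        html = get_html_text(url)
        soup = BeautifulSoup(html, features="html.parser")
        fill_univ_list(soup)
        print_univ_list(num)
        print("22信计1班23号")


    main(20)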
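
The format string in `print_univ_list` passes `chr(12288)` as the fill character for the university name column. This is the full-width (ideographic) space, which is padded at the same width as a Chinese character so the columns line up in a terminal. A minimal sketch of that one technique follows. The helper name `center_cjk` is hypothetical and is not part of the script above.

```python
FULL_WIDTH_SPACE = chr(12288)  # U+3000 IDEOGRAPHIC SPACE


def center_cjk(value: str, width: int = 10) -> str:
    """Center value in a field of `width` characters, padding with full-width spaces."""
    # The nested fields {1} and {2} supply the fill character and the width.
    return "{0:{1}^{2}}".format(value, FULL_WIDTH_SPACE, width)


for name in ["清华大学", "上海交通大学", "中国科学技术大学"]:
    # Vertical bars make the padded field boundaries visible.
    print("|" + center_cjk(name) + "|")
```

Padding with the ordinary ASCII space would give the same character count but a narrower rendered width, so names of different lengths would drift out of alignment.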

Output

Converting to a CSV File

    import csv

    # Columns: rank, university name, province/city, total score.
    data = [
        ["排名", "学校名称", "省市", "总分"],
        [1, "清华大学", "北京", 94.6],
        [2, "北京大学", "北京", 76.5],
        [3, "浙江大学", "浙江", 72.9],
        [4, "上海交通大学", "上海", 72.1],
        [5, "复旦大学", "上海", 65.6],
        [6, "中国科学技术大学", "安徽", 60.9],
        [7, "华中科技大学", "湖北", 58.9],
        [7, "南京大学", "江苏", 58.9],
        [9, "中山大学", "广东", 58.2],
        [10, "哈尔滨工业大学", "黑龙江", 56.7],
        [11, "北京航空航天大学", "北京", 56.3],
        [12, "武汉大学", "湖北", 56.2],
        [13, "同济大学", "上海", 55.7],
        [14, "西安交通大学", "陕西", 55.0],
        [15, "四川大学", "四川", 54.4],
        [16, "北京理工大学", "北京", 54.0],
        [17, "东南大学", "江苏", 53.6],
        [18, "南开大学", "天津", 52.8],
        [19, "天津大学", "天津", 52.3],
        [20, "华南理工大学", "广东", 52.0],
    ]

    # utf-8-sig writes a byte order mark at the start of the file.
    with open('university_ranking.csv', 'w', newline='', encoding='utf-8-sig') as file:
        writer = csv.writer(file)
        writer.writerows(data)
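
The script above writes with `encoding='utf-8-sig'`, which prepends a byte order mark (BOM). This is commonly done so that spreadsheet programs recognise the file as UTF-8. The sketch below checks for the BOM and reads the rows back. It uses a temporary file and only two of the rows, and the helper name `round_trip` is hypothetical.

```python
import csv
import os
import tempfile


def round_trip(rows):
    """Write rows as UTF-8 with a BOM, then return (starts_with_bom, rows_read_back)."""
    fd, path = tempfile.mkstemp(suffix=".csv")
    os.close(fd)
    try:
        with open(path, "w", newline="", encoding="utf-8-sig") as f:
            csv.writer(f).writerows(rows)
        # Inspect the raw bytes: the UTF-8 BOM is EF BB BF.
        with open(path, "rb") as f:
            starts_with_bom = f.read(3) == b"\xef\xbb\xbf"
        # Reading with utf-8-sig strips the BOM again.
        with open(path, newline="", encoding="utf-8-sig") as f:
            rows_read = list(csv.reader(f))
    finally:
        os.remove(path)
    return starts_with_bom, rows_read


has_bom, rows = round_trip([["排名", "学校名称", "省市", "总分"], [1, "清华大学", "北京", 94.6]])
print(has_bom)
print(rows)
```

Note that `csv.reader` returns every field as a string, so the rank and score need to be converted with `int()` and `float()` if they are used numerically after reading.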