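1. Prometheus Service Discovery

Service discovery lets monitoring targets be added to Prometheus automatically.

Prometheus targets can be configured statically or discovered dynamically. The common mechanisms are:

- static_configs: static configuration; targets are written directly into the configuration file or ConfigMap

- file_sd_configs: file-based service discovery; targets live in a dedicated file, and a new monitoring target is added by editing that file

- dns_sd_configs: DNS service discovery

- kubernetes_sd_configs: Kubernetes service discovery

- consul_sd_configs: Consul service discovery

When monitoring Kubernetes, resources such as Pods and Services change frequently, which is where automatic target discovery in Prometheus shows its value most clearly.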
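As a small illustration of the file-based mechanism in the list above, the following Python sketch builds a target file in the JSON layout that file_sd_configs reads (a list of groups, each with `targets` and optional `labels`). The helper names, the `env` label, and the file name are hypothetical and chosen only for this example.

```python
import json
import os
import tempfile


def build_file_sd(targets, labels):
    """Return one target group in the list-of-groups layout used by file_sd."""
    return [{"targets": sorted(targets), "labels": dict(labels)}]


def write_file_sd(path, groups):
    """Write to a temporary file first, then rename, so a reader never sees a partial file."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w") as handle:
        json.dump(groups, handle, indent=2)
    os.replace(tmp_path, path)


# Two of the node_exporter addresses used later in this article; "env" is an assumed label.
groups = build_file_sd(
    ["192.168.1.231:9100", "192.168.1.230:9100"],
    {"env": "demo"},
)
```

Calling `write_file_sd("targets.json", groups)` would produce a file that a `file_sd_configs` entry could point at; adding a target then means regenerating the file rather than editing the main configuration.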

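The rest of this section uses Consul to implement automatic discovery.

1.1 Install Consul in Kubernetes

Install it with Helm.

helm pull bitnami/consul --untar

Use the nfs StorageClass for persistent data by editing values.yaml: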

# cat values.yaml |grep nfs-client

storageClass: "nfs-client"

storageClass: "nfs-client"

Install Consul with Helm:

# helm install prometheus-consul .

NAME: prometheus-consul

LAST DEPLOYED: Mon Oct 30 20:28:12 2023

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

CHART NAME: consul

CHART VERSION: 10.13.10

APP VERSION: 1.16.2

** Please be patient while the chart is being deployed **

Consul can be accessed within the cluster on port 8300 at prometheus-consul-headless.default.svc.cluster.local

In order to access to the Consul Web UI:

1. Get the Consul URL by running these commands:

kubectl port-forward --namespace default svc/prometheus-consul-ui 80:80

echo "Consul URL: http://127.0.0.1:80"

2. Access ASP.NET Core using the obtained URL.

Please take into account that you need to wait until a cluster leader is elected before using the Consul Web UI.

In order to check the status of the cluster you can run the following command:

kubectl exec -it prometheus-consul-0 -- consul members

Furthermore, to know which Consul node is the cluster leader run this other command:

kubectl exec -it prometheus-consul-0 -- consul operator raft list-peers

Check the PVCs:

# kubectl get pvc|grep consul

data-prometheus-consul-0 Bound pvc-4d020292-6829-4594-af6e-af0e51bdd00e 8Gi RWO nfs-client 67s

data-prometheus-consul-1 Bound pvc-5c1d003a-3a3d-4793-8602-ffc92b85782f 8Gi RWO nfs-client 67s

data-prometheus-consul-2 Bound pvc-45ba8ec0-5f6f-45ae-8771-a1966b2d6e4c 8Gi RWO nfs-client 67s

Check the Pods:

# kubectl get pod -o wide |grep consul

prometheus-consul-0 1/1 Running 0 10m 10.244.154.40 node-1-233 <none> <none>

prometheus-consul-1 1/1 Running 1 (54s ago) 10m 10.244.167.169 node-1-231 <none> <none>

prometheus-consul-2 1/1 Running 2 (84s ago) 10m 10.244.29.44 node-1-232 <none> <none>

Check the Consul Services:

# kubectl get svc |grep consul

prometheus-consul-headless ClusterIP None <none> 8500/TCP,8400/TCP,8301/TCP,8301/UDP,8300/TCP,8600/TCP,8600/UDP 11m

prometheus-consul-ui ClusterIP 10.104.2.14 <none> 80/TCP

Register a service through the Consul API:

# curl -X PUT -d '{"id": "node-1-231","name": "node-1-231","address": "192.168.1.231","port": 9100,"tags": ["service"],"checks": [{"http": "http://192.168.1.231:9100/","interval": "5s"}]}' http://10.104.2.14/v1/agent/service/register

Check the registered data:

# curl http://10.104.2.14/v1/catalog/service/node-1-231

[{"ID":"cbfda4ba-e8cc-089c-4e9b-dcd8fd6d7b46","Node":"prometheus-consul-2","Address":"10.244.29.44","Datacenter":"dc1","TaggedAddresses":{"lan":"10.244.29.44","lan_ipv4":"10.244.29.44","wan":"10.244.29.44","wan_ipv4":"10.244.29.44"},"NodeMeta":{"consul-network-segment":"","consul-version":"1.16.2"},"ServiceKind":"","ServiceID":"node-1-231","ServiceName":"node-1-231","ServiceTags":["service"],"ServiceAddress":"192.168.1.231","ServiceTaggedAddresses":{"lan_ipv4":{"Address":"192.168.1.231","Port":9100},"wan_ipv4":{"Address":"192.168.1.231","Port":9100}},"ServiceWeights":{"Passing":1,"Warning":1},"ServiceMeta":{},"ServicePort":9100,"ServiceSocketPath":"","ServiceEnableTagOverride":false,"ServiceProxy":{"Mode":"","MeshGateway":{},"Expose":{}},"ServiceConnect":{},"ServiceLocality":null,"CreateIndex":34,"ModifyIndex":34}]

1.2 Add Consul to the Prometheus configuration

# cat prometheus_config.yaml

apiVersion: v1
data:
  prometheus.yaml: |
    global:
      external_labels:
        monitor: prometheus
    scrape_configs:
    - job_name: 'consul'
      consul_sd_configs:
      - server: '10.104.2.14'
    - job_name: node_exporter
      static_configs:
      - targets:
        - 192.168.1.230:9100
        - 192.168.1.231:9100
        - 192.168.1.232:9100
        - 192.168.1.233:9100

Re-import the configuration:

# kubectl delete -f prometheus_config.yaml

configmap "prometheus-server" deleted

[root@master-1-230 9.4]# kubectl apply -f prometheus_config.yaml

configmap/prometheus-server created

Restart Prometheus:

# kubectl get po |grep prometheus-server |awk '{print $1}' |xargs -i kubectl delete po {}

pod "prometheus-server-78b4b8bf58-prvr7" deleted
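The registration request from section 1.1 can also be built programmatically. The Python sketch below reproduces the same JSON body and sends it to the same `/v1/agent/service/register` path used by the curl command; the function names and the default tag and interval arguments are illustrative choices, and the network call is left commented out because it needs a reachable Consul agent.

```python
import json
import urllib.request


def build_registration(service_id, address, port, tags=("service",), interval="5s"):
    """Build the same body as the curl example: one service with one HTTP check."""
    return {
        "id": service_id,
        "name": service_id,
        "address": address,
        "port": port,
        "tags": list(tags),
        "checks": [{"http": f"http://{address}:{port}/", "interval": interval}],
    }


def register(consul_url, payload, timeout=5):
    """PUT the payload to the Consul agent and return the HTTP status code."""
    request = urllib.request.Request(
        f"{consul_url}/v1/agent/service/register",
        data=json.dumps(payload).encode("utf-8"),
        method="PUT",
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status


payload = build_registration("node-1-231", "192.168.1.231", 9100)
print(json.dumps(payload))
# register("http://10.104.2.14", payload)  # requires access to the Consul UI Service
```

Keeping the payload builder separate from the HTTP call makes it easy to register many hosts in a loop and to check the body before anything is sent.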

2. Monitoring Nginx with Consul

2.1 Create the web01 Pod with kubectl

kubectl run web01 --image=nginx:1.25.2

# kubectl get pod|grep web

web01 1/1 Running 0 8m42s

2.2 Enable the status endpoint in the Nginx Pod

# kubectl cp web01:/etc/nginx/conf.d/default.conf ./default.conf

tar: Removing leading `/' from member names

# cat default.conf |egrep -v "#|^$"

server {

listen 80;

listen [::]:80;

server_name localhost;

location /nginx_status {

stub_status;

}

location / {

root /usr/share/nginx/html;

index index.html index.htm;

}

error_page 500 502 503 504 /50x.html;

location = /50x.html {

root /usr/share/nginx/html;

}

}

# kubectl cp default.conf web01:/etc/nginx/conf.d/default.conf

Reload the Nginx configuration:

# kubectl exec -it web01 -- nginx -s reload

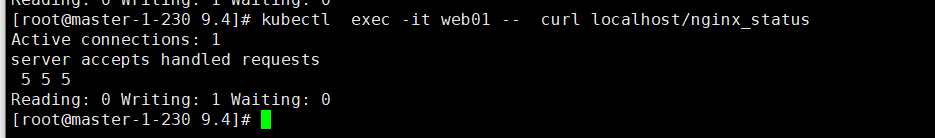

2023/10/30 13:18:49 [notice] 337#337: signal process started

2.3 Verify that the configuration works

# kubectl exec -it web01 -- curl localhost/nginx_status
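The stub_status page returns a few lines of plain text, which is what the exporter configured in the following steps reads from the /nginx_status URL. As a rough sketch of how that text maps to individual counters, the Python snippet below parses the standard stub_status layout. The sample numbers are made up for illustration and the function name is hypothetical.

```python
import re

# Illustrative stub_status output; the numbers are invented for this example.
SAMPLE = """Active connections: 1
server accepts handled requests
 16 16 31
Reading: 0 Writing: 1 Waiting: 0
"""


def parse_stub_status(text):
    """Extract the seven counters that the stub_status module reports."""
    active = re.search(r"Active connections:\s+(\d+)", text)
    totals = re.search(r"^[ \t]*(\d+)[ \t]+(\d+)[ \t]+(\d+)[ \t]*$", text, re.MULTILINE)
    states = re.search(r"Reading:\s+(\d+)\s+Writing:\s+(\d+)\s+Waiting:\s+(\d+)", text)
    if not (active and totals and states):
        raise ValueError("input does not look like stub_status output")
    return {
        "active": int(active.group(1)),
        "accepts": int(totals.group(1)),
        "handled": int(totals.group(2)),
        "requests": int(totals.group(3)),
        "reading": int(states.group(1)),
        "writing": int(states.group(2)),
        "waiting": int(states.group(3)),
    }


status = parse_stub_status(SAMPLE)
print(status)
```

If the curl command above returns text in this shape, the status location is configured correctly and an exporter can scrape it.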

2.4 Install the Nginx exporter

helm repo add prometheus-community https://prometheus-community.github.io/helm-charts

helm repo update

helm pull prometheus-community/prometheus-nginx-exporter --untar

Alternatively, download the chart archive directly:

https://github.com/prometheus-community/helm-charts/releases/download/prometheus-nginx-exporter-0.2.0/prometheus-nginx-exporter-0.2.0.tgz

2.5 Get the IP of the target Nginx Pod

# kubectl get po -o wide|grep web01

web01 1/1 Running 0 27m 10.244.154.42 node-1-233 <none> <none>

Edit values.yaml:

# cd prometheus-nginx-exporter

[root@master-1-230 prometheus-nginx-exporter]# ll

total 16

-rw-r--r-- 1 root root 221 Oct 20 04:34 Chart.lock

drwxr-xr-x 3 root root 19 Oct 30 21:30 charts

-rw-r--r-- 1 root root 1027 Oct 20 04:34 Chart.yaml

drwxr-xr-x 2 root root 28 Oct 30 21:30 ci

-rw-r--r-- 1 root root 1512 Oct 20 04:34 README.md

drwxr-xr-x 2 root root 181 Oct 30 21:30 templates

-rw-r--r-- 1 root root 3207 Oct 20 04:34 values.yaml

10.244.154.42

vi values.yaml

Comment out the line nginxServer: "http://{{ .Release.Name }}.{{ .Release.Namespace }}.svc.cluster.local:8080/stub_status" and add the following line in its place:

nginxServer: "http://10.244.154.42/nginx_status"

2.6 Install prometheus-nginx-exporter

# helm install prometheus-nginx-exporter .

NAME: prometheus-nginx-exporter

LAST DEPLOYED: Mon Oct 30 21:33:27 2023

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

1. Get the application URL by running these commands:

export POD_NAME=$(kubectl get pods --namespace default -l "app.kubernetes.io/name=prometheus-nginx-exporter,app.kubernetes.io/instance=prometheus-nginx-exporter" -o jsonpath="{.items[0].metadata.name}")

export CONTAINER_PORT=$(kubectl get pod --namespace default $POD_NAME -o jsonpath="{.spec.containers[0].ports[0].containerPort}")

echo "Visit http://127.0.0.1:8080 to use your application"

kubectl --namespace default port-forward $POD_NAME 8080:$CONTAINER_PORT

Check the Pod status:

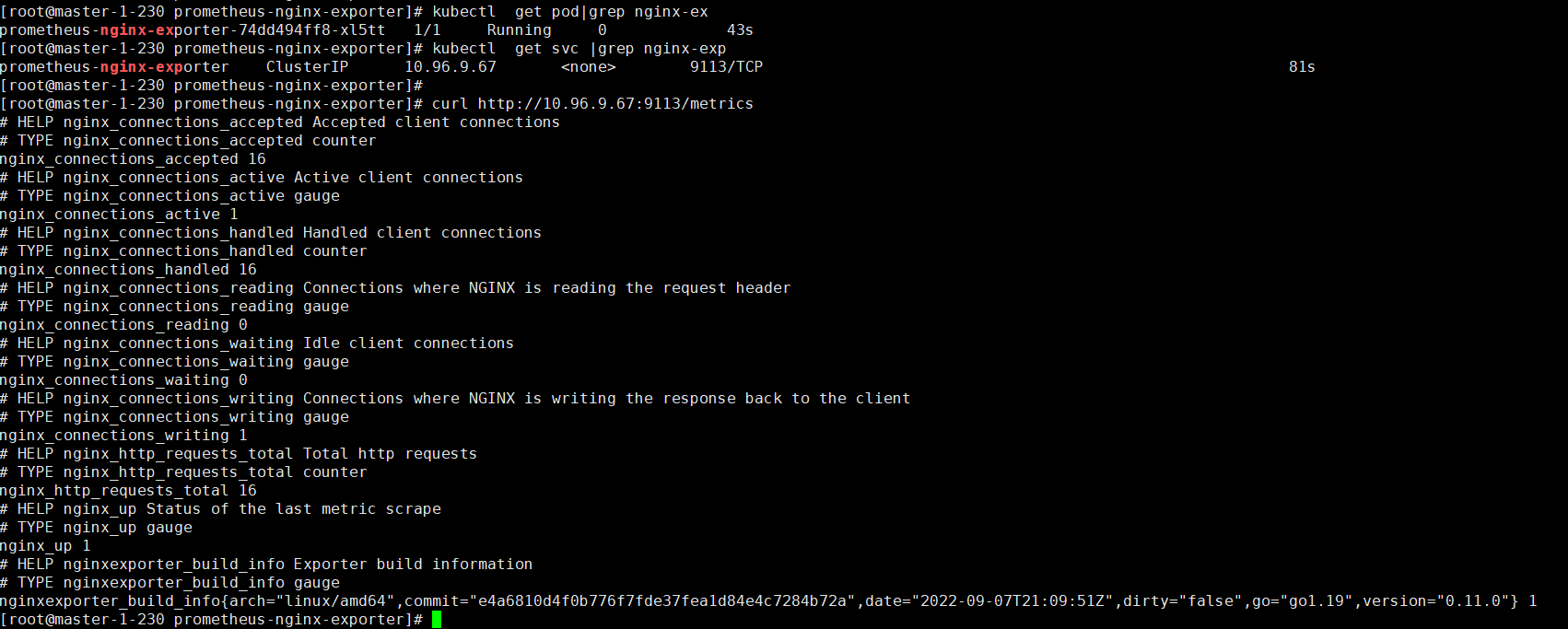

# kubectl get pod|grep nginx-ex

prometheus-nginx-exporter-74dd494ff8-xl5tt 1/1 Running 0 43s

Check the Service IP:

# kubectl get svc |grep nginx-exp

prometheus-nginx-exporter ClusterIP 10.96.9.67 <none> 9113/TCP

2.7 Access the metrics

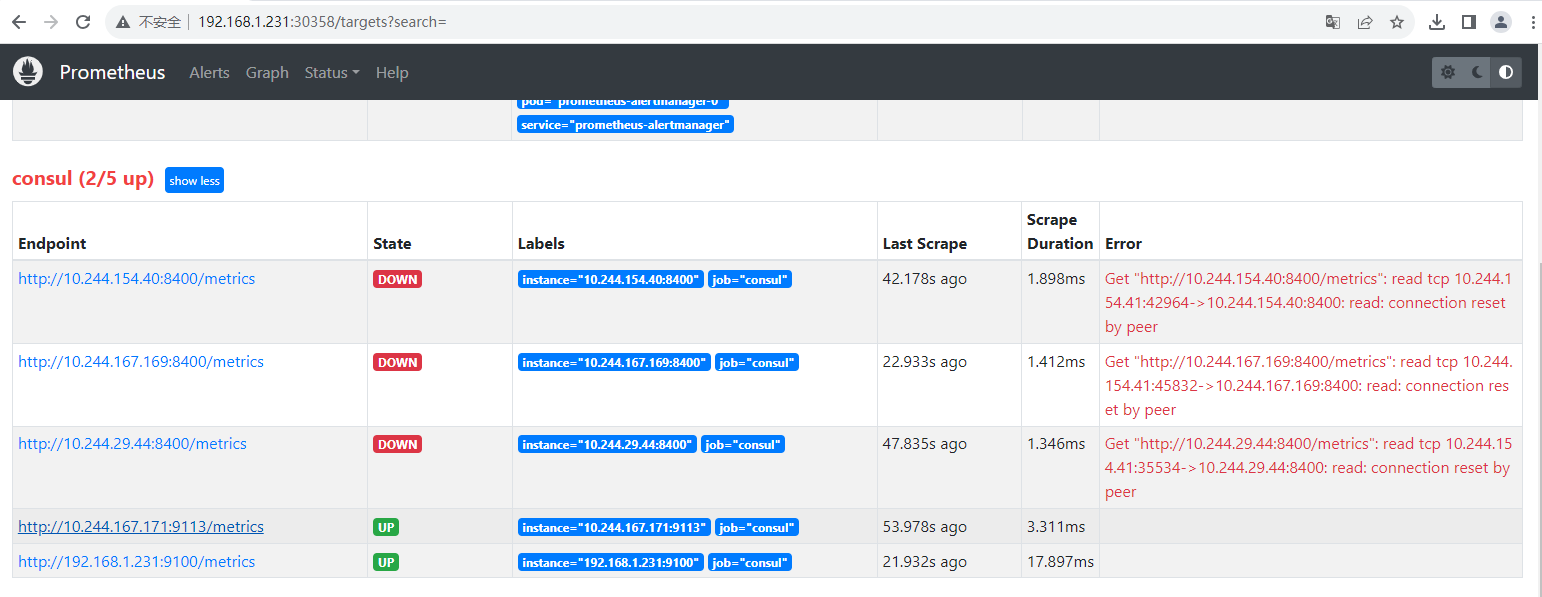

2.8 Register the metrics address in Consul

# curl -X PUT -d '{"id": "web01","name": "web01","address": "10.244.167.171","port": 9113,"tags": ["service"],"checks": [{"http": "http://10.244.167.171:9113/","interval": "5s"}]}' http://10.104.2.14/v1/agent/service/register

Check the registration result:

# curl http://10.104.2.14/v1/catalog/service/web01

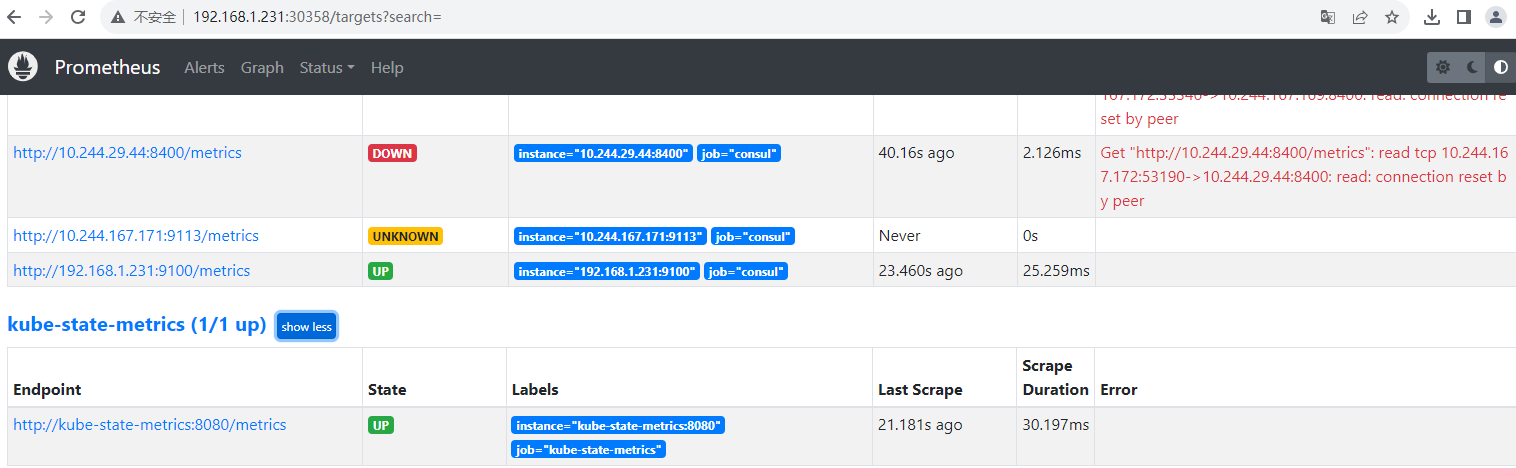

[{"ID":"cbfda4ba-e8cc-089c-4e9b-dcd8fd6d7b46","Node":"prometheus-consul-2","Address":"10.244.29.44","Datacenter":"dc1","TaggedAddresses":{"lan":"10.244.29.44","lan_ipv4":"10.244.29.44","wan":"10.244.29.44","wan_ipv4":"10.244.29.44"},"NodeMeta":{"consul-network-segment":"","consul-version":"1.16.2"},"ServiceKind":"","ServiceID":"web01","ServiceName":"web01","ServiceTags":["service"],"ServiceAddress":"10.244.167.171","ServiceTaggedAddresses":{"lan_ipv4":{"Address":"10.244.167.171","Port":9113},"wan_ipv4":{"Address":"10.244.167.171","Port":9113}},"ServiceWeights":{"Passing":1,"Warning":1},"ServiceMeta":{},"ServicePort":9113,"ServiceSocketPath":"","ServiceEnableTagOverride":false,"ServiceProxy":{"Mode":"","MeshGateway":{},"Expose":{}},"ServiceConnect":{},"ServiceLocality":null,"CreateIndex":522,"ModifyIndex":522}]

2.9 Check the target on the Prometheus page

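3. kube-state-metrics and metrics-server

3.1 Kube-state-metrics

3.1.1 Introduction

Kube-state-metrics is a Kubernetes add-on that turns the state of the resources in a cluster into metrics, so that the health of the cluster can be monitored.

A Kubernetes cluster contains many objects (Pods, Deployments, Services, and so on), each with state such as replica counts, status, and labels. Kube-state-metrics watches the Kubernetes API for changes, keeps an up-to-date view of these objects, and exposes their state as metrics. The metrics can drive monitoring and alerting, letting operators see the health and other important state of the workloads in the cluster.

Prometheus can scrape the metrics that kube-state-metrics generates and use them to build dashboards, define alerting rules, and analyze the cluster. Combining kube-state-metrics with Prometheus gives a clearer picture of how the cluster is running.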
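Each object state is exposed as a line in the Prometheus text format: a metric name, a set of labels, and a numeric value. The Python sketch below shows one way to read such a line. It is deliberately minimal (no comment lines, no timestamps), the function name is hypothetical, and the sample line is an illustrative example in the style of kube-state-metrics output rather than output captured from this cluster.

```python
import re

# metric_name{label="value",...} value
LINE_RE = re.compile(r'^([a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{(.*)\})?\s+(\S+)$')
LABEL_RE = re.compile(r'([a-zA-Z_][a-zA-Z0-9_]*)="((?:[^"\\]|\\.)*)"')


def parse_sample(line):
    """Split one exposition line into (name, labels, value)."""
    match = LINE_RE.match(line.strip())
    if match is None:
        raise ValueError(f"unsupported line: {line!r}")
    name, raw_labels, value = match.groups()
    labels = dict(LABEL_RE.findall(raw_labels or ""))
    return name, labels, float(value)


# Illustrative sample; label values are assumptions for this example.
sample = 'kube_pod_status_phase{namespace="default",pod="web01",phase="Running"} 1'
name, labels, value = parse_sample(sample)
print(name, labels, value)
```

A value of 1 on the line with `phase="Running"` means the Pod is currently in that phase, which is the kind of state an alerting rule can test.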

3.1.2 Deployment

Deploy with Helm:

# helm install kube-state-metrics bitnami/kube-state-metrics

NAME: kube-state-metrics

LAST DEPLOYED: Mon Oct 30 21:51:18 2023

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

CHART NAME: kube-state-metrics

CHART VERSION: 3.7.4

APP VERSION: 2.10.0

** Please be patient while the chart is being deployed **

Watch the kube-state-metrics Deployment status using the command:

kubectl get deploy -w --namespace default kube-state-metrics

kube-state-metrics can be accessed via port "8080" on the following DNS name from within your cluster:

kube-state-metrics.default.svc.cluster.local

To access kube-state-metrics from outside the cluster execute the following commands:

echo "URL: http://127.0.0.1:9100/"

kubectl port-forward --namespace default svc/kube-state-metrics 9100:8080

3.1.3 Configure Prometheus

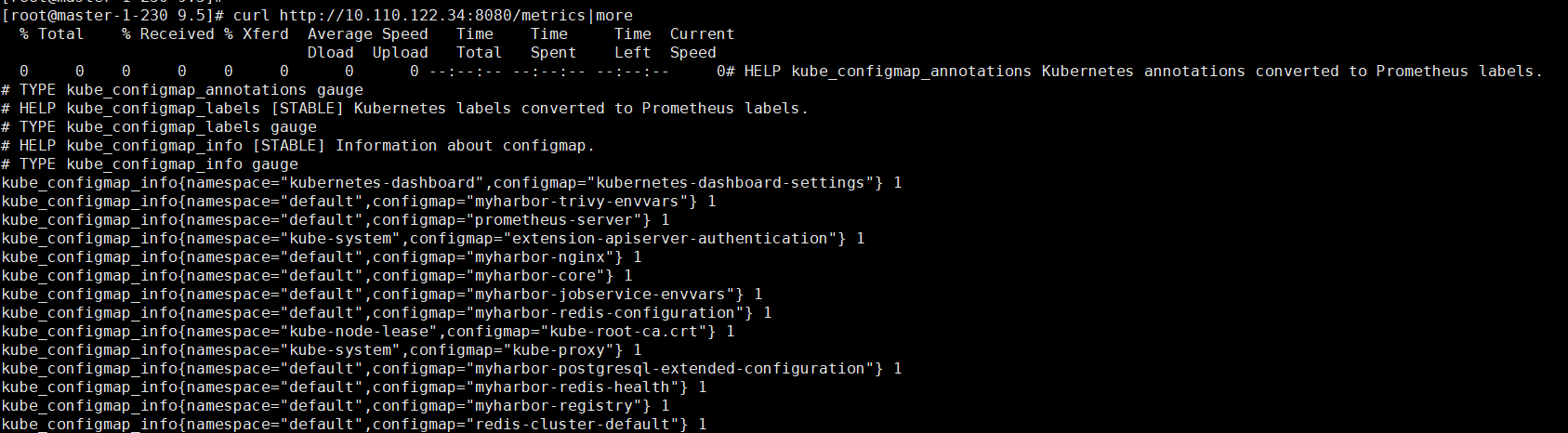

Get the Service IP:

# kubectl get svc|grep state

kube-state-metrics ClusterIP 10.110.122.34 <none> 8080/TCP 53s

Test:

curl http://10.110.122.34:8080/metrics

Edit the Prometheus ConfigMap:

vi prometheus_config.yaml ## this file was exported earlier; add the following job under scrape_configs

- job_name: 'kube-state-metrics'
  static_configs:
  - targets: ['kube-state-metrics:8080']

Apply the YAML configuration:

# kubectl delete -f prometheus_config.yaml

configmap "prometheus-server" deleted

[root@master-1-230 9.5]# kubectl apply -f prometheus_config.yaml

configmap/prometheus-server created

Restart Prometheus:

kubectl get po |grep prometheus-server |awk '{print $1}' |xargs -i kubectl delete po {}

Check the kube-state-metrics target on the Prometheus Targets page.

3.2 metrics-server

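Metrics Server is a cluster add-on that collects and aggregates resource metrics for the nodes and containers running in a Kubernetes cluster. It keeps only recent values in memory rather than storing history, and serves them through the Metrics API to consumers such as kubectl top and the Horizontal Pod Autoscaler.

Metrics Server periodically scrapes the kubelet on each node and registers its API with the Kubernetes API server. The metrics it exposes are limited to CPU and memory usage; filesystem and network metrics come from other sources such as cAdvisor.

Download the YAML file: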

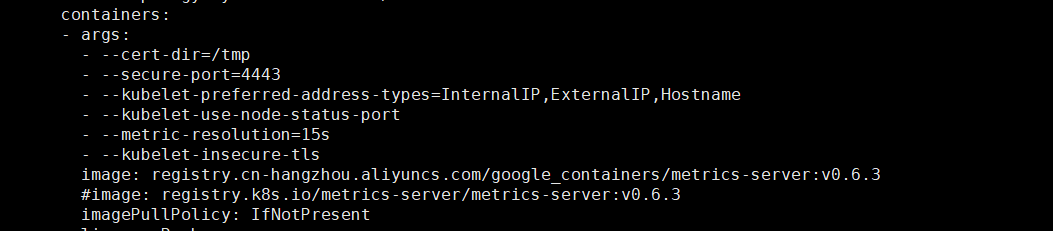

wget https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.6.3/high-availability-1.21+.yaml

vi high-availability-1.21+.yaml

Change image: k8s.gcr.io/metrics-server/metrics-server:v0.6.3 to image: registry.cn-hangzhou.aliyuncs.com/google_containers/metrics-server:v0.6.3

Add one line above the image: line: - --kubelet-insecure-tls

Apply the YAML file:

kubectl apply -f high-availability-1.21+.yaml

serviceaccount/metrics-server created

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created

clusterrole.rbac.authorization.k8s.io/system:metrics-server created

rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created

clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created

clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created

service/metrics-server created

deployment.apps/metrics-server created

poddisruptionbudget.policy/metrics-server created

apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created

Test

4. Monitoring the Kubernetes Cluster with Prometheus

4.1 Monitoring cluster nodes

This job relies on Kubernetes service discovery:

- job_name: 'kubernetes-nodes'
  scheme: https
  tls_config:
    ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
  bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
  kubernetes_sd_configs:
  - role: node
  relabel_configs:
  - action: labelmap
    regex: __meta_kubernetes_node_label_(.+)
  - target_label: __address__
    replacement: kubernetes.default.svc:443
  - source_labels: [__meta_kubernetes_node_name]
    regex: (.+)
    target_label: __metrics_path__
    replacement: /api/v1/nodes/${1}/proxy/metrics

4.2 Monitoring the apiserver

- job_name: 'kubernetes-apiservers'
  kubernetes_sd_configs:
  - role: endpoints
  scheme: https
  tls_config:
    ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
  bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
  relabel_configs:
  - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
    action: keep
    regex: default;kubernetes;https

4.3 Monitoring the kubelet

- job_name: 'kubernetes-kubelet'
  kubernetes_sd_configs:
  - role: node
  scheme: https
  tls_config:
    ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
    insecure_skip_verify: true
  bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
  relabel_configs:
  - action: labelmap
    regex: __meta_kubernetes_node_label_(.+)

5. Monitoring Common Kubernetes Resource Objects

5.1 Container monitoring

- job_name: 'kubernetes-cadvisor'
  kubernetes_sd_configs:
  - role: node
  scheme: https
  tls_config:
    ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
  bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
  relabel_configs:
  - action: labelmap
    regex: __meta_kubernetes_node_label_(.+)
    replacement: $1
  - source_labels: [__meta_kubernetes_node_name]
    regex: (.+)
    replacement: /metrics/cadvisor # <nodeip>/metrics -> <nodeip>/metrics/cadvisor
    target_label: __metrics_path__

5.2 Service monitoring

- job_name: 'kubernetes-service-endpoints'
  kubernetes_sd_configs:
  - role: endpoints
  relabel_configs:
  - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape]
    action: keep
    regex: true
  - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scheme]
    action: replace
    target_label: __scheme__
    regex: (https?)
  - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_path]
    action: replace
    target_label: __metrics_path__
    regex: (.+)
  - source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port]
    action: replace
    target_label: __address__
    regex: ([^:]+)(?::\d+)?;(\d+)
    replacement: $1:$2
  - action: labelmap
    regex: __meta_kubernetes_service_label_(.+)
  - source_labels: [__meta_kubernetes_namespace]
    action: replace
    target_label: kubernetes_namespace
  - source_labels: [__meta_kubernetes_service_name]
    action: replace
    target_label: kubernetes_name
  - source_labels: [__meta_kubernetes_pod_name]
    action: replace
    target_label: kubernetes_pod_name
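The `__address__` rule in the job above is the least obvious one: Prometheus joins the two source labels with `;`, matches the fully anchored regex against the result, and writes `$1:$2` back to `__address__`. The Python sketch below imitates that single rule to show its effect. The function name is hypothetical, and the sample addresses are illustrative values built from IPs and ports that appear earlier in this article.

```python
import re

# Same pattern as the relabel rule: host, optional existing port, ';', annotated port.
ADDRESS_RE = re.compile(r"([^:]+)(?::\d+)?;(\d+)")


def rewrite_address(address, port_annotation):
    """Imitate the replace action: join with ';', full-match, substitute host:port."""
    joined = f"{address};{port_annotation}"
    match = ADDRESS_RE.fullmatch(joined)
    if match is None:
        # As with a relabel rule whose regex does not match, leave the label unchanged.
        return address
    return match.expand(r"\1:\2")


print(rewrite_address("10.244.154.42:80", "9113"))
print(rewrite_address("10.244.154.42", "9113"))
print(rewrite_address("10.244.154.42:80", ""))
```

In effect, a Service that sets the prometheus.io/port annotation is scraped on that port, while a Service without the annotation keeps the address that discovery produced.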
