问题描述:flume每到半夜总是一直报错,关闭某个文件失败,虽然不影响其他日志传输,但是一直有错误日志也很难受,有一种如芒在背的感觉。

部分报错日志:

09 六月 2023 02:55:55,730 INFO [SinkRunner-PollingRunner-DefaultSinkProcessor] (org.apache.flume.sink.hdfs.BucketWriter.open:246) - Creating hdfs://cdh-master:8020/nginx/stzy/20230609/nginx-access.log.192.168.90.111.2023060902.1686250555721.gz.tmp

09 六月 2023 03:00:00,729 INFO [SinkRunner-PollingRunner-DefaultSinkProcessor] (org.apache.flume.sink.hdfs.HDFSCompressedDataStream.configure:64) - Serializer = TEXT, UseRawLocalFileSystem = false

09 六月 2023 03:00:00,739 INFO [SinkRunner-PollingRunner-DefaultSinkProcessor] (org.apache.flume.sink.hdfs.BucketWriter.open:246) - Creating hdfs://cdh-master:8020/nginx/stzy/20230609/nginx-access.log.192.168.90.111.2023060903.1686250800730.gz.tmp

09 六月 2023 03:00:55,758 INFO [hdfs-sinkhdfs-roll-timer-0] (org.apache.flume.sink.hdfs.HDFSEventSink$1.run:391) - Writer callback called.

09 六月 2023 03:00:55,758 INFO [hdfs-sinkhdfs-roll-timer-0] (org.apache.flume.sink.hdfs.BucketWriter.doClose:438) - Closing hdfs://cdh-master:8020/nginx/stzy/20230609/nginx-access.log.192.168.90.111.2023060902.1686250555721.gz.tmp

09 六月 2023 03:01:03,795 WARN [ResponseProcessor for block BP-2118334161-192.168.80.107-1685085967310:blk_1073761074_20307] (org.apache.hadoop.hdfs.DataStreamer$ResponseProcessor.run:1245) - Exception for BP-2118334161-192.168.80.107-1685085967310:blk_1073761074_20307

java.net.SocketTimeoutException: 65000 millis timeout while waiting for channel to be ready for read. ch : java.nio.channels.SocketChannel[connected local=/192.168.90.111:39318 remote=/192.168.80.7:1004]

at org.apache.hadoop.net.SocketIOWithTimeout.doIO(SocketIOWithTimeout.java:163) ~[hadoop-common-3.3.5.jar:?]

at org.apache.hadoop.net.SocketInputStream.read(SocketInputStream.java:161) ~[hadoop-common-3.3.5.jar:?]

at org.apache.hadoop.net.SocketInputStream.read(SocketInputStream.java:131) ~[hadoop-common-3.3.5.jar:?]

at org.apache.hadoop.net.SocketInputStream.read(SocketInputStream.java:118) ~[hadoop-common-3.3.5.jar:?]

at java.io.FilterInputStream.read(FilterInputStream.java:83) ~[?:1.8.0_202]

at java.io.FilterInputStream.read(FilterInputStream.java:83) ~[?:1.8.0_202]

at org.apache.hadoop.hdfs.protocolPB.PBHelperClient.vintPrefixed(PBHelperClient.java:519) ~[hadoop-hdfs-client-3.3.5.jar:?]

at org.apache.hadoop.hdfs.protocol.datatransfer.PipelineAck.readFields(PipelineAck.java:213) ~[hadoop-hdfs-client-3.3.5.jar:?]

at org.apache.hadoop.hdfs.DataStreamer$ResponseProcessor.run(DataStreamer.java:1137) ~[hadoop-hdfs-client-3.3.5.jar:?]

09 六月 2023 03:01:03,797 WARN [hdfs-sinkhdfs-roll-timer-0] (org.apache.flume.sink.hdfs.BucketWriter$CloseHandler.close:348) - Closing file: hdfs://cdh-master:8020/nginx/stzy/20230609/nginx-access.log.192.168.90.111.2023060902.1686250555721.gz.tmp failed. Will retry again in 180 seconds.

java.io.IOException: All datanodes [DatanodeInfoWithStorage[192.168.80.7:1004,DS-5da22502-2148-40a5-9610-b25a0c3063d4,DISK]] are bad. Aborting...

at org.apache.hadoop.hdfs.DataStreamer.handleBadDatanode(DataStreamer.java:1609) ~[hadoop-hdfs-client-3.3.5.jar:?]

at org.apache.hadoop.hdfs.DataStreamer.setupPipelineInternal(DataStreamer.java:1543) ~[hadoop-hdfs-client-3.3.5.jar:?]

at org.apache.hadoop.hdfs.DataStreamer.setupPipelineForAppendOrRecovery(DataStreamer.java:1529) ~[hadoop-hdfs-client-3.3.5.jar:?]

at org.apache.hadoop.hdfs.DataStreamer.processDatanodeOrExternalError(DataStreamer.java:1305) ~[hadoop-hdfs-client-3.3.5.jar:?]

at org.apache.hadoop.hdfs.DataStreamer.run(DataStreamer.java:668) ~[hadoop-hdfs-client-3.3.5.jar:?]

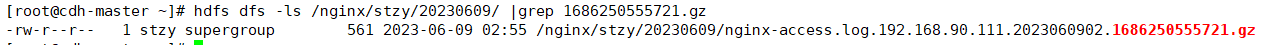

09 六月 2023 03:01:03,806 INFO [hdfs-sinkhdfs-call-runner-8] (org.apache.flume.sink.hdfs.BucketWriter$7.call:681) - Renaming hdfs://cdh-master:8020/nginx/stzy/20230609/nginx-access.log.192.168.90.111.2023060902.1686250555721.gz.tmp to hdfs://cdh-master:8020/nginx/stzy/20230609/nginx-access.log.192.168.90.111.2023060902.1686250555721.gz

09 六月 2023 03:01:13,738 WARN [ResponseProcessor for block BP-2118334161-192.168.80.107-1685085967310:blk_1073761079_20312] (org.apache.hadoop.hdfs.DataStreamer$ResponseProcessor.run:1245) - Exception for BP-2118334161-192.168.80.107-1685085967310:blk_1073761079_20312

java.net.SocketTimeoutException: 65000 millis timeout while waiting for channel to be ready for read. ch : java.nio.channels.SocketChannel[connected local=/192.168.90.111:41490 remote=/192.168.80.7:1004]

at org.apache.hadoop.net.SocketIOWithTimeout.doIO(SocketIOWithTimeout.java:163) ~[hadoop-common-3.3.5.jar:?]

at org.apache.hadoop.net.SocketInputStream.read(SocketInputStream.java:161) ~[hadoop-common-3.3.5.jar:?]

并且如果这种日志不处理,就会一直存在。

产生的原因:

从flume日志和hdfs的存储目录来看,其实这个文件已经关闭了,说实话,不是很清楚,要说网络吧,纯内网,根本无压力。要说是hdfs压力大吧,看监控也挺正常,还请知道的小伙伴给解答一下。在最近几天的观察下,发现出现error的时间总是在凌晨2:55分到3:00之间出现,在这个时间段在hdfs上的文件大小也普遍比其他时间段的小好多,感觉是这个时间段有啥网络异常。

解决方案:

添加一个失败就重试的次数

tier1.sinks.sinkhdfs.hdfs.closeTries = 10 # 官方的解释: 接收器在启动关闭尝试后必须尝试重命名文件的次数。如果设置为 1,则此接收器不会重试失败的重命名 (例如,由于 NameNode 或 DataNode 故障),并且可能会使文件处于打开状态,扩展名为.tmp。 如果设置为 0,接收器将尝试重命名文件,直到文件最终重命名(尝试的次数没有限制)。 如果关闭调用失败,文件可能仍保持打开状态,但数据将保持不变,在这种情况下,只有在 Flume 重新启动后,文件才会关闭。 后续配置上之后也确实管用,当它重试次数超过10之后,就不再重试。只是在这时间段会丢日志。

通过过滤日志,发现配置生效了,虽然没有从根本上解决问题,至少这样的日志它不会贯穿整天了。如果有其他小伙伴知道它的根本原因还希望分享一下。