安装依赖包

yum install ant asciidoc cyrus-sasl-devel cyrus-sasl-gssapi cyrus-sasl-plain gcc gcc-c++ krb5-devel libffi-devel libxml2-devel libxslt-devel make mysql mysql-devel openldap-devel python-devel sqlite-devel gmp-devel

python-devel openssl-devel libsqlite3-dev sqlite-devel libffi-dev libffi-devel gcc-c++ cyrus-sasl cyrus-sasl-devel cyrus-sasl-lib mysql-community-client mariadb-devel openldap-devel libxslt-devel rsync

安装jdk

...

安装python环境及pip

wget https://files.pythonhosted.org/packages/5a/df/b2e3d9693bb0dcbeac516a73dd7a9eb82b126ae52e4a74605a9b01beddd5/setuptools-40.1.0.zip

unzip setuptools-40.1.0.zip

cd setuptools-40.1.0

python setup.py install

wget https://files.pythonhosted.org/packages/69/81/52b68d0a4de760a2f1979b0931ba7889202f302072cc7a0d614211bc7579/pip-18.0.tar.gz

tar zxvf pip-18.0.tar.gz

cd pip-18.0

python setup.py install

安装nodejs

curl -sL https://rpm.nodesource.com/setup_10.x | bash -

yum install -y nodejs

安装mysql

安装源

wget https://dev.mysql.com/get/mysql57-community-release-el7-8.noarch.rpm

rpm -ivh mysql57-community-release-el7-8.noarch.rpm

安装

yum -y install mysql-server

启动

systemctl start mysqld

获取MySQL临时密码

grep 'temporary password' /var/log/mysqld.log

登录

mysql -uroot -p

设置密码策略,修改密码

set global validate_password_policy=LOW;

set global validate_password_length=5;

ALTER USER 'root'@'localhost' IDENTIFIED BY 'admin';

配置权限

mysql -uroot -padmin

use mysql;

select Host,User from user;

update user set Host='%' where User='root';

flush privileges;

创建用户

groupadd hadoop

useradd -g hadoop hadoop

解压编译

wget https://cdn.gethue.com/downloads/hue-4.8.0.tgz

PREFIX=/usr/share make install

集成Hive Conf

在/opt/hive/conf目录添加hive-site.xml文件

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<property>

<name>hive.metastore.local</name>

<value>false</value>

</property>

<property>

<name>hive.metastore.uris</name>

<value>thrift://172.31.6.20:9083</value>

</property>

</configuration>

Flink环境集成依赖的jar包

依赖的jar包

flink-connector-hive_2.11-1.11.3.jar

flink-sql-connector-kafka_2.11-1.11.2.jar

flink-parquet_2.11-1.11.2.jar

libfb303-0.9.3.jar

hive-exec-3.1.2.jar

重新编译hive-exec,解决guava版本冲突问题

下载hive源码,修改ql model下的pom文件,将guava版本由19.0改为20.0,重新编译

Flink环境集成Hadoop

进入Flink镜像工作目录(172.31.6.12)

cd /data/dockerfiles/flink-1.11

官网下载Hadoop安装包

wget https://archive.apache.org/dist/hadoop/common/hadoop-3.1.3/hadoop-3.1.3.tar.gz

解压安装包,删除无用文件

tar zxvf hadoop-3.1.3.tar.gz

cd hadoop-3.1.3

rm -rf bin/ etc/ include/ lib/ libexec/ LICENSE.txt NOTICE.txt README.txt sbin/

rm -rf ./share/hadoop/hdfs/lib/netty-all-4.0.52.Final.jar

rm -rf ./share/hadoop/hdfs/lib/netty-3.10.5.Final.jar

重新打包成 hadoop-3.1.3.tar.gz

tar zcvf hadoop-3.1.3.tar.gz hadoop-3.1.3

修改Dockerfile,添加如下内容

ADD hadoop-3.1.3.tar.gz /opt/flink/hadoop

打包上传镜像

docker build -t d-hub.jcinfo.com/library/flink:1.11.2-scala_2.11-java8-v1.4.3 .

docker push d-hub.jcinfo.com/library/flink:1.11.2-scala_2.11-java8-v1.4.3

Rancher中配置Hadoop环境变量

HADOOP_CLASSPATH=/opt/flink/hadoop/hadoop-3.1.3/etc/hadoop:/opt/flink/hadoop/hadoop-3.1.3/share/hadoop/common/lib/*:/opt/flink/hadoop/hadoop-3.1.3/share/hadoop/common/*:/opt/flink/hadoop/hadoop-3.1.3/share/hadoop/hdfs:/opt/flink/hadoop/hadoop-3.1.3/share/hadoop/hdfs/lib/*:/opt/flink/hadoop/hadoop-3.1.3/share/hadoop/hdfs/*:/opt/flink/hadoop/hadoop-3.1.3/share/hadoop/mapreduce/lib/*:/opt/flink/hadoop/hadoop-3.1.3/share/hadoop/mapreduce/*:/opt/flink/hadoop/hadoop-3.1.3/share/hadoop/yarn:/opt/flink/hadoop/hadoop-3.1.3/share/hadoop/yarn/lib/*:/opt/flink/hadoop/hadoop-3.1.3/share/hadoop/yarn/*

配置database

[[database]]

engine=mysql

host=192.168.56.131

port=3306

user=root

password=li1383414

name=hue

初始化数据库

build/env/bin/hue syncdb

build/env/bin/hue migrate

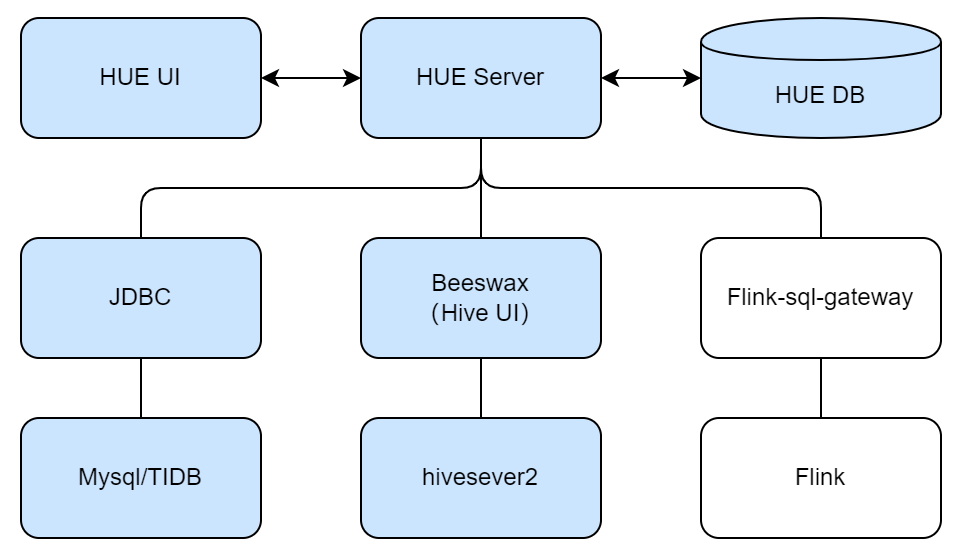

连接Mysql&TIDB

[[mysql]]]

name = MySQL

interface=sqlalchemy

options='{"url": "mysql://root@tidb.jcinfo.com:3306/test"}'

连接Hive

[[[hive]]]

name=Hive

interface=hiveserver2

[beeswax]

hive_server_host=172.31.6.20

hive_server_port=10000

hive_conf_dir=/opt/hive/conf

连接Flink SQL

安装flink-sql-gateway

解压安装

wget https://github.com/ververica/flink-sql-gateway/releases/download/flink-1.11.1/flink-sql-gateway-0.2-SNAPSHOT-bin.zip

unzip flink-sql-gateway-0.2-SNAPSHOT-bin.zip -d ../flink/

配置

server:

bind-address: 0.0.0.0 # optional: The address that the gateway binds itself (127.0.0.1 by default)

address: 0.0.0.0 # optional: The address that should be used by clients to connect to the gateway (127.0.0.1 by default)

catalogs: #[] # empty list

# A typical catalog definition looks like:

- name: myhive

type: hive

hive-conf-dir: /opt/hive/conf/

default-database: dw

启动

./bin/sql-gateway.sh

注意:与flink安装在同一台机器

添加配置

[[[flink]]]

name=Flink

interface=flink

options='{"url": "http://127.0.0.1:8083"}'

启动HUE

build/env/bin/supervisor

参考资料

https://docs.gethue.com/administrator/installation/install/

https://docs.gethue.com/releases/release-notes-4.8.0/

https://github.com/ververica/flink-sql-gateway