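Deploy Rancher-2.5.9, Harbor, and k8s-1.20.15 in an offline environment, then upgrade to Rancher-2.5.16, Harbor, and k8s-1.20.

Servers: four Tencent Cloud hosts. Pay-as-you-go or spot billing keeps the cost low. Make sure all four machines are in the same availability zone of the same region so that they can reach each other over the private network.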

| Hostname | Spec | OS | Public IP bound | Roles |
| --- | --- | --- | --- | --- |
| k8s-master | 2C4G | CentOS 7.5 | No | k8s, Harbor, docker |
| rancher | 2C4G | CentOS 7.5 | No | rancher, docker |
| download-pkg | 2C4G | CentOS 7.5 | Yes | docker |
| win-view | 2C4G | WinServer | Yes | Google Chrome |

1. Set the hostname on each machine: hostnamectl set-hostname ***

2. Install docker offline. First, download the offline installation packages on the host "download-pkg".

    [root@download-pkg ~]# sudo yum install -y yum-utils
    [root@download-pkg ~]# sudo yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo
    [root@download-pkg ~]# sudo yum -y install --downloadonly docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin --downloaddir=/mnt
    [root@download-pkg ~]# ls /mnt
    audit-libs-python-2.8.5-4.el7.x86_64.rpm
    docker-ce-cli-24.0.2-1.el7.x86_64.rpm
    libsemanage-python-2.5-14.el7.x86_64.rpm
    checkpolicy-2.5-8.el7.x86_64.rpm
    docker-ce-rootless-extras-24.0.2-1.el7.x86_64.rpm
    policycoreutils-python-2.5-34.el7.x86_64.rpm
    containerd.io-1.6.21-3.1.el7.x86_64.rpm
    docker-compose-plugin-2.18.1-1.el7.x86_64.rpm
    python-IPy-0.75-6.el7.noarch.rpm
    container-selinux-2.119.2-1.911c772.el7_8.noarch.rpm
    fuse3-libs-3.6.1-4.el7.x86_64.rpm
    setools-libs-3.3.8-4.el7.x86_64.rpm
    docker-buildx-plugin-0.10.5-1.el7.x86_64.rpm
    fuse-overlayfs-0.7.2-6.el7_8.x86_64.rpm
    slirp4netns-0.4.3-4.el7_8.x86_64.rpm
    docker-ce-24.0.2-1.el7.x86_64.rpm
    libcgroup-0.41-21.el7.x86_64.rpm
    [root@download-pkg ~]# tar zcvf docker_offline_package.tar.gz /mnt/*

3. Copy the docker offline package to the /mnt directory on the hosts "k8s-master" and "rancher".

4. Install and start docker on the hosts "k8s-master" and "rancher".

    [root@rancher mnt]# tar xvf docker_offline_package.tar.gz
    [root@rancher mnt]# cd mnt/
    [root@rancher mnt]# yum -y install ./*
    [root@rancher mnt]# systemctl enable docker --now
    [root@rancher mnt]# # Check the docker version
    [root@rancher mnt]# docker info

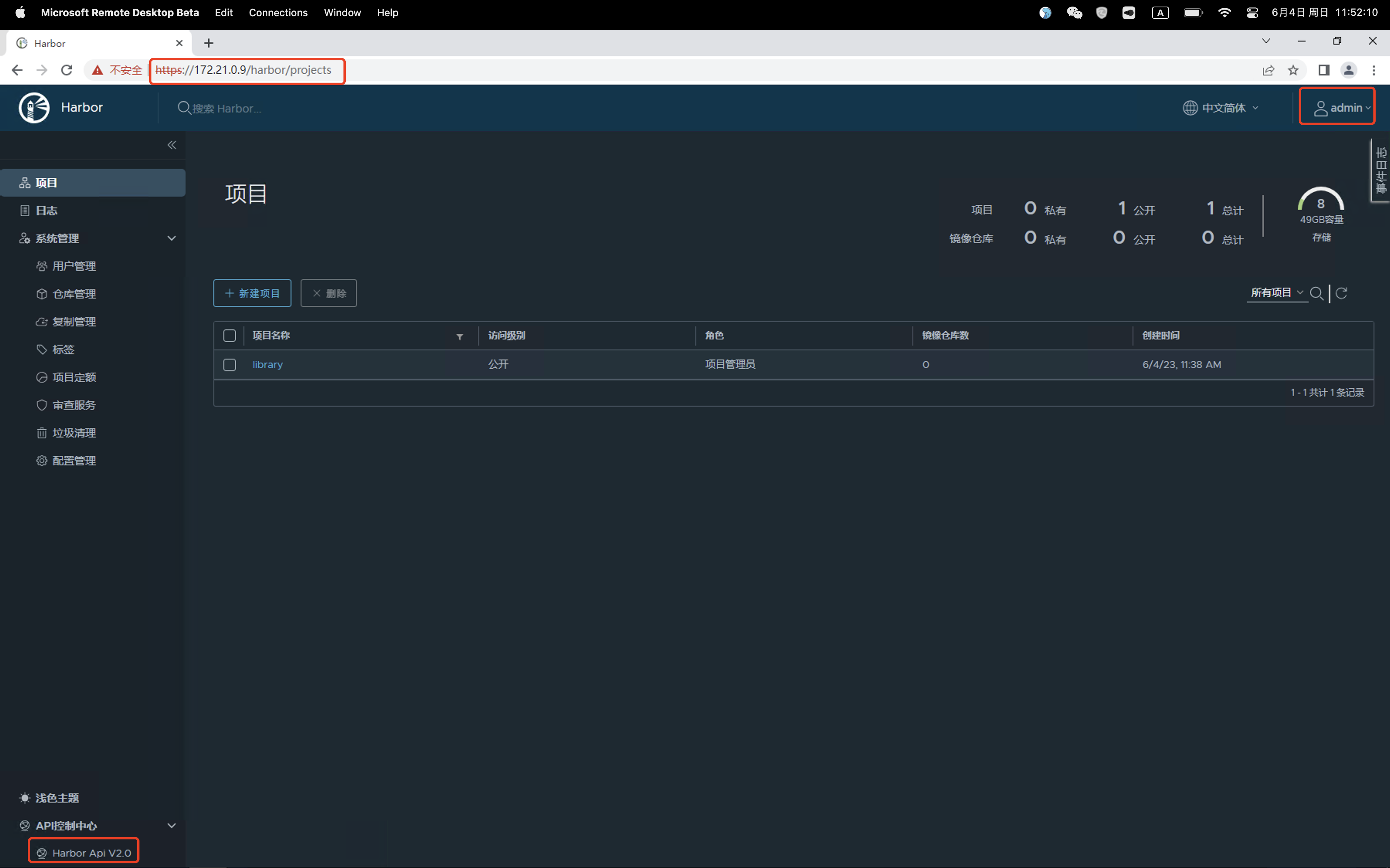

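5. Install Harbor offline on the host "k8s-master". The installer is available at https://github.com/goharbor/harbor/releases/tag/v2.8.1 (choose whichever version you need; I installed v2.0.0 here).

An offline Harbor installation requires docker-compose, available at https://github.com/docker/compose/releases?page=9 (choose a version that is compatible with your docker version).

6. Reference documentation for installing Harbor: https://goharbor.io/docs/2.0.0/install-config/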

    [root@k8s-master ~]# mkdir /data
    [root@k8s-master ~]# cd /data
    [root@k8s-master data]# # Copy the downloaded harbor-offline-installer-v2.0.0.tgz and docker-compose-Linux-x86_64 into this directory
    [root@k8s-master data]# tar xf harbor-offline-installer-v2.0.0.tgz
    [root@k8s-master data]# # Create the certificates Harbor needs. I use the IP address directly instead of a domain name.
    # The commands are as follows:
    openssl genrsa -out ca.key 4096
    openssl req -x509 -new -nodes -sha512 -days 3650 -subj "/C=CN/ST=Beijing/L=Beijing/O=example/OU=Personal/CN=172.21.0.9" -key ca.key -out ca.crt
    openssl genrsa -out 172.21.0.9.key 4096
    openssl req -sha512 -new -subj "/C=CN/ST=Beijing/L=Beijing/O=example/OU=Personal/CN=172.21.0.9" -key 172.21.0.9.key -out 172.21.0.9.csr
    cat > v3.ext <<-EOF
    authorityKeyIdentifier=keyid,issuer
    basicConstraints=CA:FALSE
    keyUsage = digitalSignature, nonRepudiation, keyEncipherment, dataEncipherment
    extendedKeyUsage = serverAuth
    subjectAltName = IP:172.21.0.9
    [alt_names]
    DNS.1=172.21.0.9
    DNS.2=172.21.0.9
    DNS.3=172.21.0.9
    EOF
    openssl x509 -req -sha512 -days 3650 -extfile v3.ext -CA ca.crt -CAkey ca.key -CAcreateserial -in 172.21.0.9.csr -out 172.21.0.9.crt
    mkdir /data/cert/
    cp 172.21.0.9.crt /data/cert/
    cp 172.21.0.9.key /data/cert/
    openssl x509 -inform PEM -in 172.21.0.9.crt -out 172.21.0.9.cert
    mkdir /etc/docker/certs.d/172.21.0.9/ -p
    cp 172.21.0.9.cert /etc/docker/certs.d/172.21.0.9/
    cp 172.21.0.9.key /etc/docker/certs.d/172.21.0.9/
    cp ca.crt /etc/docker/certs.d/172.21.0.9/
    [root@k8s-master data]# # Restart docker
    [root@k8s-master data]# systemctl restart docker
    [root@k8s-master data]# # Start configuring Harbor
    [root@k8s-master data]# cd harbor/
    [root@k8s-master harbor]# cp harbor.yml.tmpl harbor.yml
    [root@k8s-master harbor]# # Change the following lines in the configuration file; I left everything else at the defaults
    hostname: 172.21.0.9
    certificate: /data/cert/172.21.0.9.crt
    private_key: /data/cert/172.21.0.9.key
    [root@k8s-master harbor]# # Install docker compose
    [root@k8s-master harbor]# cd /data
    [root@k8s-master data]# chmod +x docker-compose-Linux-x86_64
    [root@k8s-master data]# mv docker-compose-Linux-x86_64 docker-compose
    [root@k8s-master data]# cp docker-compose /usr/bin/
    [root@k8s-master data]# docker-compose version
    [root@k8s-master data]# cd harbor/
    [root@k8s-master harbor]# ./install.sh
    [root@k8s-master harbor]# # The following output means the installation has finished
    # ✔ ----Harbor has been installed and started successfully.----
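Because the registry is addressed by IP, the line in v3.ext that matters for certificate validation is `subjectAltName = IP:...`; the `[alt_names]` section is only read when it is referenced as `@alt_names`, which the file above does not do. As a minimal sketch, the extension file could be generated for any address with a small helper. The function name `make_v3_ext` is an assumption made for illustration and is not part of Harbor or OpenSSL.

```shell
#!/bin/sh
# Hypothetical helper: print an x509 v3 extension file for a registry that is
# reached by IP address rather than by domain name.
make_v3_ext() {
    ip="$1"
    cat <<EOF
authorityKeyIdentifier=keyid,issuer
basicConstraints=CA:FALSE
keyUsage = digitalSignature, nonRepudiation, keyEncipherment, dataEncipherment
extendedKeyUsage = serverAuth
subjectAltName = IP:${ip}
EOF
}

# Example: show the extension file for the Harbor address used in this post.
make_v3_ext 172.21.0.9
```

Redirecting the output to v3.ext gives a file that can be passed to `openssl x509 -req ... -extfile v3.ext`. After signing, `openssl x509 -in 172.21.0.9.crt -noout -text` should list the IP under "X509v3 Subject Alternative Name".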

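7. Use the WinServer host to verify that the web UI is reachable. The Harbor username is admin and the default password is Harbor12345; the password can be configured in harbor.yml.

8. Install single-node Rancher offline. First, pull the Rancher-related docker images on the host "download-pkg".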

    docker pull rancher/rancher:v2.5.9
    docker pull rancher/rancher-agent:v2.5.9
    docker pull rancher/shell:v0.1.6
    docker pull rancher/rancher-webhook:v0.1.1
    docker pull rancher/fleet:v0.3.5
    docker pull rancher/gitjob:v0.1.15
    docker pull rancher/coredns-coredns:1.6.9
    docker pull rancher/fleet-agent:v0.3.5
    docker pull rancher/rancher-operator:v0.1.4
    docker save -o rancher-v2.5.9.tar rancher/rancher:v2.5.9 rancher/rancher-agent:v2.5.9 rancher/shell:v0.1.6 rancher/rancher-webhook:v0.1.1 rancher/fleet:v0.3.5 rancher/gitjob:v0.1.15 rancher/coredns-coredns:1.6.9 rancher/fleet-agent:v0.3.5 rancher/rancher-operator:v0.1.4
    gzip rancher-v2.5.9.tar

9. Copy rancher-v2.5.9.tar.gz to the host "rancher".

10. Install Rancher. First, import the images into the Harbor registry.

    [root@rancher data]# docker load -i rancher-v2.5.9.tar.gz
    [root@rancher data]# docker images
    [root@rancher data]# # Edit the docker configuration to register the image registry; make the same change on the "k8s-master" host
    cat > /etc/docker/daemon.json <<-EOF
    {
      "insecure-registries" : ["172.21.0.9:443", "0.0.0.0"]
    }
    EOF
    [root@rancher data]# # Restart docker
    [root@rancher data]# systemctl restart docker
    [root@rancher data]# # Push the images to Harbor
    [root@rancher data]# for i in `docker images |grep rancher |awk '{print $1":"$2}'`;do docker tag $i 172.21.0.9:443/$i;done
    [root@rancher data]# docker login 172.21.0.9:443
    # Username: admin
    # Password: Harbor12345
    [root@rancher data]# for i in `docker images |grep 172.21.0.9 |awk '{print $1":"$2}' `;do docker push $i;done
    [root@rancher data]# # Create the persistent directories for Rancher
    [root@rancher data]# mkdir /data/rancher/{rancher_data,auditlog} -p
    [root@rancher data]# # Create the private registry configuration file for the k3s inside Rancher
    cat > /data/rancher/registries.yaml <<-EOF
    mirrors:
      "172.21.0.9:443":
        endpoint:
          - "https://172.21.0.9:443"
    configs:
      "172.21.0.9:443":
        tls:
          insecure_skip_verify: yes
    EOF
    [root@rancher data]# # Start Rancher
    [root@rancher data]# docker run -d --restart=unless-stopped \
      -p 80:80 -p 443:443 \
      -v /data/rancher/registries.yaml:/etc/rancher/k3s/registries.yaml \
      -v /data/rancher/rancher_data:/var/lib/rancher/ \
      -v /data/rancher/auditlog:/var/log/auditlog \
      -e CATTLE_SYSTEM_DEFAULT_REGISTRY=172.21.0.9:443 \
      -e CATTLE_SYSTEM_CATALOG=bundled \
      --privileged 172.21.0.9:443/rancher/rancher:v2.5.9

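11. At this point the container is running, but the k3s inside it still has to start the Rancher components. Entering the container and running kubectl get pods -A shows that every pod has failed to start, and kubectl describe pods *** -n ** reports: failed to pull and unpack image "docker.io/rancher/pause:3.1". A round of searching on Baidu turned up claims that the Rancher image bundles rancher/coredns-coredns and rancher/pause, but in practice v2.5.9 does not bundle them. Quite a pitfall.

Solution: (1) Pull rancher/pause:3.1 and rancher/coredns-coredns:1.6.9 over the public network (the image versions come from the kubectl describe error messages).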
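The names of the missing images can be read straight out of the describe output instead of being collected by eye. The filter below is a rough sketch that assumes the error text has the form quoted above; `failed_images` is a hypothetical name chosen for this example.

```shell
#!/bin/sh
# Hypothetical filter: read `kubectl describe pods` output on stdin and print
# each image named in a "failed to pull and unpack image" message, once.
failed_images() {
    sed -n 's/.*failed to pull and unpack image "\([^"]*\)".*/\1/p' | sort -u
}

# Intended use inside the rancher container (not executed here):
#   kubectl describe pods -A | failed_images
```

The resulting list is what has to be pulled on a machine with public network access and pushed to Harbor.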

    # Pull and package on the download-pkg server
    docker pull rancher/pause:3.1
    docker pull rancher/coredns-coredns:1.6.9
    docker save -o rancher_basic.tar rancher/pause:3.1 rancher/coredns-coredns:1.6.9
    gzip rancher_basic.tar

(2) Upload the pulled rancher/pause:3.1 and rancher/coredns-coredns:1.6.9 to the Harbor registry.

    # Import on the rancher server
    docker load -i rancher_basic.tar.gz
    docker tag rancher/pause:3.1 172.21.0.9:443/rancher/pause:3.1
    docker tag rancher/coredns-coredns:1.6.9 172.21.0.9:443/rancher/coredns-coredns:1.6.9
    docker push 172.21.0.9:443/rancher/pause:3.1
    docker push 172.21.0.9:443/rancher/coredns-coredns:1.6.9

(3) Enter the rancher container, pull the images manually, and retag them.

    [root@rancher data]# docker exec -it eager_brattain bash
    # Because registries.yaml was mounted when the rancher container was started, images can be pulled directly from our own Harbor
    # Run the following commands
    k3s crictl pull 172.21.0.9:443/rancher/pause:3.1
    k3s crictl pull 172.21.0.9:443/rancher/coredns-coredns:1.6.9
    k3s ctr i tag 172.21.0.9:443/rancher/pause:3.1 docker.io/rancher/pause:3.1
    k3s ctr i tag 172.21.0.9:443/rancher/coredns-coredns:1.6.9 docker.io/rancher/coredns-coredns:1.6.9
    # Note: the docker.io prefix must not be omitted when retagging, otherwise the pods still cannot start

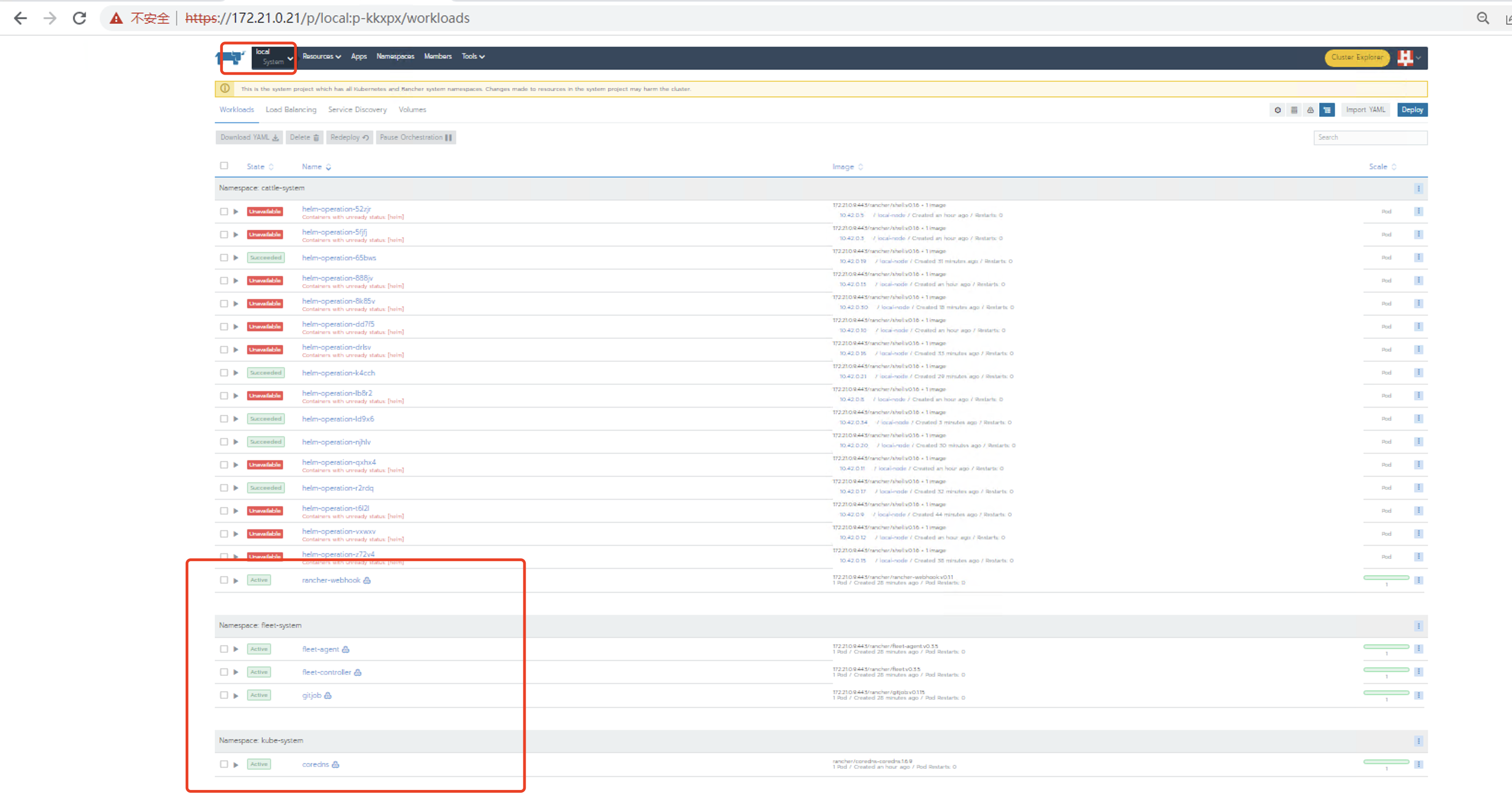

(4) Run the following command to check whether the services have started properly. Once every deployment is ready, Rancher has finished starting.

    root@646443a60efa:/var/lib/rancher# kubectl get deployment -A
    NAMESPACE                 NAME               READY   UP-TO-DATE   AVAILABLE   AGE
    cattle-system             rancher-webhook    1/1     1            1           25m
    fleet-system              fleet-agent        1/1     1            1           25m
    fleet-system              fleet-controller   1/1     1            1           25m
    fleet-system              gitjob             1/1     1            1           25m
    kube-system               coredns            1/1     1            1           60m
    rancher-operator-system   rancher-operator   1/1     1            1           15m
    root@646443a60efa:/var/lib/rancher#
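The "every deployment is ready" check can also be done mechanically by comparing the two halves of the READY column. This is only a sketch that assumes the column layout shown above; `not_ready_deployments` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical filter: read `kubectl get deployment -A` output on stdin and
# print namespace/name for every deployment whose READY column is not n/n.
not_ready_deployments() {
    awk 'NR > 1 {
        split($3, ready, "/")          # "1/1" becomes ready[1]=1, ready[2]=1
        if (ready[1] != ready[2]) print $1 "/" $2
    }'
}

# Intended use inside the rancher container (not executed here):
#   kubectl get deployment -A | not_ready_deployments
```

Empty output means all deployments report as many ready replicas as desired.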

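(5) Open the Rancher UI to verify that the service is available.

12. At this point, the offline deployment of rancher-v2.5.9 is complete.

13. Deploy k8s offline. First, download the packages k8s needs on the machine "download-pkg".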

    # Configure the Aliyun yum repository for k8s
    cat > /etc/yum.repos.d/kubernetes.repo << EOF
    [k8s]
    name=k8s
    enabled=1
    gpgcheck=0
    baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
    EOF
    [root@download-pkg ~]# mkdir /mnt/k8s
    yum -y install --downloadonly kubeadm-1.20.15 kubectl-1.20.15 kubelet-1.20.15 --downloaddir=/mnt/k8s
    [root@download-pkg ~]# cd /mnt/
    [root@download-pkg mnt]# tar zcvf k8s.tar.gz k8s/

14. Copy k8s.tar.gz to the /data directory on the machine "k8s-master" and extract it.

    [root@k8s-master data]# tar xf k8s.tar.gz
    [root@k8s-master data]# cd k8s/
    [root@k8s-master k8s]# yum -y install *

15. kubeadm is now installed.

16. List the docker images that k8s-1.20.15 needs, then pull and package them on "download-pkg".

    [root@k8s-master k8s]# kubeadm config images list --kubernetes-version=v1.20.15
    k8s.gcr.io/kube-apiserver:v1.20.15
    k8s.gcr.io/kube-controller-manager:v1.20.15
    k8s.gcr.io/kube-scheduler:v1.20.15
    k8s.gcr.io/kube-proxy:v1.20.15
    k8s.gcr.io/pause:3.2
    k8s.gcr.io/etcd:3.4.13-0
    k8s.gcr.io/coredns:1.7.0
    # k8s.gcr.io is most likely unreachable, so pull from a mirror inside China and retag. The script is as follows
    [root@download-pkg opt]# cat image.sh
    version=1.20.15
    images=`kubeadm config images list --kubernetes-version=v$version |awk -F/ '{print $NF}'`
    pull_images=`for i in $images;do echo $i|grep -v coredns;done`
    coredns_images=`for i in $images;do echo $i|grep coredns;done`
    pull_coredns=`echo $coredns_images |awk -Fv '{print $1$2}'`
    for imageName in ${pull_images[@]}
    do
      docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName
      docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName k8s.gcr.io/$imageName
      docker rmi registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName
    done
    docker pull coredns/$pull_coredns
    docker tag coredns/$pull_coredns k8s.gcr.io/$coredns_images
    docker rmi coredns/$pull_coredns
    [root@download-pkg opt]# bash image.sh
    [root@download-pkg opt]# docker save -o k8s-1.20.15_image.tar k8s.gcr.io/kube-proxy:v1.20.15 k8s.gcr.io/kube-scheduler:v1.20.15 k8s.gcr.io/kube-controller-manager:v1.20.15 k8s.gcr.io/kube-apiserver:v1.20.15 k8s.gcr.io/etcd:3.4.13-0 k8s.gcr.io/coredns:1.7.0 k8s.gcr.io/pause:3.2
    [root@download-pkg opt]# gzip k8s-1.20.15_image.tar
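The name mapping that image.sh performs is easier to follow when it is isolated from the docker calls. The sketch below restates that mapping as a pure function so it can be checked without pulling anything; `pull_source` and the `MIRROR` variable are names invented for this illustration, and the function is meant to mirror the script's behaviour rather than replace it.

```shell
#!/bin/sh
# Mirror used by image.sh for everything except coredns.
MIRROR=registry.cn-hangzhou.aliyuncs.com/google_containers

# Hypothetical helper: given an image printed by `kubeadm config images list`,
# print the reference that image.sh would actually pull.
pull_source() {
    name="${1##*/}"                # keep the last path component, e.g. kube-proxy:v1.20.15
    case "$name" in
        coredns:*)
            tag="${name#coredns:}"
            # image.sh drops a leading "v" from the coredns tag and pulls
            # from the coredns/ repository instead of the mirror
            echo "coredns/coredns:${tag#v}"
            ;;
        *)
            echo "${MIRROR}/${name}"
            ;;
    esac
}

# Example: print the pull source for two of the images listed above.
pull_source k8s.gcr.io/kube-apiserver:v1.20.15
pull_source k8s.gcr.io/coredns:1.7.0
```

Each pulled image is then retagged back to its k8s.gcr.io name so that kubeadm finds it locally.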

17. Copy k8s-1.20.15_image.tar.gz to the /data directory on "k8s-master" and import the images.

    [root@k8s-master data]# docker load -i k8s-1.20.15_image.tar.gz
    # All images that kubeadm needs to initialize the k8s cluster are now in place, so start initializing the cluster
    [root@k8s-master data]# kubeadm init --kubernetes-version=v1.20.15 --pod-network-cidr=10.244.0.0/16
    # Wait for the following message, which means cluster initialization is complete
    kubeadm join 172.21.0.9:6443 --token 0ibv0n.pn35qfhgbqgs6omn \
        --discovery-token-ca-cert-hash sha256:65fedb56f7583631c6999ec4185e892d65ed8f4548623a4ff2700e603351c569
    # Set the environment variable so that kubectl can be used
    echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> /etc/profile
    source /etc/profile
    export KUBECONFIG=/etc/kubernetes/admin.conf
    # The basic k8s components are deployed; a network plugin is still needed before the cluster becomes Ready

18. I use flannel as the network plugin. First pull rancher/mirrored-flannelcni-flannel-cni-plugin:v1.0.1 and rancher/mirrored-flannelcni-flannel:v0.17.0, then import them on "k8s-master".

    docker pull rancher/mirrored-flannelcni-flannel-cni-plugin:v1.0.1
    docker pull rancher/mirrored-flannelcni-flannel:v0.17.0
    docker save -o flannel.tar rancher/mirrored-flannelcni-flannel-cni-plugin:v1.0.1 rancher/mirrored-flannelcni-flannel:v0.17.0
    gzip flannel.tar

    [root@k8s-master data]# docker load -i flannel.tar.gz
    [root@k8s-master data]# kubectl apply -f flannel.yaml
    podsecuritypolicy.policy/psp.flannel.unprivileged created
    clusterrole.rbac.authorization.k8s.io/flannel created
    clusterrolebinding.rbac.authorization.k8s.io/flannel created
    serviceaccount/flannel created
    configmap/kube-flannel-cfg created
    daemonset.apps/kube-flannel-ds created

The contents of flannel.yaml are as follows:

    ---
    apiVersion: policy/v1beta1
    kind: PodSecurityPolicy
    metadata:
      name: psp.flannel.unprivileged
      annotations:
        seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default
        seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default
        apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default
        apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default
    spec:
      privileged: false
      volumes:
      - configMap
      - secret
      - emptyDir
      - hostPath
      allowedHostPaths:
      - pathPrefix: "/etc/cni/net.d"
      - pathPrefix: "/etc/kube-flannel"
      - pathPrefix: "/run/flannel"
      readOnlyRootFilesystem: false
      # Users and groups
      runAsUser:
        rule: RunAsAny
      supplementalGroups:
        rule: RunAsAny
      fsGroup:
        rule: RunAsAny
      # Privilege Escalation
      allowPrivilegeEscalation: false
      defaultAllowPrivilegeEscalation: false
      # Capabilities
      allowedCapabilities: ['NET_ADMIN', 'NET_RAW']
      defaultAddCapabilities: []
      requiredDropCapabilities: []
      # Host namespaces
      hostPID: false
      hostIPC: false
      hostNetwork: true
      hostPorts:
      - min: 0
        max: 65535
      # SELinux
      seLinux:
        # SELinux is unused in CaaSP
        rule: 'RunAsAny'
    ---
    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: flannel
    rules:
    - apiGroups: ['extensions']
      resources: ['podsecuritypolicies']
      verbs: ['use']
      resourceNames: ['psp.flannel.unprivileged']
    - apiGroups:
      - ""
      resources:
      - pods
      verbs:
      - get
    - apiGroups:
      - ""
      resources:
      - nodes
      verbs:
      - list
      - watch
    - apiGroups:
      - ""
      resources:
      - nodes/status
      verbs:
      - patch
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: flannel
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: flannel
    subjects:
    - kind: ServiceAccount
      name: flannel
      namespace: kube-system
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: flannel
      namespace: kube-system
    ---
    kind: ConfigMap
    apiVersion: v1
    metadata:
      name: kube-flannel-cfg
      namespace: kube-system
      labels:
        tier: node
        app: flannel
    data:
      cni-conf.json: |
        {
          "name": "cbr0",
          "cniVersion": "0.3.1",
          "plugins": [
            {
              "type": "flannel",
              "delegate": {
                "hairpinMode": true,
                "isDefaultGateway": true
              }
            },
            {
              "type": "portmap",
              "capabilities": {
                "portMappings": true
              }
            }
          ]
        }
      net-conf.json: |
        {
          "Network": "10.244.0.0/16",
          "Backend": {
            "Type": "vxlan"
          }
        }
    ---
    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: kube-flannel-ds
      namespace: kube-system
      labels:
        tier: node
        app: flannel
    spec:
      selector:
        matchLabels:
          app: flannel
      template:
        metadata:
          labels:
            tier: node
            app: flannel
        spec:
          affinity:
            nodeAffinity:
              requiredDuringSchedulingIgnoredDuringExecution:
                nodeSelectorTerms:
                - matchExpressions:
                  - key: kubernetes.io/os
                    operator: In
                    values:
                    - linux
          hostNetwork: true
          priorityClassName: system-node-critical
          tolerations:
          - operator: Exists
            effect: NoSchedule
          serviceAccountName: flannel
          initContainers:
          - name: install-cni-plugin
           #image: flannelcni/flannel-cni-plugin:v1.0.1 for ppc64le and mips64le (dockerhub limitations may apply)
            image: rancher/mirrored-flannelcni-flannel-cni-plugin:v1.0.1
            command:
            - cp
            args:
            - -f
            - /flannel
            - /opt/cni/bin/flannel
            volumeMounts:
            - name: cni-plugin
              mountPath: /opt/cni/bin
          - name: install-cni
           #image: flannelcni/flannel:v0.17.0 for ppc64le and mips64le (dockerhub limitations may apply)
            image: rancher/mirrored-flannelcni-flannel:v0.17.0
            command:
            - cp
            args:
            - -f
            - /etc/kube-flannel/cni-conf.json
            - /etc/cni/net.d/10-flannel.conflist
            volumeMounts:
            - name: cni
              mountPath: /etc/cni/net.d
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
          containers:
          - name: kube-flannel
           #image: flannelcni/flannel:v0.17.0 for ppc64le and mips64le (dockerhub limitations may apply)
            image: rancher/mirrored-flannelcni-flannel:v0.17.0
            command:
            - /opt/bin/flanneld
            args:
            - --ip-masq
            - --kube-subnet-mgr
            resources:
              requests:
                cpu: "100m"
                memory: "50Mi"
              limits:
                cpu: "100m"
                memory: "50Mi"
            securityContext:
              privileged: false
              capabilities:
                add: ["NET_ADMIN", "NET_RAW"]
            env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            volumeMounts:
            - name: run
              mountPath: /run/flannel
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
            - name: xtables-lock
              mountPath: /run/xtables.lock
          volumes:
          - name: run
            hostPath:
              path: /run/flannel
          - name: cni-plugin
            hostPath:
              path: /opt/cni/bin
          - name: cni
            hostPath:
              path: /etc/cni/net.d
          - name: flannel-cfg
            configMap:
              name: kube-flannel-cfg
          - name: xtables-lock
            hostPath:
              path: /run/xtables.lock
              type: FileOrCreate

19. Run the following commands to check the pod and node status.

    [root@k8s-master data]# kubectl get pods -A
    NAMESPACE     NAME                                 READY   STATUS    RESTARTS   AGE
    kube-system   coredns-74ff55c5b-bj9kh              1/1     Running   0          11m
    kube-system   coredns-74ff55c5b-ggfs4              1/1     Running   0          11m
    kube-system   etcd-k8s-master                      1/1     Running   0          11m
    kube-system   kube-apiserver-k8s-master            1/1     Running   0          11m
    kube-system   kube-controller-manager-k8s-master   1/1     Running   0          11m
    kube-system   kube-flannel-ds-xllwd                1/1     Running   0          95s
    kube-system   kube-proxy-btr2v                     1/1     Running   0          11m
    kube-system   kube-scheduler-k8s-master            1/1     Running   0          11m
    [root@k8s-master data]# kubectl get nodes
    NAME         STATUS   ROLES                  AGE   VERSION
    k8s-master   Ready    control-plane,master   11m   v1.20.15

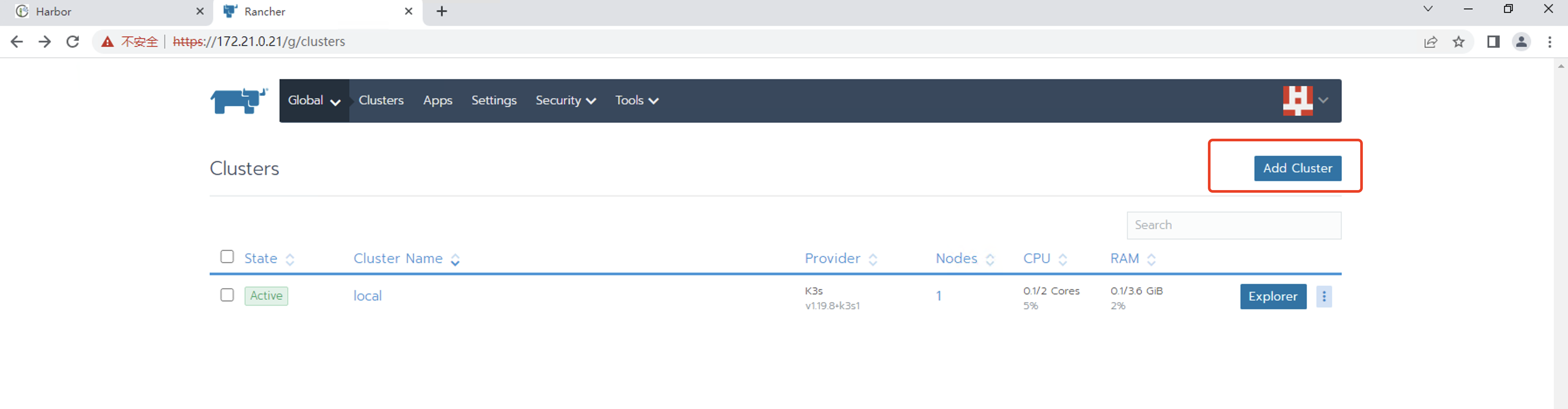

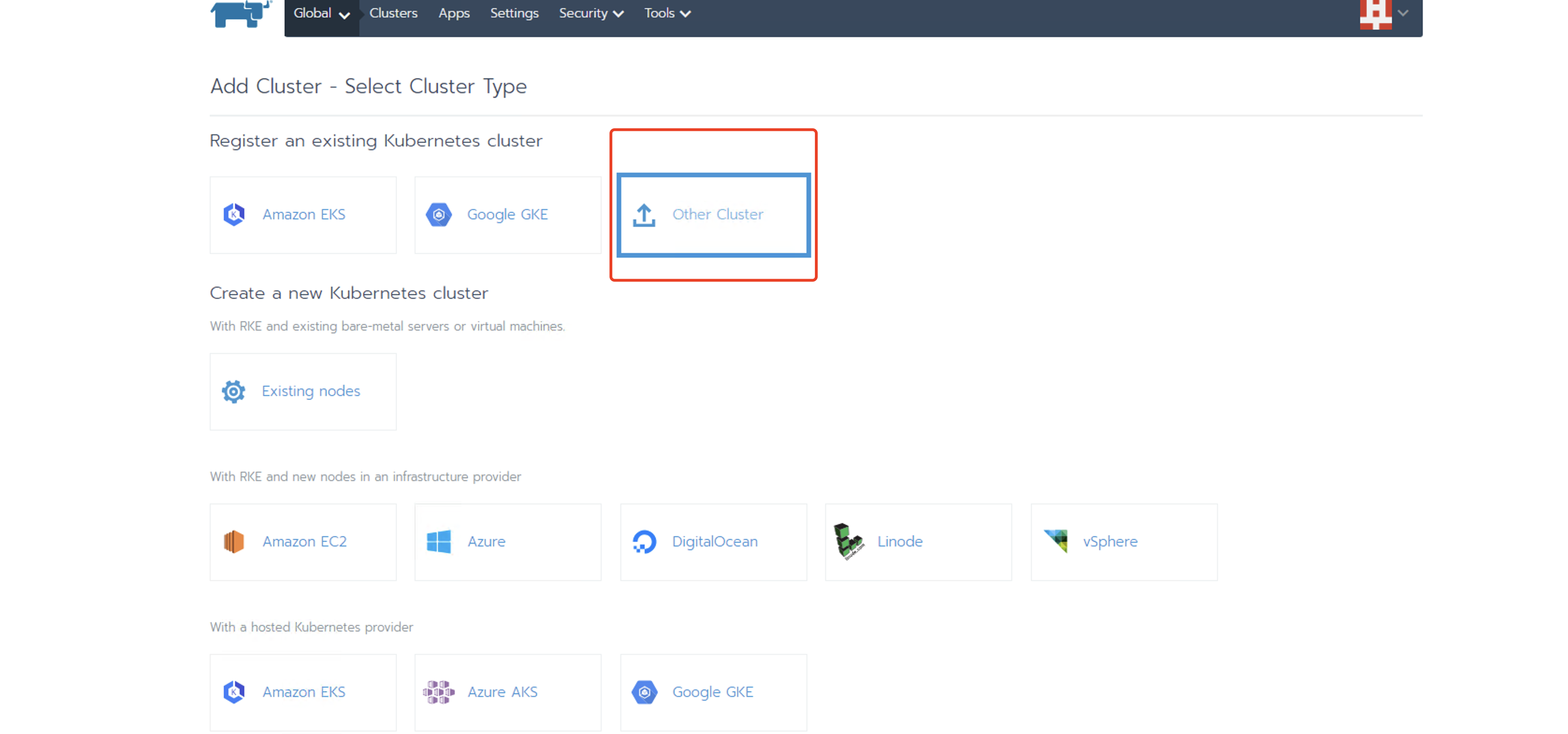

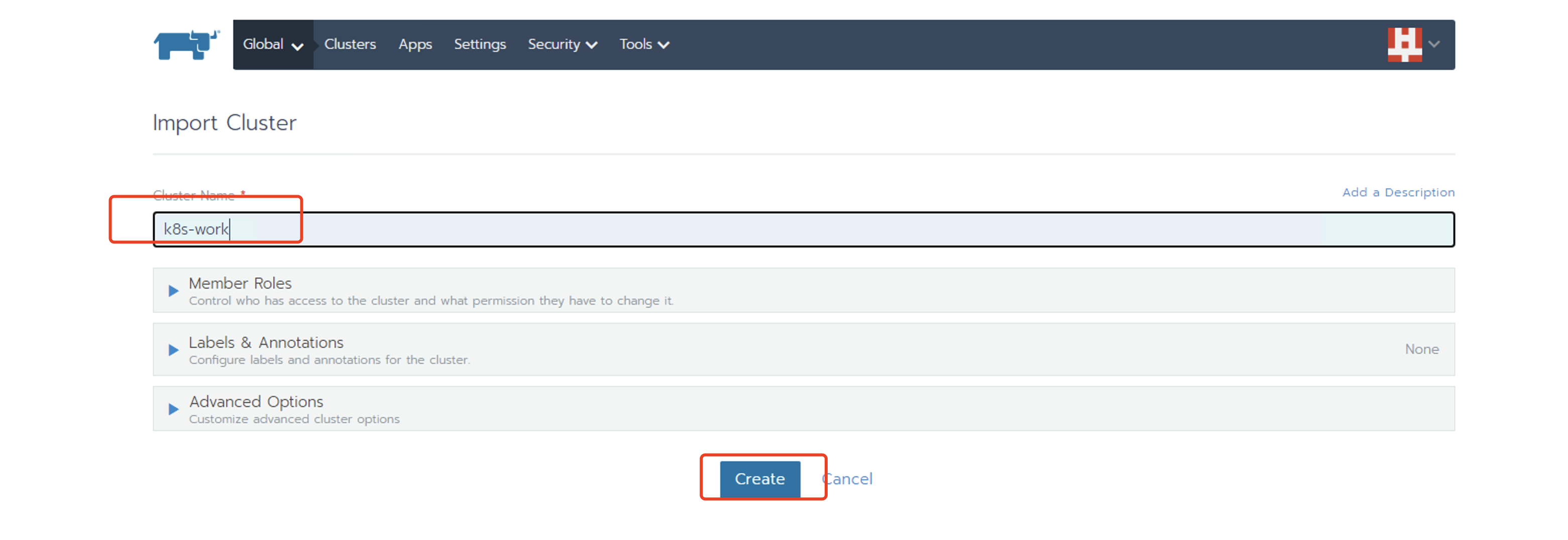

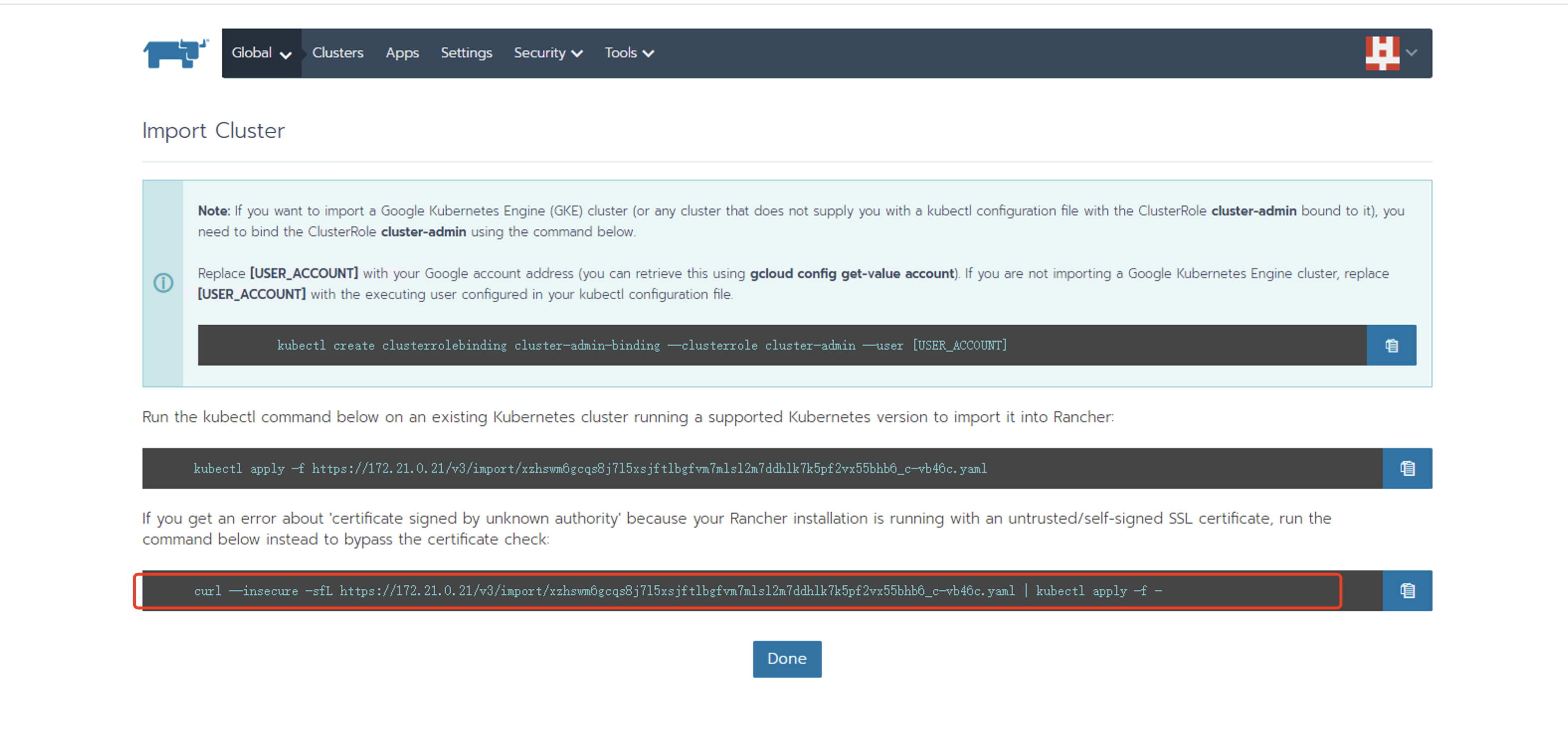

20. Import the k8s cluster into Rancher so that Rancher can manage it.

    [root@k8s-master data]# curl --insecure -sfL https://172.21.0.21/v3/import/xzhswm6gcqs8j7l5xsjftlbgfvm7mlsl2m7ddhlk7k5pf2vx55bhb6_c-vb46c.yaml | kubectl apply -f -
    error: no objects passed to apply.
    # The first attempt always fails; running it a second time works
    [root@k8s-master data]# curl --insecure -sfL https://172.21.0.21/v3/import/xzhswm6gcqs8j7l5xsjftlbgfvm7mlsl2m7ddhlk7k5pf2vx55bhb6_c-vb46c.yaml | kubectl apply -f -
    clusterrole.rbac.authorization.k8s.io/proxy-clusterrole-kubeapiserver created
    clusterrolebinding.rbac.authorization.k8s.io/proxy-role-binding-kubernetes-master created
    namespace/cattle-system created
    serviceaccount/cattle created
    clusterrolebinding.rbac.authorization.k8s.io/cattle-admin-binding created
    secret/cattle-credentials-11a6db7 created
    clusterrole.rbac.authorization.k8s.io/cattle-admin created
    deployment.apps/cattle-cluster-agent created

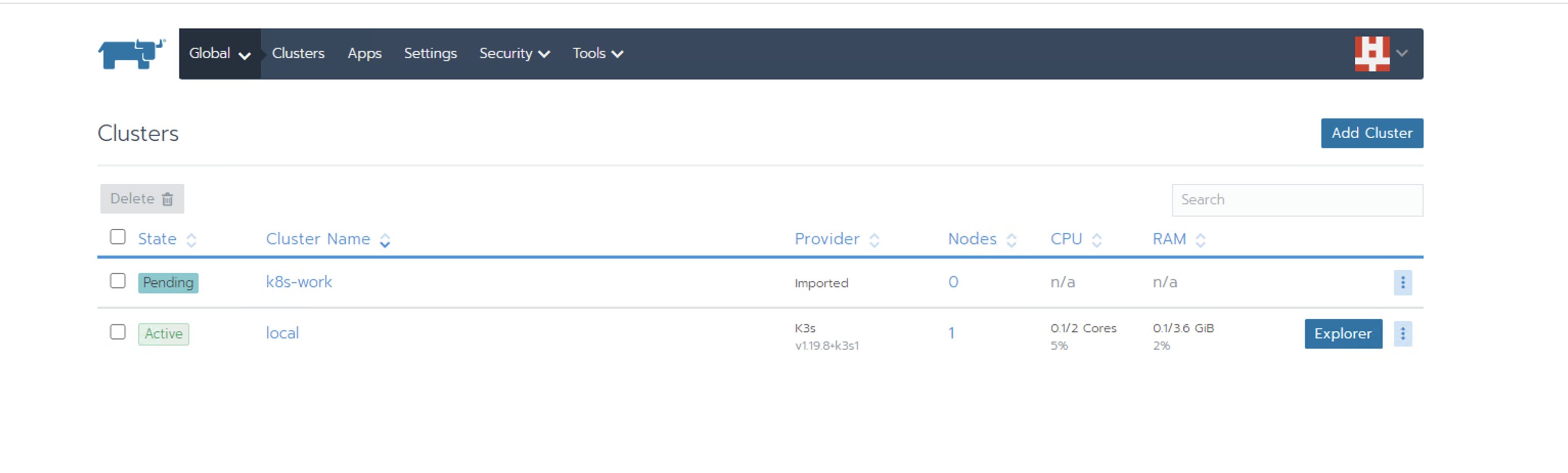

The cluster is still in the pending state, which means the Rancher agent has not finished starting. Check the pod status:

    [root@k8s-master data]# kubectl get pods -A
    NAMESPACE       NAME                                    READY   STATUS    RESTARTS   AGE
    cattle-system   cattle-cluster-agent-6448866fb8-jmhqv   1/1     Running   0          68s
    fleet-system    fleet-agent-f5bd9bbd4-cpf8g             0/1     Pending   0          55s
    kube-system     coredns-74ff55c5b-bj9kh                 1/1     Running   1          22m
    kube-system     coredns-74ff55c5b-ggfs4                 1/1     Running   1          22m
    kube-system     etcd-k8s-master                         1/1     Running   1          22m
    kube-system     kube-apiserver-k8s-master               1/1     Running   1          22m
    kube-system     kube-controller-manager-k8s-master      1/1     Running   1          22m
    kube-system     kube-flannel-ds-xllwd                   1/1     Running   1          13m
    kube-system     kube-proxy-btr2v                        1/1     Running   1          22m
    kube-system     kube-scheduler-k8s-master               1/1     Running   1          22m

fleet-agent-f5bd9bbd4-cpf8g is not in the Running state, so look at its details:

    [root@k8s-master data]# kubectl describe pods fleet-agent-f5bd9bbd4-cpf8g -n fleet-system
    ....
    ....
    ....
    Events:
      Type     Reason            Age                 From               Message
      ----     ------            ----                ----               -------
      Warning  FailedScheduling  0s (x4 over 2m26s)  default-scheduler  0/1 nodes are available: 1 node(s) had taint {node-role.kubernetes.io/master: }, that the pod didn't tolerate.

After cluster initialization the master node cannot be scheduled onto. Removing the taint from the node fixes this.

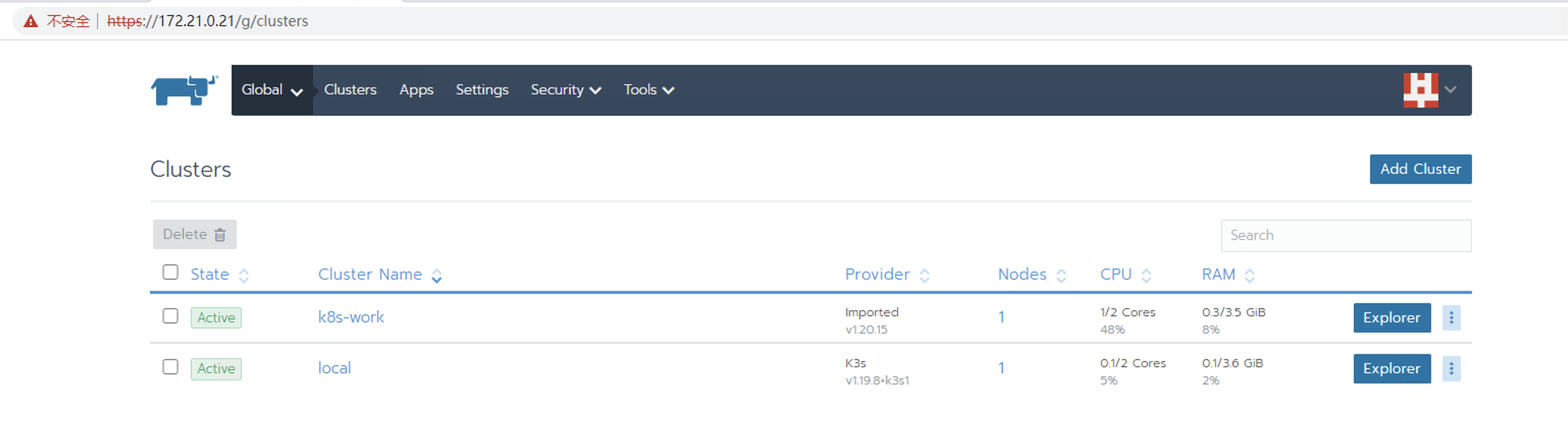

    [root@k8s-master data]# kubectl describe node k8s-master
    Name:               k8s-master
    Roles:              control-plane,master
    ....
    ....
    Taints:             node-role.kubernetes.io/master:NoSchedule
    [root@k8s-master data]# kubectl taint node k8s-master node-role.kubernetes.io/master:NoSchedule-
    node/k8s-master untainted
    [root@k8s-master data]# kubectl get pods -A   # Check the pod status again; the pod is now running
    NAMESPACE       NAME                                    READY   STATUS    RESTARTS   AGE
    cattle-system   cattle-cluster-agent-6448866fb8-jmhqv   1/1     Running   0          6m17s
    fleet-system    fleet-agent-78fd7c8fc4-mx5fq            1/1     Running   0          7s
    kube-system     coredns-74ff55c5b-bj9kh                 1/1     Running   1          27m
    kube-system     coredns-74ff55c5b-ggfs4                 1/1     Running   1          27m
    kube-system     etcd-k8s-master                         1/1     Running   1          28m
    kube-system     kube-apiserver-k8s-master               1/1     Running   1          28m
    kube-system     kube-controller-manager-k8s-master      1/1     Running   1          28m
    kube-system     kube-flannel-ds-xllwd                   1/1     Running   1          18m
    kube-system     kube-proxy-btr2v                        1/1     Running   1          27m
    kube-system     kube-scheduler-k8s-master               1/1     Running   1          28m
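The removal command is simply the taint as printed by describe with a trailing "-" appended. A small sketch of that relationship follows; it only handles the first taint on the "Taints:" line, and both function names are hypothetical.

```shell
#!/bin/sh
# Hypothetical filter: read `kubectl describe node` output on stdin and print
# the taint shown on the "Taints:" line. When a node has no taints, kubectl
# prints a bracketed "none" placeholder there, which is skipped.
node_taints() {
    awk '$1 == "Taints:" && $2 !~ /^.none.$/ { print $2 }'
}

# Hypothetical helper: build (but do not run) the command that removes a taint.
# $1 = node name, $2 = taint in key:effect form.
untaint_cmd() {
    echo "kubectl taint node $1 $2-"
}

# Example with the values from this post.
untaint_cmd k8s-master node-role.kubernetes.io/master:NoSchedule
```

Removing the master taint is acceptable here because this is a single-node cluster; on a multi-node cluster it would allow ordinary workloads onto the control plane.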

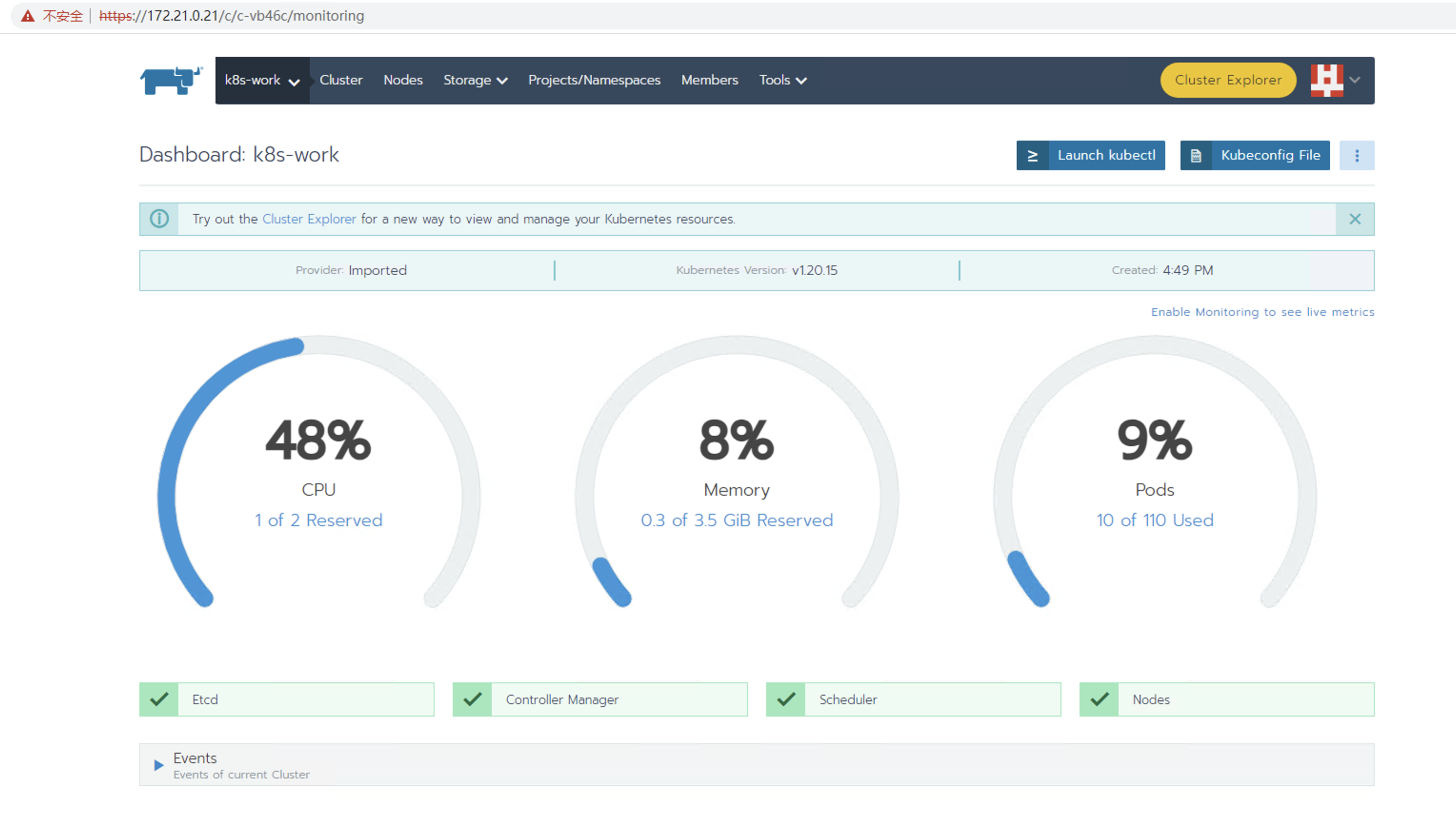

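Log in to the Rancher UI to view the imported k8s cluster.

Controller Manager and Scheduler are shown as unhealthy even though their pods are actually running normally. According to a Baidu search, the fix is to edit the Controller Manager and Scheduler manifests and then restart kubelet.

In /etc/kubernetes/manifests/kube-controller-manager.yaml, comment out the line - --port=0

In /etc/kubernetes/manifests/kube-scheduler.yaml, comment out the line - --port=0

Restart kubelet: systemctl restart kubelet

Check the status in Rancher again.

At this point, rancher-2.5.9, Harbor, and k8s-1.20.15 are deployed.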
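The two manifest edits can be expressed as one text filter instead of being made by hand. This is a sketch only: `disable_port_zero` is a hypothetical name, and the filter assumes the flag sits on its own line as `- --port=0`.

```shell
#!/bin/sh
# Hypothetical filter: read a static pod manifest on stdin and write it to
# stdout with the `- --port=0` argument commented out, keeping indentation.
disable_port_zero() {
    sed 's/^\([[:space:]]*\)- --port=0[[:space:]]*$/\1# - --port=0/'
}

# Intended use on k8s-master (not executed here). The edited copy is written
# outside the manifests directory first so kubelet never sees a partial file:
#   for f in kube-controller-manager kube-scheduler; do
#       disable_port_zero < /etc/kubernetes/manifests/$f.yaml > /tmp/$f.yaml &&
#           cp /tmp/$f.yaml /etc/kubernetes/manifests/$f.yaml
#   done
```

Running the filter a second time changes nothing, because an already commented line no longer matches the pattern.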

21. Upgrade Rancher offline to version 2.5.16. Reference documentation: https://docs.rancher.cn/docs/rancher2.5/installation/other-installation-methods/single-node-docker/single-node-upgrades/_index

First pull the images used by Rancher 2.5.16 and upload them to Harbor.

    docker pull rancher/rancher-agent:v2.5.16
    docker pull rancher/rancher:v2.5.16
    docker pull rancher/shell:v0.1.13
    docker pull rancher/fleet:v0.3.9
    docker pull rancher/gitjob:v0.1.26
    docker pull rancher/coredns-coredns:1.8.3
    docker pull rancher/fleet-agent:v0.3.9
    docker pull rancher/rancher-webhook:v0.1.5
    docker pull rancher/rancher-operator:v0.1.5
    docker pull busybox:latest
    docker save -o rancher2.5.16.tar rancher/rancher-agent:v2.5.16 rancher/rancher:v2.5.16 rancher/shell:v0.1.13 rancher/fleet:v0.3.9 rancher/gitjob:v0.1.26 rancher/coredns-coredns:1.8.3 rancher/fleet-agent:v0.3.9 rancher/rancher-webhook:v0.1.5 rancher/rancher-operator:v0.1.5 busybox:latest
    gzip rancher2.5.16.tar

22. Copy the image archive rancher2.5.16.tar.gz to the rancher machine, import it, retag the images, and push them to Harbor.

    docker load -i rancher2.5.16.tar.gz
    docker tag rancher/rancher-agent:v2.5.16 172.21.0.9:443/rancher/rancher-agent:v2.5.16
    docker push 172.21.0.9:443/rancher/rancher-agent:v2.5.16
    docker tag rancher/rancher:v2.5.16 172.21.0.9:443/rancher/rancher:v2.5.16
    docker push 172.21.0.9:443/rancher/rancher:v2.5.16
    docker tag rancher/shell:v0.1.13 172.21.0.9:443/rancher/shell:v0.1.13
    docker push 172.21.0.9:443/rancher/shell:v0.1.13
    docker tag rancher/fleet:v0.3.9 172.21.0.9:443/rancher/fleet:v0.3.9
    docker push 172.21.0.9:443/rancher/fleet:v0.3.9
    docker tag rancher/gitjob:v0.1.26 172.21.0.9:443/rancher/gitjob:v0.1.26
    docker push 172.21.0.9:443/rancher/gitjob:v0.1.26
    docker tag rancher/coredns-coredns:1.8.3 172.21.0.9:443/rancher/coredns-coredns:1.8.3
    docker push 172.21.0.9:443/rancher/coredns-coredns:1.8.3
    docker tag rancher/fleet-agent:v0.3.9 172.21.0.9:443/rancher/fleet-agent:v0.3.9
    docker push 172.21.0.9:443/rancher/fleet-agent:v0.3.9
    docker tag rancher/rancher-webhook:v0.1.5 172.21.0.9:443/rancher/rancher-webhook:v0.1.5
    docker push 172.21.0.9:443/rancher/rancher-webhook:v0.1.5
    docker tag rancher/rancher-operator:v0.1.5 172.21.0.9:443/rancher/rancher-operator:v0.1.5
    docker push 172.21.0.9:443/rancher/rancher-operator:v0.1.5
    docker tag busybox:latest 172.21.0.9:443/rancher/busybox:latest
    docker push 172.21.0.9:443/rancher/busybox:latest
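The tag and push pairs above follow one rule: prefix the image with the Harbor address, and place images that have no project of their own (busybox) under rancher/. A sketch that generates the same commands from a list of images is shown below. `harbor_ref` and `print_push_cmds` are hypothetical names, and the commands are only printed, not executed.

```shell
#!/bin/sh
# Harbor address used throughout this post.
REGISTRY=172.21.0.9:443

# Hypothetical helper: map a source image to its Harbor reference. Images
# without a project part (e.g. busybox:latest) are placed under rancher/,
# matching the commands above.
harbor_ref() {
    case "$1" in
        */*) echo "${REGISTRY}/$1" ;;
        *)   echo "${REGISTRY}/rancher/$1" ;;
    esac
}

# Hypothetical helper: print the docker tag and docker push command for each
# image passed as an argument.
print_push_cmds() {
    for img in "$@"; do
        ref=$(harbor_ref "$img")
        echo "docker tag $img $ref"
        echo "docker push $ref"
    done
}

# Example: two of the images from this step.
print_push_cmds rancher/rancher:v2.5.16 busybox:latest
```

Once the printed commands look right, piping the output to `sh` runs them.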

23. Stop the old Rancher 2.5.9, back up its data, and start the new version.

    docker stop eager_brattain
    docker create --volumes-from eager_brattain --name rancher-data 172.21.0.9:443/rancher/rancher:v2.5.9
    docker run --volumes-from rancher-data -v "$PWD:/backup" --rm 172.21.0.9:443/rancher/busybox tar zcvf /backup/rancher-data-backup-2.5.9-20230604.tar.gz /var/lib/rancher
    [root@rancher data]# ls -l rancher-data-backup-2.5.9-20230604.tar.gz
    -rw-r--r-- 1 root root 428932355 Jun  4 17:47 rancher-data-backup-2.5.9-20230604.tar.gz
    # Start the new Rancher version
    docker run -d --volumes-from rancher-data --restart=unless-stopped \
      -p 80:80 -p 443:443 \
      -v /data/rancher/registries.yaml:/etc/rancher/k3s/registries.yaml \
      -v /data/rancher/rancher_data:/var/lib/rancher/ \
      -v /data/rancher/auditlog:/var/log/auditlog \
      -e CATTLE_SYSTEM_DEFAULT_REGISTRY=172.21.0.9:443 \
      -e CATTLE_SYSTEM_CATALOG=bundled \
      --privileged 172.21.0.9:443/rancher/rancher:v2.5.16

24. The base images are missing again; handle this the same way as in step 11.

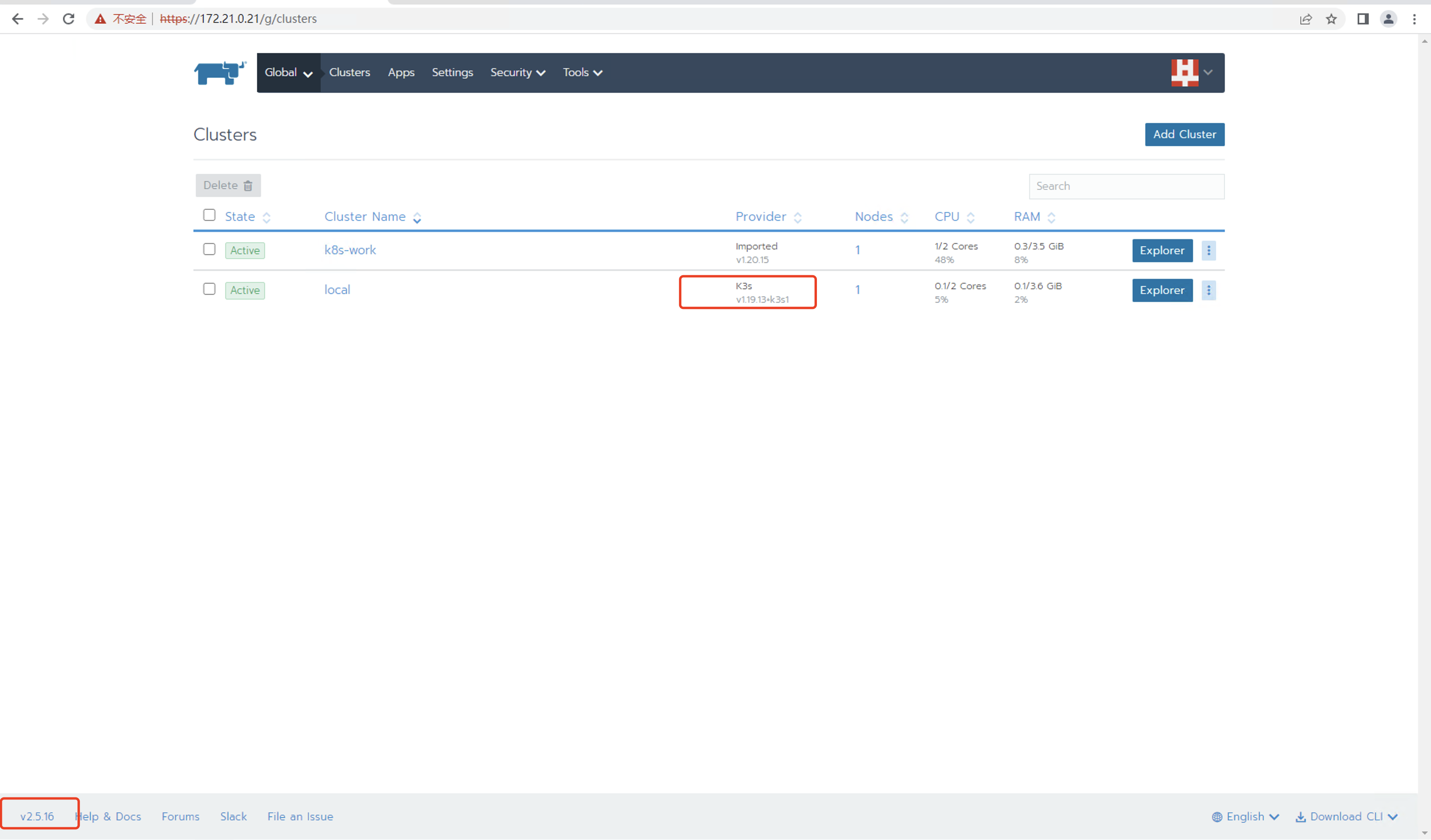

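25. Once all pods are healthy, log in to the UI and check the Rancher and k3s versions.

26. Remove the old Rancher container. Otherwise the old container will also start the next time docker restarts, which causes a port conflict.

    docker rm -f eager_brattain

27. Upgrade the k8s version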