Custom Commands

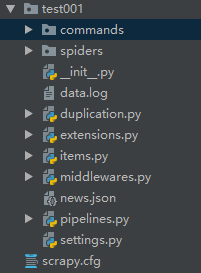

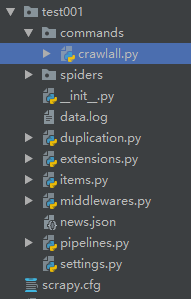

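1. Create a directory with any name (for example `commands`) at the same level as the `spiders` directory, and add an empty `__init__.py` to it so that it can be imported as a Python package.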

2. Inside it, create a file named `crawlall.py`. The file name becomes the name of the custom command.

```python
# crawlall.py
from scrapy.commands import ScrapyCommand


class Command(ScrapyCommand):

    # Only available when invoked inside a Scrapy project
    requires_project = True

    def syntax(self):
        # Shown in the usage line: scrapy crawlall [options]
        return '[options]'

    def short_desc(self):
        # Shown next to the command name in the `scrapy -h` command list
        return 'Runs all of the spiders'

    def run(self, args, opts):
        # Debug: show the class of the process object Scrapy attached to this command
        print(type(self.crawler_process))
        # How the spider list is obtained (see the CrawlerProcess source below):
        # 1. CrawlerProcess.__init__ runs first:
        #        super(CrawlerProcess, self).__init__(settings)
        # 2. CrawlerRunner.__init__ builds the spider loader from the settings:
        #        self.spider_loader = _get_spider_loader(settings)
        #        loader_cls = load_object(cls_path)
        #    (the old `spiders` property only returns self.spider_loader and is deprecated)

        # Names of all spiders in the project
        spider_list = self.crawler_process.spider_loader.list()
        # spider_list = ['chouti', 'cnblogs']  # or hard-code the spiders to run
        for name in spider_list:
            # CrawlerProcess has no crawl() of its own; it inherits CrawlerRunner.crawl():
            # 2.1 a Crawler object is created for each spider
            # 2.2 d = Crawler.crawl(...) is called  ##### worth digging into #####
            #     d.addBoth(_done)
            # 2.3 the deferred is tracked: CrawlerProcess._active = {d, ...}
            # Every parsed command-line option is forwarded as a spider keyword argument
            self.crawler_process.crawl(name, **opts.__dict__)
        # 3. start() then does:
        #        d = self.join()  ->  yield defer.DeferredList(self._active)
        #        d.addBoth(self._stop_reactor)  # _stop_reactor calls reactor.stop()
        #        reactor.run(installSignalHandlers=False)  # blocking call
        self.crawler_process.start()
```

```python
# scrapy/crawler.py
import six
import signal
import logging
import warnings

import sys
from twisted.internet import reactor, defer
from zope.interface.verify import verifyClass, DoesNotImplement

from scrapy.core.engine import ExecutionEngine
from scrapy.resolver import CachingThreadedResolver
from scrapy.interfaces import ISpiderLoader
from scrapy.extension import ExtensionManager
from scrapy.settings import overridden_settings, Settings
from scrapy.signalmanager import SignalManager
from scrapy.exceptions import ScrapyDeprecationWarning
from scrapy.utils.ossignal import install_shutdown_handlers, signal_names
from scrapy.utils.misc import load_object
from scrapy.utils.log import (
    LogCounterHandler, configure_logging, log_scrapy_info,
    get_scrapy_root_handler, install_scrapy_root_handler)
from scrapy import signals

logger = logging.getLogger(__name__)


class Crawler(object):

    def __init__(self, spidercls, settings=None):
        if isinstance(settings, dict) or settings is None:
            settings = Settings(settings)

        self.spidercls = spidercls
        self.settings = settings.copy()
        self.spidercls.update_settings(self.settings)

        d = dict(overridden_settings(self.settings))
        logger.info("Overridden settings: %(settings)r", {'settings': d})

        self.signals = SignalManager(self)
        self.stats = load_object(self.settings['STATS_CLASS'])(self)

        handler = LogCounterHandler(self, level=self.settings.get('LOG_LEVEL'))
        logging.root.addHandler(handler)
        if get_scrapy_root_handler() is not None:
            # scrapy root handler already installed: update it with new settings
            install_scrapy_root_handler(self.settings)
        # lambda is assigned to Crawler attribute because this way it is not
        # garbage collected after leaving __init__ scope
        self.__remove_handler = lambda: logging.root.removeHandler(handler)
        self.signals.connect(self.__remove_handler, signals.engine_stopped)

        lf_cls = load_object(self.settings['LOG_FORMATTER'])
        self.logformatter = lf_cls.from_crawler(self)
        self.extensions = ExtensionManager.from_crawler(self)

        self.settings.freeze()
        self.crawling = False
        self.spider = None
        self.engine = None

    @property
    def spiders(self):
        if not hasattr(self, '_spiders'):
            warnings.warn("Crawler.spiders is deprecated, use "
                          "CrawlerRunner.spider_loader or instantiate "
                          "scrapy.spiderloader.SpiderLoader with your "
                          "settings.",
                          category=ScrapyDeprecationWarning, stacklevel=2)
            self._spiders = _get_spider_loader(self.settings.frozencopy())
        return self._spiders

    @defer.inlineCallbacks
    def crawl(self, *args, **kwargs):
        assert not self.crawling, "Crawling already taking place"
        self.crawling = True

        try:
            self.spider = self._create_spider(*args, **kwargs)
            self.engine = self._create_engine()
            start_requests = iter(self.spider.start_requests())
            yield self.engine.open_spider(self.spider, start_requests)
            yield defer.maybeDeferred(self.engine.start)
        except Exception:
            # In Python 2 reraising an exception after yield discards
            # the original traceback (see https://bugs.python.org/issue7563),
            # so sys.exc_info() workaround is used.
            # This workaround also works in Python 3, but it is not needed,
            # and it is slower, so in Python 3 we use native `raise`.
            if six.PY2:
                exc_info = sys.exc_info()

            self.crawling = False
            if self.engine is not None:
                yield self.engine.close()

            if six.PY2:
                six.reraise(*exc_info)
            raise

    def _create_spider(self, *args, **kwargs):
        return self.spidercls.from_crawler(self, *args, **kwargs)

    def _create_engine(self):
        return ExecutionEngine(self, lambda _: self.stop())

    @defer.inlineCallbacks
    def stop(self):
        if self.crawling:
            self.crawling = False
            yield defer.maybeDeferred(self.engine.stop)


class CrawlerRunner(object):
    """
    This is a convenient helper class that keeps track of, manages and runs
    crawlers inside an already setup Twisted `reactor`_.

    The CrawlerRunner object must be instantiated with a
    :class:`~scrapy.settings.Settings` object.

    This class shouldn't be needed (since Scrapy is responsible of using it
    accordingly) unless writing scripts that manually handle the crawling
    process. See :ref:`run-from-script` for an example.
    """

    crawlers = property(
        lambda self: self._crawlers,
        doc="Set of :class:`crawlers <scrapy.crawler.Crawler>` started by "
            ":meth:`crawl` and managed by this class."
    )

    def __init__(self, settings=None):
        if isinstance(settings, dict) or settings is None:
            settings = Settings(settings)
        self.settings = settings
        self.spider_loader = _get_spider_loader(settings)
        self._crawlers = set()
        self._active = set()

    @property
    def spiders(self):
        warnings.warn("CrawlerRunner.spiders attribute is renamed to "
                      "CrawlerRunner.spider_loader.",
                      category=ScrapyDeprecationWarning, stacklevel=2)
        return self.spider_loader

    def crawl(self, crawler_or_spidercls, *args, **kwargs):
        """
        Run a crawler with the provided arguments.

        It will call the given Crawler's :meth:`~Crawler.crawl` method, while
        keeping track of it so it can be stopped later.

        If `crawler_or_spidercls` isn't a :class:`~scrapy.crawler.Crawler`
        instance, this method will try to create one using this parameter as
        the spider class given to it.

        Returns a deferred that is fired when the crawling is finished.

        :param crawler_or_spidercls: already created crawler, or a spider class
            or spider's name inside the project to create it
        :type crawler_or_spidercls: :class:`~scrapy.crawler.Crawler` instance,
            :class:`~scrapy.spiders.Spider` subclass or string

        :param list args: arguments to initialize the spider

        :param dict kwargs: keyword arguments to initialize the spider
        """
        crawler = self.create_crawler(crawler_or_spidercls)
        return self._crawl(crawler, *args, **kwargs)

    def _crawl(self, crawler, *args, **kwargs):
        self.crawlers.add(crawler)
        d = crawler.crawl(*args, **kwargs)
        self._active.add(d)

        def _done(result):
            self.crawlers.discard(crawler)
            self._active.discard(d)
            return result

        return d.addBoth(_done)

    def create_crawler(self, crawler_or_spidercls):
        """
        Return a :class:`~scrapy.crawler.Crawler` object.

        * If `crawler_or_spidercls` is a Crawler, it is returned as-is.
        * If `crawler_or_spidercls` is a Spider subclass, a new Crawler
          is constructed for it.
        * If `crawler_or_spidercls` is a string, this function finds
          a spider with this name in a Scrapy project (using spider loader),
          then creates a Crawler instance for it.
        """
        if isinstance(crawler_or_spidercls, Crawler):
            return crawler_or_spidercls
        return self._create_crawler(crawler_or_spidercls)

    def _create_crawler(self, spidercls):
        if isinstance(spidercls, six.string_types):
            spidercls = self.spider_loader.load(spidercls)
        return Crawler(spidercls, self.settings)

    def stop(self):
        """
        Stops simultaneously all the crawling jobs taking place.

        Returns a deferred that is fired when they all have ended.
        """
        return defer.DeferredList([c.stop() for c in list(self.crawlers)])

    @defer.inlineCallbacks
    def join(self):
        """
        join()

        Returns a deferred that is fired when all managed :attr:`crawlers` have
        completed their executions.
        """
        while self._active:
            yield defer.DeferredList(self._active)


class CrawlerProcess(CrawlerRunner):
    """
    A class to run multiple scrapy crawlers in a process simultaneously.

    This class extends :class:`~scrapy.crawler.CrawlerRunner` by adding support
    for starting a Twisted `reactor`_ and handling shutdown signals, like the
    keyboard interrupt command Ctrl-C. It also configures top-level logging.

    This utility should be a better fit than
    :class:`~scrapy.crawler.CrawlerRunner` if you aren't running another
    Twisted `reactor`_ within your application.

    The CrawlerProcess object must be instantiated with a
    :class:`~scrapy.settings.Settings` object.

    :param install_root_handler: whether to install root logging handler
        (default: True)

    This class shouldn't be needed (since Scrapy is responsible of using it
    accordingly) unless writing scripts that manually handle the crawling
    process. See :ref:`run-from-script` for an example.
    """

    def __init__(self, settings=None, install_root_handler=True):
        super(CrawlerProcess, self).__init__(settings)
        install_shutdown_handlers(self._signal_shutdown)
        configure_logging(self.settings, install_root_handler)
        log_scrapy_info(self.settings)

    def _signal_shutdown(self, signum, _):
        install_shutdown_handlers(self._signal_kill)
        signame = signal_names[signum]
        logger.info("Received %(signame)s, shutting down gracefully. Send again to force ",
                    {'signame': signame})
        reactor.callFromThread(self._graceful_stop_reactor)

    def _signal_kill(self, signum, _):
        install_shutdown_handlers(signal.SIG_IGN)
        signame = signal_names[signum]
        logger.info('Received %(signame)s twice, forcing unclean shutdown',
                    {'signame': signame})
        reactor.callFromThread(self._stop_reactor)

    def start(self, stop_after_crawl=True):
        """
        This method starts a Twisted `reactor`_, adjusts its pool size to
        :setting:`REACTOR_THREADPOOL_MAXSIZE`, and installs a DNS cache based
        on :setting:`DNSCACHE_ENABLED` and :setting:`DNSCACHE_SIZE`.

        If `stop_after_crawl` is True, the reactor will be stopped after all
        crawlers have finished, using :meth:`join`.

        :param boolean stop_after_crawl: stop or not the reactor when all
            crawlers have finished
        """
        if stop_after_crawl:
            d = self.join()
            # Don't start the reactor if the deferreds are already fired
            if d.called:
                return
            d.addBoth(self._stop_reactor)

        reactor.installResolver(self._get_dns_resolver())
        tp = reactor.getThreadPool()
        tp.adjustPoolsize(maxthreads=self.settings.getint('REACTOR_THREADPOOL_MAXSIZE'))
        reactor.addSystemEventTrigger('before', 'shutdown', self.stop)
        reactor.run(installSignalHandlers=False)  # blocking call

    def _get_dns_resolver(self):
        if self.settings.getbool('DNSCACHE_ENABLED'):
            cache_size = self.settings.getint('DNSCACHE_SIZE')
        else:
            cache_size = 0
        return CachingThreadedResolver(
            reactor=reactor,
            cache_size=cache_size,
            timeout=self.settings.getfloat('DNS_TIMEOUT')
        )

    def _graceful_stop_reactor(self):
        d = self.stop()
        d.addBoth(self._stop_reactor)
        return d

    def _stop_reactor(self, _=None):
        try:
            reactor.stop()
        except RuntimeError:  # raised if already stopped or in shutdown stage
            pass


def _get_spider_loader(settings):
    """ Get SpiderLoader instance from settings """
    if settings.get('SPIDER_MANAGER_CLASS'):
        warnings.warn(
            'SPIDER_MANAGER_CLASS option is deprecated. '
            'Please use SPIDER_LOADER_CLASS.',
            category=ScrapyDeprecationWarning, stacklevel=2
        )
    cls_path = settings.get('SPIDER_MANAGER_CLASS',
                            settings.get('SPIDER_LOADER_CLASS'))
    loader_cls = load_object(cls_path)
    try:
        verifyClass(ISpiderLoader, loader_cls)
    except DoesNotImplement:
        warnings.warn(
            'SPIDER_LOADER_CLASS (previously named SPIDER_MANAGER_CLASS) does '
            'not fully implement scrapy.interfaces.ISpiderLoader interface. '
            'Please add all missing methods to avoid unexpected runtime errors.',
            category=ScrapyDeprecationWarning, stacklevel=2
        )
    return loader_cls.from_settings(settings.frozencopy())
```
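The call chain traced in the comments of `crawlall.py` (`crawl()` creates a crawler, `_crawl()` records its deferred in `_active`, the `_done` callback removes it, `join()` waits until `_active` is empty, then the reactor is stopped) can be modelled without Twisted. The sketch below swaps Twisted Deferreds for `asyncio` tasks, an assumption made only so that it runs with the standard library; `MiniRunner`, `_fake_crawl` and `run_all` are hypothetical names, and the sleeps stand in for real crawling.

```python
import asyncio


class MiniRunner:
    """Toy model of CrawlerRunner's bookkeeping; asyncio tasks replace Deferreds."""

    def __init__(self):
        self.crawlers = set()  # like CrawlerRunner._crawlers: names of crawls still running
        self._active = set()   # like CrawlerRunner._active: pending tasks
        self.finished = []     # completion order, recorded for the demo

    def crawl(self, name, delay):
        # Mirrors CrawlerRunner._crawl: start the crawl and track its task.
        self.crawlers.add(name)
        task = asyncio.create_task(self._fake_crawl(name, delay))
        self._active.add(task)

        def _done(t):
            # Mirrors the _done callback: forget the crawl once it has ended.
            self.crawlers.discard(name)
            self._active.discard(t)

        task.add_done_callback(_done)
        return task

    async def _fake_crawl(self, name, delay):
        await asyncio.sleep(delay)  # hypothetical stand-in for the engine's work
        self.finished.append(name)

    async def join(self):
        # Same loop as CrawlerRunner.join: wait until nothing is active.
        while self._active:
            await asyncio.gather(*self._active)


async def run_all(spiders):
    """Rough analogue of crawlall's run(): schedule every crawl, then join."""
    runner = MiniRunner()
    for name, delay in spiders.items():
        runner.crawl(name, delay)
    # CrawlerProcess.start() attaches _stop_reactor to join()'s deferred;
    # here the event loop simply finishes when run_all returns.
    await runner.join()
    return runner


if __name__ == "__main__":
    result = asyncio.run(run_all({"chouti": 0.05, "cnblogs": 0.0}))
    print(result.finished)  # ['cnblogs', 'chouti']
```

As in `CrawlerProcess.start()`, an empty `_active` set makes `join()` finish immediately; Scrapy detects this with `d.called` and then returns without starting the reactor at all.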

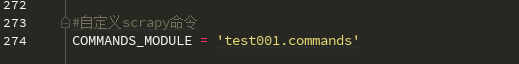

3. In `settings.py`, add the setting `COMMANDS_MODULE = 'project_name.directory_name'`.

4. From the project directory, run the command: `scrapy crawlall`
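The setup in steps 1 to 3 works because Scrapy imports the package named in `COMMANDS_MODULE`, looks in each of its modules for command classes (subclasses of `ScrapyCommand` defined in that module), and names every command after its module's file name; commands with `requires_project = True` are only offered inside a project. The stdlib-only sketch below models that lookup loosely on `scrapy.cmdline`. It is not Scrapy's code, and `BaseCommand`, `fake_scrapy`, `demo_project` and `setup_demo` are hypothetical names used only for the demo.

```python
import importlib
import inspect
import pkgutil
import sys
import tempfile
import textwrap
import types
from pathlib import Path


class BaseCommand:
    """Hypothetical stand-in for scrapy.commands.ScrapyCommand."""
    requires_project = False


# Expose the base class under a fake module name so generated files can import it.
_fake = types.ModuleType("fake_scrapy")
_fake.BaseCommand = BaseCommand
sys.modules["fake_scrapy"] = _fake


def discover_commands(package_name, inproject=True):
    """Map command name (= module file name) to the command class it defines."""
    package = importlib.import_module(package_name)
    commands = {}
    for info in pkgutil.iter_modules(package.__path__):
        module = importlib.import_module(f"{package_name}.{info.name}")
        for obj in vars(module).values():
            if (inspect.isclass(obj) and issubclass(obj, BaseCommand)
                    and obj is not BaseCommand
                    and obj.__module__ == module.__name__):
                # Project-only commands are hidden outside a project.
                if inproject or not obj.requires_project:
                    commands[module.__name__.rsplit(".", 1)[-1]] = obj
    return commands


def setup_demo():
    """Create a throwaway demo_project/commands/crawlall.py and make it importable."""
    root = Path(tempfile.mkdtemp())
    pkg = root / "demo_project" / "commands"
    pkg.mkdir(parents=True)
    (root / "demo_project" / "__init__.py").write_text("")
    (pkg / "__init__.py").write_text("")
    (pkg / "crawlall.py").write_text(textwrap.dedent("""
        from fake_scrapy import BaseCommand

        class Command(BaseCommand):
            requires_project = True
    """))
    sys.path.insert(0, str(root))
    importlib.invalidate_caches()
    return "demo_project.commands"


if __name__ == "__main__":
    pkg_name = setup_demo()
    print(sorted(discover_commands(pkg_name)))                   # ['crawlall']
    print(sorted(discover_commands(pkg_name, inproject=False)))  # []
```

Renaming `crawlall.py` in this model renames the command, which is the behaviour step 2 relies on.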

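A command can also be started from a Python script in the project directory by passing the same arguments you would type on the command line to `scrapy.cmdline.execute`:

```python
from scrapy.cmdline import execute

if __name__ == '__main__':
    # The first argument after "scrapy" is the command name, so "github" must be a
    # built-in or custom command; to run a single spider use
    # ["scrapy", "crawl", "<spider_name>", "--nolog"]
    execute(["scrapy", "github", "--nolog"])
```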
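`execute()` treats the first non-option argument after the program name as the command name and leaves the rest for that command's own option parser. A toy, stdlib-only model of that split follows; `split_command` is a hypothetical helper, not a Scrapy function.

```python
def split_command(argv):
    """Return (command_name, remaining_args) for an argv like ["scrapy", ...].

    Simplified model: the first argument after the program name that does not
    start with "-" is taken as the command; everything else is left as options.
    """
    rest = list(argv[1:])
    for i, arg in enumerate(rest):
        if not arg.startswith("-"):
            return arg, rest[:i] + rest[i + 1:]
    return None, rest  # no command given


if __name__ == "__main__":
    print(split_command(["scrapy", "github", "--nolog"]))  # ('github', ['--nolog'])
```

This is why `["scrapy", "github", "--nolog"]` looks for a command called `github`, while `["scrapy", "crawl", "github", "--nolog"]` runs the `crawl` command with `github` as its argument. Options that take a separate value (such as `-s NAME=VALUE`) are not handled by this sketch.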