1、需求简介

1.1、案例需求

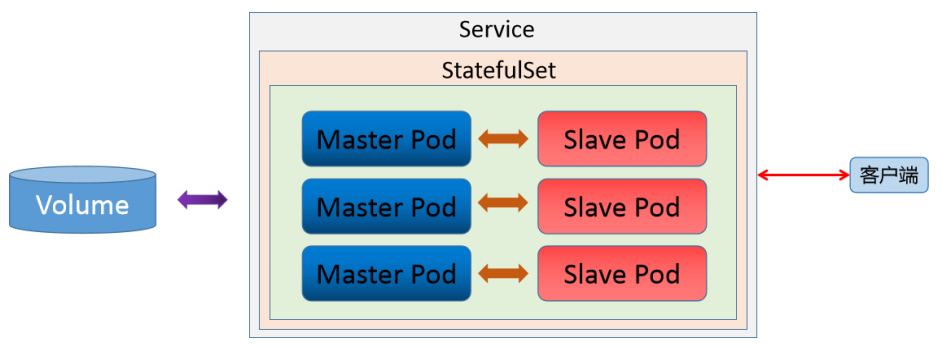

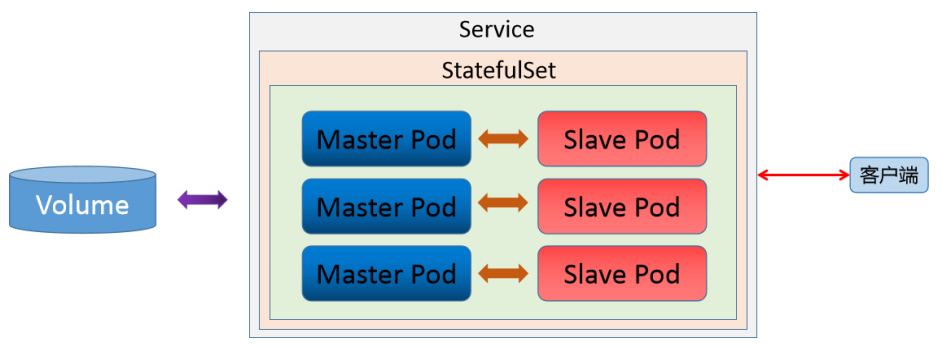

对于statefulset的使用,最常见的就是状态集群,而redis的集群就属于一种典型的状态集群效果。这里

我们基于Statefulset功能来创建一个redis多主集群,集群的效果如下:

每个Mater 都可以拥有多个slave,在我们的环境中设置6个节点,3主3从。

当Master掉线后,

redis cluster集群会从多个Slave中选举出来一个新的Matser作为代替

旧的Master重新上线后变成 Master 的Slave.

1.2、方案选择

对于redis的集群,我们可以有多种方案选择,常见的方案有:

方案1:Statefulset

方案2:Service&depolyment

虽然两种方法都可以实现,但是我们主要选择方案1:

方案2操作起来虽然可以实现功能,但是涉及到大量额外的功能组件,对于初学者甚至熟练实践者都是不太友好的。

方案1的statefulset本来就适用于通用的状态服务场景,所以我们就用方案1.

1.3、涉及模式

1.3.1、拓扑状态

应用的多个实例之间不是完全对等的关系,这个应用实例的启动必须按照某些顺序启动,比如

应用的主节点 A 要先于从节点 B 启动。而如果你把 A 和 B 两个Pod删除掉,他们再次被创建

出来是也必须严格按照这个顺序才行,并且,新创建出来的Pod,必须和原来的Pod的网络标识

一样,这样原先的访问者才能使用同样的方法,访问到这个新的Pod

1.3.2、存储状态

应用的多个实例分别绑定了不同的存储数据.对于这些应用实例来说,Pod A第一次读取到的

数据,和隔了十分钟之后再次读取到的数据,应该是同一份,哪怕在此期间Pod A被重新创建过.

一个数据库应用的多个存储实例。

1.4、Statefulset效果图

1.4.1、说明

无论是Master 还是 slave 都作为 statefulset 的一个副本,通过pv/pvc进行持久化,对外暴露一个service 接受客户端请求

2、环境准备

2.1、NFS服务配置

2.1.1、配置共享目录

mkdir /nfs-data/pv/redis{1..6} -p

echo -n "">/etc/exports

for i in {1..6}

do

echo "/nfs-data/pv/redis$i *(rw,no_root_squash,sync)" >>/etc/exports

done

register ~]# systemctl restart nfs

register ~]# exportfs

/nfs-data/pv/redis1

/nfs-data/pv/redis2

/nfs-data/pv/redis3

/nfs-data/pv/redis4

/nfs-data/pv/redis5

/nfs-data/pv/redis6

2.2、创建pv资源

2.2.1、定义资源配置清单

for i in {1..6}

do

cat >>storage-statefulset-redis-pv.yml<<-EOF

---

apiVersion: v1

kind: PersistentVolume

metadata:

name: redis-pv00$i

spec:

capacity:

storage: 200M

accessModes:

- ReadWriteMany

nfs:

server: 192.168.10.33

path: /nfs-data/pv/redis$i

EOF

done

2.2.2、应用资源配置清单

master1 ]# kubectl apply -f storage-statefulset-redis-pv.yml

persistentvolume/redis-pv001 created

persistentvolume/redis-pv002 created

persistentvolume/redis-pv003 created

persistentvolume/redis-pv004 created

persistentvolume/redis-pv005 created

persistentvolume/redis-pv006 created

master1 ]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

redis-pv001 200M RWX Retain Available 3s

redis-pv002 200M RWX Retain Available 3s

redis-pv003 200M RWX Retain Available 3s

redis-pv004 200M RWX Retain Available 3s

redis-pv005 200M RWX Retain Available 3s

redis-pv006 200M RWX Retain Available 3s

2.3、将redis配置文件转为configmap资源

2.3.1、创建redis配置文件

cat > redis.conf <<-EOF

port 6379

cluster-enabled yes

cluster-config-file /var/lib/redis/nodes.conf

cluster-node-timeout 5000

appendonly yes

dir /var/lib/redis

EOF

注意:

cluster-conf-file: 选项设定了保存节点配置文件的路径,如果这个配置文件不存在,每个节点在启

动的时候都为它自身指定了一个新的ID存档到这个文件中,实例会一直使用同一个ID,在集群中保持一个独一

无二的(Unique)名字.每个节点都是用ID而不是IP或者端口号来记录其他节点,因为在k8s中,IP地址是不固

定的,而这个独一无二的标识符(Identifier)则会在节点的整个生命周期中一直保持不变,我们这个文件里面存放的是节点ID。

2.3.2、创建configmap资源

master1 ]# kubectl create configmap redis-conf --from-file=redis.conf

configmap/redis-conf created

master1 ]# kubectl get cm

NAME DATA AGE

redis-conf 1 4s

2.4、创建headless service

Headless service是StatefulSet实现稳定网络标识的基础,我们需要提前创建。

2.4.1、定义资源配置清单

cat >storage-statefulset-redis-headless-service.yml<<'EOF'

apiVersion: v1

kind: Service

metadata:

name: redis-service

labels:

app: redis

spec:

ports:

- name: redis-port

port: 6379

clusterIP: None

selector:

app: redis

appCluster: redis-cluster

EOF

2.4.2、应用资源配置清单

master1 ]# kubectl create -f storage-statefulset-redis-headless-service.yml

service/redis-service created

master1 ]# kubectl get svc redis-service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

redis-service ClusterIP None <none> 6379/TCP 4s

2.5、创建statefulset的Pod

2.5.1、定义资源配置清单

cat >storage-statefulset-redis-cluster.yml<<'EOF'

apiVersion: apps/v1

kind: StatefulSet

metadata:

name: redis-cluster

spec:

serviceName: "redis-service"

selector:

matchLabels:

app: redis

appCluster: redis-cluster

replicas: 6

template:

metadata:

labels:

app: redis

appCluster: redis-cluster

spec:

terminationGracePeriodSeconds: 20

affinity:

podAntiAffinity:

preferredDuringSchedulingIgnoredDuringExecution:

- weight: 100

podAffinityTerm:

labelSelector:

matchExpressions:

- key: app

operator: In

values:

- redis

topologyKey: kubernetes.io/hostname

containers:

- name: redis

image: 192.168.10.33:80/k8s/redis:latest

command:

- "redis-server"

args:

- "/etc/redis/redis.conf"

- "--protected-mode"

- "no"

# command: redis-server /etc/redis/redis.conf --protected-mode no

resources:

requests:

cpu: "100m"

memory: "100Mi"

ports:

- name: redis

containerPort: 6379

protocol: "TCP"

- name: cluster

containerPort: 16379

protocol: "TCP"

volumeMounts:

- name: "redis-conf"

mountPath: "/etc/redis"

- name: "redis-data"

mountPath: "/var/lib/redis"

volumes:

- name: "redis-conf"

configMap:

name: "redis-conf"

items:

- key: "redis.conf"

path: "redis.conf"

volumeClaimTemplates:

- metadata:

name: redis-data

spec:

accessModes:

- ReadWriteMany

resources:

requests:

storage: 200M

EOF

注意:

PodAntiAffinity:表示反亲和性,其决定了某个pod不可以和哪些Pod部署在同一拓扑域,可以用于

将一个服务的POD分散在不同的主机或者拓扑域中,提高服务本身的稳定性。

matchExpressions:规定了Redis Pod要尽量不要调度到包含app为redis的Node上,也即是说已经

存在Redis的Node上尽量不要再分配Redis Pod了.

2.5.2、应用资源配置清单

master1 ]# kubectl get pods

NAME READY STATUS RESTARTS AGE

redis-cluster-0 1/1 Running 0 35s

redis-cluster-1 1/1 Running 0 33s

redis-cluster-2 1/1 Running 0 31s

redis-cluster-3 1/1 Running 0 29s

redis-cluster-4 1/1 Running 0 27s

redis-cluster-5 1/1 Running 0 24s

master1 ]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

redis-pv001 200M RWX Retain Bound default/redis-data-redis-cluster-4 55m

redis-pv002 200M RWX Retain Bound default/redis-data-redis-cluster-0 55m

redis-pv003 200M RWX Retain Bound default/redis-data-redis-cluster-2 55m

redis-pv004 200M RWX Retain Bound default/redis-data-redis-cluster-1 55m

redis-pv005 200M RWX Retain Bound default/redis-data-redis-cluster-3 55m

redis-pv006 200M RWX Retain Bound default/redis-data-redis-cluster-5 55m

master1 ]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

redis-data-redis-cluster-0 Bound redis-pv002 200M RWX 52s

redis-data-redis-cluster-1 Bound redis-pv004 200M RWX 50s

redis-data-redis-cluster-2 Bound redis-pv003 200M RWX 48s

redis-data-redis-cluster-3 Bound redis-pv005 200M RWX 46s

redis-data-redis-cluster-4 Bound redis-pv001 200M RWX 44s

redis-data-redis-cluster-5 Bound redis-pv006 200M RWX 41s

2.6、验证DNS是否解析正常

2.6.1、进入pod里面测试DNS是否正常

master1 ]# kubectl run -it client --image=192.168.10.33:80/k8s/busybox:latest --rm /bin/sh

/ # nslookup 10.244.3.26

Server: 10.96.0.10

Address: 10.96.0.10:53

26.3.244.10.in-addr.arpa name = redis-cluster-0.redis-service.default.svc.cluster.local

/ # nslookup -query=A redis-cluster-0.redis-service.default.svc.cluster.local

Server: 10.96.0.10

Address: 10.96.0.10:53

Name: redis-cluster-0.redis-service.default.svc.cluster.local

Address: 10.244.3.26

每个Pod都会得到集群内的一个DNS域名,格式为$(podname).$(service name).$(namespace).svc.cluster.local。在K8S集群内部,这些Pod就可以利用该域名互相通信。

2.6.2、集群pod的更新

若Redis Pod迁移或是重启(我们可以手动删除掉一个Redis Pod来测试),则IP是会改变的,但Pod的域名、SRV records、A record都不会改变。

2.7、查看当前所有redis节点的状态

pod_list=$(kubectl get pods -o wide | grep -v NAME | awk '{print $1}')

for i in $pod_list

do

Role=$(kubectl exec -it $i -- redis-cli info Replication | grep role)

echo "$i | $Role"

done

redis-cluster-0 | role:master

redis-cluster-1 | role:master

redis-cluster-2 | role:master

redis-cluster-3 | role:master

redis-cluster-4 | role:master

redis-cluster-5 | role:master

# 目前所有的节点状态都是 master状态

3、Redis集群的配置

3.1、集群环境

3.1.1、环境说明

创建好6个Redis Pod后,我们还需要利用常用的Redis-tribe工具进行集群的初始化。由于Redis集群必

须在所有节点启动后才能进行初始化,而如果将初始化逻辑写入Statefulset中,则是一件非常复杂而且低

效的行为。我们可以在K8S上创建一个额外的容器,专门用于进行K8S集群内部某些服务的管理控制。

3.1.2、准备一个创建redis集群的pod

master1 ]# kubectl run my-redis --image=192.168.10.33:80/k8s/redis:latest

apt -y install dnsutils

# 解析nginx pod节点的域名是否解析正常

root@my-redis:/data# dig +short redis-cluster-0.redis-service.default.svc.cluster.local

10.244.3.37

3.2、创建redis集群

3.2.1、初始化集群

centos /]# redis-cli --cluster create \

redis-cluster-0.redis-service.default.svc.cluster.local:6379 \

redis-cluster-1.redis-service.default.svc.cluster.local:6379 \

redis-cluster-2.redis-service.default.svc.cluster.local:6379 \

redis-cluster-3.redis-service.default.svc.cluster.local:6379 \

redis-cluster-4.redis-service.default.svc.cluster.local:6379 \

redis-cluster-5.redis-service.default.svc.cluster.local:6379 --cluster-replicas 1

参数详解:

--cluster create: 创建一个新的集群

--cluster-replicas 1: 创建的集群中每个主节点分配一个从节点,达到3主3从,后面写上所有redis

3.2.2、初始化打印的日志

>>> Performing hash slots allocation on 6 nodes...

Master[0] -> Slots 0 - 5460

Master[1] -> Slots 5461 - 10922

Master[2] -> Slots 10923 - 16383

Adding replica redis-cluster-4.redis-service.default.svc.cluster.local:6379 to redis-cluster-0.redis-service.default.svc.cluster.local:6379

Adding replica redis-cluster-5.redis-service.default.svc.cluster.local:6379 to redis-cluster-1.redis-service.default.svc.cluster.local:6379

Adding replica redis-cluster-3.redis-service.default.svc.cluster.local:6379 to redis-cluster-2.redis-service.default.svc.cluster.local:6379

M: f82e9a3c83d4be4e1b972404404ed93bd21b48b9 redis-cluster-0.redis-service.default.svc.cluster.local:6379

slots:[0-5460] (5461 slots) master

M: 908bdffca46d09d72f6e901f5feb357f50f53f07 redis-cluster-1.redis-service.default.svc.cluster.local:6379

slots:[5461-10922] (5462 slots) master

M: 76b3aef492ff7bd0aa616638ffa4c68836e4c3ad redis-cluster-2.redis-service.default.svc.cluster.local:6379

slots:[10923-16383] (5461 slots) master

S: c4284f25d9e6da46a1f3bd44f2c54cf7aa4114ef redis-cluster-3.redis-service.default.svc.cluster.local:6379

replicates 76b3aef492ff7bd0aa616638ffa4c68836e4c3ad

S: d92da259b1f6cdac7f7d263a8fdaa70c0b991b17 redis-cluster-4.redis-service.default.svc.cluster.local:6379

replicates f82e9a3c83d4be4e1b972404404ed93bd21b48b9

S: 57474cbc2189472c45a376ef7ab9dc8bb7e5f0cf redis-cluster-5.redis-service.default.svc.cluster.local:6379

replicates 908bdffca46d09d72f6e901f5feb357f50f53f07

Can I set the above configuration? (type 'yes' to accept): yes # 需要输入yes

>>> Nodes configuration updated

>>> Assign a different config epoch to each node

>>> Sending CLUSTER MEET messages to join the cluster

Waiting for the cluster to join

.

>>> Performing Cluster Check (using node redis-cluster-0.redis-service.default.svc.cluster.local:6379)

M: f82e9a3c83d4be4e1b972404404ed93bd21b48b9 redis-cluster-0.redis-service.default.svc.cluster.local:6379

slots:[0-5460] (5461 slots) master

1 additional replica(s)

S: 57474cbc2189472c45a376ef7ab9dc8bb7e5f0cf 10.244.4.110:6379

slots: (0 slots) slave

replicates 908bdffca46d09d72f6e901f5feb357f50f53f07

M: 76b3aef492ff7bd0aa616638ffa4c68836e4c3ad 10.244.3.27:6379

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

M: 908bdffca46d09d72f6e901f5feb357f50f53f07 10.244.4.108:6379

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

S: c4284f25d9e6da46a1f3bd44f2c54cf7aa4114ef 10.244.4.109:6379

slots: (0 slots) slave

replicates 76b3aef492ff7bd0aa616638ffa4c68836e4c3ad

S: d92da259b1f6cdac7f7d263a8fdaa70c0b991b17 10.244.3.28:6379

slots: (0 slots) slave

replicates f82e9a3c83d4be4e1b972404404ed93bd21b48b9

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

3.2.3、连到任意一个Redis Pod中检验一下

master1 ~]# kubectl exec -it redis-cluster-0 -- redis-cli cluster info

cluster_state:ok

cluster_slots_assigned:16384

cluster_slots_ok:16384

cluster_slots_pfail:0

cluster_slots_fail:0

cluster_known_nodes:6

cluster_size:3

cluster_current_epoch:6

cluster_my_epoch:1

cluster_stats_messages_ping_sent:2882

cluster_stats_messages_pong_sent:2904

cluster_stats_messages_sent:5786

cluster_stats_messages_ping_received:2899

cluster_stats_messages_pong_received:2882

cluster_stats_messages_meet_received:5

cluster_stats_messages_received:5786

total_cluster_links_buffer_limit_exceeded:0

master1 ~]# kubectl exec -it redis-cluster-0 -- redis-cli cluster nodes

7391b88a30017d3b7d36857154975b4f5d5a84a9 10.244.3.37:6379@16379 myself,master - 0 1680093329000 1 connected 0-5460

afce12fcbc1dcec256ec2936dce2276da2e49084 10.244.3.39:6379@16379 slave 7391b88a30017d3b7d36857154975b4f5d5a84a9 0 1680093331041 1 connected

97771edf5d274720628c74f5d73173f79238b041 10.244.4.114:6379@16379 slave 34da1d26fdf0c2e14c69b5f159e0c955f2d3f068 0 1680093330031 2 connected

3e8d901ed5c2ed8a3356e750175c9f2fb28940f0 10.244.3.38:6379@16379 master - 0 1680093330536 3 connected 10923-16383

9faaf3e1e88d0081f80a3512e3b3e7c9daac1430 10.244.4.113:6379@16379 slave 3e8d901ed5c2ed8a3356e750175c9f2fb28940f0 0 1680093330031 3 connected

34da1d26fdf0c2e14c69b5f159e0c955f2d3f068 10.244.4.112:6379@16379 master - 0 1680093331547 2 connected 5461-10922

3.2.4、查看Redis挂载的数据

register ~]# tree /nfs-data/pv/

/nfs-data/pv/

├── redis1

│ ├── appendonlydir

│ │ ├── appendonly.aof.2.base.rdb

│ │ ├── appendonly.aof.2.incr.aof

│ │ └── appendonly.aof.manifest

│ ├── dump.rdb

│ └── nodes.conf

├── redis2

│ ├── appendonlydir

│ │ ├── appendonly.aof.1.base.rdb

│ │ ├── appendonly.aof.1.incr.aof

│ │ └── appendonly.aof.manifest

│ ├── dump.rdb

│ └── nodes.conf

├── redis3

│ ├── appendonlydir

│ │ ├── appendonly.aof.1.base.rdb

│ │ ├── appendonly.aof.1.incr.aof

│ │ └── appendonly.aof.manifest

│ └── nodes.conf

├── redis4

│ ├── appendonlydir

│ │ ├── appendonly.aof.1.base.rdb

│ │ ├── appendonly.aof.1.incr.aof

│ │ └── appendonly.aof.manifest

│ └── nodes.conf

├── redis5

│ ├── appendonlydir

│ │ ├── appendonly.aof.2.base.rdb

│ │ ├── appendonly.aof.2.incr.aof

│ │ └── appendonly.aof.manifest

│ ├── dump.rdb

│ └── nodes.conf

└── redis6

├── appendonlydir

│ ├── appendonly.aof.2.base.rdb

│ ├── appendonly.aof.2.incr.aof

│ └── appendonly.aof.manifest

├── dump.rdb

└── nodes.conf

3.3、创建访问service

3.3.1、需求

前面我们创建了用于实现statefulset的headless service,但该service没有cluster IP,因此不能

用于外界访问.所以我们还需要创建一个service,专用于为Redis集群提供访问和负载均衡

3.3.2、定义资源配置清单

cat >statefulset-redis-access-service.yml<<'EOF'

apiVersion: v1

kind: Service

metadata:

name: statefulset-redis-access-service

labels:

app: redis

spec:

ports:

- name: redis-port

protocol: "TCP"

port: 6379

targetPort: 6379

selector:

app: redis

appCluster: redis-cluster

EOF

属性详解:

Service名称为 redis-access-service,在K8S集群中暴露6379端口,并且会对labels name为 app: redis或appCluster: redis-cluster的pod进行负载均衡。

3.3.3、应用资源配置清单

master1 ]# kubectl apply -f statefulset-redis-access-service.yml

service/statefulset-redis-access-service created

master1 ]# kubectl get svc -l app=redis

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

redis-service ClusterIP None <none> 6379/TCP 131m

statefulset-redis-access-service ClusterIP 10.102.56.62 <none> 6379/TCP 101s

3.4、测试集群

3.4.1、查询集群pod的角色

nodelist=$(kubectl get pod -o wide | grep -v NAME | awk '{print $1}')

for i in $nodelist

do

Role=$(kubectl exec -it $i -- redis-cli info Replication | grep slave0)

echo "$i | $Role"

done

redis-cluster-0 | slave0:ip=10.244.3.28,port=6379,state=online,offset=1666,lag=1

redis-cluster-1 | slave0:ip=10.244.4.110,port=6379,state=online,offset=1666,lag=0

redis-cluster-2 | slave0:ip=10.244.4.109,port=6379,state=online,offset=1680,lag=0

redis-cluster-3 |

redis-cluster-4 |

redis-cluster-5 |

3.4.2、查询备节点的IP地址

master1 ]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

redis-cluster-0 1/1 Running 0 89m 10.244.3.31 node1 <none> <none>

redis-cluster-1 1/1 Running 0 99m 10.244.4.108 node2 <none> <none>

redis-cluster-2 1/1 Running 0 99m 10.244.3.27 node1 <none> <none>

redis-cluster-3 1/1 Running 0 99m 10.244.4.109 node2 <none> <none>

redis-cluster-4 1/1 Running 0 99m 10.244.3.28 node1 <none> <none>

redis-cluster-5 1/1 Running 0 99m 10.244.4.110 node2 <none> <none>

master1 ]# kubectl exec -it redis-cluster-0 -- redis-cli ROLE

1) "master"

2) (integer) 1932

3) 1) 1) "10.244.3.28" # 这个是备节点的IP地址即redis-cluster-4

2) "6379"

3) "1932"

3.5、主从redis切换

3.5.1、删除其中一个主redis pod

# 原来的信息

master1 ]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

redis-cluster-0 1/1 Running 0 89m 10.244.3.31 node1 <none> <none>

...

# 删除pod

[root@master1 storage]# kubectl delete pod redis-cluster-0

pod "redis-cluster-0" deleted

# 此时redis-cluster-0 IP地址变了

[root@master1 storage]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

redis-cluster-0 1/1 Running 0 5s 10.244.3.35 node1 <none> <none>

3.5.2、查询IP地址变化,从节点是否会有变动

# 继续查看

nodelist=$(kubectl get pod -o wide | grep -v NAME | awk '{print $1}')

for i in $nodelist

do

Role=$(kubectl exec -it $i -- redis-cli info Replication | grep slave0)

echo "$i | $Role"

done

redis-cluster-0 | slave0:ip=10.244.3.28,port=6379,state=online,offset=266,lag=1

redis-cluster-1 | slave0:ip=10.244.4.110,port=6379,state=online,offset=2478,lag=1

redis-cluster-2 | slave0:ip=10.244.4.109,port=6379,state=online,offset=2492,lag=1

redis-cluster-3 |

redis-cluster-4 |

redis-cluster-5 |

总结:原来的主角色已经发生改变,但是生成新的主角色与之前的效果一致,继承了从属于它之前的从节点10.244.3.28

3.6、redis集群动态扩容

3.6.1、准备工作

我们现在这个集群中有6个节点三主三从,我现在添加两个pod节点,达到4主4从

# 添加nfs共享目录

mkdir /nfs-data/pv/redis{7..8}

chmod 777 -R /nfs-data/pv/*

cat >> /etc/exports <<-EOF

/nfs-data/pv/redis7 *(rw,all_squash)

/nfs-data/pv/redis8 *(rw,all_squash)

EOF

systemctl restart nfs

3.6.2、创建pv资源

echo -n ''>storage-statefulset-redis-pv.yml

for i in {7..8}

do

cat >>storage-statefulset-redis-pv.yml<<-EOF

---

apiVersion: v1

kind: PersistentVolume

metadata:

name: redis-pv00$i

spec:

capacity:

storage: 200M

accessModes:

- ReadWriteMany

nfs:

server: 192.168.10.33

path: /nfs-data/pv/redis$i

EOF

done

kubectl apply -f storage-statefulset-redis-pv.yml

master1 ]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

redis-pv007 200M RWX Retain Available 3s

redis-pv008 200M RWX Retain Available 96s

3.6.3、添加redis pod节点

# 更改redis的yml文件里面的replicas:字段8

kubectl patch statefulsets redis-cluster -p '{"spec":{"replicas":8}}'

kubectl get pod -o wide

master1 ]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

redis-cluster-0 1/1 Running 0 45m 10.244.3.35 node1 <none> <none>

redis-cluster-1 1/1 Running 0 148m 10.244.4.108 node2 <none> <none>

redis-cluster-2 1/1 Running 0 147m 10.244.3.27 node1 <none> <none>

redis-cluster-3 1/1 Running 0 147m 10.244.4.109 node2 <none> <none>

redis-cluster-4 1/1 Running 0 147m 10.244.3.28 node1 <none> <none>

redis-cluster-5 1/1 Running 0 147m 10.244.4.110 node2 <none> <none>

redis-cluster-6 1/1 Running 0 39s 10.244.3.36 node1 <none> <none>

redis-cluster-7 1/1 Running 0 36s 10.244.4.111 node2 <none> <none>

3.6.4、选择一个redis pod进入容器增加redis集群节点

添加集群节点 命令格式:

redis-cli --cluster add-node 新节点ip:端口 集群存在的节点ip:端口

# 增加的命令

redis-cli --cluster add-node \

redis-cluster-6.redis-service.default.svc.cluster.local:6379 \

redis-cluster-0.redis-service.default.svc.cluster.local:6379

redis-cli --cluster add-node \

redis-cluster-7.redis-service.default.svc.cluster.local:6379 \

redis-cluster-0.redis-service.default.svc.cluster.local:6379

注意:

add-node后面跟的是新节点的信息,后面是以前集群中的任意 一个节点

3.6.5、检查redis集群状态

# 最新的如下:

master1 ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

redis-cluster-6 1/1 Running 0 98s 10.244.3.43 node1 <none> <none>

redis-cluster-7 1/1 Running 0 97s 10.244.4.115 node2 <none> <none>

# 新增的如下两个

[root@master1 ~]# kubectl exec -it redis-cluster-0 -- redis-cli cluster nodes | grep -E '10.244.3.43|10.244.4.115'

2f825dd5673df6feef315588f819416456b28212 10.244.3.43:6379@16379 master - 0 1680095314000 7 connected

3e96459fef24cd112e299019a36bbba25b0a004c 10.244.4.115:6379@16379 master - 0 1680095314000 0 connected

确认集群节点信息,可以看到新的节点已加入到集群中,默认为master角色

3.6.6、redis-cluster-7设置为master

# 由于要设置redis-cluster-7(10.244.4.115)为master节点,就要为它分配槽位,新加入的节点是空的没有槽位的,

# 需要登录到集群的一个主角色上面调整槽位,然后为其分配从角色节点即可。

redis-cli --cluster reshard redis-cluster-7.redis-service.default.svc.cluster.local:6379

# 这里是指给他分配多少槽位

How many slots do you want to move (from 1 to 16384)? 4096

# 这里是指分配给谁,填写集群node的ID,给redis-cluster-7分配,就写redis-cluster-7节点的ID

What is the receiving node ID? 85cd6b08eb87bfd0e604c901e698599b963c7c68

# 这里是指从哪些节点分配,all表示从所有节点

Please enter all the source node IDs.

Type 'all' to use all the nodes as source nodes for the hash slots.

Type 'done' once you entered all the source nodes IDs.

Source node #1: all

# 上面是已经重新分配好的槽位,是否要执行上面的分配计划,这里输yes

Do you want to proceed with the proposed reshard plan (yes/no)? yes

master1 ~]# kubectl exec -it redis-cluster-0 -- redis-cli cluster nodes | grep -E '10.244.3.43|10.244.4.115'

2f825dd5673df6feef315588f819416456b28212 10.244.3.43:6379@16379 master - 0 1680095757533 7 connected

3e96459fef24cd112e299019a36bbba25b0a004c 10.244.4.115:6379@16379 master - 0 1680095758341 8 connected 0-1364 5461-6826 10923-12287

10.244.4.115:6379@16379 是master角色,分配的插槽范围:

0-1364(1035个) 5461-6826(1366个) 10923-12287(1365个) 共计4096个

3.6.7、redis-cluster-6设置为从节点slave

# node id 是master节点,这个是redis-cluster-7

# 登陆是从节点的上执行

master1 ~]# kubectl exec -it redis-cluster-6 -- redis-cli cluster replicate 3e96459fef24cd112e299019a36bbba25b0a004c

OK

master1 ~]# kubectl exec -it redis-cluster-0 -- redis-cli cluster nodes | grep -E '10.244.3.43|10.244.4.115'

2f825dd5673df6feef315588f819416456b28212 10.244.3.43:6379@16379 slave 3e96459fef24cd112e299019a36bbba25b0a004c 0 1680095987028 8 connected

3e96459fef24cd112e299019a36bbba25b0a004c 10.244.4.115:6379@16379 master - 0 1680095988545 8 connected 0-1364 5461-6826 10923-12287

3.6.8、尝试数据操作

# redis-cluster-6 写入数据

master1 ~]# kubectl exec -it redis-cluster-6 -- redis-cli -c

127.0.0.1:6379> set redis-cluster statefulset

-> Redirected to slot [6704] located at 10.244.4.115:6379

OK

10.244.4.115:6379> exit

# redis-cluster-7 读取数据

[root@master1 ~]# kubectl exec -it redis-cluster-7 -- redis-cli -c

127.0.0.1:6379> get redis-cluster

"statefulset"

127.0.0.1:6379> exit

注意:这里连接redis集群一定要用 -c 参数来启动集群模式,否则就会发生如下报错

root@master1:~/statefulset# kubectl exec -it redis-cluster-2 -- redis-cli

127.0.0.1:6379> set nihao nihao

(error) MOVED 11081 10.244.0.8:6379

3.7、redis集群-动态缩容

3.7.1、缩容的原则

删除从节点:

直接删除即可,就算有数据也无所谓。

删除主节点:

如果主节点有从节点,将从节点转移到其他主节点

如果主节点有slot,去掉分配的slot,然后在删除主节点

3.7.2、删除的命令

删除命令:

redis-cli --cluster del-node 删除节点的ip:port 删除节点的id

转移命令:

redis-cli --cluster reshard 转移节点的ip:port

3.7.3、删除一个从节点

# 删除前

master1 ~]# kubectl exec -it redis-cluster-0 -- redis-cli cluster nodes | grep 10.244.3.43

2f825dd5673df6feef315588f819416456b28212 10.244.3.43:6379@16379 myself,slave 3e96459fef24cd112e299019a36bbba25b0a004c 0 1680096658000 8 connected

# 执行删除

master1 ~]# kubectl exec -it redis-cluster-0 -- redis-cli --cluster del-node 10.244.3.43:6379 2f825dd5673df6feef315588f819416456b28212

# 删除后

master1 ~]# kubectl exec -it redis-cluster-0 -- redis-cli cluster nodes | grep 10.244.3.43

3.7.4、删除一个主节点

kubectl exec -it redis-cluster-0 -- redis-cli --cluster reshard redis-cluster-7.redis-service.default.svc.cluster.local:6379

master1 ~]# kubectl exec -it redis-cluster-0 -- redis-cli cluster nodes | grep -E '10.244.3.43|10.244.4.115'

3e96459fef24cd112e299019a36bbba25b0a004c 10.244.4.115:6379@16379 master - 0 1680096954562 8 connected 0-1364 5461-6826 10923-12287

master1 ~]# kubectl exec -it redis-cluster-0 -- redis-cli cluster nodes

3e8d901ed5c2ed8a3356e750175c9f2fb28940f0 10.244.3.38:6379@16379 master - 0 1680097081135 3 connected 12288-16383 # slot 转移至redis-cluster-2

# 将10.244.4.115:6379 转移至redis-cluster-2 node id也是填redis-cluster-2的node id

master1 ~]# kubectl exec -it redis-cluster-0 -- redis-cli --cluster reshard 10.244.4.115:6379

# 转移完成,则删除节点

kubectl exec -it redis-cluster-2 -- redis-cli --cluster del-node 10.244.4.115:6379 3e96459fef24cd112e299019a36bbba25b0a004c