Assignment ①

Requirements:

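- Become proficient with Selenium: locating HTML elements, scraping Ajax-loaded page data, and waiting for HTML elements. Use the Selenium framework with MySQL storage to scrape stock data from three boards: "沪深 A 股" (Shanghai and Shenzhen A-shares), "上证 A 股" (Shanghai A-shares) and "深证 A 股" (Shenzhen A-shares).

- Output: store the data in MySQL and output it in the required format. Column names must be in English, e.g. serial number id, stock code bStockNo, …; students design the headers themselves.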
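To make the English-header requirement concrete, here is a small sketch that turns the space-separated text of one table row into a record keyed by English column names. It assumes the row layout described in the comment inside `get_info()`: rank, code, name, three link cells, then 14 quote fields. The column names reuse the `stock_info` columns from the code. The sample row is made up and is not real market data.

```python
# English column names for one row of the A-share table,
# matching the stock_info columns used in the code.
COLUMNS = (
    "ranks", "code", "name", "price", "changes", "change_amount",
    "volume", "turnover", "amplitude", "high", "low", "today_open",
    "yesterday_close", "volume_ratio", "turnover_rate", "dynamic_pe", "pb_ratio",
)

# Field positions after splitting the row text on whitespace. Positions 3-5
# are the three link cells and are skipped (assumed layout).
POSITIONS = (0, 1, 2) + tuple(range(6, 20))


def parse_row(row_text: str) -> dict:
    """Map one whitespace-separated row string to {english_column: value}."""
    parts = row_text.split()
    if len(parts) < 20:
        raise ValueError(f"expected at least 20 fields, got {len(parts)}")
    return {col: parts[pos] for col, pos in zip(COLUMNS, POSITIONS)}


if __name__ == "__main__":
    # Hypothetical row text for illustration only.
    sample = ("1 600000 SampleCo 股吧 数据流 数据 10.00 1.00% 0.10 "
              "12345 1.23亿 2.00% 10.10 9.90 9.95 9.90 1.10 0.50% 5.00 0.60")
    record = parse_row(sample)
    print(record["code"], record["price"], record["pb_ratio"])  # 600000 10.00 0.60
```

Keeping the column names in one tuple means the same names can drive both the `CREATE TABLE` and the `INSERT` statements, so the two cannot drift apart.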

Code

from selenium import webdriver
from selenium.webdriver.support.wait import WebDriverWait
from selenium.webdriver.support import expected_conditions as EC
from selenium.webdriver.common.by import By
from selenium.webdriver.chrome.options import Options
import time
import pymysql

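# Eastmoney board list pages (the URL fragment selects the board)
sh_url = 'http://quote.eastmoney.com/center/gridlist.html#sh_a_board'  # 上证 A 股 (Shanghai A-shares)
hs_url = 'http://quote.eastmoney.com/center/gridlist.html#hs_a_board'  # 沪深 A 股 (Shanghai + Shenzhen A-shares)
sz_url = 'http://quote.eastmoney.com/center/gridlist.html#sz_a_board'  # 深证 A 股 (Shenzhen A-shares)

# Choose which board to query
choice = input('Board to query:\n1. Shanghai A-shares\n2. Shanghai + Shenzhen A-shares\n3. Shenzhen A-shares\n')
if choice == '1':
    base_url = sh_url
elif choice == '2':
    base_url = hs_url
elif choice == '3':
    base_url = sz_url
else:
    print('Invalid input!')
    raise SystemExit(1)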

chrome_options = Options()
chrome_options.add_experimental_option('detach', True)
chrome_options.add_argument('--headless')     # run Chrome without a visible window
chrome_options.add_argument('--disable-gpu')
driver = webdriver.Chrome(options=chrome_options)

def get_info(base_url, pages=1):
    # Row layout: rank, code, name, three link cells (股吧 / 数据流 / 数据), latest price,
    # change %, change amount, volume (lots), turnover, amplitude, high, low, open,
    # previous close, volume ratio, turnover rate, P/E (dynamic), P/B
    rank = []
    code = []
    name = []
    price = []
    change = []
    change_amount = []
    volume = []
    turnover = []
    amplitude = []
    high = []
    low = []
    today_open = []
    yesterday_close = []
    volume_ratio = []
    turnover_rate = []
    dynamic_pe = []
    pb_ratio = []
    row_xpath = '/html/body/div[1]/div[2]/div[2]/div[5]/div/table/tbody/tr'
    next_xpath = '/html/body/div[1]/div[2]/div[2]/div[5]/div/div[2]/div/a[2]'
    driver.get(base_url)
    for page in range(1, pages + 1):
        # Wait until the Ajax-rendered table rows are present
        WebDriverWait(driver, 10).until(EC.presence_of_element_located((By.XPATH, row_xpath)))
        # Read every row on the current page
        info = driver.find_elements(By.XPATH, row_xpath)
        for i in info:
            print(i.text)
            part = i.text.split(' ')
            rank.append(part[0])
            code.append(part[1])
            name.append(part[2])
            # part[3:6] are the three link cells and are skipped
            price.append(part[6])
            change.append(part[7])
            change_amount.append(part[8])
            volume.append(part[9])
            turnover.append(part[10])
            amplitude.append(part[11])
            high.append(part[12])
            low.append(part[13])
            today_open.append(part[14])
            yesterday_close.append(part[15])
            volume_ratio.append(part[16])
            turnover_rate.append(part[17])
            dynamic_pe.append(part[18])
            pb_ratio.append(part[19])
        # Click "next page", except after the last requested page
        if page < pages:
            driver.find_element(By.XPATH, next_xpath).click()
            time.sleep(3)  # give the Ajax request time to replace the rows
    driver.quit()
    return (rank, code, name, price, change, change_amount, volume, turnover, amplitude,
            high, low, today_open, yesterday_close, volume_ratio, turnover_rate,
            dynamic_pe, pb_ratio)

# Write the data to the database
def write_to_mysql(rank, code, name, price, change, change_amount, volume, turnover, amplitude,
                   high, low, today_open, yesterday_close, volume_ratio, turnover_rate,
                   dynamic_pe, pb_ratio):
    # Open the connection and select the `data` database
    db = pymysql.connect(host='localhost', user='root', password='qazqaz123',
                         database='data', autocommit=True)
    cursor = db.cursor()
    # Drop the table if it already exists, then recreate it
    cursor.execute("DROP TABLE IF EXISTS stock_info")
    sql = """CREATE TABLE stock_info (
        ranks CHAR(20),
        code CHAR(20),
        name CHAR(20),
        price CHAR(20),
        changes CHAR(20),
        change_amount CHAR(20),
        volume CHAR(20),
        turnover CHAR(20),
        amplitude CHAR(20),
        high CHAR(20),
        low CHAR(20),
        today_open CHAR(20),
        yesterday_close CHAR(20),
        volume_ratio CHAR(20),
        turnover_rate CHAR(20),
        dynamic_pe CHAR(20),
        pb_ratio CHAR(20))"""
    cursor.execute(sql)
    # Insert all rows with one parameterized executemany call
    sql = ('insert into stock_info(ranks,code,name,price,changes,change_amount,volume,turnover,'
           'amplitude,high,low,today_open,yesterday_close,volume_ratio,turnover_rate,dynamic_pe,pb_ratio) '
           'values(%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s)')
    rows = list(zip(rank, code, name, price, change, change_amount, volume, turnover, amplitude,
                    high, low, today_open, yesterday_close, volume_ratio, turnover_rate,
                    dynamic_pe, pb_ratio))
    cursor.executemany(sql, rows)
    # Close the connection
    db.close()

if __name__ == '__main__':
    pages = input('Number of pages to scrape: ')
    data = get_info(base_url, int(pages))  # tuple of 17 parallel lists
    write_to_mysql(*data)
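Writing seventeen `%s` placeholders by hand is error-prone. The sketch below builds the INSERT statement from a column tuple and inserts every row with a single `executemany` call. So that it runs without a MySQL server, it uses the standard-library `sqlite3` module as a stand-in; sqlite3 uses `?` as its placeholder instead of PyMySQL's `%s`. The helper names `build_insert` and `insert_rows` are hypothetical.

```python
import sqlite3


def build_insert(table: str, columns: tuple, placeholder: str = "%s") -> str:
    """Build 'INSERT INTO table(c1,...) VALUES (p,...)' for the given columns.

    Use placeholder='%s' for PyMySQL and '?' for sqlite3. The table and column
    names must be trusted constants, because they are formatted into the SQL.
    """
    cols = ",".join(columns)
    marks = ",".join([placeholder] * len(columns))
    return f"INSERT INTO {table}({cols}) VALUES ({marks})"


def insert_rows(conn, table: str, columns: tuple, rows: list, placeholder: str) -> None:
    """Insert all rows with one executemany call, then commit."""
    cur = conn.cursor()
    cur.executemany(build_insert(table, columns, placeholder), rows)
    conn.commit()


if __name__ == "__main__":
    columns = ("ranks", "code", "name", "price")  # a subset, for brevity
    conn = sqlite3.connect(":memory:")  # stand-in for the MySQL 'data' database
    conn.execute("CREATE TABLE stock_info (ranks TEXT, code TEXT, name TEXT, price TEXT)")
    # Parallel lists like those returned by get_info(), zipped into row tuples
    rank, code, name, price = ["1", "2"], ["600000", "000001"], ["A", "B"], ["10.00", "11.00"]
    insert_rows(conn, "stock_info", columns, list(zip(rank, code, name, price)), "?")
    print(conn.execute("SELECT COUNT(*) FROM stock_info").fetchone()[0])  # 2
    print(build_insert("stock_info", columns))
```

With PyMySQL, the same call becomes `cursor.executemany(build_insert('stock_info', columns), rows)` using the default `%s` placeholder.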

Results

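Reflections

This assignment was a review of basic Selenium operations: locating elements by XPath, explicitly waiting for Ajax-loaded rows, and paging through results by clicking "next page".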

Assignment ②

Requirements

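- Become proficient with Selenium: locating HTML elements, simulating a user login, scraping Ajax-loaded page data, and waiting for HTML elements.

- Use the Selenium framework with MySQL to scrape course information from China University MOOC (icourse163.org): course ID, course name, school, lead instructor, team members, number of participants, course progress, and course description.

- Output: store the data in MySQL and output it in the required format.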
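Both assignments depend on explicit waits (`WebDriverWait(driver, 10).until(...)`). An explicit wait polls a condition repeatedly until the condition returns something truthy or the timeout expires. The pure-Python function below illustrates only that idea and is not Selenium's implementation. `wait_until` is a hypothetical name. The default 0.5 s poll interval mirrors WebDriverWait's default `poll_frequency`. `LookupError` stands in for the `NoSuchElementException` that WebDriverWait ignores by default.

```python
import time


def wait_until(condition, timeout=10.0, poll=0.5, ignored=(LookupError,)):
    """Poll `condition` until it returns a truthy value or `timeout` seconds pass.

    Exceptions listed in `ignored` are treated as "not ready yet".
    Raises TimeoutError if the condition never becomes truthy.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            value = condition()
            if value:
                return value
        except ignored:
            pass
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} s")
        time.sleep(poll)


if __name__ == "__main__":
    # Simulated Ajax content that only "appears" on the third poll.
    calls = {"n": 0}

    def rows_loaded():
        calls["n"] += 1
        if calls["n"] < 3:
            raise LookupError("rows not rendered yet")
        return ["row 1", "row 2"]

    print(wait_until(rows_loaded, timeout=2, poll=0.01))  # ['row 1', 'row 2']
```

This also explains why an explicit wait is usually preferable to a fixed `time.sleep(n)`. The wait returns as soon as the element exists, and it fails loudly if the element never appears.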

Code

from selenium import webdriver
from selenium.webdriver.support.wait import WebDriverWait
from selenium.webdriver.support import expected_conditions as EC
from selenium.webdriver.common.by import By
from selenium.webdriver.chrome.options import Options
import time
import pymysql

base_url = 'https://www.icourse163.org/'
# base_url = 'https://www.icourse163.org/channel/2001.htm'

chrome_options = Options()
chrome_options.add_experimental_option('detach', True)
# chrome_options.add_argument('--headless')
chrome_options.add_argument('--disable-gpu')
driver = webdriver.Chrome(options=chrome_options)
driver.get(base_url)

def login():
    login_xpath = '/html/body/div[4]/div[1]/div/div/div/div/div[7]/div[2]/div/div/div/a'
    # Wait for the login link on the home page, then click it
    WebDriverWait(driver, 10).until(EC.presence_of_element_located((By.XPATH, login_xpath)))
    driver.find_element(By.XPATH, login_xpath).click()
    # The login form is inside an iframe, so switch into it first
    driver.switch_to.frame(0)
    time.sleep(5)
    inputUserName = driver.find_element(By.XPATH, '/html/body/div[2]/div[2]/div[2]/form/div/div[2]/div[2]/input')
    inputUserName.send_keys("18596883799")
    inputKey = driver.find_element(By.XPATH, '/html/body/div[2]/div[2]/div[2]/form/div/div[4]/div[2]/input[2]')
    inputKey.send_keys('qazqaz123')
    driver.find_element(By.XPATH, '/html/body/div[2]/div[2]/div[2]/form/div/div[6]/a').click()
    # Leave the iframe and return to the top-level document
    driver.switch_to.default_content()
    time.sleep(2)
    # Click the first button ("agree") of the dialog shown after login
    agree_xpath = '/html/body/div[4]/div[3]/div[3]/button[1]'
    WebDriverWait(driver, 10).until(EC.presence_of_element_located((By.XPATH, agree_xpath)))
    driver.find_element(By.XPATH, agree_xpath).click()

def down():
    # Open the course list; it opens in a new window
    driver.find_element(By.XPATH, '/html/body/div[4]/div[2]/div[1]/div/div/div[1]/div[1]/div[1]/span[1]/a').click()
    name = []
    university = []
    lecturer = []
    number = []
    times = []
    brief = []
    time.sleep(2)
    # Switch to the newest window, i.e. the course list
    driver.switch_to.window(driver.window_handles[-1])
    info = driver.find_elements(By.XPATH, '/html/body/div[4]/div[2]/div/div/div/div[2]/div[2]/div/div/div[2]/div[1]/div')
    brief_xpath = '/html/body/div[4]/div[2]/div[2]/div[2]/div[1]/div[1]/div[2]/div[2]/div[1]'
    for i in info:
        # Card text lines used here: 1 name, 2 school, 3 lecturer, 4 participants, 5 progress
        data = i.text.split('\n')
        name.append(data[1])
        university.append(data[2])
        lecturer.append(data[3])
        number.append(data[4])
        times.append(data[5])
        print(data[1])
        # If the card cannot be opened, store an empty brief so the lists stay aligned
        try:
            i.click()
        except Exception:
            brief.append('')
            continue
        # The course page opens in a new window; switch to it
        driver.switch_to.window(driver.window_handles[-1])
        # Wait for the course introduction to load
        time.sleep(3)
        WebDriverWait(driver, 10).until(EC.presence_of_element_located((By.XPATH, brief_xpath)))
        brief.append(driver.find_element(By.XPATH, brief_xpath).text)
        # Close the course page and go back to the course list window
        driver.close()
        driver.switch_to.window(driver.window_handles[-1])
    return name, university, lecturer, number, times, brief

# Database operations
def insert(name, university, lecturer, number, times, brief):
    # Open the connection and select the `data` database
    db = pymysql.connect(host='localhost', user='root', password='qazqaz123',
                         database='data', autocommit=True)
    cursor = db.cursor()
    # Drop the table if it already exists, then recreate it
    cursor.execute("DROP TABLE IF EXISTS mooc")
    # VARCHAR(255): course and school names can be longer than 20 characters
    sql = """CREATE TABLE mooc (
        name VARCHAR(255),
        university VARCHAR(255),
        lecturer VARCHAR(255),
        number VARCHAR(255),
        times VARCHAR(255),
        brief TEXT)"""
    cursor.execute(sql)
    # Insert one row per course
    sql = 'insert into mooc(name,university,lecturer,number,times,brief) values(%s,%s,%s,%s,%s,%s)'
    cursor.executemany(sql, list(zip(name, university, lecturer, number, times, brief)))
    db.close()

if __name__ == '__main__':
    login()
    name, university, lecturer, number, times, brief = down()
    insert(name, university, lecturer, number, times, brief)
    driver.quit()
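In `down()`, each course card is split on newlines and indexed directly, so a card with fewer lines raises `IndexError`. Every list must also receive exactly one entry per card, or the rows in the `mooc` table stop lining up. The sketch below is a more defensive parser that returns one complete record per card and fills missing fields with empty strings. The field order (lines 1-5: name, school, lecturer, participants, progress) is the one the code relies on. The `Course` class and `parse_card` are hypothetical names, and the sample card text is invented.

```python
from dataclasses import dataclass, astuple


@dataclass
class Course:
    name: str = ""
    university: str = ""
    lecturer: str = ""
    number: str = ""   # participant count, kept as the displayed text
    times: str = ""    # course progress text
    brief: str = ""    # filled in later from the course detail page


def parse_card(card_text: str) -> Course:
    """Parse a course card's text: line 0 is ignored, lines 1-5 map to fields."""
    lines = card_text.split("\n")
    fields = (lines[1:6] + [""] * 5)[:5]  # pad so short cards don't raise IndexError
    return Course(*fields)


if __name__ == "__main__":
    cards = [
        "tag\nPython Programming\nSome University\nTeacher A\n1000 participants\nIn progress",
        "tag\nShort Card",  # a card with missing fields
    ]
    courses = [parse_card(t) for t in cards]
    courses[0].brief = "Course introduction text"
    # One tuple per course, ready for cursor.executemany(...)
    rows = [astuple(c) for c in courses]
    print(rows[1])  # ('Short Card', '', '', '', '', '')
```

Keeping each course in one record, instead of six parallel lists, makes misalignment impossible: a failed click simply leaves that record's `brief` empty.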

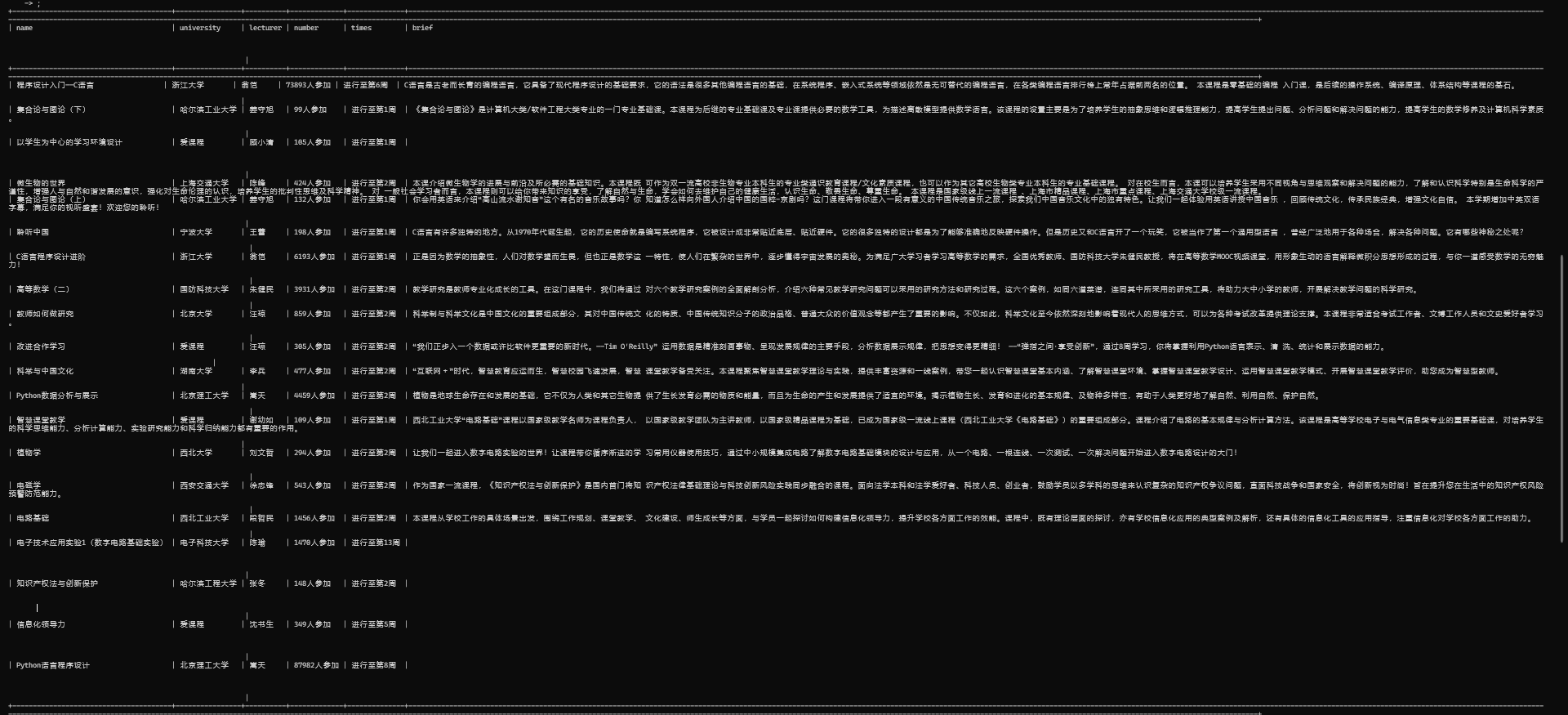

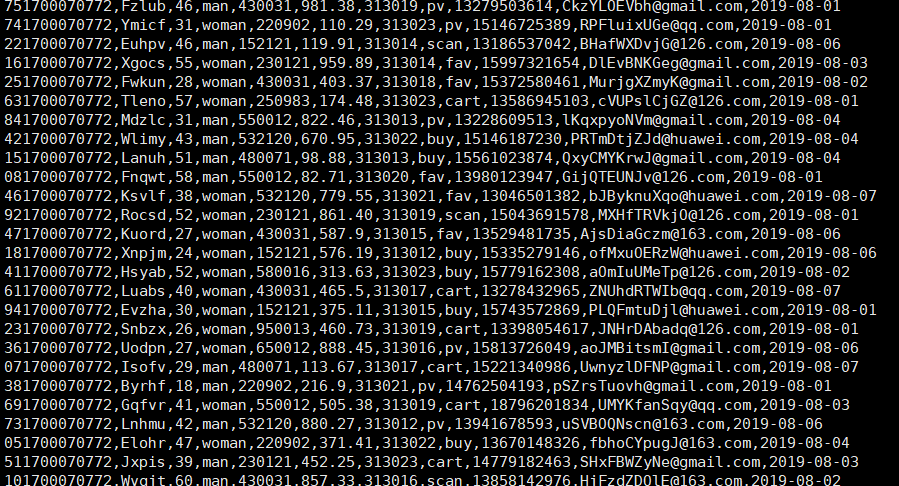

Results

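Reflections

Keep track of the context you switch to: work out whether the target is an iframe, a new page (window or tab), or a pop-up dialog, because each one is handled differently.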
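The reflection above names the main pitfall of this assignment. An iframe (the login form) needs `driver.switch_to.frame(...)` and, afterwards, `driver.switch_to.default_content()`. A link that opens a new tab needs `driver.switch_to.window(handle)`. The sketch below wraps both cases in context managers so the switch back cannot be forgotten. `in_frame`, `in_new_window` and `FakeDriver` are hypothetical names. The driver members used are Selenium's `switch_to.frame`, `switch_to.default_content`, `switch_to.window`, `window_handles`, `current_window_handle` and `close`. A tiny fake driver stands in for Chrome so the example runs without a browser.

```python
from contextlib import contextmanager


@contextmanager
def in_frame(driver, frame):
    """Switch into an iframe; always return to the top-level document afterwards."""
    driver.switch_to.frame(frame)
    try:
        yield driver
    finally:
        driver.switch_to.default_content()


@contextmanager
def in_new_window(driver, old_handles):
    """Switch to a window opened after `old_handles` was recorded, then close it."""
    original = driver.current_window_handle
    new = [h for h in driver.window_handles if h not in old_handles]
    if not new:
        raise RuntimeError("no new window was opened")
    driver.switch_to.window(new[0])
    try:
        yield driver
    finally:
        driver.close()
        driver.switch_to.window(original)


# Minimal fake driver so the sketch runs without a browser
class _FakeSwitchTo:
    def __init__(self, drv):
        self._drv = drv

    def frame(self, ref):
        self._drv.log.append(f"frame:{ref}")

    def default_content(self):
        self._drv.log.append("default")

    def window(self, handle):
        self._drv.current_window_handle = handle
        self._drv.log.append(f"window:{handle}")


class FakeDriver:
    def __init__(self):
        self.window_handles = ["main"]
        self.current_window_handle = "main"
        self.log = []
        self.switch_to = _FakeSwitchTo(self)

    def close(self):
        # Like Selenium's close(): closes the current window
        self.window_handles.remove(self.current_window_handle)
        self.log.append("close")


if __name__ == "__main__":
    drv = FakeDriver()
    with in_frame(drv, 0):
        pass  # e.g. fill in the login form here
    before = list(drv.window_handles)
    drv.window_handles.append("course")  # simulate a click that opens a new tab
    with in_new_window(drv, before):
        pass  # e.g. read the course introduction here
    print(drv.log)  # ['frame:0', 'default', 'window:course', 'close', 'window:main']
```

With a real `webdriver.Chrome`, `login()` could fill in the form inside `with in_frame(driver, 0):`. In `down()`, the loop could record `driver.window_handles` before `i.click()` and then read the brief inside `with in_new_window(driver, before):`. You may first need to wait for the new handle, for example with `WebDriverWait(driver, 10).until(EC.new_window_is_opened(before))`. Picking the new handle by set difference avoids relying on the order of `window_handles`, which the WebDriver specification leaves unspecified.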

Assignment ③

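Requirements

- Get to know big-data cloud services and become familiar with Xshell.

- Complete the tasks in the document 华为云_大数据实时分析处理实验手册-Flume 日志采集实验(部分)v2.docx (Huawei Cloud real-time big-data analysis lab manual: Flume log collection experiment, partial, v2), i.e. the 5 tasks below. See the document for the detailed steps.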

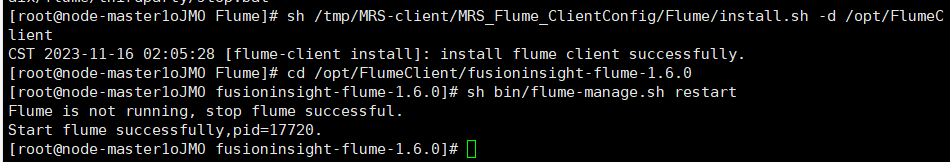

Task 1: Generate test data with a Python script

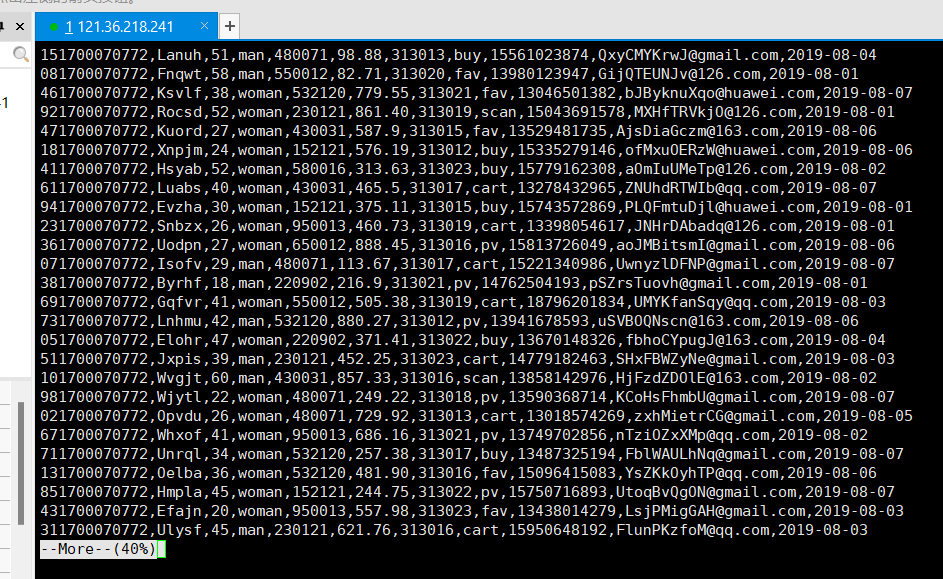
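The lab manual's own script is not reproduced in this post. To give a rough idea of what generating test data with a Python script involves, here is a hypothetical generator that appends random comma-separated log lines to a file, which a Flume source could later read. The field layout (timestamp, user, action, duration), the values and the function names are assumptions made for illustration. They are not the manual's format.

```python
import random
import time
from datetime import datetime

# Hypothetical field values; the manual's real schema is not shown here.
USERS = ["u001", "u002", "u003"]
ACTIONS = ["login", "search", "click", "logout"]


def make_line(rng: random.Random) -> str:
    """Return one CSV log line: timestamp,user,action,duration_ms."""
    ts = datetime.now().strftime("%Y-%m-%d %H:%M:%S")
    return f"{ts},{rng.choice(USERS)},{rng.choice(ACTIONS)},{rng.randint(1, 5000)}"


def generate(path: str, count: int, interval: float = 0.0, seed=None) -> None:
    """Append `count` lines to `path`, optionally pausing `interval` seconds between lines."""
    rng = random.Random(seed)
    with open(path, "a", encoding="utf-8") as f:
        for _ in range(count):
            f.write(make_line(rng) + "\n")
            f.flush()  # make each line visible to a tailing reader right away
            if interval:
                time.sleep(interval)


if __name__ == "__main__":
    # Print a few sample lines; call generate("some/path.log", 100, interval=0.5)
    # to write a stream of lines to a file instead.
    rng = random.Random(42)
    for _ in range(3):
        print(make_line(rng))
```

Writing with a small `interval` produces a slow, steady stream of lines, which makes it easy to watch records arrive downstream while testing the collection pipeline.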

Task 2: Configure Kafka

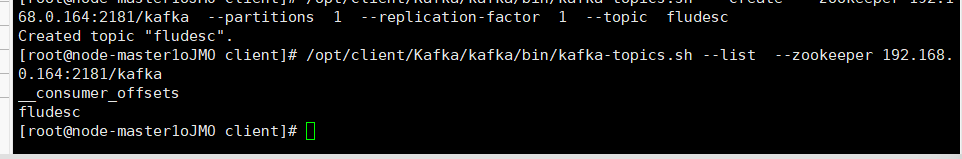

Task 3: Install the Flume client

Task 4: Configure Flume to collect data

Reflections

Gained a preliminary understanding of how work is done on cloud servers.