Notes on a Hive runtime error

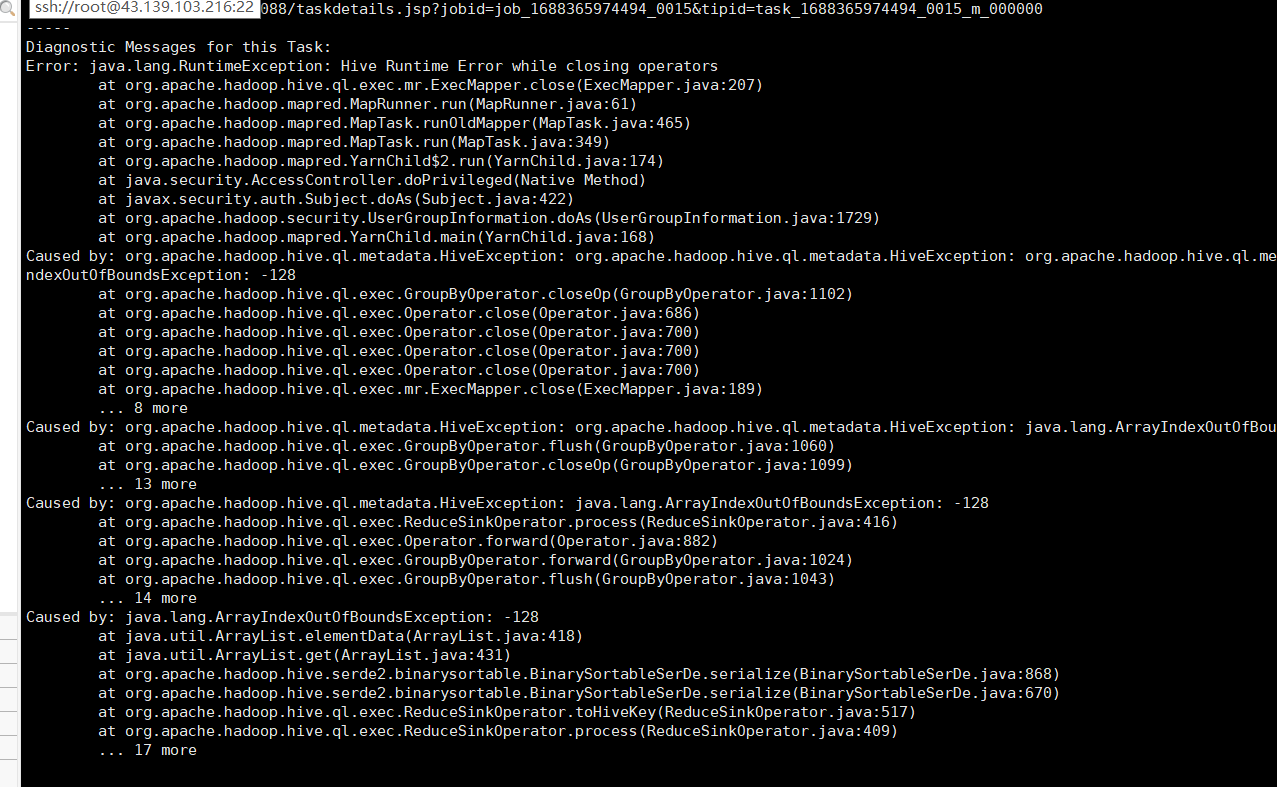

    Error: java.lang.RuntimeException: Hive Runtime Error while closing operators
        at org.apache.hadoop.hive.ql.exec.mr.ExecMapper.close(ExecMapper.java:207)
        at org.apache.hadoop.mapred.MapRunner.run(MapRunner.java:61)
        at org.apache.hadoop.mapred.MapTask.runOldMapper(MapTask.java:465)
        at org.apache.hadoop.mapred.MapTask.run(MapTask.java:349)
        at org.apache.hadoop.mapred.YarnChild$2.run(YarnChild.java:174)
        at java.security.AccessController.doPrivileged(Native Method)
        at javax.security.auth.Subject.doAs(Subject.java:422)
        at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1729)
        at org.apache.hadoop.mapred.YarnChild.main(YarnChild.java:168)
    Caused by: org.apache.hadoop.hive.ql.metadata.HiveException: org.apache.hadoop.hive.ql.metadata.HiveException: org.apache.hadoop.hive.ql.metadata.HiveException: java.lang.ArrayIndexOutOfBoundsException: -128
        at org.apache.hadoop.hive.ql.exec.GroupByOperator.closeOp(GroupByOperator.java:1102)
        at org.apache.hadoop.hive.ql.exec.Operator.close(Operator.java:686)
        at org.apache.hadoop.hive.ql.exec.Operator.close(Operator.java:700)
        at org.apache.hadoop.hive.ql.exec.Operator.close(Operator.java:700)
        at org.apache.hadoop.hive.ql.exec.Operator.close(Operator.java:700)
        at org.apache.hadoop.hive.ql.exec.mr.ExecMapper.close(ExecMapper.java:189)
        ... 8 more
    Caused by: org.apache.hadoop.hive.ql.metadata.HiveException: org.apache.hadoop.hive.ql.metadata.HiveException: java.lang.ArrayIndexOutOfBoundsException: -128
        at org.apache.hadoop.hive.ql.exec.GroupByOperator.flush(GroupByOperator.java:1060)
        at org.apache.hadoop.hive.ql.exec.GroupByOperator.closeOp(GroupByOperator.java:1099)
        ... 13 more
    Caused by: org.apache.hadoop.hive.ql.metadata.HiveException: java.lang.ArrayIndexOutOfBoundsException: -128
        at org.apache.hadoop.hive.ql.exec.ReduceSinkOperator.process(ReduceSinkOperator.java:416)
        at org.apache.hadoop.hive.ql.exec.Operator.forward(Operator.java:882)
        at org.apache.hadoop.hive.ql.exec.GroupByOperator.forward(GroupByOperator.java:1024)
        at org.apache.hadoop.hive.ql.exec.GroupByOperator.flush(GroupByOperator.java:1043)
        ... 14 more
    Caused by: java.lang.ArrayIndexOutOfBoundsException: -128
        at java.util.ArrayList.elementData(ArrayList.java:418)
        at java.util.ArrayList.get(ArrayList.java:431)
        at org.apache.hadoop.hive.serde2.binarysortable.BinarySortableSerDe.serialize(BinarySortableSerDe.java:868)
        at org.apache.hadoop.hive.serde2.binarysortable.BinarySortableSerDe.serialize(BinarySortableSerDe.java:670)
        at org.apache.hadoop.hive.ql.exec.ReduceSinkOperator.toHiveKey(ReduceSinkOperator.java:517)
        at org.apache.hadoop.hive.ql.exec.ReduceSinkOperator.process(ReduceSinkOperator.java:409)
        ... 17 more

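The cause is too many DISTINCT expressions: a single SQL statement supports at most 128 of them, and going over that limit triggers this error. See the Hive issue: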

https://issues.apache.org/jira/browse/HIVE-6998
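The `-128` in the trace hints at why the limit sits at 128. Here is a minimal Java sketch of the likely mechanism, assuming (this is my reading of the trace, not something I checked in the Hive source) that the index of each DISTINCT expression is carried as a signed byte before it reaches `ArrayList.get`. The class name `TagOverflowDemo` and the helpers `toTag` and `lookup` are made up for illustration and are not Hive APIs.

```java
import java.util.ArrayList;
import java.util.List;

class TagOverflowDemo {
    // Narrowing an int to a byte keeps only the low 8 bits,
    // so every value above 127 wraps around to a negative number.
    static byte toTag(int distinctIndex) {
        return (byte) distinctIndex;
    }

    // Hypothetical stand-in for a lookup keyed by the byte tag.
    // The byte is widened back to an int, but the sign is already lost.
    static String lookup(List<String> inspectors, int distinctIndex) {
        return inspectors.get(toTag(distinctIndex));
    }

    public static void main(String[] args) {
        List<String> inspectors = new ArrayList<>();
        for (int i = 0; i < 130; i++) {
            inspectors.add("inspector-" + i);
        }

        System.out.println(toTag(127)); // prints 127
        System.out.println(toTag(128)); // prints -128

        try {
            lookup(inspectors, 128);
        } catch (IndexOutOfBoundsException e) {
            // The concrete exception class depends on the JDK version;
            // the trace above (ArrayList.elementData) matches Java 8.
            System.out.println("lookup failed: " + e);
        }
    }
}
```

Indexes 0 through 127 fit in a signed byte, which gives 128 usable slots; the next index, 128, wraps to -128, which is exactly the index reported in the exception.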

PS: I'm done searching for answers on Baidu. The international version of Bing is the GOAT.

The following SQL reproduces the error:

    SELECT
        count(distinct id * floor(rand() * 0)) as id0
        ,count(distinct id * floor(rand() * 1)) as id1
        ,count(distinct id * floor(rand() * 2)) as id2
        ,count(distinct id * floor(rand() * 3)) as id3
        ,count(distinct id * floor(rand() * 4)) as id4
        ,count(distinct id * floor(rand() * 5)) as id5
        ,count(distinct id * floor(rand() * 6)) as id6
        ,count(distinct id * floor(rand() * 7)) as id7
        ,count(distinct id * floor(rand() * 8)) as id8
        ,count(distinct id * floor(rand() * 9)) as id9
        ,count(distinct id * floor(rand() * 10)) as id10
        ,count(distinct id * floor(rand() * 11)) as id11
        ,count(distinct id * floor(rand() * 12)) as id12
        ,count(distinct id * floor(rand() * 13)) as id13
        ,count(distinct id * floor(rand() * 14)) as id14
        ,count(distinct id * floor(rand() * 15)) as id15
        ,count(distinct id * floor(rand() * 16)) as id16
        ,count(distinct id * floor(rand() * 17)) as id17
        ,count(distinct id * floor(rand() * 18)) as id18
        ,count(distinct id * floor(rand() * 19)) as id19
        ,count(distinct id * floor(rand() * 20)) as id20
        ,count(distinct id * floor(rand() * 21)) as id21
        ,count(distinct id * floor(rand() * 22)) as id22
        ,count(distinct id * floor(rand() * 23)) as id23
        ,count(distinct id * floor(rand() * 24)) as id24
        ,count(distinct id * floor(rand() * 25)) as id25
        ,count(distinct id * floor(rand() * 26)) as id26
        ,count(distinct id * floor(rand() * 27)) as id27
        ,count(distinct id * floor(rand() * 28)) as id28
        ,count(distinct id * floor(rand() * 29)) as id29
        ,count(distinct id * floor(rand() * 30)) as id30
        ,count(distinct id * floor(rand() * 31)) as id31
        ,count(distinct id * floor(rand() * 32)) as id32
        ,count(distinct id * floor(rand() * 33)) as id33
        ,count(distinct id * floor(rand() * 34)) as id34
        ,count(distinct id * floor(rand() * 35)) as id35
        ,count(distinct id * floor(rand() * 36)) as id36
        ,count(distinct id * floor(rand() * 37)) as id37
        ,count(distinct id * floor(rand() * 38)) as id38
        ,count(distinct id * floor(rand() * 39)) as id39
        ,count(distinct id * floor(rand() * 40)) as id40
        ,count(distinct id * floor(rand() * 41)) as id41
        ,count(distinct id * floor(rand() * 42)) as id42
        ,count(distinct id * floor(rand() * 43)) as id43
        ,count(distinct id * floor(rand() * 44)) as id44
        ,count(distinct id * floor(rand() * 45)) as id45
        ,count(distinct id * floor(rand() * 46)) as id46
        ,count(distinct id * floor(rand() * 47)) as id47
        ,count(distinct id * floor(rand() * 48)) as id48
        ,count(distinct id * floor(rand() * 49)) as id49
        ,count(distinct id * floor(rand() * 50)) as id50
        ,count(distinct id * floor(rand() * 51)) as id51
        ,count(distinct id * floor(rand() * 52)) as id52
        ,count(distinct id * floor(rand() * 53)) as id53
        ,count(distinct id * floor(rand() * 54)) as id54
        ,count(distinct id * floor(rand() * 55)) as id55
        ,count(distinct id * floor(rand() * 56)) as id56
        ,count(distinct id * floor(rand() * 57)) as id57
        ,count(distinct id * floor(rand() * 58)) as id58
        ,count(distinct id * floor(rand() * 59)) as id59
        ,count(distinct id * floor(rand() * 60)) as id60
        ,count(distinct id * floor(rand() * 61)) as id61
        ,count(distinct id * floor(rand() * 62)) as id62
        ,count(distinct id * floor(rand() * 63)) as id63
        ,count(distinct id * floor(rand() * 64)) as id64
        ,count(distinct id * floor(rand() * 65)) as id65
        ,count(distinct id * floor(rand() * 66)) as id66
        ,count(distinct id * floor(rand() * 67)) as id67
        ,count(distinct id * floor(rand() * 68)) as id68
        ,count(distinct id * floor(rand() * 69)) as id69
        ,count(distinct id * floor(rand() * 70)) as id70
        ,count(distinct id * floor(rand() * 71)) as id71
        ,count(distinct id * floor(rand() * 72)) as id72
        ,count(distinct id * floor(rand() * 73)) as id73
        ,count(distinct id * floor(rand() * 74)) as id74
        ,count(distinct id * floor(rand() * 75)) as id75
        ,count(distinct id * floor(rand() * 76)) as id76
        ,count(distinct id * floor(rand() * 77)) as id77
        ,count(distinct id * floor(rand() * 78)) as id78
        ,count(distinct id * floor(rand() * 79)) as id79
        ,count(distinct id * floor(rand() * 80)) as id80
        ,count(distinct id * floor(rand() * 81)) as id81
        ,count(distinct id * floor(rand() * 82)) as id82
        ,count(distinct id * floor(rand() * 83)) as id83
        ,count(distinct id * floor(rand() * 84)) as id84
        ,count(distinct id * floor(rand() * 85)) as id85
        ,count(distinct id * floor(rand() * 86)) as id86
        ,count(distinct id * floor(rand() * 87)) as id87
        ,count(distinct id * floor(rand() * 88)) as id88
        ,count(distinct id * floor(rand() * 89)) as id89
        ,count(distinct id * floor(rand() * 90)) as id90
        ,count(distinct id * floor(rand() * 91)) as id91
        ,count(distinct id * floor(rand() * 92)) as id92
        ,count(distinct id * floor(rand() * 93)) as id93
        ,count(distinct id * floor(rand() * 94)) as id94
        ,count(distinct id * floor(rand() * 95)) as id95
        ,count(distinct id * floor(rand() * 96)) as id96
        ,count(distinct id * floor(rand() * 97)) as id97
        ,count(distinct id * floor(rand() * 98)) as id98
        ,count(distinct id * floor(rand() * 99)) as id99
        ,count(distinct id * floor(rand() * 100)) as id100
        ,count(distinct id * floor(rand() * 101)) as id101
        ,count(distinct id * floor(rand() * 102)) as id102
        ,count(distinct id * floor(rand() * 103)) as id103
        ,count(distinct id * floor(rand() * 104)) as id104
        ,count(distinct id * floor(rand() * 105)) as id105
        ,count(distinct id * floor(rand() * 106)) as id106
        ,count(distinct id * floor(rand() * 107)) as id107
        ,count(distinct id * floor(rand() * 108)) as id108
        ,count(distinct id * floor(rand() * 109)) as id109
        ,count(distinct id * floor(rand() * 110)) as id110
        ,count(distinct id * floor(rand() * 111)) as id111
        ,count(distinct id * floor(rand() * 112)) as id112
        ,count(distinct id * floor(rand() * 113)) as id113
        ,count(distinct id * floor(rand() * 114)) as id114
        ,count(distinct id * floor(rand() * 115)) as id115
        ,count(distinct id * floor(rand() * 116)) as id116
        ,count(distinct id * floor(rand() * 117)) as id117
        ,count(distinct id * floor(rand() * 118)) as id118
        ,count(distinct id * floor(rand() * 119)) as id119
        ,count(distinct id * floor(rand() * 120)) as id120
        ,count(distinct id * floor(rand() * 121)) as id121
        ,count(distinct id * floor(rand() * 122)) as id122
        ,count(distinct id * floor(rand() * 123)) as id123
        ,count(distinct id * floor(rand() * 124)) as id124
        ,count(distinct id * floor(rand() * 125)) as id125
        ,count(distinct id * floor(rand() * 126)) as id126
        ,count(distinct id * floor(rand() * 127)) as id127
        ,count(distinct id * floor(rand() * 128)) as id128
        ,count(distinct id * floor(rand() * 129)) as id129
    FROM ( select 10 as id ) x ;