Web Scraping: Downloading Contestant Images from Youth With You Season 2 (青春有你第二季)

Tools: PyCharm 2022.2.4, Python 3.9. This makes a good practice project.

1. Write a Python class with the following methods

Note: the paths in the code are specific to my machine. Change them to match your own environment.
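One way to avoid editing every hard-coded path is to define the base folder in a single place. The following is only a minimal sketch and is not part of the original class: `BASE_DIR`, `json_path` and `image_dir` are hypothetical names introduced for illustration, and the folder under the home directory is an assumed location.

```python
import datetime
from pathlib import Path

# Assumed location: change this one line to match your own machine.
BASE_DIR = Path.home() / "Desktop" / "qaws"


def json_path(base_dir=BASE_DIR, today=None):
    """Return the path of the dated JSON file, e.g. <base_dir>/20240101.json."""
    if today is None:
        today = datetime.datetime.now().strftime("%Y%m%d")
    return Path(base_dir) / (today + ".json")


def image_dir(base_dir=BASE_DIR):
    """Return the folder that the downloaded images are written to."""
    return Path(base_dir) / "xuanshou"
```

With helpers like these, the `open(...)` and directory-creation calls in the class could take their paths from `json_path()` and `image_dir()` instead of repeating the literal string.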

import requests
from bs4 import BeautifulSoup
import os
import datetime
import json
import chardet

class TangJingLin:
    # Today's date as YYYYMMDD, used as the JSON file name
    today = datetime.datetime.now().strftime("%Y%m%d")

    def crawl_young(self):
        '''
        Crawl the Baidu Baike page and return the contestant tables.
        '''
        headers = {
            "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/119.0.0.0 Safari/537.36"
        }
        url = 'https://baike.baidu.com/item/青春有你第二季'
        try:
            response = requests.get(url, headers=headers)
            # Optional: detect the encoding from the raw bytes (chardet.detect expects bytes, not str)
            # encoding = chardet.detect(response.content)['encoding']
            # response.encoding = encoding
            # print(response.text)
            # Passing a document (here a string) to the BeautifulSoup constructor returns a document object
            soup = BeautifulSoup(response.text, 'lxml')
            # print(soup)
            # Find the div with this data-uuid, then collect every <table> with class tableBox_VTdNB inside it
            tables = soup.find('div', {'data-uuid': 'gny2nzwxeh'}).find_all('table', {'class': 'tableBox_VTdNB'})
            return tables
        except Exception as e:
            print(e)
            return []

    def parseData(self):
        '''
        Parse the crawled tables and save the result as a JSON file.
        '''
        bs = BeautifulSoup(str(self.crawl_young()), 'lxml')
        all_trs = bs.find_all('tr')
        # List that collects the results
        stars = []
        for i in all_trs[1:]:
            all_tds = i.find_all('td')
            # Skip rows (such as header rows) that do not have all five columns
            if len(all_tds) < 5:
                continue
            star = {}
            # Name
            star["name"] = all_tds[0].text
            # Link to the contestant's Baidu Baike page
            if all_tds[0].find('a'):
                star["link"] = 'https://baike.baidu.com' + all_tds[0].find('a').get('href')
            else:
                star["link"] = ''
            # Place of origin
            star["zone"] = all_tds[1].text
            # Zodiac sign
            star["constellation"] = all_tds[2].text
            # Flower language
            star["huayu"] = all_tds[3].text
            # Agency
            star["ssCom"] = all_tds[4].text
            stars.append(star)
        try:
            with open('C:/Users/Administrator/Desktop/qaws/' + self.today + '.json', 'w', encoding='UTF-8') as f:
                json.dump(stars, f, ensure_ascii=False)
            print("Write succeeded")
        except Exception as e:
            print(e)

    def craw_pic(self):
        '''
        Collect the image link for each contestant.
        '''
        with open('C:/Users/Administrator/Desktop/qaws/' + self.today + '.json', 'r', encoding='UTF-8') as f:
            json_array = json.loads(f.read())
        pics = []
        for i in json_array:
            dics = {}
            name = i['name']
            link = i['link']
            # Only request contestants that actually have a Baidu Baike link
            if link is not None and link != '':
                headers = {
                    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/119.0.0.0 Safari/537.36',
                    # Without this header, Baidu responds with a verification page
                    'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,'
                              '*/*;q=0.8,application/signed-exchange;v=b3;q=0.7',
                }
                try:
                    response = requests.get(link, headers=headers)
                    bs = BeautifulSoup(response.content, 'lxml')
                    imm = bs.find('div', {'id': 'J-lemma-main-wrapper'}).find_all("img")
                    if imm:
                        # Use the first image in the main content area
                        img = imm[0].get('src')
                        dics["name"] = name
                        dics["img"] = img
                        pics.append(dics)
                except Exception as e:
                    print(e)
        return pics

    def downPicByUrl(self):
        '''
        Download the images from the collected links.
        '''
        pics = self.craw_pic()
        # exist_ok=True avoids an error when the folder already exists from an earlier run
        os.makedirs("C:/Users/Administrator/Desktop/qaws/xuanshou/", exist_ok=True)
        try:
            for pic in pics:
                headers = {
                    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/119.0.0.0 Safari/537.36',
                    # Without this header, Baidu responds with a verification page
                    'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,'
                              '*/*;q=0.8,application/signed-exchange;v=b3;q=0.7',
                }
                pi = requests.get(url=pic['img'], headers=headers).content
                imgPath = 'C:/Users/Administrator/Desktop/qaws/xuanshou/' + pic['name'] + '.jpg'
                # Write the image to the target path
                with open(imgPath, 'wb') as f:
                    f.write(pi)
                print("Download succeeded")
        except Exception as e:
            print(e)
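The contestant names come straight from the scraped page, so a name could contain characters that Windows does not allow in file names. Below is a minimal sketch of a more defensive save step. `safe_filename` and `save_image` are hypothetical helpers, not part of the original class, and the image bytes are passed in as an argument so the example runs without network access.

```python
import os
import re


def safe_filename(name, fallback="unknown"):
    """Replace characters that Windows forbids in file names and trim whitespace."""
    cleaned = re.sub(r'[\\/:*?"<>|\r\n\t]', "_", name).strip()
    return cleaned or fallback


def save_image(folder, name, data):
    """Write image bytes to <folder>/<safe name>.jpg and return the path."""
    # exist_ok=True means a second run does not fail on the existing folder
    os.makedirs(folder, exist_ok=True)
    path = os.path.join(folder, safe_filename(name) + ".jpg")
    with open(path, "wb") as f:
        f.write(data)
    return path
```

Inside the download loop this could be called as `save_image(folder, pic['name'], pi)`, where `folder` stands for your own image directory.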

2. Write a main entry point to run it

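if __name__ == '__main__':
    spider = TangJingLin()
    spider.parseData()  # writes the JSON file that craw_pic() reads, so it must run first
    spider.downPicByUrl()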
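The steps in the class exchange data through the dated JSON file: `parseData` writes a list of dictionaries and `craw_pic` reads it back. The sketch below reproduces that round trip on made-up records and skips entries with no link. The names and the link are placeholders rather than real data, and `entries_with_links` is a hypothetical helper introduced only for this illustration.

```python
import json
import os
import tempfile


def entries_with_links(json_file):
    """Load the saved records and keep only those with a non-empty link."""
    with open(json_file, "r", encoding="UTF-8") as f:
        records = json.load(f)
    return [r for r in records if r.get("link")]


# Placeholder records in the same shape that parseData writes
sample = [
    {"name": "选手甲", "link": "https://baike.baidu.com/item/example"},
    {"name": "选手乙", "link": ""},
]

tmp_file = os.path.join(tempfile.mkdtemp(), "sample.json")
with open(tmp_file, "w", encoding="UTF-8") as f:
    # ensure_ascii=False keeps Chinese names readable in the file
    json.dump(sample, f, ensure_ascii=False)

kept = entries_with_links(tmp_file)
print([r["name"] for r in kept])
```

Only the record with a link survives the filter, which is why the image step has to run after the JSON file has been written.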

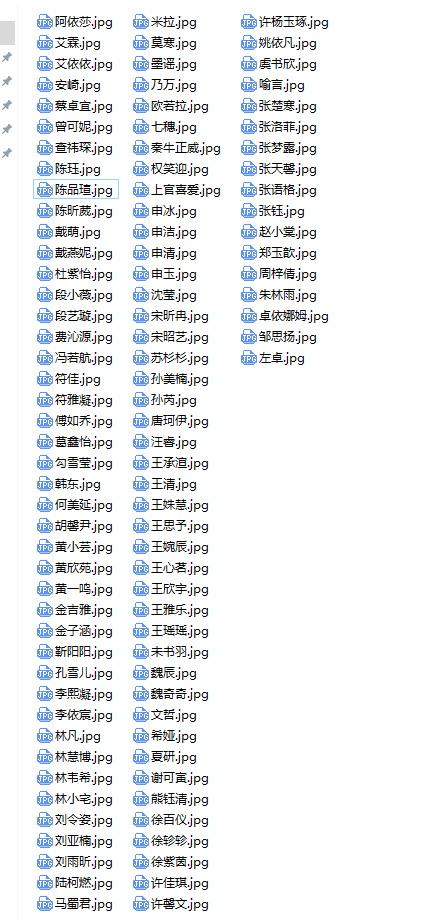

Execution result: