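Assignment ①:

Experiment content

Task:

- Requirements: become proficient with Selenium for locating HTML elements, scraping Ajax-loaded page data, and waiting for HTML elements.

Use Selenium with MySQL storage to scrape stock data for three boards: "沪深A股" (Shanghai and Shenzhen A-shares), "上证A股" (Shanghai A-shares), and "深证A股" (Shenzhen A-shares).

- Candidate site: 东方财富网 (Eastmoney): http://quote.eastmoney.com/center/gridlist.html#hs_a_board

- Output: store the data in a MySQL database and print it from there. Column names should be in English, for example id for the sequence number and bStockNo for the stock code; the exact column design is left to each student.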
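
The three boards differ only in the URL fragment and in the table they are stored in, so one option is to keep that mapping in a single place and reject any unexpected board code before it is interpolated into a URL or an SQL statement. The sketch below is illustrative only: `BOARDS` and `board_target` are hypothetical names, and the `#<code>_a_board` fragment for the `sh` and `sz` boards is assumed to follow the same pattern as the `hs_a_board` URL given in the task.

```python
BASE_URL = "http://quote.eastmoney.com/center/gridlist.html"

# Hypothetical lookup: board code -> board name from the task statement.
BOARDS = {
    "hs": "沪深A股",
    "sh": "上证A股",
    "sz": "深证A股",
}


def board_target(code):
    """Return (url, table_name) for a known board code, else raise ValueError."""
    if code not in BOARDS:
        raise ValueError(f"unknown board code: {code!r}")
    # Assumption: every board page uses the '#<code>_a_board' fragment.
    return f"{BASE_URL}#{code}_a_board", f"{code}stocks"


if __name__ == "__main__":
    for code in BOARDS:
        print(code, *board_target(code))
```

Because table names cannot be passed as query parameters, checking the code against a fixed set like this is one simple way to keep string-built SQL safe.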

Code

    from selenium import webdriver
    from selenium.webdriver.common.by import By
    import pymysql
    import time


    class MySpider:
        def startUp(self, gp):
            self.driver = webdriver.Chrome()
            try:
                self.con = pymysql.connect(host="127.0.0.1", port=3306, user="root", password="123456",
                                           database="stocks", charset="utf8")
                self.cursor = self.con.cursor()
                # Recreate the table for this board (hs, sh or sz) on every run
                self.cursor.execute(f"drop table if exists {gp}stocks")
                self.cursor.execute(
                    f"create table {gp}stocks(id int,code varchar(10),name varchar(20),lastTrade varchar(10),chg varchar(10),"
                    "change_ varchar(10),volume varchar(10),amp varchar(10),highest varchar(10),lowest varchar(10),open varchar(10),prevClose varchar(10),primary key (id))")
            except Exception as err:
                print(err)

        def closeUp(self):
            try:
                self.con.commit()
                self.con.close()
                self.driver.quit()  # quit() also ends the chromedriver process
            except Exception as err:
                print(err)

        def insertDB(self, gp, id, code, name, lastTrade, chg, change_, volume, amp, highest, lowest, open, prevClose):
            try:
                self.cursor.execute(
                    f"insert into {gp}stocks (id, code, name, lastTrade, chg, change_, volume, amp, highest, lowest, open, prevClose) values(%s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s)",
                    (id, code, name, lastTrade, chg, change_, volume, amp, highest, lowest, open, prevClose))
            except Exception as err:
                print(err)

        def processSpider(self, gp):
            try:
                trs = self.driver.find_elements(By.XPATH, "//tbody/tr")
                for tr in trs:
                    id = tr.find_element(By.XPATH, ".//td[1]").text
                    code = tr.find_element(By.XPATH, ".//td[2]").text
                    name = tr.find_element(By.XPATH, ".//td[3]").text
                    lastTrade = tr.find_element(By.XPATH, ".//td[5]").text  # latest price
                    chg = tr.find_element(By.XPATH, ".//td[6]").text  # change (percent)
                    change_ = tr.find_element(By.XPATH, ".//td[7]").text  # change (amount)
                    volume = tr.find_element(By.XPATH, ".//td[8]").text  # volume
                    amp = tr.find_element(By.XPATH, ".//td[10]").text  # amplitude
                    highest = tr.find_element(By.XPATH, ".//td[11]").text  # high
                    lowest = tr.find_element(By.XPATH, ".//td[12]").text  # low
                    open = tr.find_element(By.XPATH, ".//td[13]").text  # open
                    prevClose = tr.find_element(By.XPATH, ".//td[14]").text  # previous close
                    self.insertDB(gp, id, code, name, lastTrade, chg, change_, volume, amp, highest, lowest, open, prevClose)
                try:
                    link = self.driver.find_elements(By.XPATH, "//a[@class='next paginate_button']")[0]
                    if int(link.get_attribute("data-index")) >= 3:  # scrape 2 pages only
                        return
                    link.click()
                    time.sleep(1)  # wait 1 second for the page data to load
                    self.processSpider(gp)
                except Exception as err:
                    print(err)
            except Exception as err:
                print(err)

        def executeSpider(self, gp):
            self.startUp(gp)
            self.driver.get(f"http://quote.eastmoney.com/center/gridlist.html#{gp}_a_board")
            time.sleep(1)  # wait 1 second for the page data to load
            self.processSpider(gp)
            self.closeUp()


    spider = MySpider()
    gps = ["hs", "sh", "sz"]
    for gp in gps:
        spider.executeSpider(gp)
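
The fixed `time.sleep(1)` pauses in the code above either waste time or turn out too short when the Ajax table loads slowly. Selenium's `WebDriverWait` addresses this by polling a condition until it holds or a timeout expires. The sketch below shows that polling idea in plain Python so that it runs without a browser; `wait_until` and `FakeTable` are hypothetical names used for illustration and are not part of Selenium.

```python
import time


def wait_until(condition, timeout=10.0, poll=0.5):
    """Call condition() repeatedly until it returns a truthy value.

    Returns that value, or raises TimeoutError once `timeout` seconds pass.
    """
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} s")
        time.sleep(poll)


class FakeTable:
    """Stand-in for an Ajax table whose rows only appear on the third poll."""

    def __init__(self):
        self.polls = 0

    def rows(self):
        self.polls += 1
        return ["row-1", "row-2"] if self.polls >= 3 else []


if __name__ == "__main__":
    table = FakeTable()
    rows = wait_until(table.rows, timeout=2.0, poll=0.01)
    print(rows, "after", table.polls, "polls")
```

With a real driver, the condition would be a lookup such as `lambda: driver.find_elements(By.XPATH, "//tbody/tr")`, which is roughly what `WebDriverWait(driver, 10).until(...)` does in Assignment ②.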

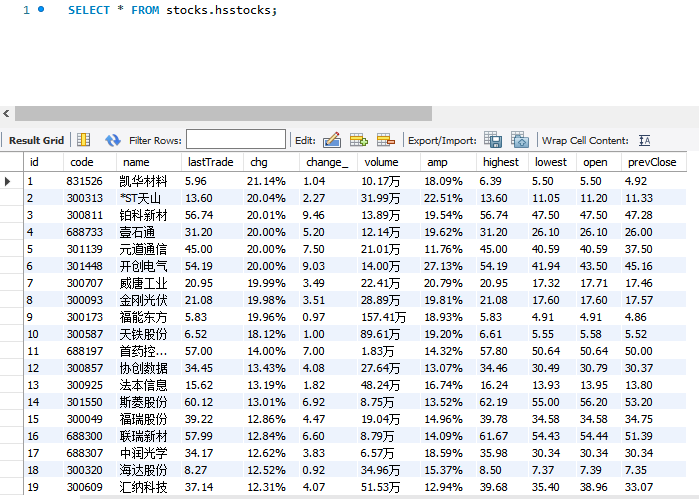

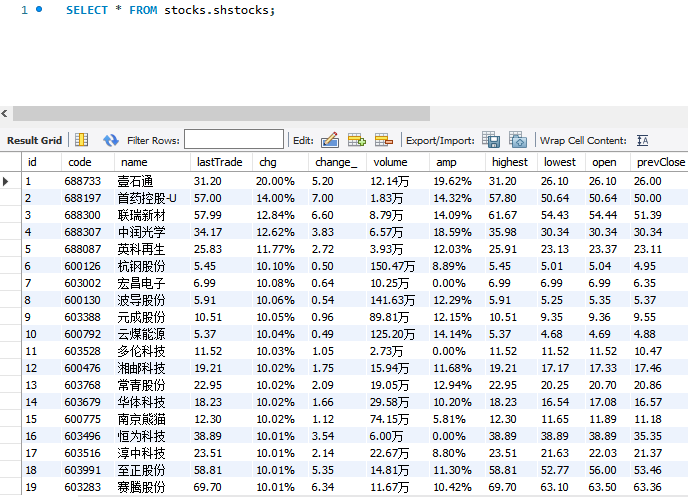

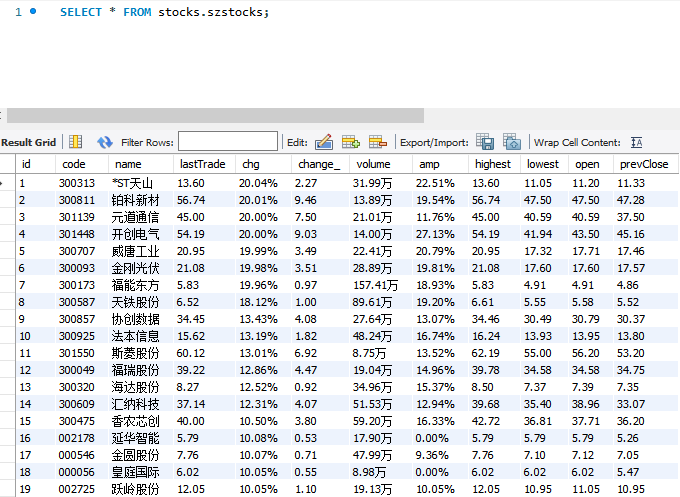

Results:

Reflections

This assignment was a chance to review the core Selenium operations.

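Assignment ②:

Experiment content

Task:

- Requirements: become proficient with Selenium for locating HTML elements, simulating a user login, scraping Ajax-loaded page data, and waiting for HTML elements.

Use Selenium with MySQL to scrape course information from 中国mooc网 (China University MOOC): course ID, course name, school name, lead teacher, team members, number of participants, course progress, and course description.

- Candidate site: 中国mooc网: https://www.icourse163.org

- Output: data stored in and printed from a MySQL database.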
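
The task names eight fields, so one option is to fix them in a single record type and derive the insert statement from it. The sketch below is an illustration under stated assumptions: `CourseRecord`, `save_courses`, and the column names are hypothetical, and Python's built-in `sqlite3` stands in for MySQL so that the example runs without a server (with pymysql the placeholders would be `%s` rather than `?`).

```python
import sqlite3
from dataclasses import dataclass, astuple, fields


@dataclass
class CourseRecord:
    # One attribute per field named in the task statement (names are hypothetical).
    id: int
    cCourse: str
    cCollege: str
    cTeacher: str
    cTeam: str
    cCount: str
    cProcess: str
    cBrief: str


def save_courses(con, records):
    """Create the CourseInfo table if needed and insert the given records."""
    cols = [f.name for f in fields(CourseRecord)]
    con.execute(
        "create table if not exists CourseInfo ("
        "id integer primary key, " + ", ".join(f"{c} text" for c in cols[1:]) + ")"
    )
    placeholders = ", ".join("?" for _ in cols)
    con.executemany(
        f"insert into CourseInfo ({', '.join(cols)}) values ({placeholders})",
        [astuple(r) for r in records],
    )
    con.commit()


if __name__ == "__main__":
    con = sqlite3.connect(":memory:")
    # Placeholder values only, not real scraped data.
    save_courses(con, [CourseRecord(1, "course", "college", "teacher", "team", "count", "progress", "brief")])
    print(con.execute("select id, cCourse from CourseInfo").fetchall())
```

Keeping the column list in one place means the table definition and the insert statement cannot drift apart when a field is added.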

Gitee folder link

Code

    from selenium import webdriver
    from selenium.webdriver.chrome.options import Options
    from selenium.webdriver.chrome.service import Service
    from selenium.webdriver.support.wait import WebDriverWait
    from selenium.webdriver.support import expected_conditions as EC
    from selenium.webdriver.common.by import By
    import pymysql
    import time


    class MySpider:
        def __init__(self):
            try:
                option = Options()
                option.add_experimental_option('excludeSwitches', ['enable-automation'])
                self.driver = webdriver.Chrome(service=Service('chromedriver.exe'), options=option)
                self.driver.maximize_window()  # maximize the browser window
                self.con = pymysql.connect(host="127.0.0.1", port=3306, user="root", password="123456", database="mooc",
                                           charset="utf8")
                self.cursor = self.con.cursor()
                self.cursor.execute("drop table if exists CourseInfo")
                sql = '''create table CourseInfo(cCourse varchar(20),cCollege varchar(20),cTeacher varchar(20),
                cTeam varchar(40),cCount varchar(10),cProcess varchar(20),cBrief varchar(2048))'''
                self.cursor.execute(sql)
            except Exception as e:
                print(e)

        def close(self):
            try:
                self.con.commit()
                self.con.close()
                self.driver.quit()
            except Exception as err:
                print(err)

        def insert_db(self, cCourse, cCollege, cTeacher, cTeam, cCount, cProcess, cBrief):
            try:
                self.cursor.execute(
                    "insert into CourseInfo (cCourse, cCollege, cTeacher, cTeam, cCount, cProcess, cBrief) values(%s, %s, %s, %s, %s, %s, %s)",
                    (cCourse, cCollege, cTeacher, cTeam, cCount, cProcess, cBrief))
            except Exception as err:
                print(err)

        def login(self):
            WebDriverWait(self.driver, 10, 0.48).until(
                EC.presence_of_element_located((By.XPATH, '//a[@class="f-f0 navLoginBtn"]'))).click()
            self.driver.switch_to.default_content()
            iframe = WebDriverWait(self.driver, 10, 0.48).until(
                EC.presence_of_element_located((By.XPATH, '//*[@frameborder="0"]')))
            self.driver.switch_to.frame(iframe)
            # Enter the account and password, then click the login button
            # (placeholders: supply your own credentials)
            self.driver.find_element(By.XPATH, '//*[@id="phoneipt"]').send_keys("YOUR_PHONE_NUMBER")
            time.sleep(2)
            self.driver.find_element(By.XPATH, '//*[@class="j-inputtext dlemail"]').send_keys("YOUR_PASSWORD")
            time.sleep(2)
            self.driver.find_element(By.ID, 'submitBtn').click()
            self.driver.switch_to.default_content()
            # Navigate to the href of the located link
            link = WebDriverWait(self.driver, 10, 0.48).until(
                EC.presence_of_element_located((By.XPATH, '//*[@id="app"]/div/div/div[1]/div[1]/div[1]/span[1]/a')))
            self.driver.get(link.get_attribute('href'))

        def process_spider(self):
            time.sleep(5)  # wait 5 seconds for the page data to load
            print("begin")
            try:
                courses = self.driver.find_elements(By.XPATH, '//*[@id="channel-course-list"]/div/div/div[2]/div[1]/div')
                current_window_handle = self.driver.current_window_handle
                for course in courses:
                    cCourse = course.find_element(By.XPATH, ".//h3").text
                    cCollege = course.find_element(By.XPATH, ".//p[@class='_2lZi3']").text
                    cTeacher = course.find_element(By.XPATH, ".//div[@class='_1Zkj9']").text
                    cCount = course.find_element(By.XPATH, ".//div[@class='jvxcQ']/span").text
                    cProcess = course.find_element(By.XPATH, ".//div[@class='jvxcQ']/div").text
                    course.click()  # opens the course page in a new tab
                    handles = self.driver.window_handles
                    self.driver.switch_to.window(handles[1])
                    cBrief = self.driver.find_element(By.XPATH, '//*[@id="j-rectxt2"]').text.strip()
                    cTeam = ""
                    while True:
                        teachers = self.driver.find_elements(By.XPATH, "//div[@class='um-list-slider_con_item']/div/div/h3")
                        for teacher in teachers:
                            cTeam += teacher.text + "."
                        try:
                            # Advance the team slider; stop once there is no "next" button
                            self.driver.find_element(By.XPATH, "//div[@class='um-list-slider_next f-pa']").click()
                        except Exception:
                            break
                    print(cCourse, cCollege, cTeacher, cTeam, cCount, cProcess, cBrief)
                    self.driver.close()  # close the new tab
                    self.driver.switch_to.window(current_window_handle)  # switch back to the original page
                    self.insert_db(cCourse, cCollege, cTeacher, cTeam, cCount, cProcess, cBrief)
                try:  # go to the next page
                    link = self.driver.find_element(By.XPATH, '//*[@id="channel-course-list"]/div/div/div[2]/div[2]/div')
                    if int(link.find_element(By.XPATH, ".//a[@class='_20fst _6jBq']").text) <= 3:
                        try:
                            link.find_element(By.XPATH, ".//a[@class='_3YiUU '][2]").click()
                        except Exception:
                            link.find_element(By.XPATH, ".//a[@class='_3YiUU '][1]").click()
                        self.driver.execute_script('window.scrollTo(0, 0)')  # scroll back to the top
                        self.process_spider()
                except Exception as err:
                    print(err)
            except Exception as err:
                print(err)

        def execute_spider(self, url):
            self.driver.get(url)
            self.login()
            self.process_spider()


    spider = MySpider()
    spider.execute_spider("https://www.icourse163.org")  # 中国mooc网
    spider.close()
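
`course.click()` opens the course page in a new tab, and the code above reaches it by switching to the second entry of `driver.window_handles`. That index is only reliable while exactly two windows are open. A slightly more defensive approach compares the handle list before and after the click. The helper below is a hypothetical sketch in plain Python, and the handle strings in the demo are made up.

```python
def new_window_handle(before, after):
    """Return the single handle present in `after` but not in `before`."""
    seen = set(before)
    opened = [h for h in after if h not in seen]
    if len(opened) != 1:
        raise RuntimeError(f"expected exactly one new window, found {len(opened)}")
    return opened[0]


if __name__ == "__main__":
    before = ["main"]
    after = ["main", "course-tab"]
    print(new_window_handle(before, after))
```

With Selenium this would mean reading `driver.window_handles` before the click, clicking, and then calling `driver.switch_to.window(new_window_handle(before, driver.window_handles))`.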

Results:

Reflections

I gained a better understanding of how to work with Selenium.

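Assignment ③

Experiment content

Task:

- Requirements: become familiar with big-data services and with using Xshell.

Complete the tasks in the document 华为云_大数据实时分析处理实验手册-Flume日志采集实验(部分)v2.docx (Huawei Cloud big-data real-time analysis lab manual, Flume log collection lab, partial, v2), namely the five tasks below; see the document for the detailed steps.

- Output: screenshots of the key steps or results.

Procedure:

Environment setup:

Task 1: Enable the MapReduce Service (MRS)

The MRS cluster was created, used, and then deleted, and no screenshot was taken before deletion.

Real-time analysis development:

Task 1: Generate test data with a Python script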

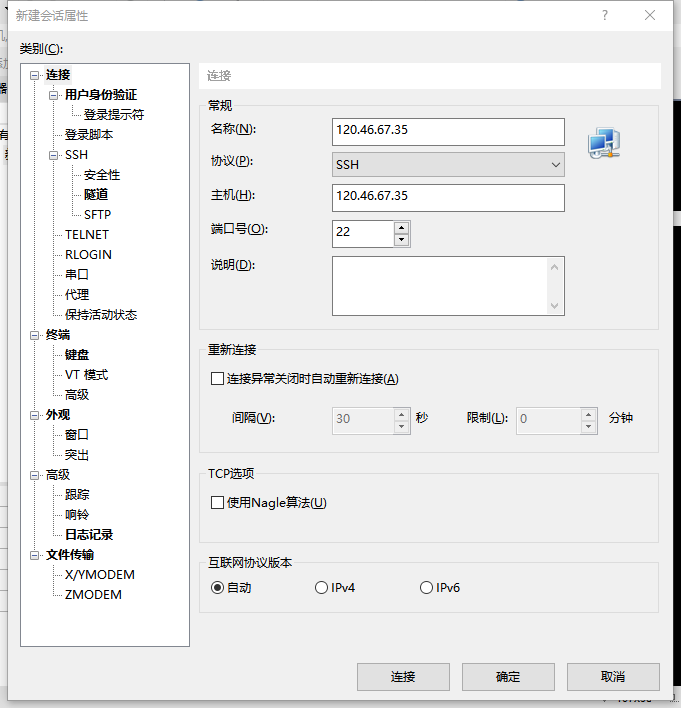

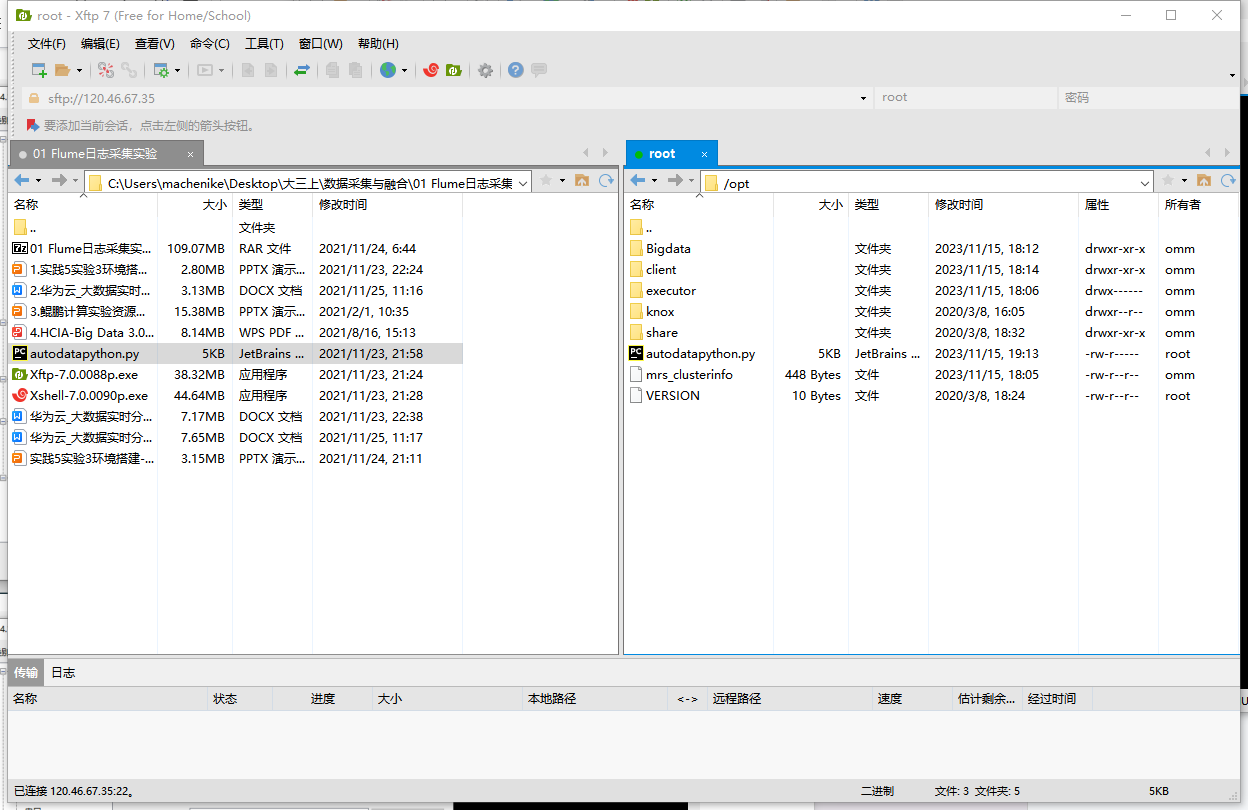

Connecting to the server with Xshell

Transferring files with Xftp

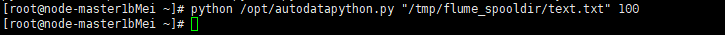

Running the Python script

Output

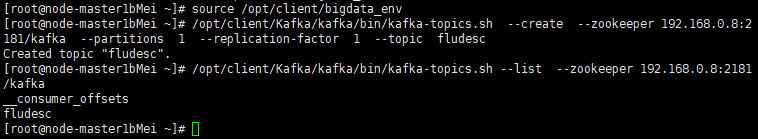

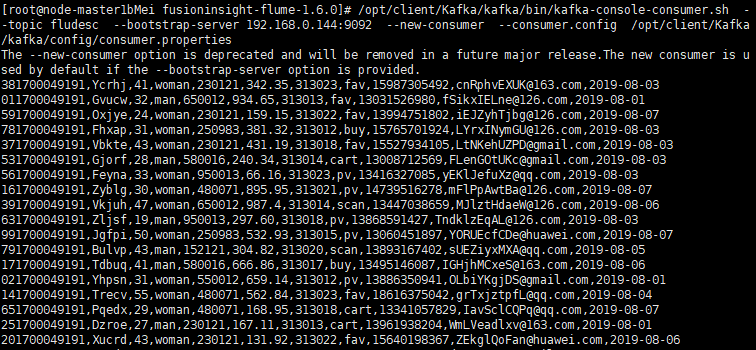
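
The script from the lab manual is not reproduced in this report. Purely as an illustration of what a test-data generator for Task 1 might look like, the sketch below emits comma-separated records with a fixed random seed so that runs are repeatable. Everything in it is an assumption: the function name `generate_rows`, the three fields, the value ranges, and the start date are all hypothetical and do not come from the manual.

```python
import random
from datetime import datetime, timedelta


def generate_rows(n, seed=0, start=datetime(2021, 1, 1)):
    """Yield n fake (timestamp, user_id, action) rows; all fields are made up."""
    rng = random.Random(seed)
    actions = ["view", "click", "order"]
    for i in range(n):
        ts = start + timedelta(seconds=i)  # one record per second
        yield ts.strftime("%Y-%m-%d %H:%M:%S"), rng.randint(1, 1000), rng.choice(actions)


if __name__ == "__main__":
    for row in generate_rows(5):
        print(",".join(str(field) for field in row))
```

Redirecting the output to a file gives a log that a collection tool such as Flume could then read.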

Task 2: Configure Kafka

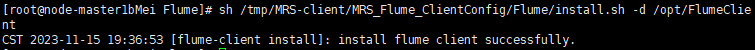

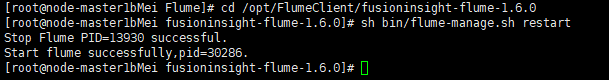

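Task 3: Install the Flume client

Task 4: Configure Flume to collect data

Reflections

I learned how to connect to a server with Xshell and work on it, and how to configure the relevant big-data services.

Gitee link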

wait...