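I. Background:

The Chinese Super League (CSL), China's top-tier football competition, attracts wide attention, and its player data carries rich information about player technique, performance, and match strategy. As data science matures, it is increasingly important for clubs and coaches to analyze and mine this data in order to devise more effective tactics and management strategies.

Key points of the background:

1. Data-driven football management: CSL clubs and coaches need in-depth analysis of player data to understand player performance, evaluate tactics, and predict match results, and so devise more effective management and competitive strategies.

2. Decision support and intelligent analysis: big-data analysis, machine learning, and statistical modeling give decision-makers analytical tools that help them make more accurate tactical and player-management decisions.

3. Building interdisciplinary skills that combine data science and football: through this course, students learn and apply data science techniques to analyze data and design solutions for practical problems in football.

4. Promoting technological innovation in football: analyzing player data can uncover opportunities for technical innovation, giving teams a competitive edge and novel solutions.

This background focuses on the analysis of CSL player data and emphasizes the role of data science in improving football management, tactical decisions, and innovation.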

二、目标实现设计方案:

1.数据获取:

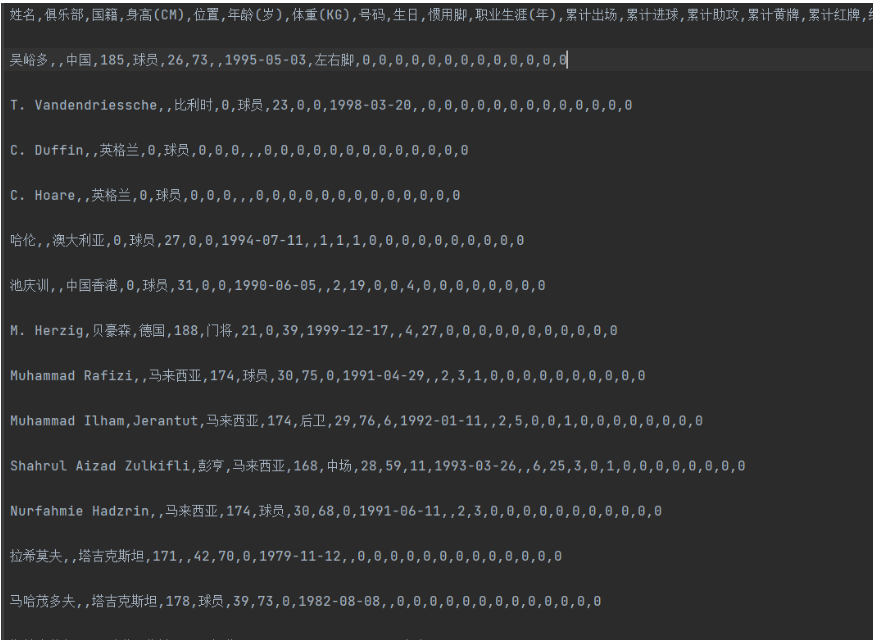

数据来源:从官方网站、API或其他数据提供商获取中超联赛球员数据。

数据类型:球员基本信息、比赛统计数据(进球数、助攻数、传球成功率等)、位置信息等。

数据格式:采用 JSON、CSV 或其他常用数据格式。

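2. Data processing and cleaning:

Data cleaning: handle missing values, duplicates, and outliers.

Data integration: merge multiple data sources and ensure a consistent data format.

Feature engineering: construct new features and convert data types to support the later analysis.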
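The cleaning steps listed here can be sketched with the standard library alone. This is a minimal illustration, not the project's actual pipeline: the function name `clean_players`, the field names (`name`, `club`, `height`, `age`), the age bounds, and the `age_group` feature are all assumptions made for the demo.

```python
def clean_players(rows, min_age=15, max_age=45):
    """Drop duplicates, mark missing values, and filter implausible ages.

    `rows` is a list of dicts; the keys used here are hypothetical.
    """
    seen = set()
    cleaned = []
    for row in rows:
        key = (row["name"], row["club"])  # duplicate = same name and club
        if key in seen:
            continue
        seen.add(key)
        record = dict(row)
        # A height of 0 is treated as "missing" and stored as None
        if not record.get("height"):
            record["height"] = None
        # Outlier rule (assumed bounds): discard rows with an implausible age
        if not (min_age <= record["age"] <= max_age):
            continue
        # Feature engineering: derive a coarse age group
        record["age_group"] = "U23" if record["age"] < 23 else "23+"
        cleaned.append(record)
    return cleaned


sample = [
    {"name": "A", "club": "X", "height": 180, "age": 22},
    {"name": "A", "club": "X", "height": 180, "age": 22},  # duplicate
    {"name": "B", "club": "Y", "height": 0, "age": 30},    # missing height
    {"name": "C", "club": "Z", "height": 175, "age": 99},  # outlier
]
result = clean_players(sample)
```

Storing a missing height as `None` rather than 0 keeps it from being mistaken for a real measurement in later statistics.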

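3. Data analysis:

Basic statistics: summary statistics of basic indicators such as goals, assists, and pass completion rate.

Comparative analysis: compare data across teams, positions, or seasons.

Predictive analysis: use machine-learning or statistical models to predict players' future performance or match results.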
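As a rough illustration of the basic and comparative analysis described here, the sketch below computes goals per appearance for each player and then averages that indicator by position. The helper names and the sample records are hypothetical, and the predictive step is not covered.

```python
from collections import defaultdict
from statistics import mean


def goals_per_game(goals, appearances):
    """Basic indicator: goals per appearance (0.0 when the player never played)."""
    return goals / appearances if appearances else 0.0


def average_by_group(rows, group_key, value_key):
    """Comparison: mean of `value_key` for each distinct value of `group_key`."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[group_key]].append(row[value_key])
    return {group: mean(values) for group, values in groups.items()}


# Made-up records, for illustration only
players = [
    {"position": "FW", "goals": 10, "appearances": 20},
    {"position": "FW", "goals": 4, "appearances": 16},
    {"position": "DF", "goals": 1, "appearances": 25},
]
for p in players:
    p["gpg"] = goals_per_game(p["goals"], p["appearances"])

by_position = average_by_group(players, "position", "gpg")
```

The same `average_by_group` call works for a comparison by club or by season if those columns are available.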

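4. Presentation of results and report:

Interpretation and conclusions: explain the analysis results and present insights and conclusions.

Data analysis report: students submit a data analysis report or give a presentation of their findings and recommendations.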

III. Structural analysis of the target page:

IV. Web crawler design:

Import the required libraries and check that the target page is valid, that is, that a player exists for the given id.

```python
import csv
import urllib.error
import urllib.request

from bs4 import BeautifulSoup
from lxml import etree


# Check whether a player page exists for the given id
def checkHtml(num):
    url = "https://www.dongqiudi.com/player/%s.html" % num
    html = askURL(url)
    soup = BeautifulSoup(html, "html.parser")
    # A valid player page contains a <p class="china-name"> element
    name = soup.find('p', attrs={'class': 'china-name'})
    if name is None:
        print('无效网站')
        return 'none'
    else:
        return soup


# Extract the data and store it
def getData(soup):
    """Parse one player page and append one row to the CSV file."""
```

Extract the player's details

```python
    # Body of getData(soup), continued from the definition above.

    # Name
    name = soup.find('p', attrs={'class': 'china-name'})
    con = etree.HTML(str(name))
    name = con.xpath("//p/text()")[0]
    print(name)

    # Detail list: the stripped text of every <li> in the detail-info block
    detail_info_div = soup.find('div', attrs={'class': 'detail-info'})
    detail_list = [each.text.strip() for each in detail_info_div.find_all('li')]

    # Club
    club = str(detail_list[0]).replace('俱乐部:', '')
    # Nationality
    contry = str(detail_list[1]).replace('国 籍:', '')
    # Height in cm (0 when the page lists none)
    height = 0
    heightstr = str(detail_list[2]).replace('CM', '').replace('身 高:', '')
    if heightstr != '':
        height = int(heightstr)
    # Position
    location = str(detail_list[3]).replace('位 置:', '')
    # Age in years (0 when missing)
    age = 0
    agestr = str(detail_list[4]).replace('年 龄:', '').replace('岁', '')
    if agestr != '':
        age = int(agestr)
    # Weight in kg, kept as text as in the original (0 when missing)
    weight = 0
    weightstr = str(detail_list[5]).replace('体 重:', '').replace('KG', '')
    if weightstr != '':
        weight = weightstr
    # Shirt number (0 when missing)
    number = 0
    numberstr = str(detail_list[6]).replace('号 码:', '').replace('号', '')
    if numberstr != '':
        number = int(numberstr)
    # Birthday
    birth = str(detail_list[7]).replace('生 日:', '')
    # Preferred foot
    foot = str(detail_list[8]).replace('惯用脚:', '')

    # Club match statistics: the cells of every <p class="td"> row
    total_con_wrap_div = soup.find('div', attrs={'class': 'total-con-wrap'})
    total_con_wrap_td = str(total_con_wrap_div.find_all('p', attrs={'class': 'td'}))
    con3 = etree.HTML(total_con_wrap_td)
    detail_info_list = con3.xpath("//p//span/text()")
    detail_info_list_years = con3.xpath("//p")

    # Years in the first team
    years = len(detail_info_list_years) - 1

    # Each season row has 9 cells; '~' marks a missing value and counts as 0
    def sum_column(start):
        return sum(0 if v == '~' else int(v) for v in detail_info_list[start::9])

    total_session = sum_column(2)      # total appearances
    total_goals = sum_column(4)        # total goals
    total_assist = sum_column(5)       # total assists
    total_yellow_card = sum_column(6)  # total yellow cards
    total_red_card = sum_column(7)     # total red cards

    # Ratings; every value stays 0 when the page shows no rating
    average = 0
    speed = power = guard = dribbling = passing = shooting = 0
    grade_average = soup.find('p', attrs={'class': 'average'})
    if grade_average is not None:
        con4 = etree.HTML(str(grade_average))
        average = int(con4.xpath("//p//b/text()")[0])  # overall rating
    # Detailed ratings, in page order:
    # speed, power, defence, dribbling, passing, shooting
    grade_detail_div = soup.find('div', attrs={'class': 'box_chart'})
    if grade_detail_div is not None:
        con5 = etree.HTML(str(grade_detail_div))
        grade_detail = con5.xpath("//div//span/text()")
        speed, power, guard, dribbling, passing, shooting = (
            int(v) for v in grade_detail[:6])
```

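Write the extracted information to the file

```python
    # Still inside getData(soup): append one row.
    # `f` is the CSV file object opened by the main script.
    csv.writer(f).writerow([name, club, contry, height, location, age, weight, number, birth, foot,
                            years, total_session, total_goals, total_assist, total_yellow_card,
                            total_red_card, average, speed, power, guard, dribbling, passing, shooting])
```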
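The extraction above reads each detail field by its position in `detail_list`, which gives wrong values if the page omits or reorders a field. One possible alternative, sketched below, is to split each item on its colon and look the value up by label. The helpers `parse_details` and `to_int` are hypothetical, and the sketch assumes every item has the form "label:value" with either a full-width or an ASCII colon; the sample strings are invented.

```python
import re


def parse_details(detail_list):
    """Turn items such as '身 高:180CM' into a {label: value} dict."""
    details = {}
    for item in detail_list:
        # Accept both the full-width and the ASCII colon; split only once
        parts = re.split(r"[::]", item, maxsplit=1)
        if len(parts) != 2:
            continue  # not a "label:value" item
        label = re.sub(r"\s+", "", parts[0])  # '身 高' -> '身高'
        details[label] = parts[1].strip()
    return details


def to_int(text, suffix=""):
    """Strip an optional unit suffix and convert to int; 0 when empty."""
    if suffix and text.endswith(suffix):
        text = text[: -len(suffix)]
    text = text.strip()
    return int(text) if text else 0


# Invented sample items in the same shape as the scraped <li> texts
sample = ["俱乐部:示例队", "身 高:180CM", "年 龄:25岁", "体 重:"]
info = parse_details(sample)
height = to_int(info.get("身高", ""), "CM")
age = to_int(info.get("年龄", ""), "岁")
weight = to_int(info.get("体重", ""), "KG")
```

Looking fields up with `info.get(label, "")` means a missing field falls back to 0 instead of shifting every later field by one position.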

Fetch the page content for a given URL

```python
import urllib.error
import urllib.request


def askURL(url):
    # Browser-like request headers: the User-Agent tells the server
    # what kind of client (machine and browser) is making the request
    head = {
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/96.0.4664.45 Safari/537.36 Edg/96.0.1054.29"
    }

    request = urllib.request.Request(url, headers=head)
    html = ""
    try:
        with urllib.request.urlopen(request) as response:
            html = response.read().decode("utf-8")
    except urllib.error.URLError as e:
        # HTTPError carries a status code; URLError carries a reason
        if hasattr(e, "code"):
            print(e.code)
        if hasattr(e, "reason"):
            print(e.reason)
    return html
```
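`askURL` returns an empty string on any error, and the crawl loop sends its requests back to back. A hedged sketch of one way to add retries with a growing pause is shown below. The name `fetch_with_retry` and its parameters are assumptions, and the fetch function is passed in as an argument so that the demo runs offline with a fake fetcher instead of the real site.

```python
import time


def fetch_with_retry(url, fetch, retries=3, delay=1.0, sleep=time.sleep):
    """Call `fetch(url)` until it returns non-empty HTML or `retries` is used up.

    `fetch` is any function with the same contract as askURL: it returns the
    page text, or "" on failure. `sleep` is injectable so tests need not wait.
    """
    for attempt in range(1, retries + 1):
        html = fetch(url)
        if html:
            return html
        if attempt < retries:
            sleep(delay * attempt)  # wait a little longer after each failure
    return ""


# Offline demo: a fake fetcher that fails twice and then succeeds
calls = []


def flaky_fetch(url):
    calls.append(url)
    return "<html>ok</html>" if len(calls) >= 3 else ""


waits = []
page = fetch_with_retry("https://example.invalid/player/1.html", flaky_fetch,
                        retries=3, delay=0.5, sleep=waits.append)
```

In the crawler this would be called as `fetch_with_retry(url, askURL)`; a short fixed pause between different player ids is a separate courtesy that the loop itself would have to add.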

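Write the data to the CSV file

```python
import os

csv_path = "足球运动员.csv"
# Write the header only when the file is new or empty; the plotting
# scripts skip this first row with next(csv_reader)
write_header = not os.path.exists(csv_path) or os.path.getsize(csv_path) == 0
# newline='' stops the csv module from emitting blank lines on Windows
with open(csv_path, mode="a", encoding='utf-8', newline='') as f:
    if write_header:
        csv.writer(f).writerow(["姓名", "俱乐部", "国籍", "身高(CM)", "位置", "年龄(岁)", "体重(KG)", "号码", "生日", "惯用脚", "职业生涯(年)",
                                "累计出场", "累计进球", "累计助攻", "累计黄牌", "累计红牌", "综合能力", "速度", "力量", "防守", "盘带", "传球", "射门"])
    for num in range(50184113, 50184150):
        print(num)
        soup = checkHtml(num)
        if soup != 'none':
            getData(soup)
```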

Screenshot of the results

With the required data collected, I plot a scatter chart of CSL players' ages.

```python
import csv

import matplotlib
import matplotlib.pyplot as plt

# Use a Chinese font so that the Chinese labels render correctly
matplotlib.rcParams['font.sans-serif'] = ['SimHei']  # SimHei (黑体)
matplotlib.rcParams['axes.unicode_minus'] = False    # render the minus sign on the axes

# Read the CSV file and extract the ages
ages = []
with open('足球运动员.csv', mode='r', encoding='utf-8', newline='') as csv_file:
    csv_reader = csv.reader(csv_file)
    next(csv_reader)  # skip the header row
    for row in csv_reader:
        if not row:
            continue  # skip blank lines
        age = int(row[5])  # age is the 6th column (index 5)
        ages.append(age)

# Scatter plot: one point per player, in file order
plt.figure(figsize=(8, 6))
plt.scatter(range(1, len(ages) + 1), ages, color='blue', alpha=0.5)
plt.title('年龄散点图')
plt.xlabel('球员编号')
plt.ylabel('年龄')
plt.grid(True)
plt.show()
```
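A scatter plot against the row number shows the spread of ages but not their center. As a small complement, the sketch below computes a few descriptive statistics with the standard `statistics` module. The function name `summarize_ages` and the sample values are hypothetical; the value 0 is ignored because the crawler stores 0 when a page lists no age.

```python
from statistics import mean, median


def summarize_ages(ages):
    """Return basic descriptive statistics, ignoring the placeholder value 0."""
    valid = [a for a in ages if a > 0]
    if not valid:
        return None  # nothing to summarize
    return {
        "count": len(valid),
        "min": min(valid),
        "max": max(valid),
        "mean": mean(valid),
        "median": median(valid),
    }


# Invented ages, for illustration only
summary = summarize_ages([24, 31, 0, 27, 22])
```

The `ages` list built by the plotting script can be passed to `summarize_ages` directly.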

Chinese football is widely seen as relying heavily on naturalized players, so a squad often includes players of several nationalities. Having looked at the age distribution, I next look at the distribution of nationalities.

```python
import csv

import matplotlib
import matplotlib.pyplot as plt

# Use a Chinese font so that the Chinese labels render correctly
matplotlib.rcParams['font.sans-serif'] = ['SimHei']
matplotlib.rcParams['axes.unicode_minus'] = False

# Read the CSV file and count the players of each nationality
nationalities = {}
with open('足球运动员.csv', mode='r', encoding='utf-8', newline='') as csv_file:
    csv_reader = csv.reader(csv_file)
    next(csv_reader)  # skip the header row
    for row in csv_reader:
        if not row:
            continue  # skip blank lines
        nationality = row[2]  # nationality is the 3rd column (index 2)
        nationalities[nationality] = nationalities.get(nationality, 0) + 1

# Nationalities and the matching player counts
countries = list(nationalities.keys())
player_counts = list(nationalities.values())

# Scatter plot with one point per nationality
plt.figure(figsize=(10, 6))
plt.scatter(countries, player_counts, color='red', alpha=0.7)
plt.title('球员各国籍散点图')
plt.xlabel('国籍')
plt.ylabel('球员数量')
plt.xticks(rotation=45)  # rotate the x-axis labels so they do not overlap
plt.grid(True)
plt.tight_layout()
plt.show()
```
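The per-nationality tally can also be written with `collections.Counter`, which additionally gives the most frequent values directly. The sketch below is an illustration with invented rows; the helper name `count_by_column` is hypothetical.

```python
from collections import Counter


def count_by_column(rows, index):
    """Count how often each value appears in column `index`, skipping short rows."""
    return Counter(row[index] for row in rows if len(row) > index)


# Invented rows in the same column order as the CSV (name, club, nationality)
rows = [
    ["甲", "X", "中国"],
    ["乙", "Y", "巴西"],
    ["丙", "X", "中国"],
    [],  # a blank line, as csv.reader would return it
]
counts = count_by_column(rows, 2)
top = counts.most_common(1)
```

Because nationality is a category rather than a number, a bar chart of `counts` may read more naturally than a scatter plot.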

Plot a bar chart of player heights

```python
import csv

import matplotlib
import matplotlib.pyplot as plt

# The labels are Chinese, so set a Chinese font here as well
matplotlib.rcParams['font.sans-serif'] = ['SimHei']
matplotlib.rcParams['axes.unicode_minus'] = False

# Read the CSV file and count the players in each height range (cm)
height_ranges = {'150-160': 0, '161-170': 0, '171-180': 0, '181-190': 0, '191-200': 0, '200以上': 0}
with open('足球运动员.csv', mode='r', encoding='utf-8', newline='') as csv_file:
    csv_reader = csv.reader(csv_file)
    next(csv_reader)  # skip the header row
    for row in csv_reader:
        if not row:
            continue  # skip blank lines
        height = int(row[3])  # height is the 4th column (index 3)
        if 150 <= height <= 160:
            height_ranges['150-160'] += 1
        elif 161 <= height <= 170:
            height_ranges['161-170'] += 1
        elif 171 <= height <= 180:
            height_ranges['171-180'] += 1
        elif 181 <= height <= 190:
            height_ranges['181-190'] += 1
        elif 191 <= height <= 200:
            height_ranges['191-200'] += 1
        elif height > 200:
            height_ranges['200以上'] += 1
        # Heights below 150, including the placeholder 0 for "not listed",
        # are left out instead of being counted as "above 200"

# Height ranges and the matching player counts
height_labels = list(height_ranges.keys())
player_counts = list(height_ranges.values())

# Bar chart
plt.figure(figsize=(10, 6))
plt.bar(height_labels, player_counts, color='blue')
plt.title('球员身高柱状图')
plt.xlabel('身高范围')
plt.ylabel('球员数量')
plt.xticks(rotation=45)  # rotate the x-axis labels so they do not overlap
plt.tight_layout()
plt.show()
```
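The chain of range checks can be replaced by a lookup with the standard `bisect` module, which keeps the bin edges in one place. The sketch below reuses the same ranges and labels; the names `UPPER_BOUNDS`, `LABELS`, and `height_label` are hypothetical.

```python
from bisect import bisect_left

# Inclusive upper bound of each closed bin; anything above the last bound
# falls into the final "above 200" label
UPPER_BOUNDS = [160, 170, 180, 190, 200]
LABELS = ['150-160', '161-170', '171-180', '181-190', '191-200', '200以上']


def height_label(height):
    """Return the bin label for a height in cm, or None below 150.

    The None case also covers the placeholder 0 used when no height is listed.
    """
    if height < 150:
        return None
    # bisect_left returns the index of the first bound >= height
    return LABELS[bisect_left(UPPER_BOUNDS, height)]
```

Inside the reading loop, the counts could then be updated with `label = height_label(height)` followed by `height_ranges[label] += 1` whenever `label` is not `None`.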

V. Full code:

The complete program is the set of listings from the crawler design section above, concatenated in the order shown: the imports with `checkHtml`, the body of `getData` including the CSV write, `askURL`, the main crawl loop, and the three plotting scripts.

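VI. Summary:

Since its founding, the Chinese Super League has faced many challenges and difficulties, which have left it behind on the international football stage. The most influential causes include:

1. Insufficient financial investment: although CSL clubs have spent heavily on signing foreign and domestic players, overall investment in infrastructure, youth training, and the league's long-term development has been inadequate. This constrains the development of football as a whole; compared with top European clubs, Chinese clubs still lag well behind in long-term planning and overall strength.

2. Incomplete youth training system: despite recent efforts to focus on youth training, China's youth system is still at an early stage. Compared with leading football nations, the structure and quality of grassroots training and youth development lag considerably, which makes it hard to develop home-grown stars and raise the overall level.

3. Management problems: club management, match organization, and refereeing standards in the CSL all have shortcomings. These can lower match quality and the league's image, and may also affect players' development and attitude.

4. Changes to the foreign-player policy: repeated adjustments to the policy on foreign players have also affected the league's overall level. Over-reliance on foreign players limits the development of domestic players, while frequent policy changes can disrupt teams' tactical systems and squad building.

Although the CSL faces many problems, there is still hope for improvement. All parties need to work together to raise the level of youth training, improve league management, invest more in football infrastructure, and sustain the effort over the long term; only then can the gap with the top international leagues gradually narrow.

Finally, completing this project made it clear to me that my skills still fall short: many features did not work the way I had intended, and the result is still far from a real data-analysis tool. I need to keep studying.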