Training Alibaba Cloud's Multi-Speaker Speech Synthesis Model

- model_link: https://modelscope.cn/models/speech_tts/speech_sambert-hifigan_tts_zh-cn_multisp_pretrain_24k/summary

- 1. Get the KAN-TTS training framework

- 2. Set up a virtual environment (conda)

Install PyTorch and the other dependencies that KAN-TTS requires.
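Before moving on, it can help to confirm that the active environment really contains the packages you expect. The following is a minimal sketch, not part of KAN-TTS: the helper name `missing_packages` is hypothetical, and the package list (`torch`, `pydub`) is only illustrative, since the authoritative dependency list is whatever the KAN-TTS repository specifies.

```python
import importlib.util


def missing_packages(names):
    """Return the top-level module names that cannot be found in this environment."""
    # find_spec locates a top-level module without actually importing it.
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    # Illustrative list only (an assumption); adjust it to what you installed.
    wanted = ["torch", "pydub"]
    missing = missing_packages(wanted)
    print("missing:", missing if missing else "none")
```

Run it inside the activated conda environment; anything it reports as missing still needs to be installed.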

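- 3. Data preparation: https://modelscope.cn/datasets/speech_tts/AISHELL-3/summary

- 4. Resample the data if needed. For example, if your audio files are sampled at 48 kHz and you want to train the 24 kHz model, convert the files to 24 kHz first (adjust this to your own requirements).

Convert the sample rate: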

    import os

    from pydub import AudioSegment

    input_folder = "/path/aida/wav_24k"
    output_folder = "/path/aida/new_wav_24k"

    # Create the output folder if it does not exist yet
    if not os.path.exists(output_folder):
        os.makedirs(output_folder)

    for file in os.listdir(input_folder):
        if file.endswith(".wav"):
            input_file = os.path.join(input_folder, file)
            output_file = os.path.join(output_folder, file)
            audio = AudioSegment.from_file(input_file, format="wav")
            # Resample to 24 kHz
            audio = audio.set_frame_rate(24000)
            audio.export(output_file, format="wav")

    print("Conversion complete!")

    # Verify the sample rate
    import wave


    def get_sample_rate(file_path):
        # Read the sample rate from the WAV header
        with wave.open(file_path, 'rb') as wav_file:
            sample_rate = wav_file.getframerate()
        return sample_rate


    file_path = '/path/aida/wav_24k/002192.wav'
    sample_rate = get_sample_rate(file_path)
    print(f'Sample rate: {sample_rate} Hz')
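Checking one file at a time gets tedious for a whole dataset. As a rough sketch, the same `wave` call can be applied to every file in a folder to list only those with the wrong rate. The helper name `find_rate_mismatches` is hypothetical, and the folder path is the output folder from the conversion step. Note that the standard `wave` module only reads PCM WAV files.

```python
import os
import wave


def find_rate_mismatches(folder, expected_rate):
    """Return {filename: sample_rate} for .wav files whose rate differs."""
    mismatches = {}
    for name in sorted(os.listdir(folder)):
        if not name.endswith(".wav"):
            continue
        with wave.open(os.path.join(folder, name), "rb") as wav_file:
            rate = wav_file.getframerate()
        if rate != expected_rate:
            mismatches[name] = rate
    return mismatches


if __name__ == "__main__":
    folder = "/path/aida/new_wav_24k"  # output folder of the conversion step
    if os.path.isdir(folder):
        print(find_rate_mismatches(folder, 24000) or "all files are 24000 Hz")
```

An empty result means every WAV file in the folder already has the expected sample rate.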

- 5. Feature extraction

# Feature extraction

python kantts/preprocess/data_process.py --voice_input_dir aishell3_16k/SSB0009 --voice_output_dir training_stage/SSB0009_feats --audio_config kantts/configs/audio_config_16k.yaml --speaker SSB0009

This is the result of feature extraction.

The main thing to check is that am_train.lst, am_valid.lst, and raw_metafile.txt actually have content.
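That check can be scripted. The sketch below assumes the three files sit directly in the feature output directory (for example `training_stage/SSB0009_feats`) and that the validation list is named `am_valid.lst`; the helper name `empty_or_missing` is hypothetical. Adjust the paths if your layout differs.

```python
import os

EXPECTED_FILES = ("am_train.lst", "am_valid.lst", "raw_metafile.txt")


def empty_or_missing(feat_dir, names=EXPECTED_FILES):
    """Return the expected files that are absent or zero bytes in feat_dir."""
    problems = []
    for name in names:
        path = os.path.join(feat_dir, name)
        if not os.path.isfile(path) or os.path.getsize(path) == 0:
            problems.append(name)
    return problems


if __name__ == "__main__":
    feat_dir = "training_stage/SSB0009_feats"  # --voice_output_dir from the command above
    if os.path.isdir(feat_dir):
        print(empty_or_missing(feat_dir) or "all expected files have content")
```

If any file is listed, feature extraction most likely failed for some or all utterances, and training on that directory would not be meaningful.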

Once extraction finishes, the next step is to train the AM.

Linux command tip:

Check the GPUs with nvidia-smi
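To decide which index to pass in `CUDA_VISIBLE_DEVICES`, the same information can be read from a script. This is only a sketch: it calls `nvidia-smi` with its CSV query options and returns `None` when the tool is not on the PATH. The function names are hypothetical, and the parser assumes GPU names contain no commas.

```python
import shutil
import subprocess


def parse_gpu_csv(text):
    """Parse 'index, name, used, total' CSV lines into dictionaries."""
    gpus = []
    for line in text.strip().splitlines():
        index, name, used, total = [field.strip() for field in line.split(",")]
        gpus.append({
            "index": int(index),
            "name": name,
            "memory_used_mib": int(used),
            "memory_total_mib": int(total),
        })
    return gpus


def query_gpus():
    """Return GPU info from nvidia-smi, or None if the tool is not on PATH."""
    if shutil.which("nvidia-smi") is None:
        return None
    result = subprocess.run(
        ["nvidia-smi",
         "--query-gpu=index,name,memory.used,memory.total",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return parse_gpu_csv(result.stdout)


if __name__ == "__main__":
    print(query_gpus())
```

A GPU with little memory in use is usually the one to pick for training.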

- 6. Train the AM (acoustic model)

CUDA_VISIBLE_DEVICES=0 python kantts/bin/train_sambert.py --model_config speech_sambert-hifigan_tts_zh-cn_multisp_pretrain_16k/basemodel_16k/sambert/config.yaml --resume_path speech_sambert-hifigan_tts_zh-cn_multisp_pretrain_16k/basemodel_16k/sambert/ckpt/checkpoint_980000.pth --root_dir training_stage/SSB0009_feats --stage_dir training_stage/SSB0009_sambert_ckpt

You can prefix the command with nohup to keep it running in the background; this step takes a fairly long time.
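In the shell this means prefixing the command with `nohup` and ending it with `&`. If you drive training from a script instead, a similar effect can be sketched in Python by starting the process in its own session and sending its output to a log file. The helper name `launch_detached` is hypothetical, and `start_new_session` is POSIX only.

```python
import subprocess
import sys


def launch_detached(cmd, log_path):
    """Start cmd in its own session, appending stdout and stderr to log_path."""
    log_file = open(log_path, "ab")
    try:
        # start_new_session=True detaches the child from the terminal's
        # session, so closing the terminal does not send it SIGHUP.
        return subprocess.Popen(
            cmd,
            stdout=log_file,
            stderr=subprocess.STDOUT,
            stdin=subprocess.DEVNULL,
            start_new_session=True,
        )
    finally:
        # The child keeps its own copy of the descriptor; close ours.
        log_file.close()


if __name__ == "__main__":
    # Harmless demo command; replace it with the training command as a list.
    proc = launch_detached([sys.executable, "-c", "print('started')"], "train.log")
    print("started process", proc.pid)
```

Progress can then be followed by reading the log file while training continues in the background.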

- 7. Train the voc (vocoder)

CUDA_VISIBLE_DEVICES=7 python kantts/bin/train_hifigan.py --model_config /home/syl01840793/speech_sambert-hifigan_tts_zh-cn_multisp_pretrain_24k/basemodel_24k/hifigan/config.yaml --resume_path /home/syl01840793/speech_sambert-hifigan_tts_zh-cn_multisp_pretrain_24k/basemodel_24k/hifigan/ckpt/checkpoint_2000000.pth --root_dir /home/syl01840793/train_config_test/Training_stage/aida/feat --stage_dir /home/syl01840793/train_config_test/Training_stage/aida/hifigan

In model_config, set the number of training steps to the base model's checkpoint step plus 100k, and save a checkpoint every 10k steps.
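The arithmetic is simple but easy to get wrong by a digit, so here is a small sketch that derives the numbers from the checkpoint file name. The keys `train_max_steps` and `save_interval_steps` are assumed names for the corresponding settings; check them against your own config.yaml. The sketch also assumes a checkpoint is written whenever the step count is a multiple of the save interval.

```python
import os
import re


def checkpoint_step(path):
    """Extract the step number from a name like checkpoint_2000000.pth."""
    match = re.search(r"checkpoint_(\d+)\.pth$", os.path.basename(path))
    if match is None:
        raise ValueError(f"not a checkpoint file name: {path}")
    return int(match.group(1))


def finetune_schedule(resume_path, extra_steps=100_000, save_every=10_000):
    """Compute the target step count and the steps at which saves are expected."""
    start = checkpoint_step(resume_path)
    target = start + extra_steps
    # Assumption: a save happens at every multiple of save_every after start.
    first_save = (start // save_every + 1) * save_every
    return {
        "train_max_steps": target,          # assumed config key name
        "save_interval_steps": save_every,  # assumed config key name
        "expected_saves": list(range(first_save, target + 1, save_every)),
    }


if __name__ == "__main__":
    print(finetune_schedule("basemodel_24k/hifigan/ckpt/checkpoint_2000000.pth"))
```

For the vocoder base checkpoint at step 2000000, this gives a target of 2100000 steps, with ten intermediate saves from 2010000 to 2100000.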

- 8. Synthesize speech

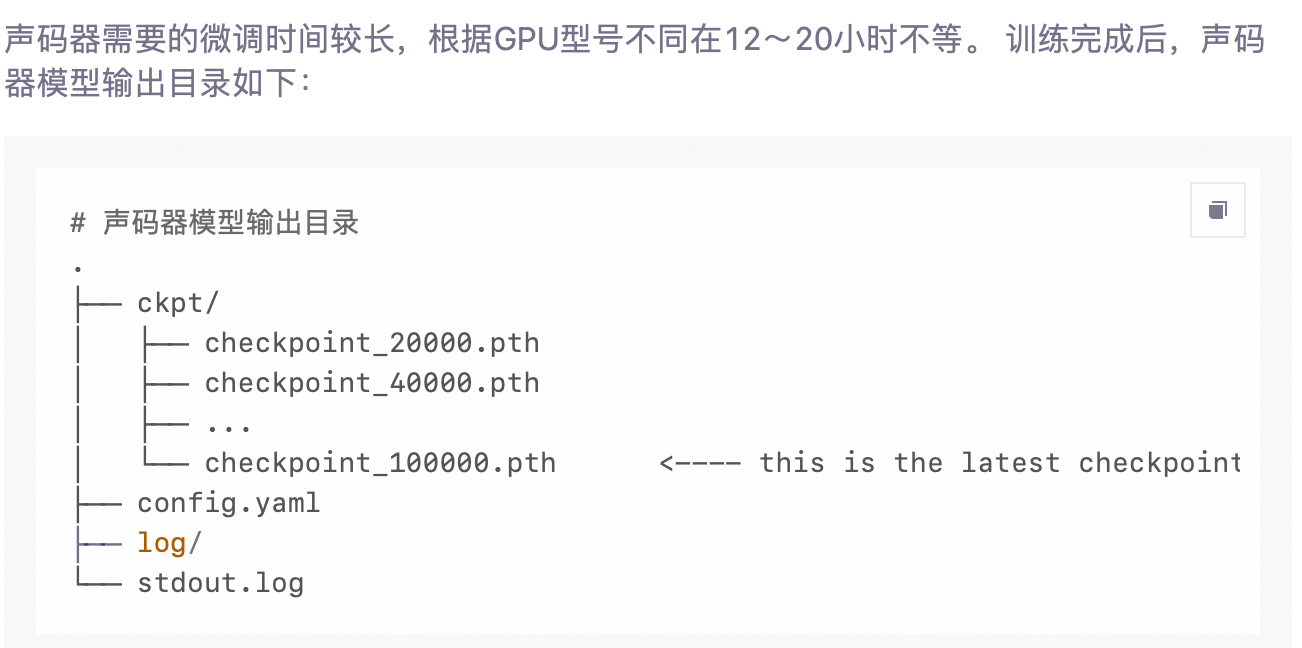

When voc training finishes, it outputs these files.

Under hifigan/ckpt, find the latest checkpoint (the one with the largest step number).
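Picking the latest checkpoint by eye works, but sorting the names alphabetically does not: as strings, `checkpoint_990000.pth` sorts after `checkpoint_2100000.pth`. A minimal sketch that compares the step numbers numerically, assuming files are named `checkpoint_<step>.pth` as in the commands in this document (the helper name `latest_checkpoint` is hypothetical):

```python
import os
import re

_CKPT_PATTERN = re.compile(r"^checkpoint_(\d+)\.pth$")


def latest_checkpoint(ckpt_dir):
    """Return the path of the checkpoint with the largest step, or None."""
    best_step, best_path = -1, None
    for name in os.listdir(ckpt_dir):
        match = _CKPT_PATTERN.match(name)
        # Compare steps as integers, not as strings.
        if match and int(match.group(1)) > best_step:
            best_step = int(match.group(1))
            best_path = os.path.join(ckpt_dir, name)
    return best_path


if __name__ == "__main__":
    ckpt_dir = "training_stage/SSB0009_hifigan_ckpt/ckpt"
    if os.path.isdir(ckpt_dir):
        print(latest_checkpoint(ckpt_dir))
```

The returned path is what you would pass as `--voc_ckpt` (or `--am_ckpt` for the acoustic model directory).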

Run speech synthesis:

CUDA_VISIBLE_DEVICES=0 python kantts/bin/text_to_wav.py --txt test.txt --output_dir res/SSB0009_syn --res_zip speech_sambert-hifigan_tts_zh-cn_multisp_pretrain_16k/resource.zip --am_ckpt training_stage/SSB0009_sambert_ckpt/ckpt/checkpoint_1100000.pth --voc_ckpt training_stage/SSB0009_hifigan_ckpt/ckpt/checkpoint_2100000.pth --speaker SSB0009

Synthesize the speech, then check its quality in Audition.