1、在外部使用java代码对数据进行清洗,并存储到一个新的txt文件中(需要提前创建好)

package org.example;

import java.io.BufferedReader;

import java.io.BufferedWriter;

import java.io.File;

import java.io.FileNotFoundException;

import java.io.FileOutputStream;

import java.io.FileReader;

import java.io.IOException;

import java.io.OutputStreamWriter;

import java.io.UnsupportedEncodingException;

import java.io.Writer;

public class CleanData {

public static void main(String[] args) throws FileNotFoundException, UnsupportedEncodingException {

FileReader read = new FileReader("F:\\Data\\result.txt");

BufferedReader br = new BufferedReader(read);

Writer writer = null;

File outFile = new File("F:\\Data\\result2.txt");

writer = new OutputStreamWriter(new FileOutputStream(outFile),"utf-8");

BufferedWriter bw = new BufferedWriter(writer);

String row;

String[] data=new String[6];

int hang=1;

try {

while((row = br.readLine())!=null){

data=change(row);

data=chage(data);

for(int i=0;i<data.length;i++) {

System.out.print(data[i]+"\t");

}

System.out.println();

row=data[0]+","+data[1]+","+data[2]+","+data[3]+","+data[4]+","+data[5];

bw.write(row + "\r\n");

//i++;

}

} catch (IOException e) {

// TODO Auto-generated catch block

e.printStackTrace();

}

}

private static String[] chage(String[] data) {

/*

* for(int i=0;i<data.length;i++) { data[] }

*/

data[0]=data[0];

char[] str=data[1].toCharArray();

String[] time=new String[7];

int j=0;

int k=0;

for(int i=0;i<str.length;i++) {

if(str[i]=='/'||str[i]==':'||str[i]==32) {

time[k]=data[1].substring(j,i);

j=i+1;

k++;

}

}

time[k]=data[1].substring(j, data[1].length());

switch(time[1]) { case "Jan":time[1]="01";break; case

"Feb":time[1]="02";break; case "Mar":time[1]="03";break; case

"Apr":time[1]="04";break; case "May":time[1]="05";break; case

"Jun":time[1]="06";break; case "Jul":time[1]="07";break; case

"Aug":time[1]="08";break; case "Sep":time[1]="09";break; case

"Oct":time[1]="10";break; case "Nov":time[1]="11";break; case

"Dec":time[1]="12";break; }

data[1]=time[2]+"-"+time[1]+"-"+time[0]+" "+time[3]+":"+time[4]+":"+time[5];

data[3]=data[3].substring(0, data[3].length()-1);

return data;

}

private static String [] change(String row) {

char [] str1=row.toCharArray();

String [] data =new String [6];

int j=0;

int k=0;

for(int i=0;i<str1.length;i++) {

if(str1[i]==',') {

data[k]=row.substring(j, i);

j=i+1;

k++;

}

}

data[k]=row.substring(j, str1.length);

return data;

}

}

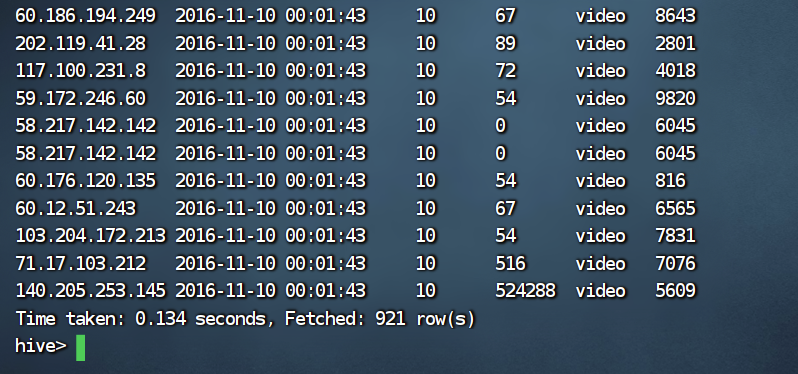

2、将数据文件加载到hive表中

create table result1(

ip String,

time1 String,

day String,

traffic String,

type String,

id String)

row format delimited fields terminated by ',' stored as textfile;

3、数据分析处理

1、统计最受欢迎的视频/文章的Top10访问次数 (video/article)

create table result2_article(

id String,

count String)

row format delimited fields terminated by ',' stored as textfile;

insert overwrite table result2_article select id,count(*) as count from result1 where type='article' group by id order by count desc limit 10;

我是将文章和视频的类型分开写的,大致略过,这么写的话,文章类型只有5条数据;

create table result2_video(

id String,

count String)

row format delimited fields terminated by ',' stored as textfile;

insert overwrite table result2_video select id,count(*) as count from result1 where type='video' group by id order by count desc limit 10;

2、按照地市统计最受欢迎的Top10课程 (ip)

create table result2_ip(

id String,

ip String,

count String)

row format delimited fields terminated by ',' stored as textfile;

insert overwrite table result2_ip select id,ip,count(*) as count from result1 group by id,ip order by count desc limit 10;

3、按照流量统计最受欢迎的Top10课程 (traffic)

create table result2_traffic(

id String,

time1 String,

traffic String,

count String)

row format delimited fields terminated by ',' stored as textfile;

insert overwrite table result2_traffic select id,time1,traffic,count(*) as count from result1 group by id,time1,traffic order by count desc limit 10;

4、在mysql中建立结构相同的表

5、利用sqoop将数据传到mysql中

bin/sqoop export \

--connect jdbc:mysql://node1:3306/transport \

--username root \

--password hadoop \

--table result2_article \

--num-mappers 1 \

--export-dir /user/hive/warehouse/sss.db/result2_article\

--input-fields-terminated-by ','

CREATE TEMPORARY FUNCTION dboutput AS 'org.apache.hadoop.hive.contrib.genericudf.example.GenericUDFDBOutput';

select dboutput('jdbc:mysql://node1:3306/transport?useSSL=false','root','hadoop','INSERT INTO result2_article(id,count) VALUES (?,?)',id,count) from result2_article;

select dboutput('jdbc:mysql://node1:3306/transport?useSSL=false','root','hadoop','INSERT INTO result2_video(id,count) VALUES (?,?)',id,count) from result2_video;

select dboutput('jdbc:mysql://node1:3306/transport?useSSL=false','root','hadoop','INSERT INTO result2_ip(id,ip,count) VALUES (?,?,?)',id,ip,count) from result2_ip;

select dboutput('jdbc:mysql://node1:3306/transport?useSSL=false','root','hadoop','INSERT INTO result2_traffic(id,time1,traffic,count) VALUES (?,?,?,?)',id,time1,traffic,count) from result2_traffic;

完美实现!