%%time

from utils.utils import create_dataset, Trainer

from layer.layer import Embedding, FeaturesEmbedding, EmbeddingsInteraction, MultiLayerPerceptron

import torch

import torch.nn as nn

import torch.optim as optim

device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')

print('Training on [{}].'.format(device))

Training on [cpu].

CPU times: user 2.59 s, sys: 1.43 s, total: 4.03 s

Wall time: 1.95 s

%%time

dataset = create_dataset('lrmovielens100k', read_part=False, sample_num=1000000, device=device)

field_dims, (train_X, train_y), (valid_X, valid_y), (test_X, test_y) = dataset.train_valid_test_split()

CPU times: user 933 ms, sys: 30.5 ms, total: 964 ms

Wall time: 1.38 s

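class FactorizationMachine(nn.Module):
    """Second-order factorization machine with a sigmoid output."""

    def __init__(self, field_dims, embed_dim=4):
        super(FactorizationMachine, self).__init__()
        # First-order (linear) weights: one scalar per feature value.
        self.embed1 = FeaturesEmbedding(field_dims, 1)
        # Second-order latent vectors: one embed_dim vector per feature value.
        self.embed2 = FeaturesEmbedding(field_dims, embed_dim)
        self.bias = nn.Parameter(torch.zeros((1, )))

    def forward(self, x):
        # x shape: (batch_size, num_fields)
        # embed shape: (batch_size, num_fields, embed_dim)
        embed = self.embed2(x)  # look up the latent vectors once and reuse them
        square_sum = embed.sum(dim=1).pow(2).sum(dim=1)
        sum_square = embed.pow(2).sum(dim=1).sum(dim=1)
        # Linear term + bias + pairwise interactions, each of shape (batch_size,)
        output = self.embed1(x).squeeze(-1).sum(dim=1) + self.bias + (square_sum - sum_square) / 2
        output = torch.sigmoid(output).unsqueeze(-1)  # (batch_size, 1)
        return output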
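The `forward` method above computes the pairwise interaction term as half of (square of the sum minus sum of the squares) of the field embeddings, which equals the sum of dot products over all pairs of fields but costs linear rather than quadratic time in the number of fields. As a minimal sketch, the following plain Python check (no PyTorch; the helper names `pairwise_naive` and `pairwise_fast` are hypothetical and not part of `layer.layer`) compares both forms on random vectors for a single sample.

```python
import random
from itertools import combinations


def pairwise_naive(vectors):
    """Sum of dot products over all unordered pairs of field embeddings."""
    return sum(
        sum(a * b for a, b in zip(u, v))
        for u, v in combinations(vectors, 2)
    )


def pairwise_fast(vectors):
    """Same quantity via 0.5 * (square of sum - sum of squares), per dimension."""
    embed_dim = len(vectors[0])
    total = 0.0
    for d in range(embed_dim):
        column = [v[d] for v in vectors]
        square_of_sum = sum(column) ** 2
        sum_of_squares = sum(c * c for c in column)
        total += square_of_sum - sum_of_squares
    return total / 2


random.seed(0)
# One sample with 5 fields and embedding dimension 4 (illustrative sizes).
sample = [[random.uniform(-1, 1) for _ in range(4)] for _ in range(5)]
assert abs(pairwise_naive(sample) - pairwise_fast(sample)) < 1e-9
```

The tensor version in `forward` does the same thing for a whole batch at once: `sum(dim=1)` plays the role of the sum over fields, and the final `sum(dim=1)` adds up the embedding dimensions.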

%%time

EMBEDDING_DIM = 8

LEARNING_RATE = 1e-4

REGULARIZATION = 1e-6

BATCH_SIZE = 4096

EPOCH = 600

TRIAL = 100

fm = FactorizationMachine(field_dims, EMBEDDING_DIM).to(device)

optimizer = optim.Adam(fm.parameters(), lr=LEARNING_RATE, weight_decay=REGULARIZATION)

criterion = nn.BCELoss()

trainer = Trainer(fm, optimizer, criterion, BATCH_SIZE)

trainer.train(train_X, train_y, epoch=EPOCH, trials=TRIAL, valid_X=valid_X, valid_y=valid_y)

test_loss, test_metric = trainer.test(test_X, test_y)

print('test_loss: {:.5f} | test_metric: {:.5f}'.format(test_loss, test_metric))

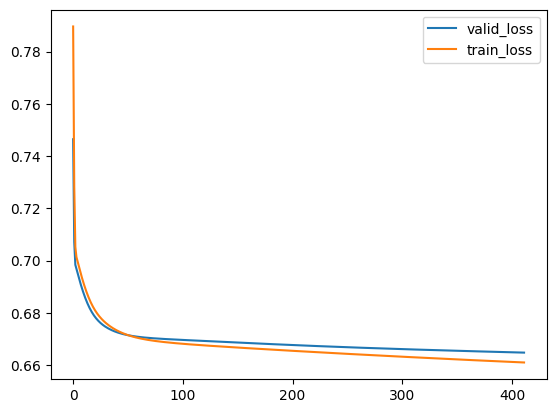

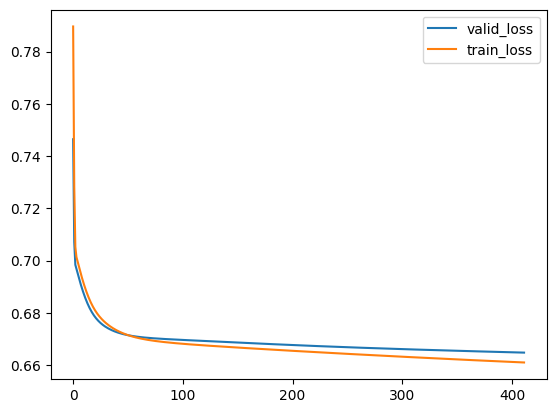

68%|█████████████████████████████████████████▊ | 411/600 [03:54<01:47, 1.75it/s]

train_loss: 0.66286 | train_metric: 0.60023

valid_loss: 0.66593 | valid_metric: 0.59360

test_loss: 0.66421 | test_metric: 0.59830

CPU times: user 15min 34s, sys: 1.09 s, total: 15min 35s

Wall time: 3min 55s
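Training stopped at iteration 411 of 600, which is consistent with `trials` acting as an early-stopping patience: training ends once the validation loss has not improved for `trials` consecutive epochs. `Trainer` is not shown here, so this is an assumption about its behavior rather than a description of it. The sketch below illustrates that rule in plain Python with a hypothetical helper, `early_stop_epoch`.

```python
def early_stop_epoch(valid_losses, trials):
    """Return the 1-based epoch at which training would stop.

    Stops after `trials` consecutive epochs without a new best validation
    loss; returns len(valid_losses) if that never happens.
    """
    best = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(valid_losses, start=1):
        if loss < best:
            best = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= trials:
                return epoch
    return len(valid_losses)


# Loss improves for three epochs, then plateaus; a patience of 2 stops at epoch 5.
print(early_stop_epoch([0.70, 0.68, 0.67, 0.67, 0.68, 0.66], trials=2))  # prints 5
```

Under this reading, `TRIAL = 100` would mean the best validation loss was reached roughly 100 epochs before the run ended.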

from sklearn import metrics

test_loss, test_metric = trainer.test(test_X, test_y, metricer=metrics.precision_score)

print('test_loss: {:.5f} | test_metric: {:.5f}'.format(test_loss, test_metric))

test_loss: 0.66421 | test_metric: 0.61538

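class LogisticRegression(nn.Module):
    """Logistic regression baseline: the FM above without the interaction term."""

    def __init__(self, field_dims):
        super(LogisticRegression, self).__init__()
        self.bias = nn.Parameter(torch.zeros((1, )))
        # One scalar weight per feature value.
        self.embed = FeaturesEmbedding(field_dims, 1)

    def forward(self, x):
        # x shape: (batch_size, num_fields)
        # embed(x) shape: (batch_size, num_fields, 1)
        output = self.embed(x).sum(dim=1) + self.bias  # (batch_size, 1)
        output = torch.sigmoid(output)
        return output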

%%time

LEARNING_RATE = 1e-4

REGULARIZATION = 1e-6

BATCH_SIZE = 4096

EPOCH = 600

TRIAL = 100

lr = LogisticRegression(field_dims).to(device)

optimizer = optim.Adam(lr.parameters(), lr=LEARNING_RATE, weight_decay=REGULARIZATION)

criterion = nn.BCELoss()

trainer = Trainer(lr, optimizer, criterion, BATCH_SIZE)

trainer.train(train_X, train_y, epoch=EPOCH, trials=TRIAL, valid_X=valid_X, valid_y=valid_y)

test_loss, test_metric = trainer.test(test_X, test_y)

print('test_loss: {:.5f} | test_metric: {:.5f}'.format(test_loss, test_metric))

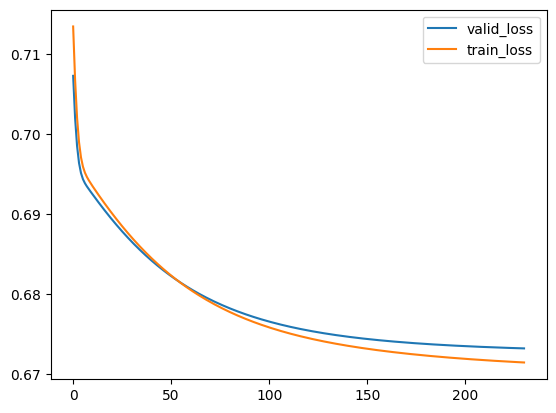

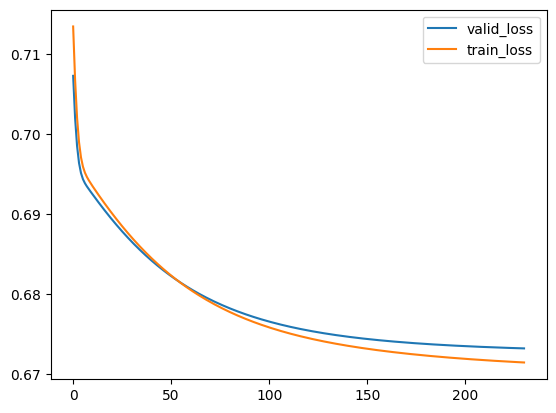

38%|███████████████████████▍ | 230/600 [00:21<00:34, 10.76it/s]

train_loss: 0.67392 | train_metric: 0.57983

valid_loss: 0.67499 | valid_metric: 0.58050

test_loss: 0.67422 | test_metric: 0.57600

CPU times: user 1min 25s, sys: 239 ms, total: 1min 25s

Wall time: 21.6 s

from sklearn import metrics

test_loss, test_metric = trainer.test(test_X, test_y, metricer=metrics.precision_score)

print('test_loss: {:.5f} | test_metric: {:.5f}'.format(test_loss, test_metric))

test_loss: 0.67422 | test_metric: 0.58674