I. Prometheus-Based HPA Autoscaling

1. Background

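- Kubernetes clusters are large and change rapidly. With the spread of containerized deployment and service-governance mechanisms, traditional infrastructure monitoring can no longer meet the monitoring needs of Kubernetes clusters.

- Monitoring tools designed specifically for Kubernetes are needed to track cluster state and service quality.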

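Prometheus is currently one of the most widely used monitoring tools for Kubernetes clusters, and it can monitor a cluster down to the application level. Horizontal autoscaling with the HorizontalPodAutoscaler works differently. It relies on the core resource metrics that metrics-server exposes through the Kubernetes Metrics API (metrics.k8s.io), not on data scraped by Prometheus.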

2. Metrics Server

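Metrics Server collects the core Kubernetes resource metrics. It periodically pulls data from the kubelet on every node. Its impact on overall cluster performance is minimal: roughly 1m (one millicore) of CPU and 2 MB of memory per node, which makes it very cost-effective.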

How Metrics Server works

The architecture components in the figure, from right to left:

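- cAdvisor: a daemon that collects, aggregates, and exposes the container metrics included in the kubelet.

- kubelet: the node agent that manages container resources. Resource metrics can be accessed through the /metrics/resource and /stats kubelet API endpoints.

- Summary API: an API provided by the kubelet for discovering and retrieving per-node summarized stats, available through the /stats endpoint.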

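- metrics-server: a cluster add-on that collects and aggregates the resource metrics pulled from each kubelet. The API server serves the Metrics API for use by HPA, VPA, and the kubectl top command. Metrics Server is the reference implementation of the Metrics API.

- Metrics API: a Kubernetes API that provides access to the CPU and memory usage used for workload autoscaling. For this to work in your cluster, you need an API extension server that provides the Metrics API.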
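You can fetch the raw Metrics API response behind `kubectl top` with `kubectl get --raw /apis/metrics.k8s.io/v1beta1/nodes`. In that JSON, the usage values are Kubernetes quantity strings, for example CPU in nanocores with an `n` suffix and memory in `Ki`. The Python sketch below converts them to millicores and MiB. The sample response is hypothetical, chosen to match the node-1-231 row shown later. `parse_quantity` is a simplified helper that handles only the common suffixes, not the full quantity grammar; exponent forms such as `1e3` are not supported.

```python
import json

# Multipliers for the suffixes most often seen in Metrics API responses
# (simplified: exponent forms like "1e3" are not supported)
SUFFIXES = {
    "n": 1e-9, "u": 1e-6, "m": 1e-3,
    "k": 1e3, "M": 1e6, "G": 1e9,
    "Ki": 2**10, "Mi": 2**20, "Gi": 2**30,
}


def parse_quantity(q: str) -> float:
    """Convert a quantity such as '465m' or '1905Mi' into a plain number."""
    # Check two-letter suffixes first so that 'Mi' is not read as 'M'
    for length in (2, 1):
        suffix = q[-length:]
        if len(q) > length and suffix in SUFFIXES:
            return float(q[:-length]) * SUFFIXES[suffix]
    return float(q)


def summarize_nodes(body: str) -> dict:
    """Map node name -> (CPU millicores, memory MiB) from a NodeMetricsList body."""
    result = {}
    for item in json.loads(body)["items"]:
        usage = item["usage"]
        cpu_millicores = parse_quantity(usage["cpu"]) * 1000
        memory_mib = parse_quantity(usage["memory"]) / 2**20
        result[item["metadata"]["name"]] = (round(cpu_millicores), round(memory_mib))
    return result


# Hypothetical response body, trimmed to the fields used above
sample = ('{"kind": "NodeMetricsList", "items": [{"metadata": {"name": "node-1-231"},'
          ' "usage": {"cpu": "427123456n", "memory": "1684480Ki"}}]}')
print(summarize_nodes(sample))  # {'node-1-231': (427, 1645)}
```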

2.1 Deploying and configuring Metrics Server

Metrics Server project page: https://github.com/kubernetes-sigs/metrics-server

Releases: https://github.com/kubernetes-sigs/metrics-server/releases

wget https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.6.4/components.yaml && mv components.yaml metrics-server.yaml

Then edit the YAML file. Here the --kubelet-insecure-tls argument is added to the Deployment args:


[root@master-1-230 7.3]# cat metrics-server.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
    rbac.authorization.k8s.io/aggregate-to-admin: "true"
    rbac.authorization.k8s.io/aggregate-to-edit: "true"
    rbac.authorization.k8s.io/aggregate-to-view: "true"
  name: system:aggregated-metrics-reader
rules:
- apiGroups:
  - metrics.k8s.io
  resources:
  - pods
  - nodes
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
rules:
- apiGroups:
  - ""
  resources:
  - nodes/metrics
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - pods
  - nodes
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server-auth-reader
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: extension-apiserver-authentication-reader
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server:system:auth-delegator
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:auth-delegator
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:metrics-server
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: v1
kind: Service
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  ports:
  - name: https
    port: 443
    protocol: TCP
    targetPort: https
  selector:
    k8s-app: metrics-server
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  selector:
    matchLabels:
      k8s-app: metrics-server
  strategy:
    rollingUpdate:
      maxUnavailable: 0
  template:
    metadata:
      labels:
        k8s-app: metrics-server
    spec:
      containers:
      - args:
        - --kubelet-insecure-tls
        - --cert-dir=/tmp
        - --secure-port=4443
        - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
        - --kubelet-use-node-status-port
        - --metric-resolution=15s
        image: registry.k8s.io/metrics-server/metrics-server:v0.6.4
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /livez
            port: https
            scheme: HTTPS
          periodSeconds: 10
        name: metrics-server
        ports:
        - containerPort: 4443
          name: https
          protocol: TCP
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /readyz
            port: https
            scheme: HTTPS
          initialDelaySeconds: 20
          periodSeconds: 10
        resources:
          requests:
            cpu: 100m
            memory: 200Mi
        securityContext:
          allowPrivilegeEscalation: false
          readOnlyRootFilesystem: true
          runAsNonRoot: true
          runAsUser: 1000
        volumeMounts:
        - mountPath: /tmp
          name: tmp-dir
      nodeSelector:
        kubernetes.io/os: linux
      priorityClassName: system-cluster-critical
      serviceAccountName: metrics-server
      volumes:
      - emptyDir: {}
        name: tmp-dir
---
apiVersion: apiregistration.k8s.io/v1
kind: APIService
metadata:
  labels:
    k8s-app: metrics-server
  name: v1beta1.metrics.k8s.io
spec:
  group: metrics.k8s.io
  groupPriorityMinimum: 100
  insecureSkipTLSVerify: true
  service:
    name: metrics-server
    namespace: kube-system
  version: v1beta1
  versionPriority: 100

By default, Metrics Server talks to the kubelet over TLS and must verify the kubelet's certificate. Because this test runs on an internal network, certificate verification is disabled with --kubelet-insecure-tls in the Deployment args above. Do not do this in production.

Apply the YAML:

[root@master-1-230 7.3]# kubectl apply -f metrics-server.yaml

serviceaccount/metrics-server created

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created

clusterrole.rbac.authorization.k8s.io/system:metrics-server created

rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created

clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created

clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created

service/metrics-server created

deployment.apps/metrics-server created

apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created

Verify:

[root@master-1-230 7.3]# kubectl top node

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

master-1-230 465m 11% 1905Mi 69%

node-1-231 427m 7% 1645Mi 16%

node-1-232 358m 5% 1168Mi 15%

node-1-233 270m 4% 1129Mi 14%

[root@master-1-230 7.3]#

[root@master-1-230 7.3]# kubectl top node -n kube-system

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

master-1-230 465m 11% 1905Mi 69%

node-1-231 427m 7% 1645Mi 16%

node-1-232 358m 5% 1168Mi 15%

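node-1-233 270m 4% 1129Mi 14%

Nodes are cluster-scoped, so -n kube-system has no effect here and the output is identical. To see per-Pod usage in a namespace, use kubectl top pod -n kube-system.

3. HorizontalPodAutoscaler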

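HorizontalPodAutoscaler (HPA) is a Kubernetes controller that dynamically adjusts the number of Pod replicas. It scales on the metrics provided by Metrics Server, such as CPU and memory utilization, or on custom metrics, such as requests per second. This keeps the application supplied with enough resources without wasting them.

HPA is one of the most commonly used scaling features in Kubernetes, especially for scaling applications out automatically during load peaks.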

3.1 Using HorizontalPodAutoscaler

Create an Nginx application, defining a Deployment and a Service as the target of autoscaling:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: ngx-hpa-dep
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ngx-hpa-dep
  template:
    metadata:
      labels:
        app: ngx-hpa-dep
    spec:
      containers:
      - image: nginx:alpine
        name: nginx
        ports:
        - containerPort: 80
        resources:
          requests:
            cpu: 50m
            memory: 10Mi
          limits:
            cpu: 100m
            memory: 20Mi
---
apiVersion: v1
kind: Service
metadata:
  name: ngx-hpa-svc
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: ngx-hpa-dep

[root@master-1-230 7.3]# kubectl apply -f nginx-demo-hpa.yaml

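deployment.apps/ngx-hpa-dep created

service/ngx-hpa-svc created

Note that the container spec must declare resource requests in the resources field. HPA computes CPU utilization as a percentage of the requested CPU. Without requests it cannot obtain a usable metric for the Pods and cannot scale automatically.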

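Next, use kubectl autoscale to generate a sample HorizontalPodAutoscaler YAML file. It takes three parameters:

- min: the minimum number of Pods, i.e. the lower bound for scaling in

- max: the maximum number of Pods, i.e. the upper bound for scaling out

- cpu-percent: the target average CPU utilization, as a percentage of the Pods' CPU requests; the HPA scales out when utilization is above this value and scales in when it is below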
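The Kubernetes HPA documentation gives the core calculation as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). CPU utilization is measured against the Pods' CPU requests, and changes within a default tolerance of 10% are ignored. The Python sketch below applies that formula and clamps the result to --min/--max. It ignores details the real controller handles, such as unready Pods, missing metrics, and scaling policies. The usage numbers are made up for illustration.

```python
import math


def cpu_utilization(usage_millicores: list[float], request_millicores: float) -> float:
    """Average CPU usage of the Pods as a percentage of their CPU request."""
    return 100 * sum(usage_millicores) / (len(usage_millicores) * request_millicores)


def desired_replicas(current: int, utilization: float, target: float,
                     min_replicas: int, max_replicas: int,
                     tolerance: float = 0.1) -> int:
    """Replica count suggested by the HPA formula, clamped to [min, max]."""
    ratio = utilization / target
    if abs(ratio - 1.0) <= tolerance:
        desired = current  # close enough to the target: keep the current count
    else:
        desired = math.ceil(current * ratio)
    return max(min_replicas, min(max_replicas, desired))


# ngx-hpa-dep: CPU request 50m, target 5%, min 2, max 8 (usage values are made up)
light = cpu_utilization([3, 4], 50)    # 7.0 (%)
busy = cpu_utilization([48, 48], 50)   # 96.0 (%)
print(desired_replicas(2, light, 5, 2, 8))  # 3
print(desired_replicas(2, busy, 5, 2, 8))   # 8, capped by --max
```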

Now create the HorizontalPodAutoscaler for the Nginx application with at least 2 Pods and at most 8. Set the CPU target low (5%) so that scale-out is easy to observe:

kubectl autoscale deploy ngx-hpa-dep --min=2 --max=8 --cpu-percent=5 --dry-run=client -o yaml > nginx-demo-hpa1.yaml

View the YAML file:

[root@master-1-230 7.3]# cat nginx-demo-hpa1.yaml
apiVersion: autoscaling/v1
kind: HorizontalPodAutoscaler
metadata:
  creationTimestamp: null
  name: ngx-hpa-dep
spec:
  maxReplicas: 8
  minReplicas: 2
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: ngx-hpa-dep
  targetCPUUtilizationPercentage: 5
status:
  currentReplicas: 0
  desiredReplicas: 0

After the HorizontalPodAutoscaler is created from this YAML, it finds that the Deployment has only 1 replica. That is below the min lower bound, so it first scales the Deployment up to 2:

[root@master-1-230 7.3]# kubectl apply -f nginx-demo-hpa1.yaml

horizontalpodautoscaler.autoscaling/ngx-hpa-dep created

[root@master-1-230 7.3]# kubectl get deploy

NAME READY UP-TO-DATE AVAILABLE AGE

harbor10-core 1/1 1 1 13d

harbor10-jobservice 1/1 1 1 13d

harbor10-nginx 1/1 1 1 13d

harbor10-portal 1/1 1 1 13d

harbor10-registry 1/1 1 1 13d

nginx 1/1 1 1 6d

ngx-hpa-dep 2/2 2 2 2m59s

3.2 Testing and verification

Now put load on Nginx. Run a test Pod from the httpd:alpine image, which includes the HTTP benchmarking tool ab (Apache Bench).

Then send requests to Nginx for one minute (-c 10 concurrent connections, -t 60 seconds) and use kubectl get hpa to watch how the HorizontalPodAutoscaler behaves:

[root@master-1-230 ~]# kubectl run test -it --image=httpd:alpine -- sh

If you don't see a command prompt, try pressing enter.

/usr/local/apache2 # ab -c 10 -t 60 -n 1000000 'http://ngx-hpa-svc/'

This is ApacheBench, Version 2.3 <$Revision: 1903618 $>

Copyright 1996 Adam Twiss, Zeus Technology Ltd, http://www.zeustech.net/

Licensed to The Apache Software Foundation, http://www.apache.org/

Benchmarking ngx-hpa-svc (be patient)

apr_socket_recv: Connection refused (111)

Total of 14209 requests completed

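/usr/local/apache2 #

Metrics Server collects data roughly every 15 seconds (--metric-resolution=15s), and the HPA controller re-evaluates on a 15-second cycle by default. Scale-out and scale-in therefore proceed step by step at that pace.

Once the HPA sees CPU utilization above the 5% target, it scales out in steps up to the upper limit and then keeps monitoring. Under the default scaling behavior, each 15-second period can at most double the replica count or add 4 Pods, whichever is larger. In the run below, the Deployment grew from 2 to 4 and then to 8 replicas.

When CPU utilization falls back, the HPA scales in to the minimum. By default it waits out a five-minute stabilization window before scaling in, which keeps the replica count from flapping.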

^C[root@master-1-230 ~]# kubectl get po -w |grep ngx

ngx-hpa-dep-5df685854d-9g5d4 1/1 Running 0 6m6s

ngx-hpa-dep-5df685854d-k6792 1/1 Running 0 6m21s

ngx-hpa-dep-5df685854d-fw8wq 0/1 Pending 0 0s

ngx-hpa-dep-5df685854d-tjmjg 0/1 Pending 0 0s

ngx-hpa-dep-5df685854d-fw8wq 0/1 Pending 0 0s

ngx-hpa-dep-5df685854d-tjmjg 0/1 Pending 0 0s

ngx-hpa-dep-5df685854d-tjmjg 0/1 ContainerCreating 0 0s

ngx-hpa-dep-5df685854d-fw8wq 0/1 ContainerCreating 0 0s

ngx-hpa-dep-5df685854d-fw8wq 0/1 ContainerCreating 0 1s

ngx-hpa-dep-5df685854d-tjmjg 0/1 ContainerCreating 0 1s

ngx-hpa-dep-5df685854d-fw8wq 1/1 Running 0 2s

ngx-hpa-dep-5df685854d-tjmjg 1/1 Running 0 3s

ngx-hpa-dep-5df685854d-fdzzx 0/1 Pending 0 0s

ngx-hpa-dep-5df685854d-sz5jl 0/1 Pending 0 0s

ngx-hpa-dep-5df685854d-px99l 0/1 Pending 0 0s

ngx-hpa-dep-5df685854d-fdzzx 0/1 Pending 0 0s

ngx-hpa-dep-5df685854d-sz5jl 0/1 Pending 0 0s

ngx-hpa-dep-5df685854d-px99l 0/1 Pending 0 0s

ngx-hpa-dep-5df685854d-fdzzx 0/1 ContainerCreating 0 0s

ngx-hpa-dep-5df685854d-d5k94 0/1 Pending 0 0s

ngx-hpa-dep-5df685854d-d5k94 0/1 Pending 0 0s

ngx-hpa-dep-5df685854d-px99l 0/1 ContainerCreating 0 0s

ngx-hpa-dep-5df685854d-sz5jl 0/1 ContainerCreating 0 0s

ngx-hpa-dep-5df685854d-d5k94 0/1 ContainerCreating 0 0s

ngx-hpa-dep-5df685854d-fdzzx 0/1 ContainerCreating 0 1s

ngx-hpa-dep-5df685854d-sz5jl 0/1 ContainerCreating 0 1s

ngx-hpa-dep-5df685854d-px99l 0/1 ContainerCreating 0 1s

ngx-hpa-dep-5df685854d-d5k94 0/1 ContainerCreating 0 1s

ngx-hpa-dep-5df685854d-fdzzx 1/1 Running 0 3s

ngx-hpa-dep-5df685854d-px99l 1/1 Running 0 3s

ngx-hpa-dep-5df685854d-d5k94 1/1 Running 0 3s

ngx-hpa-dep-5df685854d-sz5jl 1/1 Running 0 3s
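The pace seen above is governed by the HPA's scaling behavior. Per the Kubernetes documentation, the defaults allow two moves:

- Scale up by 100% of the current replicas or by 4 Pods per 15-second period, whichever is larger.
- Scale down only through a 300-second stabilization window, in which the controller uses the highest recommendation it has seen.

The Python sketch below models just those two rules. It is a simplification under those documented defaults, not the controller's code, and the helper names are hypothetical.

```python
import math


def scale_up_limit(current: int, percent: int = 100, pods: int = 4) -> int:
    """Maximum replicas reachable in one 15 s period (default policies, selectPolicy: Max)."""
    by_percent = current + math.ceil(current * percent / 100)
    by_pods = current + pods
    return max(by_percent, by_pods)


def stabilized_scale_down(recommendations: list[tuple[float, int]], now: float,
                          window: float = 300.0) -> int:
    """Use the highest recommendation made within the stabilization window."""
    recent = [replicas for timestamp, replicas in recommendations
              if now - timestamp <= window]
    return max(recent)


print(scale_up_limit(2))  # 6: adding 4 Pods beats doubling
print(scale_up_limit(8))  # 16: doubling beats adding 4 Pods

# The recommendation was 8 at t=0 s and 2 afterwards; the window keeps 8 until it ages out
history = [(0.0, 8), (100.0, 2), (200.0, 2)]
print(stabilized_scale_down(history, now=250.0))  # 8
print(stabilized_scale_down(history, now=400.0))  # 2
```

In the run above, each step (2 → 4 → 8) stays within these limits. The actual step size also depends on the utilization measured at that moment.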

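4. Summary

- Metrics Server is a Kubernetes component that collects and aggregates the resource usage data scattered across the cluster, such as node CPU and memory usage.

- It serves this data through an API to other Kubernetes components, such as HPA. Metrics Server helps cluster administrators and application developers understand resource usage in the cluster and make sound decisions based on it, such as adjusting Pod replica counts or expanding the cluster.

- Metrics Server is therefore essential for resource management and application scaling in Kubernetes.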

II. A Prometheus-Based Full-Stack Monitoring Platform: Blackbox Monitoring

Monitoring with Prometheus falls into two types:

- Blackbox monitoring

- Whitebox monitoring

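Whitebox monitoring: the runtime data we monitor every day, such as host resource usage and container status.

Blackbox monitoring: common blackbox checks include HTTP probes, TCP probes, DNS, and ICMP. They test the reachability and connectivity of sites and services, as well as their response time.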

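Comparison:

- Blackbox monitoring is failure-oriented: when a failure occurs, it detects it quickly.

- Whitebox monitoring focuses on proactively discovering or predicting potential problems.

A complete monitoring setup should uncover potential problems from the whitebox side and quickly detect problems that have already occurred from the blackbox side.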

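Currently supported scenarios:

- ICMP test: host liveness checks

- TCP test:

  - listening state of business component ports

  - defining and checking application-layer protocols

- HTTP test:

  - defining Request Header information

  - checking the HTTP status, HTTP Response Headers, and HTTP body content

- POST test: API connectivity

- SSL certificate expiry time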

1. Deploying Blackbox Exporter

For the exporter ConfigMap definition, refer to the following two documents:

- blackbox_exporter/CONFIGURATION.md (prometheus/blackbox_exporter on GitHub)

- blackbox_exporter/example.yml (prometheus/blackbox_exporter on GitHub)

First, declare a Blackbox Deployment and use a ConfigMap to provide its configuration file.

ConfigMap:

This is based on the example configuration file in the Blackbox Exporter GitHub repository (blackbox_exporter/example.yml):

[root@master-1-230 7.4]# cat blackbox-configmap.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: blackbox-exporter
  namespace: monitor
  labels:
    app: blackbox-exporter
data:
  blackbox.yml: |-
    modules:
      ## ----------- DNS probe -----------
      dns_tcp:
        prober: dns
        dns:
          transport_protocol: "tcp"
          preferred_ip_protocol: "ip4"
          query_name: "kubernetes.default.svc.cluster.local"  # name queried to check that DNS resolution works
          query_type: "A"
      ## ----------- TCP probe module -----------
      tcp_connect:
        prober: tcp
        timeout: 5s
      ## ----------- ICMP probe -----------
      icmp:
        prober: icmp
        timeout: 5s
        icmp:
          preferred_ip_protocol: "ip4"
      ## ----------- HTTP GET 2xx probe module -----------
      http_get_2xx:
        prober: http
        timeout: 10s
        http:
          method: GET
          preferred_ip_protocol: "ip4"
          valid_http_versions: ["HTTP/1.1","HTTP/2"]
          valid_status_codes: [200]  # accepted HTTP status codes (default: 2xx)
          no_follow_redirects: false  # whether NOT to follow redirects
      ## ----------- HTTP GET 3xx probe module -----------
      http_get_3xx:
        prober: http
        timeout: 10s
        http:
          method: GET
          preferred_ip_protocol: "ip4"
          valid_http_versions: ["HTTP/1.1","HTTP/2"]
          valid_status_codes: [301,302,304,305,306,307]  # accepted HTTP status codes (default: 2xx)
          no_follow_redirects: true  # do not follow redirects, otherwise the final (usually 200) status would be checked instead of the 3xx
      ## ----------- HTTP POST probe module -----------
      http_post_2xx:
        prober: http
        timeout: 10s
        http:
          method: POST
          preferred_ip_protocol: "ip4"
          valid_http_versions: ["HTTP/1.1", "HTTP/2"]
          #headers:                            # HTTP headers
          #  Content-Type: application/json
          #body: '{}'                          # request body

Deployment:

[root@master-1-230 7.4]# cat blackbox-exporter.yaml
apiVersion: v1
kind: Service
metadata:
  name: blackbox-exporter
  namespace: monitor
  labels:
    k8s-app: blackbox-exporter
spec:
  type: ClusterIP
  ports:
  - name: http
    port: 9115
    targetPort: 9115
  selector:
    k8s-app: blackbox-exporter
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: blackbox-exporter
  namespace: monitor
  labels:
    k8s-app: blackbox-exporter
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s-app: blackbox-exporter
  template:
    metadata:
      labels:
        k8s-app: blackbox-exporter
    spec:
      containers:
      - name: blackbox-exporter
        image: prom/blackbox-exporter:v0.21.0
        imagePullPolicy: IfNotPresent
        args:
        - --config.file=/etc/blackbox_exporter/blackbox.yml
        - --web.listen-address=:9115
        - --log.level=info
        ports:
        - name: http
          containerPort: 9115
        resources:
          limits:
            cpu: 200m
            memory: 256Mi
          requests:
            cpu: 100m
            memory: 50Mi
        livenessProbe:
          tcpSocket:
            port: 9115
          initialDelaySeconds: 5
          timeoutSeconds: 5
          periodSeconds: 10
          successThreshold: 1
          failureThreshold: 3
        readinessProbe:
          tcpSocket:
            port: 9115
          initialDelaySeconds: 5
          timeoutSeconds: 5
          periodSeconds: 10
          successThreshold: 1
          failureThreshold: 3
        volumeMounts:
        - name: config
          mountPath: /etc/blackbox_exporter
      volumes:
      - name: config
        configMap:
          name: blackbox-exporter
          defaultMode: 420

Apply the YAML files:

[root@master-1-230 7.4]# kubectl apply -f blackbox-configmap.yaml

configmap/blackbox-exporter created

[root@master-1-230 7.4]#

[root@master-1-230 7.4]# kubectl apply -f blackbox-exporter.yaml

service/blackbox-exporter created

deployment.apps/blackbox-exporter created

Check the deployed resources:

[root@master-1-230 7.4]# kubectl get all -n monitor |grep blackbox

pod/blackbox-exporter-5b4f75cf4c-rzx2h 1/1 Running 0 34s

service/blackbox-exporter ClusterIP 10.100.39.179 <none> 9115/TCP 35s

deployment.apps/blackbox-exporter 1/1 1 1 35s

replicaset.apps/blackbox-exporter-5b4f75cf4c 1 1 1 34s

2. DNS monitoring

- job_name: "kubernetes-dns"
  metrics_path: /probe  # /probe, not /metrics
  params:
    module: [dns_tcp]  # use the DNS-over-TCP module
  static_configs:
    - targets:
      - kube-dns.kube-system:53  # do not omit the port number
      - 8.8.4.4:53
      - 8.8.8.8:53
      - 223.5.5.5:53
  relabel_configs:
    - source_labels: [__address__]
      target_label: __param_target
    - source_labels: [__param_target]
      target_label: instance
    - target_label: __address__
      replacement: blackbox-exporter.monitor:9115  # exporter address; must match the Service defined above

Parameter explanation (the same job, annotated):

################ DNS server monitoring ###################
- job_name: "kubernetes-dns"
  metrics_path: /probe
  params:
    ## Module to use; it must match a module in the Blackbox Exporter config
    ## Here the DNS module is used
    module: [dns_tcp]
  static_configs:
    ## Addresses to probe
    - targets:
      - kube-dns.kube-system:53
      - 8.8.4.4:53
      - 8.8.8.8:53
      - 223.5.5.5:53
  relabel_configs:
    ## Copy each static DNS server address into "__param_target",
    ## which Prometheus sends to the exporter as the "target" URL parameter
    - source_labels: [__address__]
      target_label: __param_target
    ## Use the "__param_target" value as the instance name
    - source_labels: [__param_target]
      target_label: instance
    ## Service address of the Blackbox Exporter
    - target_label: __address__
      replacement: blackbox-exporter.monitor:9115

Update the prometheus-config.yaml configuration and reload Prometheus:

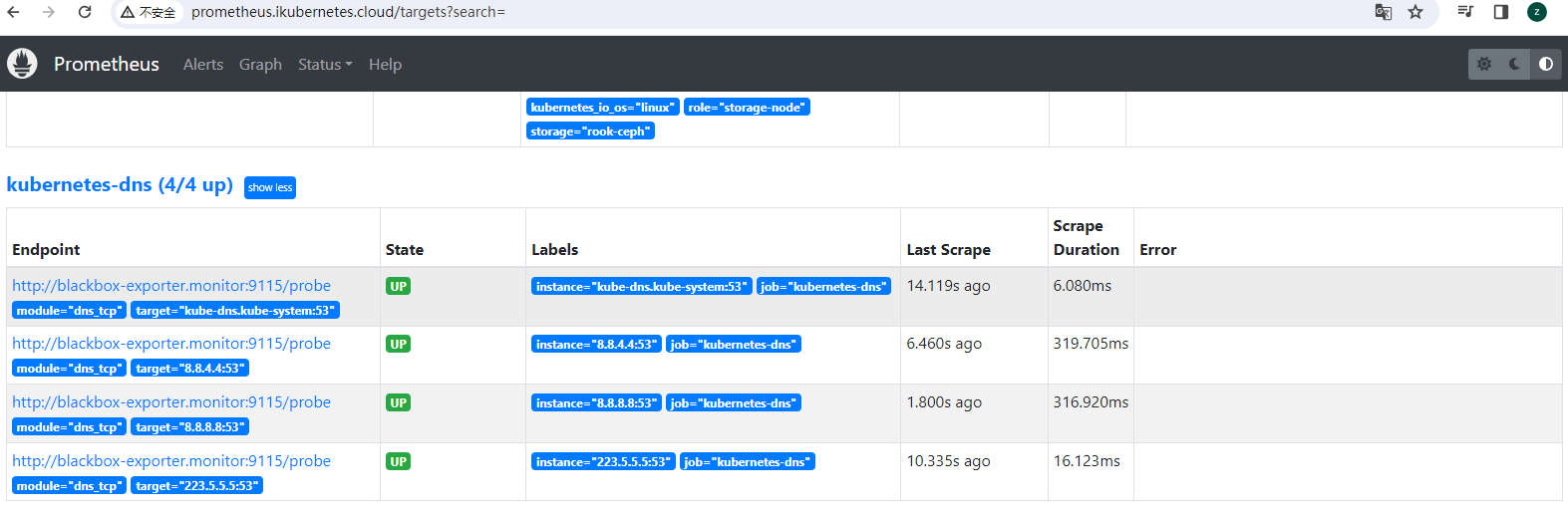

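curl -XPOST http://prometheus.ikubernetes.cloud/-/reload

Open the Prometheus Target page to see the kubernetes-dns job defined above. The /-/reload endpoint is only enabled when Prometheus runs with the --web.enable-lifecycle flag.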
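The three relabel rules are what turn a static target into a Blackbox probe. Prometheus scrapes the exporter at `__address__` and passes the original address as the `target` query parameter (from `__param_target`), together with `module` from `params`. The Python sketch below replays those rules for one DNS target and builds the resulting scrape URL. It illustrates the label flow only and is not Prometheus code. The parameter order Prometheus actually uses in the query string may differ.

```python
from urllib.parse import urlencode


def relabel_for_blackbox(address: str,
                         exporter: str = "blackbox-exporter.monitor:9115") -> dict:
    """Apply the job's three relabel rules to one static target."""
    labels = {"__address__": address}
    labels["__param_target"] = labels["__address__"]  # [__address__] -> __param_target
    labels["instance"] = labels["__param_target"]      # [__param_target] -> instance
    labels["__address__"] = exporter                   # fixed replacement: the exporter Service
    return labels


def scrape_url(labels: dict, module: str, metrics_path: str = "/probe") -> str:
    """URL Prometheus would request: __param_* labels and `params` become query parameters."""
    query = urlencode({"module": module, "target": labels["__param_target"]})
    return f"http://{labels['__address__']}{metrics_path}?{query}"


labels = relabel_for_blackbox("kube-dns.kube-system:53")
print(labels["instance"])  # kube-dns.kube-system:53
print(scrape_url(labels, "dns_tcp"))
# http://blackbox-exporter.monitor:9115/probe?module=dns_tcp&target=kube-dns.kube-system%3A53
```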

In Grafana, metrics such as probe_success and probe_duration_seconds can be used to examine historical probe results.
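Both metrics come straight from the exporter's `/probe` response in the Prometheus text format. From inside the cluster you can view one with a request such as `curl 'http://blackbox-exporter.monitor:9115/probe?module=dns_tcp&target=kube-dns.kube-system:53'`. The Python sketch below extracts the unlabeled samples from such a response. The sample text is hypothetical, and the parser deliberately skips labeled series rather than implementing the full exposition format.

```python
def parse_unlabeled_samples(text: str) -> dict[str, float]:
    """Return {metric_name: value} for unlabeled samples in Prometheus text format."""
    samples = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "{" in line:
            continue  # skip blank lines, HELP/TYPE comments and labeled series
        name, value = line.split()[:2]  # an optional timestamp may follow the value
        samples[name] = float(value)
    return samples


# Hypothetical /probe response
body = """\
# HELP probe_success Displays whether or not the probe was a success
# TYPE probe_success gauge
probe_success 1
# HELP probe_duration_seconds Returns how long the probe took to complete in seconds
# TYPE probe_duration_seconds gauge
probe_duration_seconds 0.0042
"""
samples = parse_unlabeled_samples(body)
print("UP" if samples["probe_success"] == 1 else "DOWN", samples["probe_duration_seconds"])
```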

3. ICMP monitoring

- job_name: icmp-status
  metrics_path: /probe
  params:
    module: [icmp]
  static_configs:
    - targets:
      - 192.168.1.232
      labels:
        group: icmp
  relabel_configs:
    - source_labels: [__address__]
      target_label: __param_target
    - source_labels: [__param_target]
      target_label: instance
    - target_label: __address__
      replacement: blackbox-exporter.monitor:9115

Reload Prometheus as above, then open the Prometheus Target page to see the job defined above:

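curl -XPOST http://prometheus.ikubernetes.cloud/-/reload

4. HTTP monitoring (in-cluster Kubernetes service discovery)

4.1 Discovering Service probe ports and paths from annotations

The job can be configured as follows: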

- job_name: 'kubernetes-services'
  metrics_path: /probe
  params:
    module:  ## the http_get_2xx and http_get_3xx modules
      - "http_get_2xx"
      - "http_get_3xx"
  kubernetes_sd_configs:  ## Kubernetes service discovery, Service role
    - role: service
  relabel_configs:
    ## Only probe Services annotated with prometheus.io/http-probe: "true"
    ## (".", "/" and "-" in the annotation key become "_" in the meta label name)
    - action: keep
      source_labels: [__meta_kubernetes_service_annotation_prometheus_io_http_probe]
      regex: "true"
    - action: replace
      source_labels:
        - "__meta_kubernetes_service_name"
        - "__meta_kubernetes_namespace"
        - "__meta_kubernetes_service_annotation_prometheus_io_http_probe_port"
        - "__meta_kubernetes_service_annotation_prometheus_io_http_probe_path"
      target_label: __param_target
      regex: (.+);(.+);(.+);(.+)
      replacement: $1.$2:$3$4
    - target_label: __address__
      replacement: blackbox-exporter.monitor:9115  ## Service address of the Blackbox Exporter
    - source_labels: [__param_target]
      target_label: instance
    - action: labelmap
      regex: __meta_kubernetes_service_label_(.+)
    - source_labels: [__meta_kubernetes_namespace]
      target_label: kubernetes_namespace
    - source_labels: [__meta_kubernetes_service_name]
      target_label: kubernetes_name

Note that Blackbox Exporter reads a single module value from each probe request. Listing two modules here therefore does not run both checks; define a separate job per module if you need both.

Then configure the annotations on the Service:

annotations:
  prometheus.io/http-probe: "true"               ## enable the HTTP probe
  prometheus.io/http-probe-port: "8080"          ## the HTTP probe uses port 8080
  prometheus.io/http-probe-path: "/healthCheck"  ## the HTTP probe requests /healthCheck to check that the application is running

Example: the Service of a Java application:

apiVersion: v1

kind: Service

metadata:

name: springboot

annotations:

prometheus.io/http-probe: "true"

prometheus.io/http-probe-port: "8080"

prometheus.io/http-probe-path: "/apptwo"

spec:

type: ClusterIP

selector:

app: springboot

ports:

- name: http

port: 8080

protocol: TCP

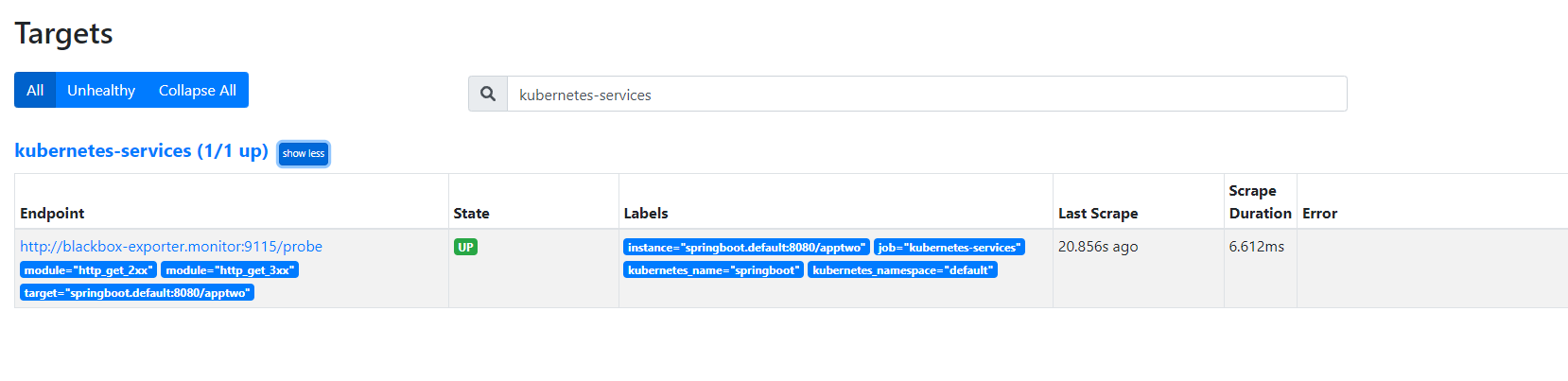

targetPort: 8080按上面方法加载Prometheus,打开Prometheus的target页面,可以看到上面定义的任务

curl -XPOST http://prometheus.ikubernetes.cloud/-/reload
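To check which probe target a Service ends up with, the Python sketch below mimics two steps of this job:

- How Prometheus turns an annotation key into a meta label name. Characters outside `[a-zA-Z0-9_]` become `_`, which is why `prometheus.io/http-probe` matches `..._prometheus_io_http_probe`.
- How the `replace` rule joins the source labels with `;` and applies the anchored regex.

If the port or path annotation is missing, the regex does not match and no probe target is set. The helper names are hypothetical. The values come from the springboot Service above, assuming it lives in the `default` namespace.

```python
import re
from typing import Optional


def meta_label_for_annotation(annotation: str) -> str:
    """Meta label name Prometheus derives from a Service annotation key."""
    return "__meta_kubernetes_service_annotation_" + re.sub(r"[^a-zA-Z0-9_]", "_", annotation)


def http_probe_target(name: str, namespace: str, port: str, path: str) -> Optional[str]:
    """Apply regex (.+);(.+);(.+);(.+) with replacement $1.$2:$3$4."""
    joined = ";".join([name, namespace, port, path])  # default separator is ';'
    match = re.fullmatch(r"(.+);(.+);(.+);(.+)", joined)  # relabel regexes are anchored
    if match is None:
        return None  # no match: __param_target is left unset
    return match.expand(r"\1.\2:\3\4")


print(meta_label_for_annotation("prometheus.io/http-probe-port"))
# __meta_kubernetes_service_annotation_prometheus_io_http_probe_port
print(http_probe_target("springboot", "default", "8080", "/apptwo"))
# springboot.default:8080/apptwo
print(http_probe_target("springboot", "default", "8080", ""))  # None: path annotation missing
```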

4.2 TCP probing

- job_name: "service-tcp-probe"

scrape_interval: 1m

metrics_path: /probe

# 使用blackbox exporter配置文件的tcp_connect的探针

params:

module: [tcp_connect]

kubernetes_sd_configs:

- role: service

relabel_configs:

# 保留prometheus.io/scrape: "true"和prometheus.io/tcp-probe: "true"的service

- source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape, __meta_kubernetes_service_annotation_prometheus_io_tcp_probe]

action: keep

regex: true;true

# 将原标签名__meta_kubernetes_service_name改成service_name

- source_labels: [__meta_kubernetes_service_name]

action: replace

regex: (.*)

target_label: service_name

# 将原标签名__meta_kubernetes_service_name改成service_name

- source_labels: [__meta_kubernetes_namespace]

action: replace

regex: (.*)

target_label: namespace

# 将instance改成 `clusterIP:port` 地址

- source_labels: [__meta_kubernetes_service_cluster_ip, __meta_kubernetes_service_annotation_prometheus_io_http_probe_port]

action: replace

regex: (.*);(.*)

target_label: __param_target

replacement: $1:$2

- source_labels: [__param_target]

target_label: instance

# 将__address__的值改成 `blackbox-exporter.monitor:9115`

- target_label: __address__

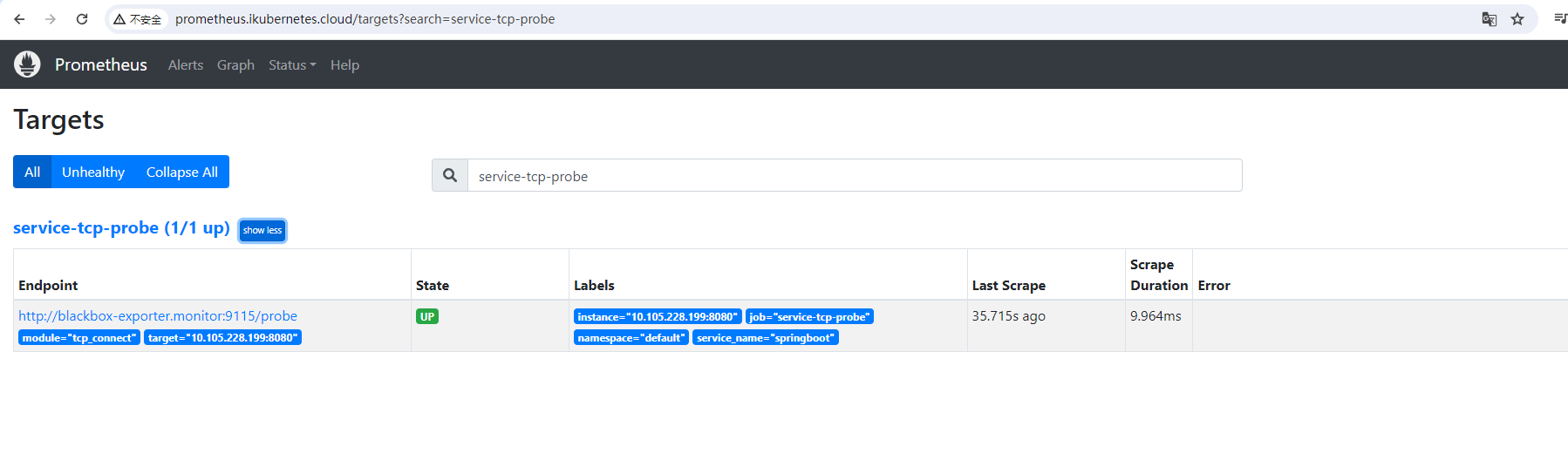

replacement: blackbox-exporter.monitor:9115按上面方法加载Prometheus,打开Prometheus的target页面,可以看到上面定义的任务

curl -XPOST http://prometheus.ikubernetes.cloud/-/reload

The Service must carry the following three annotations:

annotations:
  prometheus.io/scrape: "true"           ## this Service may be scraped by Prometheus
  prometheus.io/tcp-probe: "true"        ## enable the TCP probe
  prometheus.io/http-probe-port: "8080"  ## port to probe; this job reuses the HTTP probe port annotation for the TCP check

Example: the Service of a Java application:

[root@master-1-230 7.4]# cat java-svc.yaml
apiVersion: v1
kind: Service
metadata:
  name: springboot
  annotations:
    prometheus.io/http-probe: "true"
    prometheus.io/http-probe-port: "8080"
    prometheus.io/http-probe-path: "/apptwo"
    prometheus.io/scrape: "true"
    prometheus.io/tcp-probe: "true"
spec:
  type: ClusterIP
  selector:
    app: springboot
  ports:
  - name: http
    port: 8080
    protocol: TCP
    targetPort: 8080

Reload Prometheus as above, then open the Prometheus Target page to see the job defined above:

curl -XPOST http://prometheus.ikubernetes.cloud/-/reload
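Conceptually, the `tcp_connect` module only checks that a TCP handshake to `clusterIP:port` completes within the timeout (5s in the ConfigMap). It then reports the result as `probe_success` and `probe_duration_seconds`. The Python sketch below performs the same check with the standard library, so you can test a target by hand. It approximates the idea and is not Blackbox Exporter's implementation; `tcp_probe` is a hypothetical helper name.

```python
import socket
import time


def tcp_probe(host: str, port: int, timeout: float = 5.0) -> tuple[int, float]:
    """Return (probe_success, duration_seconds) for a plain TCP connect."""
    start = time.monotonic()
    try:
        with socket.create_connection((host, port), timeout=timeout):
            success = 1  # handshake completed; the connection is closed right away
    except OSError:
        success = 0  # refused, unreachable or timed out
    return success, time.monotonic() - start


# Demo against a throwaway local listener instead of a real Service address
server = socket.create_server(("127.0.0.1", 0))
port = server.getsockname()[1]
print(tcp_probe("127.0.0.1", port))  # success flag should be 1
server.close()
```

To check a Service by hand, call it with the Service's ClusterIP and port from a machine that can reach the cluster network.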

4.3 Probing Ingress services

- job_name: 'blackbox-k8s-ingresses'
  scrape_interval: 30s
  scrape_timeout: 10s
  metrics_path: /probe
  params:
    module: [http_get_2xx]  # use the HTTP module defined above
  kubernetes_sd_configs:
    - role: ingress  # Ingress service discovery
  relabel_configs:
    # Only discover Ingresses annotated with prometheus.io/http_probe: "true"
    - source_labels: [__meta_kubernetes_ingress_annotation_prometheus_io_http_probe]
      action: keep
      regex: true
    - source_labels: [__meta_kubernetes_ingress_scheme,__address__,__meta_kubernetes_ingress_path]
      regex: (.+);(.+);(.+)
      replacement: ${1}://${2}${3}
      target_label: __param_target
    - target_label: __address__
      replacement: blackbox-exporter.monitor:9115
    - source_labels: [__param_target]
      target_label: instance
    - action: labelmap
      regex: __meta_kubernetes_ingress_label_(.+)
    - source_labels: [__meta_kubernetes_namespace]
      target_label: kubernetes_namespace
    - source_labels: [__meta_kubernetes_ingress_name]
      target_label: kubernetes_name

Then add the following annotations to the Ingress. Only prometheus.io/http_probe is read by the relabel rules above. The probe URL is built from the Ingress scheme, host, and path, so this job does not use the port and path annotations:

annotations:
  prometheus.io/http_probe: "true"
  prometheus.io/http-probe-port: '8080'
  prometheus.io/http-probe-path: '/healthz'

Example: the Ingress of a Java application:

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

annotations:

#konghq.com/https-redirect-status-code: "301"

#konghq.com/protocols: http

#konghq.com/regex-priority: "1000"

prometheus.io/http_probe: "true"

prometheus.io/http-probe-port: '8080'

prometheus.io/http-probe-path: '/healthz'

kubernetes.io/ingress.class: "nginx"

name: springboot-ing

spec:

ingressClassName: nginx

rules:

- host: api-test2.ikubernetes.cloud

http:

paths:

- backend:

service:

name: springboot

port:

number: 8080

path: /

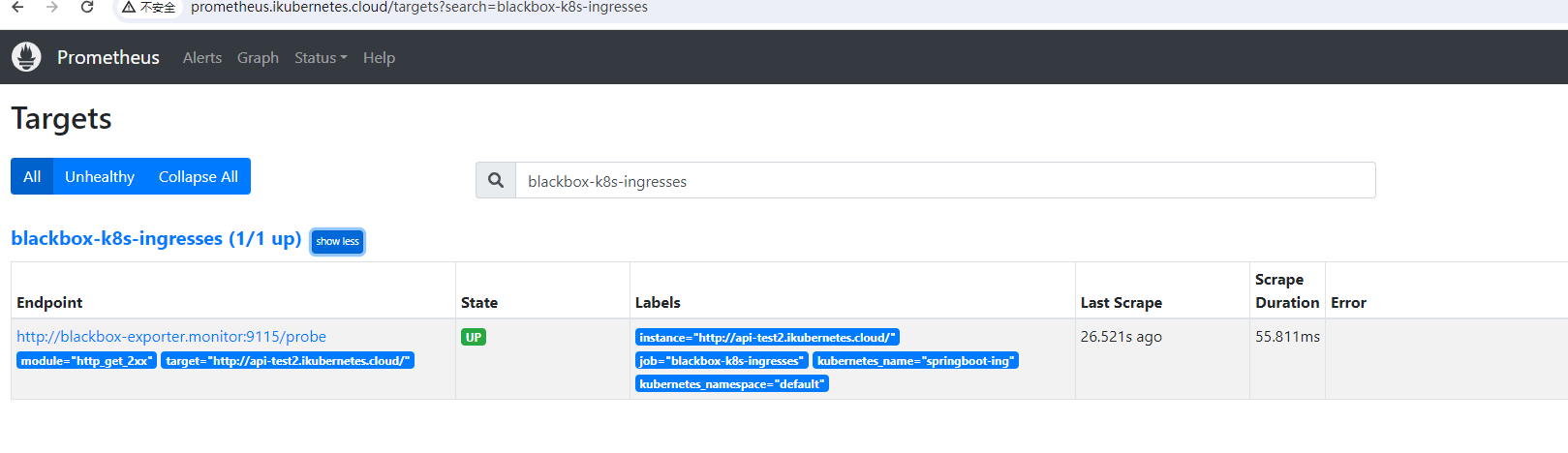

pathType: ImplementationSpecific验证:

5. HTTP monitoring (external domains)

- job_name: "blackbox-external-website"
  scrape_interval: 30s
  scrape_timeout: 15s
  metrics_path: /probe
  params:
    module: [http_get_2xx]
  static_configs:
    - targets:
      - https://www.baidu.com  # replace with your company's public-facing domains
      - https://www.jd.com
  relabel_configs:
    - source_labels: [__address__]
      target_label: __param_target
    - source_labels: [__param_target]
      target_label: instance
    - target_label: __address__
      replacement: blackbox-exporter.monitor:9115

Reload Prometheus as above, then open the Prometheus Target page to see the blackbox-external-website job defined above:

curl -XPOST http://prometheus.ikubernetes.cloud/-/reload

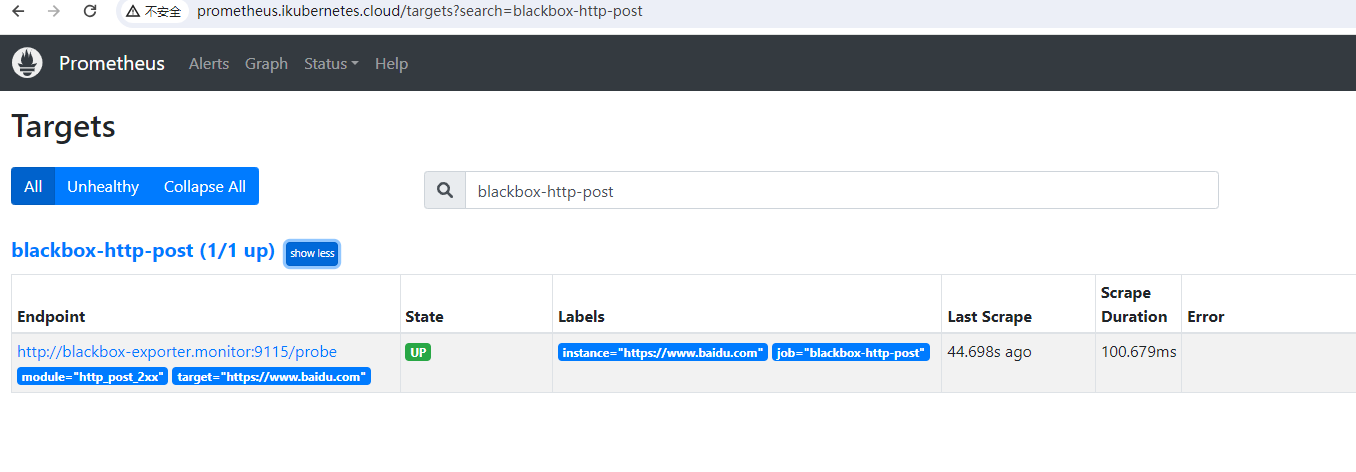

An HTTP POST probe is defined the same way, using the http_post_2xx module:

- job_name: 'blackbox-http-post'
  metrics_path: /probe
  params:
    module: [http_post_2xx]
  static_configs:
    - targets:
      - https://www.baidu.com  # URL to check
  relabel_configs:
    - source_labels: [__address__]
      target_label: __param_target
    - source_labels: [__param_target]
      target_label: instance
    - target_label: __address__
      replacement: blackbox-exporter.monitor:9115

curl -XPOST http://prometheus.ikubernetes.cloud/-/reload

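III. Integrating Custom Resources into the Monitoring System

Prometheus-based full-stack monitoring platform: integrating custom resources

Prometheus uses various exporters to monitor resources. An exporter can be thought of as the agent side of monitoring: it collects the metrics of the corresponding resource and exposes them for Prometheus to scrape.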

1. Scraping virtual machine (host) data

1.1 Installing and configuring node-exporter

docker run -d -p 9100:9100 \

-v "/proc:/host/proc" \

-v "/sys:/host/sys" \

-v "/:/rootfs" \

-v "/etc/localtime:/etc/localtime" \

prom/node-exporter \

--path.procfs /host/proc \

--path.sysfs /host/sys \

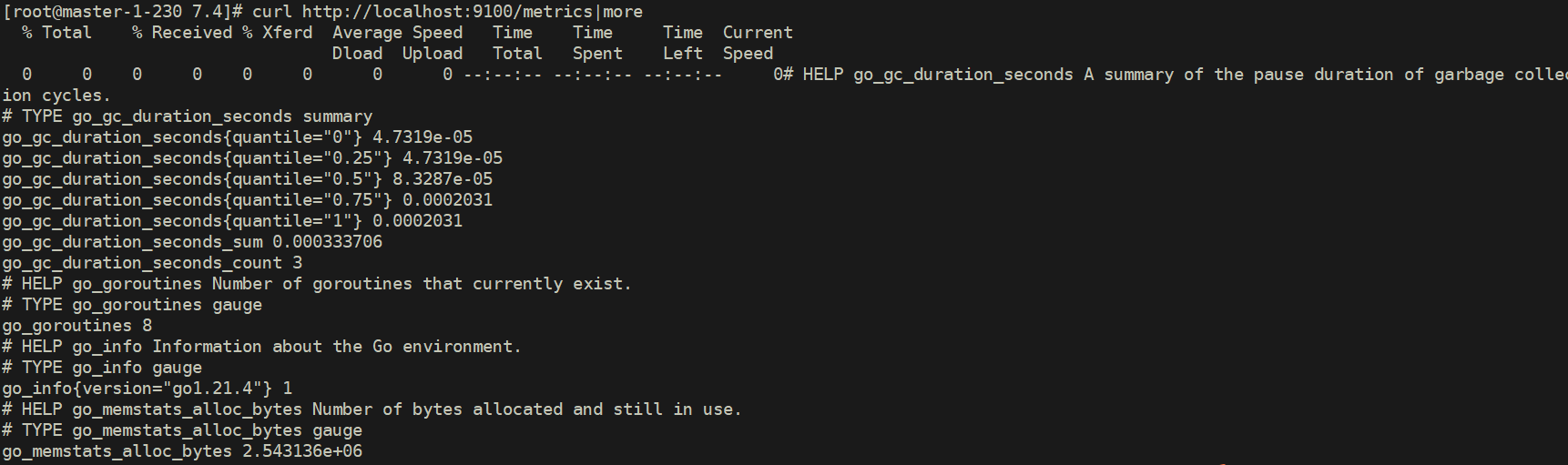

--collector.filesystem.ignored-mount-points "^/(sys|proc|dev|host|etc)($|/)"验证数据收集

[root@master-1-230 7.4]# curl http://localhost:9100/metrics

1.2 Configuring prometheus-config.yaml

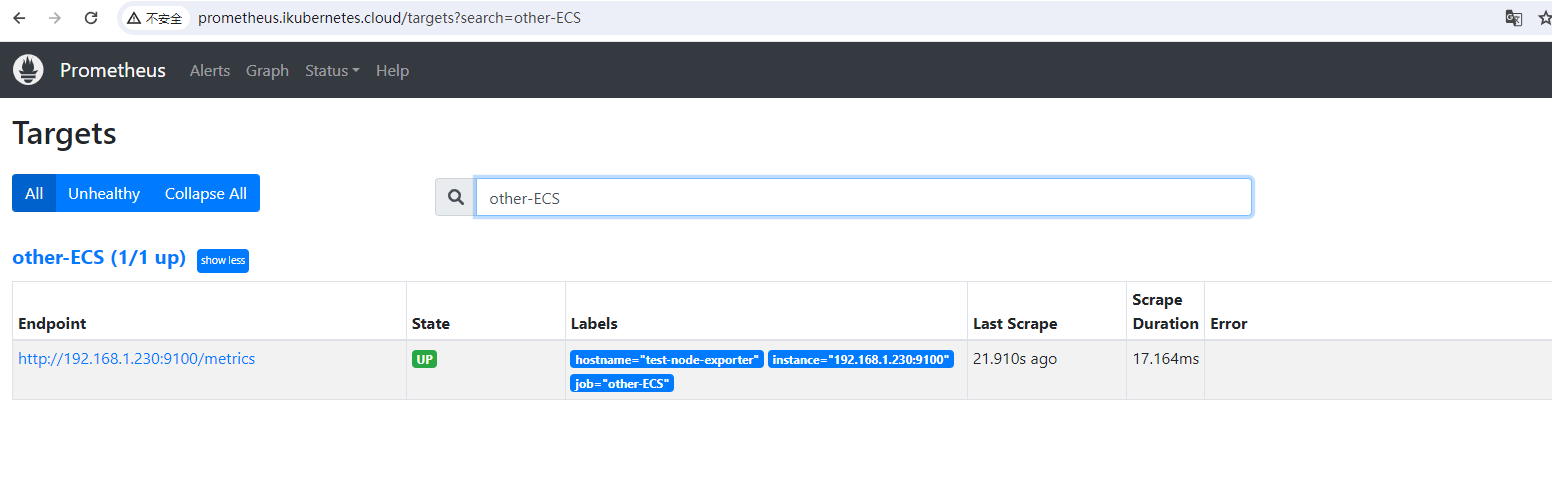

- job_name: 'other-ECS'
  static_configs:
    - targets: ['192.168.1.230:9100']
      labels:
        hostname: 'test-node-exporter'

Reload Prometheus as above, then open the Prometheus Target page to see the other-ECS job defined above:

[root@master-1-230 7.5]# curl -XPOST http://prometheus.ikubernetes.cloud/-/reload

2. Process monitoring with process-exporter

2.1 Create the mount directory

mkdir -p /opt/process-exporter/config

cat /opt/process-exporter/config/process-exporter.yml
process_names:
  - name: "{{.Matches}}"
    cmdline:
    - 'sd-api'

2.2 Installing and running process-exporter

docker run -itd --rm -p 9256:9256 --privileged -v /proc:/host/proc -v /opt/process-exporter/config:/config ncabatoff/process-exporter --procfs /host/proc -config.path /config/process-exporter.yml

2.3 Configuration file

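- Match the process to monitor. The config in 2.1 matches sd-api; the config below matches the etcd process instead, which you can check with ps -ef | grep "etcd --advertise-client-urls".

- Check the metrics on the process-exporter web page. A series such as namedprocess_namegroup_num_procs{groupname="map[:etcd --advertise-client-urls]"} shows that it started correctly.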
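process-exporter treats each `cmdline` entry as a regular expression that must match somewhere in the process's command line, roughly the argv joined by spaces. The Python sketch below approximates that matching by reading `/proc/<pid>/cmdline` (Linux only), which can help you check a pattern before putting it into `process-exporter.yml`. The helper names are hypothetical, and grouping and `{{.Matches}}` naming are not modeled.

```python
import re
from pathlib import Path


def read_cmdlines(proc: str = "/proc") -> list[str]:
    """Command line of every visible process, argv joined by spaces (Linux only)."""
    cmdlines = []
    for entry in Path(proc).iterdir():
        if not entry.name.isdigit():
            continue  # only numeric directories are processes
        try:
            raw = (entry / "cmdline").read_bytes()
        except OSError:
            continue  # the process exited or is not readable
        if raw:  # kernel threads have an empty cmdline
            cmdlines.append(raw.rstrip(b"\0").replace(b"\0", b" ").decode(errors="replace"))
    return cmdlines


def count_matching(cmdlines: list[str], patterns: list[str]) -> int:
    """Number of command lines matched by every pattern (unanchored search)."""
    compiled = [re.compile(p) for p in patterns]
    return sum(1 for line in cmdlines if all(rx.search(line) for rx in compiled))


if __name__ == "__main__" and Path("/proc").is_dir():
    print(count_matching(read_cmdlines(), [r"etcd --advertise-client-urls"]))
```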

## Specify the processes to monitor
# cat process-exporter.yml
process_names:
  - name: "{{.Matches}}"
    cmdline:
    - 'etcd --advertise-client-urls'
#  - name: "{{.Matches}}"
#    cmdline:
#    - 'mysqld'
#  - name: "{{.Matches}}"
#    cmdline:
#    - 'org.apache.zookeeper.server.quorum.QuorumPeerMain'

2.4 Testing and verification:

Show the number of "etcd --advertise-client-urls" processes on this host:

[root@master-1-230 config]# curl localhost:9256/metrics |grep namedprocess_namegroup_num_procs

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 13899 0 13899 0 0 731k 0 --:--:-- --:--:-- --:--:-- 754k

# HELP namedprocess_namegroup_num_procs number of processes in this group

# TYPE namedprocess_namegroup_num_procs gauge

namedprocess_namegroup_num_procs{groupname="map[:etcd --advertise-client-urls]"}

Host-level check:

[root@master-1-230 config]# ps aux|grep "etcd --advertise-client-urls" |egrep -v grep

root 2017 6.3 11.4 11486720 333796 ? Ssl 16:41 15:01 etcd --advertise-client-urls=https://192.168.1.230:2379 --cert-file=/etc/kubernetes/pki/etcd/server.crt --client-cert-auth=true --data-dir=/var/lib/etcd --experimental-initial-corrupt-check=true --experimental-watch-progress-notify-interval=5s --initial-advertise-peer-urls=https://192.168.1.230:2380 --initial-cluster=master-1-230=https://192.168.1.230:2380 --key-file=/etc/kubernetes/pki/etcd/server.key --listen-client-urls=https://127.0.0.1:2379,https://192.168.1.230:2379 --listen-metrics-urls=http://0.0.0.0:2381 --listen-peer-urls=https://192.168.1.230:2380 --name=master-1-230 --peer-cert-file=/etc/kubernetes/pki/etcd/peer.crt --peer-client-cert-auth=true --peer-key-file=/etc/kubernetes/pki/etcd/peer.key --peer-trusted-ca-file=/etc/kubernetes/pki/etcd/ca.crt --snapshot-count=10000 --trusted-ca-file=/etc/kubernetes/pki/etcd/ca.crt

Edit the Prometheus configuration file.

Add a job:

- job_name: 'process-exporter'
  static_configs:
    - targets: ['192.168.1.230:9256']

curl -XPOST http://prometheus.ikubernetes.cloud/-/reload

3. Custom middleware monitoring

3.1 Create a MySQL monitoring user and grant privileges

[root@master-1-230 7.5]# helm list |grep mysql

mysql default 5 2023-12-09 16:49:03.310995002 +0800 CST deployed mysql-9.14.4 8.0.35

[root@master-1-230 7.5]# MYSQL_ROOT_PASSWORD=$(kubectl get secret --namespace default mysql -o jsonpath="{.data.mysql-root-password}" | base64 -d)

[root@master-1-230 7.5]# echo $MYSQL_ROOT_PASSWORD

TQtH0tjCLt

[root@master-1-230 7.5]# kubectl get svc|grep mysql

mysql NodePort 10.110.136.173 <none> 3306:32000/TCP 7d4h

[root@master-1-230 7.5]# mysql -uroot -p -h 10.110.136.173

Enter password:

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MySQL connection id is 9151

Server version: 8.0.35 Source distribution

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MySQL [(none)]> CREATE USER 'exporter'@'%' IDENTIFIED BY '123asdZXC';

Query OK, 0 rows affected (0.02 sec)

MySQL [(none)]> GRANT PROCESS, REPLICATION CLIENT, SELECT ON *.* TO 'exporter'@'%';

Query OK, 0 rows affected (0.00 sec)

MySQL [(none)]> flush privileges;

Query OK, 0 rows affected (0.00 sec)

MySQL [(none)]> exit

Bye

3.2 Start the container:

[root@master-1-230 7.5]# cat /tmp/mysql.cnf
[client]
host=10.110.136.173
port=3306
user=exporter
password=123asdZXC

docker run -d --restart=always -v /tmp/mysql.cnf:/tmp/mysql.cnf --name mysqld-exporter -p 9104:9104 prom/mysqld-exporter --config.my-cnf=/tmp/mysql.cnf

3.3 Testing and verification:

curl localhost:9104/metrics

3.4 Edit the Prometheus configuration file

- job_name: 'mysql-exporter'
  static_configs:
    - targets: ['192.168.1.230:9104']

Reload Prometheus as above, then open the Prometheus Target page to see the mysql-exporter job defined above:

curl -XPOST http://prometheus.ikubernetes.cloud/-/reload

4. Summary

- Install and deploy the custom exporter component;

- configure the prometheus-config file to scrape its data;

- configure prometheus-rules for monitoring alerts;

- configure Grafana.