实习僧数据分析与可视化

-

选题背景

随着中国经济的不断发展,实习市场也变得日益重要。学生们在求学期间通过实习获取工作经验,而企业则通过实习生计划发现并培养潜在的人才。实习僧作为一家专注于实习和校园招聘的在线平台,收集了大量的实习相关数据。

通过对实习僧的数据进行爬取和分析,我们可以深入了解中国实习市场的各种趋势,包括但不限于:

热门实习行业和领域: 哪些行业受到学生追捧?哪些领域提供了更多的实习机会?

地域分布: 实习机会在中国的不同城市之间是否存在显著差异?哪些城市是实习热点?

需求职位和技能: 企业对于实习生有哪些具体的职位需求?

薪酬水平: 实习生在不同行业和城市的薪酬水平如何变化?

-

主题式网络爬虫设计方案

- 名称:实习僧数据分析与可视化

- 爬取的数据内容: 岗位名称,所在城市,每周实习天数,总共实习月数, 薪资范围, 公司名,公司规模,行业领域, 实习福利,公司标签

- 爬虫设计方案概述:实现思路:本次案例主要使用Scrapy爬虫框架进行数据爬取, 使用xpath提取网页数据,将爬取的数据存储到csv文件中,之后使用pandas、matplotlib、pyecharts对数据进行清洗和可视化。

技术难点:实习僧网站采用字体反爬,需要对爬取的数据进行重新编码映射,才能获取到正常的数据,否则直接爬取将会获取到乱码的数据。

-

主题式页面结构特征分析

(数据集:https://www.shixiseng.com/ )

使用xpath提取所需数据

job_name = job.xpath( './/a[@class="title ellipsis font"]/text()').get() salary = job.xpath('.//span[@class="day font"]/text()').get() city = job.xpath( './/div[contains(@class, "intern-detail__job")]//p[@class="tip"]/span[1]/text()').get() week_time = job.xpath( './/div[contains(@class, "intern-detail__job")]//p[@class="tip"]/span[3]/text()').get() total_time = job.xpath( './/div[contains(@class, "intern-detail__job")]//p[@class="tip"]/span[5]/text()').get() job_welfare = job.xpath( './/div[@class="f-l"]/span/text()').getall() com_name = job.xpath( './/div[contains(@class, "intern-detail__company")]//a[@class="title ellipsis"]/text()').get() com_field = job.xpath( './/div[contains(@class, "intern-detail__company")]//p[@class="tip"]/span[1]/text()').get() com_scale = job.xpath( './/div[contains(@class, "intern-detail__company")]//p[@class="tip"]/span[3]/text()').get() com_tags = job.xpath( './/div[@class="f-r ellipsis"]/span/text()').get() -

网络爬虫设计

-

爬取内容

import scrapy from shixiseng.items import JobItem class NationalSpider(scrapy.Spider): name = "national" allowed_domains = ["shixiseng.com"] start_urls = ["https://www.shixiseng.com/interns?page=1&type=intern"] page_num = 1 def parse(self, response): job_list = response.xpath('//div[contains(@class, "intern-wrap")]') for job in job_list: job_name = job.xpath( './/a[@class="title ellipsis font"]/text()').get() salary = job.xpath('.//span[@class="day font"]/text()').get() city = job.xpath( './/div[contains(@class, "intern-detail__job")]//p[@class="tip"]/span[1]/text()').get() week_time = job.xpath( './/div[contains(@class, "intern-detail__job")]//p[@class="tip"]/span[3]/text()').get() total_time = job.xpath( './/div[contains(@class, "intern-detail__job")]//p[@class="tip"]/span[5]/text()').get() job_welfare = job.xpath( './/div[@class="f-l"]/span/text()').getall() com_name = job.xpath( './/div[contains(@class, "intern-detail__company")]//a[@class="title ellipsis"]/text()').get() com_field = job.xpath( './/div[contains(@class, "intern-detail__company")]//p[@class="tip"]/span[1]/text()').get() com_scale = job.xpath( './/div[contains(@class, "intern-detail__company")]//p[@class="tip"]/span[3]/text()').get() com_tags = job.xpath( './/div[@class="f-r ellipsis"]/span/text()').get() job_item = JobItem() job_item['job_name'] = job_name job_item['salary'] = salary job_item['city'] = city job_item['week_time'] = week_time job_item['total_time'] = total_time job_item['job_welfare'] = job_welfare job_item['com_name'] = com_name job_item['com_field'] = com_field job_item['com_scale'] = com_scale job_item['com_tags'] = com_tags yield job_item has_next_page = response.xpath('//button[@class="btn-next"]/@disabled').get() != "disabled" if has_next_page: self.page_num += 1 next_page_url = "https://www.shixiseng.com/interns?page={}&type=intern".format( self.page_num) yield response.follow(url=next_page_url, callback=self.parse)处理字体反爬, 使用中间件和正则表达式将获取的每个请求的内容进行编码替换

class DecryptFontMiddleware: FONT_URL = 'https://www.shixiseng.com/interns/iconfonts/file' FONT_FILE = '../../resource/new_font.woff' XML_FILE = '../../resource/new_font.xml' def process_response(self, request, response, spider): html_content = response.body.decode('utf-8') if not os.path.exists(self.XML_FILE): self.download_and_parse_font() word_dict = self.load_word_dict_from_xml() html_content = self.replace_html_content(html_content, word_dict) response = response.replace(body=html_content.encode('utf-8')) return response def download_and_parse_font(self): try: if not os.path.exists(self.FONT_FILE): self.download_font() self.parse_font() except Exception as e: print(f"Error during font download/parse: {e}") raise def download_font(self): try: r = requests.get(self.FONT_URL) r.raise_for_status() with open(self.FONT_FILE, 'wb') as f: f.write(r.content) except requests.exceptions.RequestException as e: print(f"Error during font download: {e}") raise def parse_font(self): font = TTFont(self.FONT_FILE) font.saveXML(self.XML_FILE) def load_word_dict_from_xml(self): with open(self.XML_FILE) as f: xml = f.read() keys = re.findall('<map code="(0x.*?)" name="uni.*?"/>', xml)[:99] values = re.findall('<map code="0x.*?" name="uni(.*?)"/>', xml)[:99] for i in range(len(values)): if len(values[i]) < 4: values[i] = ('\\u00' + values[i] ).encode('utf-8').decode('unicode_escape') else: values[i] = ('\\u' + values[i] ).encode('utf-8').decode('unicode_escape') word_dict = dict(zip(keys, values)) return word_dict def replace_html_content(self, html_content, word_dict): keys = list(word_dict.keys()) for key in keys: pattern = '&#x' + key[2:] html_content = re.sub(pattern, word_dict[key], html_content) return html_content -

数据清洗

import pandas as pd import matplotlib.pyplot as plt df = pd.read_csv('../resource/job_list.csv') df[['min_salary', 'max_salary']] = df['salary'].str.extract( r'(\d+)(?:-(\d+))?').astype(float) df['max_salary'].fillna(df['min_salary'], inplace=True) df['city'] = df['city'].str.strip() df['city'] = df['city'].apply(lambda x: x.rstrip('市') if '市' in x else x) df['week_time'] = df['week_time'].str.extract('(\d+)').astype(int) df['total_time'] = df['total_time'].str.extract('(\d+)').astype(float) df['total_time'].fillna(df['total_time'].mean(), inplace=True) df['total_time'] = df['total_time'].astype("int") df['com_name'] = df['com_name'].str.strip() scale_mapping = { '少于15人': 0, '15-50人': 1, '50-150人': 2, '150-500人': 3, '500-2000人': 4, '2000人以上': 5, '15人以下': 0, '50-100人': 2, '100-499人': 3, '未知规模': -1, } df['com_scale'] = df['com_scale'].map(scale_mapping).fillna(-1).astype(int) df.drop(['salary'], axis=1, inplace=True) df['com_field'] = df['com_field'].str.split('/').str[0] df.to_csv('../resource/job_list_cleaned.csv', index=False, encoding='utf-8') -

数据可视化

-

不同行业在各城市的实习岗位数量占比

-

实习岗位数前10的行业的平均日薪

-

实习时长的分布

-

不同日薪范围的岗位数量占比

-

公司规模与日薪分布关系

-

岗位数量前10城市的平均薪资

-

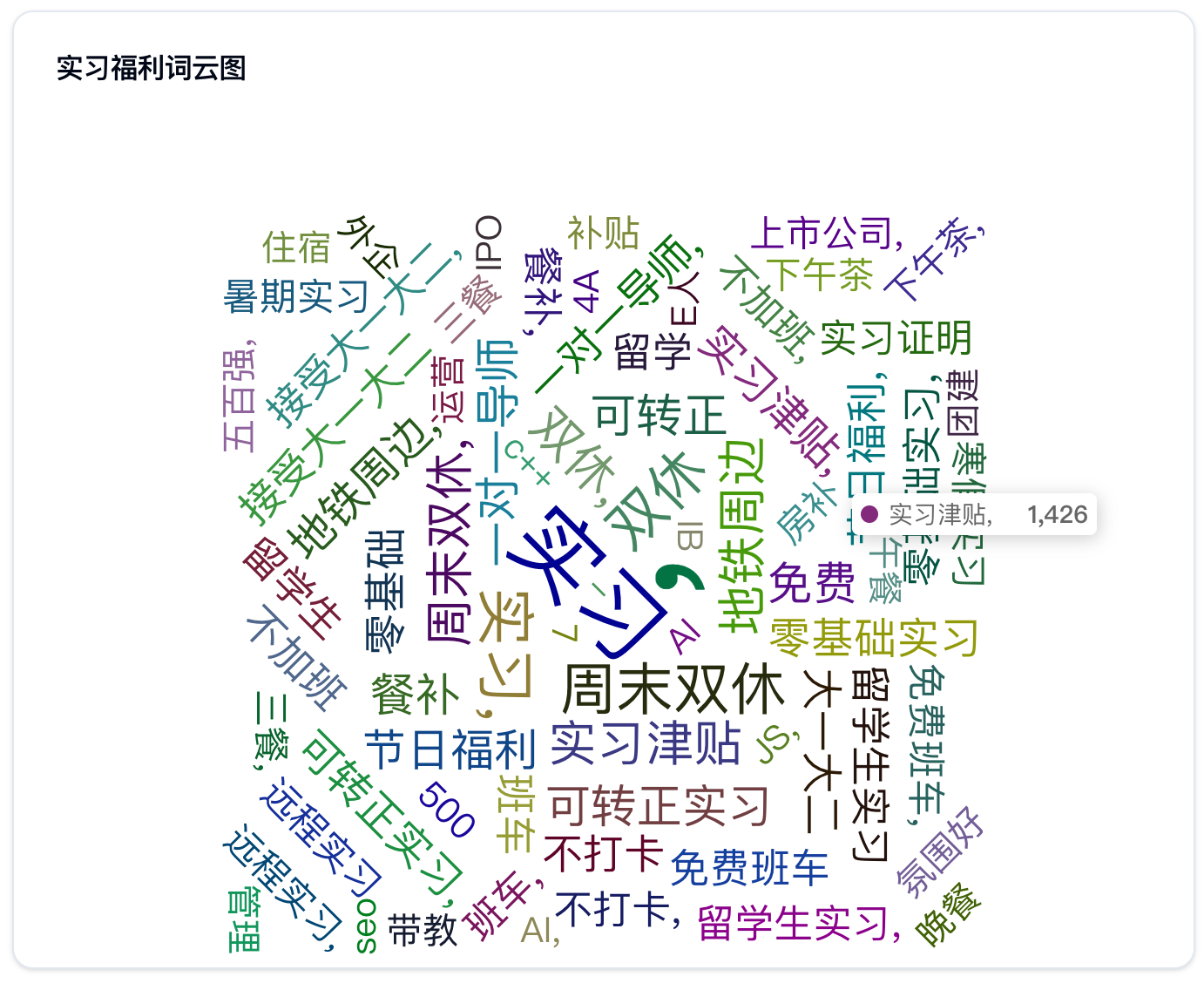

实习福利词云图

-

公司标签词云图

-

-

-

全部代码如下

from flask import ( Blueprint, flash, g, redirect, render_template, request, url_for, jsonify, send_file, Response ) import pandas as pd from random import randrange from pyecharts import options as opts from pyecharts.charts import Bar, Pie, WordCloud import matplotlib import matplotlib.pyplot as plt from io import BytesIO import base64 import json matplotlib.use('agg') plt.rcParams['font.sans-serif'] = ['kai'] plt.rcParams['axes.unicode_minus'] = False bp = Blueprint('job', __name__, url_prefix='/api/job') csv_path = '../resource/job_list_cleaned.csv' @bp.route('top', methods=['GET']) def get_top_chart(): df = pd.read_csv(csv_path, encoding='utf-8') # 统计各城市不同行业的岗位数量 city_industry_counts = df.groupby(['city', 'com_field']).size( ).unstack().fillna(0).stack().reset_index(name='count') # 获取所有城市和行业的列表 cities = city_industry_counts['city'].unique().tolist() industries = city_industry_counts['com_field'].unique().tolist() # 仅保留岗位数量前10的城市 top_cities = city_industry_counts.groupby('city')['count'].sum( ).sort_values(ascending=False).head(10).index.tolist() # 过滤数据 city_industry_counts = city_industry_counts[city_industry_counts['city'].isin( top_cities)] # 创建柱状图 bar = ( Bar() .add_xaxis(top_cities) .set_global_opts( title_opts=opts.TitleOpts(title="实习岗位数Top10城市不同行业的岗位数量"), xaxis_opts=opts.AxisOpts( axislabel_opts=opts.LabelOpts(font_size=14)), legend_opts=opts.LegendOpts( type_="scroll", pos_top="center", pos_right="0", orient="vertical"), tooltip_opts=opts.TooltipOpts( is_show=True, trigger="axis", axis_pointer_type="shadow" ), datazoom_opts=[ opts.DataZoomOpts(), opts.DataZoomOpts(type_="inside")], ) .set_series_opts(bar_width='60') ) # 添加柱状图数据 for industry in industries: bar.add_yaxis( industry, city_industry_counts[city_industry_counts['com_field'] == industry]['count'].tolist(), label_opts=opts.LabelOpts(is_show=False), ) # 创建饼图 pie = ( Pie() .add("", [list(z) for z in zip(industries, city_industry_counts.groupby('com_field')['count'].sum())], radius="20%", center=["75%", "25%"]) .set_series_opts(tooltip_opts=opts.TooltipOpts(is_show=True, trigger="item")) ) bar.overlap(pie) return bar.dump_options_with_quotes() def remove_spines(ax): ax.spines['top'].set_visible(False) ax.spines['right'].set_visible(False) ax.spines['bottom'].set_visible(False) ax.spines['left'].set_visible(False) def add_values_on_bars(bars, ax): for bar in bars: yval = bar.get_height() ax.text(bar.get_x() + bar.get_width() / 2, yval, round(yval, 1), ha='center', va='bottom') def setup_plot_style(theme): if theme == "dark": style = { 'axes.facecolor': '#020817', 'figure.facecolor': '#020817', 'figure.edgecolor': '#020817', 'xtick.color': '#FFFFFF', 'ytick.color': '#FFFFFF', 'axes.labelcolor': '#FFFFFF', 'axes.edgecolor': '#FFFFFF', 'text.color': '#FFFFFF', 'legend.facecolor': '#020817', 'legend.edgecolor': '#020817', } else: style = 'default' plt.style.use(style) plt.rcParams['font.sans-serif'] = ['kai'] plt.rcParams['axes.unicode_minus'] = False def plot_to_base64(fig): img = BytesIO() fig.savefig(img, format='png') img.seek(0) img_str = "data:image/png;base64," + \ base64.b64encode(img.read()).decode('utf-8') plt.close() return img_str def plot_duration_distribution(df, theme): setup_plot_style(theme) fig, ax = plt.subplots(figsize=(8, 6)) duration_counts = df['total_time'].value_counts().sort_index() bars = ax.bar(duration_counts.index.astype(str), duration_counts.values, color='#ADFA1D') ax.set_xlabel("实习时长(月)", fontsize=14) ax.set_ylabel("实习机会数量", fontsize=14) remove_spines(ax) add_values_on_bars(bars, ax) return { 'title': '实习时长分布', 'img': plot_to_base64(fig) } def plot_salary_distribution(df, salary_ranges, theme): setup_plot_style(theme) fig, ax = plt.subplots(figsize=(8, 6)) df['salary_range'] = pd.cut(df['min_salary'], bins=[r[0] for r in salary_ranges] + [99999], labels=[f"{r[0]}-{r[1]}" if r[1] < 99999 else f"{r[0]}以上" for r in salary_ranges]) salary_counts = df['salary_range'].value_counts().sort_index() bars = ax.bar(salary_counts.index, salary_counts.values, color='#ADFA1D', width=0.3) ax.set_xlabel('日薪范围(元/天)', fontsize=14) ax.set_ylabel('实习工作数量', fontsize=14) remove_spines(ax) add_values_on_bars(bars, ax) return { 'title': '不同日薪范围的实习岗位数量', 'img': plot_to_base64(fig) } def plot_salary_vs_company_scale(df, scale_mapping, theme): setup_plot_style(theme) fig, ax = plt.subplots(figsize=(8, 6)) ax.scatter(df['com_scale'], df['max_salary'], alpha=0.5, color='#ADFA1D') ax.set_xlabel('公司规模', fontsize=14) ax.set_ylabel('最高薪资(元/天)', fontsize=14) ax.set_xticks(ticks=list(scale_mapping.values())) ax.set_xticklabels(list(scale_mapping.keys())) remove_spines(ax) return { 'title': '公司规模与日薪分布关系', 'img': plot_to_base64(fig) } def plot_mean_salary_by_city(df, theme): setup_plot_style(theme) fig, ax = plt.subplots(figsize=(8, 6)) city_job_counts = df['city'].value_counts() selected_cities = city_job_counts[city_job_counts >= 100].index df_selected_cities = df[df['city'].isin(selected_cities)] mean_salary_by_city = df_selected_cities.groupby( 'city')['max_salary'].mean().reset_index().dropna() bars = ax.bar( mean_salary_by_city['city'], mean_salary_by_city['max_salary'], color='#ADFA1D') ax.set_xlabel('城市', fontsize=14) ax.set_ylabel('最高薪资的平均值', fontsize=14) remove_spines(ax) add_values_on_bars(bars, ax) return { 'title': '各城市平均薪资(岗位数在100以上)', 'img': plot_to_base64(fig) } @bp.route('/get_charts', methods=['GET']) def get_charts(): theme = request.args.get('theme', default='light') df = pd.read_csv(csv_path, encoding='utf-8') scale_mapping = { '少于15人': 0, '15-50人': 1, '50-150人': 2, '150-500人': 3, '500-2000人': 4, '2000人以上': 5, '未知规模': -1, } salary_ranges = [ (0, 50), (50, 100), (100, 150), (150, 200), (200, 99999) ] charts = [ plot_duration_distribution(df, theme), plot_salary_distribution(df, salary_ranges, theme), plot_salary_vs_company_scale(df, scale_mapping, theme), plot_mean_salary_by_city(df, theme), ] return jsonify(charts) @bp.route("get_wordcloud", methods=['GET']) def get_wordcloud(): # 读取数据 df = pd.read_csv(csv_path, encoding='utf-8') # 处理 job_welfare 列的词云 job_welfare_text = " ".join(df['job_welfare'].dropna().tolist()) job_welfare_wordcloud = ( WordCloud() .add("", [(word, job_welfare_text.count(word)) for word in set(job_welfare_text.split())], word_size_range=[20, 100]) ) job_welfare_option = job_welfare_wordcloud.dump_options_with_quotes() job_welfare_title = "实习福利词云图" # 处理 com_tags 列的词云 com_tags_text = " ".join(df['com_tags'].dropna().tolist()) com_tags_wordcloud = ( WordCloud() .add("", [(word, com_tags_text.count(word)) for word in set(com_tags_text.split())], word_size_range=[20, 100]) ) com_tags_option = com_tags_wordcloud.dump_options_with_quotes() com_tags_title = "公司标签词云图" return jsonify([ {'title': job_welfare_title, 'option': json.loads(job_welfare_option)}, {'title': com_tags_title, 'option': json.loads(com_tags_option)} ]) @bp.route("getDashboardChart", methods=['GET']) def getDashboardChart(): # 读取数据 df = pd.read_csv(csv_path, encoding='utf-8') # 计算每个行业的平均薪资和岗位数量 industry_stats = df.groupby('com_field')['max_salary'].mean().reset_index() industry_counts = df['com_field'].value_counts().reset_index() industry_counts.columns = ['com_field', 'job_count'] industry_stats = pd.merge( industry_stats, industry_counts, on='com_field', how='left') # 选择岗位数前10的行业 top_industries = industry_stats.sort_values( by='job_count', ascending=False).head(10) # 使用 pyecharts 生成柱状图 bar = ( Bar() .add_xaxis(top_industries['com_field'].tolist()) .add_yaxis( "平均薪资", top_industries['max_salary'].round(2).tolist(), # 保留两位小数 label_opts=opts.LabelOpts(is_show=False), itemstyle_opts=opts.ItemStyleOpts(color='#ADFA1D') # 设置柱状条颜色 ) .set_global_opts( xaxis_opts=opts.AxisOpts( axislabel_opts=opts.LabelOpts( font_size=14, interval=0), # 显示所有 x 轴数据 # 去除 x 轴刻度线 axisline_opts=opts.AxisLineOpts(is_show=False), axistick_opts=opts.AxisTickOpts(is_show=False), ), yaxis_opts=opts.AxisOpts( # 去除 y 轴刻度线和标签 axisline_opts=opts.AxisLineOpts(is_show=False), axistick_opts=opts.AxisTickOpts(is_show=False), splitline_opts=opts.SplitLineOpts(is_show=False), # 去除 y 轴分割线 ), tooltip_opts=opts.TooltipOpts(formatter="{b}: {c}元"), # 显示两位小数 legend_opts=opts.LegendOpts(is_show=False) ) ) return { "title": "岗位数Top10行业的平均薪资", "option": json.loads(bar.dump_options_with_quotes()) } @bp.route("getDashboardData", methods=['GET']) def getDashboardData(): # 读取数据 df = pd.read_csv(csv_path, encoding='utf-8') # 1. 总岗位数 total_jobs = len(df) # 2. 平均日薪 average_daily_salary = df['max_salary'].mean() average_daily_salary = round(average_daily_salary, 2) # 3. 岗位最多城市 most_common_city = df['city'].mode().values[0] # 4. 岗位最多行业 most_common_industry = df['com_field'].mode().values[0] return { "total_jobs": total_jobs, "average_daily_salary": average_daily_salary, "most_common_city": most_common_city, "most_common_industry": most_common_industry, } @bp.route("getDashboardTop", methods=['GET']) def getDashboardTop(): # 读取数据 df = pd.read_csv(csv_path, encoding='utf-8') top_salary_ranking = df[['job_name', 'com_name', 'max_salary']].sort_values( by='max_salary', ascending=False).head(5) return top_salary_ranking.to_dict('records') -

总结

就业竞争力提升:

项目分析显示,互联网实习岗位的需求量较大,这说明拥有实习经历和相关技能将有助于提高就业竞争力。对于求职者来说,获取实际工作经验变得更加重要。薪资水平观察:

通过数据可视化呈现了不同实习岗位的薪资水平,结果显示实习岗位的薪资水平较为丰富。这为求职者提供了更多选择,并突显了不同行业的薪资差异。通过项目的设计和实施,我不仅学到了Python爬虫的基本技巧,也熟练掌握了数据处理和分析的方法。同时,对数据可视化的基本原理和方法有了更深刻的理解。这次项目为我提供了一个实践机会,使我更好地应用所学知识,并加强了我在数据领域的实际能力。