1. Project Background

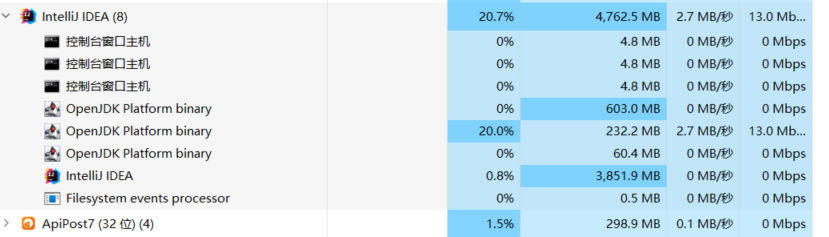

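The project needed to ingest video from cameras on the Hikvision platform and run every stream through an algorithm, which puts heavy demands on both the server hardware and the performance of the code. After several rounds of discussion, two main approaches emerged:

Approach 1: process each frame with the algorithm, then re-encode the processed frames into a video stream and push it out. This approach can hardly avoid FFmpeg; PyAV and JavaCV are both built on FFmpeg underneath.

Approach 2: process each frame with the algorithm and return it directly to the front end. One way to do this is a key-value scheme: the backend keeps overwriting the value of a key with the latest frame image, and the front end fetches it in real time. This has the lowest performance cost, but the video stutters, and sometimes you can plainly see individual images being swapped. The other way is a long-lived connection or a subscribe/push mechanism; in our tests, subscribe/push gave the best combination of performance and visual quality.

The method described below is Approach 1. It was a quick test at the time and works reasonably well. Because the player has to buffer, it occasionally stalls for a moment, and CPU usage on my machine (Intel i7-7700HQ) was around 20%.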

2. Code Implementation

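In Java there are two main ways to turn images into a video stream: (1) jcodec and (2) JavaCV. I tried a quick jcodec implementation and found that it converts the images in a folder into a video file rather than pushing to a streaming URL, so it does not meet the requirement.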

Below I describe the JavaCV implementation in detail.

2.1. Dependencies

```groovy
dependencies {
    implementation 'org.springframework.boot:spring-boot-starter-web'
    implementation 'org.mybatis.spring.boot:mybatis-spring-boot-starter:3.0.2'
    implementation 'com.baomidou:mybatis-plus-boot-starter:3.5.3.1'
    // Sa-Token authentication, docs: https://sa-token.cc
    implementation 'cn.dev33:sa-token-spring-boot3-starter:1.35.0.RC'
    // https://mvnrepository.com/artifact/org.jcodec/jcodec
    implementation 'org.jcodec:jcodec:0.2.5'
    // https://mvnrepository.com/artifact/org.springframework.boot/spring-boot-starter-data-redis
    implementation 'org.springframework.boot:spring-boot-starter-data-redis:3.1.5'
    // https://mvnrepository.com/artifact/redis.clients/jedis
    implementation 'redis.clients:jedis:5.0.2'
    // https://mvnrepository.com/artifact/org.glassfish/javax.annotation
    implementation 'org.glassfish:javax.annotation:10.0-b28'
    // https://mvnrepository.com/artifact/com.twelvemonkeys.imageio/imageio-jpeg
    implementation 'com.twelvemonkeys.imageio:imageio-jpeg:3.10.0'
    // https://mvnrepository.com/artifact/org.apache.commons/commons-pool2
    implementation 'org.apache.commons:commons-pool2:2.12.0'
    implementation 'cn.hutool:hutool-all:5.8.16'
    // https://mvnrepository.com/artifact/org.bytedeco/ffmpeg
    implementation 'org.bytedeco:ffmpeg:6.0-1.5.9'
    // https://mvnrepository.com/artifact/org.bytedeco/ffmpeg-platform
    implementation 'org.bytedeco:ffmpeg-platform:6.0-1.5.9'
    // https://mvnrepository.com/artifact/org.bytedeco/javacv
    implementation 'org.bytedeco:javacv:1.5.9'
    // https://mvnrepository.com/artifact/com.github.hoary.ffmpeg/JavaAV-FFmpeg
    implementation 'com.github.hoary.ffmpeg:JavaAV-FFmpeg:1.0'
    // Excel import
    implementation 'org.apache.poi:poi:5.2.2'
    implementation 'org.apache.poi:poi-ooxml:5.2.2'
    implementation 'com.alibaba:easyexcel:3.3.2'
    // File download
    implementation 'commons-io:commons-io:2.11.0'
    compileOnly 'org.projectlombok:lombok'
    runtimeOnly 'com.mysql:mysql-connector-j'
    annotationProcessor 'org.projectlombok:lombok'
    testImplementation 'org.springframework.boot:spring-boot-starter-test'
    testImplementation 'org.mybatis.spring.boot:mybatis-spring-boot-starter-test:3.0.2'
}
```

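Because I tested many things, I have listed every dependency here. Probably only a few of them are actually needed for this example (javacv, hutool, JavaAV-FFmpeg), but I recommend importing all of them.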

2.2. Utility Class

The image stream handler class:

```java
package com.example.study.test;

import jakarta.annotation.PreDestroy; // Spring Boot 3 (Spring 6) uses jakarta.*, not javax.*
import lombok.extern.slf4j.Slf4j;
import org.bytedeco.ffmpeg.global.avcodec;
import org.bytedeco.javacv.FFmpegFrameRecorder;
import org.bytedeco.javacv.FFmpegLogCallback;
import org.bytedeco.javacv.Frame;
import org.bytedeco.javacv.Java2DFrameConverter;
import org.springframework.stereotype.Component;

import java.awt.image.BufferedImage;
import java.util.concurrent.atomic.AtomicBoolean;

/**
 * Image stream handler: encodes BufferedImages as H.264 and pushes them to an RTSP server.
 * author honor
 */
@Slf4j
@Component
public class ImageStream {

    // Frame rate of the pushed stream
    private static final int FRAME_RATE = 30;
    // Keyframe interval: one I-frame every GOP_SIZE frames
    private static final int GOP_SIZE = 30;
    private static final String FORMAT_RTSP = "rtsp";

    private FFmpegFrameRecorder recorder;
    private Java2DFrameConverter converter;
    private final AtomicBoolean startFlag = new AtomicBoolean(false);

    public synchronized void initRtspRecorder(String pushUrl, int width, int height) {
        try {
            // avutil.av_log_set_level(avutil.AV_LOG_ERROR);
            FFmpegLogCallback.set(); // forward FFmpeg's native log messages to JavaCV's logger
            converter = new Java2DFrameConverter();
            recorder = new FFmpegFrameRecorder(pushUrl, width, height);
            // Optional connection/read timeouts; option names and units depend on the FFmpeg version
            // recorder.setOption("timeout", "10000");
            // recorder.setOption("stimeout", "20000");
            recorder.setVideoCodec(avcodec.AV_CODEC_ID_H264);
            // recorder.setVideoOption("skip_frame", "1"); // encoder option to enable frame skipping
            // recorder.setVideoBitrate(2000000);
            recorder.setGopSize(GOP_SIZE);
            recorder.setFormat(FORMAT_RTSP);
            recorder.setFrameRate(FRAME_RATE);
            recorder.setOption("rtsp_transport", "tcp"); // RTSP transport protocol: tcp or udp
            recorder.start();
            startFlag.set(true);
            log.info("recorder init ok");
        } catch (Exception e) {
            log.error("recorder init fail", e);
            destroy();
        }
    }

    /** pushUrl, width and height are only used by the first call, which initializes the recorder. */
    public synchronized void pushStream(String pushUrl, int width, int height, BufferedImage bufferedImage) {
        if (!startFlag.get()) {
            initRtspRecorder(pushUrl, width, height);
            if (!startFlag.get()) {
                return; // init failed: drop this frame instead of calling a null recorder
            }
        }
        try {
            // Compression placeholder: the image is currently pushed as-is
            BufferedImage bufferedImageCompressed = bufferedImage;
            // Optional timestamp watermark (ImageUtils is the author's own helper, not shown here):
            // String formattedDate = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss").format(new Date());
            // bufferedImageCompressed = ImageUtils.addTextWaterMark(bufferedImageCompressed, Color.RED, 80, formattedDate);

            // Reuse the converter created in initRtspRecorder instead of allocating one per frame
            Frame frame = converter.getFrame(bufferedImageCompressed);
            recorder.record(frame);
        } catch (Exception e) {
            log.error("recorder record fail", e);
        }
    }

    @PreDestroy
    public synchronized void destroy() {
        startFlag.set(false);
        try {
            if (recorder != null) {
                recorder.close(); // stops the recorder and releases the native FFmpeg resources
            }
            if (converter != null) {
                converter.close();
            }
            log.info("recorder destroy ok");
        } catch (Exception e) {
            log.error("recorder destroy fail", e);
        } finally {
            recorder = null;
            converter = null;
        }
    }
}
```
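A likely contributor to the occasional stutter mentioned at the start: as far as I can tell, FFmpegFrameRecorder stamps each recorded frame with a running frame counter, i.e. as if frames arrived exactly 1/FRAME_RATE seconds apart, while frames from Redis arrive whenever the algorithm finishes them. JavaCV's own capture samples handle this by deriving the timestamp from the wall clock and passing it to recorder.setTimestamp(...) before record(...). Below is a minimal sketch of that idea, assuming it is wired into pushStream as shown in the class comment; the class name FrameTimestamps and its injectable clock are hypothetical, not part of the original code.

```java
import java.util.function.LongSupplier;

/**
 * Hypothetical helper: turns wall-clock time into recorder timestamps in microseconds.
 * Assumed use inside ImageStream.pushStream, just before recorder.record(frame):
 *
 *   long ts = timestamps.next();
 *   if (ts > recorder.getTimestamp()) {
 *       recorder.setTimestamp(ts);
 *   }
 */
public final class FrameTimestamps {

    private final LongSupplier nanoClock; // time source in nanoseconds
    private boolean started;              // false until the first frame is stamped
    private long startNanos;              // clock value at the first frame

    /** Uses System.nanoTime(), which is monotonic and unaffected by system clock changes. */
    public FrameTimestamps() {
        this(System::nanoTime);
    }

    /** Injectable clock, mainly so the class can be tested deterministically. */
    public FrameTimestamps(LongSupplier nanoClock) {
        this.nanoClock = nanoClock;
    }

    /** Returns microseconds elapsed since the first call; the first frame gets 0. */
    public synchronized long next() {
        long now = nanoClock.getAsLong();
        if (!started) {
            startNanos = now;
            started = true;
        }
        // nanoTime values may be negative, so only the difference is meaningful
        return (now - startNanos) / 1_000L;
    }
}
```

The ts > recorder.getTimestamp() guard follows the JavaCV samples: the timestamp only ever moves forward, so two frames that arrive within the same frame interval still get increasing timestamps instead of going backwards, which FFmpeg typically rejects or warns about.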

2.3. Controller Endpoints

```java
package com.example.study.redis;

import com.example.study.test.ImageStream;
import org.apache.commons.codec.binary.Base64;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RequestParam;
import org.springframework.web.bind.annotation.RestController;
import redis.clients.jedis.Jedis;
import redis.clients.jedis.JedisPubSub;

import javax.imageio.ImageIO;
import java.awt.image.BufferedImage;
import java.io.ByteArrayInputStream;
import java.io.IOException;

@RestController
public class RedisController {

    private static final String REDIS_HOST = "192.168.110.200";
    private static final int REDIS_PORT = 6379;
    private static final String REDIS_PASSWORD = "rootroot";
    // Redis channel name: publish and subscribe must use the same channel
    private static final String CHANNEL = "rtsp://192.168.110.219:8554/show/6/6/20231103164532";
    // RTSP address the processed frames are pushed to
    private static final String PUSH_URL = "rtsp://127.0.0.1:8554/live";

    private final ImageStream imageStream;

    // Inject the Spring-managed ImageStream so that its @PreDestroy hook runs on shutdown
    public RedisController(ImageStream imageStream) {
        this.imageStream = imageStream;
    }

    @GetMapping("/publish")
    public void publish(@RequestParam String message) {
        // try-with-resources closes the connection after publishing
        try (Jedis jedis = new Jedis(REDIS_HOST, REDIS_PORT)) {
            jedis.auth(REDIS_PASSWORD);
            jedis.publish(CHANNEL, message);
        }
    }

    @GetMapping("/subscribe")
    public void subscribe() {
        // jedis.subscribe() blocks, so this request does not return while it is listening
        try (Jedis jedis = new Jedis(REDIS_HOST, REDIS_PORT)) {
            jedis.auth(REDIS_PASSWORD);
            jedis.subscribe(new JedisPubSub() {
                @Override
                public void onMessage(String channel, String message) {
                    try {
                        // Each message is one Base64-encoded image: decode it once, then push it
                        BufferedImage image = Base64ToBufferedImage(message);
                        if (image != null) {
                            imageStream.pushStream(PUSH_URL, 640, 480, image);
                        }
                    } catch (IOException e) {
                        // Skip the bad frame instead of ending the subscription
                        System.out.println("decode fail: " + e);
                    }
                }
            }, CHANNEL);
        }
    }

    public BufferedImage Base64ToBufferedImage(String base64Image) throws IOException {
        // Decode the Base64 string into a byte array
        byte[] imageBytes = Base64.decodeBase64(base64Image);
        // Parse the bytes into a BufferedImage (ImageIO.read returns null for unsupported data)
        return ImageIO.read(new ByteArrayInputStream(imageBytes));
    }
}
```

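Base64ToBufferedImage converts a Base64-encoded image into a BufferedImage; publish publishes a message to Redis; and subscribe subscribes to the channel "rtsp://192.168.110.219:8554/show/6/6/20231103164532" and processes each new message as soon as it arrives. (The channel name is just a Redis channel string that happens to look like an RTSP URL.)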
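Each Redis message is expected to be one Base64-encoded image. For the publishing side (the algorithm, or a quick manual test of /publish), the reverse of Base64ToBufferedImage can be sketched with the JDK alone. ImageBase64, encode and testFrame are hypothetical names; the output is standard Base64, which Base64.decodeBase64 in the controller accepts.

```java
import javax.imageio.ImageIO;
import java.awt.Color;
import java.awt.Graphics2D;
import java.awt.image.BufferedImage;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.util.Base64;

/** Hypothetical publisher-side counterpart of Base64ToBufferedImage. */
public final class ImageBase64 {

    private ImageBase64() {}

    /** Encodes the image in an ImageIO format ("jpg", "png", ...) and returns standard Base64. */
    public static String encode(BufferedImage image, String format) {
        try (ByteArrayOutputStream out = new ByteArrayOutputStream()) {
            // ImageIO.write returns false when no writer exists for the format
            if (!ImageIO.write(image, format, out)) {
                throw new IllegalArgumentException("No ImageIO writer for format: " + format);
            }
            return Base64.getEncoder().encodeToString(out.toByteArray());
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** Builds a solid-colour frame in TYPE_3BYTE_BGR, the layout ImageIO typically returns for JPEGs. */
    public static BufferedImage testFrame(int width, int height, Color color) {
        BufferedImage image = new BufferedImage(width, height, BufferedImage.TYPE_3BYTE_BGR);
        Graphics2D g = image.createGraphics();
        try {
            g.setColor(color);
            g.fillRect(0, 0, width, height);
        } finally {
            g.dispose(); // release the graphics context
        }
        return image;
    }
}
```

For example, ImageBase64.encode(ImageBase64.testFrame(64, 48, Color.RED), "jpg") yields a short message for /publish; keeping the test frame small also keeps the request URL short.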

2.4. Testing

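Because the code pushes a stream, it will not work unless a streaming server is running on your computer. I used an RTSP server; to download it, click the link below.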

The download is hosted outside China, so the page may open slowly.

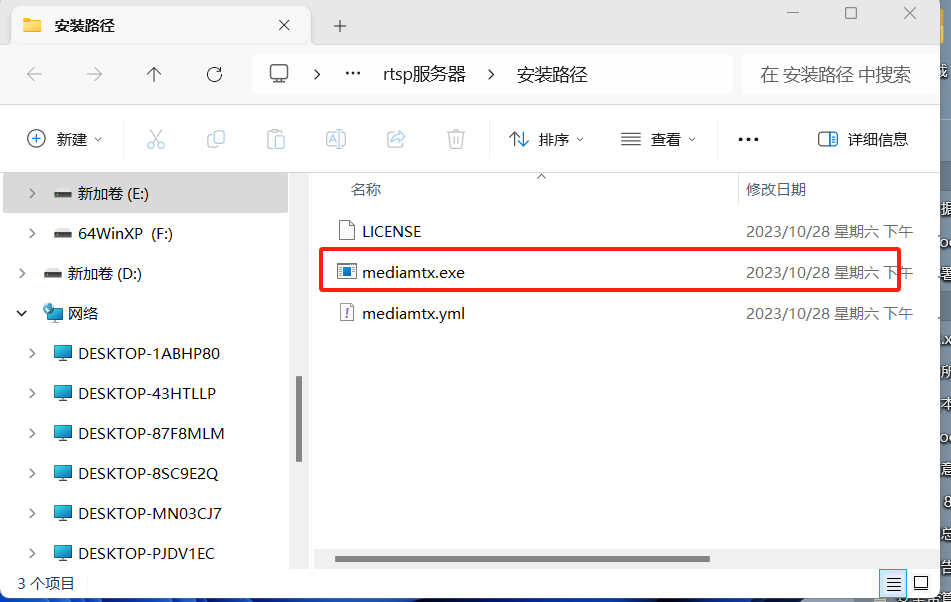

After the download finishes, unzip it; the extracted files are shown below.

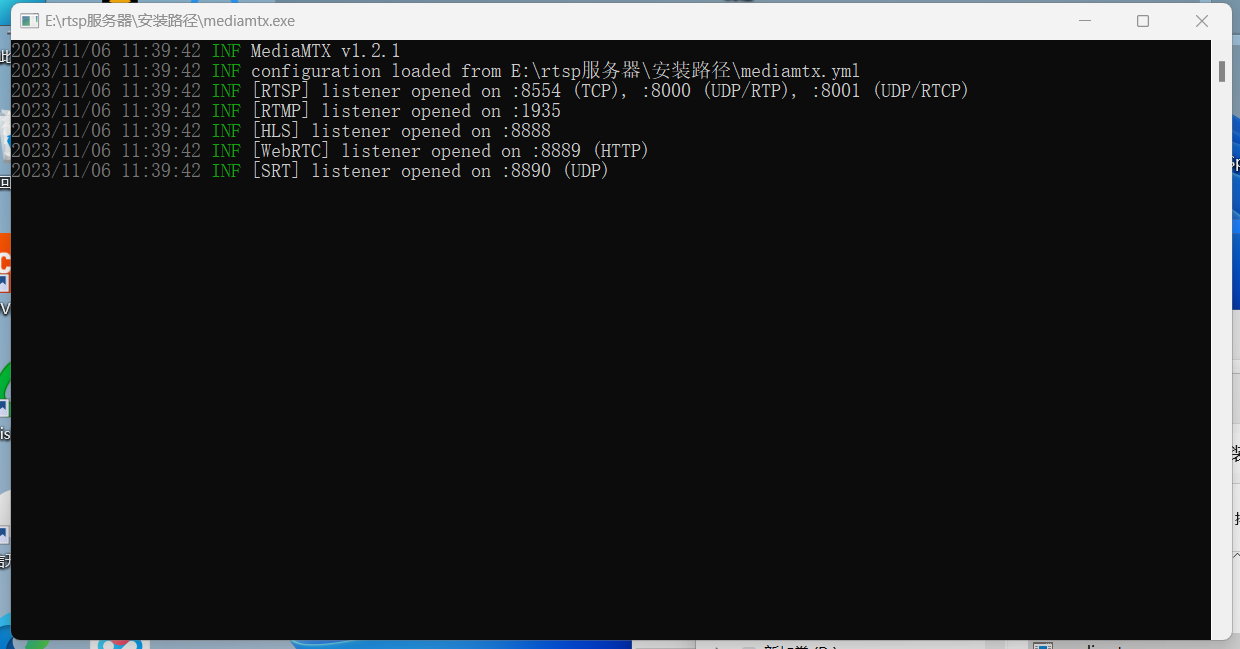

Double-click the .exe file to start the RTSP server, as shown below:

Start the backend:

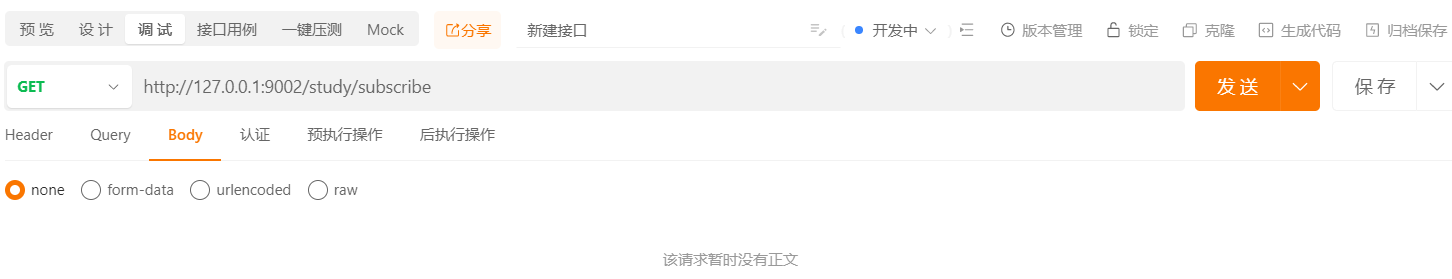

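Call the endpoints with Postman, Apipost, or a similar API debugging tool. Call /subscribe first; it blocks while it listens, so send /publish from a separate request.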
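Because /publish takes the image as a GET query parameter, two practical points apply when testing it. First, the Base64 string must be URL-encoded; otherwise a '+' reaches the server as a space and the decoded image is corrupted. Second, a full-size frame can easily exceed the server's request-header size limit (8 KB by default in Spring Boot, adjustable with server.max-http-request-header-size). Below is a sketch of a small JDK-only client that handles the encoding; PublishClient and a base URL such as http://localhost:8080 are assumptions, not part of the original project.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

/** Hypothetical test client for the /publish endpoint. */
public final class PublishClient {

    private final HttpClient http = HttpClient.newHttpClient();
    private final String baseUrl; // e.g. "http://localhost:8080" (assumed port)

    public PublishClient(String baseUrl) {
        this.baseUrl = baseUrl;
    }

    /** Builds GET {baseUrl}/publish?message=..., URL-encoding the Base64 payload. */
    public URI publishUri(String base64Message) {
        // Encodes '+', '/' and '=' so the server receives the Base64 text unchanged
        String encoded = URLEncoder.encode(base64Message, StandardCharsets.UTF_8);
        return URI.create(baseUrl + "/publish?message=" + encoded);
    }

    /** Sends one message and returns the HTTP status code. */
    public int publish(String base64Message) throws Exception {
        HttpRequest request = HttpRequest.newBuilder(publishUri(base64Message)).GET().build();
        HttpResponse<Void> response = http.send(request, HttpResponse.BodyHandlers.discarding());
        return response.statusCode();
    }
}
```

With the backend running, new PublishClient("http://localhost:8080").publish(base64) should return 200 once the message has been published.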

Open PotPlayer, VLC, or a similar player and play the stream (rtsp://127.0.0.1:8554/live in the controller above).

Open Task Manager to check resource usage:

If you see the warnings below, the RTSP server is either not running or has failed; download it again or restart it:

```
Warning: [libopenh264 @ 0000018643983440] [OpenH264] this = 0x000001863cea0c70, Warning:layerId(0) doesn't support profile(578), change to UNSPECIFIC profile
Warning: [libopenh264 @ 0000018643983440] [OpenH264] this = 0x000001863cea0c70, Warning:bEnableFrameSkip = 0,bitrate can't be controlled for RC_QUALITY_MODE,RC_BITRATE_MODE and RC_TIMESTAMP_MODE without enabling skip frame.
```

References:

Streaming: https://blog.csdn.net/m0_54088427/article/details/132878748

Redis: https://blog.csdn.net/weixin_73310020/article/details/130394340