I. Installing munge from source

1. Download munge

Download: https://github.com/dun/munge/releases

2. Build and install

    tar -Jxvf munge-0.5.15.tar.xz
    cd munge-0.5.15
    ./bootstrap
    ./configure --prefix=/usr/local/munge \
        --sysconfdir=/usr/local/munge/etc \
        --localstatedir=/usr/local/munge/local \
        --with-runstatedir=/usr/local/munge/run \
        --libdir=/usr/local/munge/lib64
    make
    make install

3. Create the munge user and set directory permissions

    useradd -s /sbin/nologin -u 601 munge
    sudo -u munge mkdir -p /usr/local/munge/run/munge
    # sudo -u munge mkdir /usr/local/munge/var/munge /usr/local/munge/var/run
    # sudo -u munge mkdir /usr/local/munge/var/run/munge
    chown -R munge:munge /usr/local/munge/
    chmod 700 /usr/local/munge/etc/
    chmod 711 /usr/local/munge/local/
    chmod 755 /usr/local/munge/run
    chmod 711 /usr/local/munge/lib
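The modes set in this step can be verified afterwards. A minimal sketch, assuming GNU stat (as found on Linux); check_mode is a hypothetical helper name and not part of munge:

```shell
# Hypothetical helper (not part of munge): compare a path's octal mode
# with the expected one. Relies on GNU stat's -c option.
check_mode() {
    local path="$1" expected="$2" actual
    actual=$(stat -c '%a' "$path") || return 2
    if [ "$actual" = "$expected" ]; then
        echo "ok   $path ($actual)"
    else
        echo "FAIL $path (mode $actual, expected $expected)"
        return 1
    fi
}
```

For example, `check_mode /usr/local/munge/etc 700` prints an ok line when the directory has mode 700 and returns non-zero otherwise.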

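4. Create the munge.key file

Running mungekey generates munge.key under /usr/local/munge/etc/munge/; afterwards, restrict the key's permissions:

    sudo -u munge /usr/local/munge/sbin/mungekey --verbose
    chmod 600 /usr/local/munge/etc/munge/munge.key

[Note]: With multiple servers, copy the server's munge.key to every client. Clients do not generate their own key.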
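After copying the key it is worth confirming that the client's copy is identical to the server's. A minimal sketch using sha256sum; key_digest and same_key are hypothetical helper names, and the digest can also be compared by eye across hosts:

```shell
# Hypothetical helper: print the SHA-256 digest of a key file.
key_digest() {
    [ -r "$1" ] || { echo "cannot read $1" >&2; return 2; }
    sha256sum < "$1" | cut -d' ' -f1
}

# Hypothetical helper: succeed only when both files have the same digest.
same_key() {
    local a b
    a=$(key_digest "$1") || return 2
    b=$(key_digest "$2") || return 2
    [ "$a" = "$b" ]
}
```

Run `key_digest /usr/local/munge/etc/munge/munge.key` on each host and compare the output. Once munged is running on both sides, the MUNGE documentation also suggests an end-to-end check of the form `munge -n | ssh <host> unmunge`; with this install prefix the binaries would be under /usr/local/munge/bin.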

5. Link the unit file and start the service

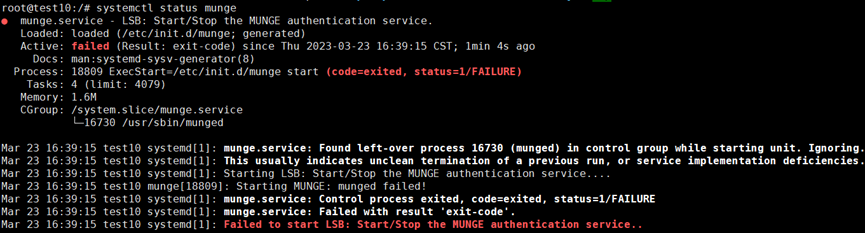

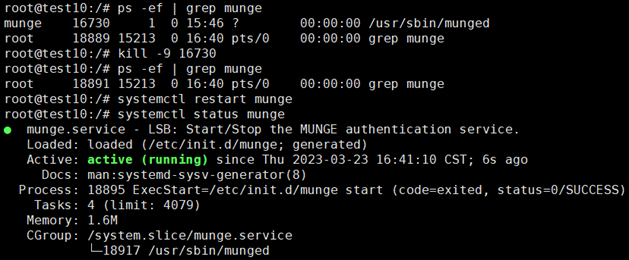

    ln -s /usr/local/munge/lib/systemd/system/munge.service /usr/lib/systemd/system/munge.service
    # or copy the unit file instead of linking it:
    # cp /usr/local/munge/lib/systemd/system/munge.service /usr/lib/systemd/system/
    systemctl daemon-reload
    systemctl start munge
    systemctl status munge

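6. Problems you may hit during installation

(1) configure fails

[Fix]: apt -y install libssl-dev openssl

On apt-based systems the OpenSSL development package is libssl-dev; openssl-devel is the name used by RPM-based distributions.

This setup uses the GPL-compatible OpenSSL crypto library. If OpenSSL was itself built from source, select the library at configure time with --with-crypto-lib,

or, after installing OpenSSL from source, pass --with-openssl-prefix=/usr/local/openssl.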

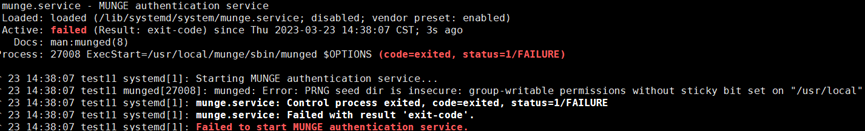

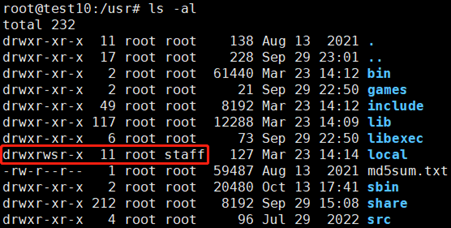

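(2) Wrong file permissions or ownership

The permissions or owner of /usr/local are wrong.

[Fix]: Reset the permissions and owner of /usr/local:

    chown -R root:root /usr/local
    chmod -R 755 /usr/local

Because both commands are recursive, they also reset everything under /usr/local/munge, including munge.key. Reapply the ownership and modes from steps 3 and 4 afterwards.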

II. Installing Slurm from source

Install the build dependencies first:

    apt-get install make hwloc libhwloc-dev libmunge-dev libmunge2 munge mariadb-server libmysqlclient-dev -y

1. Download and unpack the source package

Download: https://www.schedmd.com/downloads.php

    tar -jxvf slurm-22.05.8.tar.bz2
    cd slurm-22.05.8
    # find . -name "config.guess"
    cp /usr/share/misc/config.* auxdir/
    # cp /usr/share/libtool/build-aux/config.* .

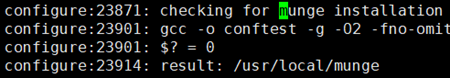

2. Build and install

    ./configure --prefix=/usr/local/slurm \
        --with-munge=/usr/local/munge \
        --sysconfdir=/usr/local/slurm/etc \
        --localstatedir=/usr/local/slurm/local \
        --runstatedir=/usr/local/slurm/run \
        --libdir=/usr/local/slurm/lib64

If the configure output reports no for the MySQL check, rerun ./configure and point it at the directory that contains mysql_config, for example --with-mysql_config=/usr/bin.
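Before rerunning configure it can help to confirm where the config script actually lives. A minimal sketch: find_db_config_dir is a hypothetical helper, and treating mariadb_config as an acceptable substitute for mysql_config is an assumption that should be verified against the Slurm version being built.

```shell
# Hypothetical helper: print the directory that holds mysql_config or
# mariadb_config, searching the current PATH; return 1 if neither exists.
find_db_config_dir() {
    local name path
    for name in mysql_config mariadb_config; do
        if path=$(command -v "$name"); then
            dirname "$path"
            return 0
        fi
    done
    return 1
}
```

The result can be fed straight into the option, e.g. `--with-mysql_config="$(find_db_config_dir)"`. If the function returns non-zero, the development package that ships the script is probably missing.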

    make -j
    make install

3. Configure the database

Run these statements in the mysql client as a database administrator:

    -- Create the slurm user that operates on slurm_acct_db; its password is 123456
    create user 'slurm'@'localhost' identified by '123456';
    -- Create the accounting database slurm_acct_db
    create database slurm_acct_db;
    -- Let slurm, connecting from localhost with password 123456, do anything to all tables in slurm_acct_db
    grant all on slurm_acct_db.* TO 'slurm'@'localhost' identified by '123456' with grant option;
    -- Let slurm, connecting from system0 with password 123456, do anything to all tables in slurm_acct_db
    grant all on slurm_acct_db.* TO 'slurm'@'system0' identified by '123456' with grant option;
    -- Create the job completion database slurm_jobcomp_db
    create database slurm_jobcomp_db;
    -- Let slurm, connecting from localhost with password 123456, do anything to all tables in slurm_jobcomp_db
    grant all on slurm_jobcomp_db.* TO 'slurm'@'localhost' identified by '123456' with grant option;
    -- Let slurm, connecting from system0 with password 123456, do anything to all tables in slurm_jobcomp_db
    grant all on slurm_jobcomp_db.* TO 'slurm'@'system0' identified by '123456' with grant option;
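The grant statements repeat the same pattern for every database and client host, so they can be generated rather than typed. A minimal sketch that only prints SQL for review; gen_grants is a hypothetical helper, and the statement form is copied from the grants in this step:

```shell
# Hypothetical helper: print one GRANT statement per client host.
# Arguments: user, password, database, then one or more hosts.
# Nothing is executed; the output is meant to be reviewed first.
gen_grants() {
    local user="$1" pass="$2" db="$3" host
    shift 3
    for host in "$@"; do
        printf "grant all on %s.* TO '%s'@'%s' identified by '%s' with grant option;\n" \
            "$db" "$user" "$host" "$pass"
    done
}
```

For instance, `gen_grants slurm 123456 slurm_jobcomp_db localhost system0` prints the two slurm_jobcomp_db grants; the output could then be piped to `mysql -u root -p`. Passing the password as an argument exposes it in the process list, so this is only suitable for a throwaway setup like the one in these notes.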

4. Edit the configuration files (the example files are in etc/ of the source tree)

    # run from the etc/ directory of the source tree
    cp slurm.conf.example /usr/local/slurm/etc/slurm.conf
    cp slurmdbd.conf.example /usr/local/slurm/etc/slurmdbd.conf
    cp cgroup.conf.example /usr/local/slurm/etc/cgroup.conf
    chmod 600 /usr/local/slurm/etc/slurmdbd.conf
    cd /usr/local/slurm
    mkdir run slurm log

5. Set environment variables

Create /etc/profile.d/slurm.sh (vim /etc/profile.d/slurm.sh) with the following content:

    export PATH=$PATH:/usr/local/slurm/bin:/usr/local/slurm/sbin
    export LD_LIBRARY_PATH=/usr/local/slurm/lib64:$LD_LIBRARY_PATH
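A profile script may be sourced more than once in nested shells, which appends the same directories to PATH repeatedly. A minimal sketch of an idempotent variant; path_append is a hypothetical helper name:

```shell
# Hypothetical helper: append a directory to PATH only if it is not
# already listed there.
path_append() {
    case ":$PATH:" in
        *":$1:"*) ;;                      # already present, nothing to do
        *) PATH="${PATH:+$PATH:}$1" ;;    # append, adding ":" only if PATH is non-empty
    esac
}
```

In slurm.sh this would be used as `path_append /usr/local/slurm/bin` and `path_append /usr/local/slurm/sbin`, followed by `export PATH`.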

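6. Start the services (the unit files are in etc/ of the source tree)

    # cp etc/slurmctld.service etc/slurmdbd.service etc/slurmd.service /etc/systemd/system/
    cp etc/slurmctld.service etc/slurmdbd.service etc/slurmd.service /usr/lib/systemd/system/
    systemctl daemon-reload
    # start slurmdbd first: slurmctld uses it for accounting (AccountingStorageType=accounting_storage/slurmdbd)
    systemctl start slurmdbd
    systemctl start slurmctld
    systemctl start slurmd

When everything is healthy, systemctl status shows each service in the green active state. If a service fails, run the daemon in the foreground with verbose logging to see the error:

    slurmctld -Dvvvvv
    slurmdbd -Dvvvvv
    slurmd -Dvvvvv

To set node sw01 back to the idle state:

    scontrol update nodename=sw01 state=idle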

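7. Miscellaneous

Restart the slurmctld service:

    systemctl restart slurmctld

Copy the installation to another node (test10 here):

    scp -r /usr/local/slurm test10:/usr/local/
    scp /etc/profile.d/slurm.sh test10:/etc/profile.d/
    # use the directory where slurmd.service was installed in step 6
    scp /etc/systemd/system/slurmd.service test10:/etc/systemd/system/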
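With more than one compute node the same three copies repeat per host. A minimal sketch that prints the commands as a dry run instead of executing them; print_sync_cmds is a hypothetical helper, and the paths are the ones used in this step:

```shell
# Hypothetical helper: print (not run) the copy commands for each node.
print_sync_cmds() {
    local node
    for node in "$@"; do
        echo "scp -r /usr/local/slurm ${node}:/usr/local/"
        echo "scp /etc/profile.d/slurm.sh ${node}:/etc/profile.d/"
        echo "scp /etc/systemd/system/slurmd.service ${node}:/etc/systemd/system/"
    done
}
```

After reviewing the output, `print_sync_cmds test10 test11 | sh` would run the copies; test11 is only a placeholder node name.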

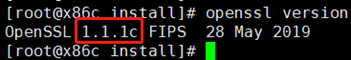

III. Installing OpenSSL from source

1. Check the current version

    openssl version

2. Download the matching OpenSSL version

Download: https://www.openssl.org/source/old/

    tar -zxvf openssl-1.1.1s.tar.gz
    cd openssl-1.1.1s

    ./config --prefix=/usr/local/openssl

    ./config -t

    make && make install

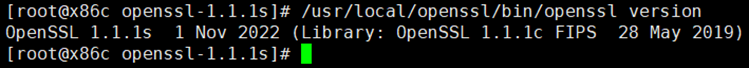

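3. Test

    /usr/local/openssl/bin/openssl version

If the version number is printed correctly, the installation succeeded. On some operating system versions the following error appears instead:

    openssl: symbol lookup error: openssl: undefined symbol: EVP_mdc2, version OPENSSL_1_1_0

    # In that case, register the library directory with the dynamic linker:
    # echo "/usr/local/openssl/lib" >> /etc/ld.so.conf.d/libc.conf && ldconfig
    # Finally, add /usr/local/openssl/bin/openssl to the system path:
    # ln -s /usr/local/openssl/bin/openssl /bin/openssl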
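Appending to a linker config file with >> adds a duplicate line every time the command is rerun. A minimal sketch of a guard; ldconf_has is a hypothetical helper, and it only looks at *.conf files in one directory, not at /etc/ld.so.conf itself:

```shell
# Hypothetical helper: succeed if directory $1 appears as a whole line in
# any *.conf file under $2 (default: /etc/ld.so.conf.d).
ldconf_has() {
    local dir="$1" confdir="${2:-/etc/ld.so.conf.d}"
    grep -qxF -- "$dir" "$confdir"/*.conf 2>/dev/null
}
```

The append can then be written as `ldconf_has /usr/local/openssl/lib || echo "/usr/local/openssl/lib" >> /etc/ld.so.conf.d/libc.conf`, followed by `ldconfig`.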

4. Switch the system OpenSSL version

    # mv /usr/bin/openssl /usr/bin/openssl.bak
    # mv /usr/include/openssl /usr/include/openssl.bak
    # ln -s /usr/local/openssl/bin/openssl /usr/bin/openssl
    # ln -s /usr/local/openssl/include/openssl /usr/include/openssl
    # echo "/usr/local/openssl/lib" >> /etc/ld.so.conf
    ldconfig -v
    # ln -s /usr/local/openssl/lib/libssl.so.1.1 /usr/lib64/libssl.so.1.1
    # ln -s /usr/local/openssl/lib/libcrypto.so.1.1 /usr/lib64/libcrypto.so.1.1
    # [Note]: Do not simply delete the existing symlinks.
    # To develop against the new version, repoint the existing symlinks, i.e. replace the old shared libraries, to complete the upgrade.
    # Replace the corresponding shared libraries found in /lib (lib64), /usr/lib (lib64) and /usr/local/lib (lib64):
    # ln -sf /usr/local/openssl/lib/libssl.so.1.1 /usr/lib64/libssl.so
    # ln -sf /usr/local/openssl/lib/libcrypto.so.1.1 /usr/lib64/libcrypto.so

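IV. Installing munge from packages

1. Add the munge user

    groupadd -g 972 munge
    useradd -g 972 -u 972 munge

2. Install munge

    apt-get install munge -y

3. Run the following command to create the munge.key file:

    create-munge-key

4. Fix permissions

After the command finishes, munge.key exists under /etc/munge/. Change the key's permissions and owner so that it belongs to munge (/etc and /usr themselves should stay owned by root):

    chown -R munge: /etc/munge/ /var/log/munge/ /var/lib/munge/ /var/run/munge/
    chmod 400 /etc/munge/munge.key

    # find a running munged process and stop it; replace 16730 with the PID reported by ps
    ps -ef | grep munge
    kill -9 16730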

V. Slurm configuration files

(1) slurm.conf

    ########################################################
    #  Configuration file for Slurm - 2021-08-20T10:27:23  #
    ########################################################
    #
    #
    #
    ################################################
    #                   CONTROL                    #
    ################################################
    ClusterName=Sunway    # cluster name
    SlurmUser=root    # management account on the head node
    SlurmctldHost=sw01    # head node hostname
    #SlurmctldHost=psn2    # backup controller hostname
    SlurmctldPort=6817
    SlurmdPort=6818
    SlurmdUser=root
    #
    ################################################
    #            LOGGING & OTHER PATHS             #
    ################################################
    SlurmctldLogFile=/usr/local/slurm/log/slurmctld.log    # head node log file
    SlurmdLogFile=/usr/local/slurm/log/slurmd.log    # compute node log file
    SlurmdPidFile=/usr/local/slurm/run/slurmd.pid    # compute node PID file
    SlurmdSpoolDir=/usr/local/slurm/slurm/d    # compute node state directory
    #SlurmSchedLogFile=
    SlurmctldPidFile=/usr/local/slurm/run/slurmctld.pid    # controller PID file
    StateSaveLocation=/usr/local/slurm/slurm/state    # head node state directory
    #
    ################################################
    #                  ACCOUNTING                  #
    ################################################
    #AccountingStorageBackupHost=psn2    # slurmdbd backup host
    AccountingStorageEnforce=associations,limits,qos
    AccountingStorageHost=sw01    # head node
    AccountingStoragePort=6819
    AccountingStorageType=accounting_storage/slurmdbd
    #AccountingStorageUser=
    #AccountingStoreJobComment=Yes
    AcctGatherEnergyType=acct_gather_energy/none
    AcctGatherFilesystemType=acct_gather_filesystem/none
    AcctGatherInterconnectType=acct_gather_interconnect/none
    AcctGatherNodeFreq=0
    #AcctGatherProfileType=acct_gather_profile/none
    ExtSensorsType=ext_sensors/none
    ExtSensorsFreq=0
    JobAcctGatherFrequency=30
    JobAcctGatherType=jobacct_gather/linux
    #
    ################################################
    #           SCHEDULING & ALLOCATION            #
    ################################################
    PreemptMode=OFF
    PreemptType=preempt/none
    PreemptExemptTime=00:00:00
    PriorityType=priority/basic
    #SchedulerParameters=
    SchedulerTimeSlice=30
    SchedulerType=sched/backfill
    #SelectType=select/cons_tres
    SelectType=select/linear
    #SelectTypeParameters=CR_CPU
    SlurmSchedLogLevel=0
    #
    ################################################
    #                   TOPOLOGY                   #
    ################################################
    TopologyPlugin=topology/none
    #
    ################################################
    #                    TIMERS                    #
    ################################################
    BatchStartTimeout=10
    CompleteWait=0
    EpilogMsgTime=2000
    GetEnvTimeout=2
    InactiveLimit=0
    KillWait=30
    MinJobAge=300
    SlurmctldTimeout=60
    SlurmdTimeout=60
    WaitTime=0
    #
    ################################################
    #                    POWER                     #
    ################################################
    #ResumeProgram=
    ResumeRate=300
    ResumeTimeout=60
    #SuspendExcNodes=
    #SuspendExcParts=
    #SuspendProgram=
    SuspendRate=60
    SuspendTime=NONE
    SuspendTimeout=30
    #
    ################################################
    #                    DEBUG                     #
    ################################################
    DebugFlags=NO_CONF_HASH
    SlurmctldDebug=info
    SlurmdDebug=info
    #
    ################################################
    #               EPILOG & PROLOG                #
    ################################################
    #Epilog=/usr/local/etc/epilog
    #Prolog=/usr/local/etc/prolog
    #SrunEpilog=/usr/local/etc/srun_epilog
    #SrunProlog=/usr/local/etc/srun_prolog
    #TaskEpilog=/usr/local/etc/task_epilog
    #TaskProlog=/usr/local/etc/task_prolog
    #
    ################################################
    #               PROCESS TRACKING               #
    ################################################
    ProctrackType=proctrack/pgid
    #
    ################################################
    #             RESOURCE CONFINEMENT             #
    ################################################
    #TaskPlugin=task/none
    #TaskPlugin=task/affinity
    #TaskPlugin=task/cgroup
    #TaskPluginParam=
    #
    ################################################
    #                    OTHER                     #
    ################################################
    #AccountingStorageExternalHost=
    #AccountingStorageParameters=
    AccountingStorageTRES=cpu,mem,energy,node,billing,fs/disk,vmem,pages
    AllowSpecResourcesUsage=No
    #AuthAltTypes=
    #AuthAltParameters=
    #AuthInfo=
    AuthType=auth/munge
    #BurstBufferType=
    #CliFilterPlugins=
    #CommunicationParameters=
    CoreSpecPlugin=core_spec/none
    #CpuFreqDef=
    CpuFreqGovernors=Performance,OnDemand,UserSpace
    CredType=cred/munge
    #DefMemPerNode=
    #DependencyParameters=
    DisableRootJobs=No
    EioTimeout=60
    EnforcePartLimits=NO
    #EpilogSlurmctld=
    #FederationParameters=
    FirstJobId=1
    #GresTypes=
    GpuFreqDef=high,memory=high
    GroupUpdateForce=1
    GroupUpdateTime=600
    #HealthCheckInterval=0
    #HealthCheckNodeState=ANY
    #HealthCheckProgram=
    InteractiveStepOptions=--interactive
    #JobAcctGatherParams=
    JobCompHost=localhost
    JobCompLoc=/var/log/slurmjobcomp.log
    JobCompPort=0
    JobCompType=jobcomp/mysql
    JobCompUser=slurm
    JobCompPass=123456
    JobContainerType=job_container/none
    #JobCredentialPrivateKey=
    #JobCredentialPublicCertificate=
    #JobDefaults=
    JobFileAppend=0
    JobRequeue=1
    #JobSubmitPlugins=
    #KeepAliveTime=
    KillOnBadExit=0
    #LaunchParameters=
    LaunchType=launch/slurm
    #Licenses=
    LogTimeFormat=iso8601_ms
    #MailDomain=
    #MailProg=/bin/mail
    MaxArraySize=1001
    MaxDBDMsgs=20012
    MaxJobCount=10000    # maximum number of jobs
    MaxJobId=67043328
    MaxMemPerNode=UNLIMITED
    MaxStepCount=40000
    MaxTasksPerNode=512
    MCSPlugin=mcs/none
    #MCSParameters=
    MessageTimeout=10
    MpiDefault=pmi2    # enable MPI
    #MpiParams=
    #NodeFeaturesPlugins=
    OverTimeLimit=0
    PluginDir=/usr/local/slurm/lib64/slurm
    #PlugStackConfig=
    #PowerParameters=
    #PowerPlugin=
    #PrEpParameters=
    PrEpPlugins=prep/script
    #PriorityParameters=
    #PrioritySiteFactorParameters=
    #PrioritySiteFactorPlugin=
    PrivateData=none
    #PrologEpilogTimeout=65534
    #PrologSlurmctld=
    #PrologFlags=
    PropagatePrioProcess=0
    PropagateResourceLimits=ALL
    #PropagateResourceLimitsExcept=
    #RebootProgram=
    #ReconfigFlags=
    #RequeueExit=
    #RequeueExitHold=
    #ResumeFailProgram=
    #ResvEpilog=
    ResvOverRun=0
    #ResvProlog=
    ReturnToService=0
    RoutePlugin=route/default
    #SbcastParameters=
    #ScronParameters=
    #SlurmctldAddr=
    #SlurmctldSyslogDebug=
    #SlurmctldPrimaryOffProg=
    #SlurmctldPrimaryOnProg=
    #SlurmctldParameters=
    #SlurmdParameters=
    #SlurmdSyslogDebug=
    #SlurmctldPlugstack=
    SrunPortRange=0-0
    SwitchType=switch/none
    TCPTimeout=2
    TmpFS=/tmp
    #TopologyParam=
    TrackWCKey=No
    TreeWidth=50
    UsePam=No
    #UnkillableStepProgram=
    UnkillableStepTimeout=60
    VSizeFactor=0
    #X11Parameters=
    #
    ################################################
    #                    NODES                     #
    ################################################
    #NodeName=Intel Sockets=2 CoresPerSocket=16 ThreadsPerCore=1 RealMemory=480000
    #NodeName=Dell Sockets=2 CoresPerSocket=24 ThreadsPerCore=1 RealMemory=100000
    #NodeName=swa CPUS=16 CoresPerSocket=1 ThreadsPerCore=1 Sockets=16 RealMemory=48000 State=UNKNOWN
    #NodeName=swb CPUS=64 CoresPerSocket=32 ThreadsPerCore=1 Sockets=2 RealMemory=100000 State=UNKNOWN
    #NodeName=sw5a0[1-3] CPUS=4 Sockets=4 CoresPerSocket=1 ThreadsPerCore=1 RealMemory=1
    NodeName=sw01 CPUs=1 Sockets=1 CoresPerSocket=1 ThreadsPerCore=1 State=UNKNOWN
    #
    ################################################
    #                  PARTITIONS                  #
    ################################################
    #PartitionName=x86 AllowGroups=all MinNodes=0 Nodes=Dell Default=YES State=UP
    #PartitionName=multicore AllowGroups=all MinNodes=0 Nodes=swa,swb,swc,swd State=UP
    #PartitionName=manycore Default=YES AllowGroups=all MinNodes=0 Nodes=sw5a0[1-3] State=UP
    PartitionName=Manycore AllowGroups=all MinNodes=0 Nodes=sw01 State=UP Default=YES
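The log, PID, spool and state paths in slurm.conf all point at directories that must exist before the daemons start. A minimal sketch that lists those directories from the file; conf_value and conf_dirs are hypothetical helpers, and the parser is deliberately simple (exact-case keys at the start of a line, trailing `# ...` comments stripped), whereas Slurm itself is more lenient:

```shell
# Hypothetical helper: print the value of key $2 from config file $1,
# skipping commented-out lines and stripping trailing "# ..." comments.
conf_value() {
    awk -F= -v key="$2" '
        /^[ \t]*#/ { next }             # ignore comment lines
        $1 == key {
            sub(/^[^=]*=/, "")          # drop "Key="
            sub(/[ \t]*#.*$/, "")       # drop inline comment
            print
            exit
        }' "$1"
}

# Hypothetical helper: print the directories the config expects to exist.
conf_dirs() {
    local key val
    for key in SlurmctldLogFile SlurmdLogFile SlurmctldPidFile SlurmdPidFile; do
        val=$(conf_value "$1" "$key")
        if [ -n "$val" ]; then dirname "$val"; fi
    done
    for key in SlurmdSpoolDir StateSaveLocation; do
        conf_value "$1" "$key"
    done
}
```

Something like `conf_dirs /usr/local/slurm/etc/slurm.conf | sort -u | xargs mkdir -p` would then create any directory that is still missing.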

(2) slurmdbd.conf

    #
    # Example slurmdbd.conf file.
    #
    # See the slurmdbd.conf man page for more information.
    #
    # Archive info
    #ArchiveJobs=yes
    #ArchiveDir="/tmp"
    #ArchiveSteps=yes
    #ArchiveScript=
    #JobPurge=12
    #StepPurge=1
    #
    # Authentication info
    AuthType=auth/munge
    #AuthInfo=/var/run/munge/munge.socket.2
    #
    # slurmDBD info: the management server that runs slurmdbd; must match AccountingStorageHost in slurm.conf
    DbdHost=sw01
    #DbdBackupAddr=172.17.0.2
    #DbdBackupHost=mn02
    DbdPort=6819
    SlurmUser=root
    MessageTimeout=30
    DebugLevel=7
    #DefaultQOS=normal,standby
    LogFile=/usr/local/slurm/log/slurmdbd.log
    PidFile=/usr/local/slurm/run/slurmdbd.pid
    #PluginDir=/usr/lib/slurm
    #PrivateData=accounts,users,usage,jobs
    PrivateData=jobs
    #TrackWCKey=yes
    #
    # Database info
    StorageType=accounting_storage/mysql
    StorageHost=localhost
    #StorageBackupHost=mn02
    StoragePort=3306
    StoragePass=123456
    StorageUser=slurm
    StorageLoc=slurm_acct_db
    CommitDelay=1
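Since DbdHost has to agree with AccountingStorageHost in slurm.conf, the two files can be cross-checked before starting the daemons. A minimal sketch; conf_get and check_dbd_host are hypothetical helpers that assume each key starts its line in exact case:

```shell
# Hypothetical helper: print the value of key $2 from config file $1,
# ignoring commented-out lines and anything after an inline "#".
conf_get() {
    grep "^$2=" "$1" | head -n 1 | cut -d= -f2- | cut -d'#' -f1 | tr -d ' \t'
}

# Hypothetical helper: compare AccountingStorageHost in slurm.conf ($1)
# with DbdHost in slurmdbd.conf ($2).
check_dbd_host() {
    local acct dbd
    acct=$(conf_get "$1" AccountingStorageHost)
    dbd=$(conf_get "$2" DbdHost)
    if [ -n "$acct" ] && [ "$acct" = "$dbd" ]; then
        echo "ok: both files use $acct"
    else
        echo "mismatch: AccountingStorageHost='$acct' DbdHost='$dbd'"
        return 1
    fi
}
```

With the files from these notes, `check_dbd_host /usr/local/slurm/etc/slurm.conf /usr/local/slurm/etc/slurmdbd.conf` should report sw01 for both.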